How we built our mini data center. Part 3 - Relocation

Continued from Part 1 - Colocation

Continued from Part 2 - The Sealed Server Room

Hello! Judging by the reviews, emails and comments on the first two parts of this series, you liked them, and that matters to us. As a reminder, we owe the construction of our own mini data center to the “residents” of Habr: it was you, with your articles, who helped us turn our ideas into reality. So this series is our thank-you to the users and commenters whose ideas inspired us.

One thing up front. We do not claim TIER certification (we simply cannot get it), and we are not saying that we did anything new or perfect. We are only describing what we managed to do in a short time, under 14 days, given our needs and abilities. Treat this article as visual “food for thought”: it is not a model to follow, just the experience of one project that may help you avoid mistakes in your own. We made plenty of them.

So, let's begin. We had literally a couple of days left before physically moving all the equipment. Almost everything in our mini data center was ready except the most important thing: the Internet uplinks. We had quickly agreed on connections with two independent providers, but, as we wrote earlier, they quoted us lead times of up to 6 months. It turned out that the site we had chosen was close to a cluster of providers, and the only thing complicating the cable pull was getting approval from the owners of the manholes and poles.


After talking to the first provider's technical director, we did persuade him to do it “quickly” and give us a link as soon as possible. A crew came out and, literally the day after the contract was signed, delivered fiber to us (about 100 meters of it, by the way). I could not photograph the whole process: I was traveling between the data centers, sorting out related issues.

The second provider's head office was in Kiev, and that tied us hand and foot. Pardon the detail, but without head office they cannot even "sneeze", let alone pull a cable. We were quoted a final lead time of 11 months, and we were stunned. After we talked it over with the provider, the manager suggested a way out: contractors. They could pull the cable quickly and properly without extra paperwork, since they already had the permits. We contacted them.

Unlike the first provider, who came in through the underground cable ducts, they proposed an aerial route. We did not really mind: this was a backup link and we were not counting on it, but without it we could not launch, because we wanted everything done properly. We paid, agreed on the details, and the work began. First they proposed running the cable across buildings, then along poles, then both. In the end, because OSMD homeowners' associations had been set up (which meant no roof access without separate approvals), they had to use only the poles and the treetops, jumping from branch to branch. You really had to see this cable pull: the guys risked their lives literally every second, threading the cable through a tree, climbing it without a safety harness, and jumping over to the next one. Credit where it is due: these guys are professionals.

And that seemed to be it: the fiber was laid, the link from the first provider was up, and for the second we were still waiting for their people at the “base station” to connect us on their side; they said it was a matter of 1-2 days. We were satisfied overall and started planning the move.

Moving day

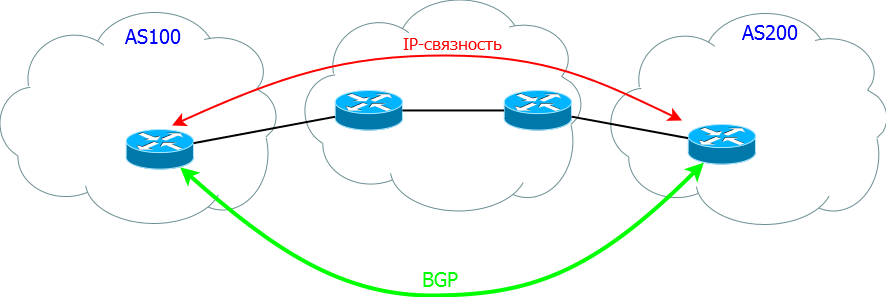

Probably the hardest part for us was working out how to move with minimal downtime. As it happened, we moved on a Friday. One of the hardest tasks was bringing up the BGP session. I will try to explain it in simple terms. BGP (Border Gateway Protocol) is the protocol by which one provider, with its AS (autonomous system), exchanges routes with another provider and its AS. Roughly speaking, it is the junction that lets traffic pass from one provider to the next, and so on down the chain.
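To make the "chain" idea a bit more concrete, here is a toy sketch of how a route announcement spreads from one AS to the next. It is not real BGP: the function name, the topology and the AS numbers (taken from the range reserved for documentation) are all assumptions for illustration, and the only selection rule modeled is "shorter AS path wins".

```python
from collections import deque


def propagate(origin_as, links):
    """Return, for every AS, the AS path it learns toward origin_as.

    links is a list of (as_a, as_b) pairs that have a session with each other.
    The path stored for an AS lists the ASes traffic crosses after leaving it.
    """
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    best = {origin_as: []}  # the origin needs no path to itself
    queue = deque([origin_as])
    while queue:
        current = queue.popleft()
        # An AS prepends its own number before advertising to a neighbor
        advertised = [current] + best[current]
        for peer in sorted(neighbors.get(current, ())):
            # Loop prevention: ignore a path that already contains us
            if peer in advertised:
                continue
            # Toy selection rule: keep the shortest AS path seen so far
            if peer not in best or len(advertised) < len(best[peer]):
                best[peer] = advertised
                queue.append(peer)
    return best


# Hypothetical topology: our AS 64500 has two providers, 64501 and 64502,
# and both of them reach some remote network in AS 64510.
LINKS = [(64500, 64501), (64500, 64502), (64501, 64510), (64502, 64510)]
paths = propagate(64500, LINKS)
print(paths[64510])  # the remote AS reaches us through one of the providers
```

Removing the `(64500, 64501)` link from `LINKS` and calling `propagate` again shows the remote AS reaching us through the second provider instead, which is the reason for wanting two independent uplinks.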

The difficulty was that at the time we had a single AS to which our IP addresses were assigned. To switch from one provider to another, you first have to reconfigure the main router (our Cisco) and then also make changes in the RIPE database and on the provider's side. So it cannot be done before the move, because everything would go down, and doing it during the move risks a long outage. Since the move was planned, we warned all users in advance by email, on Twitter and on the forums, and decided to change the settings after the move. But more on that later.

So, we started powering down the equipment. We pulled the machines out.

Wiped off the dust.

We had moved in there with three servers and added more little by little (carrying them by hand up to the 5th-6th floor), so we had no idea how hard it would be to disconnect and carry everything out at once: by the time of the move there were already 14 servers, plus an IBM IP-KVM. Fortunately, we had brought some guys along to help, and a second car (not everything would have fit in mine).

After running between floors a few times, we realized we would never get it all down that way and asked for the freight elevator to be switched on. To switch it on you have to find the building owner, get the keys, make arrangements and so on, but this was exactly the case where it was needed. Thanks to the guys for that.

Quite a lot of stuff had piled up: 14 servers, 1 client machine, PDUs, disks, and other bits for the structured cabling. We loaded up and set off.

It was not far, about 5-8 km, so we drove slowly and carefully. At our new mini data center, colleagues were already waiting to unload and connect everything. We arrived and unloaded the hardware onto the table.

We started installing and connecting the network equipment, pulling and laying cables. We also bought lugs for this job; they are very convenient.

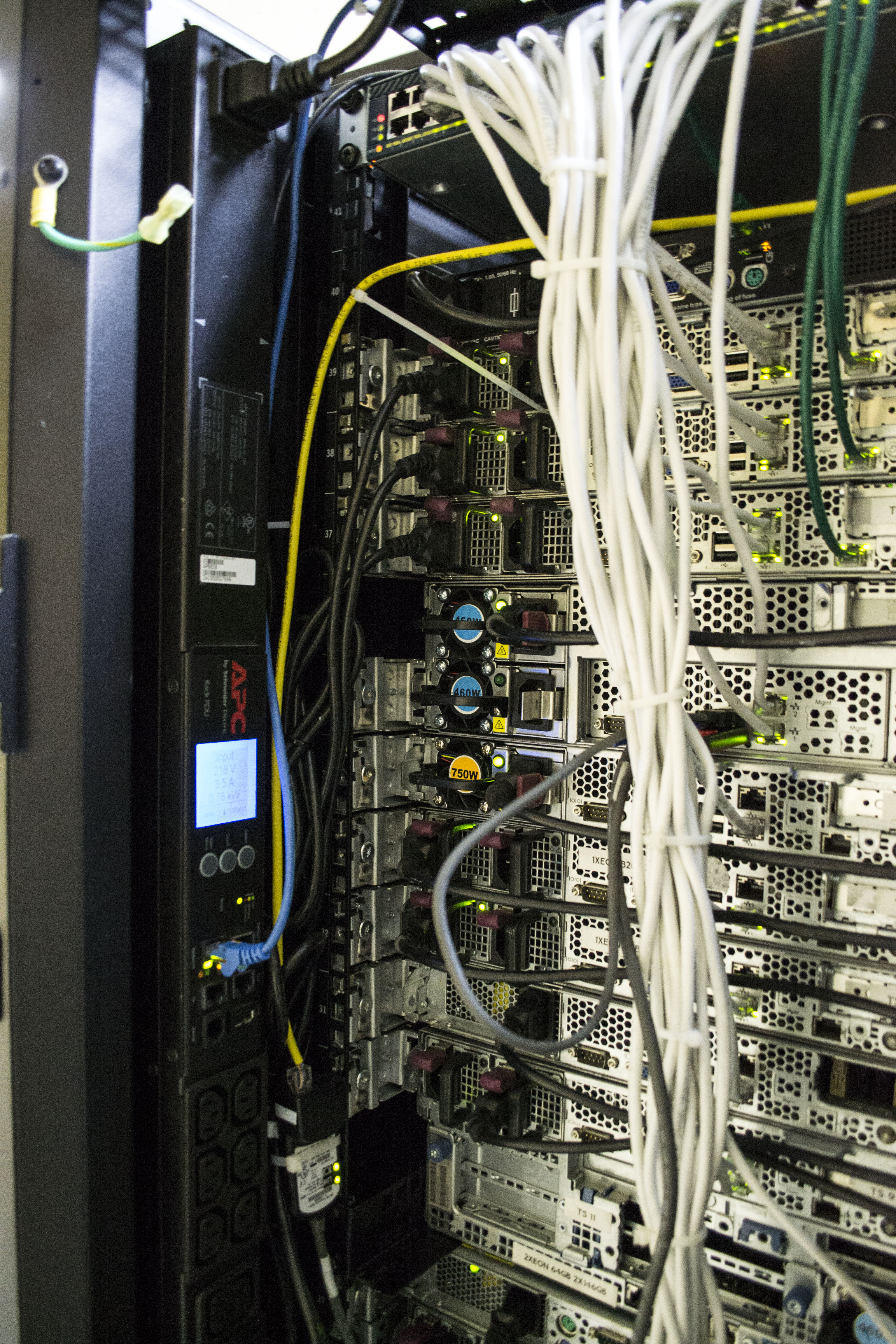

We mount the APC PDUs (we managed to buy one managed unit).

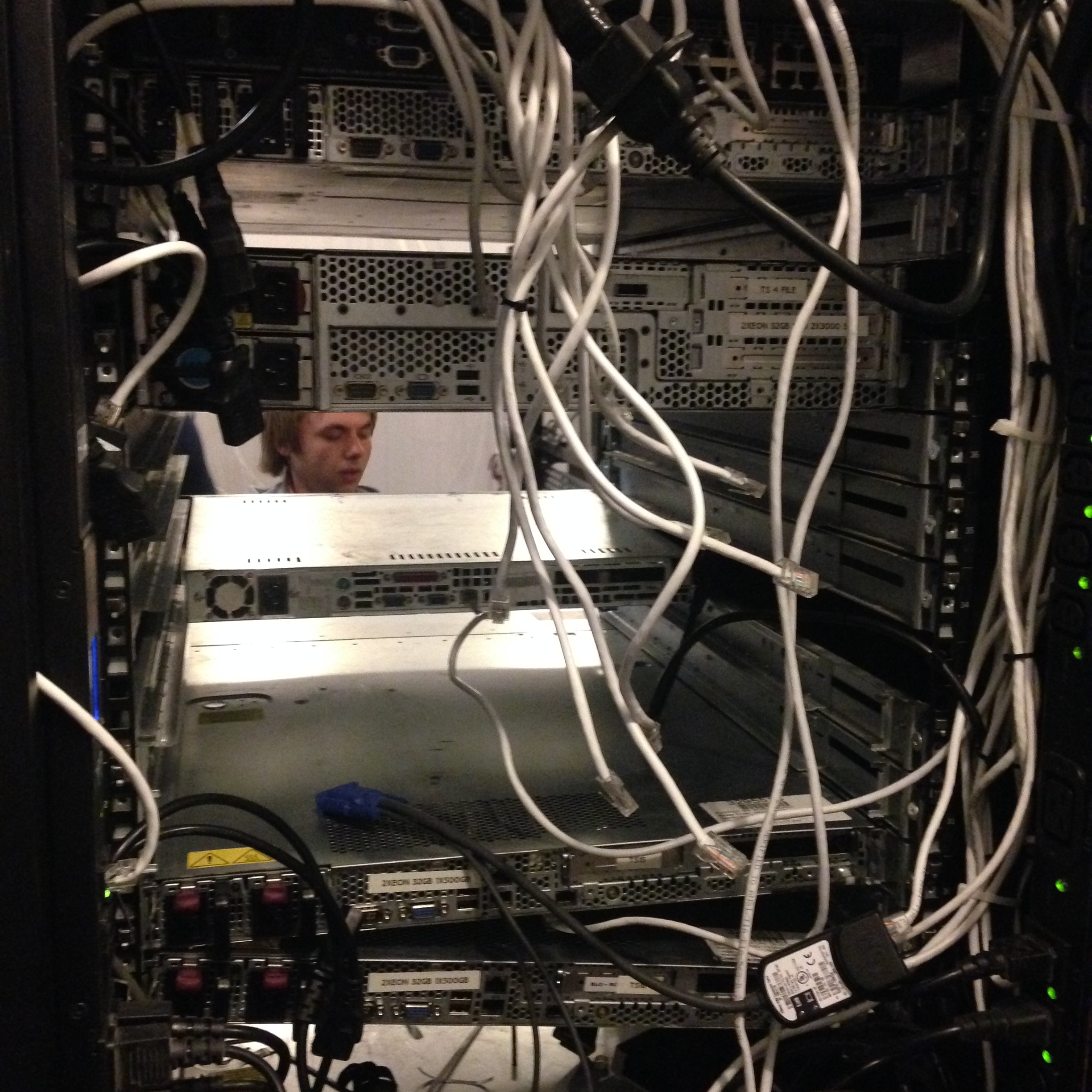

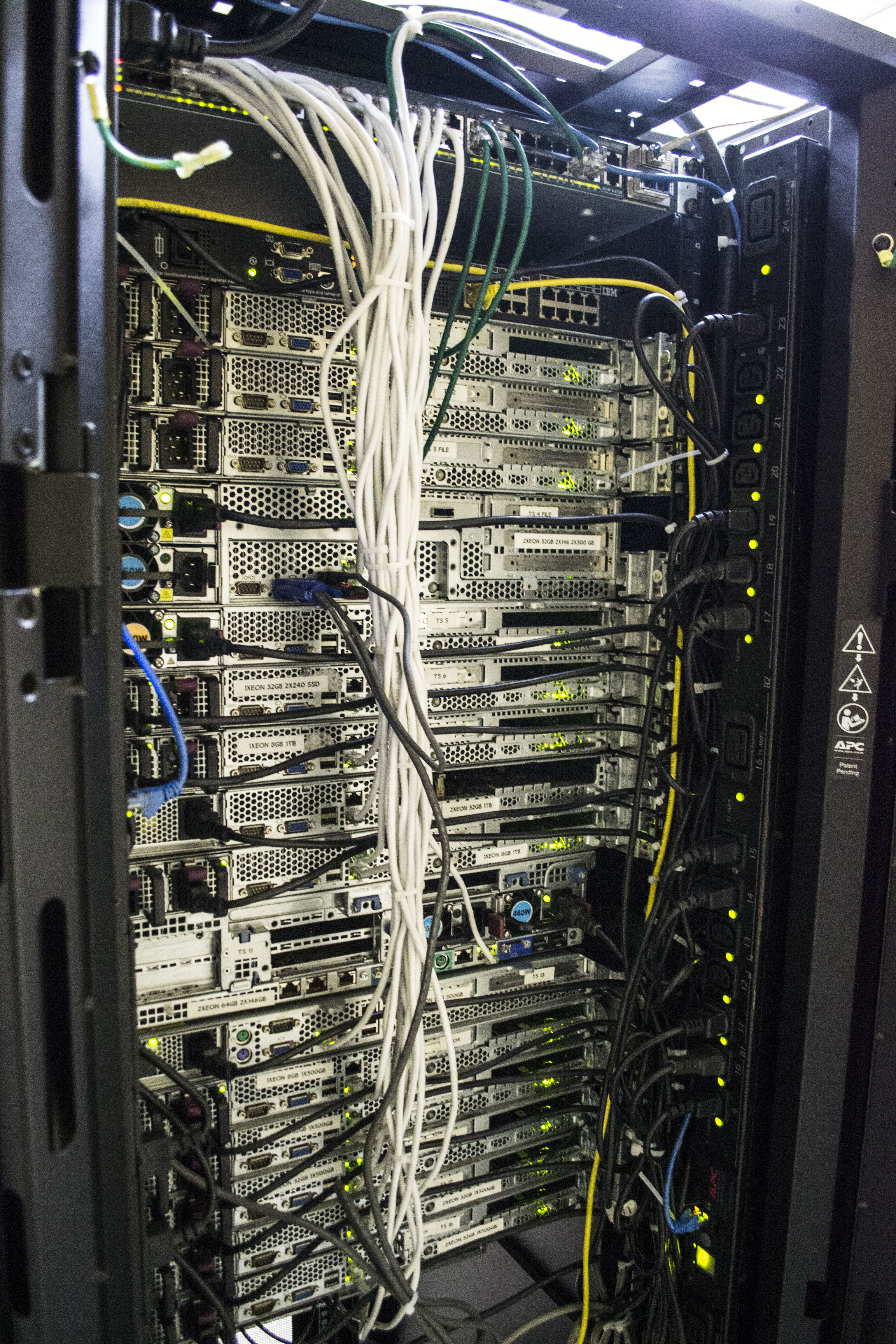

We start filling the rack.

We installed the servers and the Cisco; for now, the test cable ran only to the Cisco.

We started configuring the Cisco. That took some contortions too: the monitor on the floor, the PC on a chair, everything in a “suspended” state. Yes, it happens like that :)

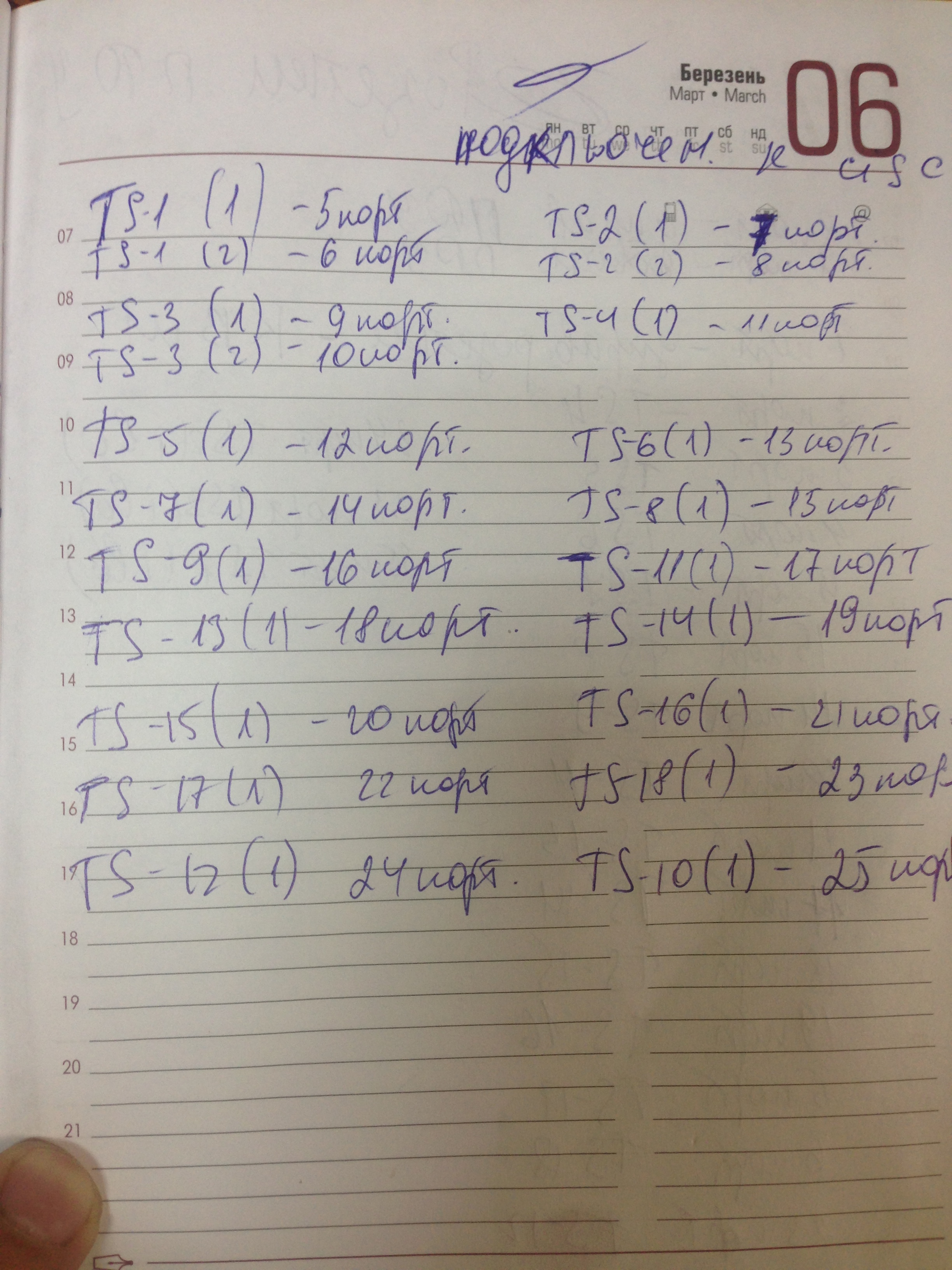

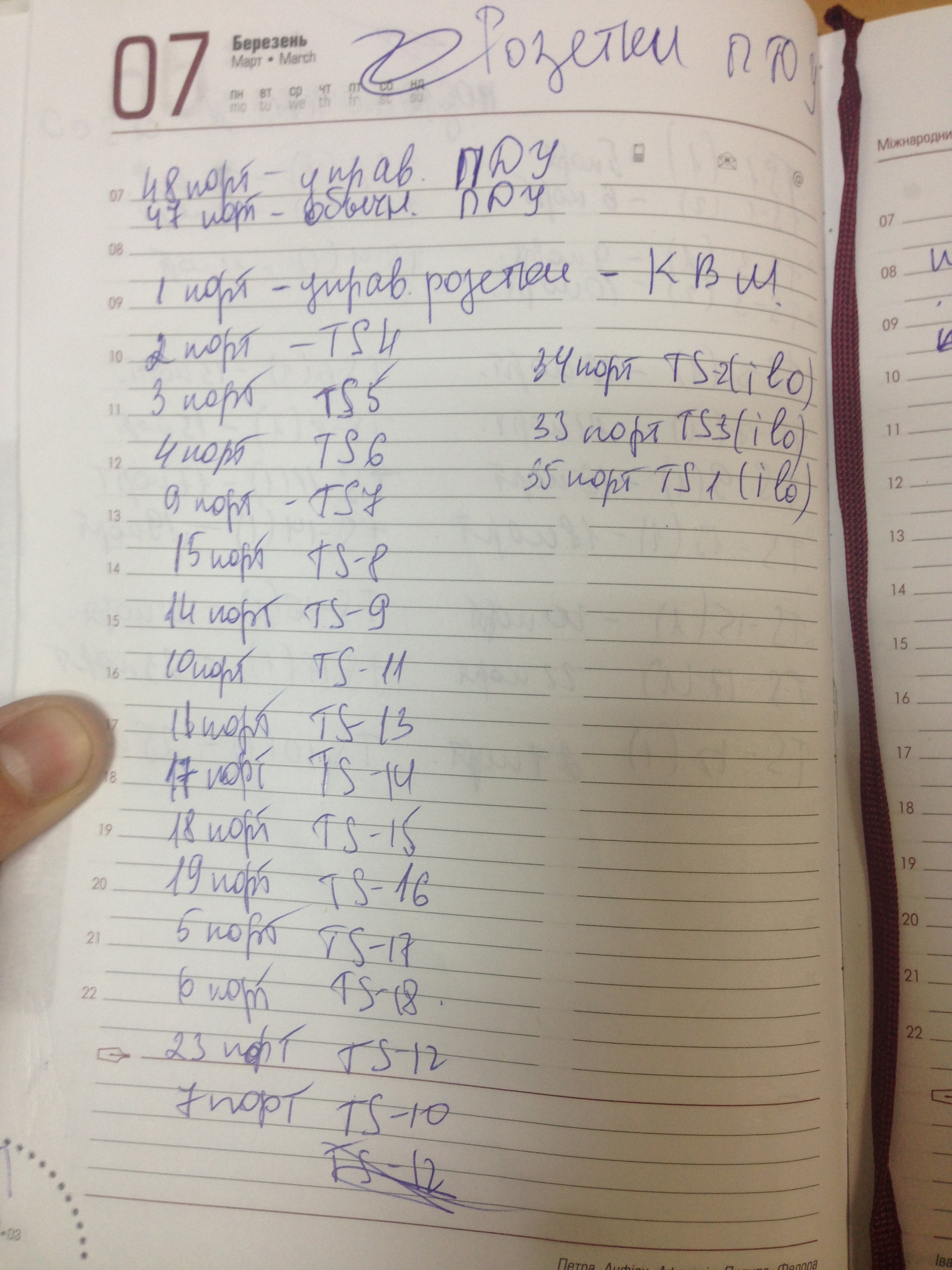

While the Cisco was being set up, we started an inventory of the equipment, the Cisco ports and the APC PDU outlets. This is a very important step; it has helped us more than once.
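An inventory like this does not need special software. The sketch below shows one possible shape for it; the device labels, port names and outlet numbers are invented for illustration and are not our real layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    device: str       # hypothetical device label
    switch_port: str  # hypothetical switch port name
    pdu_outlet: int   # hypothetical PDU outlet number


def find_conflicts(placements):
    """Return (kind, key, first_device, second_device) for each double booking."""
    seen = {"switch_port": {}, "pdu_outlet": {}}
    conflicts = []
    for p in placements:
        for kind in seen:
            key = getattr(p, kind)
            if key in seen[kind]:
                conflicts.append((kind, key, seen[kind][key], p.device))
            else:
                seen[kind][key] = p.device
    return conflicts


def devices_on_outlet(placements, outlet):
    """Answer the question: what loses power if this outlet is switched off?"""
    return [p.device for p in placements if p.pdu_outlet == outlet]


inventory = [
    Placement("srv-01", "Gi1/0/1", 1),
    Placement("srv-02", "Gi1/0/2", 2),
    Placement("ip-kvm", "Gi1/0/3", 2),  # deliberate mistake: outlet 2 reused
]

for conflict in find_conflicts(inventory):
    print(conflict)
```

Even a table this small answers the two questions that come up most often during an incident: which port a machine is plugged into, and which outlet to power-cycle on the managed PDU.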

It was already evening, 4 o'clock, maybe half past four... It seemed that the main work was done, but if only we had known how wrong we were. We started bringing up the BGP session (we had received the settings in advance), and nothing. The link was there, but it refused to work. The cause surfaced quickly and came down to our inexperience. We were being connected with a gigabit link over fiber. Our Cisco has 2x10G ports. We boldly plugged the fiber into one of them (this was actually my blunder: I had not consulted our admins, and they scolded me for a long time), and nothing happened. It turned out that a gigabit port and a 10-gigabit module will not work together. We urgently started calling the provider to ask for help, but it turned out that everyone had already gone home (a Friday before a three-day holiday weekend), and we were stuck.

One option was to connect everything through a media converter (fiber in, twisted pair out), which is what we did later, but we did not have one, the shops were all closed, and they would reopen only in 3 days. Through friends, I had to call the company's technical director and get him to pull an employee from home to pick up a 10G module at the office and install it on their side. Only then did our Cisco see the link properly, and everything worked (my blunder).
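The lesson from this mismatch can be written down as a pre-flight check. This is a deliberately simplified sketch: it assumes that each end of a fiber link is described by the set of rates its port and module combination can actually run, and that the link comes up only if the two sets share a rate. Which rates a given port accepts depends on the specific switch and transceiver, so the sets below are assumptions, not datasheet values.

```python
def common_rates(local_rates_gbps, remote_rates_gbps):
    """Return the rates (in Gbit/s) that both ends can run, sorted ascending."""
    return sorted(set(local_rates_gbps) & set(remote_rates_gbps))


def link_can_come_up(local_rates_gbps, remote_rates_gbps):
    """True if the two ends share at least one rate."""
    return bool(common_rates(local_rates_gbps, remote_rates_gbps))


our_port = {10}          # assumed: our 10G port with a 10G module
provider_before = {1}    # assumed: the provider's original gigabit side
provider_after = {10}    # assumed: after they installed a 10G module

print(link_can_come_up(our_port, provider_before))
print(link_can_come_up(our_port, provider_after))
```

Running a check like this against the contract and the hardware list before moving day, rather than on a Friday evening, would have saved us the phone calls.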

We finished all the work and waited for the records and routes to update so that everything would start working. It was already 8 in the evening and everyone was tired. We even had to call an ambulance: one of our people scratched his hand, and one of the guys we had brought in as muscle fainted at the sight of the blood, hit the threshold face-first and cut his chin.

At about 3-4 in the morning, traffic slowly began to pick up. Everything worked; we ran around fixing problems on the fly. But IPv6 did not work, not in the morning and not a day later... When we talked to the provider, it turned out that the manager had signed the contract (IPv6 included) without knowing that the provider physically had no IPv6 networks, let alone the ability to route them. It was a disaster. But thanks again to our contact with the provider's technical director, he found a way to “push” the traffic past them through a neighboring provider that had IPv6, and everything worked for us, though by then it was a little late: some clients had left us.

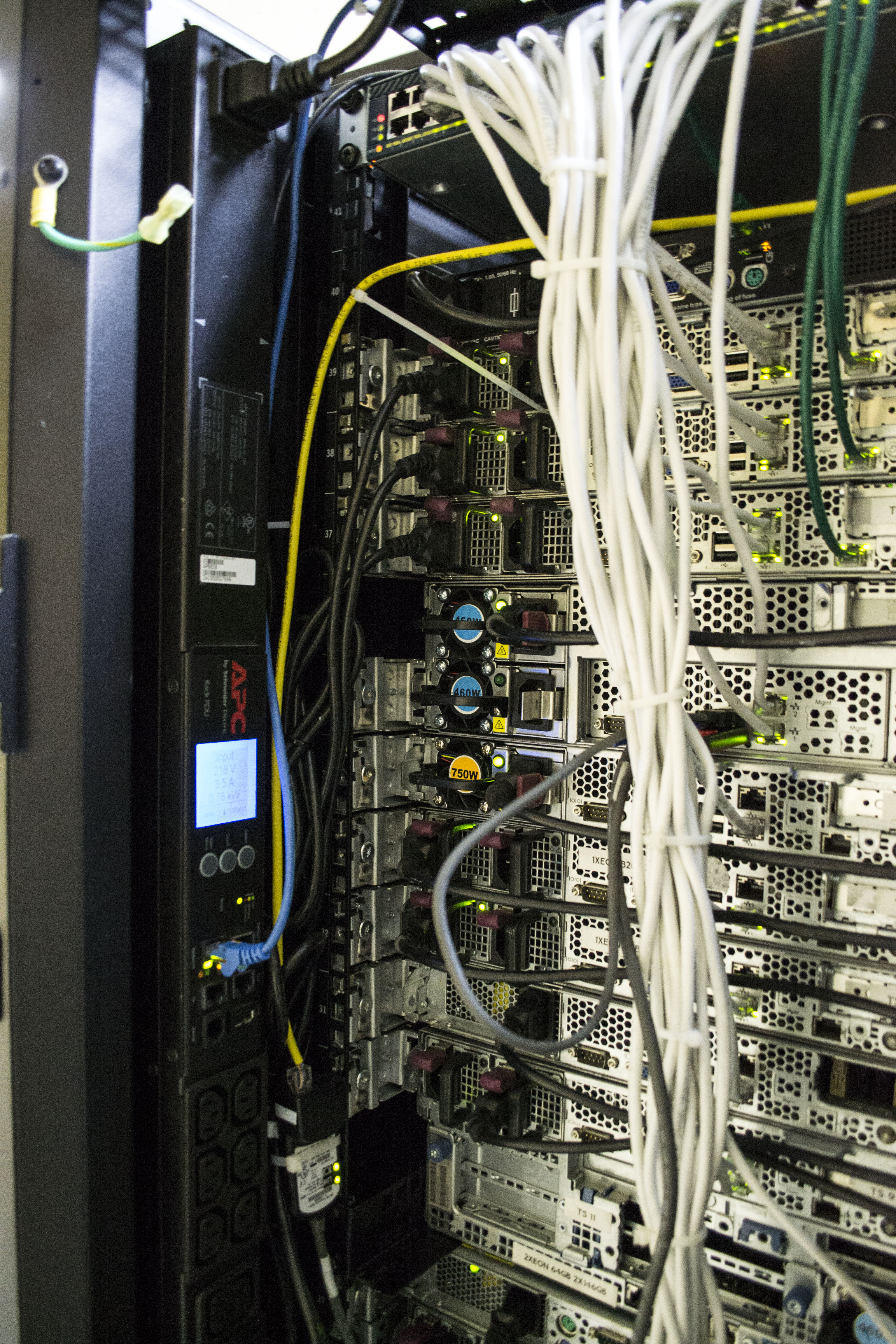

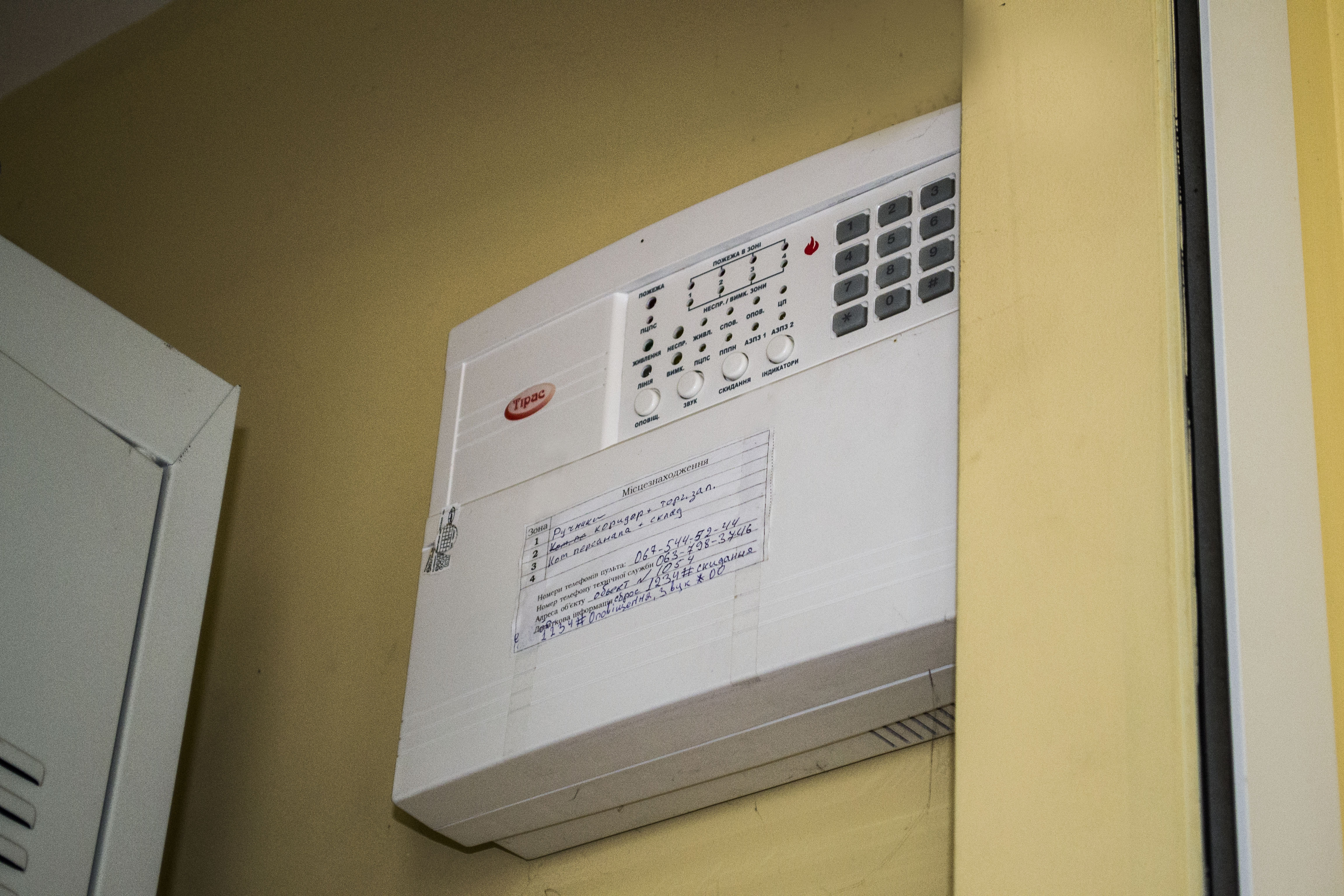

Upon completion of all the work, our data center looked like this:

That is the end of our move. As you can see, there were many small things we did not think of in advance, and we made a lot of mistakes that we paid for later. We sincerely hope this article helps others trying their luck at something similar to avoid our mistakes.

And, as always, a small bonus. We plan to write a separate article on the financial side of the project (perhaps without specific figures, but the comparison will make things clear). From it you will see what is cost-effective and what is not, where you can save, and much more.

We will also keep publishing articles on Habr about how our mini data center operates, the difficulties that come up, and our innovations. In one of the upcoming articles you will see how we selected "self-assembled" hardware for specific purposes and what we ultimately chose. Thanks for your attention!

Source: https://habr.com/ru/post/312870/
