Why do we spend $ 2 million a year at 99.99% accuracy in data processing at Dadat?

Have you ever wondered why it is generally possible to correct errors and typos in text data, for example, in addresses and names? Why do we think that “ Terskaya ” is, most likely, Tverskaya Street, and not some fantastic Vasiliyatorsky Street? And what if it is Komsomolsky Avenue, in which twenty typos were made?

Our life experience suggests that ordered low-entropic states are less likely than high-entropic unordered states. That is, “Terskaya” is more likely Tverskaya with one typographical error than Komsomolsky Prospect with twenty typos. However, in life there are many controversial cases where the probabilities are not so straightforward.

For example, " March 8 1 12 " is " 1st street March 8, house 12" or " March 8 street, house 1, apartment 12" ? Both addresses exist and correct, but which one corresponds to the original one?

Gray zone

When processing addresses, there are three groups of results:

- Good ones - there were no mistakes or they were definitely fixed.

- Bad ones - nothing can be done: there is a lack of data or an equiprobable ambiguity, as in the example of March 8;

- Incomprehensible - the errors were corrected, but at the same time they made some assumptions.

The front of the battle for quality is that there are as many green addresses as possible, but there are no red ones among them.

For spam mailing, it is usually enough for more spam to reach its addressees, while not sending emails to very obvious “black holes”. That is, it is important that the base after processing become better than it was. Or at least it did not get worse. This is perhaps the only case where the percentage of recognized data has an independent value.

In other cases, you need to quickly get a subset of "green" data, where there are absolutely no errors, and immediately start them into work: issue loans, send goods, use for scoring, and write insurance policies. Got 50% of the green data? Bad, but already valuable for business. 80%? Super, the amount of handmade reduced by 5 times! 95%?

And with the rest of the "gray" can be sorted out later. Paranoidly bringing the entire database up to 100% usually does not make much sense from a business point of view, therefore, valuable and current customers are corrected forcibly (usually involving manual labor of employees or raising primary documentation). And the rest remain in the “gray zone” until the client himself contacts the company.

Red in green skin

But this all makes sense only when our "green" - really green . Such that we believe them as ourselves and even more. When they do not need to check.

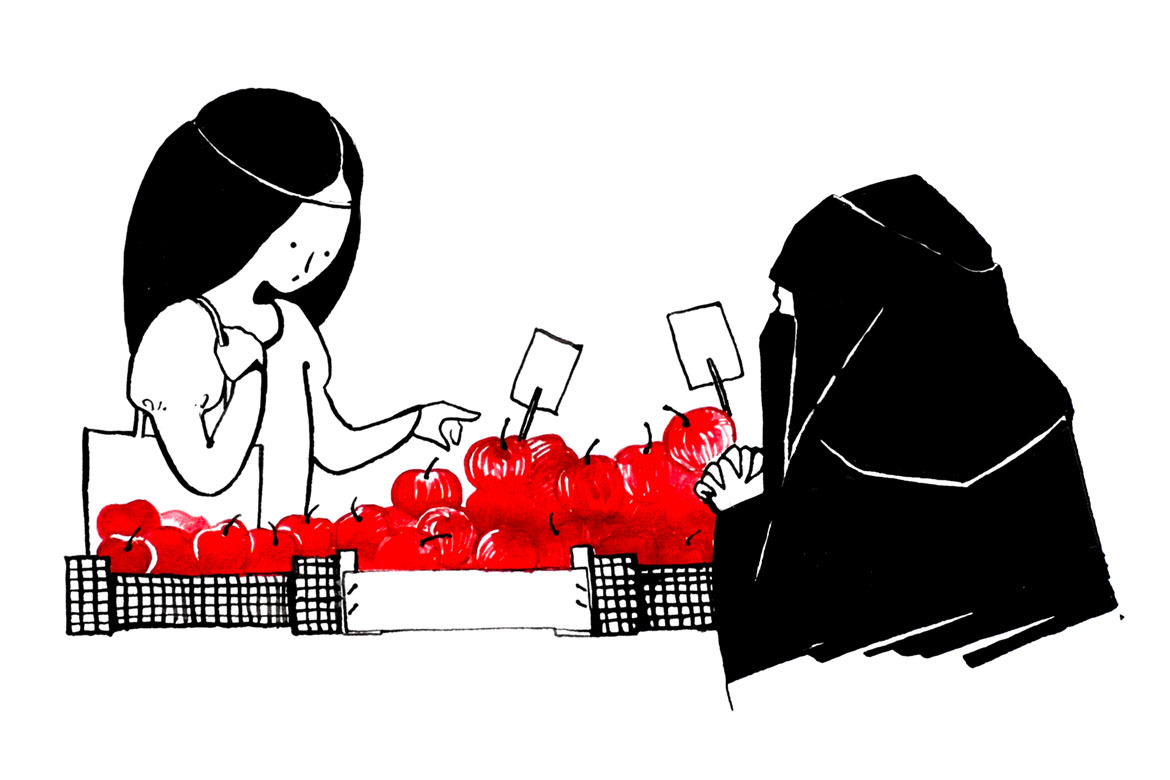

Suppose we have a basket with two dozen apples. We know that among them are poisoned. Bite - die. But how many of them - is unknown. Maybe everyone is poisoned, and maybe not one.

The consumer value of such a basket is close to zero. An intelligent person will decide to take at least one apple, only if starvation is the alternative.

Now suppose that in 5 out of 20 apples a plate is stuck "this apple is definitely not poisoned." Apples are the same, only there are also signs. Now another thing. Yes, 5 apples out of 20 is a bit, but you can eat them. And if they are not 5, but 15? Values are three times more!

And if there will be 19 of them, but on the tablet it will not be “definitely not poisoned”, but “95%, which is not poisoned”? Take the risk? It is obvious that the use value of such a basket drops sharply.

These labels in the analysis of semistructured data are called quality codes. In fact, these are the same tablets: "definitely not poisoned," "not during pregnancy and lactation," "potassium cyanide, 100 mg."

Break to fix

Ten years ago we already had excellent algorithms in “Dadat” that corrected even very complex addresses: with typos, disturbed order, with renames and reassignments.

Each transformation was well explained in the logic of the program, but from the point of view of a person there were many savage instances when the resulting cleared address was completely different from the original one. In fact, any kind of abracadabra, even if it is not a valid 8g2h98oaz9, can be an address with a lot of typos. But this is the logic of the program, and not a person with common sense.

Therefore, we have added the process of validating the parsed data. Validation specifically spoils good data and makes bad data. For validation, completely different algorithms are used than for parsing - they try to distort the parsed data so that the original address is obtained again. Thus several things are checked at once:

- Was there a parsing error?

- Is the resulting address the same as the original address, or the algorithms have imagined something?

- Does the quality code reflect what happened to the address?

The data validated in this way are very green. For validated data, we achieved an accuracy of 99.99%, or no more than 1 error per 10,000 records. Such accuracy is guaranteed daily updated tests from tens of millions of examples. This is a superhuman level of quality. Brilliant victory of the human mind over itself!

The main thing here is “daily updated tests of tens of millions of examples.” Creating tests is not a one-time task, but an ongoing process, as reference books change, and life brings new cases. Without tests, it is impossible to guarantee that the “green” data is really “green”, and without this, for most real-world applications, everything loses its meaning.

The magic of "nines"

The difference between the quality of 99.99% and 99.98% is two times. In the first case, 1 error per 10,000, and in the second - 2 errors per 10,000 records.

Achievement and continuous maintenance of each guaranteed "nine" is more expensive than all the previous "nine" together. These are not only significantly more complex algorithms, but also significantly more extensive tests that should representatively reflect different cases in real data and different branches of these more complex algorithms.

If 90% can be guaranteed with a representative test of 10 thousand test pairs (source address and expected cleared), then for 99% you will need about 100 thousand, and for 99.99% - at least 10 million tests. Not random records, namely, tests, the representativeness of each case in which reflects the occurrence of the problem in real data.

Since it is extremely difficult to achieve a distribution of occurrences on such volumes, it is necessary to use even larger volumes in order to meet everything. As a result, the minimum body of tests for Russian addresses is approximately 50 million test pairs, which are updated by specialists a few percent a year. This means that tens and hundreds of thousands of addresses are manually checked monthly. Gradually, the processes are being debugged and the maintenance of such a building decreases, but it is hardly worth counting on in the first years.

The accuracy of " Dadaty " costs us about two million dollars a year.

The development of parsers is quite possible for talented enthusiasts and small teams, but high and guaranteed quality indicators on arbitrary data are practically unattainable. For the same reasons, the transfer of technology from one country and language to another is practically equivalent to developing from scratch, since the lion's share of time and expenses are qualitative tests, not algorithms.

findings

The described phenomena are characteristic not only for addresses, but also for any results of work with semi-structured data used in real business. For deep machine learning, big data, for text, voice, pictures and video - everywhere there will be these patterns:

- Accurate quality codes are more important than data processing accuracy.

- Quality codes should be assigned to the wrong algorithm that processes the data.

- Accuracy is unattainable without good tests.

- Good tests for a long time to create and expensive to maintain.

')

Source: https://habr.com/ru/post/312858/

All Articles