Scraping pages with infinite scrolling

Welcome to Scrapy Tips from the Pros! This month we'll share a few tricks to help speed up your web scraping work. As the lead maintainers of Scrapy, we have run into every obstacle you can imagine, so don't worry: you are in good hands. Feel free to reach out to us on Twitter or Facebook with suggestions for future articles.

In the era of single-page apps and pages that fire off tons of AJAX requests, many websites have replaced "previous/next" pagination buttons with a fancy infinite scrolling mechanism. Websites using this mechanism load new content each time the user reaches the bottom of the page while scrolling (think of Twitter, Facebook, or Google Images). Even though UX experts maintain that infinite scrolling overwhelms users with data, we see an increasing number of web pages presenting an infinite list of results.

One of the first things we do when developing a web scraper is look for UI components on the site that link to the next page of results. Unfortunately, pages with infinite scrolling have no such links.

Although this scenario may seem like a classic case for JavaScript rendering or browser automation tools such as Splash or Selenium, it is actually easy to handle without them. Instead of simulating user interaction with one of those tools, all you need to do is examine the AJAX requests your browser makes while you scroll the page and then recreate those requests in your Scrapy spider.

Let's use Spidy Quotes as an example and build a spider that collects all the items listed on the page.

Page inspection

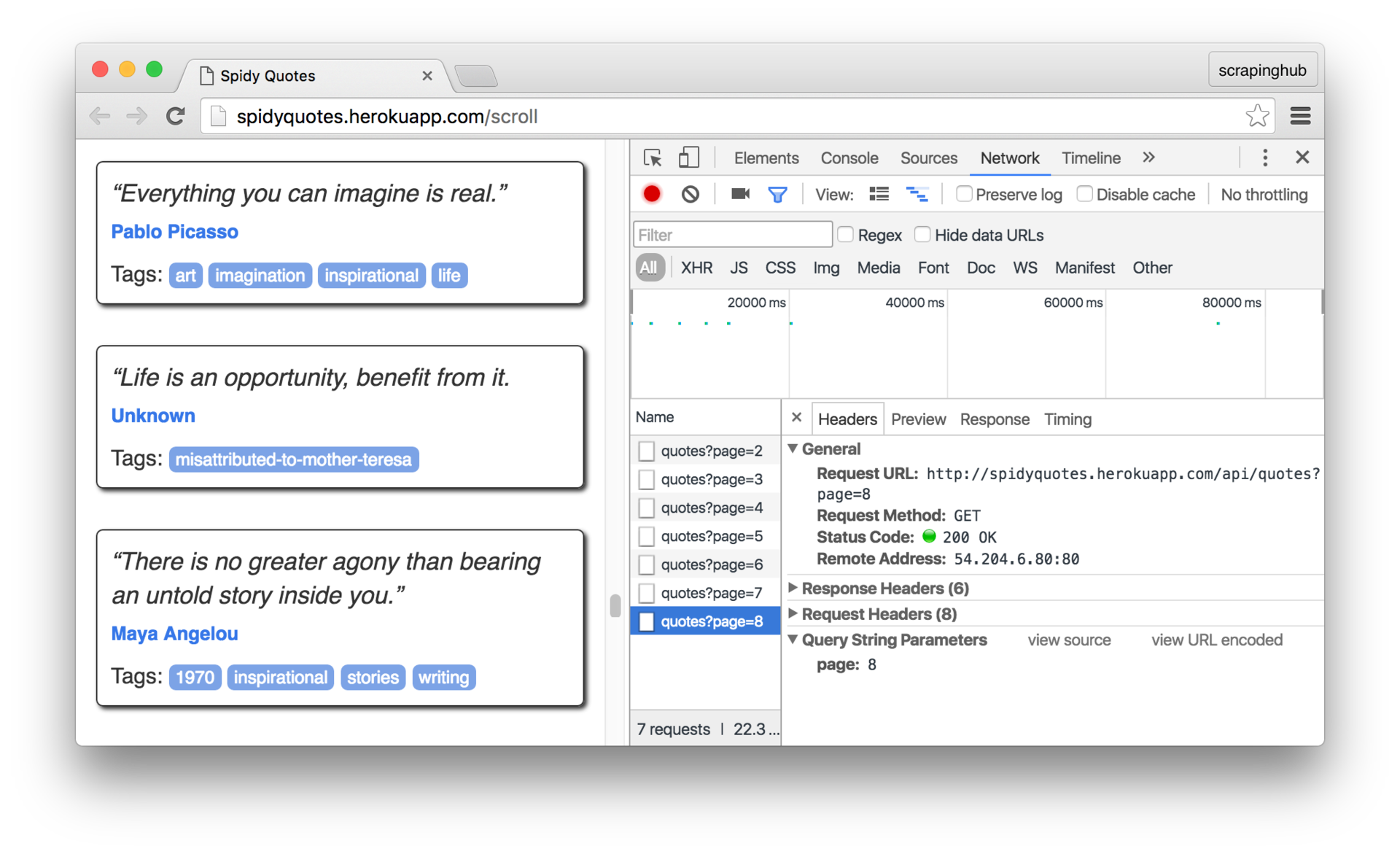

First of all, we need to understand how endless scrolling on the page works. And we can do this using the Network panel in the developer tools in the browser. Open the panel and scroll the page to see the requests sent by the browser:

Click on a request to view it in more detail. As we can see, the browser requests /api/quotes?page=x and receives a JSON object like this in response:

    {
        "has_next": true,
        "page": 8,
        "quotes": [
            {
                "author": {
                    "goodreads_link": "/author/show/1244.Mark_Twain",
                    "name": "Mark Twain"
                },
                "tags": ["individuality", "majority", "minority", "wisdom"],
                "text": "Whenever you find yourself on the side of the ..."
            },
            {
                "author": {
                    "goodreads_link": "/author/show/1244.Mark_Twain",
                    "name": "Mark Twain"
                },
                "tags": ["books", "contentment", "friends"],
                "text": "Good friends, good books, and a sleepy ..."
            }
        ],
        "tag": null,
        "top_ten_tags": [["love", 49], ["inspirational", 43], ...]
    }

This is all the information our spider needs. It only has to generate requests for /api/quotes?page=x, incrementing x until the has_next field becomes false. The great thing is that we don't even have to scrape the HTML to get the data we need: it all comes in a nice machine-readable JSON format.
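The paging rule described here (keep requesting the next page until has_next is false) can be sketched independently of Scrapy. This is only an illustration under stated assumptions: `FAKE_PAGES`, `fake_fetch`, and `iter_quotes` are hypothetical names, and the canned payloads merely imitate the shape of the /api/quotes?page=x response; no real request is made.

```python
from typing import Callable, Iterator

# Hypothetical stand-in for the server: three canned payloads shaped like the
# /api/quotes?page=x response (only the fields the loop needs).
FAKE_PAGES = {
    1: {"has_next": True, "page": 1, "quotes": [{"text": "quote A"}]},
    2: {"has_next": True, "page": 2, "quotes": [{"text": "quote B"}]},
    3: {"has_next": False, "page": 3, "quotes": [{"text": "quote C"}]},
}


def fake_fetch(page: int) -> dict:
    """Return the canned payload for a page (a real fetch would do an HTTP GET)."""
    return FAKE_PAGES[page]


def iter_quotes(fetch: Callable[[int], dict], first_page: int = 1) -> Iterator[dict]:
    """Yield quotes page by page until a payload reports has_next as false."""
    page = first_page
    while True:
        data = fetch(page)
        yield from data.get("quotes", [])
        if not data.get("has_next"):
            break
        # Derive the next page number from the payload itself.
        page = data["page"] + 1


texts = [quote["text"] for quote in iter_quotes(fake_fetch)]
print(texts)  # ['quote A', 'quote B', 'quote C']
```

Passing the fetch function in as a parameter keeps the loop testable offline; in a real spider, Scrapy's scheduler plays that role.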

Building a spider

Here is our spider. It extracts the target data from the JSON content returned by the server. This approach is simpler and more robust than digging through the page's HTML tree and hoping that markup changes won't break our spider.

    import json

    import scrapy


    class SpidyQuotesSpider(scrapy.Spider):
        name = 'spidyquotes'
        quotes_base_url = 'http://spidyquotes.herokuapp.com/api/quotes?page=%s'
        start_urls = [quotes_base_url % 1]
        download_delay = 1.5

        def parse(self, response):
            # The API returns JSON, so there is no HTML to parse.
            data = json.loads(response.text)
            for item in data.get('quotes', []):
                yield {
                    'text': item.get('text'),
                    'author': item.get('author', {}).get('name'),
                    'tags': item.get('tags'),
                }
            # Keep requesting pages until the API reports there are no more.
            if data['has_next']:
                next_page = data['page'] + 1
                yield scrapy.Request(self.quotes_base_url % next_page)

If you want to put this knowledge into practice, try building a spider for our blog, which also uses infinite scrolling to load older posts.
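The extraction and paging logic in `parse` can also be checked without Scrapy or network access. The following is a minimal sketch, assuming a hypothetical helper named `extract_items` (not part of the spider) that mirrors what `parse` does, run against a trimmed copy of the sample response shown earlier. The quote texts are truncated in the article, so they stay truncated here.

```python
import json

# Trimmed copy of the sample /api/quotes response from the article.
SAMPLE = """
{"has_next": true, "page": 8, "quotes": [
  {"author": {"goodreads_link": "/author/show/1244.Mark_Twain", "name": "Mark Twain"},
   "tags": ["individuality", "majority", "minority", "wisdom"],
   "text": "Whenever you find yourself on the side of the ..."},
  {"author": {"goodreads_link": "/author/show/1244.Mark_Twain", "name": "Mark Twain"},
   "tags": ["books", "contentment", "friends"],
   "text": "Good friends, good books, and a sleepy ..."}
]}
"""


def extract_items(payload: str):
    """Hypothetical helper: return (items, next_page) for one JSON payload.

    next_page is None when the payload says there are no more pages.
    """
    data = json.loads(payload)
    items = [
        {
            "text": item.get("text"),
            "author": item.get("author", {}).get("name"),
            "tags": item.get("tags"),
        }
        for item in data.get("quotes", [])
    ]
    next_page = data["page"] + 1 if data.get("has_next") else None
    return items, next_page


items, next_page = extract_items(SAMPLE)
print(len(items), items[0]["author"], next_page)  # 2 Mark Twain 9
```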

Conclusion

If the prospect of scraping websites with infinite scrolling felt discouraging, we hope you now feel more confident. The next time you deal with a page that makes AJAX calls in response to user actions, check which requests your browser sends and replay them in your spider. The response is usually in JSON format, which makes your spider much simpler.


Source: https://habr.com/ru/post/312816/
