How neural networks are used today: from research projects to entertainment services

In the 1950s and 1960s, a new branch of computer science emerged: artificial intelligence (AI). More than half a century later, engineers are still developing natural language processing and machine learning in pursuit of strong AI.

At 1cloud, our blog covers not only our own work (customer focus, security) but also broader topics such as mental models and DNA-based storage systems.

Today we look at how machine learning is being applied: why neural networks appeal to physicists, how YouTube's recommendation algorithms work, and whether machine learning can help “reprogram” diseases.


/ Zufzzi / Wikimedia / CC0

The universe only seems immense

There are many orders of magnitude more possible mathematical functions than there are networks that could approximate them, yet deep neural networks somehow find the right answer. Henry Lin of Harvard and Max Tegmark of the Massachusetts Institute of Technology set out to understand why.

The researchers concluded that the Universe is governed by only a tiny fraction of all possible functions: the laws of physics can be described by functions with a small set of simple properties. That is why deep neural networks do not need to approximate every possible mathematical function, only a small subset of them.

“For reasons that are still not fully understood, our Universe can be accurately described by low-order polynomial Hamiltonians,” Lin and Tegmark write. The polynomials that describe physical laws typically have degrees from 2 to 4. Lin and Tegmark therefore argue that deep learning is especially well suited to building “physical” models.
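Lin and Tegmark make the low-order-polynomial argument concrete: multiplying two numbers, the building block of any polynomial, can be approximated by just four neurons with a smooth nonlinearity. The Python sketch below reproduces that idea under our own assumptions: the softplus activation, the small input scale `lam`, and the helper name `four_neuron_product` are illustrative choices, not taken from the article.

```python
import math

def softplus(z: float) -> float:
    """Smooth activation; its second derivative at 0 is 1/4 (nonzero, as required)."""
    return math.log1p(math.exp(z))

# softplus''(0) = sigmoid(0) * (1 - sigmoid(0)) = 0.25
SOFTPLUS_CURVATURE_AT_ZERO = 0.25

def four_neuron_product(x: float, y: float, lam: float = 1e-3) -> float:
    """Approximate x * y with four softplus neurons and a linear readout (illustrative)."""
    u, v = lam * x, lam * y  # small input weights keep the Taylor expansion accurate
    hidden = (
        softplus(u + v)
        + softplus(-u - v)
        - softplus(u - v)
        - softplus(-u + v)
    )
    # Constant and linear terms cancel; the leading surviving term is
    # 4 * softplus''(0) * u * v, so dividing by it (and by lam^2) recovers x * y.
    return hidden / (4 * SOFTPLUS_CURVATURE_AT_ZERO * lam * lam)

print(four_neuron_product(3.0, 4.0))   # close to 12
print(four_neuron_product(-2.5, 1.5))  # close to -3.75
```

The approximation error shrinks as `lam` gets smaller, until floating-point round-off starts to dominate.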

The laws of physics have several other important properties. For example, they are usually symmetric under rotation and translation. These symmetries also make the job of neural networks easier, for instance when approximating the process of object recognition: instead of an infinite number of possible mathematical functions, a network only has to approximate a small number of the simplest ones.
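The article does not name a specific architecture, but one common way networks exploit translation symmetry is the convolutional layer, which reuses the same small set of weights at every position. The minimal Python sketch below (function names, signal, and kernel are made up for illustration) checks the resulting property: shifting the input simply shifts the output, so the network does not have to relearn the same pattern at every location.

```python
def circular_conv1d(signal, kernel):
    """Slide the same small kernel over every position, wrapping around the edges."""
    n = len(signal)
    return [
        sum(w * signal[(i + j) % n] for j, w in enumerate(kernel))
        for i in range(n)
    ]

def shift(values, steps):
    """Cyclically shift a list to the right by `steps` positions."""
    steps %= len(values)
    return values[-steps:] + values[:-steps] if steps else list(values)

signal = [0, 1, 3, 1, 0, 0, 0, 0]
kernel = [1, -2, 1]  # 3 shared weights, versus 8 * 8 = 64 for a dense layer on 8 inputs

# Translation equivariance: shift-then-convolve equals convolve-then-shift.
assert circular_conv1d(shift(signal, 3), kernel) == shift(circular_conv1d(signal, kernel), 3)
```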

Another property of the Universe that neural networks can exploit is its hierarchical structure. Because artificial neural networks are often modeled on biological ones, the researchers' ideas explain not only why deep learning works so well but also why the human brain is able to make sense of the Universe: evolution has produced a brain whose structure is well suited to breaking a complex Universe down into understandable components.

The Harvard and MIT work could significantly accelerate the development of artificial intelligence. Mathematicians can use it to deepen the analytical understanding of how neural networks work, while physicists and biologists can explore how natural phenomena, neural networks, and the brain are interrelated, which could take work with AI to a new level.

Identification from images

Recently, Richard McPherson, Reza Shokri, and Vitaly Shmatikov of the University of Texas at Austin and Cornell University published a paper describing an image recognition method based on artificial neural networks. With it, a person can be identified against a database even in images that have been deliberately protected.

The experiment covered several ways of protecting (obfuscating) faces: pixelation, blurring, and the newer P3 method (Privacy-Preserving Photo Sharing), which separates the information in a JPEG image that is most valuable for recognition from the rest and encrypts it. The researchers used neural networks to recognize images in four datasets that are commonly used to benchmark face, object, and handwritten-digit recognition algorithms.
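The article describes P3 only briefly. As we understand the original P3 design, it works on a JPEG's quantized DCT coefficients: the DC term, plus the part of each large AC coefficient that exceeds a threshold, goes into an encrypted “secret” part, while the clipped remainder stays public. The Python sketch below shows only that threshold split for a single block, under those assumptions; `p3_split` and the sample block are hypothetical, and the real scheme also encrypts and re-encodes both parts. As we read it, the `threshold` corresponds to the P3 “level” mentioned in the results.

```python
def p3_split(coefficients, threshold):
    """Split one block of quantized DCT coefficients into public and secret parts.

    Simplified sketch of a P3-style threshold split (our assumption):
    coefficients[0] is the DC term, the rest are AC terms.
    """
    public, secret = [0], [coefficients[0]]  # DC term goes entirely to the secret part
    for c in coefficients[1:]:
        if abs(c) <= threshold:
            public.append(c)   # small coefficients stay public unchanged
            secret.append(0)
        else:
            clipped = threshold if c > 0 else -threshold
            public.append(clipped)      # public part keeps only the clipped value
            secret.append(c - clipped)  # secret part stores the excess magnitude
    return public, secret

block = [512, 35, -8, 20, -44, 3, 0, 1]
public, secret = p3_split(block, threshold=20)
print(public)  # [0, 20, -8, 20, -20, 3, 0, 1]
print(secret)  # [512, 15, 0, 0, -24, 0, 0, 0]

# Whoever holds both parts recovers the original block by adding them.
assert [p + s for p, s in zip(public, secret)] == block
```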

On MNIST, a dataset of black-and-white handwritten digits, the network recognized digits with about 80% accuracy on images protected by P3 at level 20 (the value the P3 authors recommend as the most appropriate). On pixelated (mosaic) images with blocks of up to 8 × 8 pixels, recognition accuracy exceeded 80%. Random guessing would be right only 10% of the time.
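Mosaic obfuscation replaces every block of pixels with a single averaged value, so larger blocks discard more detail. A minimal Python sketch on a tiny grayscale image follows; the function name and the data are illustrative, and real tools may average per color channel or sample pixels differently.

```python
def pixelate(image, block):
    """Mosaic a grayscale image (list of rows) by averaging each block x block tile."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[r][c] for r in rows for c in cols]
            mean = sum(tile) / len(tile)
            # Every pixel in the tile is replaced by the tile's average value.
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out

image = [[0, 0, 8, 8],
         [0, 4, 8, 8],
         [2, 2, 6, 6],
         [2, 2, 6, 2]]
print(pixelate(image, 2))
# [[1.0, 1.0, 8.0, 8.0], [1.0, 1.0, 8.0, 8.0], [2.0, 2.0, 5.0, 5.0], [2.0, 2.0, 5.0, 5.0]]
```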

On CIFAR-10, a dataset of color images of vehicles and animals, the researchers achieved 75% accuracy with P3 protection, 70% with 4 × 4 pixelation, and 50% with 8 × 8 blocks. Random guessing is again only 10% accurate here.

On the AT&T dataset of black-and-white photos of 40 people, they achieved 57% accuracy with blurring, 97% with P3 protection, and more than 95% with pixelation. Random guessing here would give 2.5%.

On FaceScrub, a dataset of photos of 530 celebrities, the researchers achieved 53% recognition accuracy with 16 × 16 pixelation and 40% with P3 protection. Random guessing would give only 0.19%.
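The random-guessing baselines quoted above follow directly from the number of classes: guessing uniformly among k classes is right 1/k of the time. A short Python check, using only the class counts and the FaceScrub P3 figure given in the text (the helper name is ours):

```python
def chance_accuracy_percent(num_classes: int) -> float:
    """Expected accuracy, in percent, of guessing uniformly at random among num_classes."""
    return 100 / num_classes

# Number of classes in each dataset, as described in the text.
datasets = {"MNIST": 10, "CIFAR-10": 10, "AT&T": 40, "FaceScrub": 530}

for name, classes in datasets.items():
    print(f"{name}: {chance_accuracy_percent(classes):.2f}%")
# MNIST: 10.00%, CIFAR-10: 10.00%, AT&T: 2.50%, FaceScrub: 0.19%

# FaceScrub under P3: the reported 40% accuracy relative to the chance baseline.
print(f"{40 / chance_accuracy_percent(530):.0f}x better than chance")  # 212x
```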

The main reason the method works under such difficult conditions is that it does not need to be told in advance which features identify a person, nor does it need to understand what the partially encrypted or obscured images conceal.

Instead, the neural networks automatically identify the relevant elements and learn to exploit correlations between hidden and visible information (for example, between the significant and “minor” parts of a JPEG image representation). This is one example of neural networks learning to perform tasks at which, for a long time, humans were far more successful than machines.

New YouTube recommendation system

Google developers Paul Covington, Jay Adams, and Emre Sargin have described how YouTube's recommendation system, built on deep neural networks, works.

Like other Google products, YouTube has begun moving to deep neural networks for most of its learning problems. The recommendation system is built on Google Brain, which was recently open-sourced as TensorFlow.

This technology provides a flexible environment for experimenting with different deep neural network architectures and for large-scale distributed training. The models developed by Google engineers learn approximately one billion parameters and are trained on hundreds of billions of examples.

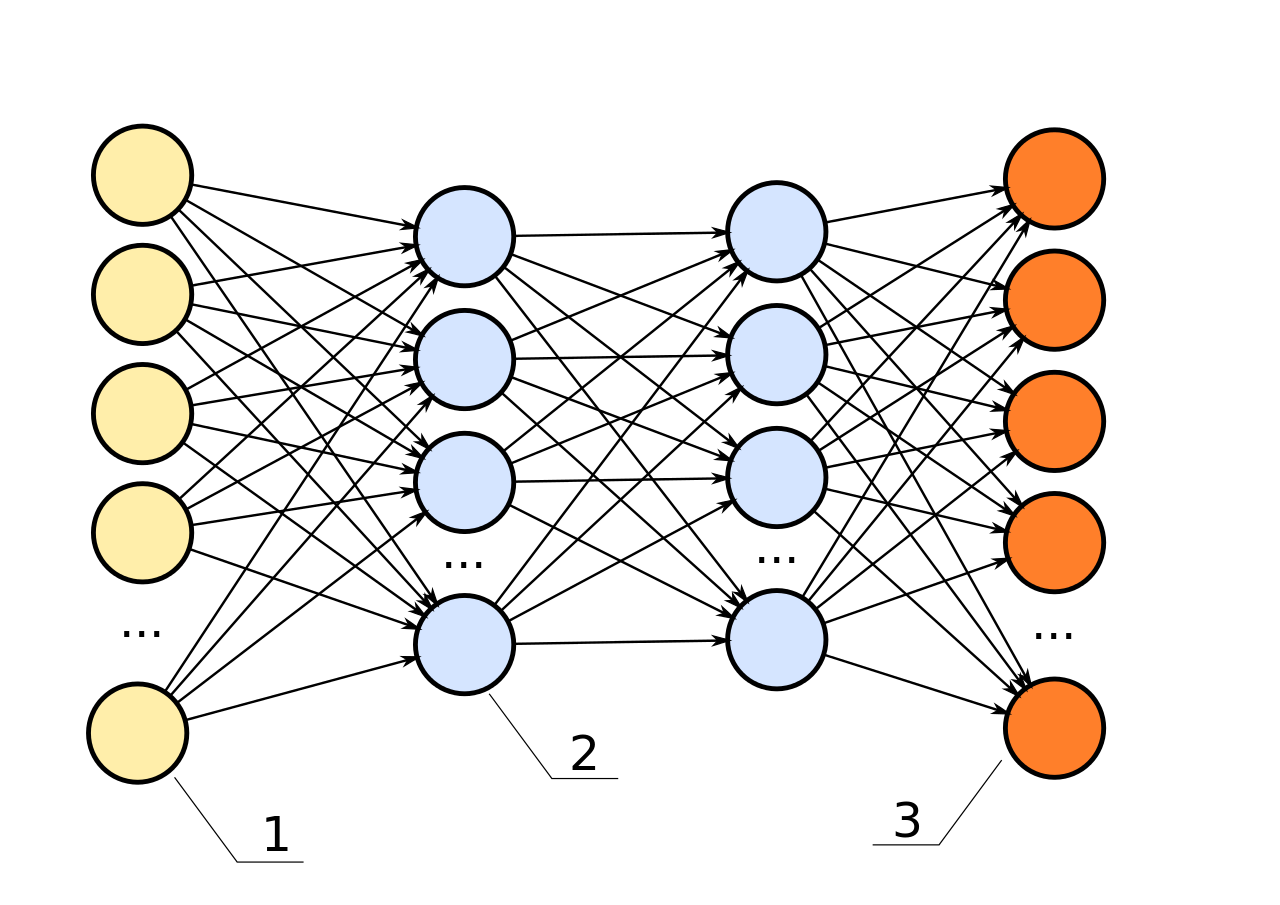

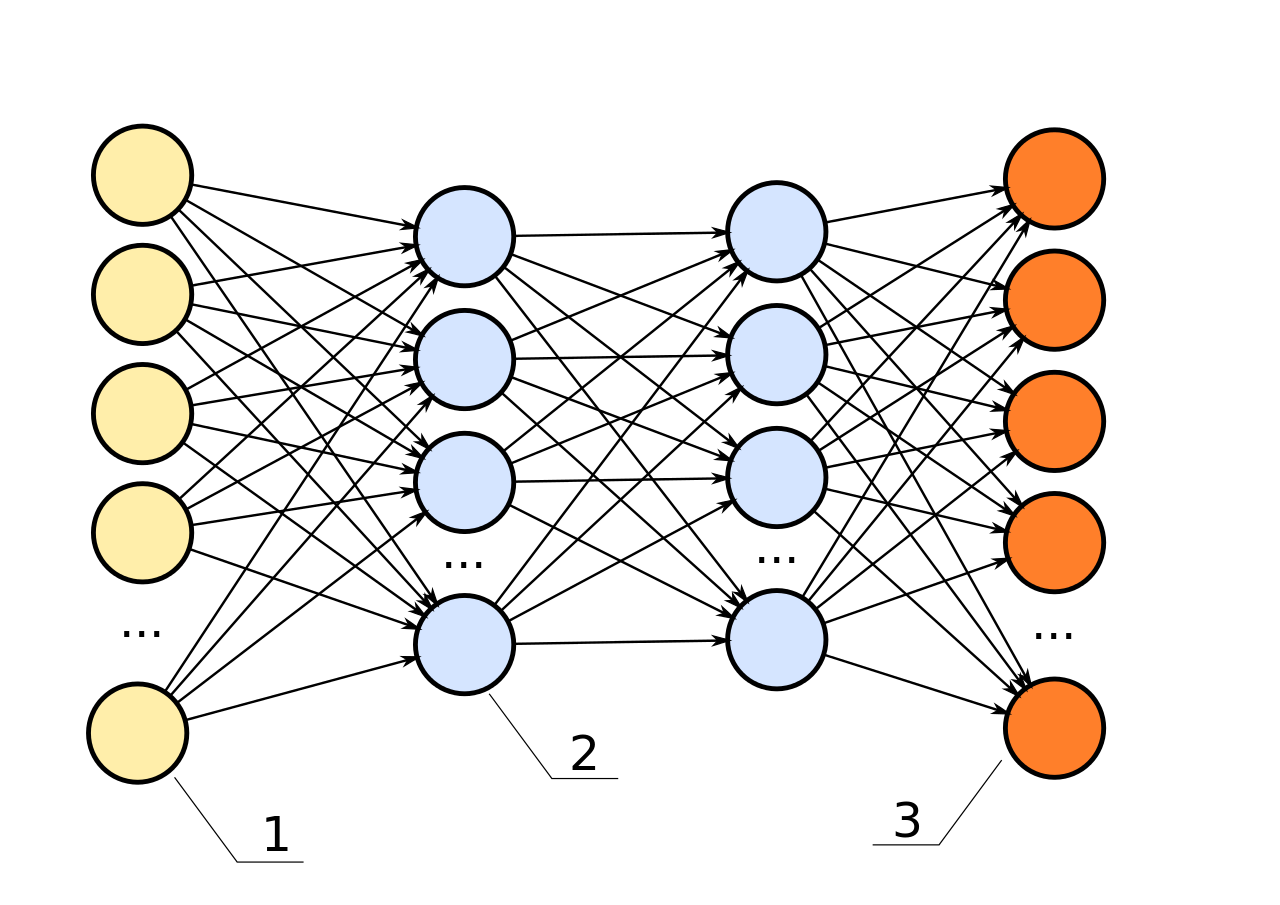

The system consists of two neural networks: one generates “candidates” [for viewing], and the other ranks them. The candidate generation network takes events from the user's YouTube activity history as input and retrieves a small subset of a few hundred videos from a huge corpus.

In effect, the candidate generation network selects videos watched by users with similar interests. Similarity between users is determined by how many of the same videos they watched (matched by video ID), by their search queries, and by demographic data (age, gender, and so on).
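The intuition of “users who watched the same videos” can be illustrated with a deliberately simple, non-neural Python sketch. It measures overlap with the Jaccard index over video IDs and pools unseen videos from the most similar users. This shows the intuition only, not YouTube's method: the function names, the similarity measure, and the data are our own assumptions, and the real system also feeds search queries and demographics into a neural network.

```python
def jaccard(a: set, b: set) -> float:
    """Share of videos two users have in common, relative to all videos either watched."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def candidate_videos(target, histories, top_users=2):
    """Collect unseen videos watched by the users most similar to `target` (illustrative)."""
    seen = histories[target]
    neighbours = sorted(
        (u for u in histories if u != target),
        key=lambda u: jaccard(seen, histories[u]),
        reverse=True,
    )[:top_users]
    candidates = set()
    for u in neighbours:
        candidates |= histories[u] - seen
    return candidates

# Hypothetical watch histories keyed by user, values are sets of video IDs.
histories = {
    "alice": {"v1", "v2", "v3"},
    "bob": {"v2", "v3", "v4"},
    "carol": {"v1", "v3", "v5"},
    "dave": {"v7", "v8"},
}
print(sorted(candidate_videos("alice", histories)))  # ['v4', 'v5']
```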

During candidate generation, the huge YouTube corpus is “sifted” down to hundreds of videos that may interest the user. Matrix factorization was previously used for this, and the first versions of the neural model imitated it with shallow networks that used only the user's previously watched videos. Google engineers describe their approach as a non-linear generalization of factorization techniques.
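Why “a generalization of factorization”? In a factorization-style model, each user and each video is a vector, and relevance is their dot product. The Python sketch below builds the user vector by simply averaging the embeddings of watched videos, then keeps the top-scoring unwatched videos. All names, the toy embeddings, and `k` are hypothetical. As we understand the Google paper, the real network feeds averaged embeddings like these, together with other features, through several non-linear layers to produce the user vector.

```python
def average(vectors):
    """Element-wise mean of equally sized vectors."""
    return [sum(xs) / len(vectors) for xs in zip(*vectors)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def top_k_candidates(watched, video_embeddings, k=2):
    """Score every unwatched video by its dot product with the averaged watch-history vector."""
    user_vector = average([video_embeddings[v] for v in watched])
    scores = {
        v: dot(user_vector, emb)
        for v, emb in video_embeddings.items()
        if v not in watched
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Toy 2-D embeddings (hypothetical); in a real system they are learned during training.
video_embeddings = {
    "cooking_1": [0.9, 0.1],
    "cooking_2": [0.8, 0.2],
    "cooking_3": [0.7, 0.0],
    "gaming_1": [0.1, 0.9],
    "gaming_2": [0.0, 0.8],
}
print(top_k_candidates({"cooking_1", "cooking_2"}, video_embeddings))  # ['cooking_3', 'gaming_1']
```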

Although YouTube collects explicit feedback (likes and dislikes, surveys, and so on), the developers train the neural network on implicit [user-system] interaction, namely views: a video watched to the end counts as a positive example, and a video that was shown in the results but not watched counts as a negative one. Choices at this stage can draw on a much more extensive user history, which helps produce more accurate recommendations when there is not enough explicit feedback.
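The labeling rule described above can be sketched in a few lines of Python. The `Impression` record, the field names, and the choice to skip partially watched videos are our own assumptions; the article only states the two end cases.

```python
from dataclasses import dataclass

@dataclass
class Impression:
    """One video shown to a user, as it might appear in a hypothetical log."""
    user: str
    video: str
    watched_fraction: float  # 0.0 = never started, 1.0 = watched to the end

def label_impressions(impressions, completion_threshold=1.0):
    """Turn implicit feedback into training labels: 1 = positive, 0 = negative.

    Watched to the end -> positive; shown but not watched -> negative.
    Partial watches are skipped (an assumption; the text does not cover them).
    """
    examples = []
    for imp in impressions:
        if imp.watched_fraction >= completion_threshold:
            examples.append((imp.user, imp.video, 1))
        elif imp.watched_fraction == 0.0:
            examples.append((imp.user, imp.video, 0))
    return examples

log = [
    Impression("alice", "v1", 1.0),
    Impression("alice", "v2", 0.0),
    Impression("alice", "v3", 0.4),
]
print(label_impressions(log))  # [('alice', 'v1', 1), ('alice', 'v2', 0)]
```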

To show the user a short list of the “best” recommendations, the candidates must be ordered precisely by their relative importance to that user. The ranking network does this by assigning each video a score based on a rich set of features describing the video and the user. The highest-scoring videos are ranked and shown to the user. The two-stage approach lets the developers work with millions of videos while being confident that the set selected for each user is personalized and interesting.
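The ranking stage can be sketched as scoring each candidate from its features and sorting. In the Python example below, a logistic model with hand-picked weights stands in for the ranking network; the feature names, weights, and data are all hypothetical, whereas the real network learns its scores from a much richer feature set.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights for hypothetical features (a real model would learn these).
WEIGHTS = {"bias": -1.0, "same_channel_watched": 1.5, "topic_match": 2.0, "days_since_upload": -0.1}

def score(features: dict) -> float:
    """Estimated probability that the user engages with the video."""
    z = WEIGHTS["bias"] + sum(WEIGHTS[name] * value for name, value in features.items())
    return sigmoid(z)

def rank(candidates: dict, n: int = 2) -> list:
    """Order candidate videos by score and keep the best n for the list shown to the user."""
    return sorted(candidates, key=lambda v: score(candidates[v]), reverse=True)[:n]

candidates = {
    "v4": {"same_channel_watched": 1, "topic_match": 0.2, "days_since_upload": 30},
    "v5": {"same_channel_watched": 0, "topic_match": 0.9, "days_since_upload": 1},
    "v9": {"same_channel_watched": 0, "topic_match": 0.1, "days_since_upload": 2},
}
print(rank(candidates))  # ['v5', 'v9']
```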

A large amount of video is uploaded to YouTube every second, so it is very important for the service to recommend “fresh” videos to keep users engaged. Beyond surfacing new videos, the developers want the system to discover and spread content that is becoming popular. Video popularity can change sharply over time, yet a model trained on several weeks of data learns the average probability of watching each video over that period, which takes the form of a multinomial distribution over the entire corpus.
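The averaging effect described above is easy to see numerically. If a model simply learns each video's share of all watches in the training window (the maximum-likelihood multinomial), a video whose popularity has only just taken off is underestimated compared with its current popularity. The Python sketch uses a made-up three-week log; the video names and counts are hypothetical.

```python
from collections import Counter

def multinomial_estimate(watches):
    """Maximum-likelihood multinomial: each video's share of all watches in the log."""
    counts = Counter(watches)
    total = sum(counts.values())
    return {video: n / total for video, n in counts.items()}

# Hypothetical watch log for a three-week training window, oldest week first.
weeks = [
    ["old_hit"] * 90 + ["other"] * 10,
    ["old_hit"] * 80 + ["new_clip"] * 5 + ["other"] * 15,
    ["old_hit"] * 30 + ["new_clip"] * 60 + ["other"] * 10,
]

whole_window = multinomial_estimate([v for week in weeks for v in week])
last_week = multinomial_estimate(weeks[-1])

print(f"new_clip over the whole window: {whole_window['new_clip']:.2f}")  # 0.22
print(f"new_clip in the last week:      {last_week['new_clip']:.2f}")     # 0.60
```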

The development of medicine and biocomputers

Microsoft believes that cancer is like a computer virus and can be defeated by cracking its code. The company's researchers are using artificial intelligence in a new medical project aimed at destroying cancer cells.

One strand of the project uses machine learning and natural language processing to help scientists assess the entire body of previously collected data when choosing a treatment plan for a patient.

IBM is working on a similar project with its Watson for Oncology program, which analyzes a patient's condition using collected data. In another project, Microsoft applies computer vision to radiology to track how tumors develop.

In addition, the corporation plans to learn how to program immune-system cells the way one programs computer code. Jeannette M. Wing, vice president of Microsoft Research, explained that future computers may be made not only of silicon but also of living matter, which is pushing the company to explore ways of programming biology.

Old ideas work in new ways

Artificial neural networks are far from a new concept: they appeared in the 1950s, and much of the breakthrough research in the field took place in the 1980s and 1990s. Today, however, engineers have far more powerful tools at their disposal, and many important ways of advancing machine learning may still be waiting to be discovered.

“AI is the new electricity. Artificial intelligence is doing what electricity did 100 years ago: transforming industry after industry,” says Andrew Ng, chief scientist at Baidu.

More from our blog on Habr about artificial intelligence, neural networks, and machine learning:

- Artificial Intelligence: What Scientists Think About It

- Supercomputers: Trends and Development Problems

- Big Data: a silver bullet or just another tool

- The development of cloud technology and robotics in the new decade

If you are interested, you can also read what we write about ourselves (1cloud).

Source: https://habr.com/ru/post/312806/
