Why do we need the Ho-Kashyap algorithm?

Recently, a publication appeared on Habré about the Ho-Kashyap algorithm (the Ho-Kashyap procedure, which is also the NSCO algorithm, the smallest root-mean-square error). It seemed to me not very clear and I decided to sort out the topic myself. It turned out that in the Russian-speaking Internet the topic was not well disassembled, so I decided to issue an article based on the search results.

Despite the boom of neural networks in machine learning, linear classification algorithms remain much simpler to use and interpret. But at the same time, sometimes you don’t want to use any advanced methods, such as the support vector or logistic regression method, and the temptation is to drive all the data into one large linear least-squares regression , even more so it knows how to build MS Excel.

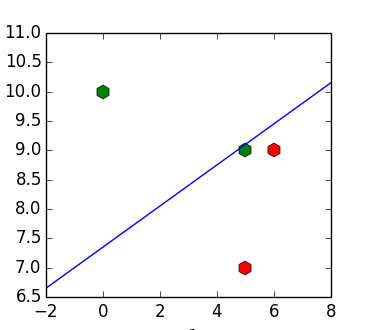

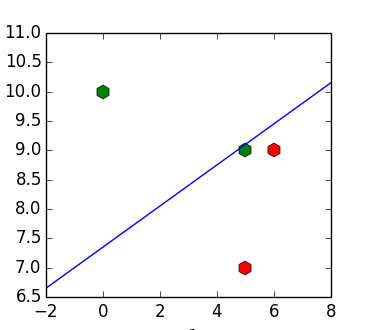

The problem with this approach is that even if the input data is linearly separable, the resulting classifier may not separate them. For example, for a set of points![X = [(6, 9), (5, 7), (5, 9), (10, 1)]](https://tex.s2cms.ru/svg/%20X%20%3D%20%5B(6%2C%209)%2C%20(5%2C%207)%2C%20(5%2C%209)%2C%20(10%2C%201)%5D%20) ,

, ![y = [1, 1, -1, -1]](https://tex.s2cms.ru/svg/%20y%20%3D%20%5B1%2C%201%2C%20-1%2C%20-1%5D%20) get the dividing line

get the dividing line %20%3D%200%20) (example borrowed from (1)):

(example borrowed from (1)):

')

The question is - is it possible to somehow get rid of this particular behavior?

To begin, we formalize the subject of the article.

Dana matrix each line

each line  which corresponds to the characteristic description of the object

which corresponds to the characteristic description of the object  (including constant

(including constant  ) and class label vectors

) and class label vectors  where

where  - object label

- object label  . We want to build a linear classifier of the form.

. We want to build a linear classifier of the form. %20%3D%20y%20) .

.

The easiest way to do this is to construct a least-squares regression for that is, minimize the sum of squared deviations

that is, minimize the sum of squared deviations %5E2%20) . The optimal weight can be found by the formula

. The optimal weight can be found by the formula  where

where  - pseudoinverse matrix. Thus, a picture from the beginning of the article.

- pseudoinverse matrix. Thus, a picture from the beginning of the article.

For convenience of writing, we multiply each line of inequality element by element. on

on  and call the resulting matrix on the left

and call the resulting matrix on the left  (here

(here  means line by line multiplication). Then the condition for OLS-regression is reduced to the form

means line by line multiplication). Then the condition for OLS-regression is reduced to the form  , and the minimization problem - to minimize

, and the minimization problem - to minimize %5E2%20) .

.

At this point, you can recall that the condition for the separation of classes is%20%3D%20y%20) or

or %20%3D%201%20) and since we want to separate classes, we need to solve this problem.

and since we want to separate classes, we need to solve this problem.

We introduce a vector , wherein

, wherein  responsible for the distance from the element

responsible for the distance from the element  to the dividing line (

to the dividing line (  ). Since we want all elements to be classified correctly, we introduce the condition

). Since we want all elements to be classified correctly, we introduce the condition  . Then the task will be reduced to

. Then the task will be reduced to %5E2%20) and will decide how

and will decide how  . You can manually pick up such values

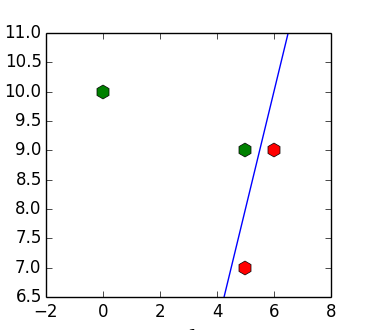

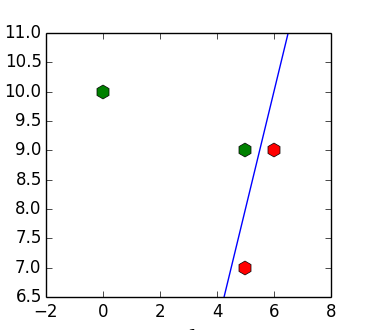

. You can manually pick up such values  that the resulting plane will divide our sample:

that the resulting plane will divide our sample:

The Ho-Kashyap algorithm is designed to pick automatically. Algorithm diagram (

automatically. Algorithm diagram (  - step number

- step number %7D%20) usually taken equal

usually taken equal  ):

):

I want to calculate the indentation vector in some way, like%7D%20%3D%20%5Cmax(Yw%5E%7B(k)%7D%2C%200)%20) because it minimizes the loss function

because it minimizes the loss function %5E2%20) . Unfortunately, the condition

. Unfortunately, the condition  does not allow us to do this, and instead it is proposed to take a step on the positive part of the loss function gradient

does not allow us to do this, and instead it is proposed to take a step on the positive part of the loss function gradient %7D%20%3D%20b%5E%7Bk%7D%20-%20Yw%5E%7B(k)%7D%20) :

: %7D%20%3D%20b%5E%7B(k)%7D%20-%20%5Cmu%20(e%5E%7B(k)%7D%20-%20%7Ce%5E%7Bk%7D%7C)%20) where

where  - step gradient descent, decreasing in the course of solving the problem.

- step gradient descent, decreasing in the course of solving the problem.

In the case of a linearly separable sample, the algorithm always converges and converges to the separating plane (if all the elements of the gradient are non-negative, they are all zero).

non-negative, they are all zero).

In the case of a linearly inseparable sample, the loss function can be arbitrarily small, since it suffices to multiply and

and  on a constant to change its value and, in principle, the algorithm may not converge. The search does not give any specific recommendations on this topic.

on a constant to change its value and, in principle, the algorithm may not converge. The search does not give any specific recommendations on this topic.

It can be noted that if the object is classified correctly, then the error in the stated optimization problem (%5E2%20) ) the error on it can be reduced to zero. If the object is classified incorrectly, then the minimum error on it is equal to the square of its indent from the dividing line (

) the error on it can be reduced to zero. If the object is classified incorrectly, then the minimum error on it is equal to the square of its indent from the dividing line ( %5E2%20) ). Then the loss function can be rewritten in the form:

). Then the loss function can be rewritten in the form:

%5E2%20-%202%5Cmax(0%2C%201%20-%20w%20Y_i)%20%20)

In turn, the loss function of a linear SVM is:

%20)

Thus, the problem solved by the Ho-Kashyap algorithm is an analogue of SVM with a quadratic loss function (it penalizes farther for emissions far from the dividing plane) and ignores the width of the dividing strip (that is, it’s not looking for a plane as far as possible from the nearest properly classified elements, and any dividing plane).

It may be recalled that the OLS-regression is an analogue of Fisher’s two-class linear discriminant (their solutions coincide up to a constant). The Ho-Kashpyap algorithm can also be applied to the case classes - in this

classes - in this  and

and  become matrices

become matrices  and

and  size

size  and

and  accordingly where

accordingly where  - the dimension of the problem, and

- the dimension of the problem, and  - number of objects. In this case, the columns corresponding to the wrong classes should have negative values.

- number of objects. In this case, the columns corresponding to the wrong classes should have negative values.

parpalak for a convenient editor.

rocket3 for the original article.

Despite the boom of neural networks in machine learning, linear classification algorithms remain much simpler to use and interpret. But at the same time, sometimes you don’t want to use any advanced methods, such as the support vector or logistic regression method, and the temptation is to drive all the data into one large linear least-squares regression , even more so it knows how to build MS Excel.

The problem with this approach is that even if the input data is linearly separable, the resulting classifier may not separate them. For example, for a set of points

')

The question is - is it possible to somehow get rid of this particular behavior?

Linear classification task

To begin, we formalize the subject of the article.

Dana matrix

>>> import numpy as np >>> X = np.array([[6, 9, 1], [5, 7, 1], [5, 9, 1], [0, 10, 1]]) >>> y = np.array([[1], [1], [-1], [-1]]) The easiest way to do this is to construct a least-squares regression for

>>> w = np.dot(np.linalg.pinv(X), y) >>> w array([[ 0.15328467], [-0.4379562 ], [ 3.2189781 ]]) >>> np.dot(X, w) array([[ 0.19708029], [ 0.91970803], [ 0.04379562], [-1.16058394]]) Linear separability

For convenience of writing, we multiply each line of inequality element by element.

>>> Y = y * X >>> Y array([[ 6, 9, 1], [ 5, 7, 1], [ -5, -9, -1], [ 0, -10, -1]]) At this point, you can recall that the condition for the separation of classes is

We introduce a vector

>>> b = np.ones([4, 1]) >>> b[3] = 10 >>> w = np.dot(np.linalg.pinv(Y), b) >>> np.dot(Y, w) array([[ 0.8540146 ], [ 0.98540146], [ 0.81021898], [ 10.02919708]]) Ho-Kashyap Algorithm

The Ho-Kashyap algorithm is designed to pick

- Calculate the OLS-regression coefficients (

).

- Calculate the indentation vector

.

- If the decision is not agreed (

), then repeat step 1.

I want to calculate the indentation vector in some way, like

>>> e = -np.inf * np.ones([4, 1]) >>> b = np.ones([4, 1]) >>> while np.any(e < 0): ... w = np.dot(np.linalg.pinv(Y), b) ... e = b - np.dot(Y, w) ... b = b - e * (e < 0) ... >>> b array([[ 1.], [ 1.], [ 1.], [ 12.]]) >>> w array([[ 2.], [-1.], [-2.]])

In the case of a linearly separable sample, the algorithm always converges and converges to the separating plane (if all the elements of the gradient are

In the case of a linearly inseparable sample, the loss function can be arbitrarily small, since it suffices to multiply

Connection of the Ho-Kashyap algorithm and linear SVM

It can be noted that if the object is classified correctly, then the error in the stated optimization problem (

In turn, the loss function of a linear SVM is:

Thus, the problem solved by the Ho-Kashyap algorithm is an analogue of SVM with a quadratic loss function (it penalizes farther for emissions far from the dividing plane) and ignores the width of the dividing strip (that is, it’s not looking for a plane as far as possible from the nearest properly classified elements, and any dividing plane).

Multidimensional case

It may be recalled that the OLS-regression is an analogue of Fisher’s two-class linear discriminant (their solutions coincide up to a constant). The Ho-Kashpyap algorithm can also be applied to the case

Thanks

parpalak for a convenient editor.

rocket3 for the original article.

Links

(1) http://www.csd.uwo.ca/~olga/Courses/CS434a_541a/Lecture10.pdf

(2) http://research.cs.tamu.edu/prism/lectures/pr/pr_l17.pdf

(3) http://web.khu.ac.kr/~tskim/PatternClass Lec Note 07-1.pdf

(4) A.E. Lepsky, A.G. Bronevich Mathematical methods of pattern recognition. Lecture course

(5) Tu J., Gonzalez R. Principles of Pattern Recognition

(6) R.Duda, P.Hart Pattern Recognition and Scene Analysis

Source: https://habr.com/ru/post/312774/

All Articles