Administrator's summary: the difference between a microscope and a hammer when building a SAN (updated)

One day, a client of the integrator company where I worked asked us to quickly sketch out a draft of a small storage system. As luck would have it, our SAN specialist was unavailable, and the task fell to me. At the time, my knowledge of storage boiled down to the unshakable belief that "Fibre Channel is cool and iSCSI is not so much."

For anyone who has found themselves in a similar situation, or is just a little curious about SANs, we have prepared a series of articles in a crash-course format. Today's article focuses on storage technology for small and medium-sized organizations. I will try not to get bogged down in theory and to use plenty of examples.

Storage systems: different and sometimes strange

If an engineer is not particularly familiar with data storage systems (DSS), choosing the right device often starts with surveying the market through the lens of their own stereotypes. For example, I used to settle on simple DAS systems, which oddly coexisted with my illogical belief in the "coolness" of Fibre Channel. After all, DAS was understandable and did not require reading long administrator's manuals or diving into the dark world of storage networks.

If an organization simply runs out of space on a shared network drive, an inexpensive server with room for many disks, leaving a reserve for the future, will suffice. Among specialized systems, a network-attached storage (NAS) device such as the Synology DS414slim is a good option. It makes creating shared folders convenient, permissions can be configured flexibly, and it integrates with Active Directory.

What I like about Synology's storage is the user-friendly interface with many plug-ins for all kinds of use cases. But its behavior can be very strange. For example, one customer had a Synology DS411+II. It worked fine until a reboot, after which it would not turn on. Don't ask how I figured this out, but the procedure for starting it after the failure was as follows:

1. Remove all disks, turn on the device, turn off the device;

2. Insert one disk, turn on the device, turn off the device;


3. Insert the second disk, turn on the device, turn off the device;

4. Repeat for the third and fourth disks. After the fourth disk is installed, the device turns on and works.

I posted the method on the Synology forum, and it turned out I was not the only one so lucky. Since then I have preferred small servers running GNU/Linux; at the very least, they are easier to diagnose.

Among NAS distributions, I can recommend OpenMediaVault.

Things get more complicated when you need to increase the disk capacity of existing servers or start thinking about high availability. This is where the temptation arises to build a full-fledged SAN, or to go to the other extreme and settle for a simple DAS disk shelf.

A SAN (Storage Area Network) is an architectural solution for connecting external storage devices, such as disk arrays and tape libraries, over a network. The connection is made at the block level, so clients work with these devices just as they would with ordinary local disks. Russian-language literature uses the abbreviation SHD for a storage network; do not confuse it with the identical abbreviation for a data storage system, which can be any disk shelf.

- Direct Attached Storage (DAS) - an external disk or disk array connected directly to a server at the block level.

In this article, I will not touch on software implementations such as Storage Spaces in Windows, and will limit myself to the hardware and architectural aspects of storage.

Why a separate storage network

Let's start with typical storage solutions that rely on dedicated networks and interfaces, since they raise the most questions.

The cheapest way to build a SAN is with the Serial Attached SCSI (SAS) interface, the same one used to connect disks in any modern server. SAS is also used to connect an external disk array directly to a server.

A DAS array can be given fault-tolerant connections to several servers. This is done with multipath, a technology that lets a client reach the storage over several routes and switch between them. More popular, however, is splitting the disks between servers, which then build RAID groups from them and carve them into volumes on their own. This scheme is called "Shared JBOD".

Servers connect through host bus adapters (HBAs) for the specific interface, which simply let the OS see ready-made disk volumes.

SAS exists in three generations:

- SAS-1, at 3 Gb/s per device;

- SAS-2, at 6 Gb/s;

- SAS-3, at 12 Gb/s.

When planning the architecture, also keep in mind the differences between SAS connectors, which often cause confusion when ordering cables. The most common for connecting external equipment are SFF-8088 (mini-SAS) and SFF-8644 (mini-SAS HD).

As a member of the SCSI family, SAS supports expanders, which allow up to 65,535 devices to be connected to a single controller port. This figure is, of course, rather theoretical and ignores various overheads. In practice, controllers are usually limited to 128 disks, and scaling such a SAN to two or more servers with simple expanders is inconvenient. A more sensible alternative is a SAS switch. These are essentially the same expanders, but with support for allocating resources among servers, so-called "zoning". For SAS-2, for example, the LSI 6160 is the most popular model.
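Zoning is configured through each switch vendor's own tools, so the sketch below only models the idea: a table mapping servers to disk bays, an access check, and a check for bays exposed to more than one host. The server names and bay numbers are hypothetical, and this is not any vendor's configuration format.

```python
# Hypothetical zoning table: which server may see which disk bays.
ZONES: dict[str, set[int]] = {
    "server-a": {0, 1, 2, 3},
    "server-b": {4, 5, 6, 7},
    "backup": {8, 9},
}


def can_access(server: str, bay: int) -> bool:
    """True if the zoning table grants this server access to the bay."""
    return bay in ZONES.get(server, set())


def overlapping_bays(zones: dict[str, set[int]]) -> dict[int, list[str]]:
    """Report bays visible to more than one server.

    Without a cluster coordinating access, two hosts writing to the same
    disk can corrupt its file system, so overlaps are worth flagging.
    """
    owners: dict[int, list[str]] = {}
    for server, bays in zones.items():
        for bay in bays:
            owners.setdefault(bay, []).append(server)
    return {bay: names for bay, names in owners.items() if len(names) > 1}


print(can_access("server-a", 2))   # True: bay 2 is in server-a's zone
print(can_access("server-b", 2))   # False: bay 2 is outside server-b's zone
print(overlapping_bays(ZONES))     # {}: no bay is shared in this table
```

For a failover cluster, both nodes deliberately share the same bays, so an overlap there is expected rather than an error.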

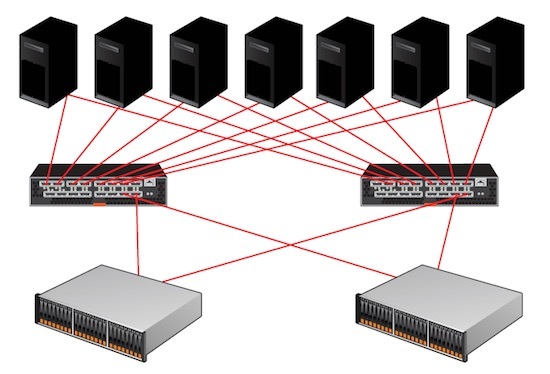

Using SAS switches, it is possible to implement fault-tolerant schemes for multiple servers without a single point of failure.

The benefits of SAS include:

- Low cost;

- High throughput: even with SAS-2, each controller port delivers 24 Gb/s;

- Low latency.
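The 24 Gb/s per-port figure comes from lane aggregation: an external mini-SAS or mini-SAS HD connector carries four lanes, and 4 × 6 Gb/s = 24 Gb/s for SAS-2. A minimal sketch of that arithmetic, assuming x4 wide ports and 8b/10b line coding (used by SAS-1 through SAS-3), so roughly 80% of the raw rate is left for data before protocol overhead:

```python
# Per-lane line rates of the SAS generations, in Gb/s.
SAS_LANE_GBPS = {"SAS-1": 3, "SAS-2": 6, "SAS-3": 12}

# Assumption: 8b/10b line coding, so 8 of every 10 bits carry data.
ENCODING_EFFICIENCY = 8 / 10


def port_bandwidth(generation: str, lanes: int = 4) -> tuple[float, float]:
    """Return (raw Gb/s, approximate usable MB/s) for a wide port.

    External SFF-8088 and SFF-8644 ports bundle four lanes, hence lanes=4.
    """
    raw_gbps = SAS_LANE_GBPS[generation] * lanes
    usable_mbps = raw_gbps * ENCODING_EFFICIENCY * 1000 / 8
    return raw_gbps, usable_mbps


for gen in SAS_LANE_GBPS:
    raw, usable = port_bandwidth(gen)
    print(f"{gen}: {raw} Gb/s raw, ~{usable:.0f} MB/s usable per x4 port")
```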

There are drawbacks, too:

- The disk array itself offers no replication mechanisms;

- Cable segment length is limited: ordinary copper SAS cables longer than 10 m are not available, and active ones reach at most 25 m. There are also optical SAS cables good for up to 100 m, but they are significantly more expensive.

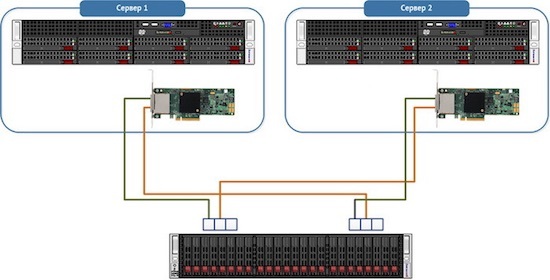

As a typical case for small and medium-sized organizations, let's look at building a small failover cluster of virtual machines: two nodes sharing a single disk array. As a nominal average volume size, we take 1 TB.

Note that with software solutions such as StarWind Native SAN, you can get the same cluster without a separate disk array, or with a simple JBOD. In addition, most hypervisors can use NFS or SMB 3.0 network shares as storage. But software implementations have more nuances and "weak links" because the system is more complex. Performance is usually lower, too.

To build such a system, you will need:

- Two servers;

- A disk array;

- HBAs for the servers;

- Connecting cables.

As an example, let's take an HP MSA 2040 disk array with twelve HDD bays, connected over SAS 3.0 at 12 Gb/s. Here is the total cost of the storage system, calculated with the first configurator that came to hand:

| Component | Price |
|---|---|
| Disk shelf HP MSA 2040 | 360 250 ₽ |
| Dual RAID controller 8x12 Gb SAS | 554 130 ₽ |
| HDD 600 GB SAS 12G x4 | 230 560 ₽ |
| External mini-SAS cable 2 m x4 | 41 920 ₽ |
| HP Smart Array P441 8-channel external SAS 12G x2 | 189 950 ₽ |
| Total | 1 376 810 ₽ |
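A quick check of the table: the line items do add up to the stated total, and four 600 GB disks cover the nominal 1 TB volume. The article does not say which RAID level is used, so the capacity part below compares a few common levels as an assumption; it ignores formatting overhead and treats 1 TB as 1000 GB.

```python
# Prices from the configurator table, in rubles.
PRICES_RUB = {
    "Disk shelf HP MSA 2040": 360_250,
    "Dual RAID controller 8x12 Gb SAS": 554_130,
    "HDD 600 GB SAS 12G x4": 230_560,
    "External mini-SAS cable 2 m x4": 41_920,
    "HP Smart Array P441 x2": 189_950,
}

DISK_COUNT = 4
DISK_GB = 600


def usable_gb(level: str, disks: int = DISK_COUNT, size_gb: int = DISK_GB) -> int:
    """Usable capacity for common RAID levels (assumed, not from the article)."""
    if level == "RAID10":
        return disks // 2 * size_gb      # half the disks hold mirror copies
    if level == "RAID5":
        return (disks - 1) * size_gb     # one disk's worth of parity
    if level == "RAID6":
        return (disks - 2) * size_gb     # two disks' worth of parity
    raise ValueError(f"unsupported level: {level}")


total = sum(PRICES_RUB.values())
print(f"Total: {total:,} RUB".replace(",", " "))  # Total: 1 376 810 RUB
for level in ("RAID10", "RAID5", "RAID6"):
    gb = usable_gb(level)
    print(f"{level}: {gb} GB usable, fits a 1 TB volume: {gb >= 1000}")
```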

The wiring scheme is simple: each server connects to each storage controller for multipathing.
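What multipathing buys here can be modeled in a few lines. This is a minimal active/passive sketch, assuming one path per controller and made-up port names; real implementations such as Linux dm-multipath or Windows MPIO also offer round-robin and other load-balancing policies.

```python
from dataclasses import dataclass, field


@dataclass
class Path:
    """One route from a server HBA port to a storage controller."""
    hba_port: str
    controller: str
    healthy: bool = True


@dataclass
class MultipathDevice:
    """Active/passive sketch: send I/O over the first healthy path."""
    paths: list[Path] = field(default_factory=list)

    def active_path(self) -> Path:
        for path in self.paths:
            if path.healthy:
                return path
        raise OSError("all paths to the volume have failed")


# Each server is cabled to both controllers of the array (names hypothetical).
lun = MultipathDevice([
    Path("hba0:port1", "controller-A"),
    Path("hba0:port2", "controller-B"),
])

print(lun.active_path().controller)  # controller-A
lun.paths[0].healthy = False         # simulate a failed cable or controller
print(lun.active_path().controller)  # controller-B
```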

In my opinion, SAS 3.0 is the optimal choice if you do not need a distributed SAN or fine-grained access control to the storage system. For a small organization, it strikes an excellent balance between price and performance.

If a second array is added later, each server can be connected to the controllers of each disk shelf, but as the number of clients grows, this seriously complicates the architecture. For more clients, it is better to buy one SAS switch, or two for a fault-tolerant design.
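The growth in complexity is easy to quantify by counting cables. A rough sketch, assuming dual-controller arrays, direct attachment of every server to every controller, and, in the switched case, two SAS switches with one cable from every server and every controller to each switch:

```python
def direct_links(servers: int, arrays: int, controllers_per_array: int = 2) -> int:
    """Direct attach: every server is cabled to every controller of every array."""
    return servers * arrays * controllers_per_array


def switched_links(servers: int, arrays: int, controllers_per_array: int = 2,
                   switches: int = 2) -> int:
    """Two switches (assumed): each server and controller gets one cable per switch."""
    return (servers + arrays * controllers_per_array) * switches


for servers, arrays in [(2, 1), (2, 2), (4, 2), (8, 2)]:
    print(f"{servers} servers, {arrays} arrays: "
          f"direct={direct_links(servers, arrays)}, "
          f"switched={switched_links(servers, arrays)}")
```

Beyond the raw count, direct attachment also means each server needs `arrays * 2` HBA ports, while with switches it needs only two regardless of how many arrays are added.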

The traditional choice for building a SAN is Fibre Channel (FC), an interface that connects network nodes over optical fiber.

FC supports several speeds, from 1 to 128 Gb/s (the 128GFC standard was released only in 2016). The most widely used are 4GFC, 8GFC and 16GFC.
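Nominal FC speeds are not the same as usable throughput, because the serial line rate includes line-coding overhead. A small sketch, assuming the commonly published line rates (4.25, 8.5 and 14.025 Gbaud) and codings (8b/10b up to 8GFC, 64b/66b from 16GFC). Framing overhead is not modeled, which is why vendors usually quote round figures of 400, 800 and 1600 MB/s.

```python
# Serial line rate (Gbaud) and line-coding efficiency of common FC speeds.
FC_SPEEDS = {
    "4GFC": (4.25, 8 / 10),
    "8GFC": (8.5, 8 / 10),
    "16GFC": (14.025, 64 / 66),
}


def data_rate_gbps(speed: str) -> float:
    """Bit rate left for FC frames after line coding (framing not modeled)."""
    baud, efficiency = FC_SPEEDS[speed]
    return baud * efficiency


for speed in FC_SPEEDS:
    gbps = data_rate_gbps(speed)
    print(f"{speed}: {gbps:.2f} Gb/s, about {gbps * 1000 / 8:.0f} MB/s per direction")
```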

Compared with SAS systems, the significant differences show up when designing large SANs:

- Expansion relies not on expanders but on the capabilities of the network topology;

- With single-mode fiber, cable length can reach 50 km.

Small organizations usually use a single-switch fabric, in which a server connects to the disk array through one switch. This scheme is the building block of other topologies: cascade, mesh and ring.

The most scalable and fault-tolerant scheme is called core-edge. It resembles the familiar star topology, except that the center holds two core switches that distribute traffic to the periphery. A special case of this scheme is the switched fabric, which has no peripheral switches.
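The fault tolerance of core-edge comes from dual-homing: every edge switch and the storage are uplinked to both cores, so losing either core leaves a path. A small sketch that checks this with a breadth-first search, on a hypothetical fabric with two edge switches:

```python
from collections import deque

# Hypothetical core-edge fabric: each edge switch and the storage
# are connected to both core switches.
LINKS = [
    ("core1", "edge1"), ("core2", "edge1"),
    ("core1", "edge2"), ("core2", "edge2"),
    ("core1", "storage"), ("core2", "storage"),
]


def reachable(links, start, failed):
    """Breadth-first search over the fabric, ignoring the failed switch."""
    graph = {}
    for a, b in links:
        if failed in (a, b):
            continue
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def survives_single_core_failure(links, edges, cores):
    """True if every edge switch still reaches storage after losing any one core."""
    return all(
        "storage" in reachable(links, edge, failed=core)
        for core in cores
        for edge in edges
    )


print(survives_single_core_failure(LINKS, ["edge1", "edge2"], ["core1", "core2"]))
```

Dropping one uplink, for example `("core2", "edge2")`, makes the check fail: edge2 would then be cut off if core1 went down.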

When designing, pay attention to the types of transceivers. These are modules that convert an electrical signal into an optical one using LEDs or lasers. Transceivers work at different wavelengths and with different types of optical cable, which determines the maximum segment length.

There are two types of transceivers:

- Shortwave (Short Wave, SW, SX): suitable only for multimode fiber;

- Longwave (Long Wave, LW, LX): compatible with both multimode and single-mode fiber.

Both types use LC connectors; SC connectors are quite rare.

Typical HBA with two FC ports

When choosing SAN equipment, it does not hurt to check every component against the hardware vendor's compatibility matrices. Active network equipment is best bought from a single vendor to rule out even theoretical compatibility problems; this is standard practice for such systems.

The advantages of FC-based solutions include:

- The ability to build a geographically distributed SAN;

- Minimal latency;

- High data transfer rates;

- Replication and snapshots by means of the disk array.

On the other side of the scales, as usual, lies the cost.

The storage system from the SAS section can also be built on 16GFC by replacing only the HBAs and the disk shelf controller. The cost then rises to 1 845 790 ₽.
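Putting the two totals side by side shows the size of the FC premium for this small cluster; both figures come straight from the article.

```python
SAS_TOTAL_RUB = 1_376_810  # SAS 3.0 configuration from the price table
FC_TOTAL_RUB = 1_845_790   # the same system rebuilt on 16GFC

delta = FC_TOTAL_RUB - SAS_TOTAL_RUB
print(f"FC premium: {delta:,} RUB ({delta / SAS_TOTAL_RUB:.1%})".replace(",", " "))
```

That is roughly a third more money for the same disks and the same two servers.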

In my practice, I have even seen a customer with an FC-attached DAS array less than half filled with disks. When asked why they had not used SAS, the most original answer was: "Wait, that was an option?"

In more complex infrastructures, FC structurally resembles TCP/IP. The protocol is also described in layers, like the TCP/IP stack; there are routers and switches; there is even "tagging" to isolate segments, much like VLANs. In addition, FC switches run name resolution and device discovery services.

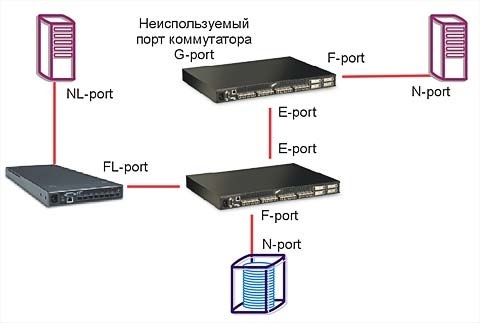

I will not go into the details, since plenty of good articles on FC have already been written. I will only note that when choosing switches and routers for a SAN, you need to look at the logical port types they support. Different models support different combinations of the basic types in the table:

| Device type | Port | Description |
|---|---|---|
| Server | N_Port (Node port) | Used to connect to a switch or end device. |
| Server | NL_Port (Node Loop port) | Port with loop topology support. |
| Switch/router | F_Port (Fabric port) | Connects an N_Port; loop is not supported. |
| Switch/router | FL_Port (Fabric Loop port) | Port with loop support. |
| Switch/router | E_Port (Expansion port) | Port for connecting switches. |
| Switch/router | EX_Port | Port for connecting a switch to a router. |
| Switch/router | TE_Port (Trunking Expansion port) | E_Port with VSAN support. |
| Common | L_Port (Loop port) | Any port with loop support (NL_Port or FL_Port). |
| Common | G_Port (Generic port) | Any unused port that auto-detects its type. |

This article would be incomplete without mentioning the option of building a SAN on InfiniBand. This protocol delivers truly high data transfer rates, but its cost puts it far beyond the reach of small and medium businesses.

Using conventional Ethernet

You can avoid learning new types of networks altogether by using good old Ethernet.

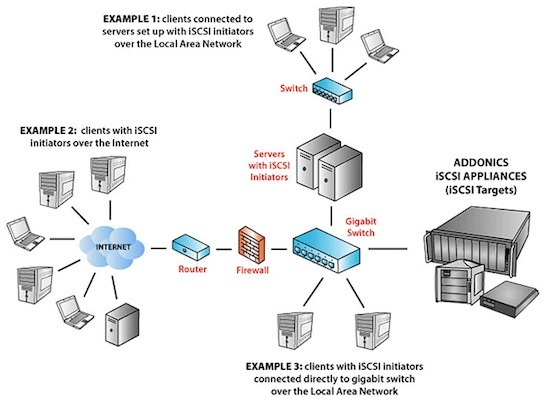

A popular protocol for building SANs on Ethernet is the Internet Small Computer Systems Interface (iSCSI). It runs on top of TCP/IP, and its main advantage is that it works decently on an ordinary gigabit network. Such solutions are often informally called a "free SAN". Of course, gigabit is not enough for serious workloads; for those you need a dedicated 10 Gb/s network.
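iSCSI initiators and targets identify each other by name, most commonly an iSCSI Qualified Name (IQN): the prefix "iqn.", the year and month when the naming authority held its domain, the domain reversed, and an optional colon-separated unique string. Initiators typically reach a target's portal on TCP port 3260. A minimal builder and parser, assuming lowercase names; `example.com` and the target suffix are placeholders.

```python
import re

# iqn.<yyyy-mm>.<reversed domain>[:<unique name>]
IQN_RE = re.compile(r"^iqn\.(\d{4})-(\d{2})\.([a-z0-9.-]+)(?::(.+))?$")


def make_iqn(year: int, month: int, domain: str, name: str = "") -> str:
    """Build an IQN from a domain owned by the naming authority."""
    reversed_domain = ".".join(reversed(domain.lower().split(".")))
    iqn = f"iqn.{year:04d}-{month:02d}.{reversed_domain}"
    return f"{iqn}:{name}" if name else iqn


def parse_iqn(iqn: str) -> dict:
    """Split an IQN into its date, naming authority and optional name."""
    match = IQN_RE.match(iqn)
    if not match:
        raise ValueError(f"not an IQN: {iqn!r}")
    year, month, authority, name = match.groups()
    return {"date": f"{year}-{month}", "authority": authority, "name": name}


target = make_iqn(2016, 10, "example.com", "storage.vm-cluster")
print(target)             # iqn.2016-10.com.example:storage.vm-cluster
print(parse_iqn(target))
```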

Its clear advantages include the low cost of the basic equipment. Since iSCSI can be implemented in software, you can install the corresponding applications on ordinary servers. Most SOHO-class NAS devices support the protocol out of the box.

Once a customer urgently needed to move Exchange 2003 off a dying server. We decided to virtualize it with minimal downtime. To do this, we set up an iSCSI target on the Synology DS411 NAS mentioned earlier in this article and connected it to the Exchange server. We then moved the database there and migrated the server to MS Virtual Server 2005 using disk2vhd. After the migration succeeded, the database was moved back. Later, we used the same approach when moving from MS Virtual Server to VMware.

Of course, even if gigabit is enough for your workloads, you should not run an iSCSI SAN over the shared LAN. It will work, but broadcast requests and other service traffic will certainly affect speed, and storage traffic will get in users' way. It is better to build a separate isolated network with its own equipment or, as a last resort, to at least put the iSCSI subnet in a separate VLAN. Note also that for maximum performance you need to enable Jumbo Frames along the entire packet path.
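The effect of Jumbo Frames can be estimated from per-frame overhead. A back-of-the-envelope sketch, assuming IPv4 and TCP headers without options and counting preamble, MAC header, FCS and inter-frame gap on the wire; iSCSI PDU headers are ignored. The bandwidth gain is only a few percent, while the number of frames the hosts must process drops about sixfold.

```python
# Per-frame wire cost beyond the MTU: preamble+SFD, MAC header, FCS, gap.
ETH_OVERHEAD = 8 + 14 + 4 + 12
# Assumption: IPv4 and TCP headers without options.
IP_TCP_HEADERS = 20 + 20


def efficiency(mtu: int) -> float:
    """Share of line time that carries TCP payload (iSCSI data) at this MTU."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def frames_needed(bytes_to_send: int, mtu: int) -> int:
    """Full-size frames needed to move a block of data (ceiling division)."""
    payload = mtu - IP_TCP_HEADERS
    return -(-bytes_to_send // payload)


block = 1024 * 1024  # one 1 MiB read
for mtu in (1500, 9000):
    print(f"MTU {mtu}: {efficiency(mtu):.1%} efficient, "
          f"{frames_needed(block, mtu)} frames per MiB")
```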

As a budget-saving measure, you may be tempted to bond gigabit ports with link aggregation (LACP). But, as VitalKoshalew rightly noted in the comments, LACP will not actually balance traffic between a single server and the storage. A better budget option is to use multipath technologies at higher levels of the OSI model.
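Why LACP does not help a single server-to-storage pair can be shown with a toy model. Link aggregation picks a member link per flow by hashing header fields, so every packet of one TCP connection lands on the same link. The CRC32 hash below is a stand-in, not any switch's real policy, and the addresses are made up.

```python
import zlib


def lacp_member(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                links: int) -> int:
    """Choose a bond member link by hashing a flow's addresses and ports.

    Illustrative hash only: real switches and bonding drivers use their own
    policies, but all of them keep every packet of one flow on one link.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % links


# Hypothetical addresses; one iSCSI session is one TCP connection to port 3260.
session = ("10.0.0.10", "10.0.0.20", 51000, 3260)

# Packets differ in payload and sequence number, but those fields are not
# hashed, so every packet of the session maps to the same member link.
links_used = {lacp_member(*session, links=2) for _seq in range(1000)}
print(f"Member links used by one session: {len(links_used)}")
```

Multipath avoids this by opening a separate session over each network card (typically on separate subnets) and spreading I/O across those sessions itself.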

By the way, a fully proper iSCSI solution on a 10 Gb network, with network cards that provide hardware iSCSI acceleration and suitable switches, comes close to FC in cost.

This kind of network scheme is possible because iSCSI runs on top of TCP/IP.

One interesting iSCSI-based solution is running thin clients without a terminal server, with an iSCSI volume used instead of local disks. A gigabit network is quite enough for this, and it is not so easy to implement something similar by other means.

Advantages of the solution:

- Low cost;

- The ability to build a geographically distributed network;

- Ease of design and maintenance.

Disadvantages:

- Data access latency can be significant, especially with a pool of virtual machines;

- Higher CPU load unless special HBAs with hardware iSCSI support are used.

There is also a more "grown-up" alternative to iSCSI. You can use the same Ethernet network but wrap the storage protocol directly into Ethernet frames, bypassing TCP/IP. This protocol is called Fibre Channel over Ethernet (FCoE), and it runs on 10 Gb Ethernet. Besides traditional optics, you can use special copper cables or Category 6a twisted pair.

An important difference from FC is that the same Ethernet port can also carry ordinary TCP/IP traffic. This requires special network adapters, so-called Converged Network Adapters (CNAs) with FC and FCoE support, although software implementations also exist. Since the protocol runs below the TCP/IP level, a simple switch will not do: it must support Jumbo Frames and Data Center Bridging (DCB, sometimes called Data Center Ethernet). Such switches usually cost more (for example, the Cisco Nexus series).
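The Jumbo Frames requirement follows from frame sizes: a full FC frame does not fit into a standard Ethernet frame, and FCoE has no IP layer that could fragment it. A field-by-field tally, assuming a VLAN-tagged frame (the tag carries the priority that DCB acts on) and the maximum 2112-byte FC payload:

```python
# Maximum FCoE frame size, field by field (bytes).
FCOE_FRAME = {
    "Ethernet destination + source MAC": 12,
    "802.1Q VLAN tag": 4,
    "EtherType 0x8906 (FCoE)": 2,
    "FCoE header: version, reserved, SOF": 14,
    "FC frame header": 24,
    "FC payload (maximum)": 2112,
    "FC CRC": 4,
    "FCoE trailer: EOF, reserved": 4,
    "Ethernet FCS": 4,
}

STANDARD_MAX_FRAME = 1522  # largest standard Ethernet frame with a VLAN tag

total = sum(FCOE_FRAME.values())
print(f"Max FCoE frame: {total} bytes (standard limit: {STANDARD_MAX_FRAME})")
print("Needs jumbo frames:", total > STANDARD_MAX_FRAME)
```

In practice this is why FCoE switch ports are configured for "baby jumbo" frames of around 2.5 KB or larger.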

In theory, FCoE can run on a gigabit network without DCB, but this is a very unusual setup, and I have not come across any reports of it running successfully.

Going back to our small but proud virtualization cluster, 10 Gb/s iSCSI and FCoE solutions would cost about the same, but iSCSI also leaves you the option of cheap gigabit networks.

Also worth mentioning is the rather exotic ATA over Ethernet (AoE) protocol, which works much like FCoE. Disk arrays that support it are rare; software implementations are usually used.

The bottom line

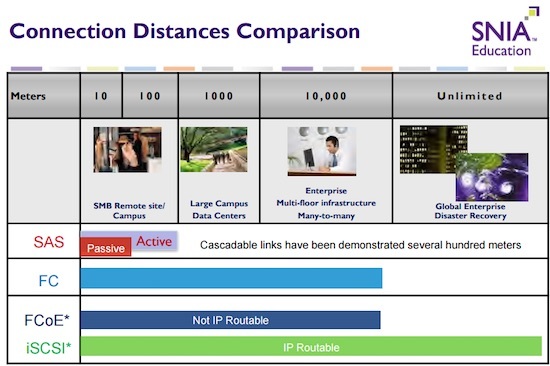

Choosing a specific storage implementation requires careful study of the particular situation. You should not connect a disk array over FC just because "optics" sounds impressive. Where the architecture allows it, SAS will give similar or even better performance. Leaving aside cost and maintenance complexity, the key difference among all the storage connection technologies described here is the distance they can span. This idea is illustrated well by a slide from an SNIA presentation.
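The distance argument can be turned into a quick first filter. A sketch using only the segment limits quoted in this article; iSCSI is treated as unlimited because it runs over routable TCP/IP, although latency still grows with distance. FCoE is left out because the article gives no figure for it.

```python
import math

# Maximum segment lengths quoted in this article, in meters.
MAX_DISTANCE_M = {
    "SAS (passive copper)": 10,
    "SAS (active copper)": 25,
    "SAS (optical)": 100,
    "FC (single-mode fiber)": 50_000,
    "iSCSI (TCP/IP)": math.inf,
}


def candidates(distance_m: float) -> list[str]:
    """Technologies whose single-segment reach covers the given distance."""
    return [tech for tech, limit in MAX_DISTANCE_M.items() if distance_m <= limit]


for d in (5, 80, 2_000, 120_000):
    print(f"{d} m: {', '.join(candidates(d))}")
```

Cost and maintenance complexity then decide among whatever is left on the list.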

If, after reading this article, you want to explore the SAN world in more detail, I can recommend the following bestsellers:

- Brocade - Basics of SAN Design

- HPE SAN Design Reference Guide

- IBM - Introduction to Storage Area Networks

- Best practices for building FC SAN

We are considering publishing more articles on server technologies in this crash-course format, so we would appreciate your feedback and a rating of this material. If any topics are of particular interest to you, be sure to mention them in the comments.

Source: https://habr.com/ru/post/312648/
