How we filtered bots and lowered the bounce rate from 90% to 42%

A few months ago, our bounce rate in Google Analytics rose sharply. We went through the standard checklist recommended around the Web. We created a view that excludes spiders and bots (the “Bot Filtering” setting in the view), checked that the Analytics tracking code was set up correctly, reviewed and adjusted the session timeout, and so on. All this took time but gave no results. On some days the bounce rate exceeded 90%. Meanwhile, neither the quality of the content on our site nor the structure of incoming traffic had changed in any obvious way. It just “happened overnight.” I found nothing like this described online, so I decided to write up how we found and fixed the problem and brought the bounce rate down to an acceptable 42-55%.

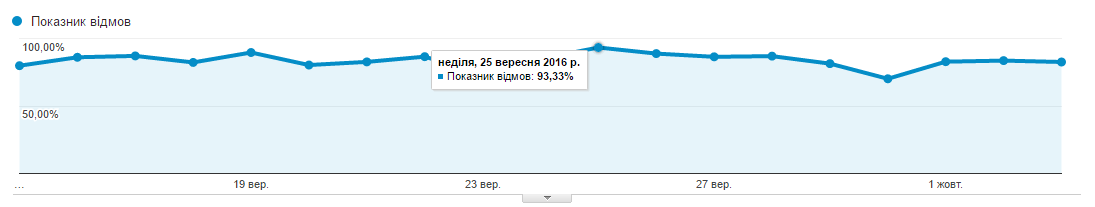

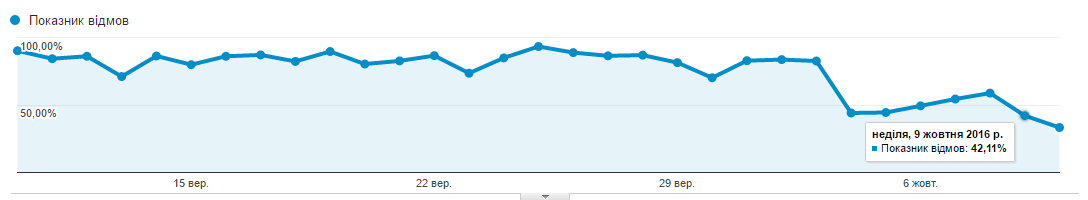

The screenshots above illustrate the original problem.


None of the standard fixes worked, so I had to investigate on my own. Analytics was no help, and I turned to Yandex.Metrica. There the overall numbers looked quite acceptable, with a bounce rate of up to 10%. I read a few articles on why the bounce rate can be fine in Metrica yet off the charts in Analytics, and after that it became clear where to look. In short, Metrica counts as a bounce any single-page visit shorter than 15 seconds. Analytics counts any session with no further page views after the first one. So I compared the visit-duration reports in Metrica and Analytics and saw an implausibly large share of visits lasting 0:00, up to 50% of daily sessions. A few more articles let us rule out two hypotheses: tracking code that fails to fire, and bots slipping past the Analytics filter. There were no signs of referral spam either.
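The two bounce definitions can be contrasted in a small sketch. It assumes the rules as stated above: Metrica counts a single-page visit shorter than 15 seconds, and Analytics counts any single-page session. The `Session` type and the sample data are hypothetical. The Analytics rule is simplified, because in real Analytics an interaction event also keeps a one-page session from counting as a bounce.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pageviews: int      # pages viewed in the session
    duration_s: float   # session length in seconds


def ga_bounce_rate(sessions: list[Session]) -> float:
    """Analytics-style rule (simplified): a bounce is a single-page session.
    Interaction events, which also prevent a bounce in real GA, are ignored."""
    bounces = sum(1 for s in sessions if s.pageviews == 1)
    return bounces / len(sessions)


def metrica_bounce_rate(sessions: list[Session], threshold_s: float = 15) -> float:
    """Metrica-style rule: a bounce is a single-page visit shorter than 15 s."""
    bounces = sum(
        1 for s in sessions if s.pageviews == 1 and s.duration_s < threshold_s
    )
    return bounces / len(sessions)


# Hypothetical sample sessions.
sessions = [
    Session(pageviews=1, duration_s=0),    # a 0:00 "visit"
    Session(pageviews=1, duration_s=120),  # a reader who stayed on one page
    Session(pageviews=3, duration_s=300),  # an ordinary multi-page visit
    Session(pageviews=1, duration_s=5),
]
print(ga_bounce_rate(sessions))       # 0.75
print(metrica_bounce_rate(sessions))  # 0.5
```

The reader who spent two minutes on a single page is a bounce for Analytics but not for Metrica. That is one reason the two tools can disagree so widely.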

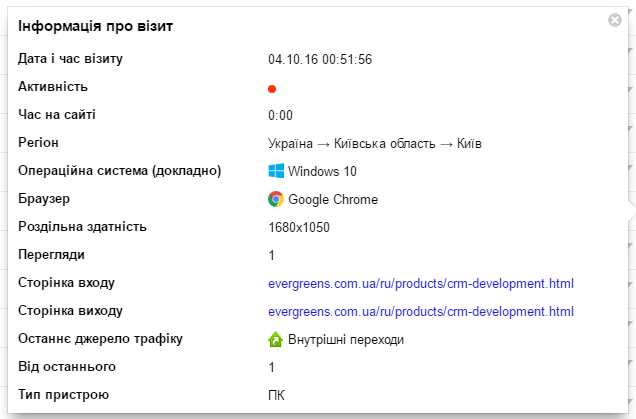

Eventually I simply filtered Webvisor down to visits lasting 0:00 and tried to find a pattern. Here is what I found:

Each “visitor” came from its own subnet, with a plausible User Agent, screen resolution and operating system. As a result, neither Metrica nor Analytics treated it as a bot.

The only giveaway was their rhythm: one visit every 1 hour 1 minute, each lasting 0 seconds. I took the screenshots to our sysadmin Andrew and asked him to look these visits up in the server logs. The very first IP address gave us pause, because the visit came from a subnet belonging to Liga Zakon.
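A rhythm like this is easy to miss by eye but easy to surface by measuring the gaps between consecutive 0:00 visits. Below is a minimal sketch, assuming you have exported the visit start times. The function name and the timestamps are made up for illustration.

```python
from collections import Counter
from datetime import datetime


def dominant_gap_minutes(timestamps: list[datetime]) -> tuple[int, int]:
    """Return (gap in whole minutes, number of occurrences) for the most
    common gap between consecutive visits."""
    if len(timestamps) < 2:
        raise ValueError("need at least two visits to measure a gap")
    ordered = sorted(timestamps)
    gaps = [
        round((later - earlier).total_seconds() / 60)
        for earlier, later in zip(ordered, ordered[1:])
    ]
    return Counter(gaps).most_common(1)[0]


# Made-up start times of 0:00 visits, for illustration only.
zero_duration_visits = [
    datetime(2016, 10, 3, 10, 0),
    datetime(2016, 10, 3, 11, 1),
    datetime(2016, 10, 3, 12, 2),
    datetime(2016, 10, 3, 13, 3),
    datetime(2016, 10, 3, 13, 20),
]
print(dominant_gap_minutes(zero_duration_visits))  # (61, 3)
```

A gap of 61 minutes that dominates the histogram is exactly the 1:01 signature described above. Organic visits would produce a spread of unrelated gaps instead.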

In total, the logs contained 43 IP addresses from different providers, using different User Agents, that had accessed various pages of our site.
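Matching analytics visits to server-log entries boils down to comparing timestamps. The sketch below makes three assumptions. First, the logs use the Combined Log Format, which is the default in nginx and Apache. Second, a window of ±30 seconds is enough to match a visit. Third, the helper name `hits_near`, the log lines and the times are hypothetical, and the IPs come from documentation ranges.

```python
import re
from datetime import datetime, timedelta, timezone

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "ua"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'\d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def hits_near(lines, visit_times, window=timedelta(seconds=30)):
    """Yield (ip, user_agent, request) for log lines that fall within `window`
    of any suspicious visit time (visit_times must be timezone-aware)."""
    for line in lines:
        m = LOG_RE.match(line)
        if not m:
            continue  # skip lines in other formats
        ts = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        if any(abs(ts - v) <= window for v in visit_times):
            yield m["ip"], m["ua"], m["request"]


log = [
    '203.0.113.7 - - [03/Oct/2016:11:01:04 +0300] "GET /blog/post-1 HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (Windows NT 6.1)"',
    '198.51.100.23 - - [03/Oct/2016:11:15:40 +0300] "GET / HTTP/1.1" '
    '200 8003 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]
msk = timezone(timedelta(hours=3))
suspicious = [datetime(2016, 10, 3, 11, 1, tzinfo=msk)]
hits = list(hits_near(log, suspicious))
print(hits)
print({ip for ip, _, _ in hits})  # distinct IPs to investigate
```

Collecting the distinct IPs and User Agents this way gives a list like the one described above, ready for lookups and blocking.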

RIPE lookups turned up nothing interesting, only unremarkable IPs from ordinary subnets. Apart from the 1:01 interval between visits, the bots had nothing in common.
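Any whois client can query RIPE. When comparing dozens of addresses, it helps to read the replies programmatically. Below is a minimal sketch, assuming the usual `key: value` layout of RIPE records. It uses the LIGA-UA-NET2 record mentioned in this article as sample input. The helper names are hypothetical.

```python
import ipaddress

# Sample input: the relevant attributes of the LIGA-UA-NET2 record.
RECORD = """\
inetnum:        193.150.7.0 - 193.150.7.255
netname:        LIGA-UA-NET2
remarks:        LIGA ZAKON
"""


def parse_whois(text: str) -> dict[str, list[str]]:
    """Collect 'key: value' attributes from a RIPE-style whois record,
    skipping comments (% or #) and continuation lines."""
    record: dict[str, list[str]] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key and not key.startswith(("%", "#", " ")):
            record.setdefault(key.strip(), []).append(value.strip())
    return record


def inetnum_contains(inetnum: str, ip: str) -> bool:
    """Check whether `ip` lies in a range like '193.150.7.0 - 193.150.7.255'."""
    first, last = (ipaddress.ip_address(part.strip()) for part in inetnum.split("-"))
    return first <= ipaddress.ip_address(ip) <= last


record = parse_whois(RECORD)
print(record["netname"])                                    # ['LIGA-UA-NET2']
print(inetnum_contains(record["inetnum"][0], "193.150.7.42"))  # True
```

Running each suspicious IP through checks like this shows quickly whether several of them share a range or an owner. In our case they did not.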

We blocked the entire list in iptables.
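We don't reproduce our exact rules here. As a hedged illustration, though, a blocklist can be turned into iptables commands with a helper like the one below. The helper is hypothetical. The `-I INPUT -s <ip> -j DROP` rule shape is standard iptables usage, and IPv6 addresses go to `ip6tables`. The script only builds the argument lists and does not run anything.

```python
import ipaddress


def iptables_drop_commands(ips: list[str]) -> list[list[str]]:
    """Build one DROP rule per unique, valid address.
    Raises ValueError if an entry is not an IP address."""
    seen = set()
    commands = []
    for raw in ips:
        ip = ipaddress.ip_address(raw.strip())
        if ip in seen:
            continue  # skip duplicates in the list
        seen.add(ip)
        tool = "iptables" if ip.version == 4 else "ip6tables"
        commands.append([tool, "-I", "INPUT", "-s", str(ip), "-j", "DROP"])
    return commands


blocklist = ["203.0.113.7", " 203.0.113.7", "2001:db8::1"]  # hypothetical IPs
for cmd in iptables_drop_commands(blocklist):
    print(" ".join(cmd))
```

To apply the rules, pass each list to `subprocess.run(cmd, check=True)` as root. For long lists, a single `ipset` set matched by one iptables rule scales better than one rule per address.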

Over the following day we caught a few more new IPs. We also sketched out an algorithm for filtering such bot traffic automatically, in case the blocked bots were replaced by new ones. After that, however, no further bots turned up. There were only a couple of curious User Agents, none of them on the 1:01 schedule.
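The algorithm itself isn't described here. One possible heuristic, offered purely as an assumption and not as what we actually ran, is to flag any request that lands on the 1 h 01 min schedule counted from the last known bot hit, whatever its IP. The class name, tolerance and timestamps below are hypothetical.

```python
from datetime import datetime, timedelta


class ScheduleWatcher:
    """Hypothetical heuristic: flag hits that arrive a whole number of periods
    (1 h 01 min by default) after the last flagged bot hit, assuming the bots
    keep their schedule even when they switch to new IP addresses."""

    def __init__(self, last_bot_hit: datetime,
                 period: timedelta = timedelta(hours=1, minutes=1),
                 tolerance: timedelta = timedelta(seconds=30)):
        self.last_bot_hit = last_bot_hit
        self.period = period
        self.tolerance = tolerance
        self.flagged_ips: set[str] = set()

    def observe(self, ts: datetime, ip: str) -> bool:
        """Return True and remember `ip` if `ts` falls on the expected schedule."""
        elapsed = ts - self.last_bot_hit
        periods = round(elapsed / self.period)  # nearest whole number of periods
        if periods < 1:
            return False
        if abs(elapsed - periods * self.period) <= self.tolerance:
            self.last_bot_hit = ts  # re-anchor the schedule on the new hit
            self.flagged_ips.add(ip)
            return True
        return False


watcher = ScheduleWatcher(last_bot_hit=datetime(2016, 10, 3, 10, 0))
print(watcher.observe(datetime(2016, 10, 3, 11, 1, 10), "203.0.113.50"))  # True
print(watcher.observe(datetime(2016, 10, 3, 11, 30), "198.51.100.9"))     # False
print(watcher.observe(datetime(2016, 10, 3, 13, 3, 5), "192.0.2.77"))     # True
```

Matching on multiples of the period, not just the next slot, keeps the schedule alive when one bot in the rotation has already been blocked and a slot stays empty.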

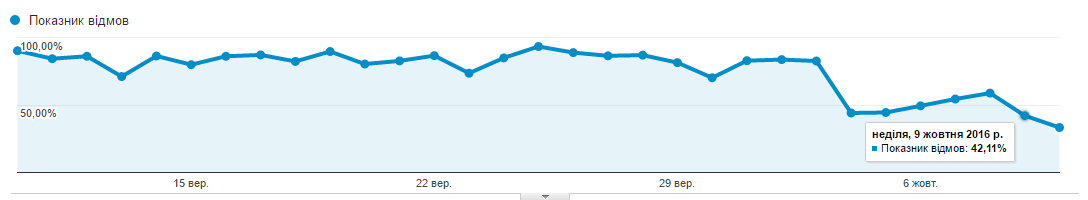

A day later, the Google Analytics bounce rate began to return to normal, falling from 89% to 42.75%.
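A simple model shows why removing the bots moves the figure so much. Assume, as the 0:00 visits suggest, that every bot session is a bounce. Let $S_h$ and $S_b$ be the numbers of human and bot sessions, and $B_h$ the number of human bounces. This notation is ours, introduced for illustration.

$$
R_{\text{before}} = \frac{B_h + S_b}{S_h + S_b}, \qquad
R_{\text{after}} = \frac{B_h}{S_h}, \qquad
R_{\text{before}} - R_{\text{after}} = \frac{S_b\,(S_h - B_h)}{S_h\,(S_h + S_b)} \ge 0.
$$

The difference is never negative, and it grows as bots make up a larger share of all sessions.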

Today, almost a week later, the bounce rate stays within an acceptable 42-55%. The graph shows the overall trend, and the sharp drop is where we filtered out the bots.

We have only two hypotheses about what it was.

The first is that one of us misconfigured some monitoring bot. At one point we experimented with various tools for checking server status, and someone could have switched something on and forgotten about it. The weakness of this theory is that I can't recall a single service that advertises sending requests to different pages of a site from different subnets with different User Agents. So this is most likely not it.

The second hypothesis is that this is some little-known form of bot attack. It may be aimed specifically at inflating the bounce rate and thereby pushing the site down in Google search results.

If you have run into this too, I'd be glad to hear about it in the comments. If you'd like more detailed instructions on how we found and blocked these bots, let me know as well.

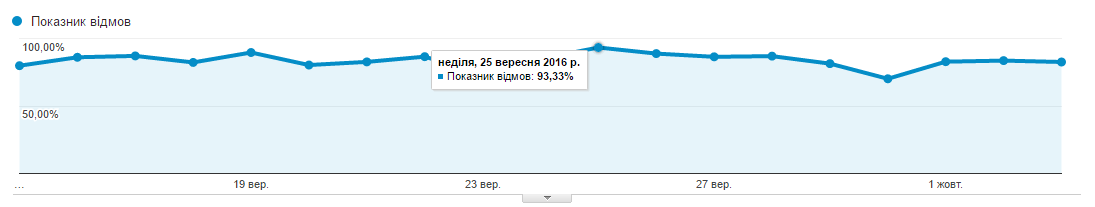

I will give a screenshot to illustrate the original problem:

')

Since all the standard schemes did not give any results, I had to think for myself and look for the problem. Analytics did not help, and I started checking through Yandex.Metrica. Overall metrics were quite acceptable (up to 10% of failures). After reading a few articles about why failures in the Metric may be acceptable, and in Analytics off scale, it became clear where to look for the problem. In short: Metrics considers as refusals all visits that lasted less than 15 seconds, and Analytics - everything, after which there were no other visits to the page. Thus, I began to look at the report on the duration of visits to Metrics and Analytics and realized that I had an unrealistically large percentage of visits with a duration of 0:00 seconds, up to 50% of daily sessions. A few more read articles allowed us to discard the hypothesis about non-triggered code and bots that sneak through the Analytics filter. I had no signs of referral spam either.

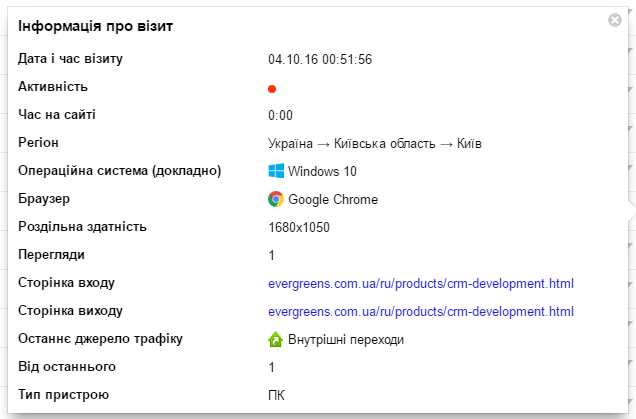

In the end, I simply filtered out visits with a duration of 0:00 in the web browser and decided to try to find a pattern. Here is what I got:

Each “visitor” came from his own subnet, with a clearly specified User Agent, screen resolution and operating system, that is, for Metrics and Analytics, it was not perceived as a bot.

The only thing that betrayed it was the rhythmic character of the visits every 1 hour, 1 minute, and 0, the duration of viewing. I took screenshots to our sysadmin Andrew and asked to see what it is, according to the server logs. The first IPShnik made us wonder: someone from the League subnet went to us. Law.

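For reference, Andrew's first message from the logs read: “Here is the IP for the 4:56 visit; I'll check the other visits now.” That IP belonged to the following RIPE range:

inetnum: 193.150.7.0 - 193.150.7.255

netname: LIGA-UA-NET2

remarks: LIGA ZAKON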


Source: https://habr.com/ru/post/312640/
