Using ES6 generators: the koa.js example

Author: Alexander Trischenko, Senior Front-end Developer, DataArt

Contents:

• Iterators. Generators.

• Using generators (Redux, Koa)

• Why do we use koa.js

• Future. Async Await and koa.js 2.x

Generators are a new feature of the ECMAScript 6 specification. I will begin with iterators, without which generators are impossible to understand. Then I will cover the specification itself: what generators are in general and how they are used in real cases. We will consider two examples: React + Redux as a front-end case and koa.js as a back-end case. After that I will focus on koa.js, the future of JavaScript, asynchronous functions, and koa.js 2.

The article uses borrowed material, including snippets (links to the sources are given at the end). I apologize in advance that parts of the code are presented as images.

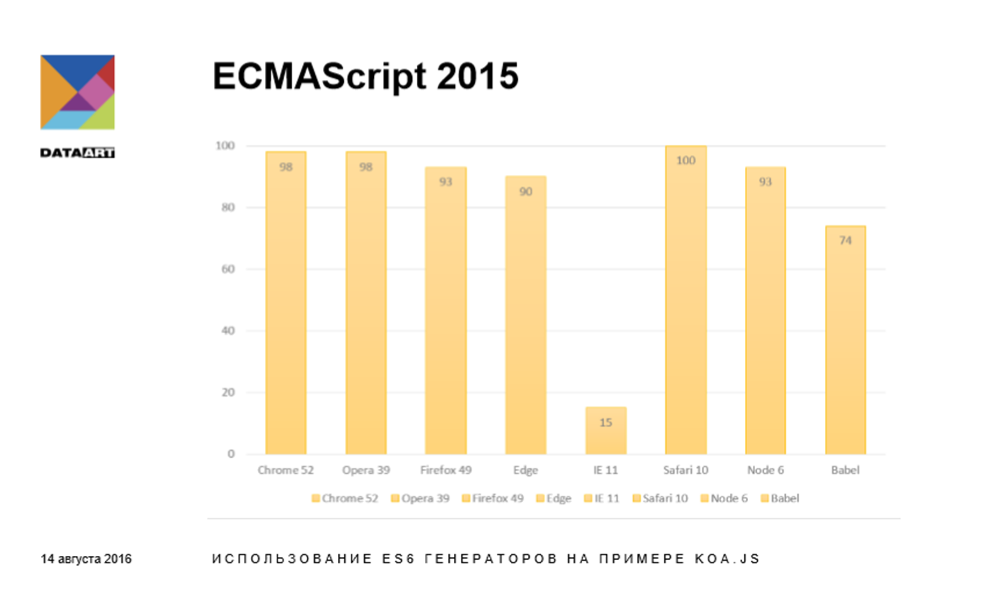

ECMAScript 6 (2015) is supported well enough to be used. The chart shows that, in principle, things are not bad even in Microsoft Edge; serious problems are seen only in Internet Explorer (the vertical axis is feature support, in %). Safari 10 is a pleasant surprise: according to the WebKit team, everything works.

Iterators

• Everything that can be iterated over is now an iterable object.

• Anything that is not iterable by itself can be made iterable by giving it your own Symbol.iterator.

Every enumerable data type and every iterable data structure gets an iterator and becomes iterable. You can loop over a string or an array, and over the new data structures such as Map and Set; each of them must provide its own iterator. We can now access the iterator itself directly. It has also become possible to make a non-iterable object iterable, which is sometimes very convenient.

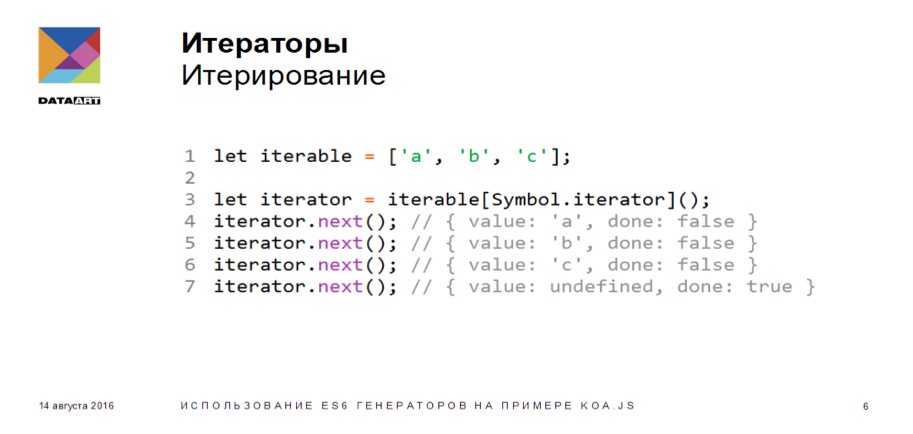

What is an iterator? As you can see, we have the simplest possible array. The array has a key, the Symbol.iterator symbol, which is in fact a factory that returns an iterator. Each call to this factory returns a new iterator instance, so we can iterate through them independently of each other. As a result, we get a variable that holds a reference to an iterator. We then step through it with its only method, next. The next method returns an object with two keys: value, the current value of the iteration, and done, the state of the iterator (here done: false).
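Since the original snippet is an image, here is a minimal text sketch of the same idea. The array contents and variable names are illustrative assumptions, not the original code.

```javascript
const letters = ["a", "b", "c"];

// Symbol.iterator is the factory: each call returns a fresh, independent iterator.
const first = letters[Symbol.iterator]();
const second = letters[Symbol.iterator]();

const step1 = first.next();    // { value: "a", done: false }
const step2 = first.next();    // { value: "b", done: false }
const other = second.next();   // { value: "a", done: false }, unaffected by `first`

first.next();                  // { value: "c", done: false }
const finished = first.next(); // { value: undefined, done: true }
```

Note that done becomes true only on the call after the last element, and value is undefined at that point.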

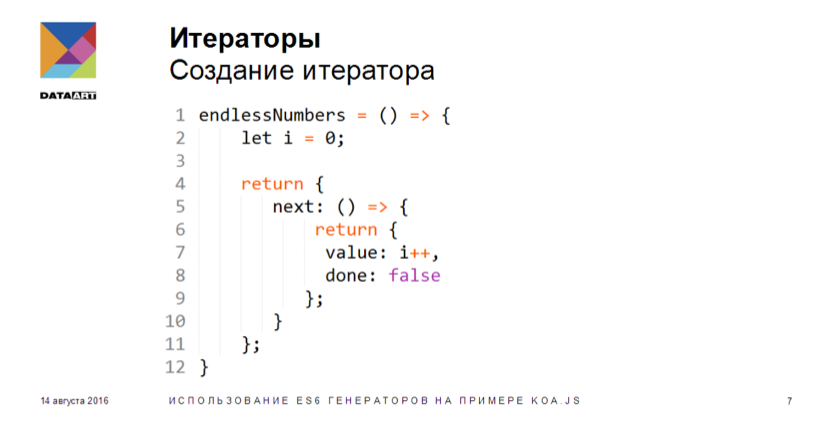

We can also write an iterator ourselves. In essence it is a factory, an ordinary function. Suppose we have a function endlessNumbers with an index and a next method: it returns an object whose single next method returns the current value and the status. This iterator will never reach the end, because we never set the done key to true.

Iterators are used quite widely, especially in applications that take non-standard approaches to working with data. In my free time I am writing a JavaScript sequencer using the Web Audio API. I have a task: to play a certain note at certain intervals. Putting this in a loop would be inconvenient, so I use an iterator that simply “spits” notes into the player.
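A hand-written iterator of this kind might look roughly like the sketch below. The name endlessNumbers comes from the text; the body and the endless wrapper object are assumptions made for illustration.

```javascript
// A factory that returns an iterator which never finishes.
function endlessNumbers() {
  let index = 0;
  return {
    next() {
      // done is never set to true, so the sequence has no end.
      return { value: index++, done: false };
    },
  };
}

const numbers = endlessNumbers();
const zero = numbers.next(); // { value: 0, done: false }
const one = numbers.next();  // { value: 1, done: false }

// Any object becomes iterable once it exposes the factory under Symbol.iterator.
const endless = { [Symbol.iterator]: endlessNumbers };

const firstThree = [];
for (const n of endless) {
  if (n >= 3) break; // without a break this loop would run forever
  firstThree.push(n);
}
// firstThree is [0, 1, 2]
```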

The prerequisites for generators appeared long ago. Look at how the popularity of Node.js has changed over the past five years; it indirectly reflects the popularity of JavaScript in general. The chart also shows the frequency of the search query “Callback Hell”, which grows in proportion to the spread of JavaScript. That is, the more popular JavaScript became, the more developers and clients suffered.

The noodles in the image are a structural visualization of code written without generators. This is what we all have to work with: we get used to it and, having no choice, take it for granted. When developers began to fight this phenomenon, promises appeared. The idea was to take all our callbacks and “spread” them across the code, declaring them wherever it is more convenient. In reality, however, we still have the same callback functions, just in a slightly different form. The developers continued the struggle, and so generators and asynchronous functions appeared.

Generators

Application

• Writing synchronous code.

• Writing suspendable functions.

• Writing complex iterators.

Generators allow you to write synchronous code. I used to say that the advantage of JavaScript lies precisely in its asynchrony; now let's talk about how to write synchronous JavaScript code. In fact, this code is pseudo-synchronous: the event loop does not stop, so any timers you have in the background keep running while you wait for a suspended generator to resume. You can easily perform any other necessary operations in the meantime. We can also write suspendable functions, although their use is rather narrow, unlike asynchronous operations, which are used very widely. Finally, we can write complex iterators. For example, we can write an iterator that goes to the database on each step and returns one value from it at a time.

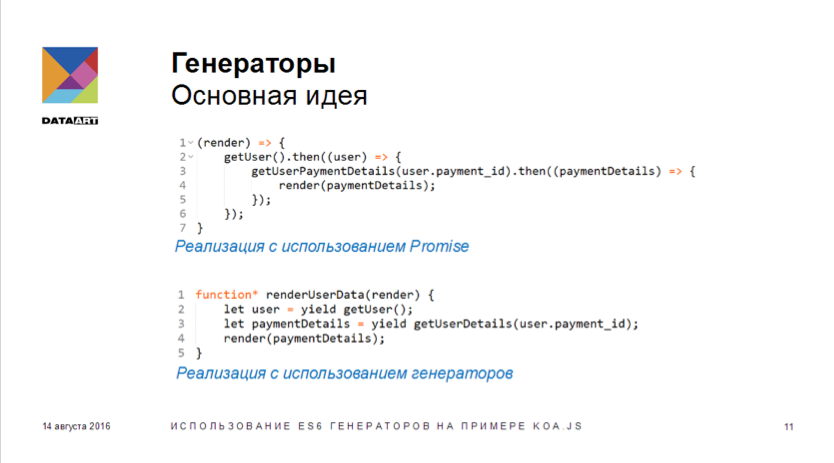

Above and below you can see snippets that are identical in functionality. We have a function that should go to the database and fetch user.payment_id. After that it should go to an external API and fetch the user's payment details that are current for today. We get the Pyramid of Doom: a callback function inside another callback function, with a nesting depth that can grow without limit. Naturally, the result is not always acceptable; even after encapsulating all the operations in separate functions, we still end up with “noodles”.

There is a solution that does the same thing with a generator. A generator has a slightly different syntax: function* (with an asterisk). A generator can be named or anonymous. As you can see, we have the yield keyword. The yield operator pauses the generator and lets us wait for the GetUser method to finish. When the data arrives, we put it in user and continue; in the same way we get paymentDetails, and then we can render all the information for our user.
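To make the pause-and-resume mechanics visible, here is a sketch in which the generator is driven by hand. getUser and getPaymentDetails are hypothetical stand-ins for the database and the external API, and showPayment is an illustrative name; none of them is the original snippet.

```javascript
// Hypothetical stand-ins for a database call and an external API call.
function getUser(id) {
  return Promise.resolve({ id, payment_id: 42 });
}
function getPaymentDetails(paymentId) {
  return Promise.resolve({ paymentId, amount: 100 });
}

function* showPayment(userId) {
  // Execution pauses at each yield until someone calls next(value).
  const user = yield getUser(userId);
  const paymentDetails = yield getPaymentDetails(user.payment_id);
  return { user, paymentDetails };
}

// Driving the generator manually: each resolved value is sent back in with next().
const gen = showPayment(1);
const result = gen.next().value
  .then((user) => gen.next(user).value)
  .then((details) => gen.next(details).value);
// result is a promise for { user, paymentDetails }
```

In practice nobody writes the driving part by hand; a runner library does it, which is where co.js comes in.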

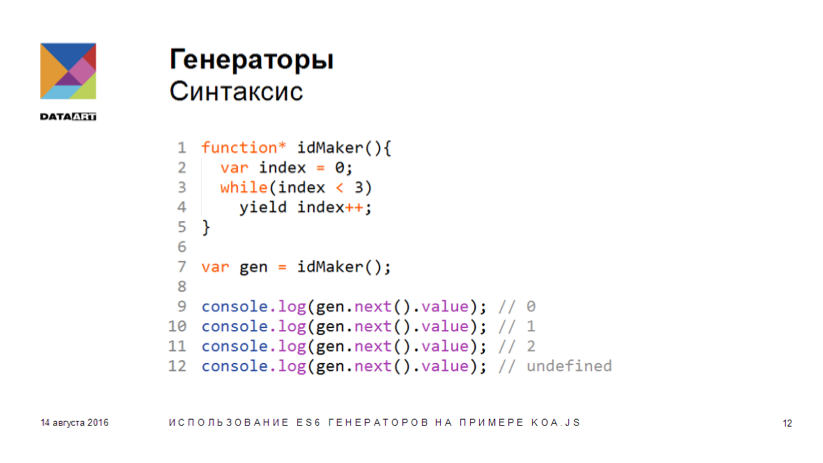

Let's look at how generators work and how we can iterate over them. Here we see the construction described earlier. As in the iterator example, there is a number generator that returns the values from 0 to 3, which we iterate over. That is, we can use the next method.
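A number generator of this kind could be sketched as follows, assuming the range 0 to 3 is inclusive; the names are illustrative.

```javascript
function* numbers() {
  for (let i = 0; i <= 3; i++) {
    yield i; // pause here and hand the value to the caller
  }
}

const it = numbers();
const firstStep = it.next(); // { value: 0, done: false }

// A generator object is also iterable, so the rest can be collected with spread.
const rest = [...it];        // [1, 2, 3]

const last = it.next();      // { value: undefined, done: true }
```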

The next() method

• Can take as an argument a value that will be passed into the generator.

• Returns an object with two keys:

value - the value of the expression yielded by the generator.

done - the state of the generator.

The next method is no different from the one in iterators: it returns value and done, and with it we can both pass a value into the generator and get a value out of it.
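The two-way exchange can be illustrated with a small sketch; the conversation generator and its strings are made up for the example.

```javascript
function* conversation() {
  // The value passed to next() becomes the result of the paused yield expression.
  const name = yield "What is your name?";
  const answer = yield `Hello, ${name}!`;
  return `You said: ${answer}`;
}

const talk = conversation();
const question = talk.next();       // { value: "What is your name?", done: false }
const greeting = talk.next("Alex"); // { value: "Hello, Alex!", done: false }
const end = talk.next("bye");       // { value: "You said: bye", done: true }
```

The first next() call only starts the generator, so an argument passed to it would be ignored: there is no paused yield to receive it yet.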

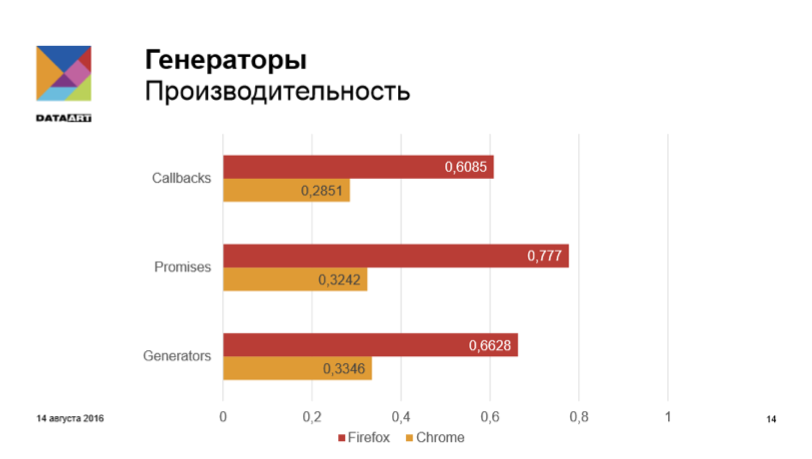

The next question is performance. Does it make sense to use what we have discussed? At the time of the talk I had no benchmarking tool at hand, so I wrote my own. Over thousands of iterations I obtained average figures for the different technologies. In Chrome, promises and generators do not differ much from each other, while both differ from callbacks by a larger margin. If we consider that the time spent on one iteration with callbacks, promises, or generators is measured in milliseconds, in practice there is no real difference. I do not think it is worth penny-pinching here. So you can freely use whichever you prefer.

No modern JavaScript talk can do without React. I will talk about Redux in particular.

redux-saga

• This is a library.

• This is a library built on generators.

• This is a library that hides impure functions out of sight.

• This is a library that allows you to write synchronous code.

In functional programming there is a very important principle: we should use pure functions. A function should not affect its environment; it should work only with the arguments we pass to it. In one form or another, our callback functions often turn into impure functions, and this, of course, should be avoided. Hence the main purpose of redux-saga: the ability to write synchronous (pseudo-synchronous) code.

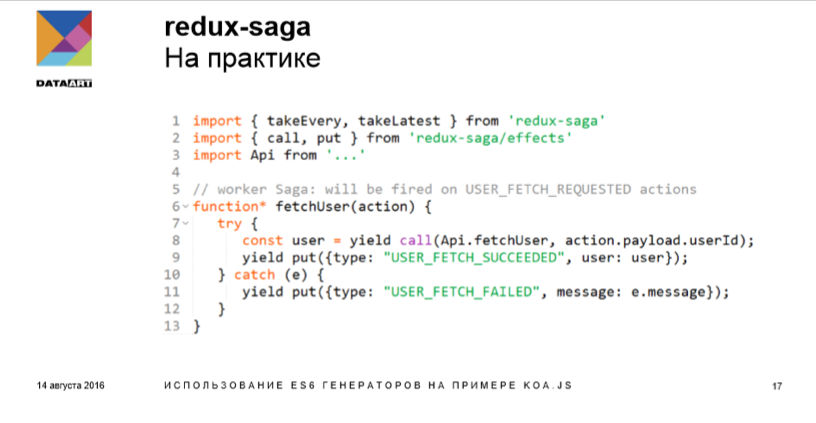

The bottom line is that, in the same way, we can use generators to pause the execution of our saga. You could say that a saga is a kind of analogue of an action that triggers another action. After waiting for the response, we dispatch the desired event to our reducer and pass along the necessary information.

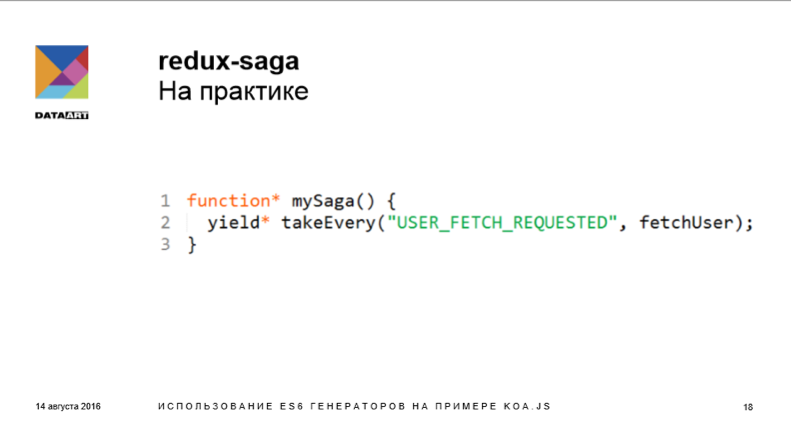

The point is quite simple: we have performed an asynchronous operation very simply and quickly. The minimal saga looks like this: there is a generator that starts our saga and calls takeEvery, one of the saga methods, which runs our saga every time the “USER_FETCH_REQUESTED” action is dispatched. You may have noticed that yield here comes with an asterisk. This is generator delegation: we can delegate execution from our generator to another generator.
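Delegation with yield* is a plain language feature and can be shown without redux-saga. The sketch below uses made-up generator names.

```javascript
function* inner() {
  yield "inner 1";
  yield "inner 2";
  return "inner result";
}

function* outer() {
  yield "outer start";
  // yield* hands control to inner() until it finishes;
  // the value of the yield* expression is inner's return value.
  const result = yield* inner();
  yield `outer got: ${result}`;
}

const delegated = [...outer()];
// ["outer start", "inner 1", "inner 2", "outer got: inner result"]
```

From the outside the caller sees a single flat sequence and cannot tell which generator produced which value.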

redux-saga: afterword

• Sagas are not declared like ordinary actions; they must be registered through sagaMiddleware.

• Obviously, sagaMiddleware itself is a middleware for your Redux store.

We have talked about the front end; now it is time to talk about the back end, that is, about koa. I have come across many back-end frameworks; kraken.js and koa.js seemed the most interesting to me, and I will dwell on the second.

koa.js

In a nutshell, it is:

• A framework for developing node.js servers.

• A node.js framework that uses ES6 generators and, in version 2, asynchronous functions.

• A node.js framework written by the express.js team.

Given the credibility of the express.js team and the company's resources, the framework inspires confidence and is developing rapidly. A solid community has already formed around it and it has acquired a lot of libraries, so it is often very easy to find a ready-made middleware solution for koa.

• “New generation framework”

What is koa? In fact, it is a framework that gives us an engine for middleware, and its architecture closely resembles the well-known game of broken telephone. There is a state that is passed in turn from one middleware to the next, and each middleware may or may not change that state (later I will show an example of a logger, which does not change it). Middleware is what we will be working with. Recall that koa.js is a middleware framework built on generators. So if we are talking about routing, convenient HTTP methods, security, CSRF protection, cross-domain requests, template engines, and so on, we will find none of that in koa itself. Koa contains only the middleware engine, and many middleware packages are written by the koa.js team itself.

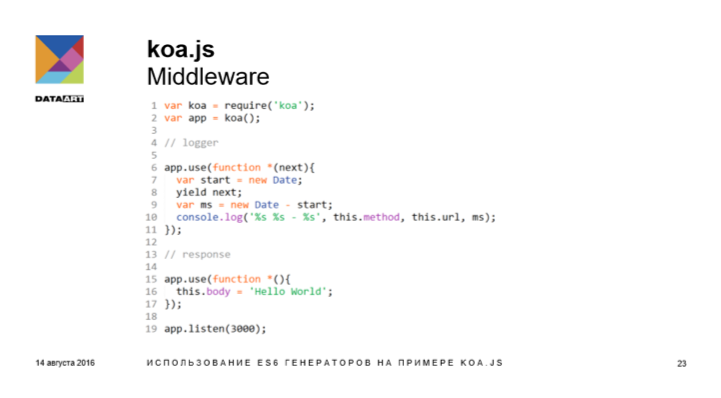

This is the simplest koa.js application. It contains a logger, the simplest implementation of middleware for koa.js. It is a generator that runs both before control is passed downstream and after control comes back, which lets us measure the time spent handling the request. Note that middleware runs in the order of declaration: whatever is declared first starts working first.
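The cascade can be sketched without koa at all. In the example below, compose is a simplified, hypothetical stand-in for what the framework does internally, and yield* is used instead of a runner so that the example executes synchronously; it is not koa's actual code.

```javascript
function* noop() {}

// Chain generator middleware so that each one receives the next as its argument.
function compose(middleware) {
  return function* composed() {
    let next = noop();
    // Wrap from the last middleware to the first, so the first ends up outermost.
    for (let i = middleware.length - 1; i >= 0; i--) {
      next = middleware[i].call(this, next);
    }
    yield* next;
  };
}

const log = [];

function* logger(next) {
  log.push("logger: before");
  yield* next;                 // hand control to the downstream middleware
  log.push("logger: after");   // runs once downstream has finished
}

function* handler(next) {
  log.push("handler");
  this.body = "Hello World";   // the shared state travels as `this`
  yield* next;
}

const ctx = {};
compose([logger, handler]).call(ctx).next();
// log is ["logger: before", "handler", "logger: after"]
// ctx.body is "Hello World"
```

The logger never touches the state; it only observes the moments before and after the rest of the chain runs, which is exactly where a timer would be started and stopped.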

koa.js

Benefits:

• A huge number of libraries wrapped with co.js.

• Modularity and lightness.

• Ability to write more understandable code.

• Ability to write less code.

• High community activity.

It might seem that koa.js is a poor framework with almost nothing in it. At the same time, there are many libraries, and most standard service functionality is available as middleware. Need cross-domain requests? Just install the package and plug in the middleware. If configuration is required, you just pass parameters, and you have cross-domain requests. Need authorization with a JWT token? Same thing: three lines of code are enough. Need to work with a database? No problem.

There are many such cases, and working with the framework becomes like playing with a construction set: you just try different packages and everything works. Everyone was waiting for this kind of encapsulation, and now we have it. Because the framework itself lacks functionality, it has become lighter; there are also some standard components that have to be finished off later. Now you can write more understandable code. Generators let you write pseudo-synchronous code, so you can reduce the number of obscure and unnecessary things in the application. As a result, you can write less code. There is active community support and many plug-ins that are starting to compete with each other. The best ones win and many drop out along the way, which is of course healthy overall.

The table compares what ships with the Koa, Express, and Connect frameworks. As you can see, koa has nothing but the middleware kernel.

It is worth saying a few words about co.js itself:

Co.js is a wrapper around generators and promises that simplifies working with asynchronous operations. It is more accurate to read co as “coroutines in JavaScript”. The idea of coroutines is not new and has long existed in other programming languages.

The basic idea is to transfer control from the main program to a coroutine, which in turn can return control to the main program. Generators implement part of this process in JavaScript.

To put it in terms more familiar to a JS developer, co.js runs the generator, removing the need to call the generator's next() step by step. In turn, co returns a promise, which lets us track completion and catch errors (with the catch method). The coolest thing is that in co you can yield an array of promises (à la Promise.all). It is also worth noting that co handles generator delegation.
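To show the idea rather than the library, here is a minimal runner in the spirit of co. The run function is a hypothetical simplification written for this example; it is not co's source and covers only promises and arrays of promises.

```javascript
function run(generatorFunction) {
  return new Promise((resolve, reject) => {
    const gen = generatorFunction();

    function step(method, input) {
      let result;
      try {
        result = gen[method](input); // resume the generator, or throw into it
      } catch (err) {
        reject(err);                 // an uncaught error rejects the outer promise
        return;
      }
      if (result.done) {
        resolve(result.value);
        return;
      }
      // An array of promises is awaited as a whole, like Promise.all.
      const pending = Array.isArray(result.value)
        ? Promise.all(result.value)
        : Promise.resolve(result.value);
      pending.then(
        (value) => step("next", value),
        (err) => step("throw", err)
      );
    }

    step("next");
  });
}

const ok = run(function* () {
  const one = yield Promise.resolve(1);
  const [two, three] = yield [Promise.resolve(2), Promise.resolve(3)];
  return one + two + three;
});
// ok resolves to 6

const failed = run(function* () {
  yield Promise.reject(new Error("boom"));
}).catch((err) => err.message);
// failed resolves to "boom"
```

Because a rejected promise is thrown back into the generator, the generator body could also handle it with an ordinary try/catch around the yield.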

koa.js

A couple of useful packages to start:

• koa-cors - enables cross-domain requests in one line.

• koa-route - full-fledged routing.

• koa-jwt - server-side implementation of authorization with a JWT token.

• koa-bodyparser - body parser for incoming requests.

• koa-send - serving static files.

Listed above, as an example, are several middleware packages you can use in a real application. The koa-cors package enables cross-domain requests, koa-route provides Express-like routing, and so on.

The main disadvantages of koa.js are the flip side of the fact that the framework ships bare. You have to constantly monitor the quality of the packages, which come with no guarantee of working well together, and sometimes getting rid of bugs becomes difficult. The second problem is hiring: at the moment, unfortunately, not many people work with koa.js. Because of this, it takes longer to bring a new person onto the project, and for a small project that may not pay off. So koa.js should be used wisely.

koa.js 2

New generation framework?

Koa.js 2 is a very tricky framework. It runs on a specification that does not exist. You can find articles about asynchronous functions that say they are part of ECMAScript 7 or ECMAScript 2016. But in fact, even though Babel, Google Chrome, and Microsoft Edge support asynchronous functions, they do not exist in the standard. Many expected asynchronous functions to be included in the official release of ECMAScript 7 (2016), but in the end it shipped with bug fixes and just two new features. Meanwhile, koa.js 2 runs on asynchronous functions and developers write code with them. And all this is positioned as a new generation framework.

Async functions

Overview

• Async is a Promise.

• Await is a Promise.

Asynchronous functions, both async and await, are Promise underneath.

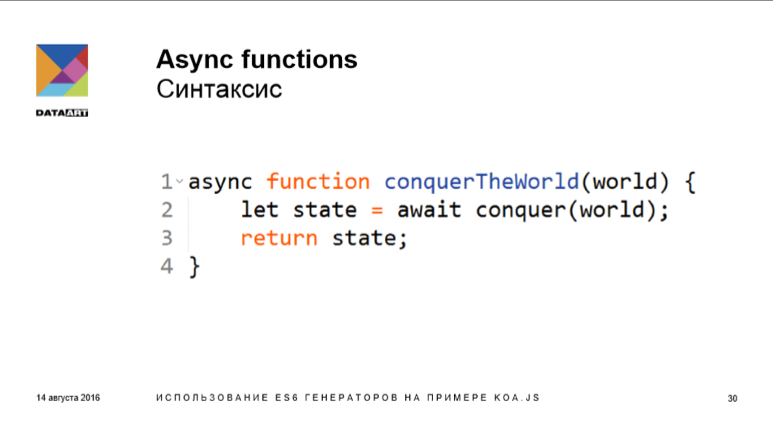

Suppose we have this code. If we remove async and await, put an asterisk after function, and put yield before conquer, we get a generator. So what is the difference? The difference is that conquer is expected to return an ordinary Promise, and there is no need to wrap our asynchronous functions for generators; it is simply not required. We can take, for example, the new fetch method for making requests to the server. Then we wait for the result, and when we get it, we put it in the state and return the state.
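The correspondence between the two forms can be sketched side by side. Here conquer is a hypothetical promise-returning function (the name comes from the text, the body is an assumption), and the state object is illustrative.

```javascript
// Hypothetical asynchronous operation; any function returning a promise would do.
function conquer() {
  return Promise.resolve("world");
}

// Generator version: it needs an external runner (such as co) to drive it.
function* conquerWithGenerator(state) {
  state.conquered = yield conquer();
  return state;
}

// Async version: same shape, but the language itself drives it.
async function conquerWithAsync(state) {
  state.conquered = await conquer();
  return state;
}

// Calling an async function always returns a promise.
const asyncResult = conquerWithAsync({});
// asyncResult resolves to { conquered: "world" }
```

The generator version, called on its own, only produces a paused generator object; nothing happens until something calls next() on it.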

Async functions

Afterword

• Asynchronous functions are more convenient than generators (less code, there is no need to wrap promises for generators).

• Asynchronous functions are not yet part of the standard and it is not clear whether they will become part of it.

Asynchronous functions are certainly more convenient than generators; they let us write less code. There is no need to write glue code: you can take any library that returns a promise (which is almost all modern libraries). This saves a lot of time and money. The downside is that asynchronous functions are still a draft specification. So in the end it may turn out the same way as with screen capture in WebRTC: applications using the feature appeared, and then it was abandoned.

The moral of everything described in this article is quite simple. I am not saying that generators replace promises or callbacks. I am not saying that asynchronous functions can replace generators, callbacks, and promises. But we have new tools for making code beautiful and structured. Whether to use them is up to you: decide based on how reasonable and applicable each tool is in your specific project.

List of resources used

Images borrowed from: 1, 2, 3.

Snippets borrowed from here.

Source: https://habr.com/ru/post/312638/