What happened when we got tired of watching the graphs of 5,000 servers in monitoring (and what changed once there were more than 10,000)

At Odnoklassniki, we look for bottlenecks in an infrastructure of more than 10,000 servers. When we got tired of manually monitoring 5,000 servers, we needed an automated solution.

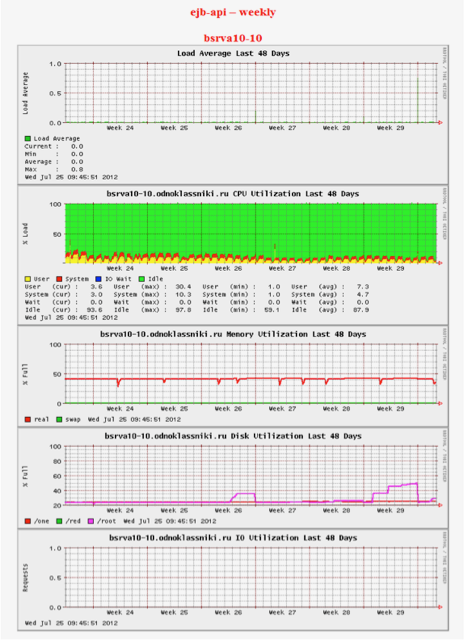

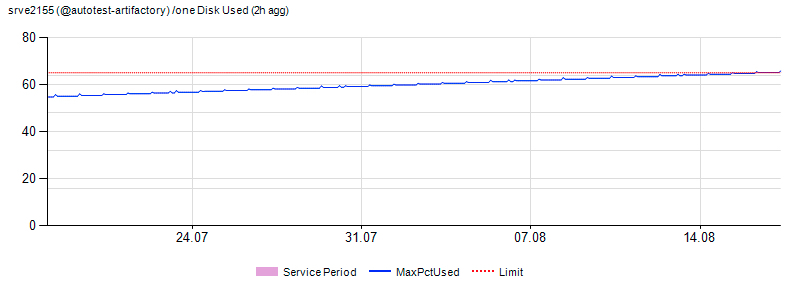

More precisely, it went like this. Back in ancient times, when roughly the 20th server appeared, the team started using Big Brother, the simplest kind of monitoring: it just collects statistics and shows them as small pictures. Everything is very, very simple. You can neither zoom in nor set ranges of acceptable values. You just look at the pictures. Like these:


Two engineers spent one working day a week just looking at them and filing tickets wherever a graph looked "off". I know it sounds really strange, but it started with a few machines and then somehow suddenly grew to 5,000 instances.

So we built a new monitoring system, and now we spend 1-2 hours a week processing alerts for 10,000 servers. Here is how it works.

Why it is needed

Incident management was set up long before this work began. On the hardware side, the servers worked properly and were well maintained. The difficulties were in predicting bottlenecks and identifying atypical errors. In 2013, a failure at Odnoklassniki made the site unavailable for several days, which we have already written about. We learned from this and have paid a lot of attention to incident prevention since.

As the number of servers grew, it became important not only to manage, monitor and account for all resources efficiently, but also to quickly catch dangerous trends that could lead to bottlenecks in the site infrastructure, and to keep detected problems from escalating. This article is about how we analyze and predict the state of our IT resources.

Some statistics

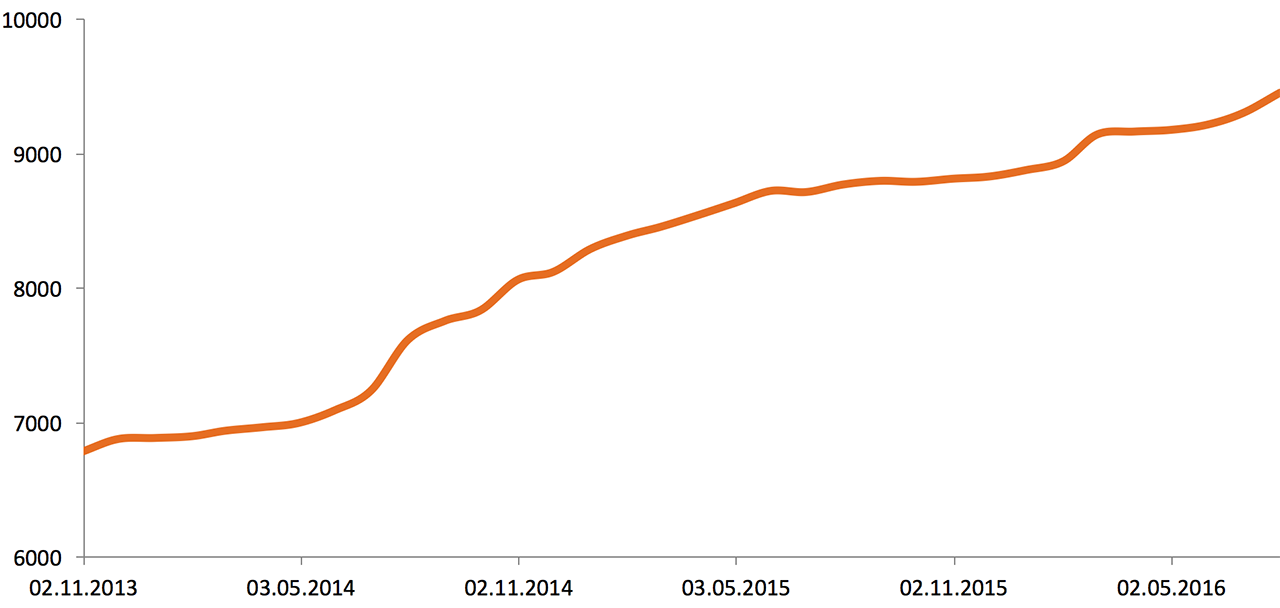

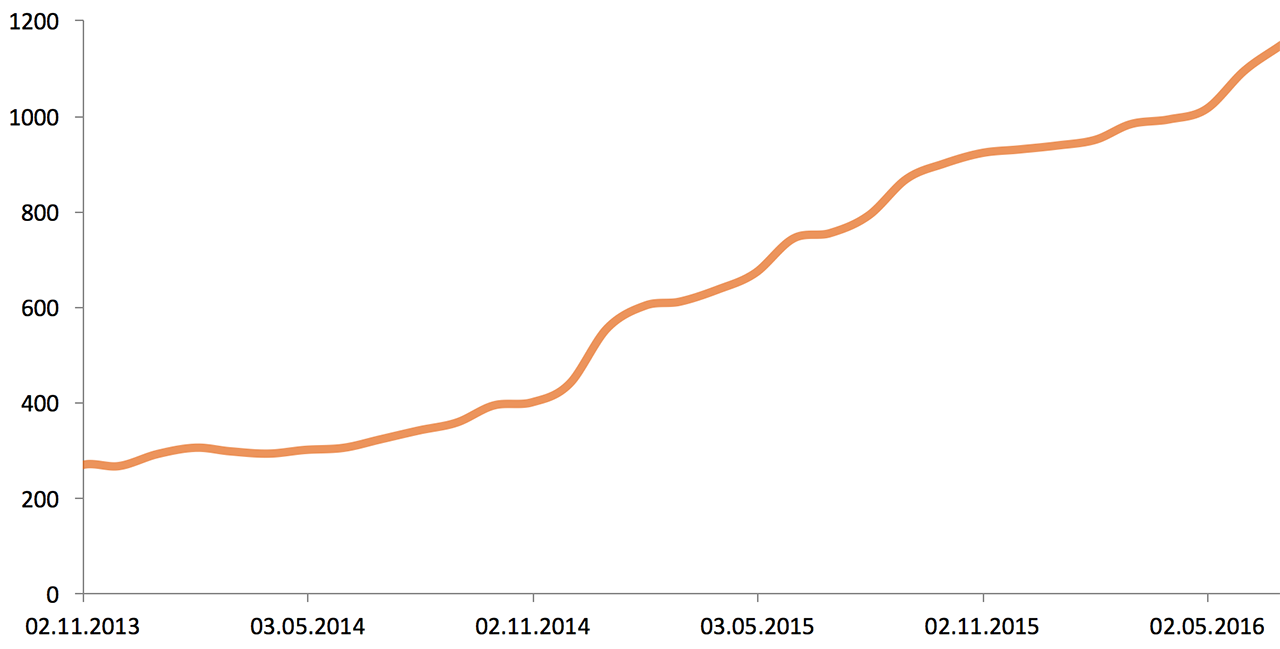

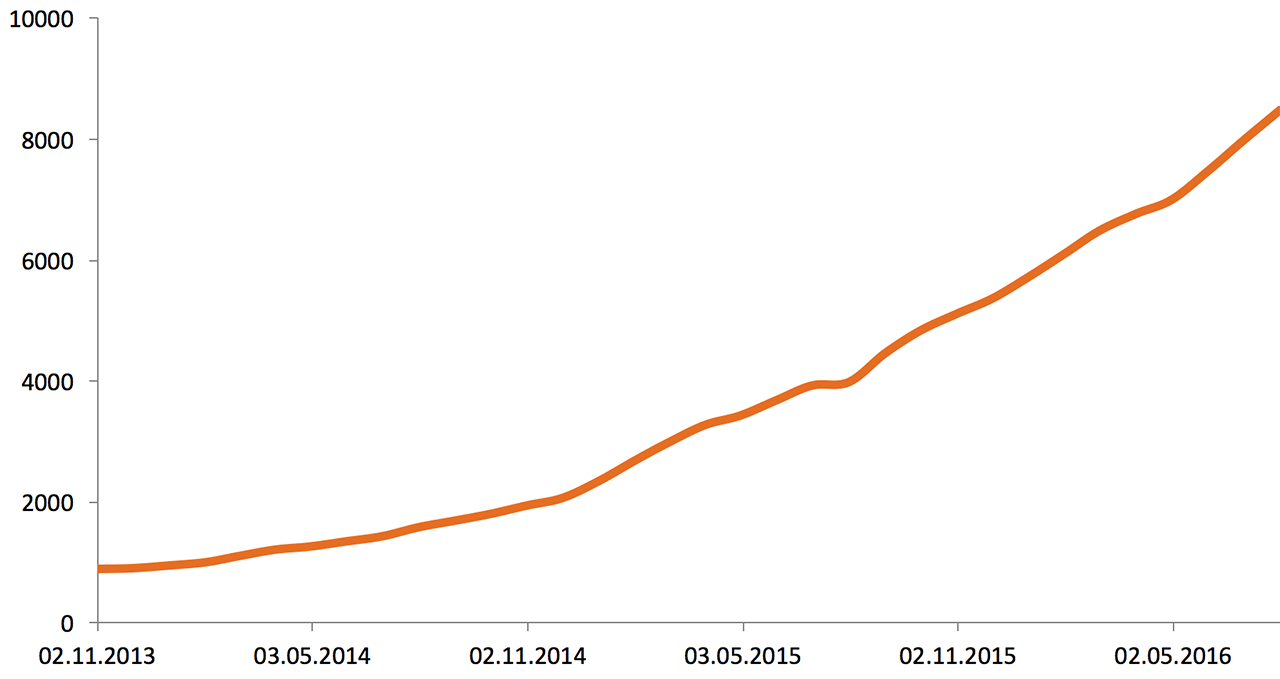

In early 2013, the Odnoklassniki infrastructure consisted of 5,000 servers and data storage systems. Here is the growth over two and a half years:

The volume of external network traffic (2013-2016), Gbit/s:

The volume of stored data (2013-2016), TB:

The monitoring team watches application performance, hardware and business metrics 24/7. Because the already large infrastructure keeps growing, fixing a bottleneck in it can take considerable time. That is why operational monitoring alone is not enough: to deal with trouble as quickly as possible, you need to predict load growth. This is done as follows: once a week the monitoring team starts an automatic check of the operational metrics of all servers, disk arrays and network devices, which produces a list of all potential equipment problems. The list is passed to system administrators and network specialists.

What is monitored

On all servers and their arrays, we check the following parameters:

- total processor load, as well as the load on a single core;

- disk utilization (I/O utilization) and disk queue (I/O queue). The system automatically detects whether a disk is an SSD or an HDD, since their limits are different;

- free space on each disk partition;

- memory utilization. Since different services use memory differently, there are several formulas that calculate used / free memory for each server group, taking into account the specifics of the tasks that group handles (the default formula is Free + Buffers + Cached + SReclaimable - Shmem);

- swap and tmpfs usage;

- load average;

- traffic on server network interfaces;

- GC Count, Full GC Count and GC Time (for Java garbage collection).
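
As an illustration of the default memory formula from the list above, here is a minimal sketch. It assumes the counters arrive as text in the Linux /proc/meminfo format, which is an assumption for the example only (the data described in this article is collected over SNMP), and the function name `available_kb` and the sample values are made up.

```python
def available_kb(meminfo_text: str) -> int:
    """Default formula from the text: Free + Buffers + Cached + SReclaimable - Shmem.

    Assumes /proc/meminfo-style lines such as "MemFree:  1000000 kB".
    """
    fields = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])  # values are reported in kB
    return (fields["MemFree"] + fields["Buffers"] + fields["Cached"]
            + fields["SReclaimable"] - fields["Shmem"])


# Hypothetical sample values, not real measurements.
SAMPLE = """\
MemTotal:       16384000 kB
MemFree:         1000000 kB
Buffers:          200000 kB
Cached:          5000000 kB
Shmem:            300000 kB
SReclaimable:     400000 kB
"""

free_kb = available_kb(SAMPLE)
used_pct = 100.0 * (1 - free_kb / 16384000)
print(f"free: {free_kb} kB, used: {used_pct:.1f}%")
```

A per-group variant would simply swap in a different combination of the same fields, which is why a formula per server group is enough to cover services that use memory differently.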

On core switches and routers, we check memory usage, processor load and, of course, traffic. We don't monitor port traffic on access-level switches, because we check it directly at the servers' network interfaces.

Analytics

Data is collected from all hosts over SNMP and then accumulated in the DWH (Data Warehouse) system. We described DWH on Habr earlier, in the article "BI in Odnoklassniki: data collection and delivery to DWH". Each data center has its own servers for collecting these statistics. Statistics are collected from each device every minute, and the data is uploaded to DWH every 90 minutes. Each data center generates its own data set of 300-800 MB per upload, which adds up to 300 GB per week. The data is not approximated, so you can find the exact time a problem started, which helps to find its cause.

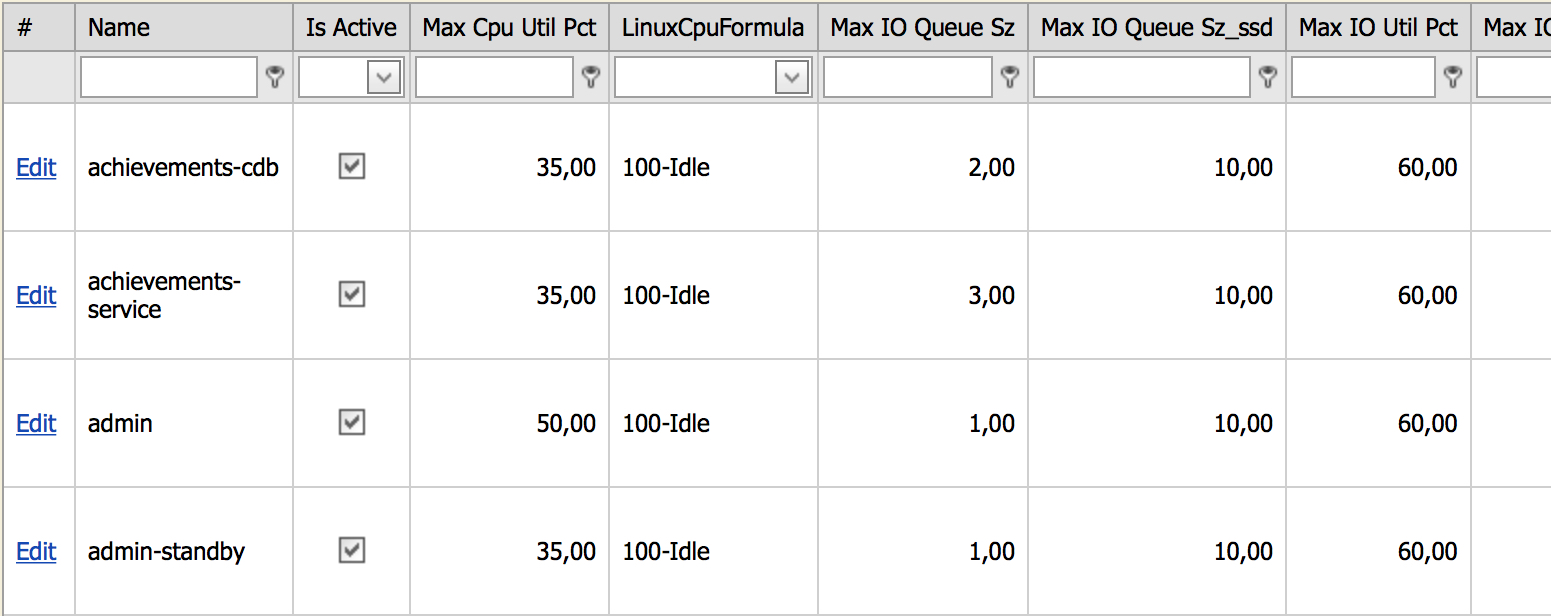

All servers are divided into groups according to their role in the infrastructure, and limits are set per group. When a device exceeds a limit, it appears in the report. All hosts are grouped in the Service Catalog, a system developed specifically for this. Since limits are set on a server group, new servers in the group are automatically monitored with the same limits. If an administrator creates a new group, strict default limits are applied to it automatically, and if servers of the new group show up in a check, a decision is made on whether that is normal. If the developers consider this normal behavior for the group, the limit is raised. For convenient limit management there is a control panel, which looks like this:

In this admin panel you can see all unique server groups and their limits. For example, the screenshot shows the limits for the achievements-cdb group:

- maximum processor load should not exceed 35%;

- the processor load is calculated as 100% - idle;

- the disk queue limit is 2 for HDD and 10 for SSD.

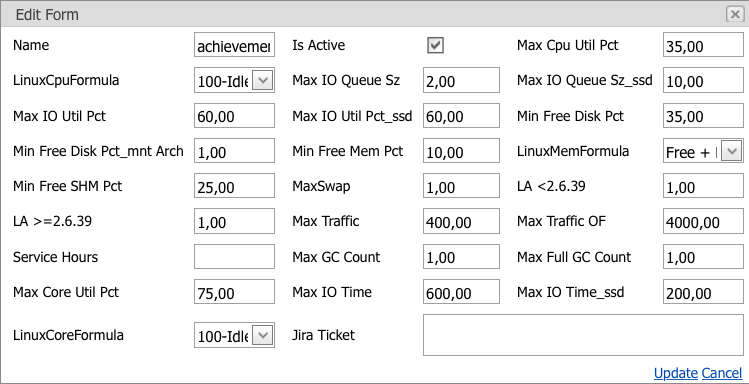

And so on. A limit can be changed only with a reference to a task that describes the reasons for the change. The limit change window:

The screenshot lists all current limits for a group of servers with the possibility of changing them. You can apply changes only if the “Jira Ticket” field is filled; it records the task in which the problem was solved.

At night, when the load on the servers is much lower, various maintenance and batch jobs run and create additional load. They do not affect users or the site's functionality, so we treat these triggers as false positives, and to combat them we introduced an additional parameter, the service window. A service window is a time interval during which exceeding a limit is not considered a problem and is not included in the report. Short-term spikes are also treated as false positives. Network and server checks are separate, since server and network problems are handled by different departments.
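
The false-positive filtering described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the actual system: the function names, the 01:00-05:00 service window and the 30-minute minimum duration for a "real" exceedance are all hypothetical. Only the 35% CPU limit and the one-minute sampling interval come from the text.

```python
from datetime import datetime, time, timedelta


def in_service_window(ts: datetime, start: time, end: time) -> bool:
    """True if ts falls inside the window; also handles windows crossing midnight."""
    t = ts.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def exceeds_limit(samples, limit, window, min_minutes):
    """samples: list of (datetime, value) pairs, one per minute, sorted by time.

    Returns True only if the value stays above `limit` for at least
    `min_minutes` consecutive samples that lie outside the service window.
    """
    run = 0
    for ts, value in samples:
        if value > limit and not in_service_window(ts, *window):
            run += 1
            if run >= min_minutes:
                return True
        else:
            run = 0  # a sample inside the window or under the limit breaks the run
    return False


def minute_series(start: datetime, values):
    """Helper for the example: attach one-minute timestamps to a list of values."""
    return [(start + timedelta(minutes=i), v) for i, v in enumerate(values)]


WINDOW = (time(1, 0), time(5, 0))   # hypothetical nightly service window
CPU_LIMIT = 35.0                    # percent, as in the achievements-cdb example

night_job = minute_series(datetime(2016, 10, 12, 2, 0), [90.0] * 60)
short_spike = minute_series(datetime(2016, 10, 12, 14, 0), [90.0] * 5 + [20.0] * 55)
sustained = minute_series(datetime(2016, 10, 12, 14, 0), [90.0] * 60)

for name, series in [("night_job", night_job), ("short_spike", short_spike),
                     ("sustained", sustained)]:
    print(name, exceeds_limit(series, CPU_LIMIT, WINDOW, min_minutes=30))
```

In this sketch only the sustained daytime load would reach the report; the nightly job and the five-minute spike are dropped.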

What happens to the report

The report is generated on Wednesdays with one click. One of the five "daytime" engineers is then taken off the usual "patrolling" of the site and ticket handling and starts reading the report. The review takes no more than an hour and a half. The report lists all potential problems in the infrastructure, both new ones and old ones that have not been resolved yet. Each problem can become an alert for an admin, and the sysadmin decides whether to fix it right away or open a ticket.

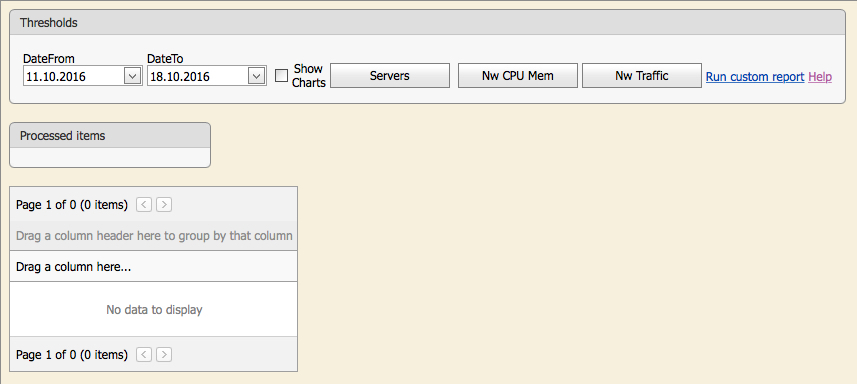

The picture shows the initial check window. The user can click on the appropriate button and start the automatic check: “Servers” - all servers, “Nw CPU Mem” - use of memory and processor on network devices, “Nw Traffic” - traffic on central switches (core) and routers.

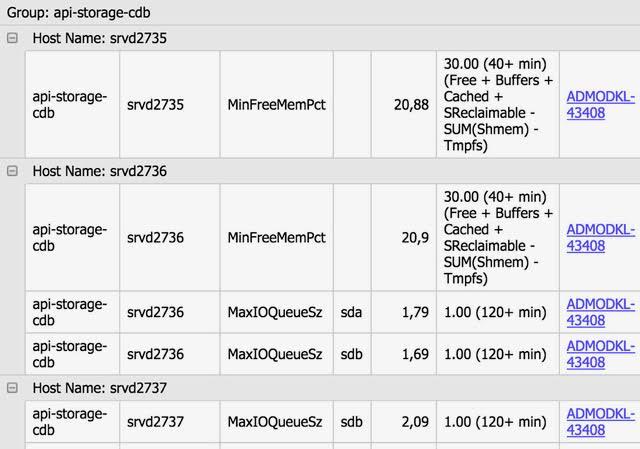

The system is integrated with JIRA (a task management system). If a problem (an exceeded limit) is already being handled, a link to the ticket in which it is being worked on is shown next to it, along with the ticket status. This also shows which task resolved the problem earlier: such a ticket has the status Resolved.

All new problems are passed to senior system administrators and senior network specialists, who create a JIRA ticket for each problem; the tickets are then linked to the corresponding problems. A system administrator can also run an ad hoc check on any parameter: it is enough to specify the server group(s), the parameter and the limit, and the system lists all servers that exceeded the limit. You can also view the chart of any parameter on any server, with the limit drawn as a line.

An example of a server on radar:

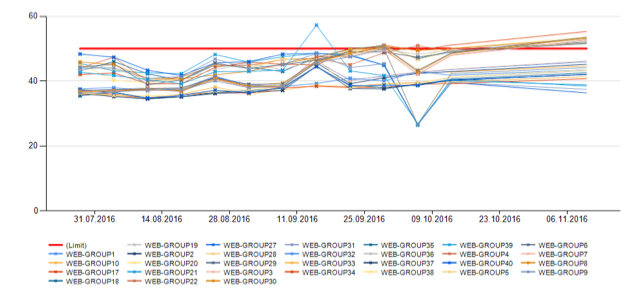

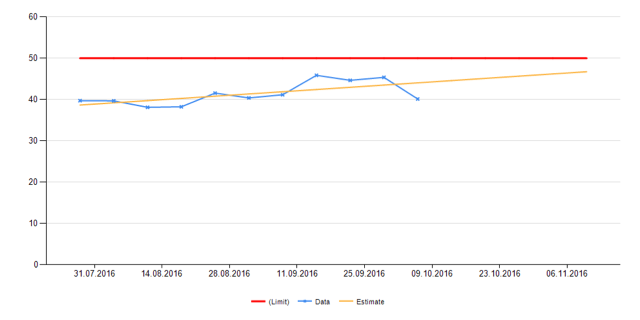

For the largest groups of servers (web front ends, the business logic tier and the user data cache), a long-term forecast is made in addition to the usual check, since solving problems with these groups requires considerable resources (equipment, time).

The long-term forecast is also produced by the monitoring team and takes about five minutes. In the report generated by the system, the monitoring specialist sees charts of the main metrics for each subgroup and for the group as a whole, with a forecast for the future. If the forecast shows the limit being exceeded within two to three months, a ticket is created for that as well. Here is an example of what the forecast looks like:

In the first picture we see CPU usage for each group of web servers separately, in the second for all of them together. The red line is the limit, the yellow line is the forecast.

The forecast is based on fairly simple algorithms. Here is an example of a CPU load prediction algorithm.

For each server group, we compute one point per seven-day period using the following formula (the example is for the front-end group):

(∑ peak load - max load - min load) / (n - 2), where:

- n - number of servers in the group;

- peak load - the highest load value that a server sustained for more than 30 minutes during the week, taken for each server separately; the sum runs over all servers in the group;

- max load - the largest of these per-server values;

- min load - the smallest of these per-server values.

To get a more accurate forecast, we drop one server with the maximum load and one with the minimum. The algorithm may seem simple, but it is very close to reality, so there is no point in inventing a complex analysis. For each server group this gives weekly points, to which a linear function is fitted.
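
A minimal sketch of this forecast, under one reading of the formula: each weekly point is the mean of the per-server peak loads after dropping the single highest and lowest server, and the trend is an ordinary least-squares line through the weekly points. The function names and sample numbers are hypothetical, and the fitting method is an assumption, since the text only says that a linear function is fitted.

```python
def weekly_point(peak_loads):
    """Mean of per-server peak loads, without the highest and the lowest server."""
    if len(peak_loads) < 3:
        raise ValueError("need at least three servers in the group")
    total = sum(peak_loads) - max(peak_loads) - min(peak_loads)
    return total / (len(peak_loads) - 2)


def fit_line(points):
    """Least-squares fit y = slope * x + intercept, where x is the week index 0, 1, 2..."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two weekly points")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(points) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, points))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def weeks_until_limit(points, limit):
    """Weeks from the latest point until the fitted line reaches `limit`.

    Returns None when the trend is flat or falling, 0.0 if the line is
    already at or above the limit.
    """
    slope, intercept = fit_line(points)
    if slope <= 0:
        return None
    crossing_week = (limit - intercept) / slope
    return max(0.0, crossing_week - (len(points) - 1))


# Hypothetical per-server weekly peak CPU loads (percent) for one group.
print(weekly_point([10, 20, 30, 40, 90]))

# Hypothetical weekly points for the group and a 35% limit.
history = [20.0, 21.0, 22.0, 23.0]
print(weeks_until_limit(history, limit=35.0))
```

With a check like "less than about 12 weeks until the limit", this would correspond to the two-to-three-month horizon at which a ticket is created.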

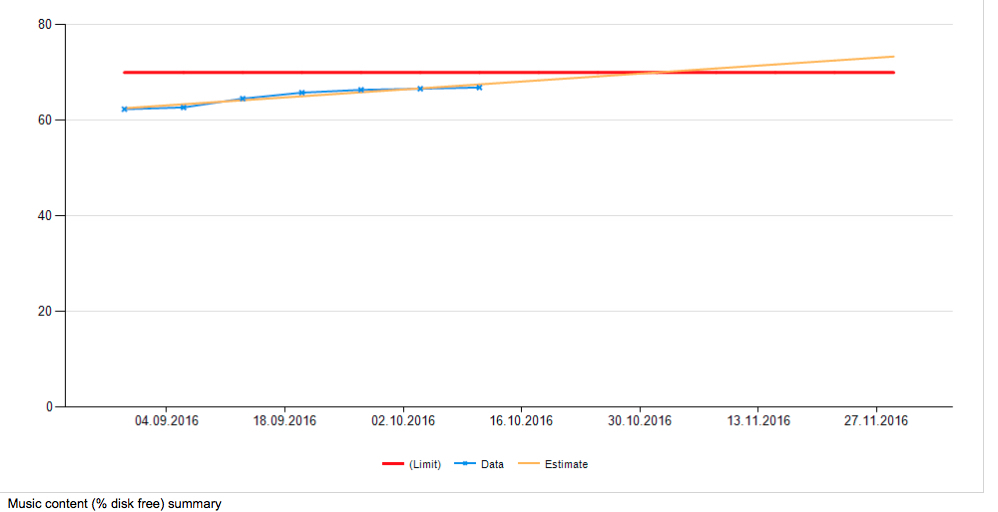

On the resulting graph, you can see the estimated date by which the group needs to be expanded or upgraded. The date when space will run out in large clusters is predicted in the same way. The photo, video and music clusters are checked:

The system excludes spare disks in the arrays, so they are not counted in these statistics.

As a result, after the engineer from the monitoring team generates the report and goes through all the problems, managers assign tasks like these to the administrators:

- expand the photo cluster;

- increase disk capacity in group1 and group2;

- solve the problem with the queue on group3;

- deal with GC on group4;

- solve the problem of traffic and load on server1 and server2;

- solve the problem with swap on group5 and group6.

None of these tasks was critical when it was created, because the system detected the problems in advance. This made the system administrators' work much easier: they didn't have to drop everything to fix problems on short notice.

New metrics

Sometimes we need new metrics, about one or two new indicators per year. Most often we add them after incidents, on the assumption that if the admin had been notified about something in advance, the incident would not have happened. Sometimes, though, workarounds are needed. For example, on a cluster of servers storing large photos, the space had somehow been filled to 80%. When expanding it, we technically could not redistribute the photos, and we wanted to monitor at 70% fill. That gave two groups: almost full servers that would generate alerts continuously, and new, perfectly clean ones. Instead of separate rules, we had to create a virtual "fake" group of machines, which solved the issue. Another example: with the mass migration to SSDs, disk queue monitoring had to be set up correctly, because typical behavior there is very different from ordinary HDDs.

Overall, the process is well established; this part is clean, easy to maintain and easy to scale. Thanks to the forecasting system, we have already several times avoided the unwelcome situation of having to buy servers suddenly. That makes very good business sense. And the admins got another layer of protection from various minor troubles.

Source: https://habr.com/ru/post/312588/
