How we built our mini data center. Part 1 - Colocation

Hello, colleagues and residents of Habr. We want to tell you the story of a small company, from its founding to where it stands today. This material is written for people who are interested in the topic. Before writing it (and long before that), we read many publications on Habr about building data centers, and they helped us a lot in putting our ideas into practice. The story is split into several stages of our work, from renting a cabinet to building our own mini data center. We hope this article helps at least one person. Let's go!

In 2015, a hosting company was founded in Ukraine with big ambitions for the future and practically no start-up capital. We rented equipment in Moscow from one of the well-known providers (who was also an acquaintance), and that got us started. Thanks to our experience, and partly to luck, within the first few months we had gained enough customers to buy our own equipment and make good connections.


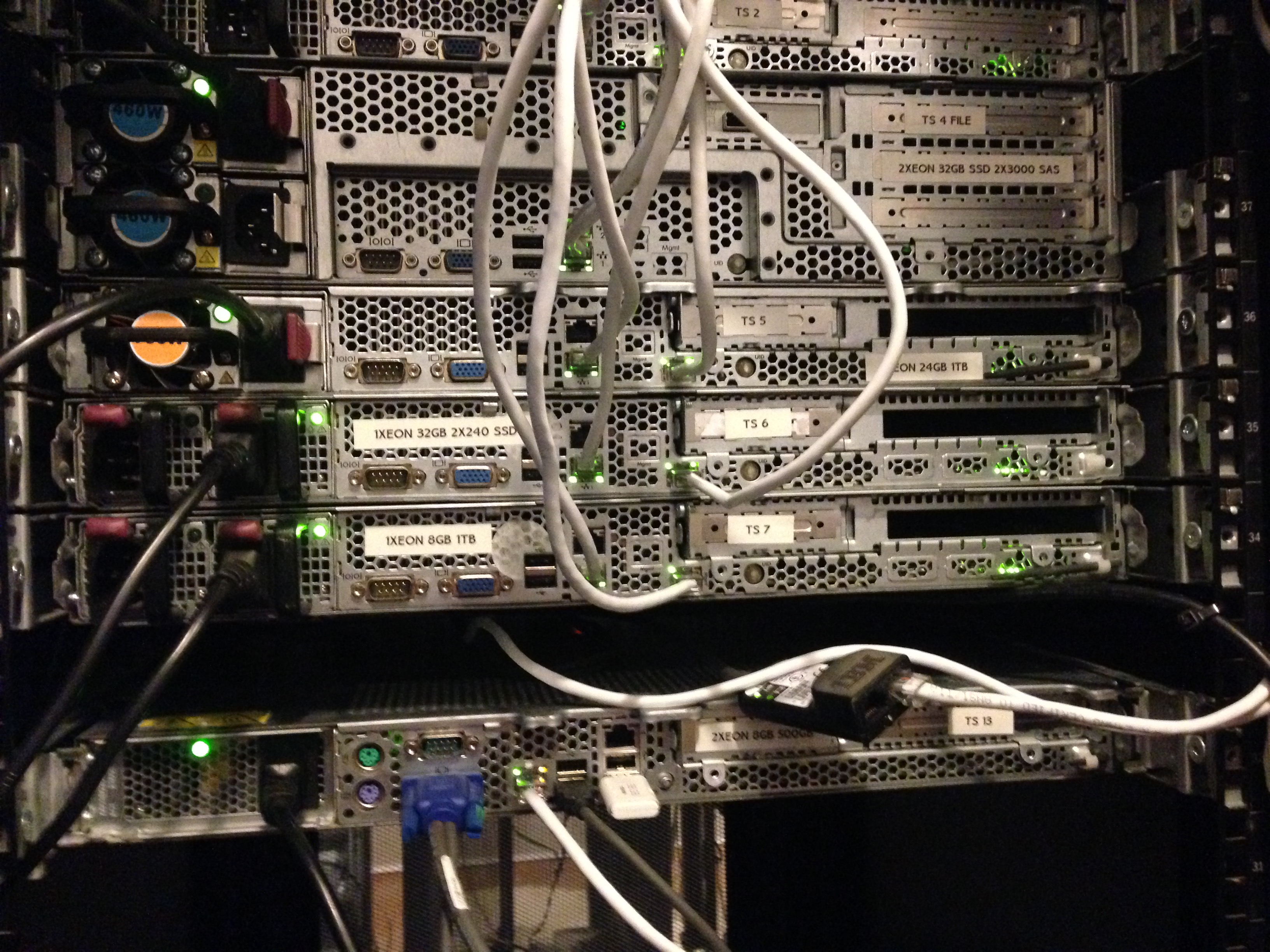

We spent a long time choosing equipment. At first we compared price against quality, but the well-known brands (HP, Dell, Supermicro, IBM) are all very good in quality and similarly priced, so that comparison did not settle anything.

So we took a different approach: we looked at what is available in Ukraine in large quantities (in case of breakdowns or replacements) and chose HP. Supermicro servers, as far as we could tell, are very flexible to configure, but their price in Ukraine was beyond all reason, and spare parts were scarce. So we chose what we chose.

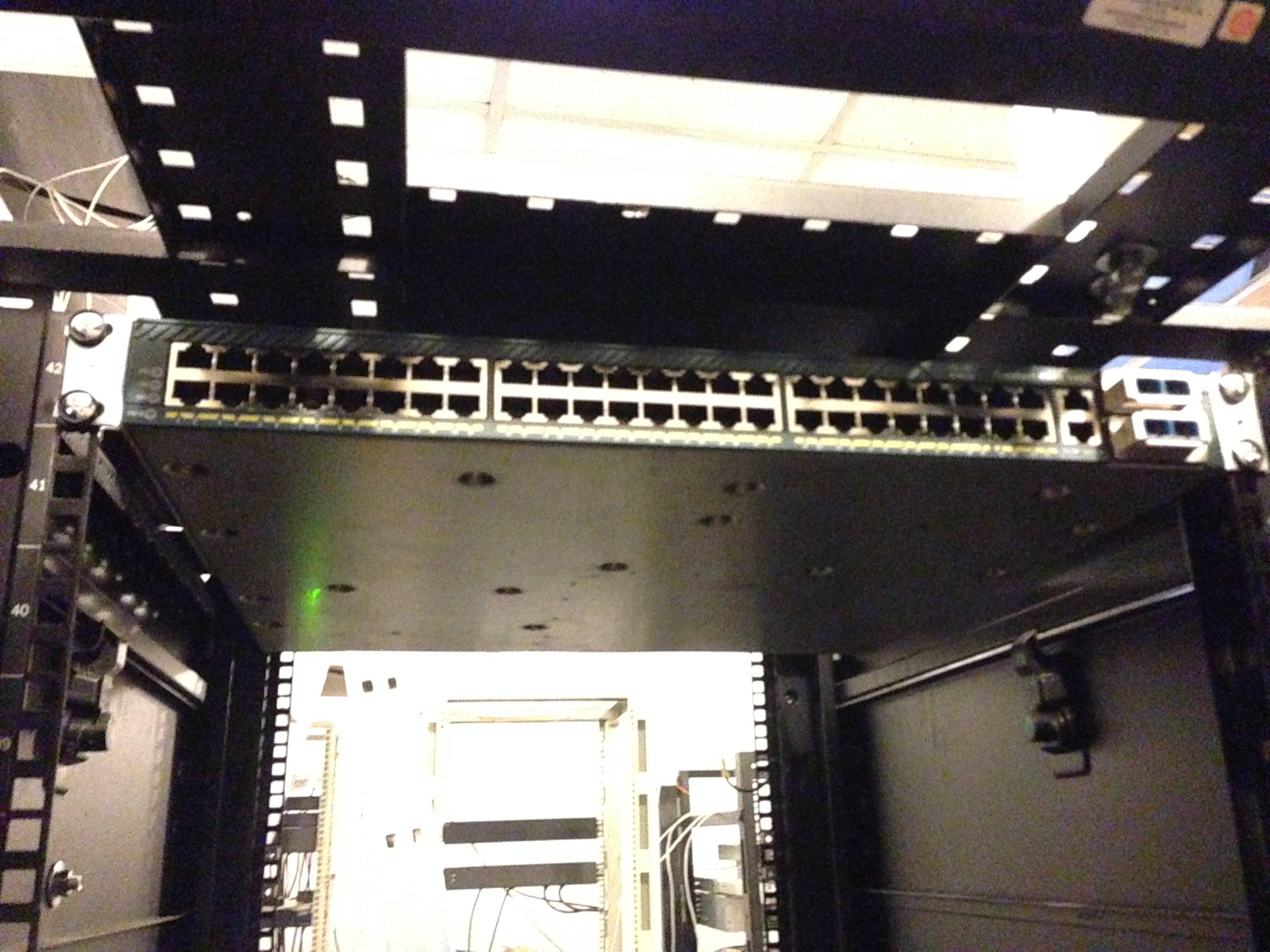

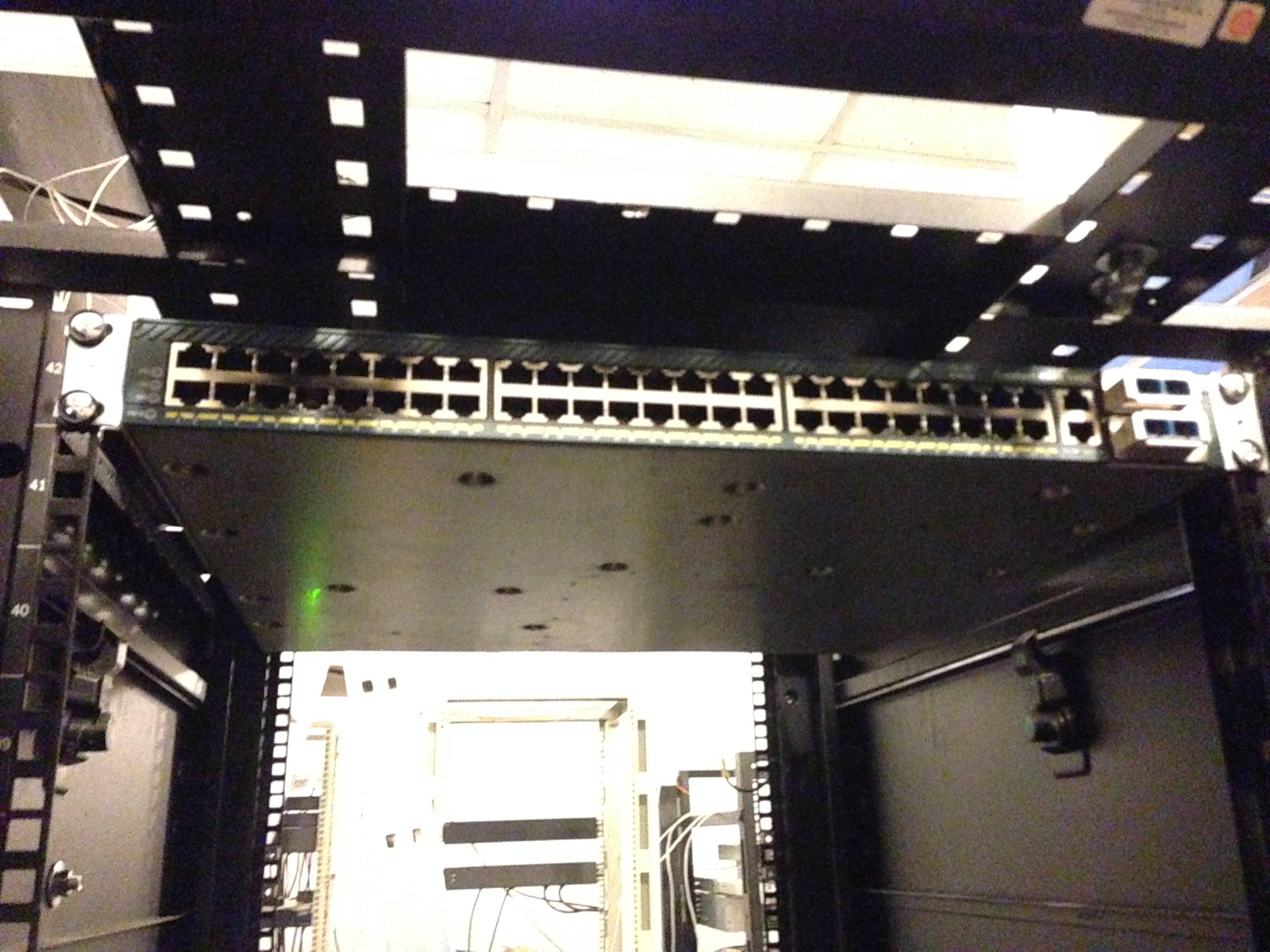

We rented a cabinet in a Dnepropetrovsk data center and purchased a Cisco 4948 10GE switch (with room to grow) and our first three HP Gen 6 servers.

Choosing HP equipment later helped us a lot because of its low power consumption. And off we went. We purchased managed power distribution units (PDUs); we wanted them for monitoring power consumption and for remote server management (automated OS installs).

Later we realized that the PDUs we had bought were not managed (switched) units but merely metered ones. Fortunately, HP servers have built-in iLO (IPMI), and that saved us. We built all of our automation on IPMI (through DCI Manager). After that we bought some tools for laying structured cabling (SCS) and slowly began to build something bigger.
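To illustrate what IPMI-based automation involves at the lowest level, here is a minimal sketch. It is not how DCI Manager works internally. It assumes the standard `ipmitool` command-line client is installed, and the host address, user name, and password are hypothetical placeholders. The functions only build the command lines; `run_all` would actually execute them.

```python
import subprocess
from typing import List


def ipmi_command(host: str, user: str, password: str, *action: str) -> List[str]:
    """Build an ipmitool argv list for a remote BMC (for example iLO) over lanplus."""
    return ["ipmitool", "-I", "lanplus", "-H", host,
            "-U", user, "-P", password, *action]


def reinstall_sequence(host: str, user: str, password: str) -> List[List[str]]:
    """Commands that make a server boot from the network and then restart it."""
    return [
        # Boot from PXE on the next start so an installer can take over.
        ipmi_command(host, user, password, "chassis", "bootdev", "pxe"),
        # Power-cycle the machine (this expects the server to be powered on).
        ipmi_command(host, user, password, "chassis", "power", "cycle"),
    ]


def run_all(commands: List[List[str]]) -> None:
    """Execute the commands in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical address and credentials, printed rather than executed.
    for cmd in reinstall_sequence("10.0.0.5", "admin", "secret"):
        print(" ".join(cmd))
```

Because power control goes through the server's own management controller, this kind of automation works even when the PDUs can only measure and cannot switch outlets.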

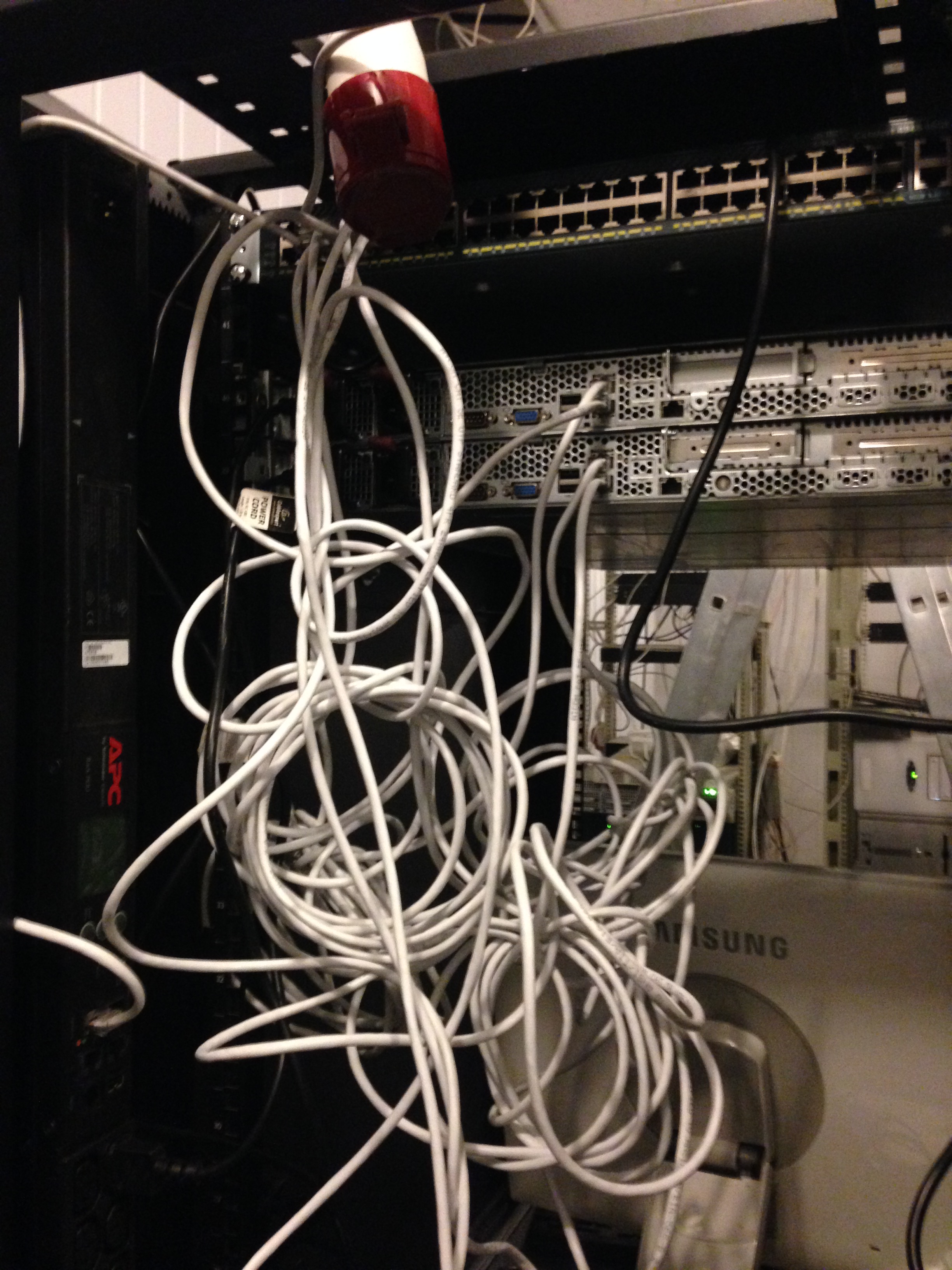

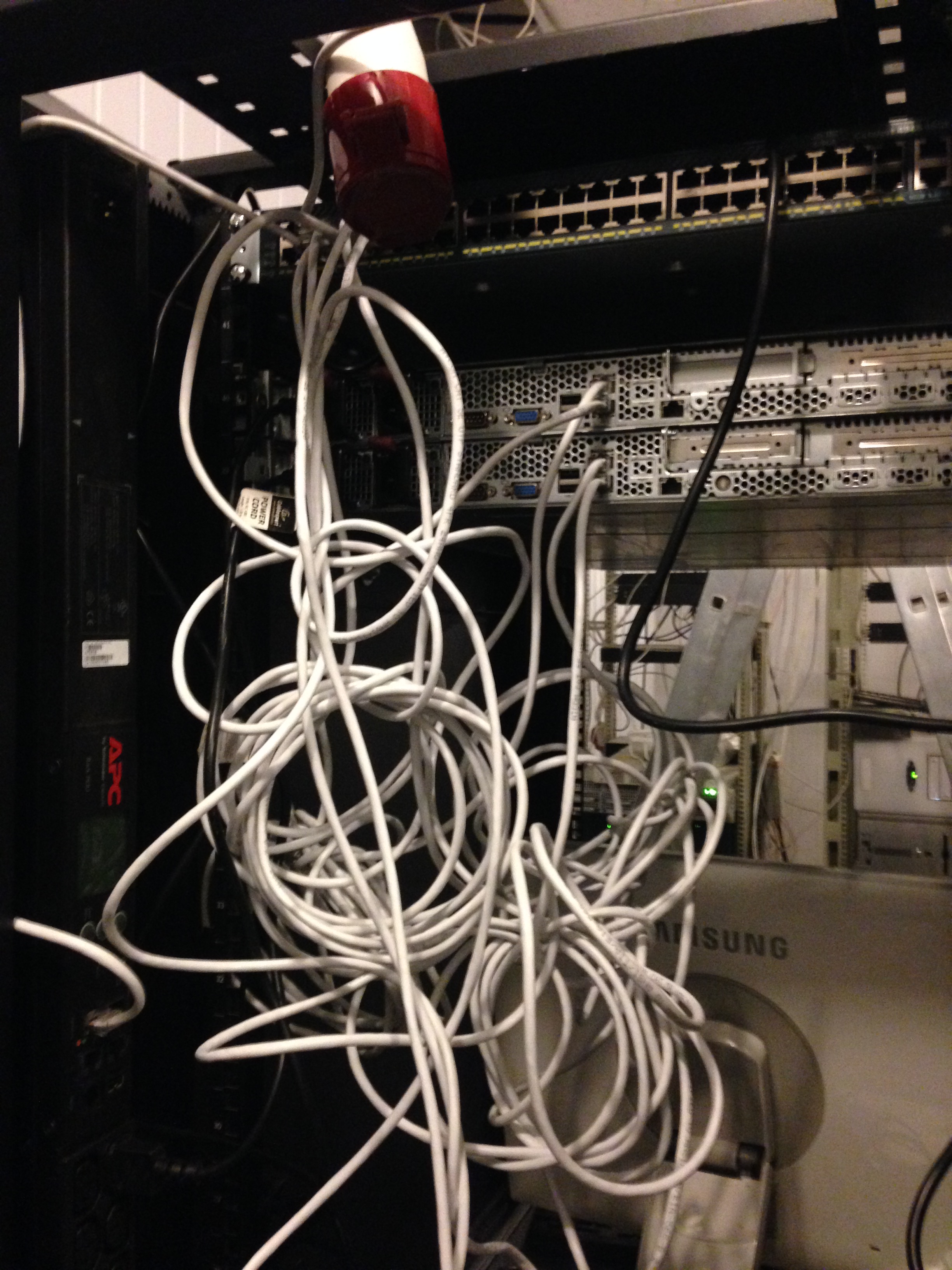

Here are the APC PDUs (the ones that turned out not to be managed) being connected:

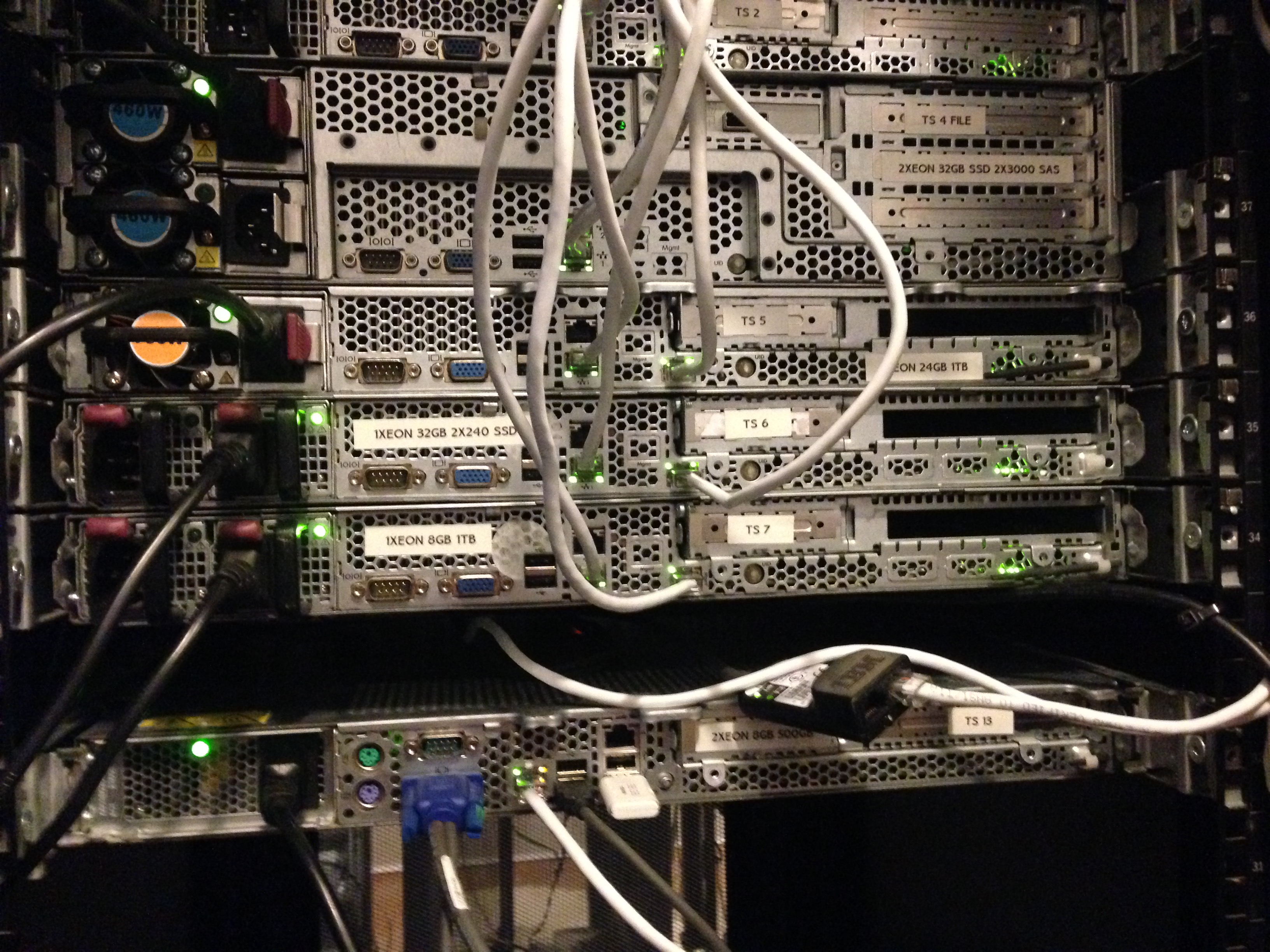

This, by the way, is how we installed the third server and the PDUs (on the right and left). For a long time that server doubled as a stand for the monitor, keyboard, and mouse.

On this equipment we set up a cloud service using VMmanager Cloud from ISP Systems. We had three servers: one was the master and another was the file server. This gave us real redundancy if one of the servers failed (other than the file server). We understood that three servers are not enough for a cloud, so additional equipment was already on its way. Fortunately, adding nodes to the cloud was possible without problems or special configuration.
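The redundancy idea above can be checked with simple arithmetic: if any one compute node fails, its load must fit into the free capacity of the others. The sketch below is a simplified illustration and not part of VMmanager Cloud. The node names and memory figures are hypothetical, and it ignores the fact that individual virtual machines cannot be split across nodes.

```python
from typing import Dict


def survives_single_failure(used_gb: Dict[str, int],
                            capacity_gb: Dict[str, int]) -> bool:
    """Return True if the load of any single failed node fits on the remaining nodes."""
    for failed, load in used_gb.items():
        # Free memory on every node except the one that failed.
        spare = sum(capacity_gb[n] - used_gb[n] for n in used_gb if n != failed)
        if load > spare:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical figures: a loaded master and an idle backup node.
    capacity = {"master": 64, "backup": 64}
    print(survives_single_failure({"master": 40, "backup": 0}, capacity))
    print(survives_single_failure({"master": 50, "backup": 50}, capacity))
```

With one loaded node and one idle backup the check passes; once both nodes are mostly full, a single failure can no longer be absorbed, which is why more hardware was needed.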

When one of the servers failed (the master or the second, backup node), the cloud quietly migrated back and forth without going down. We tested this specifically before going into production. The file server ran on fairly good disks in hardware RAID 10 (for speed and fault tolerance).
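As a rough illustration of the RAID 10 trade-off mentioned above, the sketch below computes usable capacity and checks whether an array survives a given set of failed disks. The disk count and size are hypothetical, and the pairing of disks `2k` and `2k+1` into mirrors is an assumption made for the example; real controllers may pair disks differently.

```python
from typing import Set


def raid10_usable_tb(disk_count: int, disk_size_tb: float) -> float:
    """Usable capacity: half of the raw space, since every disk is mirrored."""
    if disk_count < 4 or disk_count % 2:
        raise ValueError("RAID 10 needs an even number of disks, at least 4")
    return disk_count / 2 * disk_size_tb


def raid10_survives(disk_count: int, failed: Set[int]) -> bool:
    """The array survives as long as no mirror pair has lost both of its disks."""
    return all(not ({2 * k, 2 * k + 1} <= failed)
               for k in range(disk_count // 2))


if __name__ == "__main__":
    print(raid10_usable_tb(4, 2.0))      # four hypothetical 2 TB disks
    print(raid10_survives(4, {0, 2}))    # one disk lost from each pair
    print(raid10_survives(4, {0, 1}))    # both disks of the same pair lost
```

Striping across the mirrored pairs gives the speed, and the mirrors give the fault tolerance, at the cost of half the raw capacity.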

At that time we had several nodes in Russia on rented equipment, and we carefully began moving them to our new cloud service. There was a lot of negativity in our exchanges with ISP Systems support, because there was no proper migration path from VMmanager KVM to VMmanager Cloud, and in the end we were told that none was supposed to exist. So we migrated everything by hand, slowly and painfully. Of course, we moved only the customers who agreed to the new placement and the transfer.

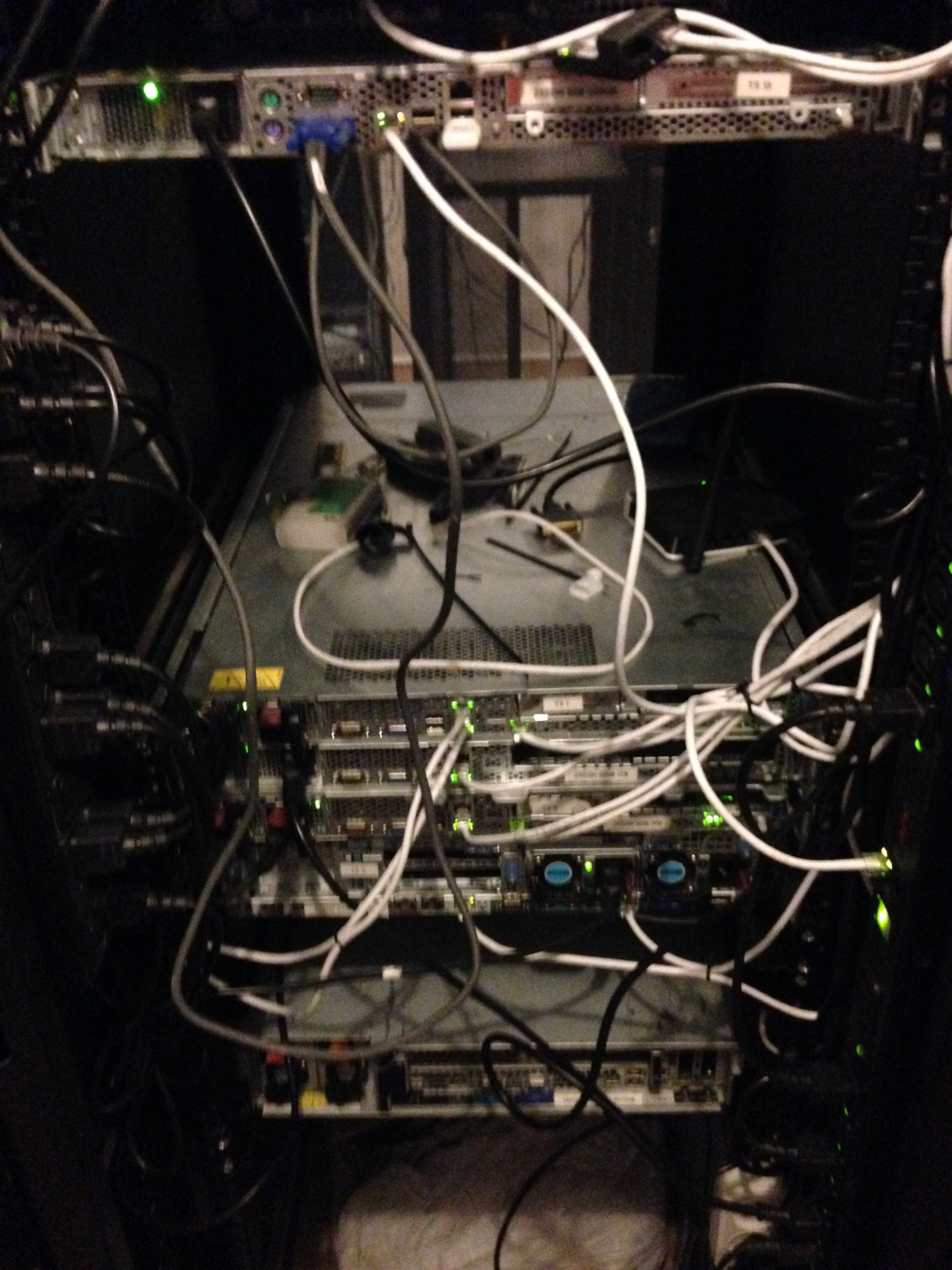

We worked this way for about a month, until we had filled half of the cabinet with equipment.

Then the second batch of equipment arrived, HP again (this is not an advertisement). It is simply better to have interchangeable servers than not.

Since none of us had much experience in laying structured cabling (apart from the administrators, who, as usual, are never around when needed), we had to learn everything on the fly. This is what our wiring looked like at first (for about a day) after we installed the servers.

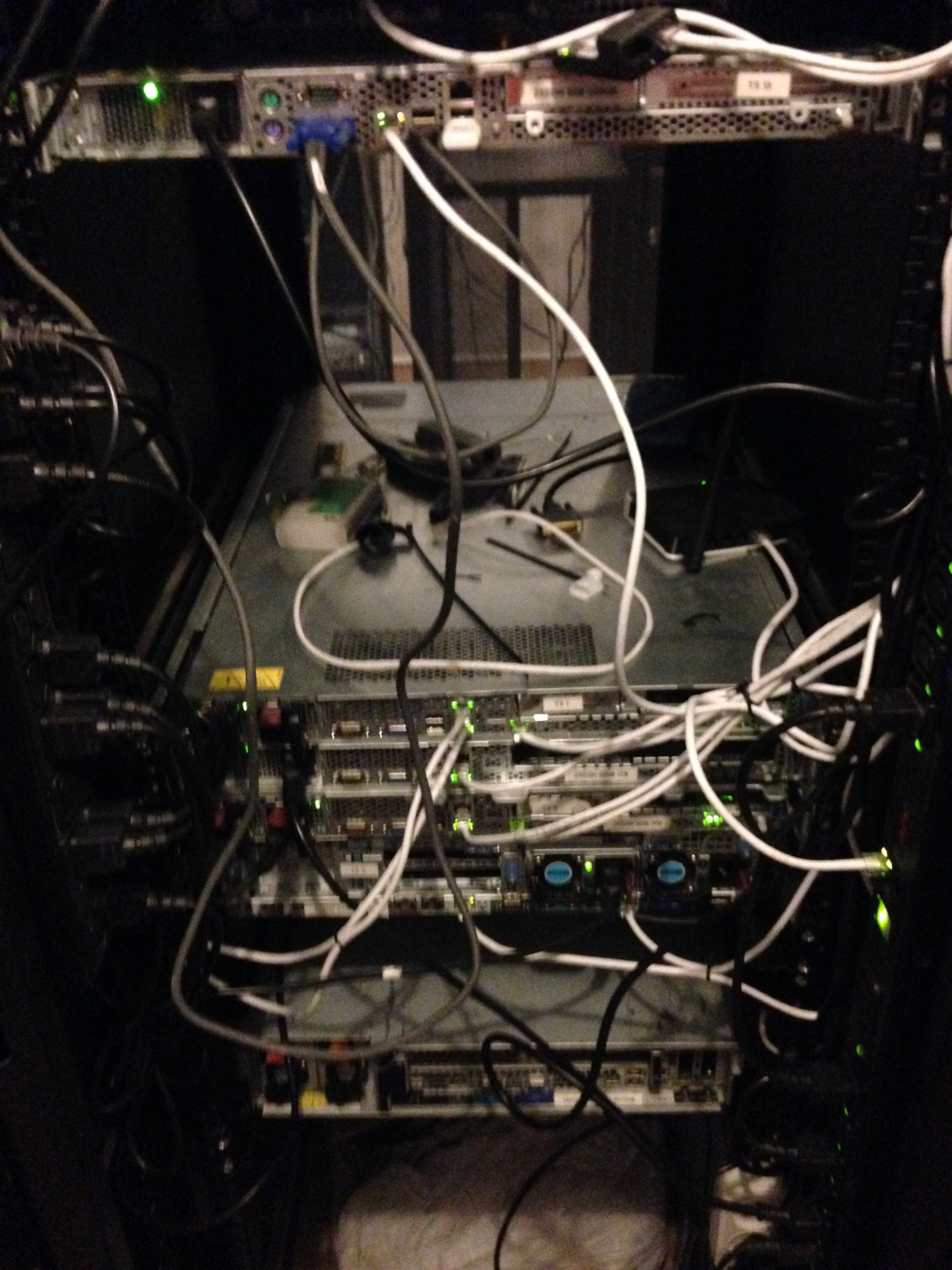

And this is roughly how it looked once all the delivered equipment was installed and the cabling was laid.

As the company grew, we ran into more and more difficulties. There were problems with the equipment (no SAS-SATA cables readily at hand), with connecting it, with various small details, and with DDoS attacks against us (some were large enough to take down the entire data center). But one of the main problems, in our opinion, was the instability of the data center where we rented our cabinet. We were not very stable ourselves, but when the data center you are hosted in goes down, that is really bad.

The data center was located in an old, semi-abandoned industrial building, and it performed accordingly. Even though its owner made every effort to keep it running reliably (and that is true: Alexander really did a great job and helped us in many ways at the start), either the power or the Internet connection kept going down. In hindsight, that is probably what saved us. It was the reason that pushed us, without any spare funds, to start looking for premises for our own data center and to organize the move in under 14 days (including preparing the room, pulling fiber, and everything else). But that is a story for the next part, which covers the rushed move, the search for premises, and the construction of the mini data center where we now live.

How we built our mini data center. Part 2 - Hermoson

P.S. The next part has a lot of interesting material: the difficulties of the move, the renovation, pulling fiber through residential areas, installing automation, connecting the UPS and the diesel generator, and more.

Source: https://habr.com/ru/post/312310/
