The Internet's problem is low bandwidth

The Internet is becoming a bigger part of everyone's life, and we at Telfin see it every day. Is there a limit? Read our translation of the article “The bandwidth bottleneck that is throttling the Internet”.

Specialists around the world are working day and night to keep data transmission channels running and to expand them, so that the information revolution does not stall.

On June 19, a new episode of "Game of Thrones" was released. Hundreds of thousands of viewers in the United States started streaming it at once, and HBO's streaming service could not cope with the load. About 15 thousand viewers could not access the episode for an hour.


HBO apologized for the unforeseen problems and promised that this would not happen again. However, the incident is only one of the most visible signs of an impending problem. Internet traffic is growing by 22% every year, and this demand threatens to exceed the capabilities of Internet providers.

Although modems fell out of use long ago, we should not forget that the Internet was built on top of the telephone network. Copper wires have given way to fiber-optic cables that interconnect huge data centers and carry trillions of bits per second, yet the level of service in local network segments and for individual users leaves much to be desired.

This problem threatens to slow down the development of information technology. Users already feel the effects of network congestion: mobile calls drop under heavy load, wireless connections slow down in crowded places (such as exhibition centers), and video streaming quality suffers when many viewers tune in at once. The promised high-tech future, with mobile access to high-resolution video, self-driving cars, remote surgery, telepresence conferences and interactive 3D entertainment in virtual reality, seems just around the corner, but the network infrastructure is not ready for it.

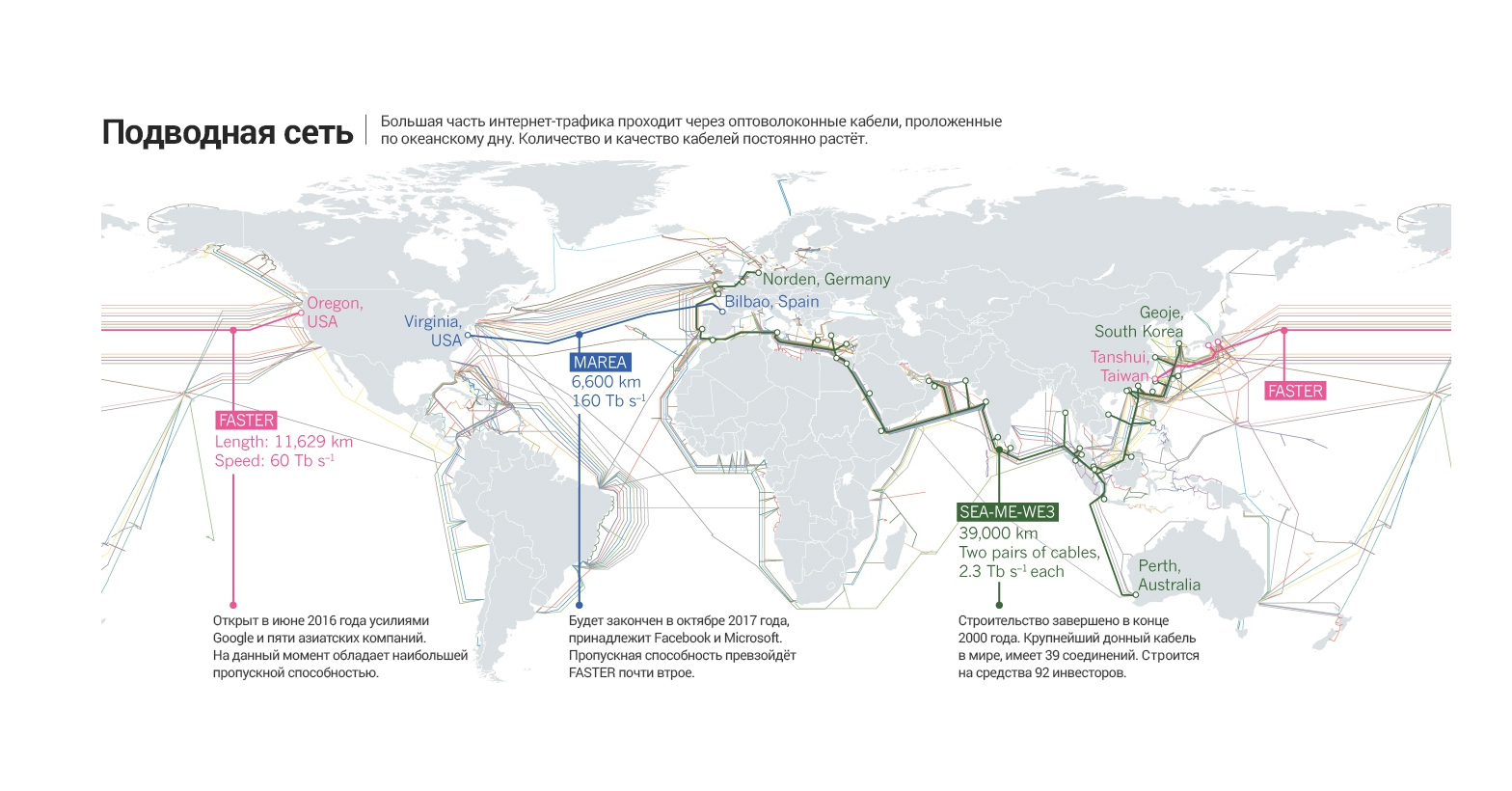

Service providers are now investing billions of dollars in rebuilding the Internet infrastructure and eliminating congestion. The significance of this work for information technology is comparable to that of increasing processor performance. For example, Google, together with five Asian telecommunications companies, organized the laying of a $300 million fiber-optic trunk line 11,600 kilometers long that runs along the bottom of the Pacific Ocean and connects the state of Oregon with Japan and Taiwan. Erik Kreifeldt, a submarine cable specialist at the Washington-based company TeleGeography, calls this project "a necessary investment for the development of the industry."

Laying new high-speed trunk lines is only one of the measures being taken to optimize the Internet infrastructure. Research is under way in other areas as well, from speeding up wireless networks to increasing the capacity of the servers that transmit data.

Fifth generation

One part of the problem of expanding the network infrastructure is fairly simple to solve. Europe and North America still have a good deal of unused fiber-optic lines, laid by optimistic investors before the dot-com bubble burst in 2000. With their help, providers will be able to cope, for a while, with excess demand in wired networks.

However, this does not solve the problems caused by the boom in wireless devices. Mobile traffic is handled mainly by cellular base stations, and its volume grows by an average of 53% every year, even though station coverage is far from optimal and each tower serves thousands of users.
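To get a feel for the two growth rates quoted so far (22% per year for all traffic, 53% per year for mobile traffic), here is a minimal sketch that converts an annual growth rate into a doubling time. It assumes the rates stay constant from year to year, which the article does not claim; the function name is only illustrative.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years for traffic to double, assuming a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

# Growth rates quoted in the article; holding them constant is an assumption.
for label, rate in [("all Internet traffic", 0.22), ("mobile traffic", 0.53)]:
    print(f"{label}: doubles roughly every {doubling_time_years(rate):.1f} years")
```

Under that assumption, total traffic doubles about every three and a half years, and mobile traffic in a little over a year and a half.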

First-generation cellular networks of the 1980s, which used only analog signals, are a thing of the past. Second-generation (2G) networks appeared in the early 1990s and introduced digital services such as SMS. Their replacement with more advanced technologies began only recently, and they still make up 75% of all networks in Africa and the Middle East. Third-generation (3G) networks, which support mobile Internet, have existed since the late 1990s and are now supported by most mobile devices in Western Europe.

Fourth-generation (4G) networks are currently the most advanced: they give smartphone owners mobile Internet at speeds of up to 100 Mbit per second. The technology became available in the late 2000s, and its popularity is growing rapidly. However, meeting the demand for mobile Internet expected in the 2020s will take a fifth generation of wireless networks (5G). These networks will have to provide connection speeds hundreds of times higher than 4G, on the order of tens of Gbit per second.

Rahim Tafazolli, head of the Institute of Communication Systems at the University of Surrey in Guildford (UK), believes that the 5G signal will also have to reach far more widely than is possible now and cover up to a million devices per square kilometer. This is necessary for the “Internet of Things”, a network that will include all kinds of devices, from household appliances to power management systems, medical devices and autonomous cars.

The Third Generation Partnership Project (3GPP), an association of independent telecommunication companies, coordinated the transition to 3G and 4G networks and is now working on the transition to 5G. Tafazolli takes part in this work and is testing multiple-input multiple-output (MIMO) technology, which should allow several data streams to be transmitted simultaneously on a single radio frequency. The transmitter and the receiver are each equipped with several antennas, so the signal is sent and received along several different paths. After reception, the data streams are separated again using a complex algorithm.
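The idea of separating streams that share one frequency can be illustrated with a toy model. The sketch below assumes a noise-free 2x2 system with real-valued, made-up channel gains and uses simple matrix inversion (often called zero-forcing) as the separation step. It is not the algorithm used in the tests described above, which the article does not specify.

```python
def mimo_receive(H, x):
    """Each receive antenna sees a weighted mix of both transmitted streams."""
    return [H[0][0] * x[0] + H[0][1] * x[1],
            H[1][0] * x[0] + H[1][1] * x[1]]

def zero_forcing_2x2(H, y):
    """Recover the two streams by inverting the 2x2 channel matrix."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    if abs(det) < 1e-12:
        raise ValueError("channel matrix is singular; streams cannot be separated")
    return [(H[1][1] * y[0] - H[0][1] * y[1]) / det,
            (-H[1][0] * y[0] + H[0][0] * y[1]) / det]

# Hypothetical channel gains between 2 transmit and 2 receive antennas.
H = [[0.9, 0.3],
     [0.2, 0.8]]
sent = [1.0, -1.0]                 # one symbol from each data stream
received = mimo_receive(H, sent)   # both antennas hear a mixture
recovered = zero_forcing_2x2(H, received)
print(recovered)
```

The separation only works when the paths between antennas differ enough for the matrix to be invertible, which is why the signal has to travel along several distinct paths.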

MIMO is already used in 4G and Wi-Fi networks. However, the small size of a smartphone leaves room for only four antennas, and base stations use the same number. This limitation has to be overcome as 5G is deployed.

MIMO installations with a large number of antennas have already been tested. At the Mobile World Congress, held in Barcelona (Spain) in February of this year, Ericsson presented a multi-user system with a 512-element antenna. The data rate between a fixed and a mobile terminal reached 25 Gbit per second. The transmission used a frequency of 15 GHz, which is part of the high-frequency range for 5G networks. The Japanese mobile operator NTT DoCoMo is testing the system together with Ericsson, and Korea Telecom is planning a large-scale demonstration of 5G capabilities during the 2018 Winter Olympics.

Another approach involves improving adaptability: instead of using a fixed frequency range, the mobile device operates on the principle of "cognitive radio", in which software switches the wireless connection to whichever radio channel is currently free. This method not only routes data automatically over the fastest available channel but also makes the system more stable. In addition, an upgrade requires replacing software rather than hardware, which is much simpler.
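The channel-switching idea can be sketched in a few lines. This is only an illustration: the function name, the channel frequencies and the rule "stay if free, otherwise take the lowest free frequency" are all assumptions, and real cognitive radio also has to sense the spectrum, which is not modeled here.

```python
from typing import Dict, Optional

def pick_channel(busy: Dict[float, bool], current: float) -> Optional[float]:
    """Stay on the current channel if it is free; otherwise hop to a free one.

    `busy` maps a channel frequency (MHz) to True when the channel is occupied.
    Returns None when every channel is occupied.
    """
    if not busy.get(current, True):
        return current
    free = [freq for freq, is_busy in busy.items() if not is_busy]
    return min(free) if free else None

# Hypothetical spectrum snapshot: the 700 MHz channel is occupied.
snapshot = {700.0: True, 1800.0: False, 2600.0: False}
print(pick_channel(snapshot, current=700.0))
```

Because the decision lives entirely in software, changing the selection rule means shipping new code rather than new radio hardware.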

Another challenge on the road to 5G is reserving radio frequency bands that provide the required coverage and bandwidth. Most of the available frequencies are already allocated under existing international agreements and are used for television broadcasting, navigation, radio telescopes and so on. The issue will be considered at the World Radiocommunication Conference in 2019. Meanwhile, the US Federal Communications Commission (FCC) is selling off frequencies below 1 GHz to telecommunications companies. These frequencies were originally reserved for television broadcasting because they penetrate obstacles better than higher ones, but they fell out of use after the switch to digital TV. According to Tafazolli, they are best suited to sparsely populated areas, where they will bring 5G to homes and roads with a small number of base stations.


In addition, bands from 1 to 6 GHz can be used as 2G and 3G networks are replaced by 5G. For densely populated urban areas, however, frequencies above 6 GHz are considered optimal; they are little used at the moment because their signal range is short. 5G base stations are expected to be installed at intervals of 200 meters, whereas 4G stations are usually placed 1 km apart. The Federal Communications Commission authorized the use of these frequencies for high-speed communications on July 14, and the British regulator Ofcom, which performs the same functions as the FCC, is expected to make a similar decision soon.
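The difference between 200-meter and 1-kilometer spacing is larger than it looks, because station count scales with area. The sketch below assumes stations sit on a regular square grid, which is a simplification introduced here and not something the article states.

```python
def stations_per_km2(spacing_km: float) -> float:
    """Stations per square kilometer on a square grid with the given spacing."""
    return 1.0 / spacing_km ** 2

density_4g = stations_per_km2(1.0)   # typical 4G spacing quoted above
density_5g = stations_per_km2(0.2)   # planned high-frequency 5G spacing
print(f"4G: {density_4g:.0f} per km2, 5G: {density_5g:.0f} per km2, "
      f"ratio: {density_5g / density_4g:.0f}x")
```

On such a grid, cutting the spacing by a factor of five means about 25 times as many stations for the same area.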

High frequencies are especially interesting to telecommunications companies because they open up new uses for 5G technology. Verizon recently tested 28 GHz transmission at 1 Gbit per second in New Jersey, Massachusetts and Texas. The experiments used 5G equipment from Ericsson, Cisco, Intel, Nokia and Samsung. Verizon plans to offer fixed wireless connections as an alternative to wired ones, which should noticeably reduce the cost of setting up a connection.

Wide channels

Neal Bergano, technical director of TE SubCom, a submarine cable manufacturer in Eatontown, New Jersey, points out that although mobile communications use no wires and the users are mobile, the network itself is not. When a user connects to the network from a phone, the base station converts the radio signal into an optical signal, which is then carried over a fixed fiber-optic cable.

For a quarter of a century, the global telecommunications network has been built on fiber-optic links. Their bandwidth is unmatched: a single fiber as thin as a hair can carry 10 Tbit of data (the capacity of 25 dual-layer Blu-ray discs) every second, even when the transmitter and the receiver stand on opposite shores of the Atlantic Ocean. For comparison, the very first transatlantic fiber-optic cable, laid in 1988, had 30 thousand times less capacity. Modern technology makes it possible to transmit up to 100 separate signals simultaneously, each at its own wavelength. However, even optical fiber has its limits: as a signal travels through thousands of kilometers of glass, it accumulates noise and distortion. The upper limit for a single signal is considered to be 100 Gbit per second.
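The figures in this paragraph can be cross-checked with a little arithmetic. The sketch below uses the standard 50 GB capacity of a dual-layer Blu-ray disc, which is an outside fact not given in the article, and decimal units throughout.

```python
BLU_RAY_DUAL_LAYER_GB = 50   # gigabytes per disc (standard value, not from the article)
BITS_PER_BYTE = 8

# 25 discs per second expressed in terabits per second.
fiber_tbit_per_s = 25 * BLU_RAY_DUAL_LAYER_GB * BITS_PER_BYTE / 1000

# 100 wavelengths, each limited to 100 Gbit per second.
wdm_tbit_per_s = 100 * 100 / 1000

# A cable with 30 thousand times less capacity, in megabits per second.
first_cable_mbit_per_s = fiber_tbit_per_s * 1_000_000 / 30_000

print(fiber_tbit_per_s, wdm_tbit_per_s, round(first_cable_mbit_per_s))
```

Both routes arrive at the same 10 Tbit per second, and the 1988 cable works out to a few hundred Mbit per second.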

A standard optical fiber has a core of ultrapure glass 9 micrometers thick. A new type of fiber with a wider core has been developed; it adds less noise to the signal but is much more sensitive to stretching and bending. “Wide” fiber is therefore best suited to long, straight trunk lines, such as submarine cables, which lie in a stable environment and are not exposed to external forces.

Last year, Infinera ran an experiment in Sunnyvale (California) in which a wide-core optical fiber carried a signal at 150 Gbit per second. The signal traveled 7,400 kilometers, which is enough for a transatlantic cable; a 200 Gbit per second signal was also tested, but it covered a shorter distance.

The fastest data transmission system at the moment is the FASTER submarine cable, which connects Japan and the state of Oregon. It consists of 6 pairs of "wide" fibers, each of which carries 100 signals at 100 Gbit per second. This record will soon be broken, however: according to a statement made by Microsoft and Facebook in May of this year, the MAREA trunk line is to be completed in October 2017. It will stretch for 6,400 kilometers and connect the companies' data centers in Spain and in the state of Virginia. Its total data rate will be 160 Tbit per second.
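The total capacity of FASTER is not stated directly, but it follows from the numbers above. A minimal sketch, assuming each fiber pair carries the full set of wavelengths and that capacity simply adds up across pairs (the function name is illustrative):

```python
GBIT_PER_TBIT = 1000

def cable_capacity_tbit(fiber_pairs: int, wavelengths: int,
                        gbit_per_wavelength: float) -> float:
    """Total capacity in Tbit per second, summed over all fiber pairs."""
    return fiber_pairs * wavelengths * gbit_per_wavelength / GBIT_PER_TBIT

faster_tbit = cable_capacity_tbit(fiber_pairs=6, wavelengths=100,
                                  gbit_per_wavelength=100)
marea_tbit = 160  # planned figure quoted in the article
print(f"FASTER: {faster_tbit:.0f} Tbit/s, MAREA / FASTER: {marea_tbit / faster_tbit:.2f}")
```

By this estimate FASTER carries 60 Tbit per second, so MAREA's planned 160 Tbit per second would be more than two and a half times as much.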

There is another way to protect the signal from noise, demonstrated at the University of California, San Diego. Fiber-optic systems typically use multiple lasers to generate signals at different wavelengths. The researchers proposed using a single source instead, built with "frequency comb" technology, which generates a series of signals at different wavelengths separated by equal intervals. This approach could double the throughput of fiber-optic systems.
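The defining property of a frequency comb, equally spaced lines, is easy to express in code. The sketch below describes an idealized comb; the starting frequency and spacing are hypothetical values chosen for illustration and are not taken from the San Diego experiment.

```python
from typing import List

def comb_lines_thz(first_line_thz: float, spacing_ghz: float, count: int) -> List[float]:
    """Frequencies of an idealized comb: f_n = f_0 + n * spacing."""
    return [first_line_thz + n * spacing_ghz / 1000.0 for n in range(count)]

# Hypothetical comb: 5 lines starting at 193.1 THz, 50 GHz apart.
lines = comb_lines_thz(first_line_thz=193.1, spacing_ghz=50.0, count=5)
gaps = [b - a for a, b in zip(lines, lines[1:])]
print([round(f, 2) for f in lines])
```

Every carrier is derived from the same source, so the gaps between neighbouring lines stay identical by construction.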

Flight time

Bandwidth is extremely important, but so is the response time of the system. Human speech is so sensitive to interruption that an unexpected pause of a quarter of a second can disrupt a phone or video conversation. Video also needs a constant frame rate. The US FCC has allowed the use of special codes that raise the priority of packets carrying video frames and audio fragments, so that video and audio traffic is delivered quickly and evenly.
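One simple way to picture packet prioritization is a queue that always sends real-time packets first. The sketch below is an illustrative strict-priority queue; the class and its two priority levels are assumptions made here, and the article does not describe how the "special codes" are actually handled by network equipment.

```python
import heapq
from itertools import count

class PriorityLink:
    """Outgoing queue that sends real-time packets before everything else."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker keeps arrival order within a class

    def enqueue(self, payload: str, realtime: bool = False) -> None:
        priority = 0 if realtime else 1  # lower number is sent first
        heapq.heappush(self._heap, (priority, next(self._arrival), payload))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

link = PriorityLink()
link.enqueue("email chunk")
link.enqueue("video frame", realtime=True)
link.enqueue("web page")
link.enqueue("audio fragment", realtime=True)
sent_order = [link.dequeue() for _ in range(4)]
print(sent_order)
```

Video and audio packets jump ahead of bulk traffic but keep their own relative order, which is what keeps playback even.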

The time a signal takes to travel from one terminal to another depends on the distance. Although a signal in an optical cable moves at 200,000 km/s (two-thirds of the speed of light in air), the delay between entering a command in, say, London and receiving a response from a data center in San Francisco is still significant: 86 milliseconds. This limits what cloud computing can do. Many new services, such as remote-controlled robots and remote surgery, are also extremely sensitive to delay, and so are online games.
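The 86-millisecond figure can be reproduced from the signal speed alone. The sketch below assumes a one-way path of about 8,600 km, roughly the great-circle distance between London and San Francisco; that distance is an assumption added here, and real cable routes are longer and add equipment delays.

```python
FIBER_SPEED_KM_PER_S = 200_000  # signal speed in optical fiber, from the article

def round_trip_ms(one_way_km: float) -> float:
    """Time for a request to reach the server and the reply to return, in ms."""
    return 2 * one_way_km / FIBER_SPEED_KM_PER_S * 1000

LONDON_TO_SAN_FRANCISCO_KM = 8_600  # approximate straight-line distance (assumption)
print(f"{round_trip_ms(LONDON_TO_SAN_FRANCISCO_KM):.0f} ms")
```

No amount of extra bandwidth reduces this delay; only a shorter path does, which is why the location of the data center matters.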

New mobile applications require both fast response and high bandwidth. For example, to drive safely, an autonomous car must constantly receive information about its surroundings in real time. “Normal” cars will also need a low-latency link for voice control systems.

Three-dimensional virtual reality systems are another example. For best performance they need a data rate of 1 Gbit per second, which is 20 times faster than the usual Blu-ray read speed. This is because the image has to be redrawn at least 90 times per second to keep up with the movements of the user's head; otherwise the user may experience discomfort. David Whittinghill's virtual reality lab at Purdue University in West Lafayette, Indiana, uses a 10 Gbit per second fiber-optic line.
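The relationship between the 1 Gbit per second link and the 90 redraws per second can be made concrete. This is a back-of-the-envelope sketch that assumes the whole link is spent on image data and every frame gets an equal share, neither of which the article states.

```python
LINK_MBIT_PER_S = 1000   # 1 Gbit per second, from the article
FRAMES_PER_S = 90        # minimum redraw rate, from the article

mbit_per_frame = LINK_MBIT_PER_S / FRAMES_PER_S       # data budget for one frame
frame_interval_ms = 1000 / FRAMES_PER_S                # time available per frame
implied_blu_ray_mbit_per_s = LINK_MBIT_PER_S / 20      # "20 times faster" claim

print(f"{mbit_per_frame:.1f} Mbit per frame, one frame every "
      f"{frame_interval_ms:.1f} ms, Blu-ray about {implied_blu_ray_mbit_per_s:.0f} Mbit/s")
```

Each frame therefore has about 11 ms and about 11 Mbit to work with, so both delay and bandwidth have to be met at the same time.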

Large companies such as Google, Microsoft, Facebook and Amazon store copies of their data in data centers distributed around the world, and each request is routed to the nearest one. According to Geoff Bennett, technical director of Infinera, this lets viewers scroll through videos as if they were stored on their own computers. The spread of data centers also drives up demand for fiber-optic backbone capacity, because the traffic generated by synchronizing copies of data between data centers now exceeds the total traffic of private Internet users. The MAREA cable is being built for exactly this kind of synchronization.

Most data centers are currently located in the regions with the highest concentration of users and trunk lines: North America, Europe and Southeast Asia. According to Kreifeldt, users in many parts of the world still have no data center nearby and have to put up with long response times. South America has few data centers of its own, and most content is downloaded from Miami (Florida); packets traveling from Brazil to Chile also pass through Miami, which increases response time. The countries of the Middle East have the same problem: 85% of their international traffic passes through European data centers. The situation is improving, but rather slowly. Last year, the first Amazon Web Services cloud data center was launched in Mumbai (India); the center in Sao Paulo (Brazil) has been operating since 2011.

Throughput matters not only in backbones but also in the links between processors inside each data center server. Faster data exchange inside the data center speeds up the response of the whole system. However, processor clock frequencies stopped growing several years ago because of heat dissipation problems and remain at a few gigahertz. The most practical way to speed up processing is to divide operations among several cores joined by high-speed connections. Optical connections would be preferable here, since light travels faster than electrons, but integrating silicon chips with optics is difficult.

Research in silicon photonics has been going on for a long time, but no efficient way of generating light in a silicon chip has yet been found. Good semiconductor light sources exist, such as indium phosphide, but they are almost impossible to grow directly on a silicon crystal because their atomic lattice structures differ. The integration of indium phosphide optical components with electronic ones therefore remains extremely limited.

The American Institute for Manufacturing Integrated Photonics in Rochester (New York) opened last year. It has already received $110 million from federal agencies and $502 million from private investors and companies. The institute will develop integrated photonics for computing and communications equipment.

In addition, earlier this year a team of engineers from Canada demonstrated an integrated photonic circuit with 21 active components that can perform three different logic functions. This is an important step toward photonic microprocessors: the circuit is comparable in complexity to the first programmable electronic circuits, with which the development of microcomputers began. Jianping Yao, an engineer at the University of Ottawa who took part in the development, said the circuit is "simple compared to electronic circuits, but much more complicated than other integrated photonic circuits at the moment."

Further development of this circuit could open up broad possibilities. For example, it could convert the signal that a 5G tower receives from a smartphone into an analog optical signal, which could then be sent over fiber to a data center and digitized there.

Like many of the other challenges involved in optimizing the Internet, building faster processors is a difficult task. That does not stop the professionals who are looking for solutions. Many of them, Bergano included, have worked in the industry for more than a decade, yet they remain optimistic and see room for many improvements.

Specialists from all over the world are working day and night to maintain data transmission channels in working condition and expand them in order to prevent the information revolution from fading.

June 19, a new series of "Game of Thrones". Hundreds of thousands of viewers from the United States immediately launched a show - and the stream service of the HBO channel could not cope with the load . About 15 thousand spectators could not access the series for an hour.

')

The administration of the channel apologized for unforeseen problems and promised that this would not happen again. However, the fact is that this case is only one of the most noticeable manifestations of an impending problem. The volume of traffic on the Internet is growing by 22% every year, and such demand threatens to exceed the capabilities of Internet providers.

Although we have not been using modems for a long time, we should not forget that the Internet was created on the basis of the telephone network. And although instead of copper wires, we see fiber optic cables that interconnect huge data centers and ensure the transmission of trillions of bits per second, the level of service on the scale of local network segments and individual users leaves much to be desired.

This problem threatens to slow down the development of information technology. Already, users feel the impact of “congestion” in the network: mobile calls are interrupted with a significant load, wireless connections are slowing down in crowded places (like exhibition centers), the quality of video broadcasting suffers with a significant influx of viewers. It would seem that the promised high-tech future - with mobile access to high-resolution video, car robots , remote operations, telepresence conferences, interactive 3D entertainment in virtual reality - is about to come, but the network infrastructure is not ready for it .

Now, service providers are investing billions of dollars in rebuilding the Internet infrastructure and eliminating congestion. The significance of this process for the development of information technology can be compared with an increase in the processing power of processors. For example, Google, in conjunction with five Asian telecommunications companies, organized the laying of a fiber optic trunk line costing $ 300 million and a length of 11,600 kilometers across the bottom of the Pacific Ocean, connecting the state of Oregon with Japan and Taiwan . Eric Kreifeldt (Erik Kreifeldt), a specialist in laying underwater cables from the Washington-based company TeleGeography, calls this project "a necessary investment necessary for the development of the industry."

Laying new high-speed highways is only part of a set of measures taken to optimize the Internet infrastructure. Other surveys are being conducted in various fields: from accelerating wireless networks to increasing the power of transmitting servers.

Fifth generation

One of the tasks associated with the problem of expanding the network infrastructure is quite simple. In Europe and North America, quite a lot of unused fiber-optic highways have been preserved by optimistic investors before the dot-com bubble burst in 2000. With their help, providers will be able to temporarily solve the problem of excessive demand for traffic in wired networks.

However, this does not solve the problems that arise from the boom of wireless devices . Mobile traffic is mainly handled by cellular base stations, and its volume grows on average by 53% every year - despite the fact that the station coverage is not optimal and each tower serves thousands of users.

Cell networks of the first generation of the 1980s, which used only the analog signal, are in the past. Second generation networks appeared in the early 1990s and were distinguished by the availability of digital services (for example, SMS). Only recently began replacing 2G networks with more advanced technologies. They still make up 75% of all networks in Africa and the Middle East. Third-generation networks support the use of mobile Internet and have existed since the late 1990s; Now they are supported by the majority of mobile devices in Western Europe.

The fourth-generation networks are still the most advanced, they allow smartphone owners to use mobile Internet at speeds up to 100 Mbps. This technology became available in the late 2000s and its popularity is growing rapidly. However, in order to satisfy the level of demand for mobile Internet, which is expected by 2020s, it will take the fifth generation of wireless networks (5G). And such networks will have to provide connection speeds exceeding 4G hundreds of times - tens of Gbit per second.

The head of the Institute of Communication Systems at the University of Surrey in Guilford (UK), Rahim Tafazolli (Rahim Tafazolli) believes that the 5G signal will have to spread much wider than is possible now, and cover up to a million devices per square kilometer. It will be necessary to create the “Internet of Things” - the network, which will include all kinds of devices, from household appliances to power management systems, medical devices and autonomous cars.

The association of independent telecommunication companies of the Third Generation Partnership Project has coordinated the transition to 3G and 4G networks, and is now working on the transition to 5G. Tafazolli participates in this work and carries out tests of the technology of multiple input / output (Multiply Input Multiply Output, MIMO). It should allow the simultaneous transmission of multiple data streams on a single radio frequency. In this case, the transmitter and receiver are equipped with several antennas, and the signal is transmitted and received in various ways. After receiving the data streams are again separated using a complex algorithm.

MIMO technology is already used in 4G and Wi-Fi networks. However, the small size of the smartphone allows you to install only four antennas, and the same is used at base stations. This problem needs to be solved in the 5G deployment process.

MIMO installations with a large number of antennas have already been tested. Ericsson has introduced a multi-user system with an antenna of 512 elements at the Mobile World Congress exhibition, which took place in February of this year in Barcelona (Spain). The data transfer rate between the fixed and mobile terminal has reached 25 Gbit per second. The transmission was carried out at a frequency of 15 GHz, which is included in the high frequency range for 5G networks. The Japanese mobile operator NTT DoCoMo is testing the system with Ericsson, and Korea Telecom is planning a large-scale demonstration of the 5G capabilities during the 2018 Winter Olympics.

Another approach involves improving adaptability: instead of using a fixed frequency range, the mobile device should operate on the principle of "cognitive radio." When this occurs, the software transition of the wireless connection to the radio channel that is currently open. Such a method will not only allow the automatic transfer of data along the fastest possible route, but will also increase the stability of the system. In addition, it will require the replacement of not software, but software, which is much simpler.

Another challenge on the road to deploying 5G is to reserve a radio frequency band to achieve the required coverage and bandwidth. Most of the available frequencies are already reserved by existing international agreements and are used for television broadcasting, navigation, radio telescopes, etc. This issue will be considered at the World Radiocommunication Conference in 2019. The Federal Communications Commission is currently selling off frequencies below 1 GHz to telecommunications companies. These frequencies were previously reserved for television broadcasting, as they better penetrate obstacles than the higher ones, but they are no longer used after switching to digital TV. According to Tafazolli, the use of these frequencies is optimal for sparsely populated areas and will provide homes and roads with access to 5G using a small number of base stations.

(click to enlarge)

In addition, it is possible to use bands from 1–6 GHz as 2G and 3G networks are replaced by 5G. However, optimum for densely populated urban areas are considered frequencies above 6 GHz; they are little used at the moment, since they give a small range of the signal. It is planned to install base stations 5G with an interval of 200 meters. For comparison, 4G stations are usually installed at intervals of 1 km; however, the Federal Communications Commission has already authorized the use of these frequencies for high-speed communications since July 14. The British Ofcom Commission, which performs the same functions as the FCC, is about to make a similar decision soon.

The use of high frequencies is especially interesting for companies engaged in the telecommunications industry, as they open up new possibilities for the use of 5G technology. Verizon recently tested a signal transmission at 28 GHz at 1 Gbit per second in New Jersey, Massachusetts and Texas. The experiments used equipment 5G companies Ericsson, Cisco, Intel, Nokia and Samsung. Verizon plans to offer fixed wireless connections as an alternative to wired, with the cost of setting up a connection to be noticeably reduced.

Wide channels

Neal Bergano, technical director of TE SubCom - a submarine cable manufacturer in Eatontown, New Jersey - says that although mobile communications use no wires and its users are mobile, the network itself is not mobile . When a user connects to the network using a telephone, the base station turns the radio signal into an optical signal, which is then transmitted through a fixed fiber optic cable.

For a quarter of a century, the global telecommunications network has been built on fiber optic channels. Its bandwidth is incomparable: a single cable with a hair thickness capable of transmitting 10 Tbdata - a capacity of 25 dual-layer Blu-Ray - every second, while the transmitter and receiver can stand on different shores of the Atlantic Ocean. For comparison, the very first transatlantic cable, conducted in 1988, had a capacity of 30 thousand times less. Modern technologies allow transmitting up to 100 separate signals simultaneously, each of which has its own wavelength. However, even the "optics" have limits: as the signal travels thousands of kilometers of glass, noise and distortion accumulates in it. The upper limit for a single signal is considered to be 100 Gbps per second.

A standard optical fiber has a core of ultrapure glass with a thickness of 9 micrometers. A new fiber has been developed with a wider core, which less “pollutes” the signal with noise, although at the same time it is much more sensitive to stretching and bending. Thus, the “wide” fiber is optimal for long and straight highways - for example, submarine cables that are in a stable environment and are not exposed to external influences.

Last year, Infinera conducted an experiment in Sunnyvale (California): a broadband core fiber optic missed the signal at a speed of 150 Gbit per second. The signal traveled 7,400 kilometers, which is enough for transatlantic cable; also during the test, a signal was used at a speed of 200 Gbit per second, which covered a shorter distance.

The fastest data transmission system at the moment is the FASTER submarine cable that connects Japan and the state of Oregon. It consists of 6 pairs of "wide" fibers, through each of which passes 100 signals of 100 Gbit per second. However, this record will soon be broken: according to a statement made by Microsoft and Facebook in May of this year, the MAREA trunk line will be built in October 2017. It will stretch for 6,400 kilometers and connect data centers of these companies located in Spain and in the state of Virginia. The total data transfer rate is 160 Tbit per second.

There is another way to protect the signal from noise. It was demonstrated at the University of California (San Diego). Fiber-optic systems typically use multiple laser emitters to generate signals with different wavelengths. Instead, they proposed to use a single emitter , created using the "frequency comb" technology. It generates a series of signals with different wavelengths separated from each other by the same interval. Such an approach could double the throughput of fiber-optic systems.

Flight time

The channel width is extremely important, but the speed of the system is also important. Human speech is so sensitive to interruption that an unexpected pause of a quarter of a second can disrupt a conversation on the phone or video. For video, a constant frame rate is also important. The US FCC allowed the use of special codes to increase the priority of transmission of packets that carry video communication frames and audio fragments so that traffic associated with video and audio communication is transmitted quickly and evenly.

The time a signal takes to travel from one terminal to another depends on the distance. Although the signal speed in an optical cable is 200,000 km/s, two-thirds of the speed of light in air, the delay between entering a command in, for example, London and receiving a response from a data center in San Francisco remains significant: in this case, 86 milliseconds. This limits cloud computing capabilities. Many other new services, such as remote-controlled robots and remote surgery, are extremely sensitive to delays, and so are games.
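The 86-millisecond figure can be reproduced from the signal speed given above. The sketch assumes a one-way distance of about 8,600 km between London and San Francisco (roughly the great-circle distance; real cable routes are longer) and counts the trip in both directions.

```python
FIBER_SPEED_KM_S = 200_000  # signal speed in fiber, from the text


def round_trip_ms(one_way_km: float, speed_km_s: float = FIBER_SPEED_KM_S) -> float:
    """Propagation delay for a request and its response, in milliseconds."""
    return 2 * one_way_km * 1000 / speed_km_s


# Assumption: about 8,600 km one way between London and San Francisco.
delay = round_trip_ms(8_600)
print(f"{delay:.0f} ms")
```

This is a lower bound set by physics alone. Routing, queuing, and server processing only add to it.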

New mobile applications require both quick system response and substantial bandwidth. For example, to drive safely, an autonomous car must constantly receive information about its surroundings in real time. “Normal” cars will also need a fast-response link for voice control systems.

Three-dimensional virtual reality systems should not be forgotten either. For optimal performance, such systems need a data transfer rate of 1 Gbit per second, which is 20 times faster than the usual Blu-Ray read speed. This is necessary because the image has to be redrawn at least 90 times per second to keep up with the turns of the user's head; if this does not happen, the user may experience discomfort. David Whittinghill's virtual reality lab at Purdue University in West Lafayette, Indiana, uses a 10 Gbit per second fiber-optic line.
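A quick calculation shows what these numbers mean per frame. It is a rough sketch that assumes the whole 1 Gbit per second link is spent on image frames, which the text does not state.

```python
LINK_BIT_S = 1e9    # 1 Gbit per second, from the text
FRAMES_PER_S = 90   # minimum redraw rate, from the text

# Data budget for a single frame if the link carries nothing but frames.
bits_per_frame = LINK_BIT_S / FRAMES_PER_S
megabytes_per_frame = bits_per_frame / 8e6

# "20 times faster than Blu-Ray" implies this Blu-Ray read speed.
implied_bluray_mbit_s = LINK_BIT_S / 20 / 1e6

print(f"{megabytes_per_frame:.2f} MB per frame")
print(f"implied Blu-Ray speed: {implied_bluray_mbit_s:.0f} Mbit/s")
```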

Large companies such as Google, Microsoft, Facebook and Amazon store copies of their data in data centers distributed throughout the world. Each request for data is sent to the nearest center. According to Geoff Bennett, technical director of Infinera, this approach lets viewers scroll through videos as if they were stored on their own computers. The wide distribution of data centers drives up the throughput required of fiber-optic backbones, because the traffic generated when copies of data are synchronized between data centers now exceeds the total traffic of private Internet users. The MAREA cable is being built for this kind of synchronization.

Currently, most data centers are located in the areas with the highest concentration of users and backbone links: North America, Europe, and Southeast Asia. According to Kreyfeldt, users in many parts of the world still have no data center nearby and are forced to put up with long response times. South America has few centers of its own, and most content is downloaded from Miami (Florida); packets traveling from Brazil to Chile also pass through Miami, which increases response time. The Middle East has the same problem: 85% of its international traffic passes through European data centers. The situation is improving, but slowly. Last year, the first Amazon Web Services cloud data center was launched in Mumbai (India); the center in Sao Paulo (Brazil) has been operating since 2011.
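The cost of the Miami detour can be estimated with a great-circle calculation. This is only a sketch: the choice of Sao Paulo and Santiago as endpoints, the approximate city coordinates, and the idea that cables follow great circles are all illustrative assumptions, so the result shows the scale of the effect, not measured latency.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_S = 200_000  # signal speed in fiber, from the text


def great_circle_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Haversine distance between two (latitude, longitude) points in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def one_way_ms(km: float) -> float:
    """One-way propagation delay in milliseconds."""
    return km * 1000 / FIBER_SPEED_KM_S


# Approximate coordinates, chosen for illustration.
SAO_PAULO = (-23.55, -46.63)
SANTIAGO = (-33.45, -70.67)
MIAMI = (25.76, -80.19)

direct = great_circle_km(SAO_PAULO, SANTIAGO)
via_miami = great_circle_km(SAO_PAULO, MIAMI) + great_circle_km(MIAMI, SANTIAGO)

print(f"direct:    {direct:.0f} km, {one_way_ms(direct):.0f} ms one way")
print(f"via Miami: {via_miami:.0f} km, {one_way_ms(via_miami):.0f} ms one way")
```

Under these assumptions the path through Miami is several times longer than the direct one, and the propagation delay grows in the same proportion.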

Internal communications

Throughput matters not only at the level of backbones, but also in the communication between processors inside each data center server. Speeding up data exchange inside the center speeds up the overall system response. However, the growth of processor clock frequencies stalled a few years ago at several gigahertz because of problems with heat generation. The most practical way to speed up processing is to split operations among several cores linked by a high-speed connection. An optical connection would suit this better, since light travels faster than electrons, but integrating silicon microcircuits with optics is difficult.

Research in silicon photonics has been going on for a long time, but no method of efficiently generating light in a silicon microcircuit has yet been found. There are good semiconductor light sources, such as indium phosphide, but they are almost impossible to grow directly on a silicon crystal because of differences in the structure of the atomic lattices. As a result, the integration of optical and electronic components is still extremely limited.

The American Institute for Manufacturing Integrated Photonics in Rochester (New York) opened last year. It has already received 110 million dollars from federal agencies and 502 million from private investors and companies. The institute will develop integrated photonics for computing and communications equipment.

In addition, earlier this year a team of engineers from Canada demonstrated an integrated photonic circuit with 21 active components, capable of performing three different logical functions. This is an important step in the development of photonic microprocessors: the circuit is comparable in complexity to the first programmable electronic circuits, with which the development of microcomputers began. Jinping Yao, an engineer at the University of Ottawa who took part in the development, said the circuit is "simple compared to electronic circuits, but much more complicated than other integrated photonic circuits at the moment."

Further development of this circuit could open up wide possibilities. For example, it could convert the signal that a 5G tower receives from a smartphone into an analog optical signal, which could then be sent over fiber to a data center and digitized.

Like many other tasks involved in optimizing the Internet, building faster processors is difficult. However, this does not stop the professionals who are looking for solutions. Many of them, Bergano for example, have been working in the industry for more than a decade, yet they remain optimistic and see potential for many improvements.

Source: https://habr.com/ru/post/312200/
