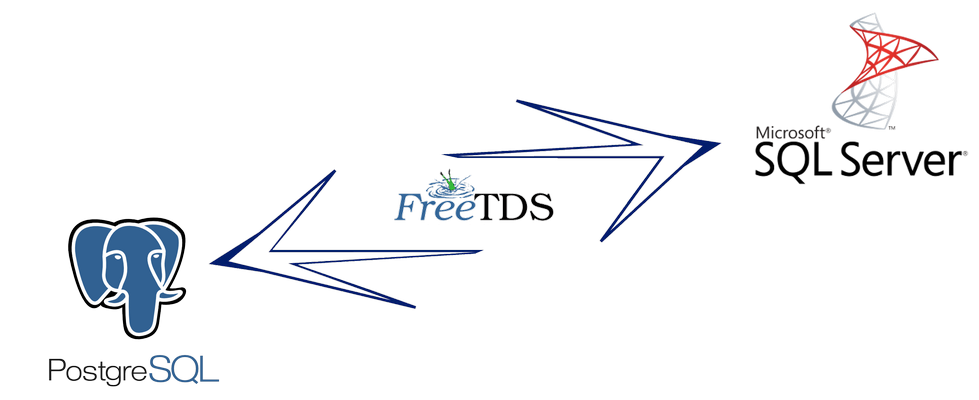

PostgreSQL integration with MS SQL Server for those who want quicker and deeper

Recently, a description of the integration of PostgreSQL and MSSQL has been published on Habré. But, the details there are absolutely not enough. Therefore, the purpose of this publication is as follows:

- Expand and deepen publicly available information about FDW for MSSQL called tds_fdw : talk about the difference in major versions and describe basic compatibility issues;

- talk about the possibilities of optimizing queries using external tables;

- touch on the topic of external data caching in materialized views;

- say a few words about the exotic approaches to the integration of PostgreSQL and MSSQL.

Installing and configuring TDS FDW

The guys from PostgresPro have already said enough about this process, I will not repeat. I’ll leave only a few links to the official PostgreSQL documentation and examples from tds_fdw:

- creation of an extension (extension) in PG: off.documentation ;

- server creation: off.documentation , example from tds_fdw ;

- creation of user mapping (user mapping): off.documentation , example from tds_fdw ;

- creation of a foreign table (foreign table): off.documentation , an example from tds_fdw .

And one more thing: please do not do as indicated in the installation instructions for tds_fdw

')

sudo make USE_PGXS=1 install Save the cats , collect the deb-package and enjoy your life:

sudo USE_PGXS=1 checkinstall Differences between major versions of TDS FDW

At the moment there are two current versions of FDW's: stable 1.0.7 and 2.0.0-alpha, which, in essence, is a master branch and in which all the most interesting happens. Here is a small list of their differences:

- in 2.0.0, pushdown support finally appeared for conditions in the WHERE clause directly related to the external table; however, it still does not work well when using the query option inside a foreign_table declaration;

- tds 7.4 version has been added (I’ll describe why this is necessary and important below);

- There are some problems with the work of DISTINCT on the external table ( here is an issue on GitHub ), although it is not known for certain: if my hands do not grow from there or the bug is quite tricky and only manifests under certain circumstances.

Compatibility Pitfalls

Until recently, tds_fdw did not work with tds version above 7.3. But in the course of writing this article, support for version 7.4 had to be found . So now, starting with commit 3a803c , tds_fdw supports all current tds versions.

Why is the support of this version so important? For me personally, this is important because of the need to work with MSSQL 2012. In short: Ruby on Rails uses an activerecord-sqlserver-adapter library to connect to MSSQL, which, in turn, uses tiny_tds , which uses FreeTDS , which can communicate with MSSQL. The trouble is that for RoR 3 and the corresponding major versions of libraries, using tds 7.1 is nailed and you can only change it through the config in the 4+ version. At the same time, version 7.1 works fine with MSSQL 2008, but when communicating with MSSQL 2012 the following errors appear:

DB-Library error: DB #: 20017, DB Msg: Unexpected EOF from the server ActiveRecord::LostConnection: TinyTds::Error: closed connection: ... TinyTds::Error: Adaptive Server connection failed And like them.

They wanted to avoid switching to the use of FDW, since updating RoR is definitely longer and more expensive. But tds_fdw did not support the required version and had to do something about it.

As for errors, they all appear randomly and grow due to the same place; make some "diversity" in the application, forcing it to fall off in random places at random times. All this disgrace is treated only by using the correct version of tds. For MSSQL 2012, this is tds 7.4.

Here is the first ambush: tds 7.4 version support has been implemented in FreeTDS since version 0.95. But out of the box in Ubuntu 14.04 and 16.04 are versions 0.91-5 and 0.91-6.1build1, respectively. And to get a newer version of FreeTDS in two ways:

- compile FreeTDS from source ;

- use alternative PPA with FreeTDS version 1.00.

In the second case, there is one nuance: in the specified repository there is a package only for Ubuntu 14.04 (which is trusty). For 16.04 (which is xenial), there is nothing there. But, on the whole, nothing is fatal and if you correct /etc/apt/sources.list.d/jamiewill-freetds-trusty.list on 16.04 to something like this:

deb http://ppa.launchpad.net/jamiewillis/freetds/ubuntu trusty main It will be possible to install the package in the latest Ubuntu (and yes, it works without problems).

If you have CentOS, then under it you can easily find FreeTDS up to version 0.95 inclusive. All that is older will have to collect from the source.

Temporary solution to compatibility issues

If the error numbered 20017 and its derivatives are very annoying, and there is no possibility to use the required version of tds, then you can handle the exception thrown by PostgreSQL and restart the / etc block, which calls MSSQL via FDW. In my case for the RoR application, it looked like this:

def retry_mssql_operation(tries = 5) begin yield rescue ActiveRecord::StatementInvalid => e if e.message =~ /^PG::FdwUnableToCreateExecution/ && tries > 0 tries -= 1 retry else raise end end end For the first time, it saves, but for a long-term solution it is categorically inappropriate.

A little bit about pushdown and how FDW works on the fingers

Before turning to the issues of optimizing queries to an external database, I would like to say a few words about pushdown. For some reason, the description of this mechanism is not in demand in Russian-speaking resources (or I am not familiar with its correct translation, and the tricep press down on the block is clearly not from that opera). Therefore, I want to talk briefly about him.

In the simplest case, when we in PG execute a query of the form:

SELECT column_name FROM foreign_table WHERE column_id = 42; In fact, the following occurs in the database:

- from the table associated with foreign_table (or non-table) located on a third-party server, all the contents in postgres are retrieved;

- then, the resulting data is filtered based on conditions from WHERE.

Not very effective scheme, especially if you want to get only one from a table with several million rows. And here appears pushdown. This mechanism allows you to reduce the number of lines that we receive from a remote server. This is done by constructing a query to an external database, taking into account what we want on the PG side, that is, taking into account what is specified in WHERE, JOIN, ORDER, etc. In other words, FDW parses the original request in PotsgreSQL, select from it, that the remote data warehouse can understand and assemble a new query, according to these conditions. This leads to an obvious consequence: pushdown is not applicable to all FDWs (for example, pushdown is almost useless for file_fdw , but quite the opposite for postgres_fdw or tds_fdw ).

Total: pushdown is cool, it allows you to use external data storage mechanisms, reduces the amount of data circulating between the PG and external storage, thereby speeding up the execution of requests, but at the same time, it is a separate mechanism, so it needs to be implemented, maintained and pretty nontrivial task.

Speed up requests

With the installation, configuration and materiel sorted out. Now we will start the description of how it is possible to extract data from MSSQL as soon as possible.

Pushdown

This approach is useful in the case of simple queries that are not burdened with various JOINs and other SQL tricks. The latest version of tds_fdw (currently 2.0.0-alpha) adds support for the simplest pushdown for WHERE.

For example, consider the table simple_table from the MSSQL database. There are two fields in this table: id and data. The definition of the external table for it will be as follows:

CREATE FOREIGN TABLE mssql_table ( id integer, custom_data varchar OPTIONS (column_name 'data')) SERVER mssql_svr OPTIONS (schema_name 'dbo', table_name 'simple_table', row_estimate_method 'showplan_all', match_column_names '1'); In this case, the first column has the same name in PostgreSQL and in MSSQL: id. The second column has different names in PG and MSSQL, so the column_name option is needed here. This parameter explicitly sets the mapping of columns from PostgreSQL to columns in MSSQL. Also, at the end is the match_column_name parameter, which is responsible for the implicit mapping of column names by name, that is, thanks to it, the column id is mapped.

Everything, now if we execute the query:

SELECT custom_data FROM mssql_table WHERE id = 42; FDW must process the condition specified in WHERE and collect the correct query in MSSQL. For example:

SELECT data FROM simple_table WHERE id = 42; In the case of tds_fdw version 1.0.7 and below, the request in MSSQL will be different:

SELECT id, data FROM simple_table; I repeat: pushdown, currently, works only for WHERE; for JOIN, ORDER and other functions such as MAX, LOWER, etc., it will not take off.

And one more thing: how do you know which query was actually executed on the MSSQL side? When using FDW for, for example, MySQL, this line appears in explain:

Remote query: SELECT `id`, `customer_id`, `order_date` FROM `data`.`orders` And it is convenient. In tds_fdw there is no such thing yet and you need to go a longer way through the FreeTDS logs. By default, logs are disabled in FreeTDS, but this is easily fixed by digging into /etc/freetds/freetds.conf . There you can find the following lines:

; dump file = /tmp/freetds.log ; debug flags = 0xffff Those who need to remove the semicolon at the beginning.

Now for any query in MSSQL from PG, FreeTDS will log everything that it can. This will slow down the execution of all external queries and can spawn a bunch of logs (in my case, the usual SELECT made a log in ~ 300MB, and JOIN could barely get to ~ 1.5GB). But in logih, it will be seen what actually happened in MSSQL. In addition, the volume of logs can be reduced by playing around with `debug flags`. For more information about logging in FreeTDS, write here , and details about `debug flags` are right here .

Materialized view

The materialized view (hereinafter MV) is the usual view + data table. This approach will help in case of complex queries with joins of external and internal tables, with functions, preference and courtesans.

The profit from MV is as follows: it is the “native” object for the PG, that is, the MV interacts remarkably well with the rest of PostgreSQL and it can be indexed and analyzed regardless of the data source that filled it. There are also disadvantages: MV needs to be updated. You can update by internal triggers, by external events, you can completely re-create and so on. But, in any case, the MV generates a PG lag from the original data source.

For the above-described external MV table, you can create it as follows:

CREATE MATERIALIZED VIEW materialized_mssql_table AS SELECT id, custom_data FROM mssql_table; Now all the data from MSSQL is in PostgreSQL, which means they can be indexed as they please ( B-tree , GIN and GiST , etc.), statistics become available for them, you can see the details of the query execution plan and many more pleasant things from PG.

You can update the MV through standard INCERT / UPDATE / DELETE commands, or simply recreate all the content with

REFRESH MATERIALIZED VIEW CONCURRENTLY materialized_mssql_table; The CONCURRENTLY option allows you to update the MV without blocking competing read requests, but it takes more time and resources. Also, in order to be able to use CONCURRENTLY, the target MV must satisfy certain requirements. They can be found on the corresponding documentation page .

Exotic approach

Honestly, the devil knows whether this approach can take off, perhaps a respected public will tell something interesting in this regard. In any case, I think that it is necessary to say about it, since the core resources for the majority of questions on the integration of two databases respond to "use FDW" and no diversity is expected, even if you want a strange one.

So, when it may be necessary: if all the above options did not help due to various restrictions. For example:

- indecently large amount of external database and the impossibility of its cloning in PG;

- strict speed requirements and the availability of an optimal query to an external database;

- the desire to execute a parameterized query, that is, an analogue of the query option for FDW, only with a dynamic parameter, for example, you want to use full-text search on the MSSQL side through the CONTAINS function;

- something else unusual.

What to use: dbi-link or dblink-tds . This is analogous to dblink but with support for several DBMS: PostgreSQL, MySQL, MSSQL Server and Oracle in the case of dbi-link and simply TDS in the case of dblink-tds.

The mechanics of work seems to be: as a kind of highly specialized analogue of FDW as a PG function, which collects the necessary request within itself based on the arguments passed, executes it in the external database through the above tools, receives data, processes them and returns them to the PG as a pipeline function . That is, hypothetically, you can execute exactly the query that you want and present its result in the form that is digestible for further processing in PG.

All of the above are pure theoretical considerations. If you have real experience using these or similar tools, please share your knowledge with the world.

Conclusion

Currently, there is only one simple and working solution for PostgreSQL and MSSQL docking. This is tds_fdw . It has many flaws, but the project is developing, bugs are being repaired, features are rolling out and it's great. Therefore, tds_fdw can solve most of the problems associated with extracting data from MSSQL via PG. Those who want to quickly, more optimally and with courtesans will help PostgreSQL and its rich arsenal of optimization tools. And those who want a very strange and want to do everything inside the database with a minimum of external services will be difficult. The toolkit is ancient, there is no documentation, there is no support, it is

Source: https://habr.com/ru/post/312090/

All Articles