Programming & Music: The ADSR Signal Envelope. Part 2

Hello!

You are reading the second part of a series on building a VST synthesizer in C#. The first part covered the SDK and the library for creating VST plug-ins, and showed how to program an oscillator.

In this part I will talk about signal envelopes, their varieties, and their use in sound processing. We will program an ADSR envelope that controls the amplitude of the signal generated by the oscillator.

Every synthesizer has envelopes; they are used not only in synthesis but throughout sound processing.

The source code for the synthesizer I wrote is available on GitHub.

Article series

- Understanding and writing a VSTi synthesizer in C# WPF

- The ADSR signal envelope

- The Butterworth frequency filter

- Delay, Distortion and Parameter Modulation

Table of contents

- Envelope

- Different types of envelopes in existing plugins

- MIDI keystrokes

- A more object-oriented approach in the oscillator code

- Clicks when switching notes

- ADSR Envelope Programming

- Examples of sounds using ADSR-envelope

- Bibliography

Envelope

In mathematics, the envelope of a family of curves is a curve that touches every member of the family at some point. The family may consist of curves, points, shapes or other elements, and the envelope wraps around it. You can think of the envelope as a boundary that contains the elements of the family.

Imagine a set of identical circles whose centers lie on one straight line. Their envelopes are two parallel straight lines, which are in effect the boundaries of the set of points of the circles.

Envelopes for circles. Screenshot taken from dic.academic.ru

In signal synthesis and processing, an envelope is a function that describes how a parameter changes over time.

The red curve is the wave amplitude envelope. Screenshot taken from the site www.kit-e.ru

Envelopes are mainly used to describe changes in the amplitude of a signal. But nothing stops you from using an envelope to describe changes in the filter cutoff frequency, the pitch, the pan, or other synthesizer parameters.

In the real world, the volume of a musical instrument changes over time, and each instrument has its own characteristic pattern. An organ, for example, plays a note at constant volume for as long as the key is held, whereas a guitar is loudest only at the moment the string is struck, after which the sound fades smoothly. Wind and string instruments typically reach their maximum volume not immediately but some time after the note begins.

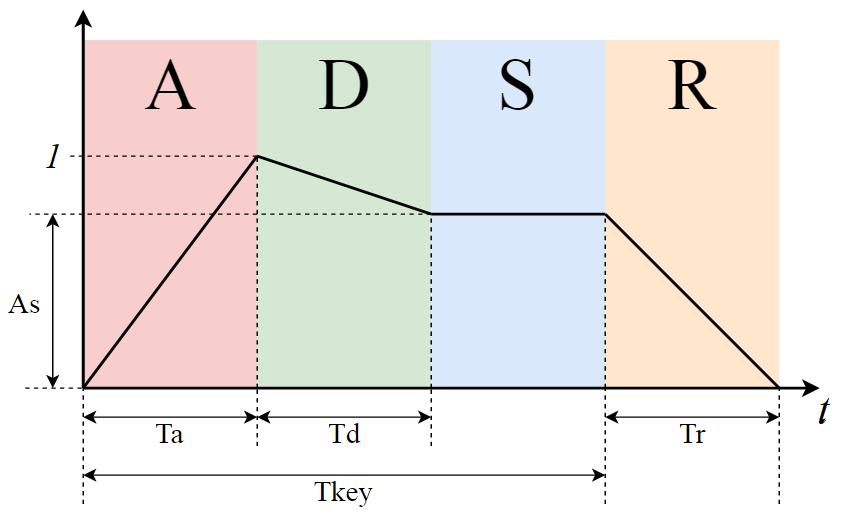

The ADSR envelope describes the change in volume as 4 consecutive phases with the following parameters:

- Attack - the phase in which the signal rises from complete silence to its maximum volume. The attack time (often simply called "the attack") is the time the signal needs to reach that maximum. Every signal has an attack, since in real life amplitude changes continuously, without jumps.

- Decay - the phase in which the signal falls to the level specified for the Sustain phase. Its parameter is likewise a time: the decay time.

- Sustain - the phase of "stable sound" with a constant, predefined amplitude. In terms of pressing a piano key, the Sustain phase lasts as long as the player holds the key down. Of course, on a real piano the sound gradually dies away if the key is held for a long time. A synthesizer, however, can keep generating the note, and in this phase we hear a steady signal whose amplitude does not change.

- Release - the phase in which the signal fades out. Its parameter (the "release") is the time needed for the amplitude to fall to zero. On a piano this phase begins as soon as the key is released.

In the graph at the beginning of the article, the notation is as follows:

- As - the signal amplitude in the Sustain state

- Ta - attack time

- Td - decay time

- Tr - release time

- Tkey - time the key / note is held (from press to release)

Imagine that the oscillator generates a harmonic whose amplitude has a maximum level k (the sine values range from -k to k).

Consider the use of an ADSR envelope for this signal.

At the moment of transition from the Attack phase to the Decay phase the envelope is at its maximum (1 on the graph), so applying the envelope can be treated as multiplying the current signal level by the current envelope value. "Maximum" here means that the envelope does not limit (does not change) the signal, whose amplitude is then equal to k.

The Attack phase begins when signal generation starts. Over the time Ta the signal rises to the level 1 * k. Then the Decay phase begins, and over the time Td the level falls to As * k.

The envelope then enters the Sustain phase, which is not limited in time. After the transition to the Release phase (the key is released), the signal amplitude falls to zero over the time Tr.

What if we release the note before the Decay or Sustain phase begins? In any case we have to enter the Release phase, which then starts from the current signal level.

It is important to understand that the Sustain parameter is a signal level, unlike the Attack, Decay and Release parameters, which are times (ADSR charts found on the Internet can be misleading on this point).
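The description above can be condensed into a single function of time. The following is only a minimal sketch with straight-line segments, not the code of the synthesizer: the names AdsrSketch, Level and HeldLevel are made up for illustration, and Ta, Td and Tr are assumed to be greater than zero.

```csharp
using System;

public static class AdsrSketch
{
    // Envelope value at time t (seconds since the key press) for a key held for tKey seconds.
    // ta, td, tr are the Attack, Decay and Release times; sustain is the level As (0..1).
    public static double Level(double t, double tKey, double ta, double td, double sustain, double tr)
    {
        if (t < 0) return 0;

        if (t >= tKey)
        {
            // Release starts from whatever level the envelope had when the key was released.
            double start = HeldLevel(tKey, ta, td, sustain);
            double x = (t - tKey) / tr;
            return x >= 1 ? 0 : start * (1 - x);
        }

        return HeldLevel(t, ta, td, sustain);
    }

    // Envelope value while the key is still held: Attack, then Decay, then Sustain.
    private static double HeldLevel(double t, double ta, double td, double sustain)
    {
        if (t < ta) return t / ta;                                  // rises from 0 to 1
        if (t < ta + td) return 1 + (sustain - 1) * (t - ta) / td;  // falls from 1 to As
        return sustain;                                             // stays at As
    }

    public static void Main()
    {
        // Ta = 0.1 s, Td = 0.2 s, As = 0.5, Tr = 0.4 s, key held for 1 s.
        foreach (var t in new[] { 0.05, 0.1, 0.2, 0.5, 1.2, 1.4 })
            Console.WriteLine($"t = {t:0.00} s -> {Level(t, 1.0, 0.1, 0.2, 0.5, 0.4):0.000}");
    }
}
```

Multiplying each oscillator sample by Level(...) gives the shaped signal. Note that an early release (tKey smaller than Ta + Td) simply starts the fade from the level reached so far.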

Different types of envelopes in existing plugins

There is plenty of information on envelopes on the Internet (articles, video tutorials): they are widely used, especially in synthesizers for modulating parameters.

No text can replace a picture, let alone a sound. I therefore recommend watching a couple of YouTube videos for the query "adsr envelope"; at the very least look at the first result, a sound explanation with interactive examples.

In VST synthesizers, envelopes are used as:

- ADSR envelope to control the volume of the signal after the oscillator

- More complex envelopes for modulating parameters

The ADSR envelope processes the amplitude of the sound from two oscillators. Screenshot from Sylenth1 synthesizer

Often, a Hold phase is added to the ADSR envelope — a phase with a finite time and maximum amplitude between the Attack and Decay phases.

AHDSR envelope (Hold phase added) in the Serum synthesizer, in the envelope block to modulate the parameters

There are also more complex envelopes, such as the ADBSSR envelope.

MIDI keystrokes

Let's move on to programming and walk through the code of the synthesizer I wrote (GitHub link).

The previous article presented a simple oscillator that generates a wave of a fixed frequency from a wavetable as soon as the plug-in starts. It also explains how to build the project and describes the architecture of the code.

Let's hook MIDI messages up to the oscillator so that the signal is generated only while a key is held down.

To receive MIDI messages from the host, the plug-in class (derived from SyntagePlugin) must override the CreateMidiProcessor method, which returns an IVstMidiProcessor.

Syntage.Framework has a ready-made MidiListener class that implements IVstMidiProcessor.

MidiListener has OnNoteOn and OnNoteOff events, which we will use in the oscillator.

Now the oscillator will not have a frequency parameter (Frequency), since the frequency will be determined by the key held down.

We subscribe to the OnNoteOn and OnNoteOff events in the constructor and implement them.

```csharp
private int _note = -1; // -1 means that no key is held down

// ...

public Oscillator(AudioProcessor audioProcessor) : base(audioProcessor)
{
    _stream = Processor.CreateAudioStream();

    audioProcessor.PluginController.MidiListener.OnNoteOn += MidiListenerOnNoteOn;
    audioProcessor.PluginController.MidiListener.OnNoteOff += MidiListenerOnNoteOff;
}

private void MidiListenerOnNoteOn(object sender, MidiListener.NoteEventArgs e)
{
    _time = 0;

    // remember the note that is now held down
    _note = e.NoteAbsolute;
}

private void MidiListenerOnNoteOff(object sender, MidiListener.NoteEventArgs e)
{
    // stop only if the released key is the one currently playing
    if (_note == e.NoteAbsolute)
        _note = -1;
}
```

The event handler receives a NoteEventArgs argument, which carries the note, the octave, and the velocity (how hard the key was struck).

The smallest distance between notes is a semitone, so we will measure the integer absolute value of a note (its number) as the number of semitones from some low reference note (which one, we will see shortly). Why? Because it makes the formula for computing a frequency from a note number convenient.

A bit of theory: equal temperament is the prevailing tuning system in music today.

An octave is a range of 12 semitones, that is, 12 different notes (a major or minor scale uses 7 of them, because some adjacent scale notes are a whole tone apart and others a semitone).

The whole scale is divided into octaves. Accordingly, the entire audible frequency range is divided into octave segments as well.

A note one octave higher has twice the frequency but the same name.

The frequency of a note that lies i semitones away from a reference note with frequency f0 is given by the following formula:

$$f = f_0 \cdot 2^{i/12}$$

We write the corresponding static function in the helper class DSPFunctions:

```csharp
public static double GetNoteFrequency(double note)
{
    var r = 440 * Math.Pow(2, (note - 69) / 12.0);
    return r;
}
```

Here the A of the first octave (440 Hz) is taken as the reference. The formula shows that the note with "number" 0 lies 69 semitones below that A: it is a C with a frequency of 8.1758 Hz (an octave below the subcontra octave), while the A of the first octave has number 69. Why this note? Apparently this is what was agreed on when synthesizer keyboards were designed, to leave some margin.
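To get a feel for the numbers, here is a small stand-alone sketch that evaluates the same formula for a few note numbers. The class name NoteFrequencySketch is made up for illustration; the function body is copied from GetNoteFrequency above.

```csharp
using System;

public static class NoteFrequencySketch
{
    // Same formula as GetNoteFrequency above: note 69 is the A at 440 Hz.
    public static double GetNoteFrequency(double note) => 440 * Math.Pow(2, (note - 69) / 12.0);

    public static void Main()
    {
        // 0 is the lowest note, 57 and 81 are one octave below and above note 69.
        foreach (var note in new[] { 0, 57, 60, 69, 81 })
            Console.WriteLine($"note {note,3}: {GetNoteFrequency(note):0.0000} Hz");
    }
}
```

Notes 57 and 81 come out at 220 Hz and 880 Hz: each step of 12 semitones halves or doubles the frequency, as the theory above says.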

Now the signal has to be generated only while the condition _note != -1 holds.

Our oscillator is monophonic: no matter how many keys are pressed, only the last one counts. After reading the following sections you will be able to program a polyphonic oscillator yourself; I will not cover it here, to keep things simple.

A more object-oriented approach in the oscillator code

The OnNoteOn and OnNoteOff handlers tell us that a specific key has been pressed or released. Inside the oscillator we store the current key in the integer variable _note, and the value -1 means that no key is pressed.

It is time to move to a higher level: I propose writing a class that represents the tone being generated (it will also make life easier later on).

A tone is characterized by its note number and the time elapsed since its start. Nothing new so far, the code has just become clearer:

```csharp
private class Tone
{
    public int Note;
    public double Time;
}
```

Accordingly, the oscillator now has a Tone _tone field, which is null when no key is held down.

Moreover, such a class makes it easier to program a polyphonic oscillator.

Clicks when switching notes

Imagine the following situation: you press a key, the oscillator starts generating a harmonic of the given frequency, then you press the next key and the oscillator immediately switches the frequency and resets the phase (the time). The wave then has a discontinuity, which is heard as artifacts and clicks.

To generate a wave from a table, we computed the "relative phase" as a value from 0 to 1, where 1 corresponds to a full wave period. If we change the frequency of the wave but keep the same relative phase, the wave values coincide and there is no click.

So if a note with a different frequency is currently being generated, we need to determine the starting time for the new note.

The formula for the relative phase from the previous article, where frac is the fractional part, t the time and f the frequency:

$$\varphi = \operatorname{frac}(t \cdot f)$$

Accordingly, knowing the new frequency, we get the required time:

$$t_{new} = \frac{\varphi}{f_{new}}$$
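A quick numeric check of this idea: the sketch below computes the relative phase of the old tone, derives the starting time for the new frequency, and compares the sine sample just before and just after the switch. The names PhaseContinuitySketch and StartTime are made up for illustration, and Frac is assumed to return the fractional part, like DSPFunctions.Frac in the synthesizer.

```csharp
using System;

public static class PhaseContinuitySketch
{
    // Fractional part of x, i.e. the "relative phase" in [0, 1).
    public static double Frac(double x) => x - Math.Floor(x);

    // Starting time for the new frequency that keeps the relative phase of the old tone.
    public static double StartTime(double oldTime, double oldFrequency, double newFrequency)
        => Frac(oldTime * oldFrequency) / newFrequency;

    public static void Main()
    {
        double oldTime = 0.0123, oldFrequency = 440, newFrequency = 523.25;
        double newTime = StartTime(oldTime, oldFrequency, newFrequency);

        // Sine sample at the same relative phase, before and after the frequency switch.
        double before = Math.Sin(2 * Math.PI * Frac(oldTime * oldFrequency));
        double after = Math.Sin(2 * Math.PI * Frac(newTime * newFrequency));

        Console.WriteLine($"sample before the switch: {before:0.000000}");
        Console.WriteLine($"sample after the switch:  {after:0.000000}");
    }
}
```

The two samples agree up to rounding, so the wave continues without a jump even though the frequency has changed.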

In code, it looks like this:

```csharp
private void MidiListenerOnNoteOn(object sender, MidiListener.NoteEventArgs e)
{
    var newNote = e.NoteAbsolute;

    // if no tone is playing, the new one starts from time zero
    double time = 0;
    if (_tone != null)
    {
        // relative phase (from 0 to 1) of the tone that is playing now
        var tonePhase = DSPFunctions.Frac(_tone.Time * DSPFunctions.GetNoteFrequency(_tone.Note));

        // the time at which the new tone has the same relative phase
        time = tonePhase / DSPFunctions.GetNoteFrequency(newNote);
    }

    _tone = new Tone
    {
        Time = time,
        Note = e.NoteAbsolute
    };
}
```

Full code of the Oscillator class:

```csharp
public class Oscillator : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IGenerator
{
    private class Tone
    {
        public int Note;
        public double Time;
    }

    private readonly IAudioStream _stream;
    private Tone _tone;

    public VolumeParameter Volume { get; private set; }
    public EnumParameter<WaveGenerator.EOscillatorType> OscillatorType { get; private set; }
    public RealParameter Fine { get; private set; }
    public RealParameter Panning { get; private set; }

    public Oscillator(AudioProcessor audioProcessor) : base(audioProcessor)
    {
        _stream = Processor.CreateAudioStream();

        audioProcessor.PluginController.MidiListener.OnNoteOn += MidiListenerOnNoteOn;
        audioProcessor.PluginController.MidiListener.OnNoteOff += MidiListenerOnNoteOff;
    }

    private void MidiListenerOnNoteOn(object sender, MidiListener.NoteEventArgs e)
    {
        var newNote = e.NoteAbsolute;

        // if no tone is playing, the new one starts from time zero
        double time = 0;
        if (_tone != null)
        {
            // relative phase (from 0 to 1) of the tone that is playing now
            var tonePhase = DSPFunctions.Frac(_tone.Time * DSPFunctions.GetNoteFrequency(_tone.Note));

            // the time at which the new tone has the same relative phase
            time = tonePhase / DSPFunctions.GetNoteFrequency(newNote);
        }

        _tone = new Tone
        {
            Time = time,
            Note = e.NoteAbsolute
        };
    }

    private void MidiListenerOnNoteOff(object sender, MidiListener.NoteEventArgs e)
    {
        // stop only if the released key is the one currently playing
        if (_tone != null && _tone.Note == e.NoteAbsolute)
            _tone = null;
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        Volume = new VolumeParameter(parameterPrefix + "Vol", "Oscillator Volume");
        OscillatorType = new EnumParameter<WaveGenerator.EOscillatorType>(parameterPrefix + "Osc", "Oscillator Type", "Osc", false);

        Fine = new RealParameter(parameterPrefix + "Fine", "Oscillator pitch", "Fine", -2, 2, 0.01);
        Fine.SetDefaultValue(0);

        Panning = new RealParameter(parameterPrefix + "Pan", "Oscillator Panorama", "", 0, 1, 0.01);
        Panning.SetDefaultValue(0.5);

        return new List<Parameter> {Volume, OscillatorType, Fine, Panning};
    }

    public IAudioStream Generate()
    {
        _stream.Clear();

        if (_tone != null)
            GenerateToneToStream(_tone);

        return _stream;
    }

    private void GenerateToneToStream(Tone tone)
    {
        var leftChannel = _stream.Channels[0];
        var rightChannel = _stream.Channels[1];

        double timeDelta = 1.0 / Processor.SampleRate;
        var count = Processor.CurrentStreamLenght;
        for (int i = 0; i < count; ++i)
        {
            var frequency = DSPFunctions.GetNoteFrequency(tone.Note);
            var sample = WaveGenerator.GenerateNextSample(OscillatorType.Value, frequency, tone.Time);

            sample *= Volume.Value;

            var panR = Panning.Value;
            var panL = 1 - panR;

            leftChannel.Samples[i] += sample * panL;
            rightChannel.Samples[i] += sample * panR;

            tone.Time += timeDelta;
        }
    }
}
```

ADSR Envelope Programming

After the oscillator, the signal is passed to the ADSR envelope, which changes its amplitude (volume).

When a key is pressed, the envelope enters the Attack phase; when the key is released, the envelope enters the Release phase. In that phase the signal should fade out smoothly (meaning without clicks; how quickly or slowly is up to the user turning the parameter knobs).

But the oscillator stops generating a signal as soon as the key is released, so the envelope cannot process the Release phase correctly. During the Release phase the oscillator must keep generating the signal while the envelope does its job of attenuating the volume.

To achieve this, remove the OnNoteOff handler from the oscillator code; the envelope now takes care of it.

As described in the "Envelope" section, we use the envelope value as a multiplier for each signal sample. The envelope value varies over time between 0 and 1, and it reaches 1 only at the switch from the Attack phase to the Decay phase (when the envelope value is 1, the signal amplitude is unchanged).

To program the transitions between the ADSR phases we need a state machine, so from here on I will speak of states rather than envelope phases.

The envelope has 4 parameters: the Attack, Decay and Release times and the Sustain amplitude multiplier. Attack and Decay are usually a matter of milliseconds, while Release can be on the order of a second.

The 4 states always follow one another in order: a state cannot be skipped. If the Attack, Decay or Release time is zero, you can get a click, so the minimum of these parameters must be non-zero. I made the Attack, Decay and Release parameters range from 0.01 to 1 second.

The implementation has the following hierarchy. The ADSR class is a wrapper around the envelope logic that holds the synthesizer-related part: the set of required parameters, the key event handlers, and the Process function, which processes the sample stream.

The envelope logic itself is hidden in the NoteEnvelope class, which essentially needs only one method: one that returns the current envelope value. This method must be called in sequence for every sample of the stream, and each sample multiplied by the result.

It is important to settle how key presses are handled. For example, what should happen when a key is pressed while another key is already held down? I worked out by careful observation how the Sylenth1 synthesizer behaves when polyphony is not used, and decided on the following strategy:

- If the key state has changed and keys are held down now but none were before, register a key press (go to the Attack state).

- If the key state has changed and no keys are held down now but some were before, register a key release (go to the Release state).

In other words, we only pay attention to changes between the two states "all keys released" and "at least one key held down".

Thus, when you press a new key while still holding the current one, the oscillator simply switches frequency and the envelope does not react. With polyphony, things get more complicated.

Let us put all of the above into the ADSR wrapper class: the parameters, the key event handler, and the sample processing.

```csharp
public class ADSR : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IProcessor
{
    private readonly NoteEnvelope _noteEnvelope; // the envelope logic
    private int _lastPressedNotesCount; // number of keys held down at the last key state change

    public RealParameter Attack { get; private set; }
    public RealParameter Decay { get; private set; }
    public RealParameter Sustain { get; private set; }
    public RealParameter Release { get; private set; }

    public ADSR(AudioProcessor audioProcessor) : base(audioProcessor)
    {
        _noteEnvelope = new NoteEnvelope(this);
        Processor.Input.OnPressedNotesChanged += OnPressedNotesChanged;
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        Attack = new RealParameter(parameterPrefix + "Atk", "Envelope Attack", "", 0.01, 1, 0.01);
        Decay = new RealParameter(parameterPrefix + "Dec", "Envelope Decay", "", 0.01, 1, 0.01);
        Sustain = new RealParameter(parameterPrefix + "Stn", "Envelope Sustain", "", 0, 1, 0.01);
        Release = new RealParameter(parameterPrefix + "Rel", "Envelope Release", "", 0.01, 1, 0.01);

        return new List<Parameter> {Attack, Decay, Sustain, Release};
    }

    private void OnPressedNotesChanged(object sender, EventArgs e)
    {
        // keys are held down now but none were before: register a key press;
        // no keys are held down now but some were before: register a key release
        var currentPressedNotesCount = Processor.Input.PressedNotesCount;
        if (currentPressedNotesCount > 0 && _lastPressedNotesCount == 0)
        {
            _noteEnvelope.Press();
        }
        else if (currentPressedNotesCount == 0 && _lastPressedNotesCount > 0)
        {
            _noteEnvelope.Release();
        }

        _lastPressedNotesCount = currentPressedNotesCount;
    }

    public void Process(IAudioStream stream)
    {
        var lc = stream.Channels[0];
        var rc = stream.Channels[1];

        var count = Processor.CurrentStreamLenght;
        for (int i = 0; i < count; ++i)
        {
            var multiplier = _noteEnvelope.GetNextMultiplier();
            lc.Samples[i] *= multiplier;
            rc.Samples[i] *= multiplier;
        }
    }
}
```

Now let us program the envelope logic, the NoteEnvelope class. As the ADSR class code above shows, NoteEnvelope needs the following public methods:

- Press() - handle a key press (go to the Attack state)

- Release() - handle a key release (go to the Release state)

- GetNextMultiplier() - get the next envelope value

We define an enumeration for the states:

```csharp
private enum EState
{
    None,
    Attack,
    Decay,
    Sustain,
    Release
}
```

We know the time between two samples, so we know how long each state has been running and the total running time of the envelope.

All states use the same logic to determine the current value: given the time since the start of the state and the duration of the state (an envelope parameter), we interpolate between the initial and final envelope values.

For the Sustain state the initial and final values are the same, so the interpolation logic still works correctly there (although it is not needed).
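The article does not show the helper DSPFunctions.Lerp that the envelope code relies on, so here is a minimal sketch of what such linear interpolation looks like, together with the relative state time. The names LerpSketch and StateProgress are made up for illustration, and the real helper may be written differently.

```csharp
using System;

public static class LerpSketch
{
    // Linear interpolation: returns a at t = 0 and b at t = 1.
    public static double Lerp(double a, double b, double t) => a + (b - a) * t;

    // Relative position inside a state: 0 at its start, 1 at its end.
    // remaining is the time left in the state, duration its total length (must be > 0).
    public static double StateProgress(double remaining, double duration) => 1 - remaining / duration;

    public static void Main()
    {
        // Decay from 1 down to a sustain level of 0.4 over 0.2 s, in steps of 0.05 s.
        for (int i = 0; i <= 4; i++)
        {
            double remaining = 0.2 - i * 0.05;
            double t = StateProgress(remaining, 0.2);
            Console.WriteLine($"progress {t:0.00} -> level {Lerp(1.0, 0.4, t):0.000}");
        }
    }
}
```

When the initial and final values are equal, as in the Sustain state, Lerp returns that value for any t, which is why the same code path can serve every state.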

The initial value and the duration of a state are determined at the moment of switching to it:

```csharp
private void SetState(EState newState)
{
    // the new state starts from the current envelope value or, if the previous
    // state has run its full time, from the final value of that state
    _startMultiplier = (_time > 0) ? _multiplier : GetCurrentStateFinishValue();

    _state = newState;

    // time left in the new state
    _time = GetCurrentStateMultiplier();
}

private double GetCurrentStateMultiplier()
{
    switch (_state)
    {
        case EState.None:
            return 1;

        case EState.Attack:
            return _ownerEnvelope.Attack.Value;

        case EState.Decay:
            return _ownerEnvelope.Decay.Value;

        case EState.Sustain:
            return _ownerEnvelope.Sustain.Value;

        case EState.Release:
            return _ownerEnvelope.Release.Value;

        default:
            throw new ArgumentOutOfRangeException();
    }
}
```

On each call of GetNextMultiplier(), _time must be decreased by the interval between two samples. Once the time drops below zero, the envelope moves to the next state.

The transition to the Release state is possible only from the Release() function.

```csharp
public double GetNextMultiplier()
{
    var startMultiplier = GetCurrentStateStartValue();
    var finishMultiplier = GetCurrentStateFinishValue();

    // relative time within the state, from 0 to 1
    var stateTime = 1 - _time / GetCurrentStateMultiplier();

    // interpolate between startMultiplier and finishMultiplier with CalculateLevel
    _multiplier = CalculateLevel(startMultiplier, finishMultiplier, stateTime);

    switch (_state)
    {
        case EState.None:
            break;

        case EState.Attack:
            if (_time < 0)
                SetState(EState.Decay);
            break;

        case EState.Decay:
            if (_time < 0)
                SetState(EState.Sustain);
            break;

        case EState.Sustain:
            // stay here until Release() is called
            break;

        case EState.Release:
            if (_time < 0)
                SetState(EState.None);
            break;

        default:
            throw new ArgumentOutOfRangeException();
    }

    // decrease the remaining state time by the interval between two samples
    var timeDelta = 1.0 / _ownerEnvelope.Processor.SampleRate;
    _time -= timeDelta;

    return _multiplier;
}
```

The final values of the states are obvious: 1 for Attack, the Sustain amplitude parameter for Decay and Sustain, and 0 for Release.

Full code of the ADSR class:

```csharp
public class ADSR : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IProcessor
{
    private class NoteEnvelope
    {
        private enum EState
        {
            None,
            Attack,
            Decay,
            Sustain,
            Release
        }

        private readonly ADSR _ownerEnvelope;
        private double _time;
        private double _multiplier;
        private double _startMultiplier;
        private EState _state;

        public NoteEnvelope(ADSR owner)
        {
            _ownerEnvelope = owner;
        }

        public double GetNextMultiplier()
        {
            var startMultiplier = GetCurrentStateStartValue();
            var finishMultiplier = GetCurrentStateFinishValue();

            // relative time within the state, from 0 to 1
            var stateTime = 1 - _time / GetCurrentStateMultiplier();

            // interpolate between startMultiplier and finishMultiplier with CalculateLevel
            _multiplier = CalculateLevel(startMultiplier, finishMultiplier, stateTime);

            switch (_state)
            {
                case EState.None:
                    break;

                case EState.Attack:
                    if (_time < 0)
                        SetState(EState.Decay);
                    break;

                case EState.Decay:
                    if (_time < 0)
                        SetState(EState.Sustain);
                    break;

                case EState.Sustain:
                    // stay here until Release() is called
                    break;

                case EState.Release:
                    if (_time < 0)
                        SetState(EState.None);
                    break;

                default:
                    throw new ArgumentOutOfRangeException();
            }

            // decrease the remaining state time by the interval between two samples
            var timeDelta = 1.0 / _ownerEnvelope.Processor.SampleRate;
            _time -= timeDelta;

            return _multiplier;
        }

        public void Press()
        {
            SetState(EState.Attack);
        }

        public void Release()
        {
            SetState(EState.Release);
        }

        private double CalculateLevel(double a, double b, double t)
        {
            return DSPFunctions.Lerp(a, b, t);
        }

        private void SetState(EState newState)
        {
            // the new state starts from the current envelope value or, if the previous
            // state has run its full time, from the final value of that state
            _startMultiplier = (_time > 0) ? _multiplier : GetCurrentStateFinishValue();

            _state = newState;

            // time left in the new state
            _time = GetCurrentStateMultiplier();
        }

        private double GetCurrentStateStartValue()
        {
            return _startMultiplier;
        }

        private double GetCurrentStateFinishValue()
        {
            switch (_state)
            {
                case EState.None:
                    return 0;

                case EState.Attack:
                    return 1;

                case EState.Decay:
                case EState.Sustain:
                    return _ownerEnvelope.Sustain.Value;

                case EState.Release:
                    return 0;

                default:
                    throw new ArgumentOutOfRangeException();
            }
        }

        private double GetCurrentStateMultiplier()
        {
            switch (_state)
            {
                case EState.None:
                    return 1;

                case EState.Attack:
                    return _ownerEnvelope.Attack.Value;

                case EState.Decay:
                    return _ownerEnvelope.Decay.Value;

                case EState.Sustain:
                    return _ownerEnvelope.Sustain.Value;

                case EState.Release:
                    return _ownerEnvelope.Release.Value;

                default:
                    throw new ArgumentOutOfRangeException();
            }
        }
    }

    private readonly NoteEnvelope _noteEnvelope;
    private int _lastPressedNotesCount;

    public RealParameter Attack { get; private set; }
    public RealParameter Decay { get; private set; }
    public RealParameter Sustain { get; private set; }
    public RealParameter Release { get; private set; }

    public ADSR(AudioProcessor audioProcessor) : base(audioProcessor)
    {
        _noteEnvelope = new NoteEnvelope(this);
        Processor.Input.OnPressedNotesChanged += OnPressedNotesChanged;
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        Attack = new RealParameter(parameterPrefix + "Atk", "Envelope Attack", "", 0.01, 1, 0.01);
        Decay = new RealParameter(parameterPrefix + "Dec", "Envelope Decay", "", 0.01, 1, 0.01);
        Sustain = new RealParameter(parameterPrefix + "Stn", "Envelope Sustain", "", 0, 1, 0.01);
        Release = new RealParameter(parameterPrefix + "Rel", "Envelope Release", "", 0.01, 1, 0.01);

        return new List<Parameter> {Attack, Decay, Sustain, Release};
    }

    private void OnPressedNotesChanged(object sender, EventArgs e)
    {
        // keys are held down now but none were before: register a key press;
        // no keys are held down now but some were before: register a key release
        var currentPressedNotesCount = Processor.Input.PressedNotesCount;
        if (currentPressedNotesCount > 0 && _lastPressedNotesCount == 0)
        {
            _noteEnvelope.Press();
        }
        else if (currentPressedNotesCount == 0 && _lastPressedNotesCount > 0)
        {
            _noteEnvelope.Release();
        }

        _lastPressedNotesCount = currentPressedNotesCount;
    }

    public void Process(IAudioStream stream)
    {
        var lc = stream.Channels[0];
        var rc = stream.Channels[1];

        var count = Processor.CurrentStreamLenght;
        for (int i = 0; i < count; ++i)
        {
            var multiplier = _noteEnvelope.GetNextMultiplier();
            lc.Samples[i] *= multiplier;
            rc.Samples[i] *= multiplier;
        }
    }
}
```

If we generate a simple sine wave with this envelope, we get the following waveform (the audio file was generated in FL Studio and then examined in the open-source editor Audacity):

In the Attack, Decay and Release phases, the envelope changes according to a linear law (Screenshot from Audacity)

It can be seen that in the Attack, Decay and Release phases the envelope changes according to a linear law.

In the real world oscillations cannot last forever: energy is spent on overcoming resistance forces, and the oscillations die down. The amplitude of damped oscillations follows an exponential law.

Using the envelope in the Sylenth1 synthesizer (Screenshot from Audacity)

Using envelope in 3x Osc synthesizer from FL Studio (Screenshot from Audacity)

Following the example of envelopes in other synthesizers, we use an exponential function for Decay and Release, and its inverse, the logarithm, for Attack.

For each state we know the relative time t (from 0 to 1) and the end values of the envelope, a and b.

Take the function e^x on the interval [0, 1]. Its values lie in the interval [1, e], and this segment has to be mapped onto [a, b]. The logarithm is obtained as the inverse of the exponential:

$$F_1(t) = \frac{e^{1-t} - 1}{e - 1}\,(a - b) + b$$

$$F_2(t) = \ln\bigl(1 + t\,(e - 1)\bigr)\,|b - a| + a$$

F1 is the function for the Decay and Release states. Because the plain exponential bends the wrong way, it is mirrored about the vertical axis by replacing the argument x with 1 - x.

F2, the function for the Attack state, is obtained by inverting the normalised exponential, that is, by expressing x in terms of y.

In code:

```csharp
private double CalculateLevel(double a, double b, double t)
{
    //return DSPFunctions.Lerp(a, b, t);

    switch (_state)
    {
        case EState.None:
        case EState.Sustain:
            return a;

        case EState.Attack:
            return Math.Log(1 + t * (Math.E - 1)) * Math.Abs(b - a) + a;

        case EState.Decay:
        case EState.Release:
            return (Math.Exp(1 - t) - 1) / (Math.E - 1) * (a - b) + b;

        default:
            throw new ArgumentOutOfRangeException();
    }
}
```

The result (screenshot from Audacity)
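It is worth checking that the two curves really start at a and end at b. The sketch below copies the Attack and Decay/Release expressions from CalculateLevel into free-standing functions and prints a few points; the names CurveSketch, AttackCurve and FallCurve are made up for illustration, and the attack expression assumes b is not smaller than a.

```csharp
using System;

public static class CurveSketch
{
    // Logarithmic rise used for Attack (assumes b >= a).
    public static double AttackCurve(double a, double b, double t)
        => Math.Log(1 + t * (Math.E - 1)) * Math.Abs(b - a) + a;

    // Exponential fall used for Decay and Release.
    public static double FallCurve(double a, double b, double t)
        => (Math.Exp(1 - t) - 1) / (Math.E - 1) * (a - b) + b;

    public static void Main()
    {
        // Attack from 0 to 1 and Release from 0.6 to 0, sampled at quarter steps.
        for (int i = 0; i <= 4; i++)
        {
            double t = i / 4.0;
            Console.WriteLine(
                $"t = {t:0.00}: attack {AttackCurve(0, 1, t):0.000}, release {FallCurve(0.6, 0, t):0.000}");
        }
    }
}
```

Halfway through, the attack curve is already above the straight line and the release curve is below it, so the volume changes fastest right at the start of each state.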

In advanced synthesizers, you can choose the curvature of the curve between phases:

Envelope in the synthesizer Serum

Examples of sounds using ADSR-envelope

Here are a few sound ideas that can be obtained from a simple signal with an ADSR envelope.

- A and S at zero, D and R at about 1/4 of the knob range. The signal dies out sharply; if you generate noise, the sound resembles a hi-hat.

- A large (about 1/2 of the knob range), D and R at 0, S at 1: the notes sound as if played in reverse.

- A, D and R small, S at zero. If you generate noise, the sound resembles a shaker.

- D small, everything else at zero. With a square or sawtooth wave the sound resembles the click of a metronome.

- A and D small, S and R at zero. If you generate noise, the sound is remotely similar to typewriter keys being pressed.

Again, the Internet is full of material, tutorial videos and examples on signal envelopes. A volume envelope emulates many sounds and effects well, and synthesizers ship with plenty of ready-made presets (there will certainly be drum imitations among them).

In the next article I will talk about the Butterworth frequency filter.

All the best!

Good luck with your programming!

Bibliography

The main articles and books on digital sound are listed in the previous article .

- The Human Ear And Sound Perception

- Sound Envelopes small article with audio examples

- en.wikiaudio.org/ADSR_envelope links to good videos

- Envelope tool charts

- Nine Components of Sound


Source: https://habr.com/ru/post/311750/
