OpenGL ES 2.0. Deferred lighting

In this article we will look at one of the options for implementing deferred lighting on OpenGL ES 2.0.

Deferred lighting

The traditional way of rendering a scene (forward rendering) draws each object in one or several passes, depending on the number and type of the light sources being processed (at each pass, the object receives light from one or several sources). This means that the cost of a single per-pixel light source grows as O(L · N), where N is the number of lit objects and L is the number of lit pixels.

The main goal of deferred lighting (deferred shading / lighting / rendering) is to process a large number of light sources more efficiently by separating the rendering of scene geometry from the rendering of lighting. This reduces the cost of a light source to O(L).
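To make the separation more concrete, here is a rough C++ model that counts draw calls rather than shaded pixels. It is a sketch built on simplifying assumptions of our own. Forward rendering redraws every object once per lighting pass, with a fixed number of lights handled per pass. The general deferred scheme draws each object once and each light volume once. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical cost model: forward rendering redraws every object in each
// lighting pass, and one pass handles up to `lights_per_pass` lights.
std::uint64_t forward_draw_calls(std::uint64_t objects, std::uint64_t lights,
                                 std::uint64_t lights_per_pass) {
    assert(lights_per_pass > 0);
    // Number of passes = ceil(lights / lights_per_pass)
    const std::uint64_t passes = (lights + lights_per_pass - 1) / lights_per_pass;
    return objects * passes;
}

// Hypothetical cost model for the general deferred scheme: geometry is drawn
// once, then each light volume is drawn once, independently of the objects.
std::uint64_t deferred_draw_calls(std::uint64_t objects, std::uint64_t lights) {
    return objects + lights;
}
```

With 100 objects and 50 lights at one light per pass, this model gives 5000 draw calls for forward rendering and 150 for the deferred scheme. The forward count grows with the product of the two numbers, while the deferred count grows with their sum.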

In general, the technique consists of two passes.

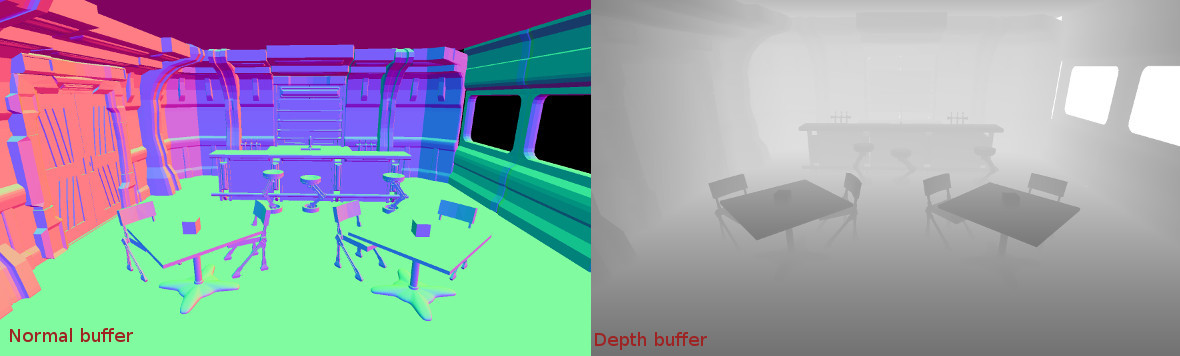

- Geometry pass. Objects are drawn into screen-space buffers (the G-buffer) that hold depth, normals, positions, albedo and specular intensity.

- Lighting pass. The buffers created in the previous pass are used to compute the lighting and produce the final image.

To use this technique in full and build all these buffers in a single pass, you need hardware support for Multiple Render Targets (MRT).

Unfortunately, MRT is only supported on GPUs compatible with OpenGL ES 3.0.

To work around this hardware limitation, we will use a modified deferred lighting technique known as Light Pre-Pass (LPP).

Light Pre-Pass

Since the first pass cannot build all the buffers needed for the final image, it builds only those required to compute the lighting. The second pass produces only an illumination buffer. In the general case the geometry is drawn only once. Here, by contrast, the third pass draws all the objects again to form the final image: each object takes the lighting computed in the previous pass and combines it with its color textures. The third pass thus makes up for what hardware limitations prevented us from doing in the first.

- Geometry pass. Objects are drawn into screen-space buffers that hold only depth and normals.

- Lighting pass. An illumination buffer is built. To compute the lighting, we need the depth and normal buffers from the previous pass and also the three-dimensional position of each pixel. The technique reconstructs this position from the depth buffer.

- Final pass. Objects are drawn to produce the final image from the illumination buffer and the objects' color textures.
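The position reconstruction mentioned in the lighting pass can be checked on the CPU. The C++ sketch below mirrors the `fn_get_linearize_depth` and `fn_get_view_pos` functions of the lighting-pass fragment shader. It pairs them with a forward projection so that a round trip can be tested. It assumes a standard symmetric perspective projection (as built by gluPerspective-style helpers) and the default depth range [0, 1]. The `Camera` struct and the function names are illustrative, not taken from the original project.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Illustrative camera description (not from the original project).
struct Camera {
    float near_plane;
    float far_plane;
    float fov_y;   // vertical field of view, radians
    float aspect;  // width / height
};

// Same formula as fn_get_linearize_depth: maps a depth-buffer value in [0, 1]
// back to a positive view-space distance in [near, far].
float linearize_depth(const Camera& c, float depth) {
    assert(c.near_plane > 0.0f && c.far_plane > c.near_plane);
    const float n = c.near_plane, f = c.far_plane;
    return 2.0f * n * f / (f + n - (depth * 2.0f - 1.0f) * (f - n));
}

// Same as fn_get_view_pos: rebuilds the view-space position from the screen
// coordinates (u, v) in [0, 1] and the stored depth value.
Vec3 reconstruct_view_pos(const Camera& c, float u, float v, float depth) {
    const float d = linearize_depth(c, depth);
    const float t = std::tan(c.fov_y / 2.0f);  // u_camera_view_param.y
    return { t * c.aspect * (u * 2.0f - 1.0f) * d,
             t * (v * 2.0f - 1.0f) * d,
             -d };
}

// Forward direction, used for testing: projects a view-space point (z < 0)
// with a standard symmetric perspective matrix and the default depth range.
void project(const Camera& c, const Vec3& p, float& u, float& v, float& depth) {
    assert(p.z < 0.0f);
    const float n = c.near_plane, f = c.far_plane;
    const float t = std::tan(c.fov_y / 2.0f);
    const float w = -p.z;  // clip-space w
    const float clip_z = (f + n) / (n - f) * p.z + 2.0f * f * n / (n - f);
    u = p.x / (t * c.aspect) / w * 0.5f + 0.5f;  // NDC x -> [0, 1]
    v = p.y / t / w * 0.5f + 0.5f;               // NDC y -> [0, 1]
    depth = clip_z / w * 0.5f + 0.5f;            // NDC z -> [0, 1]
}
```

A depth of 0 maps back to the near plane and a depth of 1 to the far plane. Near the far plane, a small change in the stored depth corresponds to a large change in distance, which is why a 24-bit depth buffer and high-precision arithmetic help here.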

Before examining each pass in detail, we should mention the additional constraints that OpenGL ES 2.0 imposes on the buffers. We need support for two extensions: OES_rgb8_rgba8 and OES_packed_depth_stencil.

- OES_rgb8_rgba8. Allows textures with the RGBA8 pixel format to be used as render targets. We will use such textures for the normal buffer and the illumination buffer.

- OES_packed_depth_stencil. Allows the depth buffer to be combined with the stencil buffer in the UNSIGNED_INT_24_8_OES pixel format (24 bits of depth, 8 bits of stencil). The stencil part is needed for the optimization in the second (lighting) pass. Because the depth buffer is 24-bit, we also need support for high-precision computations in the fragment shader.

At the time of writing, more than 95% of existing devices support these extensions.
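Before choosing this rendering path at run time, the application can check the extension string. The following C++ helper is our own sketch, and the function names are hypothetical. In OpenGL ES 2.0, `glGetString(GL_EXTENSIONS)` returns one space-separated string. Matching whole tokens avoids the false positives of a plain substring search: for example, a search for `GL_OES_depth` would also match `GL_OES_depth24`.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Returns true if `name` appears as a whole token in the space-separated
// extension list (as returned by glGetString(GL_EXTENSIONS) in ES 2.0).
bool has_extension(const char* extensions, const char* name) {
    assert(name != nullptr);
    if (extensions == nullptr) return false;  // e.g. no current context
    std::istringstream tokens{std::string(extensions)};
    std::string token;
    while (tokens >> token) {
        if (token == name) return true;
    }
    return false;
}

// Both extensions this light pre-pass setup relies on.
bool supports_light_pre_pass(const char* extensions) {
    return has_extension(extensions, "GL_OES_rgb8_rgba8") &&
           has_extension(extensions, "GL_OES_packed_depth_stencil");
}
```

On a device, the argument would come from `reinterpret_cast<const char*>(glGetString(GL_EXTENSIONS))`, called while a context is current.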

Geometry pass

Creating a buffer for off-screen rendering

```cpp
// shared_depth_buffer, normal_buffer and pass_fbo are assumed to have been
// created earlier with glGenTextures / glGenFramebuffers; cx, cy are the
// buffer dimensions.

// Depth-stencil texture shared by all passes (24-bit depth + 8-bit stencil)
glBindTexture(GL_TEXTURE_2D, shared_depth_buffer);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
// In OpenGL ES 2.0 the internal format must match the format argument,
// so GL_DEPTH_STENCIL_OES is passed for both.
glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_STENCIL_OES, cx, cy, 0,
             GL_DEPTH_STENCIL_OES, GL_UNSIGNED_INT_24_8_OES, nullptr);
glBindTexture(GL_TEXTURE_2D, 0);

// Normal buffer (RGBA8)
glBindTexture(GL_TEXTURE_2D, normal_buffer);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, cx, cy, 0, GL_RGBA, GL_UNSIGNED_BYTE, nullptr);
glBindTexture(GL_TEXTURE_2D, 0);

// Framebuffer for the geometry pass
glBindFramebuffer(GL_FRAMEBUFFER, pass_fbo);
// Normals are written to the color attachment
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, normal_buffer, 0);
// Depth is written to the shared depth-stencil texture
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, shared_depth_buffer, 0);
glBindFramebuffer(GL_FRAMEBUFFER, 0);
```

Rendering

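```glsl
// Geometry pass: vertex shader
attribute vec3 a_vertex_pos;
attribute vec3 a_vertex_normal;

uniform mat4 u_matrix_mvp;
uniform mat4 u_matrix_model_view;

varying vec3 v_normal;

void main() {
    gl_Position = u_matrix_mvp * vec4(a_vertex_pos, 1.0);
    // View-space normal (w = 0 drops the translation). This is exact only
    // if the model-view matrix contains no non-uniform scaling.
    v_normal = vec3(u_matrix_model_view * vec4(a_vertex_normal, 0.0));
}

// Geometry pass: fragment shader
precision lowp float;

varying vec3 v_normal;

void main() {
    // Interpolation shortens the normal, so renormalize it, then pack it
    // from [-1, 1] into [0, 1] for the RGBA8 normal buffer.
    gl_FragColor = vec4(normalize(v_normal) * 0.5 + 0.5, 1.0);
}
```

Result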
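Storing the normal as `n * 0.5 + 0.5` in an RGBA8 texture quantizes each component to 256 levels. The C++ sketch below models this round trip, assuming the conversion rounds to the nearest 8-bit value. The helper names are hypothetical. Each component can be off by up to 1/255, and zero itself cannot be stored exactly. As a result, the decoded vector is generally not unit length.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

// Models the geometry-pass output: n * 0.5 + 0.5 stored in an 8-bit channel,
// assuming round-to-nearest conversion.
std::uint8_t encode_component(float n) {
    assert(n >= -1.0f && n <= 1.0f);
    const float c = std::clamp(n * 0.5f + 0.5f, 0.0f, 1.0f);
    return static_cast<std::uint8_t>(std::lround(c * 255.0f));
}

// Models fn_get_view_normal in the lighting shader: texel * 2 - 1.
float decode_component(std::uint8_t c) {
    return (c / 255.0f) * 2.0f - 1.0f;
}

Vec3 round_trip(const Vec3& n) {
    return { decode_component(encode_component(n.x)),
             decode_component(encode_component(n.y)),
             decode_component(encode_component(n.z)) };
}
```

Because 255 is odd, no 8-bit value decodes to exactly 0. A component of 0 comes back as ±1/255, so it is worth normalizing the decoded normal before using it in lighting.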

Lighting pass

Creating a buffer for off-screen rendering

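```cpp
// ... (creation of light_buffer, the RGBA8 illumination buffer)

// Framebuffer for the lighting pass
glBindFramebuffer(GL_FRAMEBUFFER, pass_fbo);
// Lighting is accumulated in the color attachment
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, light_buffer, 0);
// The depth-stencil texture from the geometry pass serves as both the
// depth attachment and the stencil attachment
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, shared_depth_buffer, 0);
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_STENCIL_ATTACHMENT, GL_TEXTURE_2D, shared_depth_buffer, 0);
glBindFramebuffer(GL_FRAMEBUFFER, 0);
```

Drawing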

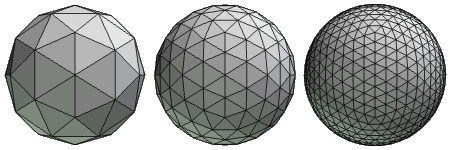

At this stage, we draw each light source separately. To draw a source, its volume has to be represented somehow. This can be done in different ways: with screen-aligned rectangles, cubes or spheres.

To approximate the light volumes of point light sources, we will use a primitive called an icosphere.

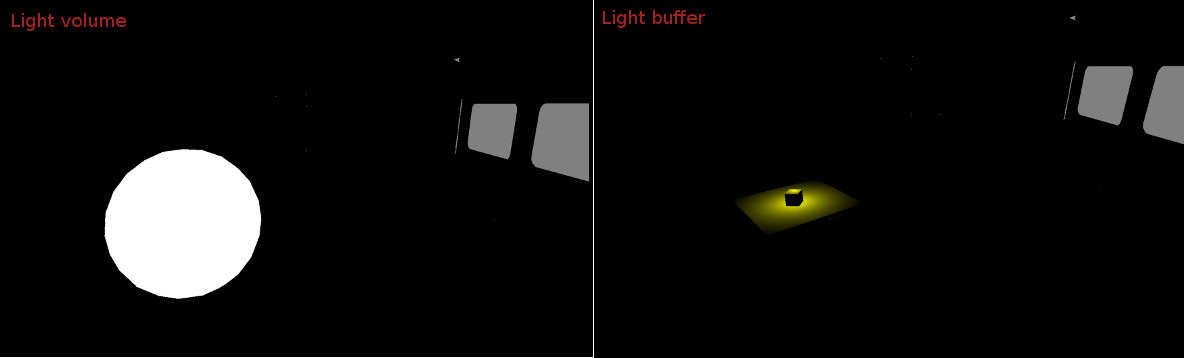
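One way to build an icosphere is to start from a regular icosahedron and repeatedly split every triangle into four, pushing the new vertices onto the unit sphere. The C++ sketch below does this. It is not taken from the original project, and the names are hypothetical. The flat faces of the mesh lie inside the sphere that passes through its vertices. For that reason, the sketch also computes the distance to the closest face plane. Scaling the unit mesh by `light_radius / inner_radius` makes it fully enclose the light's sphere of influence instead of clipping it. Triangles are wound counter-clockwise when seen from outside, which matches the default `glFrontFace(GL_CCW)`. Indices are 16-bit because `glDrawElements` in OpenGL ES 2.0 accepts only `GL_UNSIGNED_BYTE` and `GL_UNSIGNED_SHORT` without extensions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };

struct Mesh {
    std::vector<Vec3> vertices;
    std::vector<std::array<std::uint16_t, 3>> triangles;  // GL_UNSIGNED_SHORT indices
};

static Vec3 normalized(Vec3 v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Unit icosphere: an icosahedron whose triangles are split into four
// `subdivisions` times, with every new vertex projected onto the sphere.
Mesh make_icosphere(int subdivisions) {
    const float t = (1.0f + std::sqrt(5.0f)) / 2.0f;  // golden ratio
    const Vec3 base[12] = {
        {-1, t, 0}, {1, t, 0}, {-1, -t, 0}, {1, -t, 0},
        {0, -1, t}, {0, 1, t}, {0, -1, -t}, {0, 1, -t},
        {t, 0, -1}, {t, 0, 1}, {-t, 0, -1}, {-t, 0, 1}};
    Mesh m;
    for (const Vec3& v : base) m.vertices.push_back(normalized(v));
    // Counter-clockwise when seen from outside
    m.triangles = {
        {0, 11, 5}, {0, 5, 1}, {0, 1, 7}, {0, 7, 10}, {0, 10, 11},
        {1, 5, 9}, {5, 11, 4}, {11, 10, 2}, {10, 7, 6}, {7, 1, 8},
        {3, 9, 4}, {3, 4, 2}, {3, 2, 6}, {3, 6, 8}, {3, 8, 9},
        {4, 9, 5}, {2, 4, 11}, {6, 2, 10}, {8, 6, 7}, {9, 8, 1}};

    for (int s = 0; s < subdivisions; ++s) {
        // Each shared edge gets exactly one midpoint vertex
        std::map<std::pair<std::uint16_t, std::uint16_t>, std::uint16_t> cache;
        auto midpoint = [&](std::uint16_t a, std::uint16_t b) -> std::uint16_t {
            const std::pair<std::uint16_t, std::uint16_t> key = std::minmax(a, b);
            auto it = cache.find(key);
            if (it != cache.end()) return it->second;
            const Vec3 p = m.vertices[a], q = m.vertices[b];
            assert(m.vertices.size() < 65536);
            m.vertices.push_back(normalized({(p.x + q.x) * 0.5f, (p.y + q.y) * 0.5f,
                                             (p.z + q.z) * 0.5f}));
            const auto index = static_cast<std::uint16_t>(m.vertices.size() - 1);
            cache.emplace(key, index);
            return index;
        };
        std::vector<std::array<std::uint16_t, 3>> refined;
        refined.reserve(m.triangles.size() * 4);
        for (const auto& tri : m.triangles) {
            const std::uint16_t ab = midpoint(tri[0], tri[1]);
            const std::uint16_t bc = midpoint(tri[1], tri[2]);
            const std::uint16_t ca = midpoint(tri[2], tri[0]);
            // The four children keep the winding of the parent triangle
            refined.push_back({tri[0], ab, ca});
            refined.push_back({tri[1], bc, ab});
            refined.push_back({tri[2], ca, bc});
            refined.push_back({ab, bc, ca});
        }
        m.triangles = std::move(refined);
    }
    return m;
}

// Distance from the center to the closest face plane. Scaling the unit mesh
// by light_radius / inner_radius(mesh) makes it enclose the light's sphere.
float inner_radius(const Mesh& m) {
    float best = 1.0f;
    for (const auto& tri : m.triangles) {
        const Vec3 a = m.vertices[tri[0]], b = m.vertices[tri[1]], c = m.vertices[tri[2]];
        const Vec3 e1{b.x - a.x, b.y - a.y, b.z - a.z};
        const Vec3 e2{c.x - a.x, c.y - a.y, c.z - a.z};
        const Vec3 n = normalized({e1.y * e2.z - e1.z * e2.y,
                                   e1.z * e2.x - e1.x * e2.z,
                                   e1.x * e2.y - e1.y * e2.x});
        best = std::min(best, std::fabs(n.x * a.x + n.y * a.y + n.z * a.z));
    }
    return best;
}
```

The base icosahedron has 12 vertices and 20 triangles, and one subdivision gives 42 vertices and 80 triangles. For the bare icosahedron the inner radius is only about 0.79, so scaling the mesh this way matters most at low subdivision levels.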

```glsl
// Lighting pass: vertex shader
attribute vec3 a_vertex_pos;
uniform mat4 u_matrix_mvp;
varying vec4 v_pos;

void main() {
    v_pos = u_matrix_mvp * vec4(a_vertex_pos, 1.0);
    gl_Position = v_pos;
}

// Lighting pass: fragment shader
precision highp float;

// Camera near and far clipping planes
uniform float u_camera_near;
uniform float u_camera_far;
// Camera view parameters:
// u_camera_view_param.x = tan(fov / 2.0) * aspect;
// u_camera_view_param.y = tan(fov / 2.0);
uniform vec2 u_camera_view_param;
// u_light_inv_range_square = 1.0 / light_radius^2
uniform float u_light_inv_range_square;
// Light color and intensity
uniform vec3 u_light_intensity;
// Light position in view space
uniform vec3 u_light_pos;
// Normal buffer from the geometry pass
uniform sampler2D u_map_geometry;
// Depth buffer from the geometry pass
uniform sampler2D u_map_depth;

varying vec4 v_pos;

const float shininess = 32.0;

// Attenuation: 1 at the light position, falling to 0 at light_radius
float fn_get_attenuation(vec3 pos) {
    vec3 direction = u_light_pos - pos;
    float value = dot(direction, direction) * u_light_inv_range_square;
    return 1.0 - clamp(value, 0.0, 1.0);
}

// Converts a [0, 1] depth-buffer value to a linear view-space distance
// between near and far
float fn_get_linearize_depth(float depth) {
    return 2.0 * u_camera_near * u_camera_far /
        (u_camera_far + u_camera_near - (depth * 2.0 - 1.0) * (u_camera_far - u_camera_near));
}

// Clip-space position -> screen-space texture coordinates
vec2 fn_get_uv(vec4 pos) {
    return (pos.xy / pos.w) * 0.5 + 0.5;
}

// Decodes (and renormalizes) the view-space normal from the geometry pass
vec3 fn_get_view_normal(vec2 uv) {
    return normalize(texture2D(u_map_geometry, uv).xyz * 2.0 - 1.0);
}

// Reconstructs the view-space position of the pixel from the depth buffer
vec3 fn_get_view_pos(vec2 uv) {
    float depth = texture2D(u_map_depth, uv).x;
    depth = fn_get_linearize_depth(depth);
    return vec3(u_camera_view_param * (uv * 2.0 - 1.0) * depth, -depth);
}

void main() {
    // Screen-space coordinates of the current pixel
    vec2 uv = fn_get_uv(v_pos);
    vec3 normal = fn_get_view_normal(uv);
    vec3 pos = fn_get_view_pos(uv);
    float attenuation = fn_get_attenuation(pos);
    vec3 lightdir = normalize(u_light_pos - pos);
    // Diffuse term, clamped so that surfaces facing away receive no light
    float nl = max(dot(normal, lightdir), 0.0) * attenuation;
    // reflect(lightdir, normal) is the negated reflection vector and
    // normalize(pos) is the negated view vector, so their dot product is R.V
    vec3 reflectdir = reflect(lightdir, normal);
    float spec = pow(clamp(dot(normalize(pos), reflectdir), 0.0, 1.0), shininess);
    // rgb: diffuse light, a: specular term
    gl_FragColor = vec4(u_light_intensity * nl, spec * nl);
}
```

Result for one source.

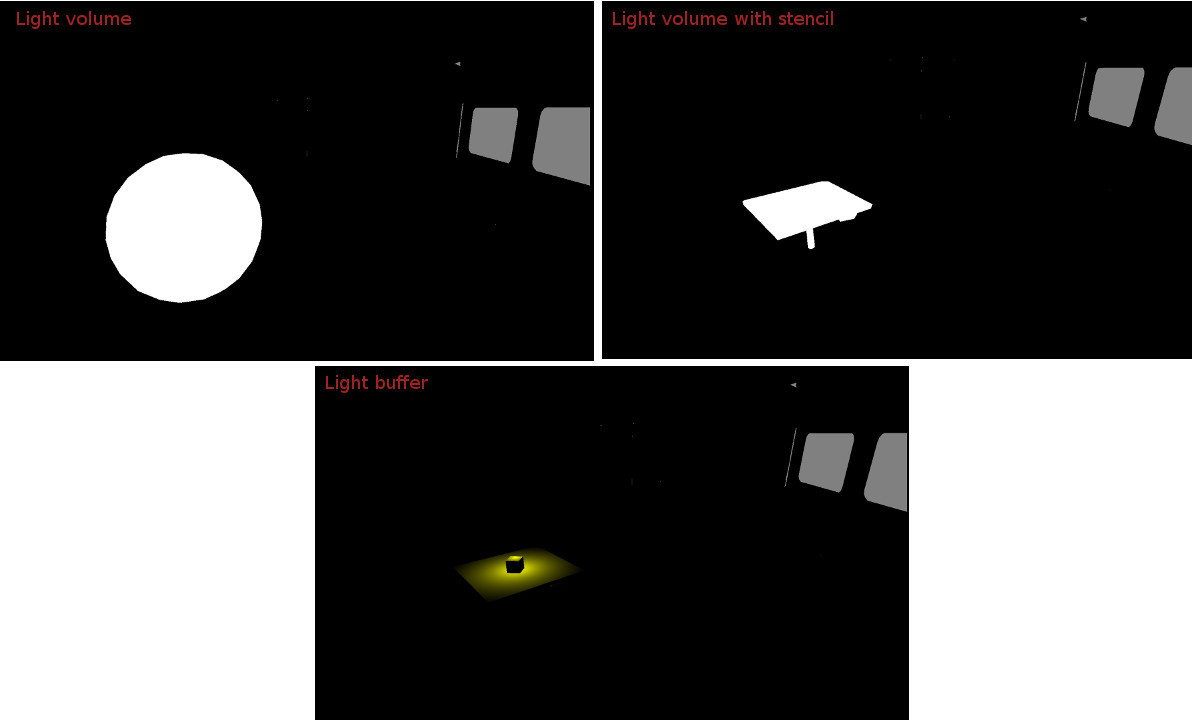

The left image shows the pixels for which lighting was computed, and the right image shows the pixels that actually received light. Far fewer pixels are lit than took part in the calculation.

To solve this problem, we use the stencil buffer to cut off the pixels that lie outside the light volume in depth.

The whole process of drawing a light source then looks like this:

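```cpp
// 0. Common state for all light sources
glEnable(GL_STENCIL_TEST);
glDepthMask(GL_FALSE);

// Stencil pass (for each light source)
// ------------------------------------------------------------------------
// 1. Test against the depth buffer from the geometry pass
glEnable(GL_DEPTH_TEST);
// 2. Disable color writes; only the stencil buffer is updated
glColorMask(GL_FALSE, GL_FALSE, GL_FALSE, GL_FALSE);
// 3. Clear the stencil buffer
glClear(GL_STENCIL_BUFFER_BIT);
// 4. The stencil test always passes; back faces that fail the depth test
//    increment the stencil value, front faces that fail it decrement it
glStencilFunc(GL_ALWAYS, 0, 0);
glStencilOpSeparate(GL_BACK, GL_KEEP, GL_INCR_WRAP, GL_KEEP);
glStencilOpSeparate(GL_FRONT, GL_KEEP, GL_DECR_WRAP, GL_KEEP);
// 5. Draw the light volume with the stencil shader
// ...
// 6. From now on, only pixels with a non-zero stencil value are processed
glStencilFunc(GL_NOTEQUAL, 0, 0xFF);
// 7. Disable the depth test
glDisable(GL_DEPTH_TEST);
// 8. Re-enable color writes
glColorMask(GL_TRUE, GL_TRUE, GL_TRUE, GL_TRUE);

// Lighting
// ----------------------------------------------------------------------
// 1. Enable face culling
glEnable(GL_CULL_FACE);
// 2. Cull front faces so that only the back faces of the volume are drawn
glCullFace(GL_FRONT);
// 3. Draw the light volume with the lighting shader
// ...
// 4. Restore the default culled face
glCullFace(GL_BACK);
// 5. Disable face culling
glDisable(GL_CULL_FACE);
```

```glsl
// Stencil pass: vertex shader
attribute vec3 a_vertex_pos;
uniform mat4 u_matrix_mvp;

void main() {
    gl_Position = u_matrix_mvp * vec4(a_vertex_pos, 1.0);
}

// Stencil pass: fragment shader (color writes are masked off)
precision lowp float;

void main() {
}
```

Result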

The stencil buffer is filled with face culling disabled, while the lighting is computed only for back faces. As a result, this approach also handles the case where the camera is inside the light volume.
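The stencil logic can be modeled per pixel on the CPU. The C++ sketch below is illustrative only, and the function names are hypothetical. It assumes the default `glDepthFunc(GL_LESS)`, window-space depths in [0, 1], and a stencil value cleared to 0 before each light. It covers three positions of the scene geometry relative to the volume: in front of it, inside it and behind it. It also covers the case where the camera is inside the volume, so the front faces are clipped.

```cpp
#include <cassert>
#include <cstdint>

// CPU model of the stencil marking for one pixel and one light volume.
// Assumes glDepthFunc(GL_LESS): a face fails the depth test when its depth
// is >= the scene depth stored by the geometry pass. has_front is false when
// the camera is inside the volume and the front faces are clipped away.
std::uint8_t stencil_after_marking(float scene_depth, bool has_front,
                                   float volume_front, float volume_back) {
    assert(scene_depth >= 0.0f && scene_depth <= 1.0f);
    std::uint8_t stencil = 0;  // glClear(GL_STENCIL_BUFFER_BIT)
    // Back face, depth fail: GL_INCR_WRAP
    if (volume_back >= scene_depth) ++stencil;
    // Front face, depth fail: GL_DECR_WRAP (unsigned arithmetic wraps the same way)
    if (has_front && volume_front >= scene_depth) --stencil;
    return stencil;
}

// The lighting draw uses glStencilFunc(GL_NOTEQUAL, 0, 0xFF)
bool pixel_is_lit(std::uint8_t stencil) {
    return stencil != 0;
}
```

Geometry in front of the volume makes both faces fail the depth test (+1 - 1 = 0). Geometry behind the volume makes both faces pass (0). Only geometry inside the volume leaves a non-zero value, and with the camera inside the volume the back face alone decides.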

Final pass

In the final pass, we draw all the objects once again, taking each pixel's illumination from the buffer built in the previous pass.
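The combination performed by the final-pass shader can be written out as plain arithmetic. The C++ sketch below is a CPU model with hypothetical names. The illumination texel stores the accumulated diffuse light in rgb and the specular term in a. The specular contribution is therefore tinted by the accumulated diffuse light color rather than by each light's own color, an approximation that follows from keeping a single specular channel.

```cpp
#include <cassert>

struct Color { float r, g, b; };

// Mirrors the final-pass fragment shader:
// diffuse * light.rgb + specular * (light.rgb * light.a)
Color compose(const Color& diffuse, const Color& specular,
              const Color& light, float light_specular) {
    assert(light_specular >= 0.0f && light_specular <= 1.0f);  // RGBA8 channel
    return { diffuse.r * light.r + specular.r * (light.r * light_specular),
             diffuse.g * light.g + specular.g * (light.g * light_specular),
             diffuse.b * light.b + specular.b * (light.b * light_specular) };
}
```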

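```glsl
// Final pass: vertex shader
attribute vec3 a_vertex_pos;
uniform mat4 u_matrix_mvp;
varying vec4 v_pos;

void main() {
    v_pos = u_matrix_mvp * vec4(a_vertex_pos, 1.0);
    gl_Position = v_pos;
}

// Final pass: fragment shader
// mediump: the clip-space coordinates in v_pos can exceed the guaranteed
// lowp range of (-2, 2)
precision mediump float;

uniform sampler2D u_map_light;   // illumination buffer from the lighting pass
uniform vec3 u_color_diffuse;
uniform vec3 u_color_specular;

varying vec4 v_pos;

// Clip-space position -> screen-space texture coordinates
vec2 fn_get_uv(vec4 pos) {
    return (pos.xy / pos.w) * 0.5 + 0.5;
}

void main() {
    vec4 color_light = texture2D(u_map_light, fn_get_uv(v_pos));
    vec3 color = color_light.rgb;  // accumulated diffuse light
    // color_light.a holds the accumulated specular term
    gl_FragColor = vec4(u_color_diffuse * color + u_color_specular * (color * color_light.a), 1.0);
}
```

The project source code can be found on GitHub.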

I would be glad to receive comments and suggestions (email: yegorov.alex@gmail.com).

Thanks!


Source: https://habr.com/ru/post/311730/
