How to stop debugging asynchronous code and start living

Andrei Salomatin (filipovskii_off)

New programming languages appear every day: Go, Rust, CoffeeScript, whatever. I decided that I, too, was ready to invent my own programming language, since the world obviously lacks a new one...

Ladies and gentlemen, today I present to you Schlecht! Script, the freaky programming language. We all need to start using it right now. It has everything we are used to: conditional operators, loops, functions, and higher-order functions. In general, it has everything a normal programming language needs.


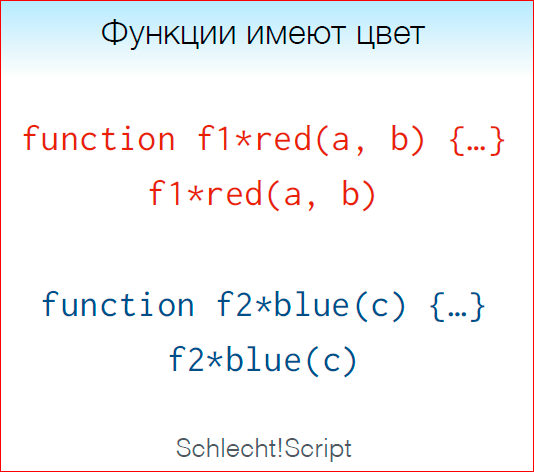

What is unusual about it, and may even put you off at first glance, is that in Schlecht! Script functions have a color.

That is, when you declare a function and when you call it, you explicitly specify its color.

There are two colors to choose from: red and blue.

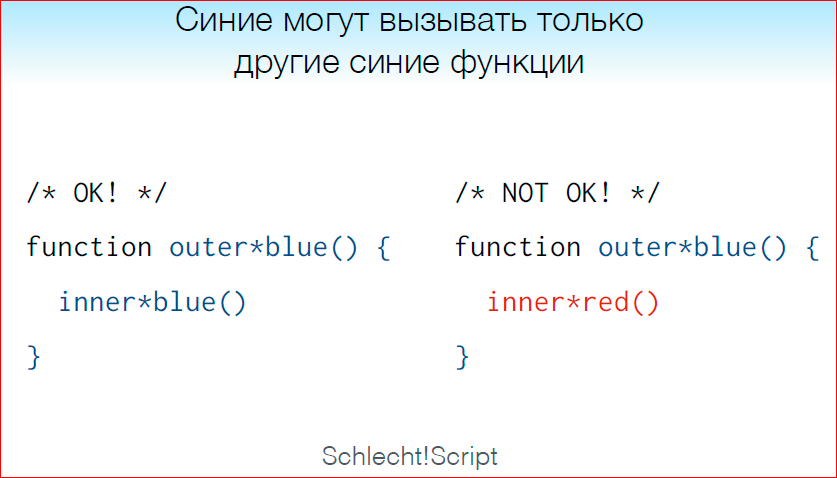

The important point: inside the blue functions, you can only call other blue functions. You cannot call red functions inside blue functions.

Inside the red functions, you can call both red and blue functions.

I decided that it should be so. Every language should be like this.

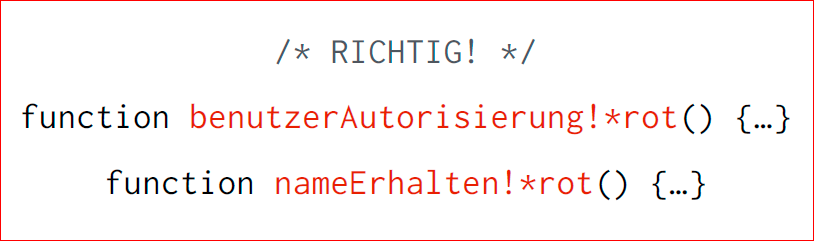

A subtle point: red functions are painful to write and to call! What do I mean by "painful"? I am learning German right now, and I decided that we should all call red functions only in German; otherwise the interpreter simply does not understand what you are trying to feed it and will not run it.

This is how you should write functions in German:

The “!” is obligatory: we are writing in German, after all.

How do you write code in such a language? There are two ways. One is to use only blue functions, which are painless to write, but then we cannot call red functions inside them. That approach will not work, because in a fit of inspiration I wrote half of the standard library as red functions, so, sorry...

A question for you: would you use such a language? Have I sold you on Schlecht! Script?

Well, you have no choice anyway. Sorry...

JavaScript

JavaScript is a great language; we all love it, and we have all gathered here because we love JavaScript. The problem is that JavaScript inherits some of the features of Schlecht! Script, and I certainly don't want to brag, but I think they stole a couple of my ideas.

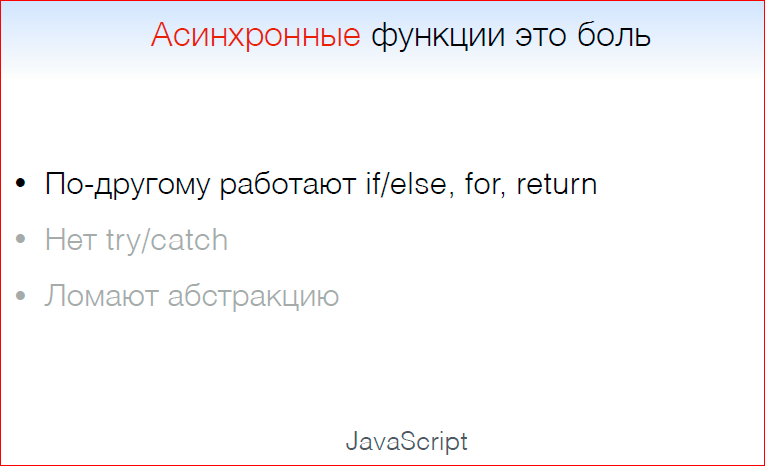

What exactly did it inherit? JavaScript has red and blue functions: red functions are asynchronous functions, and blue functions are synchronous ones. And the same chain holds... Red functions are painful in Schlecht! Script, and asynchronous functions are painful in JavaScript.

And inside blue functions we cannot use red functions. I will say more about this later.
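To make the red/blue analogy concrete, here is a minimal sketch in plain JavaScript (Node.js). The function names are made up for illustration; the point is that a synchronous caller of an `async` function only gets a Promise back, so actually using the result forces the caller to become asynchronous too.

```javascript
// Blue (synchronous) function: returns its value directly.
function addBlue(a, b) {
  return a + b;
}

// Red (asynchronous) function: the value is only available later.
async function addRed(a, b) {
  return a + b;
}

// A blue function can call other blue functions and use their results.
function totalBlue() {
  return addBlue(1, 2) + addBlue(3, 4);
}

// Calling a red function from a blue one only hands back a Promise,
// so the blue caller cannot use the number synchronously.
function totalMixed() {
  const pending = addRed(1, 2);
  return pending instanceof Promise; // true: a Promise, not 3
}

// To use the red result, the caller has to turn red as well.
async function totalRed() {
  return (await addRed(1, 2)) + addBlue(3, 4);
}
```

Both `totalBlue()` and `totalRed()` compute 10, but only the first one can be used as a plain value.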

Why does it hurt? Where does the pain come from when calling and writing asynchronous functions?

Conditional operators, loops, and return work differently for us. try/catch does not work, and asynchronous functions break abstraction.

A little more about each point.

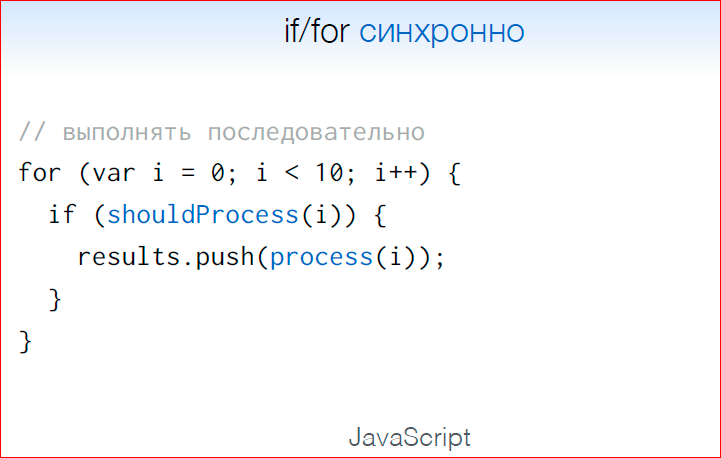

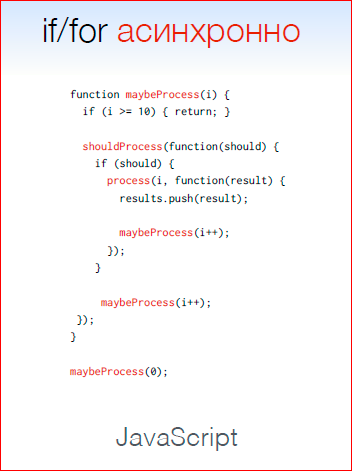

This is what the synchronous code looks like, where shouldProcess and process are synchronous functions: conditional operators work, for loops work, and in general everything is fine.

The same code, but asynchronous, looks like this:

Recursion has appeared, and we pass state through the function's parameters. In general, it looks downright unpleasant. try/catch does not work either: I think we all know that if we wrap an asynchronous block of code in try/catch, we will not catch the exception. We have to pass a callback or attach an error event handler; in short, there is no try/catch for us...
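The original slides are not reproduced here, so the following is a hedged reconstruction of the idea rather than the speaker's code. `shouldProcess` and `process` get trivial hypothetical bodies (keep even numbers, multiply by 10), and they are suffixed `Sync`/`Async` to avoid shadowing Node's global `process`; the callback versions defer with `setImmediate` to simulate I/O. Note how the `for` loop turns into recursion, with the index and the results passed along as parameters.

```javascript
// Synchronous helpers: they return their results directly.
function shouldProcessSync(item) {
  return item % 2 === 0;
}
function processSync(item) {
  return item * 10;
}

// An ordinary loop with an ordinary if.
function processAllSync(items) {
  const results = [];
  for (const item of items) {
    if (shouldProcessSync(item)) {
      results.push(processSync(item));
    }
  }
  return results;
}

// Callback (error-first) versions of the same helpers.
function shouldProcessAsync(item, callback) {
  setImmediate(() => callback(null, item % 2 === 0));
}
function processAsync(item, callback) {
  setImmediate(() => callback(null, item * 10));
}

// The loop becomes recursion; loop state travels through parameters,
// and every step must forward errors by hand.
function processAllAsync(items, callback, index = 0, results = []) {
  if (index >= items.length) {
    return callback(null, results);
  }
  shouldProcessAsync(items[index], (err, ok) => {
    if (err) return callback(err);
    if (!ok) return processAllAsync(items, callback, index + 1, results);
    processAsync(items[index], (err2, value) => {
      if (err2) return callback(err2);
      results.push(value);
      processAllAsync(items, callback, index + 1, results);
    });
  });
}
```

For the input `[1, 2, 3, 4]` both versions produce `[20, 40]`; only the shape of the code differs.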

And asynchronous functions break abstraction. What do I mean? Imagine that you wrote a cache: an in-memory user cache. You have a function that reads from this cache, and it is naturally synchronous, because everything is in memory. Tomorrow thousands, millions, billions of users come to you, and you need to move this cache to Redis. You move the cache to Redis, and the function becomes asynchronous, because with Redis we can only read asynchronously. Accordingly, the entire call stack above your synchronous function has to be rewritten, because now the whole stack becomes asynchronous. Any function that depended on the cache read function is now asynchronous too.
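A sketch of how the cache change ripples upward. All names are hypothetical, and `fakeRemoteGet` is only a stand-in for a network client (it is not the real Redis API); it resolves after a short timer to mimic a remote read.

```javascript
// Before: an in-memory user cache, read synchronously.
const memoryCache = new Map([["42", { id: "42", name: "Ada" }]]);

function getCachedUser(id) {
  return memoryCache.get(id);
}

// A caller higher up the stack can stay synchronous.
function greetUser(id) {
  const user = getCachedUser(id);
  return user ? `Hello, ${user.name}` : "Hello, stranger";
}

// After: the cache lives in a remote store. fakeRemoteGet is a
// hypothetical stand-in for a network client that answers later.
function fakeRemoteGet(key) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(memoryCache.get(key)), 10);
  });
}

async function getCachedUserRemote(id) {
  return fakeRemoteGet(id);
}

// Every caller of the cache now has to become async as well.
async function greetUserRemote(id) {
  const user = await getCachedUserRemote(id);
  return user ? `Hello, ${user.name}` : "Hello, stranger";
}
```

The greeting logic did not change at all, yet `greetUserRemote` (and anything that calls it) had to switch from returning a string to returning a Promise.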

In short, when it comes to asynchrony in JavaScript, things are rather sad.

But why do we keep talking about asynchrony? Let's finally talk a little about me.

I came to save you all. Well, I'll try to.

My name is Andrei, and I work at Productive Mobile, a startup in Berlin. I help organize MoscowJS and co-host RadioJS. I am very interested in asynchrony, and not only in JavaScript: I believe that, in principle, it is the defining feature of a language. The way a language handles asynchrony determines its success and how pleasant and comfortable it is for people to work with.

Speaking of asynchrony specifically in JavaScript, it seems to me that there are two scenarios we constantly deal with: handling multiple events and handling single asynchronous operations.

Multiple events are, for example, DOM events or a connection to a server: something that emits many events of several types.

A single operation is, for example, a database read. A single asynchronous operation returns either one result or an error. There are no other options.

With these two scenarios in mind, it is interesting to speculate: asynchrony is bad, everything is sad... So what do we actually want? What would ideal asynchronous code look like?

I think we want control flow. We want conditional operators and loops to work in asynchronous code the same way they do in synchronous code.

We want exception handling. Why do we need try/catch if we cannot use it with asynchronous operations? That is just weird.
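A small Node.js experiment showing why try/catch does not help here: the error is thrown from a timer callback after the `try` block has already exited. The `process.once("uncaughtException", ...)` hook is used only to observe the escaped error for the demonstration; it is not a recommended way to handle errors, and the function name is made up.

```javascript
// Reports whether a try/catch around setTimeout sees an error that the
// timer callback throws later.
function tryCatchAroundTimer(onDone) {
  let caughtByTryCatch = false;

  // Node's process-level hook receives errors nobody else caught.
  // Used here only to observe what happened.
  process.once("uncaughtException", (err) => {
    onDone({ caughtByTryCatch, escapedError: err.message });
  });

  try {
    setTimeout(() => {
      throw new Error("boom"); // runs after the try block has finished
    }, 0);
  } catch (err) {
    caughtByTryCatch = true; // never reached: nothing was thrown synchronously
  }
}
```

Calling `tryCatchAroundTimer` reports `caughtByTryCatch: false` and `escapedError: "boom"`: the catch clause never ran.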

And, of course, it would be nice to have a single interface. Why should an asynchronous function be written and called differently from a synchronous one? It should not be.

That is what we want.

And what do we have today, and what tools will we have in the future?

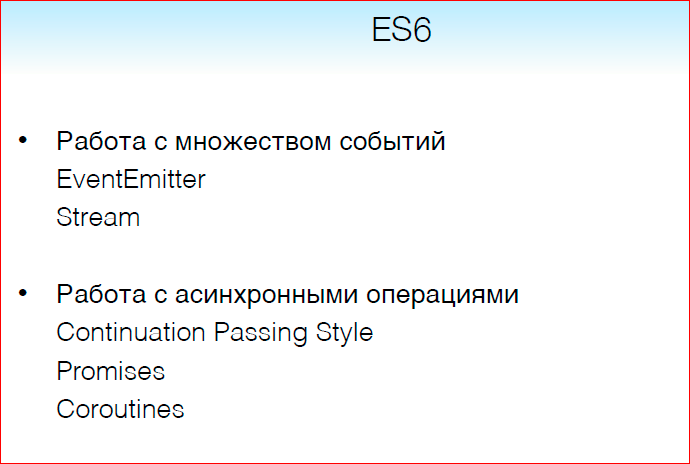

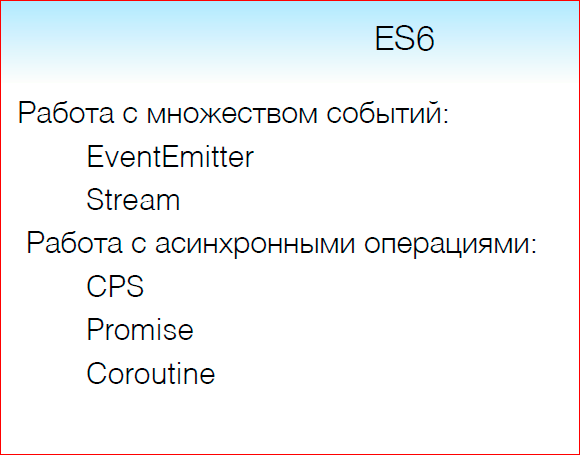

If we are talking about ECMAScript 6 (which is mostly what I am going to talk about today), we have EventEmitter and Stream for working with multiple events, and for single asynchronous operations we have Continuation Passing Style (also known as callbacks), Promises, and Coroutines.

In ECMAScript 7, we will have Async Generators for working with multiple events and Async/Await for working with single asynchronous operations.

That is what I will talk about.

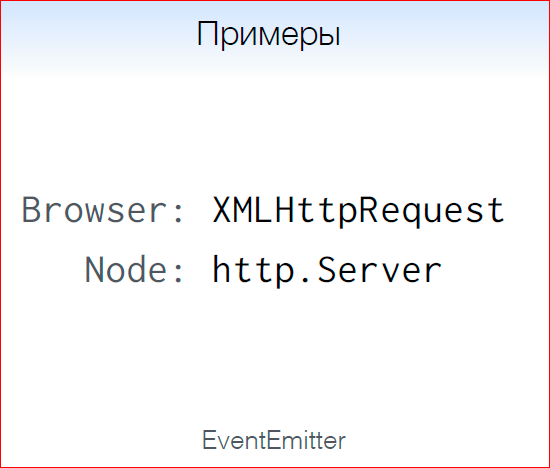

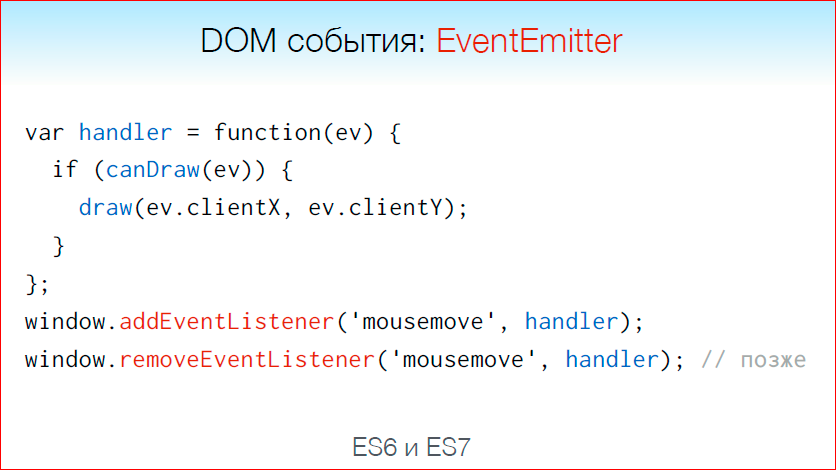

Let's start with what ECMAScript 6 gives us for working with multiple asynchronous events, such as mouse events or keystrokes. We have the EventEmitter pattern, which is implemented in Node.js and in the browser. It appears in almost any API where we work with multiple events. EventEmitter lets us create an object that emits events and attach handlers for each type of event.

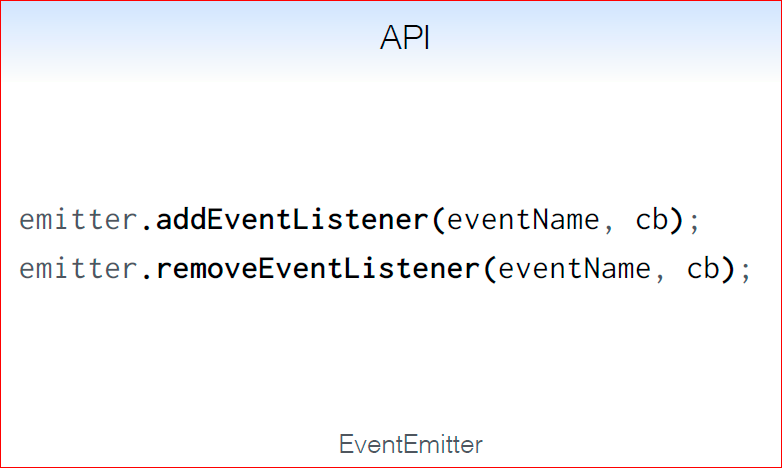

The interface is very simple: we can add an event listener and remove an event listener by event name, passing a callback.
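A minimal sketch using Node's built-in `events` module; in the browser, DOM nodes expose the same idea as `addEventListener`/`removeEventListener`. The `uploader` object and its `progress` event are illustrative, not part of any real API.

```javascript
const { EventEmitter } = require("events");

// An object that emits events; handlers are registered per event name.
const uploader = new EventEmitter();
const log = [];

function onProgress(percent) {
  log.push(`progress ${percent}`);
}

uploader.addListener("progress", onProgress); // alias: uploader.on(...)
uploader.emit("progress", 50);
uploader.emit("progress", 100);

// Remove the handler using the event name and the same function reference.
uploader.removeListener("progress", onProgress); // alias: uploader.off(...)
uploader.emit("progress", 150); // no listener any more, so nothing is logged

// log now holds ["progress 50", "progress 100"]
```

Removal needs the exact function reference that was added, which is why the handler is a named function rather than an inline arrow.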

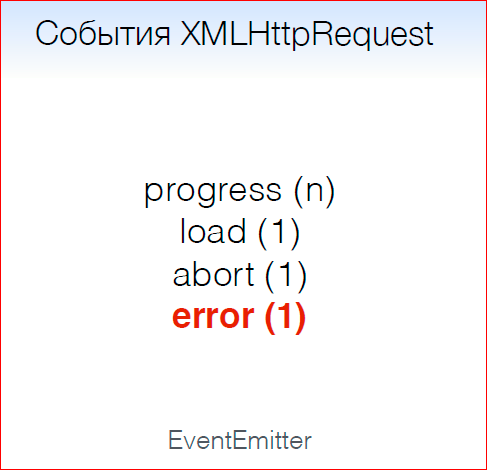

Take XMLHttpRequest, for example. When I talk about many events, I mean that we can get many progress events: as we load data with an AJAX request, progress events keep firing, and at the end a single load, abort, or error event fires.

The error event is special: it is the universal way for EventEmitters and Streams to notify the user about an error.
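In Node.js specifically, the "error" event gets special treatment: if it is emitted while no "error" listener is attached, `emit` throws the error. A sketch of both cases (the function names are illustrative):

```javascript
const { EventEmitter } = require("events");

// No "error" listener: emit() throws the Error object itself.
function emitWithoutHandler() {
  const emitter = new EventEmitter();
  try {
    emitter.emit("error", new Error("connection lost"));
    return "not thrown";
  } catch (err) {
    return `thrown: ${err.message}`;
  }
}

// With a listener attached, the error is delivered to it instead.
function emitWithHandler() {
  const emitter = new EventEmitter();
  let received = null;
  emitter.on("error", (err) => {
    received = err.message;
  });
  emitter.emit("error", new Error("connection lost"));
  return received;
}
```

This is why forgetting an "error" handler on an EventEmitter or stream can crash a Node.js process.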

There are many implementations:

Only a few are listed here; at the end of the talk there will be a link to all of these implementations.

It is important to note that EventEmitter is built into Node.js by default.

So this is what we have, more or less as a standard, in browser APIs and in Node.js.

What else do we have for working with multiple events? Streams.

A stream is a flow of data. What kind of data? It can be binary data (for example, the contents of a file), text data, objects, or events. The most popular examples are:

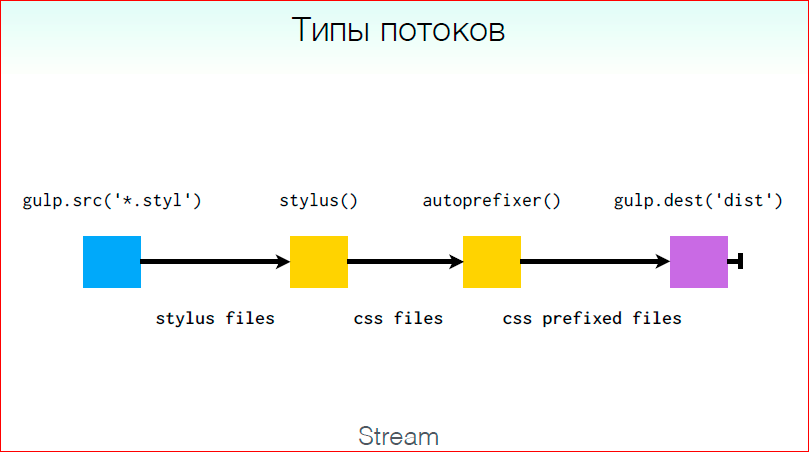

There are several types of streams:

Here we look at a chain of conversions from Stylus files to CSS files with autoprefixer added, because we all love Andrei Sitnik and his autoprefixer.

You can see that we have several types of streams. The source stream is gulp.src, which reads files and emits file objects, which then go to transform streams. The first transform stream turns the Stylus file into CSS, and the second one adds prefixes. The last type is a consumer stream, which accepts these objects, writes something to disk, and emits nothing.

So we have 3 types of streams: source, transform, and consumer. These patterns appear everywhere, not only in gulp but also when observing DOM events: there are streams that emit DOM events, streams that transform them, and something that consumes these DOM events and produces a specific result.

This is what can be called a pipeline. With streams we can build chains where an object is placed at the beginning of the pipeline and passes through a chain of transformations; at each station, people change, add, or remove something, and eventually we get a car.
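The source, transform, and consumer roles can be sketched with Node's built-in `stream` module. This is not gulp: the "files" are plain objects, and `addPrefix` is a hypothetical stand-in for real transforms such as the Stylus or autoprefixer steps. `build` is an illustrative helper that wires the three together with `stream.pipeline`.

```javascript
const { Readable, Transform, Writable, pipeline } = require("stream");

// Source stream: emits objects (Readable.from uses object mode by default).
function createSource(files) {
  return Readable.from(files);
}

// Transform stream: changes each object as it passes through.
function addPrefix(prefix) {
  return new Transform({
    objectMode: true,
    transform(file, _encoding, done) {
      done(null, { ...file, contents: prefix + file.contents });
    },
  });
}

// Consumer stream: accepts objects and emits nothing further.
function collectInto(target) {
  return new Writable({
    objectMode: true,
    write(file, _encoding, done) {
      target.push(`${file.path}: ${file.contents}`);
      done();
    },
  });
}

// Wire source -> transform -> consumer; callback(err, lines) runs at the end.
function build(files, prefix, callback) {
  const lines = [];
  pipeline(createSource(files), addPrefix(prefix), collectInto(lines), (err) =>
    callback(err, lines)
  );
}
```

`pipeline` also forwards an error from any stage to the final callback and tears the other streams down, which is easy to get wrong when chaining with `.pipe()` by hand.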

There are several implementations of streams, also known as Observables:

In Node.js, the built-in streams are Node Streams.

So we have EventEmitter and Stream. EventEmitter is available by default in all APIs. Stream is an add-on that we can use to unify the interface for handling multiple events.

If we apply the criteria by which we compare asynchronous APIs, then, by and large, return and loop operators do not work for us, try/catch of course does not work, and we are still far from a single interface shared with synchronous operations.

In general, things are not very good for working with multiple events in ECMAScript 6.

When it comes to single asynchronous operations, ECMAScript 6 gives us 3 approaches:

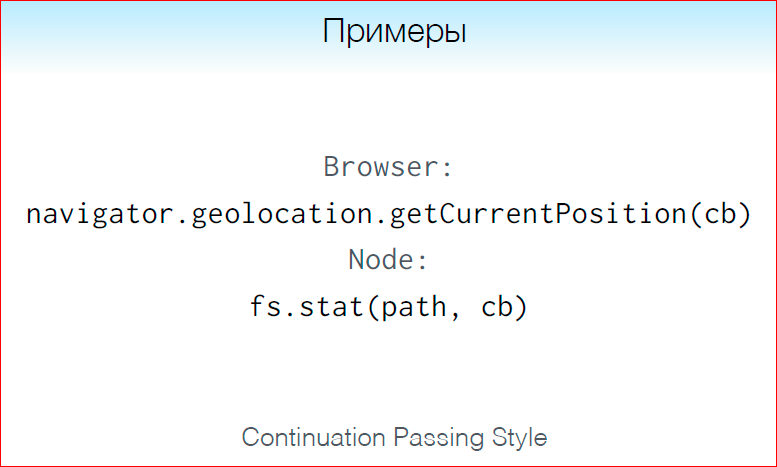

Continuation Passing Style, also known as callbacks.

I think you are all used to it. We make an asynchronous request and pass a callback, and the callback is called either with an error or with a result. This is a common approach, used both in the browser and in Node.
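A minimal continuation-passing sketch with a hypothetical `getUser`. By Node convention the callback's first argument is the error (or `null`) and the second is the result; `setTimeout` stands in for real I/O.

```javascript
// Hypothetical data source.
const users = { 1: { id: 1, name: "Ada" } };

// Continuation-passing style: instead of returning, call `callback` later.
function getUser(id, callback) {
  setTimeout(() => {
    const user = users[id];
    if (!user) {
      callback(new Error(`user ${id} not found`)); // failure: error first
    } else {
      callback(null, user); // success: null error, then the result
    }
  }, 0);
}

getUser(1, (err, user) => {
  if (err) {
    console.error(err.message);
    return;
  }
  console.log(user.name); // prints "Ada"
});
```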

I think you also understand the problems with this approach perfectly well.

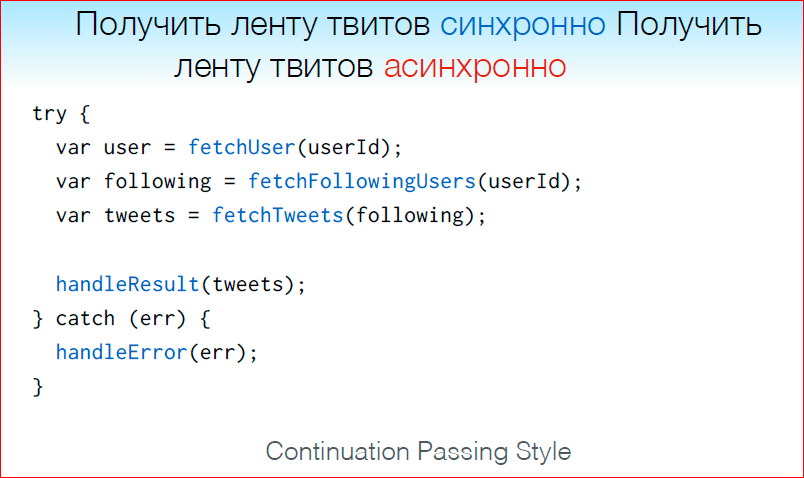

This is how we would get a user's tweet feed if all the functions were synchronous.

The same code, but asynchronous, looks like this:

Let me increase the font so it is easier to see. And a little more... And now we can clearly see that this is Schlecht! Script.

I told you they stole my idea.

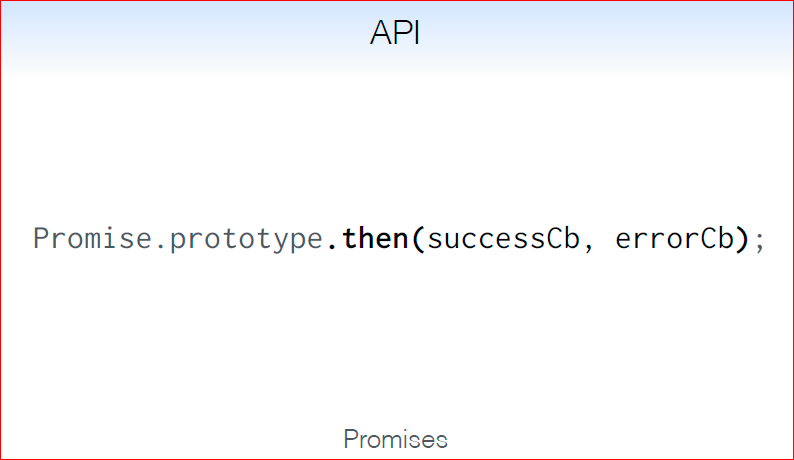

Continuation Passing Style is the standard API in Node.js and in the browser; we work with it all the time, but it is inconvenient. That is why we have Promises. A Promise is an object that represents an asynchronous operation.

A Promise is like a promise to do something in the future, asynchronously.

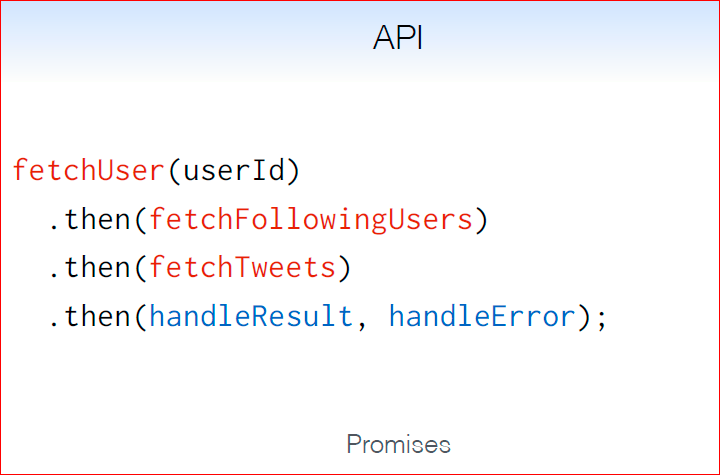

We can attach callbacks to an asynchronous operation with the then method, which is, in principle, the main method of the API. And, very importantly, we can chain Promises: we can call then sequentially, and each function passed to then can itself return a Promise. This is what a request for a user's Twitter feed looks like with Promises.
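A sketch of a Promise-based tweet feed, assuming hypothetical `getUser` and `getTweets` functions that return Promises (they are not a real Twitter API). Each `then` returns a new Promise, a callback may itself return a Promise that the chain waits for, and a single `catch` at the end handles a failure from any step.

```javascript
// Hypothetical Promise-returning data functions standing in for real I/O.
function getUser(name) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (name === "ada") resolve({ id: 1, name });
      else reject(new Error(`no user ${name}`));
    }, 0);
  });
}

function getTweets(userId) {
  return Promise.resolve([`tweet #1 by ${userId}`, `tweet #2 by ${userId}`]);
}

function getFeed(name) {
  return getUser(name)
    .then((user) => getTweets(user.id)) // returns a Promise; the chain waits
    .then((tweets) => tweets.map((t) => t.toUpperCase()))
    .then((tweets) => ({ tweets }))
    .catch((err) => ({ error: err.message })); // one place for all errors
}
```

`getFeed("ada")` resolves to the upper-cased tweets, while `getFeed("bob")` skips the remaining `then` steps and lands in `catch`.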

Compared with Continuation Passing Style, Promises are of course more convenient: they let us write much less boilerplate.

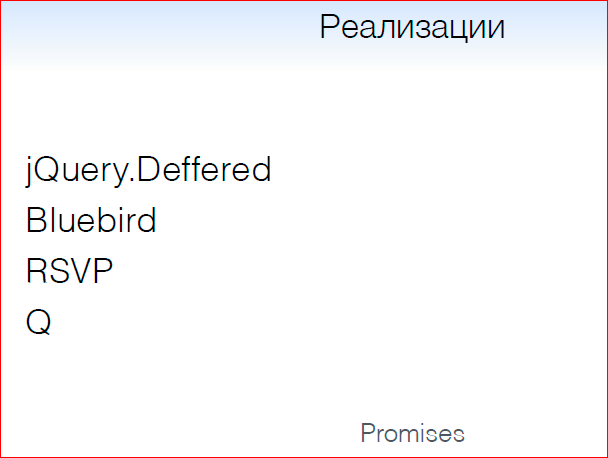

Continuation Passing Style is still the default in all APIs, in Node.js and in io.js, and they do not even plan to move to Promises, for several reasons. At first, many said the reason was performance. And that was true: 2013 benchmarks showed Promises lagging far behind callbacks. But with the arrival of libraries such as bluebird, we can confidently say this is no longer the case, because bluebird's Promises come close to callbacks in performance. An important point: why are Promises still not recommended for APIs? Because when you return Promises from your API, you impose an implementation.

All Promise libraries must comply with the standard, but when you return Promises, you also hand out your implementation: if you wrote your code with slow Promises and return slow Promises from your API, that will not be pleasant for your users. That is why callbacks are still recommended for external APIs.
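One common way to live with callback-based external APIs while still using Promises internally is Node's `util.promisify`, which wraps an error-first callback function. `readSetting` is a hypothetical library function; the hand-written wrapper below does the same job and shows what `promisify` saves you.

```javascript
const util = require("util");

// A callback-style (error-first) function, as a library might expose it.
function readSetting(key, callback) {
  setTimeout(() => {
    if (key === "theme") callback(null, "dark");
    else callback(new Error(`unknown setting ${key}`));
  }, 0);
}

// Inside the application: turn it into a Promise-returning function.
const readSettingAsync = util.promisify(readSetting);

// The same conversion written by hand, for comparison.
function readSettingManual(key) {
  return new Promise((resolve, reject) => {
    readSetting(key, (err, value) => (err ? reject(err) : resolve(value)));
  });
}
```

The library keeps its callback interface, and each consumer picks whatever Promise handling it prefers.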

There are masses of Promise implementations, and if you have not written your own, you are not a real JavaScript programmer. I did not write my own implementation of Promises, so I had to invent my own language.

So Promises give us somewhat less boilerplate, but they are still not that great.

What about Coroutines? This is where things get interesting. Imagine...

A funny story: we were at JSConf in Budapest, and there was a crazy guy who had programmed a quadcopter and something else in JavaScript, and half of what he tried to show us did not work. So he kept saying: “OK, now imagine... This quadcopter took off and everything worked...”.

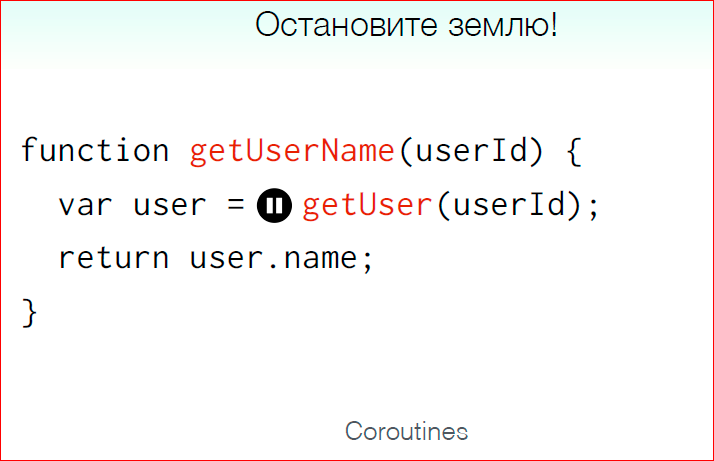

Imagine that we can pause a function at some point in its execution.

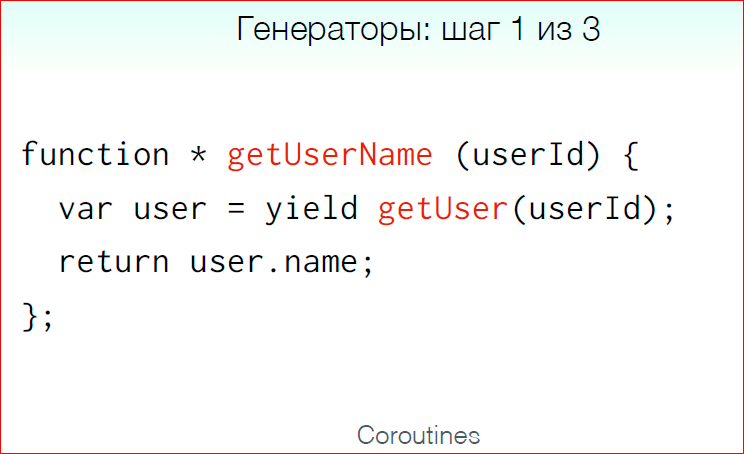

Here the function gets a user name: it goes to the database, gets the user object, and returns its name. Naturally, going to the database makes getUser asynchronous. What if we could pause getUserName at the moment getUser is called? We execute getUserName, reach getUser, and stop. getUser goes to the database, gets the object, and returns it to the function, and we continue execution. How cool would that be.

Coroutines give us exactly this possibility. A coroutine is a function that we can suspend and resume at any time. An important point: we do not stop the whole program.

This is not a blocking operation. We stop the execution of a specific function at a specific point.
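Generators are the ES6 building block for this. In the sketch below (names illustrative), calling the generator function runs nothing yet; each `next()` runs the body up to the following `yield`, and the argument passed to `next()` becomes the value of the paused `yield` expression. Between the calls, the rest of the program keeps running as usual.

```javascript
// A generator function (note the *) can pause at each yield.
function* steps() {
  const a = yield "first pause"; // paused here until next(value)
  const b = yield `got ${a}`;    // paused again
  return a + b;
}

const it = steps();    // creates the generator; the body has not run yet
const r1 = it.next();  // runs up to the first yield
const r2 = it.next(2); // resumes: 2 becomes `a`, runs to the second yield
const r3 = it.next(3); // resumes: 3 becomes `b`, the function returns

// r1 is { value: "first pause", done: false }
// r2 is { value: "got 2", done: false }
// r3 is { value: 5, done: true }
```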

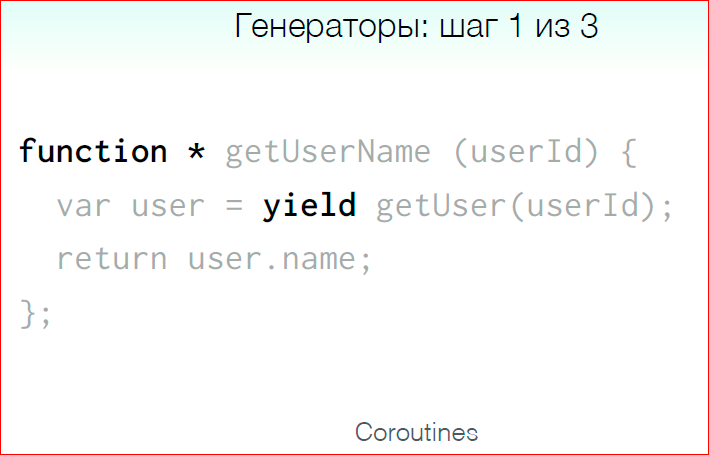

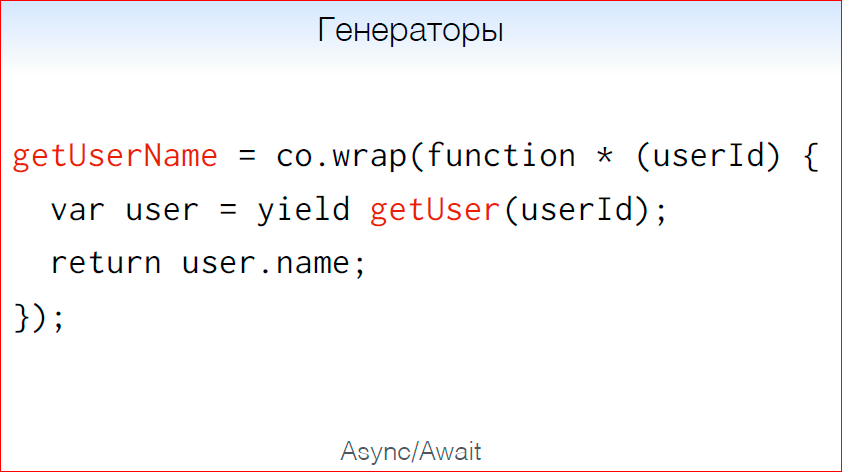

What does getUserName look like with JavaScript generators? We add “*” to the function declaration to say that the function returns a generator. We use the keyword "yield" where we want to pause the function. And it is important to remember that getUser returns a Promise here.

Since generators were originally invented for creating lazy sequences in JavaScript, using them for asynchronous code is, by and large, a hack. So we need libraries to compensate for this.

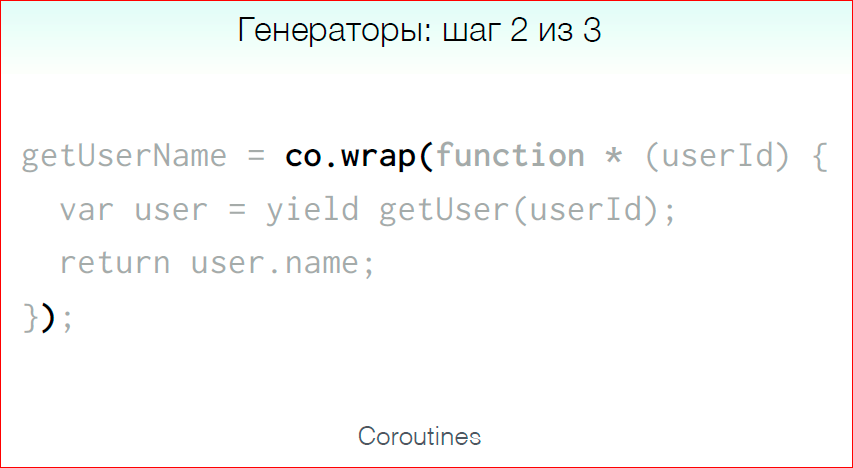

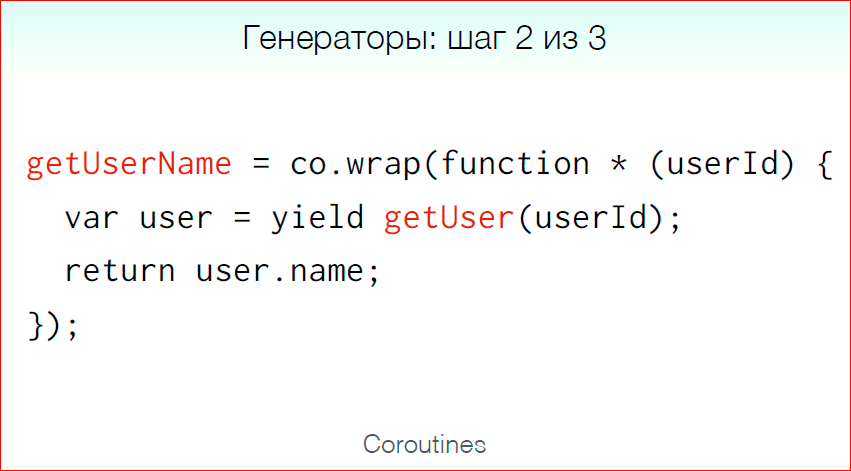

Here we use “co” to wrap the generator, and it gives us back an asynchronous function.

In total, here is what we get:

We have a function within which we can use if, for, and other operators.

To return a value, we simply write return, just like in a synchronous function. We can use try/catch inside, and it actually catches exceptions.

If the Promise returned by getUser is rejected with an error, that error is thrown as an exception.

The getUserName function returns a Promise, so we can work with it like any other Promise: attach callbacks with then, chain it, and so on.
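To show what a library like co does under the hood, here is a simplified co-style runner. It is a sketch, not co's actual source (co also accepts thunks, arrays, and objects of Promises), and `run` and `getUser` are hypothetical names. The runner resumes the generator with each resolved value and throws rejections back into it, which is why try/catch works inside the generator.

```javascript
// Minimal co-style runner: drives a generator that yields Promises.
function run(generatorFn) {
  return function (...args) {
    const gen = generatorFn.apply(this, args);
    return new Promise((resolve, reject) => {
      function step(method, arg) {
        let result;
        try {
          result = gen[method](arg); // resume with a value, or throw into it
        } catch (err) {
          return reject(err); // the generator did not catch the error
        }
        if (result.done) return resolve(result.value);
        Promise.resolve(result.value).then(
          (value) => step("next", value),
          (err) => step("throw", err)
        );
      }
      step("next", undefined);
    });
  };
}

// Hypothetical Promise-returning database lookup.
function getUser(id) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (id === 1) resolve({ id, name: "Ada" });
      else reject(new Error("not found"));
    }, 0);
  });
}

const getUserName = run(function* (id) {
  try {
    const user = yield getUser(id); // pauses until the Promise settles
    return user.name;
  } catch (err) {
    return `unknown (${err.message})`; // a rejection arrives as an exception
  }
});
```

`getUserName(1)` resolves to "Ada", and `getUserName(2)` resolves to "unknown (not found)" because the rejection was caught inside the generator.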

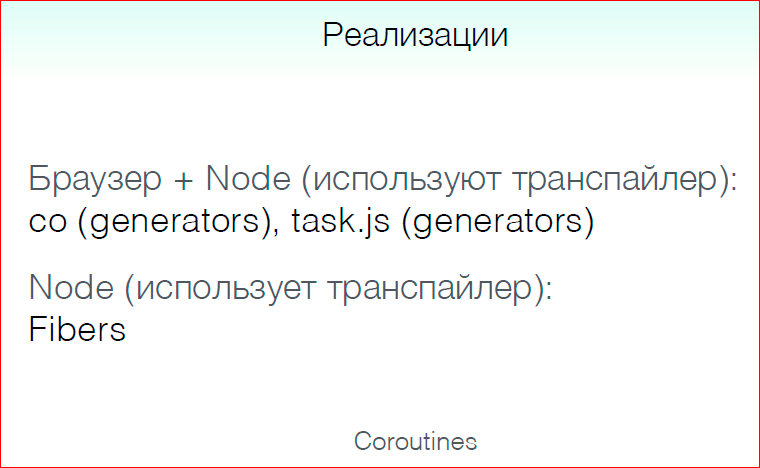

But, as I said, using generators for asynchronous code is a hack. So exposing them in an external API is undesirable. Using them inside an application is fine, though, so go ahead if you are able to transpile your code.

There are many implementations. Some use generators, which are already part of the standard; there are also Fibers, which work in Node.js and do not use generators, but they have their own problems.

In general, this is the third approach to single asynchronous operations. It is still a hack, but we can already write code that is close to synchronous: we can use conditional statements, loops, and try/catch blocks.

So ECMAScript 6 brings us a little closer to the desired result for single asynchronous operations, but the single-interface problem is still not solved, even with Coroutines, because we need to write a special “*” and use the “yield” keyword.

So, in ECMAScript 6 we have EventEmitter and Stream for working with multiple events, and CPS, Promises, and Coroutines for working with single asynchronous operations. All of this seems great, but something is missing. I want more, something bold, brave, and new; I want a revolution.

And the people who write ES7 decided to give us a revolution and brought us Async/Await and Async Generators.

Async/Await is a standard that lets us work with single asynchronous operations, such as database queries.

This is how we wrote getUserName with generators:

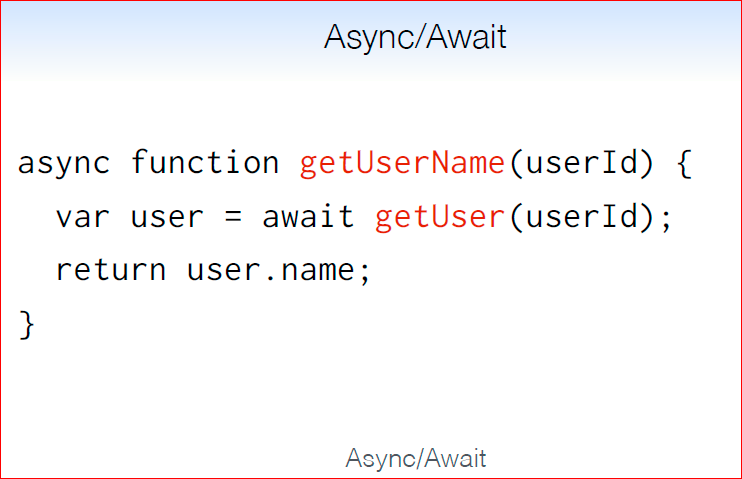

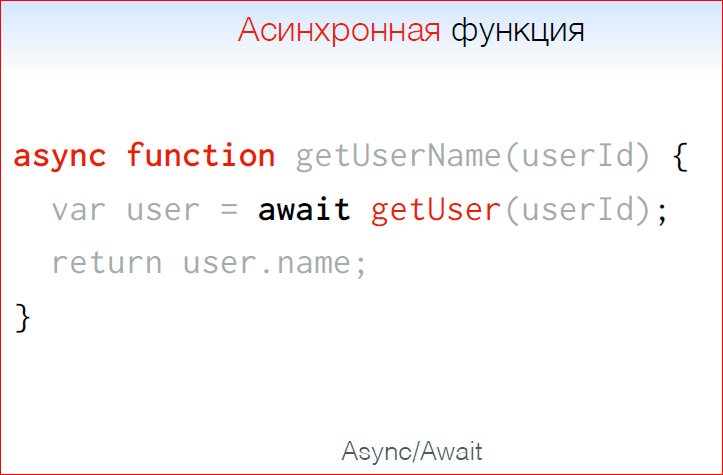

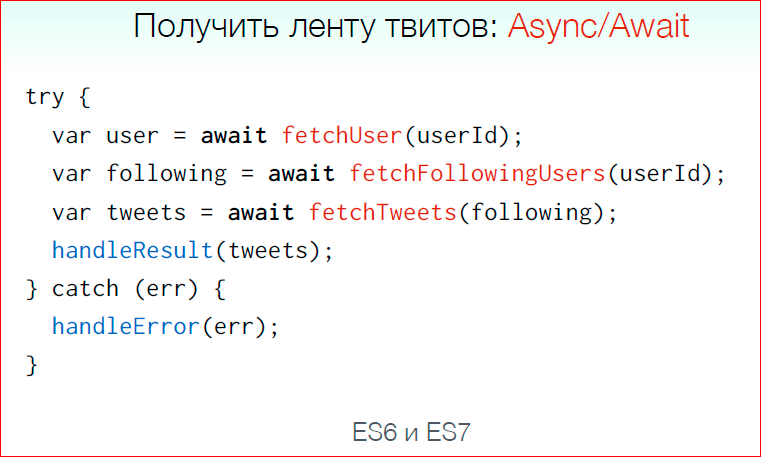

And this is what the same code looks like with Async/Await:

Everything is very similar; by and large, this is a step from a hack to a standard. Here we have the "async" keyword, which says that the function is asynchronous and will return a Promise. Inside an async function we can use the “await” keyword wherever we get a Promise: the function pauses and waits for that Promise to settle.

And conditional operators, loops, and try/catch work for us too; that is, asynchronous functions are legalized in ES7. Now we say explicitly that a function is asynchronous by adding the "async" keyword. In principle, this is not so bad, but again, we do not have a single interface.
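The same getUserName written with async/await, under the same assumption of a hypothetical Promise-returning `getUser` (async/await was ultimately standardized in ES2017). The illustrative `getNames` helper shows an ordinary `for` loop and `if` working across `await`.

```javascript
// Hypothetical Promise-returning database lookup.
function getUser(id) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (id === 1) resolve({ id, name: "Ada" });
      else reject(new Error("not found"));
    }, 0);
  });
}

// `async` marks the function as asynchronous: it always returns a Promise.
async function getUserName(id) {
  try {
    const user = await getUser(id); // pauses until the Promise settles
    return user.name;
  } catch (err) {
    return `unknown (${err.message})`; // a rejection is thrown here
  }
}

// Plain loops and conditionals work across await.
async function getNames(ids) {
  const names = [];
  for (const id of ids) {
    if (id > 0) {
      names.push(await getUserName(id));
    }
  }
  return names;
}
```

`getNames([1, -1, 2])` skips `-1`, looks up the other two ids one after another, and resolves to `["Ada", "unknown (not found)"]`.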

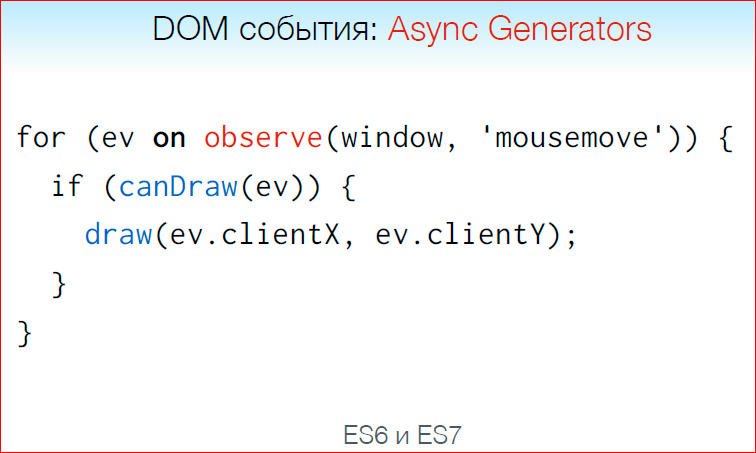

What about multiple events? Here we have a standard called Async Generators.

What is a collection of things? How do we work with collections in JavaScript?

We work with collections using loops, so let's work with collections of events using loops as well.

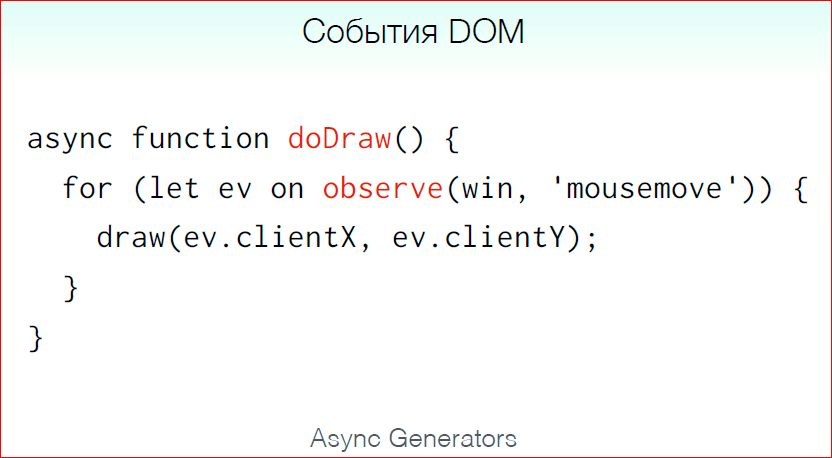

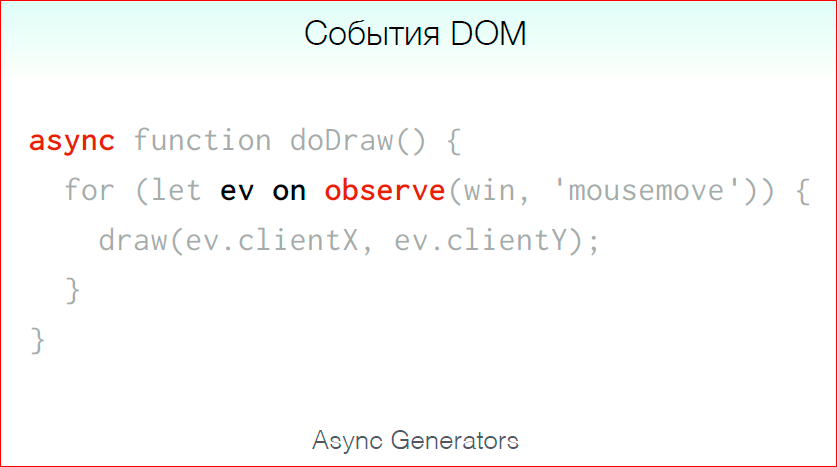

Inside an asynchronous function, we can use the “for ... on” construct, which lets us iterate over asynchronous collections. Sort of.

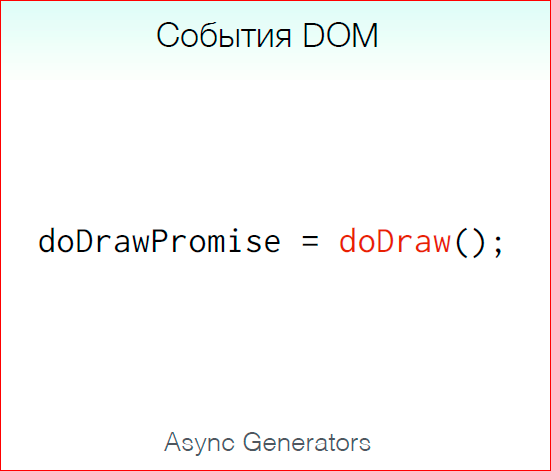

In this example, observe returns something we can iterate over: each time the user moves the mouse, we get a “mousemove” event, enter the loop body, and handle the event somehow. In this case, we draw a line on the screen.
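The `for ... on` syntax shown in the talk was a proposal at the time; the form that was eventually standardized (ES2018) is `for await...of`. Here is a sketch in Node.js, where `events.on(emitter, name)` turns an EventEmitter into an async iterable and plays the role of `observe`. The mousemove events are simulated with plain objects, and the hypothetical `drawLine` only records points instead of drawing.

```javascript
const { on } = require("events");

// Plays the role of observe(): iterate over "mousemove" events as they arrive.
async function drawLine(surface, maxPoints) {
  const points = [];
  // events.on yields the emit() arguments as an array, hence [event].
  for await (const [event] of on(surface, "mousemove")) {
    // A real app would draw here; this sketch just records the point.
    points.push(`${event.x},${event.y}`);
    if (points.length >= maxPoints) break; // leaving the loop unsubscribes
  }
  return points;
}
```

The loop body reads like synchronous code, and `break` doubles as "stop listening", much like ending a stream.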

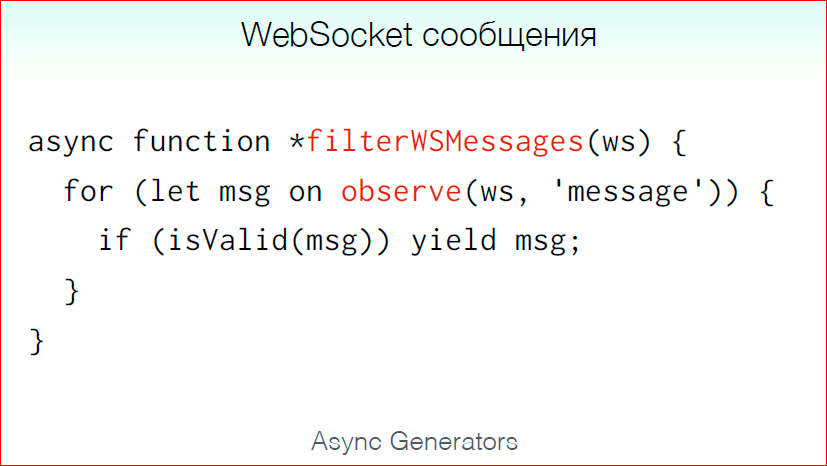

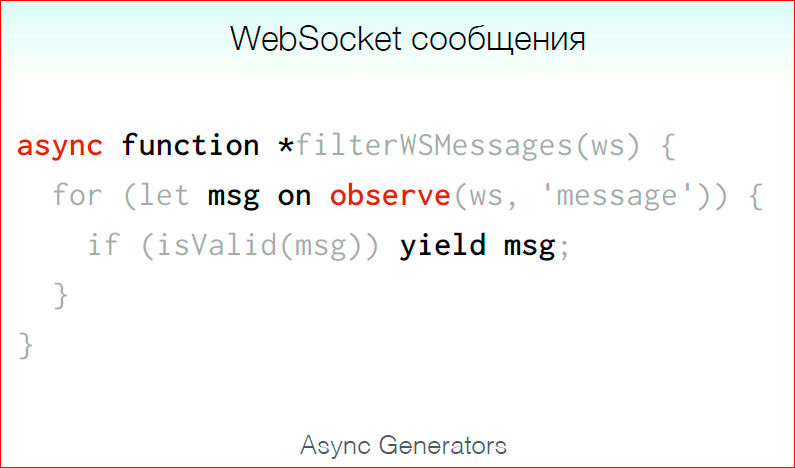

Since this is an asynchronous function, it is important to understand that it returns a Promise. But what if we want to return a set of values, for example, to process messages from a web socket and filter them? That is, a collection comes in and a collection goes out. This is where asynchronous generators help us. We write "async function *" to say that the function is asynchronous and returns a collection of values.

In this case, we observe the message event on the web socket, and every time it occurs, we perform some check; if the check passes, we add this message to the returned collection.

And all of this happens asynchronously. Messages do not accumulate; they are returned as they arrive. And here all our conditional statements, loops, and try/catch work as well.
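An async generator that filters web socket messages, sketched in Node.js. The socket is simulated with an EventEmitter emitting "message" events, and the "close" message that ends the stream is a hypothetical convention for this example. `collect` is a small illustrative helper that drains any async iterable into an array.

```javascript
const { on } = require("events");

// `async function*` can both await and yield: it consumes one stream of
// values and produces another, handing each value on as it arrives.
async function* filterMessages(socket, isInteresting) {
  for await (const [message] of on(socket, "message")) {
    if (message === "close") return;           // hypothetical end marker
    if (isInteresting(message)) yield message; // pass it on immediately
  }
}

// Drain any async iterable into an array.
async function collect(asyncIterable) {
  const out = [];
  for await (const item of asyncIterable) {
    out.push(item);
  }
  return out;
}
```

Because the generator yields each message as soon as it passes the check, a consumer sees "chat:" messages one by one rather than as a batch at the end.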

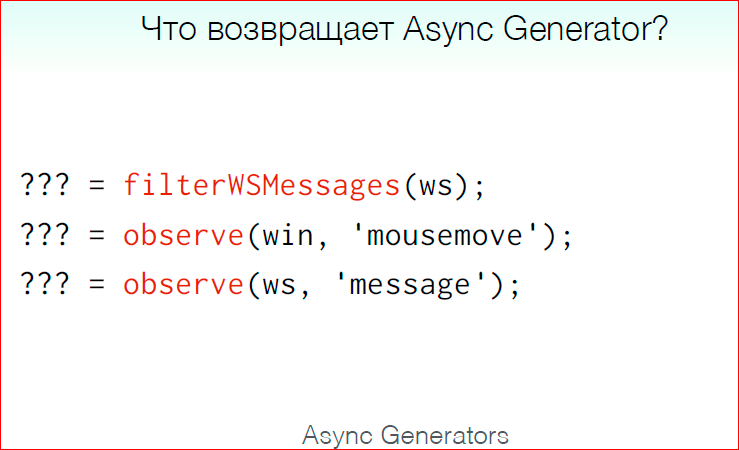

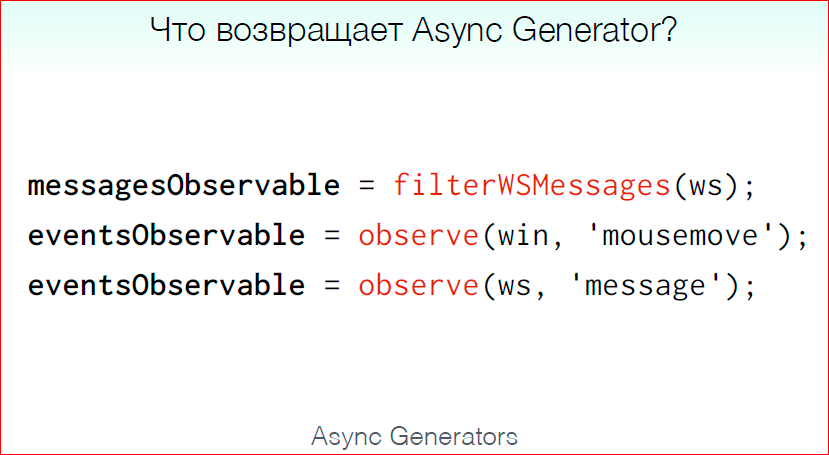

Question: What does filterWSMessages return?

It is definitely not a Promise, because it is some kind of collection... But it is not an array either.

Going further: what do these observe calls that generate events return?

They return so-called Observable objects. It is a new word, but by and large Observables are streams. So the circle closes.

In total, we have Async/Await for single asynchronous operations and Async Generators for multiple events.

Let's do a short retrospective of where we started and where we ended up.

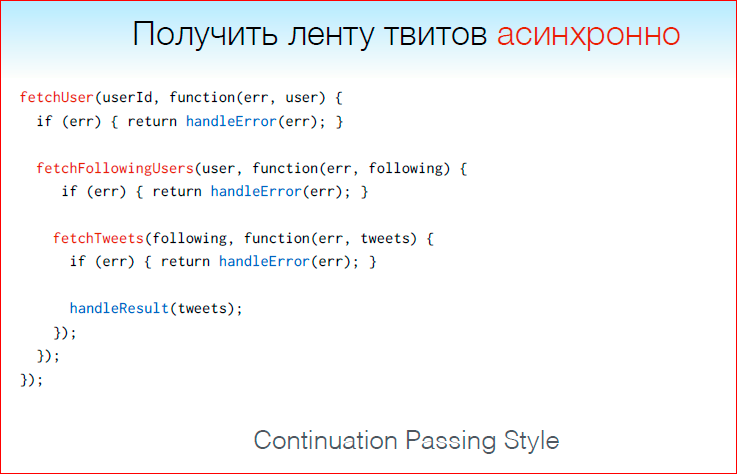

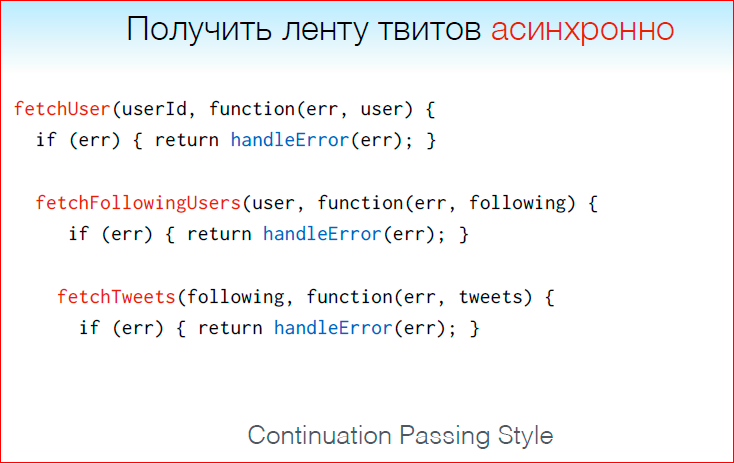

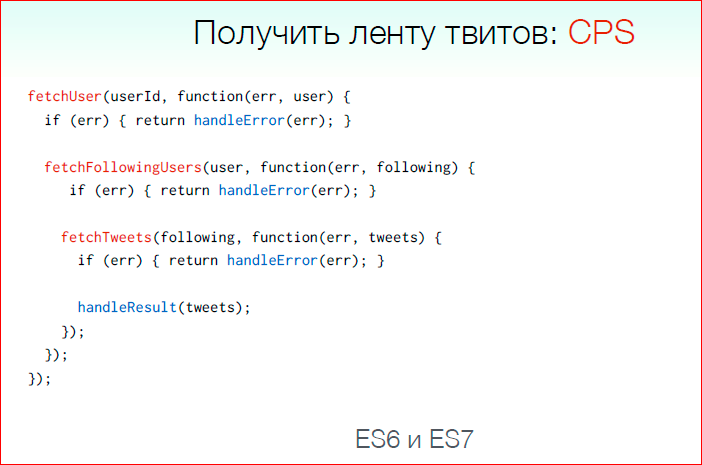

To get a feed of tweets, we would write the following code in CPS:

Lots of boilerplate, almost entirely manual error handling, and, in general, not very pleasant.
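For the retrospective, a sketch of a CPS tweet feed with hypothetical callback-style `getUser` and `getTweets` (not a real Twitter API). Each step nests one level deeper, and every callback has to check and forward errors by hand.

```javascript
// Hypothetical callback-style data functions standing in for real I/O.
function getUser(name, callback) {
  setTimeout(() => {
    if (name === "ada") callback(null, { id: 1, name });
    else callback(new Error(`no user ${name}`));
  }, 0);
}

function getTweets(userId, callback) {
  setTimeout(() => callback(null, [`tweet #1 by ${userId}`, `tweet #2 by ${userId}`]), 0);
}

function getFeed(name, callback) {
  getUser(name, (err, user) => {
    if (err) return callback(err); // forward errors manually...
    getTweets(user.id, (err2, tweets) => {
      if (err2) return callback(err2); // ...at every level
      let formatted;
      try {
        formatted = tweets.map((t) => t.toUpperCase());
      } catch (err3) {
        return callback(err3); // synchronous errors need their own catch
      }
      callback(null, formatted);
    });
  });
}
```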

With Promises, the code looks like this:

The boilerplate is smaller, and we can handle errors in one place, which is already good, but there are still all these then calls, and neither try/catch nor conditional operators work.

With Async/Await we get the following structure:

Coroutines give us roughly the same.

Everything is gorgeous here, except that we need to declare this function as “async”.

As for multiple events, if we are talking about DOM events, this is how we would handle mousemove and draw on the screen using an EventEmitter:

The same code using streams and the Kefir library looks like this:

We create a stream from the mousemove events on the window, filter them somehow, and call a callback for each value. And when this stream ends, we automatically unsubscribe from the DOM events, which is important.

With Async Generators it looks like this:

It is just a loop: we iterate over a collection of asynchronous events and perform some operations on them.

In my opinion, this is a great way.

In conclusion, I would like to say a few words about how to actually stop debugging asynchronous code and start living.

- Define your task: if you are working with multiple events, it makes sense to look at Streams, or perhaps even at Async Generators if you have a transpiler.

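- If you are working with single operations, such as AJAX requests that either succeed or fail, use Promises.

- Consider your constraints. If you can use a transpiler, look at Async/Await and Async Generators. If you are writing an external API, it may make sense not to expose Promises and to offer a callback API instead.

- Remember to handle error events on EventEmitters.

- Use helper methods such as Promise.all, etc.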
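Promise.all waits on several Promises at once. A sketch with a hypothetical `task` helper: the results come back in input order regardless of which task finishes first, and a single rejection rejects the whole combination.

```javascript
// Hypothetical async task: resolves with `value` after `ms` milliseconds,
// or rejects if `fail` is true.
function task(value, ms, fail = false) {
  return new Promise((resolve, reject) =>
    setTimeout(() => (fail ? reject(new Error(`${value} failed`)) : resolve(value)), ms)
  );
}

// All three run concurrently; results keep the input order,
// not the order in which the tasks complete.
function loadAll() {
  return Promise.all([task("user", 30), task("tweets", 10), task("settings", 20)]);
}

// If any task rejects, the combined Promise rejects with that error.
function loadWithFailure() {
  return Promise.all([task("user", 10), task("tweets", 5, true)]);
}
```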

I know you are all curious about the fate of Schlecht! Script and when it will be published on GitHub, and so on. But because of constant criticism and accusations of plagiarism (people say such a language already exists and I invented nothing new), I decided to close the project and devote myself to something useful, important, and interesting. Maybe I will even write my own Promises library.

Contacts

filipovskii_off

This article is a transcript of a talk given at FrontendConf.


Source: https://habr.com/ru/post/311554/
