Programming & Music: understanding and writing a VSTi synthesizer in C# WPF. Part 1

Being engaged in musical creativity, I often make arrangements and recordings on a computer, using a bunch of VST plug-ins and instruments. I am ashamed to admit that I never understood how the sounds in synthesizers are "dialed in". Programming allowed me to write my own synthesizer and "walk through" the process of creating sound myself.

I am planning several articles in which I will explain step by step how to write your own VST plug-in / instrument: programming an oscillator, a frequency filter, various effects, and parameter modulation. The emphasis is on practice: explaining to a programmer, in simple language, how it all works. The theory (rigorous derivations and proofs) will be skipped (naturally, there will be references to articles and books).

Plug-ins are typically written in C++ (it is cross-platform and lets you implement algorithms efficiently), but I chose a language that suits me better, C#, so that I could focus on the synthesizer itself and its algorithms rather than on the technical details of programming. To create a good-looking interface, I used WPF. Building on .NET made it possible to use the VST.NET wrapper library.

Below is an overview video of my simple synthesizer and the interesting sounds I got out of it.

There is a hard road ahead. If you are ready, read on.

Article series

- Understanding and writing a VSTi synthesizer in C# WPF

- ADSR signal envelope

- Butterworth frequency filter

- Delay, Distortion and Parameter Modulation

Table of contents

- Mysterious world of sound synthesis

- Digital sound

- VST SDK

- WDL-OL and JUCE

- VST.NET

- My add-on for VST.NET

- WPF UI

- UI thread

- Syntage synthesizer architecture overview

- Customize the project to create a plugin / tool

- Debugging code

- We write a simple oscillator

- Bibliography

Mysterious world of sound synthesis

I love music: I listen to different styles, play various instruments, and, of course, compose and record arrangements. Ever since I started using software synthesizers in recording programs, I have always gone through a bunch of presets looking for the right sound.

Going through the presets of a single synthesizer, you can find both the "expected" sound of an electronic synthesizer from childhood (the music from the cartoon The Flying Ship) and imitations of drums, sound effects, noise, even voices! And one synthesizer does all of this, with the same set of parameter knobs. This always surprised me, although I understood that each sound is essentially one specific setting of all the knobs.

Recently I finally decided to figure out how sound is created (or, more correctly, synthesized), how and why to turn the knobs, and how effects change the signal (visually and by ear). And, of course, to learn (or at least understand the basics of) how to "dial in" a sound myself and copy the styles I like. I decided to follow one quote:

"Tell me - and I will forget, show me - and I will remember, let me do it - and I will understand."

Confucius

Of course, you do not have to build everything yourself (why reinvent so many wheels?), but right now I want to gain the knowledge and, most importantly, share it with you.

Objective: without going deep into theory, create a simple synthesizer, focusing on explaining the processes in practice, from a programmer's point of view.

The synthesizer will have:

- wave generator (oscillator)

- ADSR signal envelope

- frequency filter

- echo / delay

- parameter modulation

I plan to cover all the components over several articles. This article covers the oscillator.

We will program in C#. The UI can be written in WPF or Windows Forms, or you can do without a graphical shell altogether. The advantage of WPF is good-looking graphics that are quick to code; the disadvantage is that it is Windows-only. Users of other operating systems should not be discouraged: the goal is to understand how the synthesizer works (not to polish a pretty UI), and the code I will show can be ported quickly to, say, C++.

In the chapters VST SDK and WDL-OL and JUCE I will cover the concept of VST and its internal implementation, as well as the add-on libraries that are well suited to developing serious plug-ins. In the chapter VST.NET I will cover that library, its drawbacks, my add-on, and UI programming.

Programming the synthesizer logic begins in the chapter We write a simple oscillator. If you are not interested in the technical aspects of writing VST plug-ins and just want to read about the synthesis itself (without coding anything), feel free to jump straight to that chapter.

The source code for the synthesizer I wrote is available on GitHub.

Digital sound

In essence, our ultimate goal is to create sound on a computer. Be sure to read (or at least skim) the Habr article "Theory of Sound": it outlines the basics of how sound is represented on a computer, along with the concepts and terms.

Any uncompressed audio file on a computer is an array of samples. Ultimately, any plug-in accepts and processes an array of samples as input (depending on the precision, they are float or double numbers, or you can work with integers). Why did I say an array rather than a single sample? To emphasize that sound is processed as a whole: if you need to apply equalization, you cannot operate on one sample without information about the others.

Of course, there are also tasks where it does not matter what surrounds the sample being processed: they look at one sample at a time. For example, to raise the volume level by a factor of 2 we can work with each sample separately, without knowing anything about the others.
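To make the difference concrete, here is a minimal sketch of both kinds of processing. The class and method names are made up for this illustration and are not part of any library; the two-point average stands in for "an operation that needs neighboring samples" and is not a real equalizer.

```csharp
using System;

public static class SampleProcessing
{
    // Per-sample operation: each output depends only on the matching input.
    public static float[] Scale(float[] samples, float gain)
    {
        var result = new float[samples.Length];
        for (int i = 0; i < samples.Length; ++i)
            result[i] = samples[i] * gain;
        return result;
    }

    // Context-dependent operation: each output also needs the previous input.
    // A two-point average is about the simplest smoothing filter there is.
    public static float[] AverageWithPrevious(float[] samples)
    {
        var result = new float[samples.Length];
        float previous = 0f; // assumption: silence before the buffer starts
        for (int i = 0; i < samples.Length; ++i)
        {
            result[i] = 0.5f * (samples[i] + previous);
            previous = samples[i];
        }
        return result;
    }
}
```

Scale could be handed one sample at a time, while AverageWithPrevious has to remember what came before, which is exactly why plug-ins receive whole buffers.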

We will treat a sample as a float number from -1 to 1. Instead of "the value of the sample" one usually says "amplitude". If the amplitude of some samples is greater than 1 or less than -1, clipping will occur; this should be avoided.
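As a rough illustration of the [-1, 1] range, the sketch below forces every sample back into range and counts how many were outside it. The name Clipper is hypothetical. Note that clamping like this is itself hard clipping: it keeps the numbers valid but flattens the peaks, so in practice it is better to lower the gain before it gets this far.

```csharp
using System;

public static class Clipper
{
    // Limits every sample to [-1, 1] in place.
    // Returns how many samples were out of range, which is handy for a "clip" indicator.
    public static int ClampInPlace(float[] samples)
    {
        int clipped = 0;
        for (int i = 0; i < samples.Length; ++i)
        {
            if (samples[i] > 1f || samples[i] < -1f)
            {
                samples[i] = Math.Max(-1f, Math.Min(1f, samples[i]));
                ++clipped;
            }
        }
        return clipped;
    }
}
```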

VST SDK

VST (Virtual Studio Technology) is a technology that allows you to write plug-ins for sound processing programs. Now there are a large number of plug-ins that solve various problems: synthesizers, effects, sound analyzers, virtual instruments and so on.

To create VST plug-ins, Steinberg (some know the company from Cubase) released the VST SDK, written in C++. Besides the VST technology (or, as they say, "plug-in format") there are others: RTAS, AAX, and many more. I chose VST because it is better known and has a large number of plug-ins and instruments (although most well-known plug-ins ship in several formats).

At the moment the current version of the VST SDK is 3.6.6, although many continue to use version 2.4. Historically, it is hard to find a DAW without support for version 2.4, while not all DAWs support version 3.0 and higher.

The VST SDK can be downloaded from the official site.

In the future, we will work with the VST.NET library, which is a wrapper for VST 2.4.

If you intend to develop plug-ins seriously and want to use the latest version of the SDK, you can study the documentation and examples on your own (everything can be downloaded from the official site).

Now I will summarize the principles of VST SDK 2.4, to give a general understanding of how a plug-in works and how it interacts with the DAW.

On Windows, a VST 2.4 plug-in is a dynamic-link library (DLL).

We will call the program that loads our DLL the host. Usually this is either a music editing program (DAW) or a simple shell that runs the plug-in independently of other programs (for example, virtual instruments very often ship the .dll plug-in together with an .exe file that loads the plug-in as a standalone program: a piano, a synthesizer).

The functions, enumerations and structures below can be found in the downloaded VST SDK, in the source folder "VST3 SDK\pluginterfaces\vst2.x".

The library must export a function with the following signature:

    EXPORT void* VSTPluginMain(audioMasterCallback hostCallback)

The function accepts a pointer to a callback so that the plug-in can obtain the information it needs from the host.

    VstIntPtr (VSTCALLBACK *audioMasterCallback) (AEffect* effect, VstInt32 opcode, VstInt32 index, VstIntPtr value, void* ptr, float opt)

Everything is done at a fairly low level: for the host to understand what is wanted from it, you pass a command number in the opcode parameter. Hardcore C coders can find the list of all opcodes in the AudioMasterOpcodesX enumeration. The remaining parameters are used in a similar way.

VSTPluginMain should return a pointer to the AEffect structure, which, in essence, is our plugin: it contains information about the plugin and pointers to the functions that the host will call.

Main fields of the AEffect structure:

- Information about the plugin. Name, version, number of parameters, number of programs and presets (read below), type of plug-in and so on.

- Functions for querying and setting parameter values.

- Functions for changing presets / programs.

- The sample array processing function:

    void (VSTCALLBACK *AEffectProcessProc) (AEffect* effect, float** inputs, float** outputs, VstInt32 sampleFrames)

  float** is an array of channels; each channel contains the same number of samples (the number of samples in the array depends on the sound driver and its settings). Most plug-ins handle mono or stereo.

- A "super function", similar to audioMasterCallback:

    VstIntPtr (VSTCALLBACK *AEffectDispatcherProc) (AEffect* effect, VstInt32 opcode, VstInt32 index, VstIntPtr value, void* ptr, float opt)

  It is called by the host; the required action is determined by the opcode parameter (the AEffectOpcodes enumeration). It is used to get additional information about the parameters, to inform the plug-in about changes in the host (a change of the sampling rate), and to interact with the plug-in's UI.
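The "one function, many commands" idea is easier to see in code. Below is a small C# sketch of an opcode dispatcher. The PluginCommand enumeration and its numbers are invented for this example; the real command numbers live in AEffectOpcodes, and the real dispatcher has more arguments. Which argument carries the payload for each command here is likewise just a choice made for the illustration.

```csharp
using System;

// Hypothetical command numbers for illustration only; not the SDK values.
public enum PluginCommand
{
    Open = 0,
    Close = 1,
    SetSampleRate = 2,
    SetBlockSize = 3
}

public sealed class DispatcherSketch
{
    public bool IsOpen { get; private set; }
    public float SampleRate { get; private set; }
    public int BlockSize { get; private set; }

    // A single entry point: the opcode selects the action and decides
    // which of the generic arguments actually carries meaning.
    public long Dispatch(int opcode, int index, long value, float opt)
    {
        switch ((PluginCommand)opcode)
        {
            case PluginCommand.Open: IsOpen = true; return 1;
            case PluginCommand.Close: IsOpen = false; return 1;
            case PluginCommand.SetSampleRate: SampleRate = opt; return 1;
            case PluginCommand.SetBlockSize: BlockSize = (int)value; return 1;
            default: return 0; // unknown command: report "not handled"
        }
    }
}
```

Wrapper libraries exist largely to hide this switch behind named virtual methods.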

When working with a plug-in, it is very convenient for the user to be able to save all the configured knobs and switches. Even better is being able to automate them! For example, you may want to make the famous "rise up" effect; for that you need to change the cutoff parameter (cutoff frequency) of the equalizer over time.

In order for the host to control the parameters of your plugin, AEffect has corresponding functions: the host can query the total number of parameters, find out or set the value of a specific parameter, find out the name of the parameter, its description, and get the displayed value.

The host does not care about the logic behind the parameters in the plug-in. The host's task is to save, load and automate parameters. It is very convenient for the host to treat a parameter as a float number from 0 to 1 and let the plug-in interpret it however it likes (which is what most DAWs do, unofficially).
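For example, the plug-in side of this agreement can be as simple as a linear mapping between the host's 0..1 value and the real range of a knob. This is only a sketch with made-up names (NormalizedRange, ToReal, ToNormalized); real plug-ins often use non-linear curves, especially for frequencies.

```csharp
using System;

public static class NormalizedRange
{
    // Maps a host value in [0, 1] to a plug-in value in [min, max].
    public static double ToReal(double normalized, double min, double max)
    {
        double clamped = Math.Max(0.0, Math.Min(1.0, normalized));
        return min + clamped * (max - min);
    }

    // Inverse mapping, used when the plug-in reports a value back to the host.
    public static double ToNormalized(double real, double min, double max)
    {
        if (max <= min) throw new ArgumentException("max must be greater than min");
        return Math.Max(0.0, Math.Min(1.0, (real - min) / (max - min)));
    }
}
```

With a range of 20 to 20000, the host value 0.5 becomes 10010 on the plug-in side, and 10010 maps back to 0.5.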

Presets (in VST SDK terms, programs) are collections of specific values of all the parameters of a plug-in. The host can change / switch / select preset numbers and query their names, just as with parameters. Banks are collections of presets. Banks exist logically only in the DAW; the VST SDK itself only has presets (programs).
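Seen from the code, a program is little more than a named snapshot of parameter values. The sketch below assumes every parameter is stored as a normalized float; ProgramSketch is a hypothetical class, not the one from the VST SDK or VST.NET.

```csharp
using System;

public sealed class ProgramSketch
{
    private readonly float[] _values; // one normalized value per parameter

    public string Name { get; }

    public ProgramSketch(string name, float[] values)
    {
        Name = name;
        _values = (float[])values.Clone(); // keep a private copy of the snapshot
    }

    // Switching to this program means copying the stored values
    // over the plug-in's live parameter values.
    public void ApplyTo(float[] liveParameters)
    {
        if (liveParameters.Length != _values.Length)
            throw new ArgumentException("parameter count mismatch");
        Array.Copy(_values, liveParameters, _values.Length);
    }
}
```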

Having understood the idea of the AEffect structure, you can sketch out and compile a simple plug-in DLL.

We, however, will go further, one level higher.

WDL-OL and JUCE

What is bad about developing on the bare VST SDK?

- Write all the routine code from scratch yourself? Surely someone has already done it!

- Structures, callbacks... you want something more high-level.

- You want cross-platform support with a single code base.

- What about a UI that is easy to develop?

This is where WDL-OL enters the scene. It is a C++ library for creating cross-platform plug-ins. Supported formats are VST, VST3, Audio Unit, RTAS and AAX. The convenience of the library is that (with a properly configured project) you write the code once and, when compiling, get your plug-in in different formats.

How to work with WDL-OL is well described in Martin Finke's blog "Music & Programming"; there are even Habr articles with translations into Russian.

WDL-OL solves at least the first three drawbacks of developing on the VST SDK. All you need is to set up the project correctly (the first article of the blog) and inherit from the IPlug class.

    class MyFirstPlugin : public IPlug
    {
    public:
        explicit MyFirstPlugin(IPlugInstanceInfo instanceInfo);
        virtual ~MyFirstPlugin() override;

        void ProcessDoubleReplacing(double** inputs, double** outputs, int nFrames) override;
    };

Now, with a clear conscience, you can implement the ProcessDoubleReplacing function, which is essentially the "core" of the plug-in. The IPlug class has taken over all the other concerns. If you study it, you quickly see that (for the VST format) it is a wrapper around the AEffect structure. The callbacks from the host and the functions for the host have become convenient virtual functions with clear names and sensible parameter lists.

WDL-OL already has tools for building a UI. But to my taste all of this is painful: the UI is assembled in code, all resources must be described in an .rc file, and so on.

Besides WDL-OL, I also learned about the JUCE library. JUCE is similar to WDL-OL and addresses all the listed drawbacks of developing on the VST SDK. In addition, it includes a UI editor and a bunch of classes for working with audio data. I have not used it personally, so I advise you to read about it, at least on the wiki.

If you want to write a serious plug-in, I would seriously consider using WDL-OL or JUCE. They do all the routine work for you, and you still have the full power of C++ for implementing efficient algorithms, plus cross-platform support, which matters in a world with so many DAWs.

VST.NET

What did I not like about WDL-OL and JUCE?

- My task is to understand how to program a synthesizer, audio processing and effects, not how to build a plug-in for the maximum number of formats and platforms. "Technical programming" fades into the background here (of course, this is no excuse to write bad code or to avoid OOP).

- I am spoiled by C#. Again, unlike C++, this language lets you not think about some technical issues.

- I like WPF for its visual capabilities.

The library page is vstnet.codeplex.com; it has the source code, binaries and documentation. As I understand it, the library is nearly complete and has been put on ice (some rarely used functions are not implemented, and the repository has not changed for a couple of years).

The library consists of three key assemblies:

- Jacobi.Vst.Core.dll - contains the interfaces that define host and plug-in behavior, and auxiliary classes for audio, events and MIDI. Most of it wraps native structures, defines and enumerations from the VST SDK.

- Jacobi.Vst.Framework.dll - contains base plug-in classes that implement the interfaces from Jacobi.Vst.Core, which speed up development so that you do not write everything from scratch; classes for higher-level host-to-plug-in interaction; various managers for parameters and programs, MIDI messages, and working with the UI.

- Jacobi.Vst.Interop.dll - a Managed C++ wrapper over the VST SDK that connects the host with the loaded .NET assembly (your plug-in).

How can a .NET assembly work if the host expects a plain dynamic-link library? Like this: the host actually loads not your assembly but the compiled Jacobi.Vst.Interop DLL, which in turn loads your plug-in inside .NET.

The following trick is used. Say you are developing your plug-in and the build output is the .NET assembly MyPlugin.dll. You need to make the host load Jacobi.Vst.Interop.dll instead of your MyPlugin.dll, and have it load your plug-in. The question is how Jacobi.Vst.Interop.dll knows where to load your library from. There are many possible solutions. The developer chose to give the wrapper the same name as your library and then look for the .NET assembly as "my_name.vstdll".

It works like this:

- You compile your project and get MyPlugin.dll.

- Rename MyPlugin.dll to MyPlugin.vstdll.

- Put it next to Jacobi.Vst.Interop.dll.

- Rename Jacobi.Vst.Interop.dll to MyPlugin.dll.

- Now the host loads MyPlugin.dll (i.e. the Jacobi.Vst.Interop wrapper), which, knowing that its own name is "MyPlugin", loads your assembly MyPlugin.vstdll.
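The renaming steps above can be scripted. The sketch below is a hypothetical helper (WrapperNaming, ManagedAssemblyPath and Deploy are names I made up); it only illustrates the naming convention described here and is not the lookup code that Jacobi.Vst.Interop actually runs.

```csharp
using System;
using System.IO;

public static class WrapperNaming
{
    // Given the path of the renamed wrapper (e.g. "MyPlugin.dll"), returns the
    // path where the managed assembly is expected under the ".vstdll" convention.
    public static string ManagedAssemblyPath(string wrapperPath)
    {
        return Path.ChangeExtension(wrapperPath, ".vstdll");
    }

    // Performs the copy-and-rename steps for a freshly built plug-in assembly.
    public static void Deploy(string builtAssembly, string interopDll, string targetDir)
    {
        string name = Path.GetFileNameWithoutExtension(builtAssembly);
        Directory.CreateDirectory(targetDir);
        // Your assembly becomes <name>.vstdll ...
        File.Copy(builtAssembly, Path.Combine(targetDir, name + ".vstdll"), true);
        // ... and the wrapper takes over the <name>.dll file name.
        File.Copy(interopDll, Path.Combine(targetDir, name + ".dll"), true);
    }
}
```

In practice the same thing is usually done with a post-build step in the project settings rather than in code.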

When your assembly is loaded, it must contain a class that implements the IVstPluginCommandStub interface:

    public interface IVstPluginCommandStub : IVstPluginCommands24
    {
        VstPluginInfo GetPluginInfo(IVstHostCommandStub hostCmdStub);
        Configuration PluginConfiguration { get; set; }
    }

VstPluginInfo contains the basic plug-in information: version, unique plug-in ID, number of parameters and programs, number of processed channels. PluginConfiguration is needed by the calling wrapper, Jacobi.Vst.Interop.

In turn, IVstPluginCommandStub extends the IVstPluginCommands24 interface, which contains the methods called by the host: processing the array (buffer) of samples, working with parameters, programs (presets), MIDI messages, and so on.

Jacobi.Vst.Framework contains a ready-made, convenient StdPluginCommandStub class that implements IVstPluginCommandStub. All you need to do is inherit from StdPluginCommandStub and implement the CreatePluginInstance() method, which returns an instance of your plug-in class implementing IVstPlugin.

    public class PluginCommandStub : StdPluginCommandStub
    {
        protected override IVstPlugin CreatePluginInstance()
        {
            return new MyPluginController();
        }
    }

Again, there is a ready-made convenient class, VstPluginWithInterfaceManagerBase:

    public abstract class VstPluginWithInterfaceManagerBase : PluginInterfaceManagerBase, IVstPlugin, IExtensible, IDisposable
    {
        protected VstPluginWithInterfaceManagerBase(string name, VstProductInfo productInfo, VstPluginCategory category, VstPluginCapabilities capabilities, int initialDelay, int pluginID);

        public VstPluginCapabilities Capabilities { get; }
        public VstPluginCategory Category { get; }
        public IVstHost Host { get; }
        public int InitialDelay { get; }
        public string Name { get; }
        public int PluginID { get; }
        public VstProductInfo ProductInfo { get; }

        public event EventHandler Opened;

        public virtual void Open(IVstHost host);
        public virtual void Resume();
        public virtual void Suspend();
        protected override void Dispose(bool disposing);
        protected virtual void OnOpened();
    }

If you look at the source code of the library, you can see the interfaces that describe the plug-in components for working with audio, parameters, MIDI, etc.:

    IVstPluginAudioProcessor
    IVstPluginParameters
    IVstPluginPrograms
    IVstHostAutomation
    IVstMidiProcessor

The VstPluginWithInterfaceManagerBase class contains virtual methods that return these interfaces:

    protected virtual IVstPluginAudioPrecisionProcessor CreateAudioPrecisionProcessor(IVstPluginAudioPrecisionProcessor instance);
    protected virtual IVstPluginAudioProcessor CreateAudioProcessor(IVstPluginAudioProcessor instance);
    protected virtual IVstPluginBypass CreateBypass(IVstPluginBypass instance);
    protected virtual IVstPluginConnections CreateConnections(IVstPluginConnections instance);
    protected virtual IVstPluginEditor CreateEditor(IVstPluginEditor instance);
    protected virtual IVstMidiProcessor CreateMidiProcessor(IVstMidiProcessor instance);
    protected virtual IVstPluginMidiPrograms CreateMidiPrograms(IVstPluginMidiPrograms instance);
    protected virtual IVstPluginMidiSource CreateMidiSource(IVstPluginMidiSource instance);
    protected virtual IVstPluginParameters CreateParameters(IVstPluginParameters instance);
    protected virtual IVstPluginPersistence CreatePersistence(IVstPluginPersistence instance);
    protected virtual IVstPluginProcess CreateProcess(IVstPluginProcess instance);
    protected virtual IVstPluginPrograms CreatePrograms(IVstPluginPrograms instance);

These are the methods you override to implement your logic in custom component classes. For example, if you want to process samples, you write a class that implements IVstPluginAudioProcessor and return it from the CreateAudioProcessor method.

    public class MyPlugin : VstPluginWithInterfaceManagerBase
    {
        ...
        protected override IVstPluginAudioProcessor CreateAudioProcessor(IVstPluginAudioProcessor instance)
        {
            return new MyAudioProcessor();
        }
        ...
    }
    ...
    public class MyAudioProcessor : VstPluginAudioProcessorBase // a ready-made base class from the framework
    {
        public override void Process(VstAudioBuffer[] inChannels, VstAudioBuffer[] outChannels)
        {
            // sample processing goes here
        }
    }

With the various ready-made component classes you can focus on programming the plug-in logic. That said, nothing stops you from implementing everything yourself, however you like, based only on the interfaces from Jacobi.Vst.Core.

For those who are already coding, here is an example of a simple plug-in that lowers the volume by 6 dB (for this you multiply each sample by 0.5; for why, read the article about sound).
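As a quick check of the 0.5 factor before the plug-in code: for amplitudes, a level change in decibels is 20 times the base-10 logarithm of the gain factor, so a factor of 0.5 is about -6.02 dB, which is usually rounded to "6 dB". The Decibels helper below is a made-up name for this sketch.

```csharp
using System;

public static class Decibels
{
    // Amplitude gain factor for a level change given in decibels.
    public static double ToGain(double decibels)
    {
        return Math.Pow(10.0, decibels / 20.0);
    }

    // Level change in decibels for an amplitude gain factor (must be positive).
    public static double FromGain(double gain)
    {
        return 20.0 * Math.Log10(gain);
    }
}
```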

    using Jacobi.Vst.Core;
    using Jacobi.Vst.Framework;
    using Jacobi.Vst.Framework.Plugin;

    namespace Plugin
    {
        public class PluginCommandStub : StdPluginCommandStub
        {
            protected override IVstPlugin CreatePluginInstance()
            {
                return new MyPlugin();
            }
        }

        public class MyPlugin : VstPluginWithInterfaceManagerBase
        {
            public MyPlugin() : base(
                "MyPlugin",
                new VstProductInfo("MyPlugin", "My Company", 1000),
                VstPluginCategory.Effect,
                VstPluginCapabilities.None,
                0,
                new FourCharacterCode("TEST").ToInt32())
            {
            }

            protected override IVstPluginAudioProcessor CreateAudioProcessor(IVstPluginAudioProcessor instance)
            {
                return new AudioProcessor();
            }
        }

        public class AudioProcessor : VstPluginAudioProcessorBase
        {
            public AudioProcessor() : base(2, 2, 0) // 2 inputs, 2 outputs, tail size 0
            {
            }

            public override void Process(VstAudioBuffer[] inChannels, VstAudioBuffer[] outChannels)
            {
                for (int i = 0; i < inChannels.Length; ++i)
                {
                    var inChannel = inChannels[i];
                    var outChannel = outChannels[i];

                    for (int j = 0; j < inChannel.SampleCount; ++j)
                    {
                        outChannel[j] = 0.5f * inChannel[j];
                    }
                }
            }
        }
    }

My add-on for VST.NET

When programming the synth, I ran into some problems with the classes from Jacobi.Vst.Framework. The main problem was the use of parameters and their automation.

First, I did not like the implementation of value-change events; second, there were bugs when testing the plug-in in FL Studio and Cubase. FL Studio treats all parameters as float numbers from 0 to 1, without even using the special VST SDK function with the opcode effGetParameterProperties (the function is called on the plug-in to get additional information about a parameter). In WDL-OL, the implementation is commented out with the note:

couldn’t implement effGetParameterProperties to group parameters

Although, of course, this function is called in Cubase (Cubase is a product of Steinberg, which released the VST SDK).

In VST.NET this callback is implemented as a GetParameterProperties function that returns an object of the VstParameterProperties class. Even so, Cubase interpreted and automated my parameters incorrectly.

At first I made the edits in the library itself and wrote to the author, asking him either to give me permission to push the source code to the repository or to create a repository on GitHub himself. But I never received a clear answer, so I decided to build an add-on on top of the library: Syntage.Framework.dll.

In addition, the add-on provides convenient classes for working with the UI, if you want to use WPF.

It's time to download the source code of my synthesizer and compile it.

- Clone / download repository.

- Build the solution in Visual Studio in Debug.

- To run the synth from Visual Studio, use the SimplyHost project.

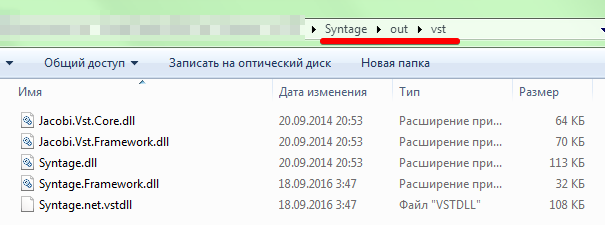

- The plug-in files and the libraries they depend on will be in the "out\vst\" folder.

The rules for using my add-on are simple: use SyntagePluginCommandStub instead of StdPluginCommandStub, and inherit your plug-in from SyntagePlugin.

WPF UI

A VST plug-in does not have to have a graphical interface. I have seen many plug-ins without a UI (the mda plug-ins, for example). Most DAWs (at least Cubase and FL Studio) let you manage the parameters from a UI that they generate themselves.

Auto-generated UI for my synthesizer in FL Studio

For your plug-in to have a UI, first, you need a class that implements IVstPluginEditor; second, you need to return an instance of it from the overridden CreateEditor function of your plug-in class (the one derived from SyntagePlugin).

I wrote a PluginWpfUI<T> class that directly owns a WPF window. Here T is the type of your UserControl, which is the "main form" of the UI. PluginWpfUI<T> has 3 virtual methods that you can override to implement your logic:

- public virtual void Open(IntPtr hWnd) - called whenever the plug-in UI is opened

- public virtual void Close() - called whenever the plug-in UI is closed

- public virtual void ProcessIdle() - called several times per second from the UI thread, to run custom logic (the base implementation is empty)

In my Syntage synthesizer, I wrote a couple of controls - a slider, a knob, a piano keyboard - if you want, you can copy and use them.

UI thread

I tested the synthesizer in FL Studio and Cubase 5, and I am fairly sure other DAWs behave the same way: the plug-in UI is handled by a separate thread. This means that the audio logic and the UI logic run in independent threads. That brings all the usual problems of such an approach: access to data from different threads, critical data, access to the UI from another thread...

To make these problems easier to solve, I wrote the UIThread class, which is essentially a command queue. If at some point you want to notify / change / do something in the UI, and the current code is not running on the UI thread, you can put the required function into the queue:

    UIThread.Instance.InvokeUIAction(() => Control.Oscilloscope.Update());

Here an anonymous method that updates the necessary data is placed in the command queue. When ProcessIdle is called, all the commands accumulated in the queue are executed.

UIThread does not solve every problem. When programming the oscilloscope, I needed to update the UI from an array of samples that was being processed on another thread. I had to use mutexes.
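To show what "a command queue" means in practice, here is a minimal sketch of the idea. It is not the actual UIThread class from Syntage; UiCommandQueue, Enqueue and RunPending are names invented for this example, and a plain lock is used for synchronization.

```csharp
using System;
using System.Collections.Generic;

public sealed class UiCommandQueue
{
    private readonly object _sync = new object();
    private readonly Queue<Action> _pending = new Queue<Action>();

    // Safe to call from any thread, for example from the audio thread.
    public void Enqueue(Action action)
    {
        lock (_sync)
        {
            _pending.Enqueue(action);
        }
    }

    // Meant to be called from the UI thread only (e.g. from an idle callback).
    // Returns the number of actions that were run.
    public int RunPending()
    {
        Action[] batch;
        lock (_sync)
        {
            batch = _pending.ToArray(); // oldest action first
            _pending.Clear();
        }

        // Run outside the lock so that a slow action does not block producers.
        foreach (var action in batch)
            action();

        return batch.Length;
    }
}
```

The queue only moves the call to the right thread; data that both threads touch, like the oscilloscope's sample array, still needs its own protection.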

Syntage synthesizer architecture overview

When writing the synthesizer I made heavy use of OOP; I suggest you get acquainted with the resulting architecture and use my code. You can do everything your own way, but in these articles you will have to put up with my vision.

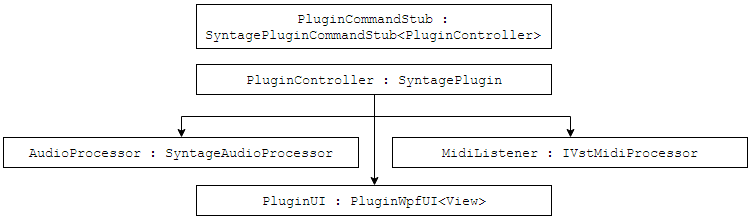

The PluginCommandStub class is only needed to create and return an object of the PluginController class. PluginController provides information about the plugin, it also creates and owns the following components:

- AudioProcessor - a class with all the audio processing logic

- MidiListener - class for handling MIDI messages (from Syntage.Framework)

- PluginUI (heir to PluginWpfUI <View>) is the class that controls the synthesizer's GUI, the main form is the UserControl "View".

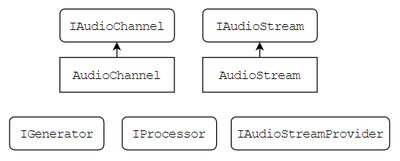

To process audio data there are interfaces IAudioChannel and IAudioStream. IAudioChannel provides direct access to the sample array / buffer (double [] Samples). IAudioStream contains an array of channels.

The presented interfaces contain convenient methods for processing all samples and channels "together": mixing channels and streams, applying the method to each sample separately, and so on.

The implementations AudioChannel and AudioStream are written for the interfaces IAudioChannel and IAudioStream. It is important to remember the following: you must not store references to an AudioStream or AudioChannel if they are external data passed into a function. The point is that buffer sizes can change while the plug-in is running and buffers are constantly reused, because it is wasteful to keep re-allocating and copying memory. If you need to keep a buffer for later use (I do not know why you would), copy it into a buffer of your own.
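A sketch of what "copy it into a buffer of your own" can look like, using plain double arrays rather than the Syntage classes. BufferSnapshot is a hypothetical helper; it reuses its own storage between calls and only grows it when the incoming buffer gets longer.

```csharp
using System;

public sealed class BufferSnapshot
{
    private double[] _copy = new double[0];

    // Number of valid samples in the snapshot.
    public int Length { get; private set; }

    // Copies the first 'count' samples of a buffer that the caller will reuse.
    public void CopyFrom(double[] source, int count)
    {
        if (_copy.Length < count)
            _copy = new double[count]; // grow only when the buffer size increases
        Array.Copy(source, _copy, count);
        Length = count;
    }

    public double this[int index]
    {
        get { return _copy[index]; }
    }
}
```

After CopyFrom returns, later changes to the source array no longer affect the snapshot, which is the whole point of not keeping a reference.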

IAudioStreamProvider owns the audio streams: you can ask it to create a stream with the CreateAudioStream function, and hand a stream back for removal with the ReleaseAudioStream function.

At any moment the length (the length of the sample array) of all audio streams and channels is the same; technically it is determined by the host. In code it can be obtained from the IAudioChannel or IAudioStream itself (the Length property), or from the owning IAudioStreamProvider (the CurrentStreamLenght property).

The AudioProcessor class is the "core" of the synthesizer: this is where the sound is synthesized. The class derives from SyntageAudioProcessor, which, in turn, builds on the following:

- VstPluginAudioProcessorBase - to process the sample buffer (Process method)

- IVstPluginBypass - to disable synthesizer logic if the plugin is in Bypass mode

- IAudioStreamProvider - to provide audio streams for generators

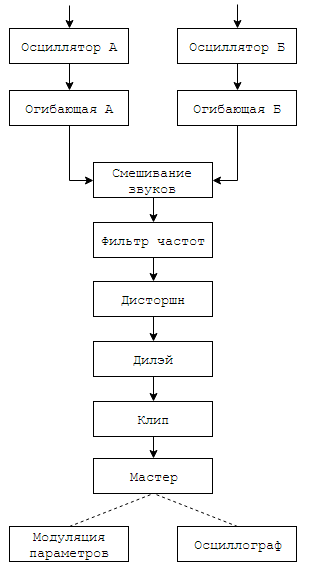

Sound synthesis goes through a long processing chain: creating a simple wave in the oscillators, mixing the sound of different oscillators, and sequential processing in effects. The sound creation and processing logic is divided into component classes of the AudioProcessor. The components are based on SyntageAudioProcessorComponentWithParameters<T>, used with the AudioProcessor class.

The components:

- Input (MIDI, UI)

- Oscillator - wave generator

- ADSR - signal envelope

- ButterworthFilter - frequency filter

- Distortion

- Delay - echo / delay

- Clip - keeps samples in the range from -1 to 1

- LFO - Low Frequency Oscillator

- Master

- Oscillograph

- Routing

Component parameters are built on Parameter<T>, with the concrete classes EnumParameter, IntegerParameter and RealParameter. A parameter has a Value of type T and a float RealValue in the range [0,1] (for the UI).

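Customize the project to create a plugin / tool

Finally! We will write the code in C#, in Visual Studio.

Create a .NET Class Library project and add references to Jacobi.Vst.Core.dll, Jacobi.Vst.Framework.dll and Syntage.Framework.dll.

Add a post-build step (Project → Properties → Build Events → Post-build event command line, On successful build):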

    if not exist "$(TargetDir)\vst\" mkdir "$(TargetDir)\vst\"
    copy "$(TargetDir)$(TargetFileName)" "$(TargetDir)\vst\$(TargetName).net.vstdll"
    copy "$(TargetDir)Syntage.Framework.dll" "$(TargetDir)\vst\Syntage.Framework.dll"
    copy "$(TargetDir)Jacobi.Vst.Interop.dll" "$(TargetDir)\vst\$(TargetName).dll"
    copy "$(TargetDir)Jacobi.Vst.Core.dll" "$(TargetDir)\vst\Jacobi.Vst.Core.dll"
    copy "$(TargetDir)Jacobi.Vst.Framework.dll" "$(TargetDir)\vst\Jacobi.Vst.Framework.dll"

Debugging code

To run the plug-in from Visual Studio, Syntage has the SimplyHost project.

I tested the plug-in in two DAWs: FL Studio 12 and Cubase 5. To debug inside FL Studio, attach Visual Studio to the FL Studio process (Debug → Attach To Process).

We write a simple oscillator

An oscillator generates a periodic wave. The wave shapes used here are sine, triangle, square, saw and noise. It is enough to describe one period of the wave, with time running from 0 to 1.

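Let's write a WaveGenerator class with a GetTableSample method that returns the wave value for a given time (from 0 to 1).

Noise is a separate case: it has no period. For each sample we take a random value from the NextDouble method of the Random class and map it from [0,1] to [-1,1].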

    using System;

    public static class WaveGenerator
    {
        public enum EOscillatorType
        {
            Sine,
            Triangle,
            Square,
            Saw,
            Noise
        }

        private static readonly Random _random = new Random();

        // t is the position inside one period, from 0 to 1
        public static double GetTableSample(EOscillatorType oscillatorType, double t)
        {
            switch (oscillatorType)
            {
                case EOscillatorType.Sine:
                    return Math.Sin(DSPFunctions.Pi2 * t);

                case EOscillatorType.Triangle:
                    if (t < 0.25) return 4 * t;
                    if (t < 0.75) return 2 - 4 * t;
                    return 4 * (t - 1);

                case EOscillatorType.Square:
                    return (t < 0.5f) ? 1 : -1;

                case EOscillatorType.Saw:
                    return 2 * t - 1;

                case EOscillatorType.Noise:
                    return _random.NextDouble() * 2 - 1;

                default:
                    throw new ArgumentOutOfRangeException();
            }
        }
    }

Next comes the Oscillator class, derived from SyntageAudioProcessorComponentWithParameters<AudioProcessor>. It implements the IGenerator interface, which has the method

    IAudioStream Generate();

The oscillator gets its audio stream from the IAudioStreamProvider (the AudioProcessor), fills it, and returns it from Generate.

The oscillator has two parameters:

- wave type - WaveGenerator.EOscillatorType, an EnumParameter from Syntage.Framework

- frequency - from 20 to 20000 Hz, a FrequencyParameter from Syntage.Framework

The class code:

    public class Oscillator : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IGenerator
    {
        private readonly IAudioStream _stream; // the stream the oscillator generates into
        private double _time;

        public EnumParameter<WaveGenerator.EOscillatorType> OscillatorType { get; private set; }
        public RealParameter Frequency { get; private set; }

        public Oscillator(AudioProcessor audioProcessor) : base(audioProcessor)
        {
            _stream = Processor.CreateAudioStream(); // request a stream from the provider
        }

        public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
        {
            OscillatorType = new EnumParameter<WaveGenerator.EOscillatorType>(parameterPrefix + "Osc", "Oscillator Type", "Osc", false);
            Frequency = new FrequencyParameter(parameterPrefix + "Frq", "Oscillator Frequency", "Hz");

            return new List<Parameter> { OscillatorType, Frequency };
        }

        public IAudioStream Generate()
        {
            _stream.Clear(); // clear what was generated on the previous call

            GenerateToneToStream(); // fill the stream with the wave

            return _stream;
        }
    }

What remains is to write the GenerateToneToStream function.

, :

, - . Generate() ( , ) — "". , , "". . .

0 [ ].

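The time between two adjacent samples is timeDelta = 1/SampleRate. At a sample rate of 44100 Hz that is 0.00002267573 seconds.

So after every generated sample, _time is increased by timeDelta.

WaveGenerator.GetTableSample expects a time from 0 to 1, where 1 is one full period. The current time therefore has to be converted into a position inside the current period.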

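Consider an example.

Frequency: 440 Hz. Period: 1/440 = 0.00227272727 seconds.

The sample rate is 44100 Hz.

Take the 44150th sample.

The 44150th sample is played at 44150/44100 = 1.00113378685 seconds.

Dividing that time by the period gives the number of periods elapsed: 1.00113378685/0.00227272727 = 440.498866743.

We only need the fractional part, 0.498866743. That is the value passed to WaveGenerator.GetTableSample.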
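The arithmetic above can be checked with a few lines of C#. This is only a sketch: PhaseExample, SampleTime and Phase are hypothetical names that are not part of Syntage, and the phase is computed as time * frequency, which is the same as dividing the time by the period.

```csharp
using System;

public static class PhaseExample
{
    // Time in seconds at which the sample with the given index is played.
    public static double SampleTime(int sampleIndex, double sampleRate)
    {
        return sampleIndex / sampleRate;
    }

    // Position inside the current period, in the range [0, 1).
    public static double Phase(double time, double frequency)
    {
        double periods = time * frequency;    // number of periods elapsed
        return periods - Math.Floor(periods); // keep only the fractional part
    }

    public static void Main()
    {
        double time = SampleTime(44150, 44100.0);
        double phase = Phase(time, 440.0);

        Console.WriteLine("time  = {0:F11} s", time);
        Console.WriteLine("phase = {0:F6}", phase);
    }
}
```

The phase comes out at roughly 0.498866. It matches the hand calculation to about six decimal places; the remaining difference comes from the rounded value of the period used in the text.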

, :

This gives us the WaveGenerator.GenerateNextSample function and the GenerateToneToStream method.

    public static double GenerateNextSample(EOscillatorType oscillatorType, double frequency, double time)
    {
        var ph = time * frequency; // number of periods elapsed
        ph -= (int)ph;             // frac: keep only the fractional part
        return GetTableSample(oscillatorType, ph);
    }

    ...

    private void GenerateToneToStream()
    {
        var count = Processor.CurrentStreamLenght;        // number of samples to generate
        double timeDelta = 1.0 / Processor.SampleRate;    // time between two samples

        // get the channels once, outside the loop
        var leftChannel = _stream.Channels[0];
        var rightChannel = _stream.Channels[1];

        for (int i = 0; i < count; ++i)
        {
            // Frequency and OscillatorType are plugin parameters,
            // so their values are read again for every sample
            var frequency = DSPFunctions.GetNoteFrequency(Frequency.Value);
            var sample = WaveGenerator.GenerateNextSample(OscillatorType.Value, frequency, _time);

            leftChannel.Samples[i] = sample;
            rightChannel.Samples[i] = sample;

            _time += timeDelta;
        }
    }

An oscillator usually also has the following parameters:

- Volume

- (Fine) — . , wah-wah . , .

- Panning / stereo balance (Pan/Panning/Stereo).

— .
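One possible way to apply these extra parameters per sample is sketched below. Everything in it is an assumption made for illustration: OscillatorExtras, Detune and ApplyVolumeAndPan are hypothetical names that do not exist in Syntage, Fine is treated as a detune measured in cents, and the pan law is a simple linear one that keeps both channels at full level in the center.

```csharp
using System;

public static class OscillatorExtras
{
    // Shift a frequency by a number of cents (100 cents = 1 semitone,
    // 1200 cents = 1 octave). Assumption: Fine is expressed in cents.
    public static double Detune(double frequency, double cents)
    {
        return frequency * Math.Pow(2.0, cents / 1200.0);
    }

    // Scale a mono sample by volume and split it into left/right.
    // pan = 0 is hard left, 0.5 is center, 1 is hard right.
    public static void ApplyVolumeAndPan(double sample, double volume, double pan,
                                         out double left, out double right)
    {
        double leftGain = Math.Min(1.0, 2.0 * (1.0 - pan));
        double rightGain = Math.Min(1.0, 2.0 * pan);

        left = sample * volume * leftGain;
        right = sample * volume * rightGain;
    }
}
```

In a loop like the one in GenerateToneToStream, Detune would be applied to the frequency before the sample is generated, and ApplyVolumeAndPan to the sample before it is written to the two channels. Other pan laws (for example constant-power panning) are just as valid a choice.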

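What remains is the AudioProcessor class (which calls Generate) and the PluginController class (which creates the AudioProcessor).

Here is how this looks in Syntage. AudioProcessor uses the oscillator in two places:

- creating the parameters (the call to CreateParameters)

- calling Generate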

    public class PluginCommandStub : SyntagePluginCommandStub<PluginController>
    {
        protected override IVstPlugin CreatePluginInstance()
        {
            return new PluginController();
        }
    }

    ...

    public class PluginController : SyntagePlugin
    {
        public AudioProcessor AudioProcessor { get; }

        public PluginController() : base(
            "MyPlugin",
            new VstProductInfo("MyPlugin", "TestCompany", 1000),
            VstPluginCategory.Synth,
            VstPluginCapabilities.None,
            0,
            new FourCharacterCode("TEST").ToInt32())
        {
            AudioProcessor = new AudioProcessor(this);

            ParametersManager.SetParameters(AudioProcessor.CreateParameters());
            ParametersManager.CreateAndSetDefaultProgram();
        }

        protected override IVstPluginAudioProcessor CreateAudioProcessor(IVstPluginAudioProcessor instance)
        {
            return AudioProcessor;
        }
    }

    ...

    public class AudioProcessor : SyntageAudioProcessor
    {
        private readonly AudioStream _mainStream;

        public readonly PluginController PluginController;

        public Oscillator Oscillator { get; }

        public AudioProcessor(PluginController pluginController) :
            base(0, 2, 0) // no inputs, two outputs (stereo)
        {
            _mainStream = (AudioStream)CreateAudioStream();

            PluginController = pluginController;

            Oscillator = new Oscillator(this);
        }

        public override IEnumerable<Parameter> CreateParameters()
        {
            var parameters = new List<Parameter>();

            parameters.AddRange(Oscillator.CreateParameters("O"));

            return parameters;
        }

        public override void Process(VstAudioBuffer[] inChannels, VstAudioBuffer[] outChannels)
        {
            base.Process(inChannels, outChannels);

            // generate the oscillator samples
            var stream = Oscillator.Generate();

            // copy stream into _mainStream
            _mainStream.Mix(stream, 1, _mainStream, 0);

            // send the result to the VST output
            _mainStream.WriteToVstOut(outChannels);
        }
    }

The next article covers the ADSR envelope.

Good luck in programming!

PS — , , diy- .

Bibliography

- . What you need to know about the sound to work with it. ..

- -. . . .

- ., . — . .

- Martin Finke's Blog "Music & Programming" C++, WDL-OL.

- - Martin Finke's Blog

- ( - , ).


Source: https://habr.com/ru/post/311220/
