Analysis of the use of data redundancy as the required additional memory when sorting by the merge algorithm

Sorting algorithms

This article will discuss the comparison of some sorting algorithms implemented in C ++ for a sequence of unpacked large BCD numbers.

I conducted this analysis as a summer practice in the company “Software Technologies” .

The sortable sequence does not have a header, the numbers in it have different bit depths and are stored without alignment. There are separators between the numbers (0xFF).

')

To perform sorting using the library function, an additional level of data is introduced - a container containing pointers to memory areas, each of which contains one BCD number. In comparison, participate:

1. Merge sort;

2. Merge sort without using buffer;

3. Natural merge sorting;

4. Natural merge sort without using buffer;

5. Modified natural merge sorting;

6. Modified natural merge sort without using buffer;

7. std :: sort.

Some features of the algorithms used

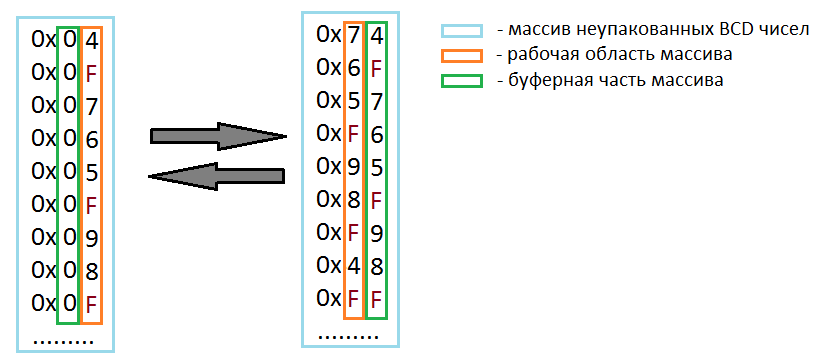

When implementing merge sorting without an additional memory buffer, 4 non-occupied 4 bits of each number in the container are used for storing temporary data (for the first iteration, the upper 4 bits, then the buffer part and the working part, respectively, are swapped). Implementations of merge sorts with and without using a buffer are ascending .

When implementing the natural sorting of the boundaries of the sorted sections, each iteration is found anew.

A modification of the natural sorting is to remember the minimum size of the sorted subsequence at the previous iteration. At the next iteration, when searching for a subsequence, this size is skipped, then the current BCD number is found (or the previous one if the transition was made to the boundary between the numbers) and then the usual search procedure for the end of the subsequence occurs, as in natural sorting.

Testing

When testing, I was guided by "this article" , for which many thanks to its author.

All tests were performed on a computer with the following characteristics:

processor - Intel core 2 duo e7400,

RAM - 4 gibibytes.

For each variable value, three measurements were taken, the graph shows the arithmetic average of all three measurements.

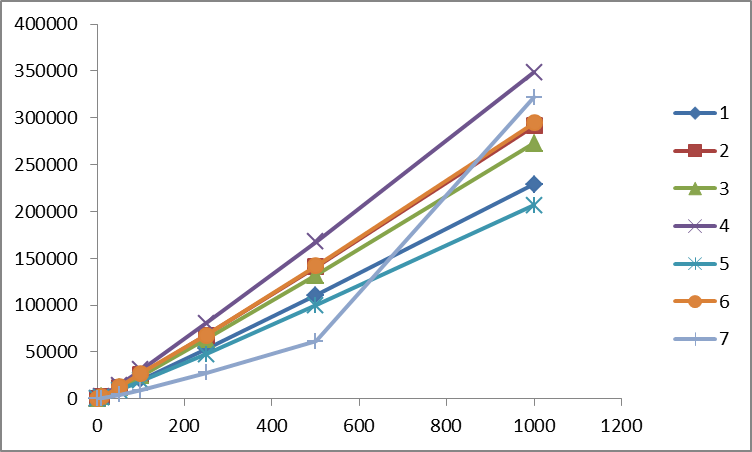

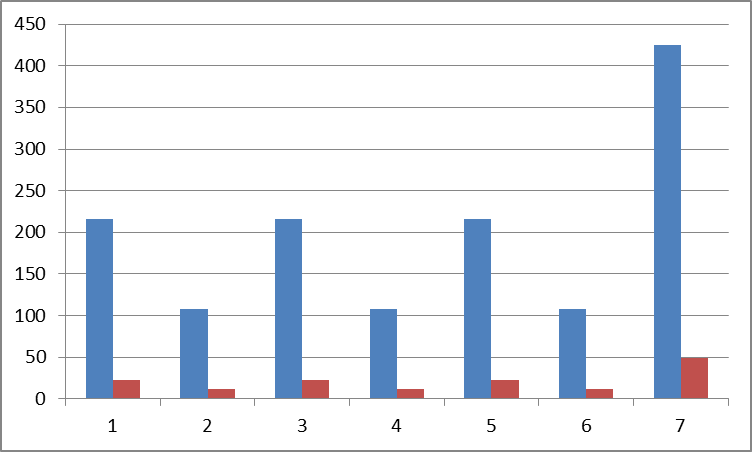

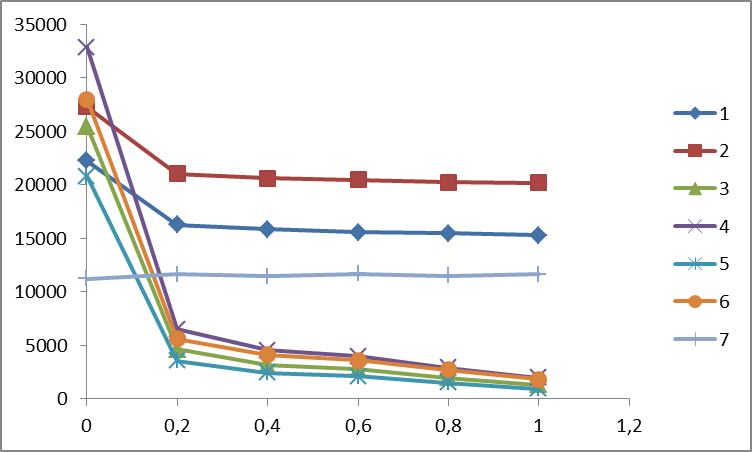

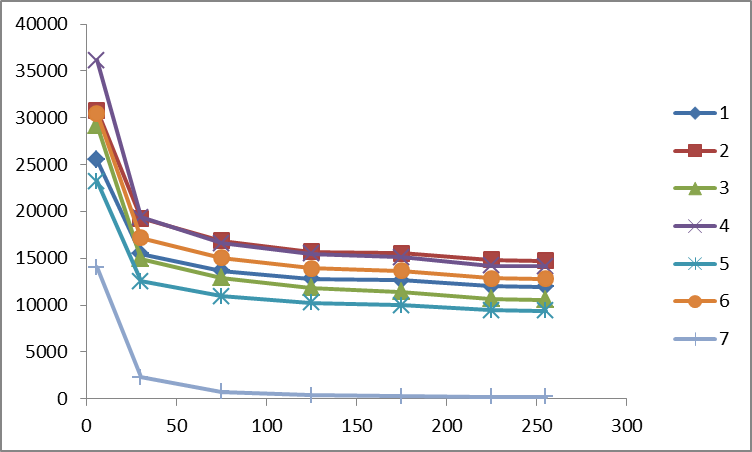

At each of the measurements at the input all sorts were supplied with equivalent data. The time is in milliseconds (on the side), the memory in megabytes (bottom). Sorting go under their ordinal numbers.

The processing speed is from 1 to 1000 MB of BCD numbers, for numbers by value, not exceeding their number in the sequence.

The processing speed is from 1 to 1000 MB of BCD numbers, for numbers on the value not exceeding MAX_INT (in this system - 2 to the power of 32).

Memory consumption for sorting 10 and 100 MB BCD numbers.

The processing speed of a container containing 100MB BCD numbers of varying degrees of sorting.

Hidden text

Sorting is defined as

sortedness = counts / count

where count is the total number of pairs of consecutive numbers in a sequence, count is the number of such pairs for which it is known that the first number in a pair is less than the second.

Thus, the sorting measure is on the interval [0, 1], and, with sortedness = 0, we get a completely random sequence, with sortedness = 1 we get a fully sorted sequence.

sortedness = counts / count

where count is the total number of pairs of consecutive numbers in a sequence, count is the number of such pairs for which it is known that the first number in a pair is less than the second.

Thus, the sorting measure is on the interval [0, 1], and, with sortedness = 0, we get a completely random sequence, with sortedness = 1 we get a fully sorted sequence.

The processing speed of a container containing 100MB BCD numbers of various digits.

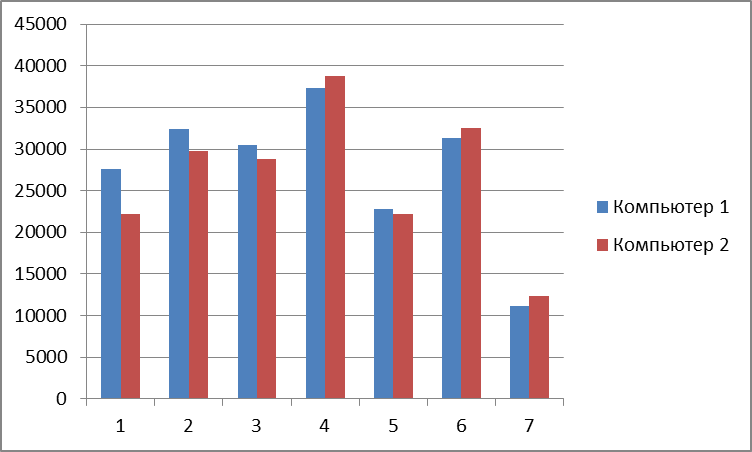

Differences in sorting time for different computers for 100 MB of BCD numbers.

Computer Features 1:

- processor - Intel core 2 duo e7400,

- RAM - 4 gibibytes.

Characteristics of computer 2:

- processor - AMD A10-7300,

- RAM - 4 gibibytes.

Conclusion

On the basis of the tests performed, it can be said that library sorting processes data of small and medium size twice as fast, but at the same time consumes much more memory, therefore, it starts to show a drop in performance on data volumes comparable to the amount of RAM.

Sorts without the use of additional buffers on average work longer than their counterparts and using additional buffers by 25%.

Natural sorting is inferior in speed to conventional merge sorting by 15%, although its modification eliminates this gap.

Source: https://habr.com/ru/post/311150/

All Articles