Android security. A lecture at Yandex

Developer Dmitry Lukyanenko, whose lecture we are publishing today, is not only a Yandex specialist but also someone who knows how to test the strength of solutions built by developers at other companies. That lets you learn from other people's mistakes, and sometimes from your own, of course. In the talk, Dmitry shares examples of Android vulnerabilities, including ones he found himself. Each example comes with recommendations on how to write Android applications and how not to.

My name is Dmitry. I work at Yandex in the Minsk office, where I develop the account manager, the library responsible for authorizing users. So today we will talk about the security of Android applications.

I would highlight the following sources of problems. The first is network-related: communication with the server, when your data is transmitted in a way that is not completely secure. The second is data storage: your data is stored somewhere in plain form, perhaps even on the SD card, or it is not encrypted; in general, it can be accessed from outside.

The third kind of problem is the impact of third-party applications on yours. This is when a third-party application can get unauthorized access to yours, perform some actions on behalf of the user, or even steal something, etc.

In this talk we will focus on the third type of problem, the impact of third-party applications. We will look not only at theory but also at specific examples from real applications. Some of these examples are still relevant; apparently the developers simply gave up on them, but I find them quite interesting.

This talk will be useful to anyone involved in Android development. I would advise everyone who works on applications to pay attention to these aspects, testers for example. It seems to me that testers who look into these nuances can earn some bonuses and will start to dig deeper into development, and they do not even need to learn much programming: it is enough to analyze the manifest file and identify potentially dangerous places. The talk is also for everyone else who is interested in security issues like these.

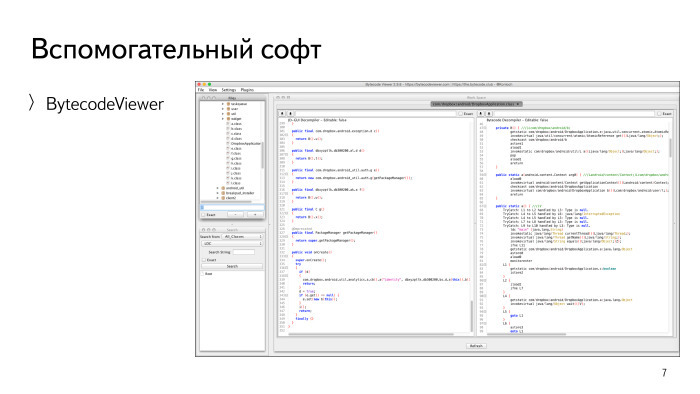

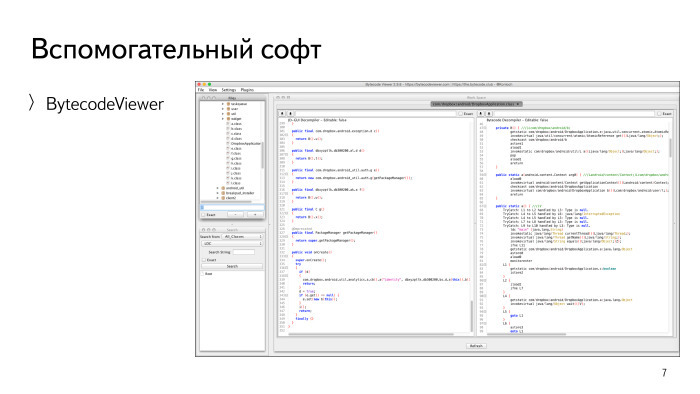

Before we begin, it is worth mentioning some software that can be useful. First of all there is BytecodeViewer, a very useful program that extracts all the sources and all the resources, including the manifest file, from an apk file.

There is also a handy utility for Android itself, ManifestViewer. It lets you quickly view the manifest files of all installed applications. I use it at work, for example when people come to me and say: the account manager does not work, what is wrong? The first thing I do is look at the manifest file to make sure the account manager has been integrated properly, that is, that all its components are declared as they should be.

It is handy for quickly opening the manifest file and looking at the entry points, that is, the attack vectors.

If you prefer to do everything from the console, here are the same tools separately. apktool pulls out all the resources and the manifest file. Dex2jar and enjarify convert an apk file to a jar. Dex2jar fails on some apk files and throws an exception. Do not despair: enjarify often handles those files, and you still get a jar. Both are good utilities.

Once you have jar files, you can run a Java decompiler and convert them into a more convenient form: classes. Obviously they cannot be run, but they are much easier to analyze than the code you get otherwise.

Let's look at the most basic problems. First of all we need to analyze the manifest file, because all the components of an Android application must be declared in it: Activity, Service, BroadcastReceiver, ContentProvider. All of them must be there, with the exception of BroadcastReceiver, which can also be registered at runtime.

So when we start looking for problems, we look at these components. Here is a specific example: a service declared in the manifest file of one application. The manifest explicitly states that it is exported, which means it is available to any third-party application. Any other application can send an intent to this service, and the service will perform whatever actions it implements.

Here we see a potential attack vector. If we dig deeper and look at the sources, it becomes clear that this service lets you, say, delete the authorized user in this program. I doubt the developers intended that feature for third-party applications. It is a concrete example of something that should be fixed.

One more thing: when a component declares intent filters, it also becomes exported by default. Novice developers may stumble here. If there is an intent filter, that is it: the component is already public. Be attentive here.
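The manifest review described above can be sketched in plain Java. This is only an illustration under stated assumptions: the class name `ManifestAudit` and the method `findExported` are hypothetical, the input is a manifest already decoded to text XML (for example by apktool), and the rule applied is the simple one from the talk (an explicit `android:exported` value wins, otherwise an intent filter makes the component public). It ignores permissions and platform-version differences.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: lists components that other applications can reach.
class ManifestAudit {
    private static final String[] COMPONENT_TAGS = {"activity", "service", "receiver", "provider"};

    static List<String> findExported(String manifestXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // The manifest comes from an untrusted apk, so refuse DOCTYPE declarations.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(manifestXml.getBytes(StandardCharsets.UTF_8)));

            List<String> exported = new ArrayList<>();
            for (String tag : COMPONENT_TAGS) {
                NodeList nodes = doc.getElementsByTagName(tag);
                for (int i = 0; i < nodes.getLength(); i++) {
                    Element component = (Element) nodes.item(i);
                    // getAttribute returns "" when the attribute is absent.
                    String flag = component.getAttribute("android:exported");
                    boolean hasFilter = component.getElementsByTagName("intent-filter").getLength() > 0;
                    // Explicit value wins; otherwise an intent filter implies "exported".
                    boolean isExported = flag.isEmpty() ? hasFilter : Boolean.parseBoolean(flag);
                    if (isExported) {
                        exported.add(tag + " " + component.getAttribute("android:name"));
                    }
                }
            }
            return exported;
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse manifest", e);
        }
    }
}
```

Every entry in the returned list is a candidate entry point whose source is worth reading; a component that does not need to be public can be declared with `android:exported="false"`.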

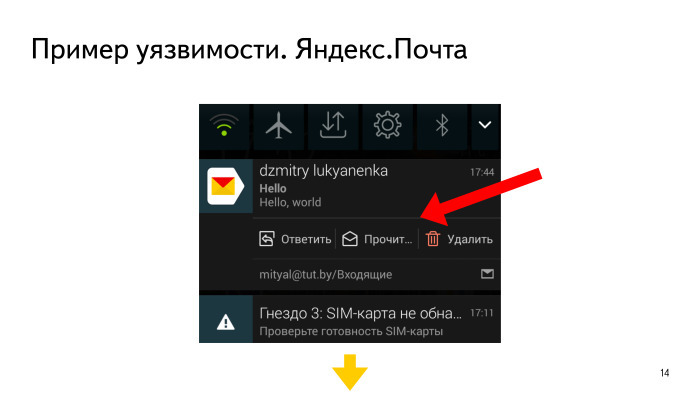

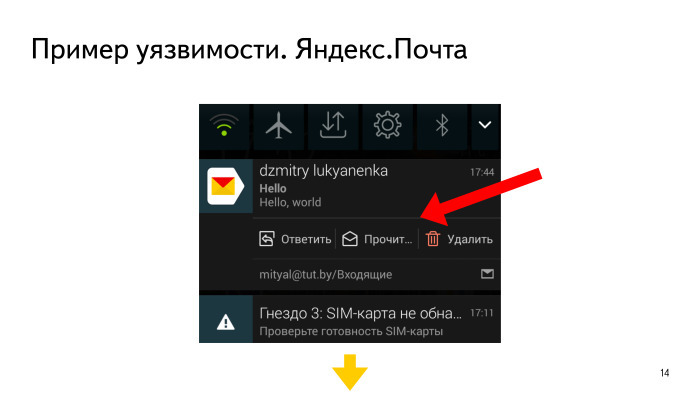

I have run into this kind of problem where actions can be performed directly from a notification shown in the notification panel. For example, this happened in Yandex.Mail and other applications.

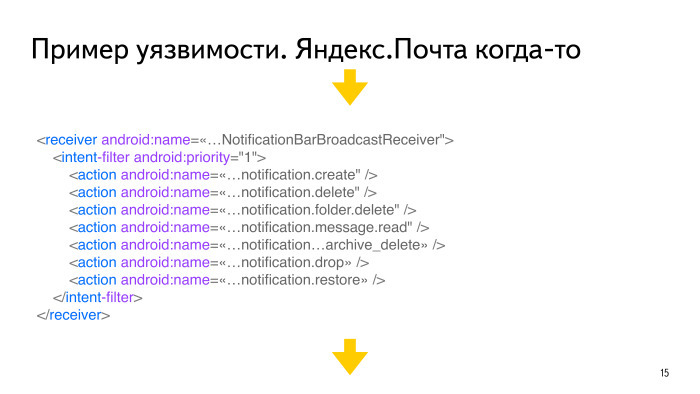

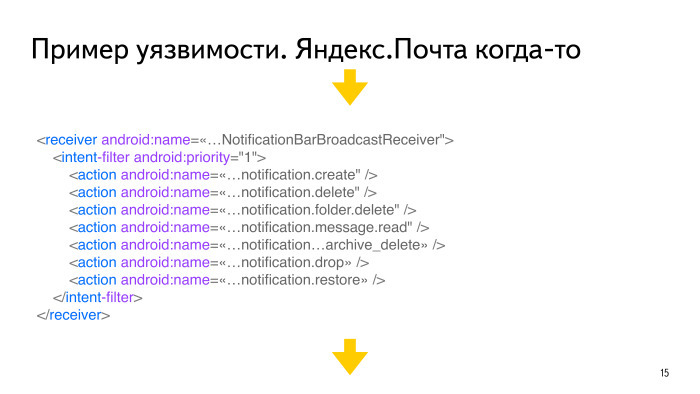

How was this action handled? It was processed by a public BroadcastReceiver. That means an attacker could send the same broadcast too: pick the ID of a message and, say, delete it. And it did not have to be the message shown in the panel.

Yandex.Mail once had exactly this vulnerability. It has long been fixed, so you can use the app, everything is fine. Thanks to Ildar: his team fixes such things quickly.

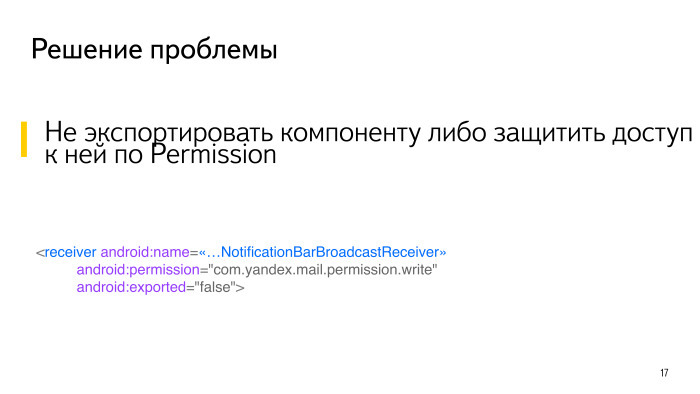

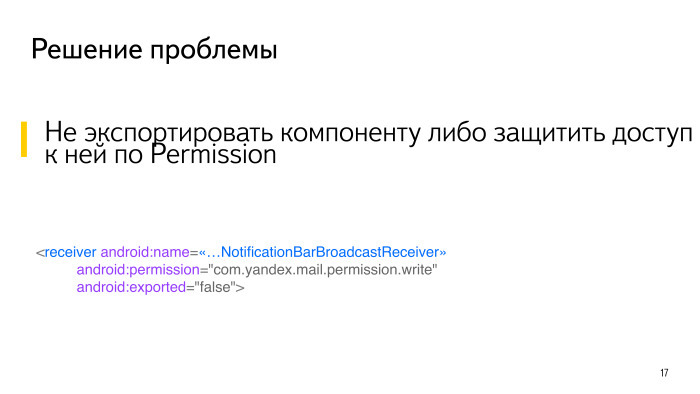

How was this fixed? The component was simply made inaccessible from outside, and a permission was added as well. The permission was not strictly necessary: the main thing is that if a component is not exported, nobody can connect to it and do anything.

The next type of problem is the use of implicit intents, where the intent does not name the specific component it should be delivered to. That means you have no guarantee that the intent will arrive where you want. It may end up somewhere else, so you have to be careful here.

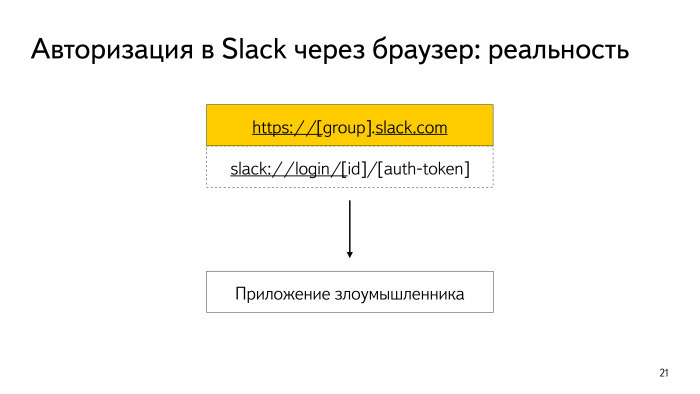

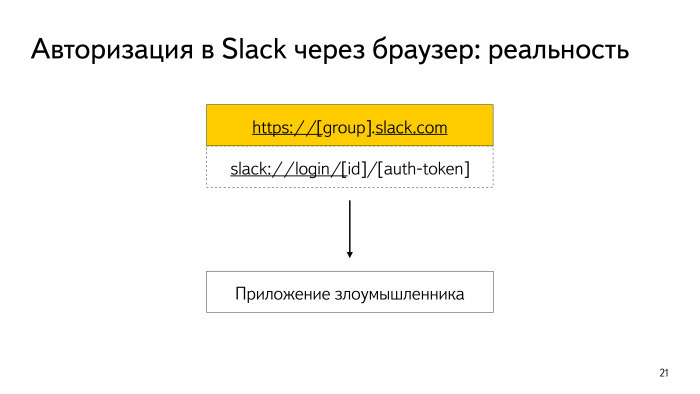

Another nuance that I would group with implicit intents is the browsable Activity, which lets a third-party application be opened from the browser. It works when an activity declares a scheme and a host: the user taps a matching link and the application window opens. When the window just opens and is passed, for example, a position on the map, that is not scary. What is scary is the following. Suppose some colleagues propose using Slack at your company. Slack has a very interesting authorization flow. If you are logged in to Slack in the browser on Android, you can log in to the installed Slack application as well. It works through this same mechanism: there is a URL in the browser, you tap it, and the Slack application intercepts it.

But there is no guarantee that the URL is not intercepted by someone else, and the token is transmitted through it. The scheme is quite simple: you tap in the browser and are immediately authorized in the Slack application. In other words, this URL alone is sufficient for authorization.

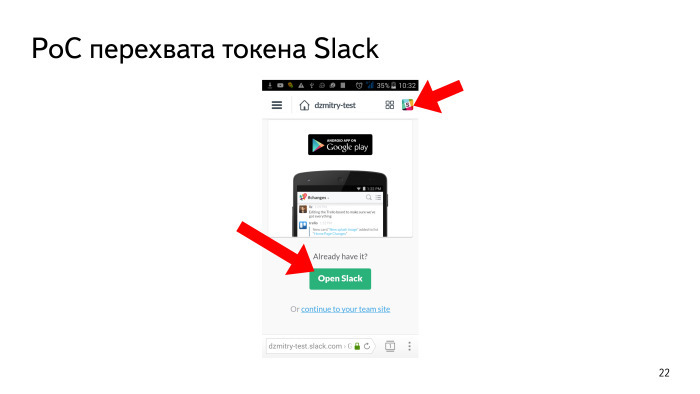

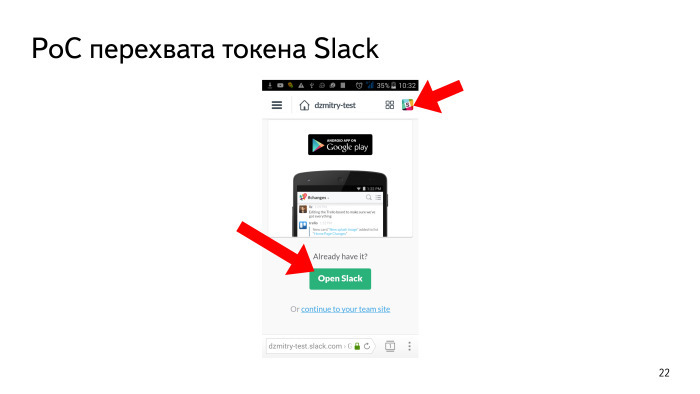

There are buttons in the browser that trigger this opening. It is not only "Open Slack" but also the logo, which is always at the top of every screen.

Let's watch a video that demonstrates how this problem can be exploited. We logged in, went to our directory, and tapped the Slack logo, but what opens is not the Slack application: the attacker has registered the same intent filter. We went back and tapped again... You can do phishing here with a screen that looks exactly like Slack and says that the authorization did not work, and so on. The user will not understand what happened, and the token will be yours. So if you use Slack, be careful with this browser authorization.

The solution is very simple. As my colleague said, you can use explicit intents that are opened from the browser. But when I reported this to Slack, my ticket got a number around 80,000, and they replied that it was a duplicate of a ticket numbered around 5,000. By that estimate they had been told about it more than six months earlier. It seems rather simple to fix, but they have not fixed it. So they do not consider it a critical problem, which means it can be talked about.

The recommendation is that it is always better to use explicit intents. If you read up on the subject, you will find that Android itself had a funny slip: an implicit pending intent was used in one place, and because of it you could gain access with system privileges. These errors were fixed in Android 5. Everyone makes such mistakes, even Google.

It is always better to use explicit intents or, if that is not possible, to always require some permission. That lets you restrict access.

Consider another type of vulnerability, one based on user-supplied data.

Some applications accept data from users: they can send a file, open it, view it. This is usually done with intents such as VIEW and SEND.

A malicious application can tell yours: show me the file that you keep in your private directory, in your sandbox. That file could be your database with all the correspondence, or the preferences where tokens are stored. Some applications then perform actions on these files. For example, they cache the file on the SD card or send it somewhere. In particular, the action may make the file available to the attacker, even though the developers assumed it would only ever be an internal file.

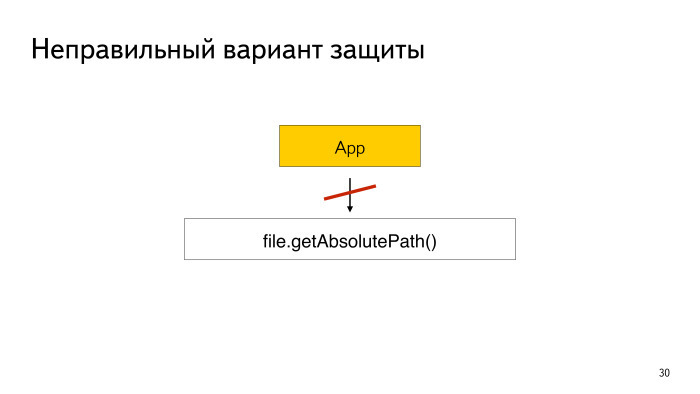

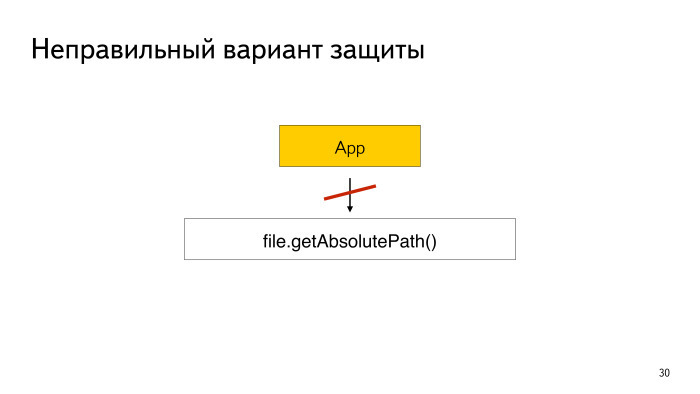

You can call `getAbsolutePath()` and check whether the directory matches your private one. But that is not enough.

An attacker may send you not the file itself but a symbolic link to it.

How is such a link created? The attacker creates readme.txt as a link to a private file of your application and then passes this link to you. If you use `getAbsolutePath()`, you get back the path of the link itself, the path to readme.txt, and you think everything is fine.

To fix this, it is better to use the `getCanonicalPath()` method. It returns the full, real path. In this case the system returns not readme.txt but the real target, for example some mailbox. Keep this in mind.
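The difference between the two calls can be shown with a small plain-Java sketch. The class `PrivateDirCheck` and both method names are hypothetical; the only assumption is that an incoming file must be rejected when it really lives inside the application's private directory.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical helper comparing the naive and the canonical check.
class PrivateDirCheck {

    // Naive: looks only at the path the caller handed us.
    // A symbolic link on the SD card passes this check unnoticed.
    static boolean naiveCheck(File candidate, File privateDir) {
        String root = privateDir.getAbsolutePath() + File.separator;
        return candidate.getAbsolutePath().startsWith(root);
    }

    // Canonical: resolves symbolic links and ".." before comparing,
    // so the real location of the file is what gets checked.
    static boolean pointsIntoPrivateDir(File candidate, File privateDir) {
        try {
            String root = privateDir.getCanonicalPath() + File.separator;
            return candidate.getCanonicalPath().startsWith(root);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

An application that caches or forwards incoming files would refuse any file for which `pointsIntoPrivateDir` returns true.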

I came across one application with more than 50 million users that had a similar problem. You tell it: show me this file. It caches the file on the SD card and then tries to open it. That way it was possible to steal all the tokens. They have already fixed everything but asked me not to disclose the details yet. Still, this does happen.

The next interesting point: instead of a file you can pass a link to a content provider. Even if the provider is protected by a permission, even if it is not exported, it makes no difference: when your application tries to open the link, the query method of the content provider will be executed.

You have to be careful here. Here is another real example, from a different application. They made a database backup from the query method. You could pass an action of type backup, and the program immediately copied the entire database to the SD card. So the provider is protected, but you can slip it a link in such a way that you end up with the entire database.

I have not seen anyone else write data in the query method; it is always better for query only to open something for reading. Also keep in mind that SQL injection is possible there. Right now there are a number of applications in which I have found a possible SQL injection. They have read-only access, though, and I have not yet figured out whether anything further can be done with it. But what if there is a vulnerability in SQLite itself that allows an injection that is not so harmless? It is better not to allow injections at all, even if you only open read access.
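One common way to keep a query method free of injection is to allow only known column names and to pass values as bound arguments. The following plain-Java sketch illustrates the idea; the class `SafeQueryBuilder`, the table name `bookmarks`, and the column list are all hypothetical, and this is not the code of any application mentioned in the talk.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical helper: builds a SELECT from caller input without
// ever concatenating untrusted text into the SQL string.
class SafeQueryBuilder {
    // Assumed schema for illustration only.
    private static final Set<String> ALLOWED_COLUMNS =
            new LinkedHashSet<>(Arrays.asList("_id", "title", "url"));

    private static String requireAllowed(String column) {
        if (column == null || !ALLOWED_COLUMNS.contains(column)) {
            throw new IllegalArgumentException("Unknown column: " + column);
        }
        return column;
    }

    // The filter value itself is NOT part of the string: it is represented
    // by "?" and must be supplied separately as a bound argument.
    static String buildSelect(String[] projection, String filterColumn,
                              String sortColumn, boolean descending) {
        if (projection == null || projection.length == 0) {
            throw new IllegalArgumentException("Projection must not be empty");
        }
        StringBuilder sql = new StringBuilder("SELECT ");
        for (int i = 0; i < projection.length; i++) {
            if (i > 0) {
                sql.append(", ");
            }
            sql.append(requireAllowed(projection[i]));
        }
        sql.append(" FROM bookmarks WHERE ")
           .append(requireAllowed(filterColumn)).append(" = ?")
           .append(" ORDER BY ").append(requireAllowed(sortColumn))
           .append(descending ? " DESC" : " ASC");
        return sql.toString();
    }
}
```

In a ContentProvider the same idea applies to the `projection`, `selection` and `sortOrder` parameters of `query`: identifiers are checked against a fixed list, and values travel through `selectionArgs`.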

Now let's consider one problem with WebView. It works on all Android versions up to 5.0 and lets you bypass the same-origin policy (SOP). The conclusion from it is that it is better not to let anyone open their own files in your WebView.

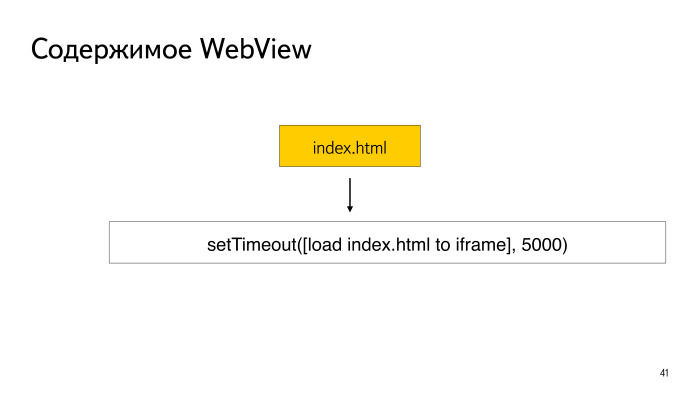

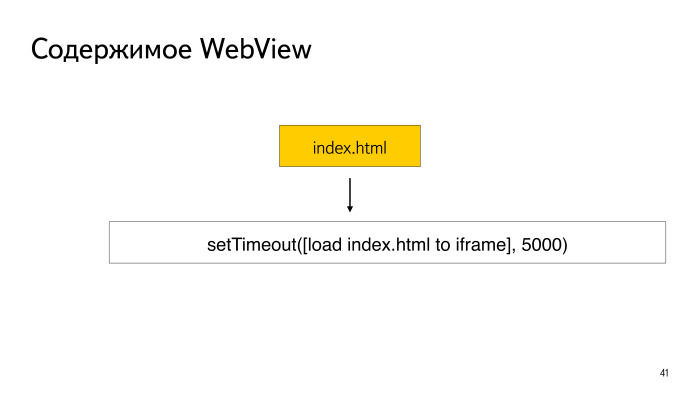

Consider this type of attack. If your application gives access to a WebView, a malicious application can ask it: show me this index.html file. Suppose your application opens index.html. The content of the file is very simple: JavaScript that, after 5 seconds, loads the same file into an iframe, in effect reloading it.

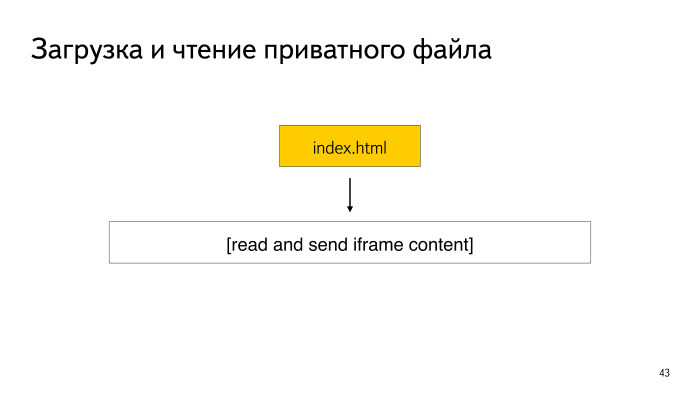

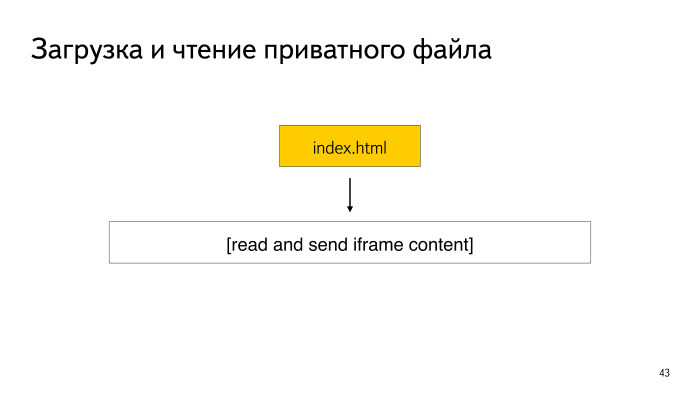

During this interval the malicious application deletes the file and replaces it with a symbolic link to a private file of your application. The 5 seconds pass, the page reloads, and its content is now the content of that private file. On Android before 5.0, or more precisely in the WebView of that time, the check for symbolic links is apparently incorrect. That is how the content can be read. The check sees that the file names match, so the SOP is considered not violated, the content is handed over, and JavaScript can take it and send it to a server.

This type of attack is not limited to WebView. It still works in UC Browser, which has more than 100 million installations. I have also seen it in some versions of the stock Android browser, up to Android 5.0; it is probably built on WebView too. For example, I could not reproduce it on Samsung phones, but on my Acer phone it works.

Watch a short video of how this works in UC Browser. There is a small malicious application on the phone, and it can act at any time. You have gone to bed, the phone is asleep too, and suddenly an activity starts and a page opens. You are asleep, and on the phone things are happening: an iframe, a confirmation, an alert. If the alert could read the content, then we could also send that content anywhere. This is bookmark.db, where the bookmarks are stored. Anyone interested can look further; it may be possible to find more private user data there. You can steal cookies too, but their cookie files are named after the site, so you have to go through the names; VKontakte's, for example, can be taken. The vulnerability is still there: it seems they have not fixed it yet and are in no hurry to do so.

Conclusion: if you have a WebView, do not let anyone open their links in it. And if you do allow it, at least prohibit opening local files. The Qiwi team did well in this regard: I tried their application, excited that I was about to open a file, but no, they had forbidden opening local files and nothing could be done.
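A minimal sketch of such a restriction, written as plain Java so that it can be run outside Android: the class `WebViewUrlPolicy` and the method `isSafeToLoad` are hypothetical, and the rule (only `http` and `https` links with a host) is an assumption chosen for illustration. On Android it would be a check made before handing an externally supplied URL to the WebView, and it can be combined with `WebSettings.setAllowFileAccess(false)`.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;

// Hypothetical policy: decide whether an externally supplied URL
// may be opened in the application's WebView.
class WebViewUrlPolicy {

    static boolean isSafeToLoad(String url) {
        if (url == null) {
            return false;
        }
        try {
            URI uri = new URI(url.trim());
            String scheme = uri.getScheme();
            if (scheme == null) {
                return false; // relative paths such as "index.html"
            }
            String normalized = scheme.toLowerCase(Locale.ROOT);
            // Rejects file:, content:, javascript: and anything else unexpected.
            boolean webScheme = normalized.equals("https") || normalized.equals("http");
            return webScheme && uri.getHost() != null;
        } catch (URISyntaxException e) {
            return false; // malformed input is never loaded
        }
    }
}
```

With this policy a request to show a local index.html is refused before the WebView ever sees it.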

Consider the next, nontrivial kind of problem. It is based on how deserialization works in Android. It may be useful to plain Java developers too.

Imagine an intent that carries some data to our application, and the application reads one field from it. As soon as the application reads at least one field, all the data in the intent is automatically deserialized. Even the data that is never used is still deserialized. You will see shortly why this is dangerous.

Android before 5.0 adds a twist: on some versions, deserialization does not check that the class actually implements the serialization interface.

This means that any class in your application may be vulnerable. They may not be serializable, but if they meet the criteria described next...

So what is dangerous here? If an object has been created, it will be destroyed at some point. Usually the finalize method is called, and there is nothing dangerous in that: nothing is implemented there. With native development, however, finalize usually calls a destructor on the native side and passes a pointer to it. I have not done native development myself, but I have looked at how pointers are passed there. If this pointer is serialized, an attacker can substitute their own, which can either cause an out-of-bounds memory error or point to other memory that is then taken and executed as code.

This was used by researchers from IBM. They analyzed the whole list of classes in the Android platform, more than a thousand of them or however many there are, and found only one that met the criteria: it was serializable, and it serialized a native pointer. They took this class and substituted their own pointer, which was serialized and deserialized; it was not created anew each time. They made a proof of concept, and there is a video of the demonstration. Using this class, the vulnerability let them send an intent to a system application. That allowed them, for example, to remove the original Facebook application and replace it with a fake one. The video lasts about 7 minutes, because you have to wait until finalize is called and the memory is reclaimed. The conclusion is that you should never serialize or deserialize a native pointer.
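In plain Java, the usual way to keep a native pointer out of the serialized form is to mark the field `transient`. The sketch below illustrates that idea only; the class `NativeBackedObject` and its fields are hypothetical and are not the platform class from the IBM research.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical class that wraps a native resource.
class NativeBackedObject implements Serializable {
    private static final long serialVersionUID = 1L;

    private final String label;
    // transient: the pointer is never written to or read from the stream,
    // so an attacker cannot supply a value for it.
    private transient long nativeHandle;

    NativeBackedObject(String label, long nativeHandle) {
        this.label = label;
        this.nativeHandle = nativeHandle;
    }

    String label() { return label; }
    long nativeHandle() { return nativeHandle; }

    private void readObject(ObjectInputStream in) throws IOException, ClassNotFoundException {
        in.defaultReadObject();
        // Start without a native resource; a real class would allocate a fresh one itself.
        nativeHandle = 0L;
    }

    // Serializes and deserializes the object in memory.
    static NativeBackedObject roundTrip(NativeBackedObject source) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(source);
            }
            try (ObjectInputStream in =
                         new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (NativeBackedObject) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

After a round trip the label survives, while the handle is reset, so a finalizer never receives a pointer that came from outside the process.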

To summarize: it is better never to export the components of your application, or else to restrict access with a permission. For intents, it is always better to use explicit ones or to set a permission. Never trust data that comes from the user: it can point anywhere, even to your private areas, which can then be damaged. Treat ContentProvider with care, including SQL injection and so on.

WebView is not a toy at all. If your application has one, lock it down and do not let anyone play with it.

Serialization, or rather native development, is like matches: be careful with it. Thank you for your attention.

Source: https://habr.com/ru/post/310926/
