How to rewrite an SDK in TypeScript, upgrade the platform, and regret nothing

We have a new version of the Web SDK: v4. For now it is only a public beta, but it is already stable for most everyday cases. We tried to keep the new version backward compatible.

The platform has been updated too, to v3. There is a lot that is new and interesting, and everything works faster and is more fun. Details below.

As you can see, it's a double strike! Under the cut: what came out of 6 months of cross-debugging, continuous improvement and pain. Spoiler: no more ancient Flash. Only pure WebRTC + ORTC.

New stuff in the SDK

We rewrote everything in TypeScript. Literally every line and function of the SDK, from scratch. The main reason was maintainability: TypeScript is much easier to maintain than vanilla JS. Thanks to this risky step, we can ship updates to you more often. In addition, we will soon publish new tsd (type definition) files for your favorite language.

The SDK grew by only 12 KB. That was the price of moving to TypeScript. It is a small price if you make voice and video calls. If the 111 KB size bothers you, I recommend loading the script asynchronously. In fact, I advise always loading our script asynchronously: extra moments of waiting for your users upset us too.
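For those who have not set up asynchronous loading before, here is a minimal sketch of the general technique. It is not taken from our docs: the function name `loadScriptAsync` and the two small interfaces are assumptions made for illustration, so that the code does not depend on browser typings.

```typescript
// Minimal structural types (assumed for this sketch) so the code
// compiles without DOM typings and can be exercised with a fake document.
interface ScriptLike {
  src: string;
  async: boolean;
  onload: (() => void) | null;
  onerror: (() => void) | null;
}

interface DocumentLike {
  createElement(tag: "script"): ScriptLike;
  head: { appendChild(node: ScriptLike): unknown };
}

// Inject a <script> element that downloads without blocking page rendering.
// The returned promise resolves once the script has loaded and rejects on error.
function loadScriptAsync(doc: DocumentLike, url: string): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    const script = doc.createElement("script");
    script.src = url;
    script.async = true;
    script.onload = () => resolve();
    script.onerror = () => reject(new Error("Failed to load " + url));
    doc.head.appendChild(script);
  });
}
```

In a browser, the global `document` plays the role of `doc` (a cast may be needed because the interfaces here are deliberately minimal), and the URL is whatever address you load the SDK from.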

We build it all with webpack. The previous version of the SDK was built with Gulp, sh and divine help. We needed stable autodetection and easy configuration, automatic documentation builds, npm module builds, tsd, automatic versioning... I could go on forever, and anyone can add a couple of items to this list from their own DevOps wishes. It turned out that webpack could do everything we wanted. And yes, it is fast.

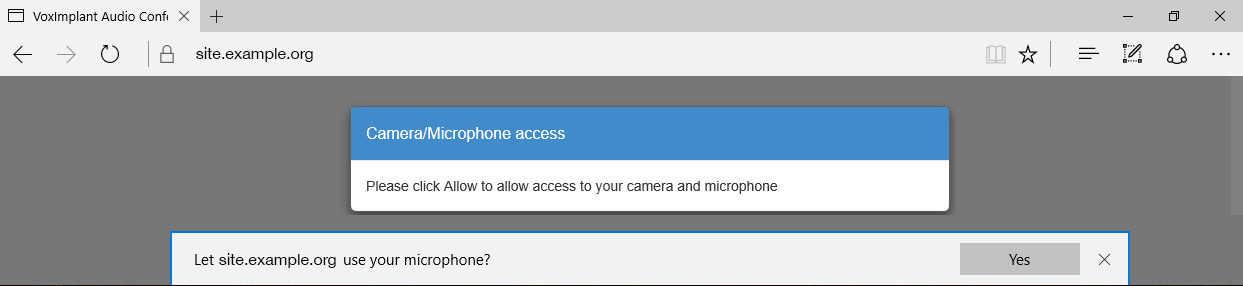

Audio calls to and from Microsoft Edge are now available. ORTC instead of WebRTC, no solutions on StackOverflow (Ctrl+C -> Ctrl+V could not save us), 7 circles of MS support hell, undocumented errors. You can read about the pain and suffering of one particular programmer with one particular Hedgehog in my previous article. It took a decent part of the development cycle to forge the gauntlets for our SDK and give you a comfortable API. As a result, you can talk to Edge users, and they can talk to you. Soon VP9 will arrive in Edge, and then you will be able to see as well.

You can prioritize the H.264 codec or set priorities for other codecs. Mobile devices typically have hardware H.264 encoding/decoding and software VP8 encoding/decoding. If you call from Chrome with default priorities, VP8 will be selected. Software rendering. Anyone who has played games with it knows right away what a pain that is. Unfortunately, browsers often have it exactly the other way around. Codec priorities let you make energy-efficient calls to mobile applications from our Web SDK, or tune the codec order for exotic hardware and cases.
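To show what "priority" means at the protocol level, here is a minimal sketch of the generic technique: the order of payload types in the `m=video` line of the SDP expresses codec preference, so moving the H.264 payload types to the front asks the other side to prefer it. This is not the SDK's API; the function name `preferVideoCodec` is an assumption for illustration, and the sketch only handles the first video section.

```typescript
// Move the payload types of the preferred codec to the front of the
// first m=video line. Codec names are matched via a=rtpmap attributes.
function preferVideoCodec(sdp: string, codec: string): string {
  const lines = sdp.split("\r\n");

  // Find the first video media section.
  let mIndex = -1;
  for (let i = 0; i < lines.length; i++) {
    if (lines[i].indexOf("m=video ") === 0) { mIndex = i; break; }
  }
  if (mIndex === -1) return sdp; // no video section, nothing to do

  // The section ends at the next m= line or at the end of the SDP.
  let end = lines.length;
  for (let i = mIndex + 1; i < lines.length; i++) {
    if (lines[i].indexOf("m=") === 0) { end = i; break; }
  }

  // Collect payload types whose rtpmap entry names the wanted codec.
  const preferred: string[] = [];
  const rtpmap = /^a=rtpmap:(\d+) ([^/]+)\//;
  for (let i = mIndex + 1; i < end; i++) {
    const match = rtpmap.exec(lines[i]);
    if (match && match[2].toLowerCase() === codec.toLowerCase()) {
      preferred.push(match[1]);
    }
  }
  if (preferred.length === 0) return sdp; // codec not offered

  // m=video <port> <proto> <pt> <pt> ...
  const parts = lines[mIndex].split(" ");
  const header = parts.slice(0, 3);
  const payloadTypes = parts.slice(3);
  const first = preferred.filter((pt) => payloadTypes.indexOf(pt) !== -1);
  const rest = payloadTypes.filter((pt) => preferred.indexOf(pt) === -1);
  lines[mIndex] = header.concat(first, rest).join(" ");
  return lines.join("\r\n");
}
```

Applied to an offer before it is sent, `preferVideoCodec(offerSdp, "H264")` leaves the audio section untouched and only reorders the video payload types.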

You can turn video on and off during a call. This is renegotiation, comrades! Renegotiation is a repeated exchange of your browsers' capabilities at the moment you turn video on or off. Let me explain using Pavels. Suppose Pavel A. makes a voice call to Pavel B. The two Pavels chat happily in their browsers. Now Pavel B. wants to show his new two-headed turtle to Pavel A. Without renegotiation, they would have to end the audio call and start a video call. Now the Pavels do not have to interrupt anything. True, they will never even know about renegotiation: Pavel B. presses a button and Pavel A. sees him, by the power of our SDK! On top of that, one of the Pavels can show his video while the other does not. Just like in Skype.

Hold / Unhold is now done by means of WebRTC and works in Peer-To-Peer mode. Hold is when you put a call on hold and, as if by magic, audio and video stop flowing. Unhold is when you take a call off hold and, just as magically, everything flows again. The old version of the SDK had this functionality too, but there our server acted as the barrier between the audio streams, so the traffic kept flowing. Now it doesn't. Your browser tells the server or the other client that you are temporarily suspending the exchange, and everyone is happy. Less traffic and longer battery life.
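How can a browser "tell" the other side that the exchange is suspended? In the SDP offer/answer model this is conventionally done with the media direction attribute. The sketch below shows that generic mechanism and is not a description of our SDK's internals; the names `setMediaDirection`, `hold` and `unhold` are assumptions for illustration, and using `inactive` (nothing flows in either direction) rather than `sendonly` is also an assumption.

```typescript
// Direction attributes defined for SDP media sections.
type Direction = "sendrecv" | "sendonly" | "recvonly" | "inactive";

// Replace every direction attribute in the SDP with the given one.
function setMediaDirection(sdp: string, direction: Direction): string {
  const directionLine = /^a=(sendrecv|sendonly|recvonly|inactive)$/;
  return sdp
    .split("\r\n")
    .map((line) => (directionLine.test(line) ? "a=" + direction : line))
    .join("\r\n");
}

// Hold: ask that no media flows in either direction.
function hold(sdp: string): string {
  return setMediaDirection(sdp, "inactive");
}

// Unhold: restore two-way media.
function unhold(sdp: string): string {
  return setMediaDirection(sdp, "sendrecv");
}
```

The rewritten SDP would then go through an ordinary renegotiation, which is why hold no longer needs a server in the middle of the media path.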

Filters and masks for bringing your ideas to life. You can take the incoming audio stream and turn it into a chipmunk voice. Take the outgoing one and send Darth's voice, adding compression and mitola at the same time! You can take a video stream, run it through a canvas and a neural network, and add a mustache in the style of Picasso. You decide! Build your own MSQRD in the browser!

New stuff on the server side

Audio and video latency has decreased. We changed the call scheme. RTP traffic now goes through one server, whereas earlier it went through a chain of up to three servers. Tests showed the delay reduced by 180 ms in both directions.

Improved behavior on bad networks for Chrome. We added support for REMB. This technology lets you control picture and sound quality on weak channels. It helps against stuttering and audio dropouts on weak networks. The technology is smart: it will not degrade signal quality on a good connection. And if the connection quality fluctuates, it adapts.

Whether a call goes P2P or via the server is now chosen only in the server script. Our platform can work in 2 modes: Peer-To-Peer and through our media server. In Peer-To-Peer mode, media traffic goes directly from subscriber to subscriber. Neither we nor you will see it, and it cannot be recorded or sent to the telephone network, for example. In the server scenario, everything goes through our server, with all the benefits. Previously, client applications for these two scenarios were different: you had to pass the X-DirectCall flag at the start of the call and also handle everything correctly in the VoxEngine script. Now everything is easier. The decision is made in the VoxEngine script. When a call starts, you can determine whether you need a server call or whether Peer-To-Peer is enough, and connect the subscribers accordingly.
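The decision itself follows directly from the trade-off above: anything that needs the media (recording, sending to the telephone network) requires the server, everything else can go Peer-To-Peer. A minimal sketch of that logic is below. Every name in it (`CallMode`, `CallRequirements`, `chooseCallMode`) is hypothetical and is not part of the VoxEngine API; a real script would use the platform's own calls to actually connect the subscribers.

```typescript
// Hypothetical types for this sketch, not part of VoxEngine.
type CallMode = "p2p" | "server";

interface CallRequirements {
  record: boolean; // the call must be recorded
  toPstn: boolean; // the call must reach the telephone network
}

// Peer-To-Peer media is invisible to the platform, so any feature that
// needs access to the media forces the call through the media server.
function chooseCallMode(req: CallRequirements): CallMode {
  return req.record || req.toPstn ? "server" : "p2p";
}
```

For example, a plain browser-to-browser chat would come out as `"p2p"`, while the same chat with recording enabled would come out as `"server"`.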

Audio and video tracks are now synchronized in Firefox and Chrome. On the cloud side, the synchronization context is selected intelligently and delays are calculated from RTCP statistics. The browser then uses the synchronization context to pair audio and video correctly. A small thing, but nice: no more watching the past while listening to the present.
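For the curious, here is a sketch of the standard idea behind RTCP-based synchronization, as described in the RTP specification, and not our server's actual code. An RTCP sender report pairs a wall-clock time with an RTP timestamp, which lets you place any packet of that stream on a common timeline. The names `SenderReport`, `rtpToWallClockMs` and `avOffsetMs` are assumptions for illustration, and the wall-clock time is simplified to milliseconds.

```typescript
// The pair carried by an RTCP sender report: the sender's wall-clock
// time (simplified to milliseconds here) and the RTP timestamp that
// corresponds to that same instant.
interface SenderReport {
  ntpMs: number;
  rtpTimestamp: number;
}

// Map an RTP timestamp of a stream onto the sender's wall clock.
function rtpToWallClockMs(rtpTs: number, sr: SenderReport, clockRate: number): number {
  // RTP timestamps are 32-bit and wrap around; "| 0" turns the
  // difference into a signed 32-bit value, which handles the wrap.
  const ticks = (rtpTs - sr.rtpTimestamp) | 0;
  return sr.ntpMs + (ticks * 1000) / clockRate;
}

// How much later (in ms) the video frame was captured than the audio
// packet. A receiver can delay one of the streams by this amount.
function avOffsetMs(
  audioTs: number, audioSr: SenderReport, audioRate: number,
  videoTs: number, videoSr: SenderReport, videoRate: number
): number {
  return rtpToWallClockMs(videoTs, videoSr, videoRate)
       - rtpToWallClockMs(audioTs, audioSr, audioRate);
}
```

Audio commonly runs on a 48000 Hz clock (Opus) and video on a 90000 Hz clock, which is why the two streams cannot be compared by RTP timestamps alone.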

Battle losses

We removed the old messaging API. A little spoiler: we are making it better, fresher, faster and sexier. Soon, when the team lead gives the go-ahead, it will go into public beta. In the meantime, there is temporarily nothing in its place.

We removed Flash. Not from the whole web, we cannot do that (unlike Google, which is actively working on it), but only from our SDK. Although our joy knows no bounds, it is a little sad too. Dedicated Safari and IE users will have to use the Temasys plugin to emulate WebRTC. If your users are that very 4.9% of Runet, I recommend waiting a bit until they update, or using the old version of our SDK.

In conclusion, I want to say that we have updated the documentation and opened a Gitter channel, where you can quickly get technical support for the new version of the SDK. Try it out.


Source: https://habr.com/ru/post/310740/
