GitLab CI: Learning to Deploy

This article tells the success story of an imaginary news portal, of which you are the happy owner. Fortunately, your project code is already hosted on GitLab.com, and you know that you can use GitLab CI for testing.

Now you are wondering whether you can go further and use CI to deploy the project as well, and if so, what opportunities that opens up.

To avoid tying the discussion to any particular technology, suppose that your application is a simple set of HTML files: no server-side code and no JS asset compilation. We will deploy to Amazon S3.

This article does not aim to give recipes for any particular technology. On the contrary, the code samples are kept as primitive as possible so that you don't get bogged down in them. The point is for you to see the features and principles of GitLab CI in action and then apply them to your own stack.

Let's start from the beginning: your fictional application has no CI support yet.

Start

Deployment: in this example, a deployment should result in a set of HTML files appearing in your S3 bucket, which is already configured to host a static website.

This can be done in many different ways. We will use awscli, the command-line tool from Amazon.

The complete deployment command looks like this:

```shell
aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```

Pushing code to the repository and deploying it are two different processes.

Important: this command expects the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY to be set. You may also need to specify AWS_DEFAULT_REGION.

Let's try to automate this process using GitLab CI.

First automatic deployment

GitLab CI runs the same commands you would run in your local terminal. You can configure GitLab CI to suit your needs just as you configure your local environment. Any command you can run on your computer can be run in GitLab by handing it over to CI. Just add your script to .gitlab-ci.yml and push. That's all: CI starts a job and executes your commands.

Let's add some details to our story: your website is small, with 20 to 30 visitors per day, and the repository has only one branch, master.

Let's start by adding a job with the aws command to .gitlab-ci.yml:

```yaml
deploy:
  script: aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```

The job fails.

We need to make sure the aws executable is available. Installing awscli requires pip, the Python package installer, so let's specify a Docker image with Python preinstalled, which also includes pip:

```yaml
deploy:
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```

When you push to GitLab, CI now deploys the code automatically.

Installing awscli makes the job take longer, but we don't care much about that for now. If you do want to speed things up, you can look for a Docker image with awscli preinstalled, or build one yourself.
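One possible ready-made image is the official amazon/aws-cli image. That choice is our assumption and is not part of the original setup. Its entrypoint is the aws binary itself, so GitLab CI needs the entrypoint cleared before it can run the script lines. A sketch of the same job under that assumption:

```yaml
deploy:
  # Assumption: amazon/aws-cli ships the AWS CLI preinstalled, so no pip step is needed.
  image:
    name: amazon/aws-cli
    # The image's default entrypoint is "aws"; clear it so GitLab can run the script in a shell.
    entrypoint: [""]
  script:
    - aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```

This image ships version 2 of the AWS CLI. The s3 cp options used here (--recursive, --exclude, --include) work the same way there.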

Also, don't forget the environment variables, which you get from the AWS Console:

```yaml
variables:
  AWS_ACCESS_KEY_ID: "AKIAIOSFODNN7EXAMPLE"
  AWS_SECRET_ACCESS_KEY: "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"

deploy:
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```

It should work, but keeping secret keys in plain sight (even in a private repository) is a bad idea. Let's see what we can do about it.

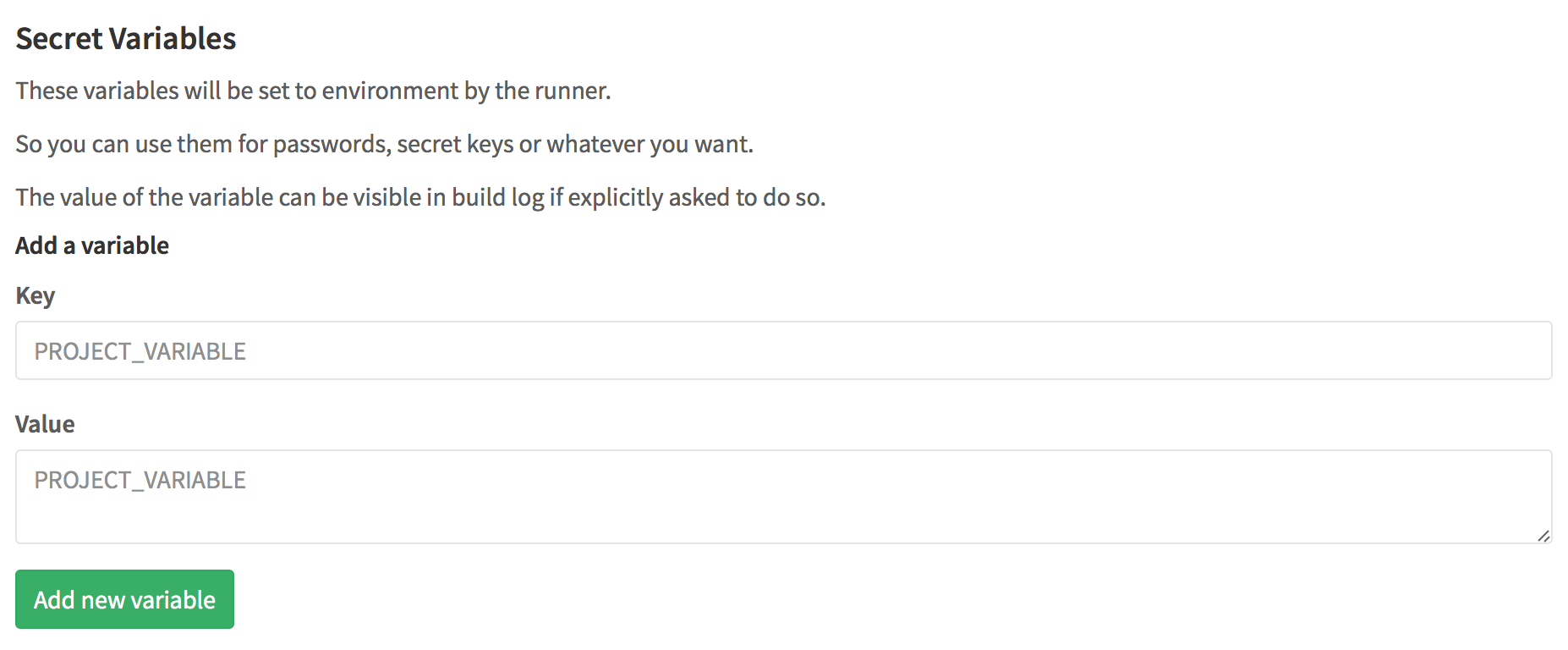

Keeping secrets

GitLab has a special section for secret variables: Settings > Variables.

Everything you put in this section becomes an environment variable. Only the project administrator has access to this section.

We could now remove the variables section from the CI configuration, but let's put it to another use instead.
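Once the credentials live in Settings > Variables, the job itself no longer mentions them. Here is a minimal sketch, assuming AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY have been added in that section. The test line is a hypothetical safety check of our own, not something GitLab requires. It makes the job fail early, without printing the values, if either variable is missing.

```yaml
# No credentials in the file: GitLab injects AWS_ACCESS_KEY_ID and
# AWS_SECRET_ACCESS_KEY from Settings > Variables as environment variables.
deploy:
  image: python:latest
  script:
    # Hypothetical check: exits non-zero (failing the job) if a variable is empty or unset.
    - test -n "$AWS_ACCESS_KEY_ID" && test -n "$AWS_SECRET_ACCESS_KEY"
    - pip install awscli
    - aws s3 cp ./ s3://yourbucket/ --recursive --exclude "*" --include "*.html"
```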

Creating and using public variables

As the configuration file grows, it becomes more convenient to keep some parameters as variables at the top of the file. This is especially useful for parameters that are used in several places. Our file is not that big yet, but for demonstration purposes we will create a variable for the S3 bucket name:

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy:
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
```

The build succeeds.

In the meantime, your site traffic has increased, and you have hired a developer to help you. Now you have a team. Let's see how teamwork affects the workflow.

Teamwork

Now two people work in the same repository, and it is no longer convenient to develop directly in master. So you decide to use separate branches for new features and new articles, and to merge them into master when they are ready.

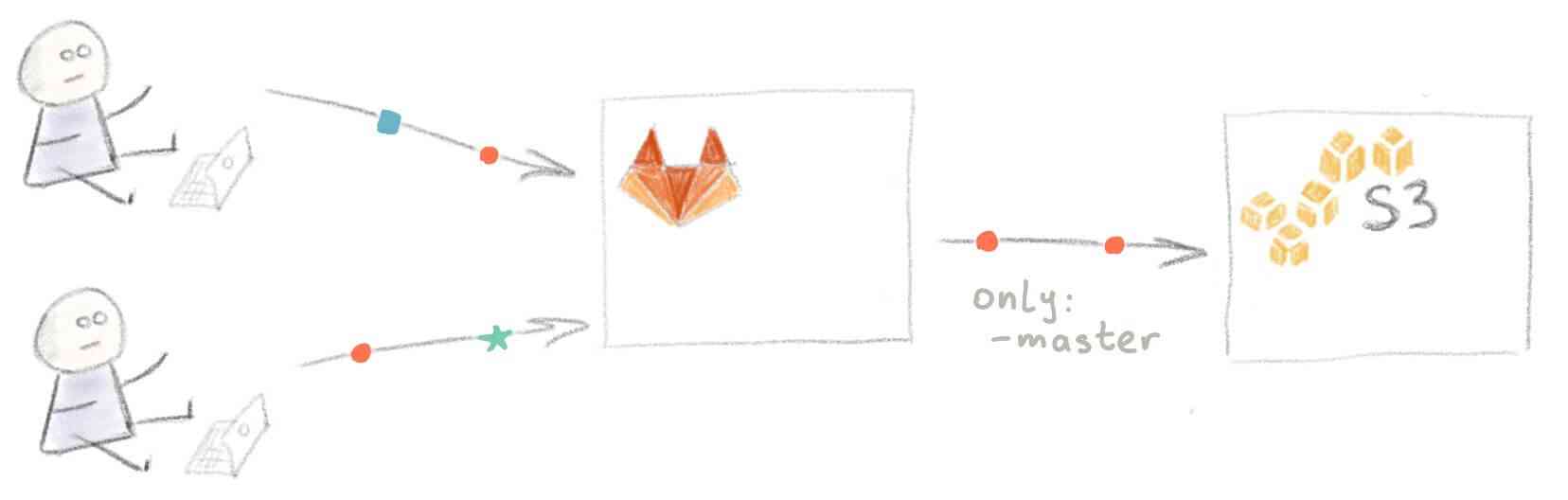

The problem is that the current CI setup ignores branches entirely: whenever you push to any branch on GitLab, that push is deployed to S3.

Fortunately, this is easy to fix: just add only: master to the deploy job.
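Here is a minimal sketch of the deploy job with that restriction, reusing the S3_BUCKET_NAME variable introduced above:

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy:
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
  # Create this job only for pipelines on master; pushes to other branches skip it.
  only:
    - master
```

Newer GitLab versions also offer rules:, which can express the same condition as `- if: '$CI_COMMIT_BRANCH == "master"'`. only: is used here to match the rest of this article.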

We want to avoid deploying every branch on the site.

On the other hand, it would be nice to preview the changes made in feature branches.

Creating a separate space for testing

Patrick (the developer you recently hired) reminds you that GitLab has a feature called GitLab Pages. It is just what you need: a place to preview new changes.

To host a website on GitLab Pages, your CI configuration must meet three simple requirements:

- The job must be named pages.
- It must have an artifacts section that contains a public folder.
- Everything you want to publish on the site must be in that public folder.

The contents of the public folder will be located at http://<username>.gitlab.io/<projectname>/

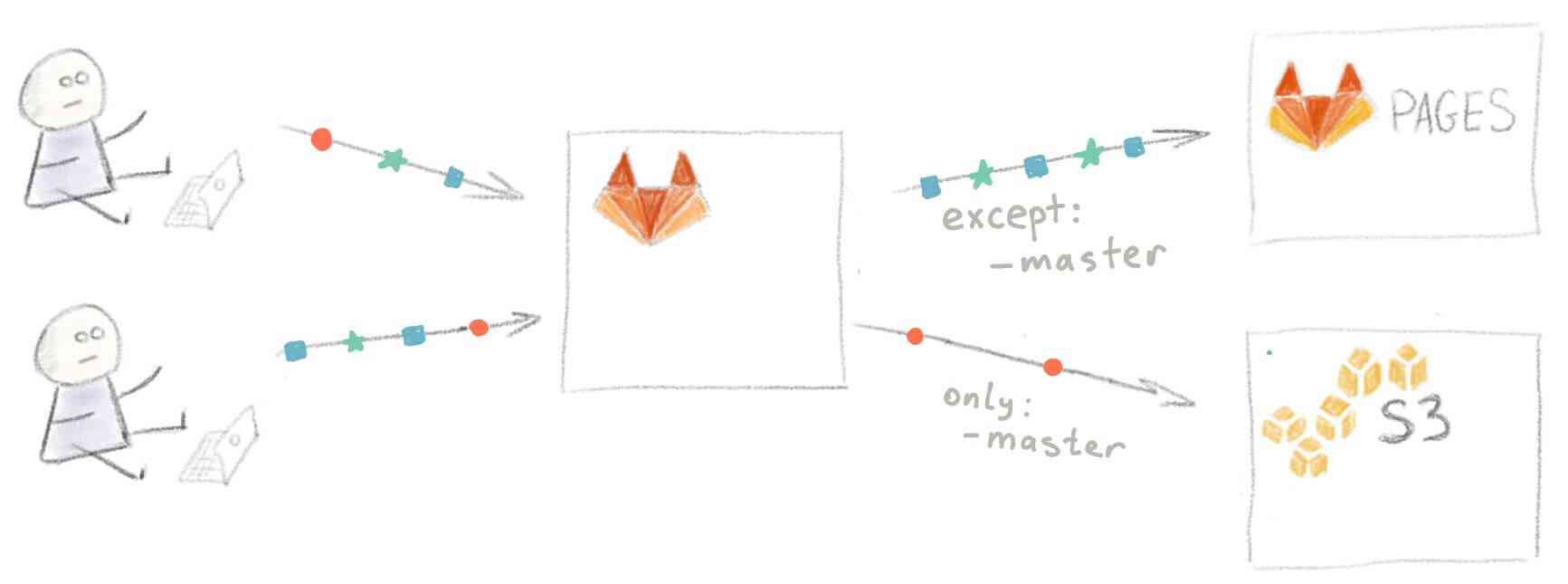

After adding the code from the example for plain HTML sites, the CI configuration file looks like this:

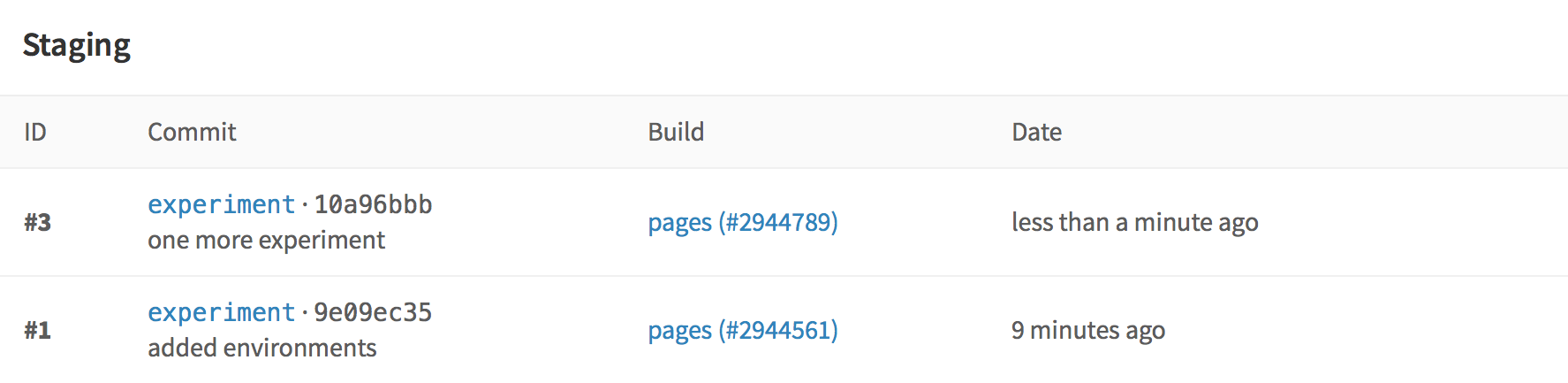

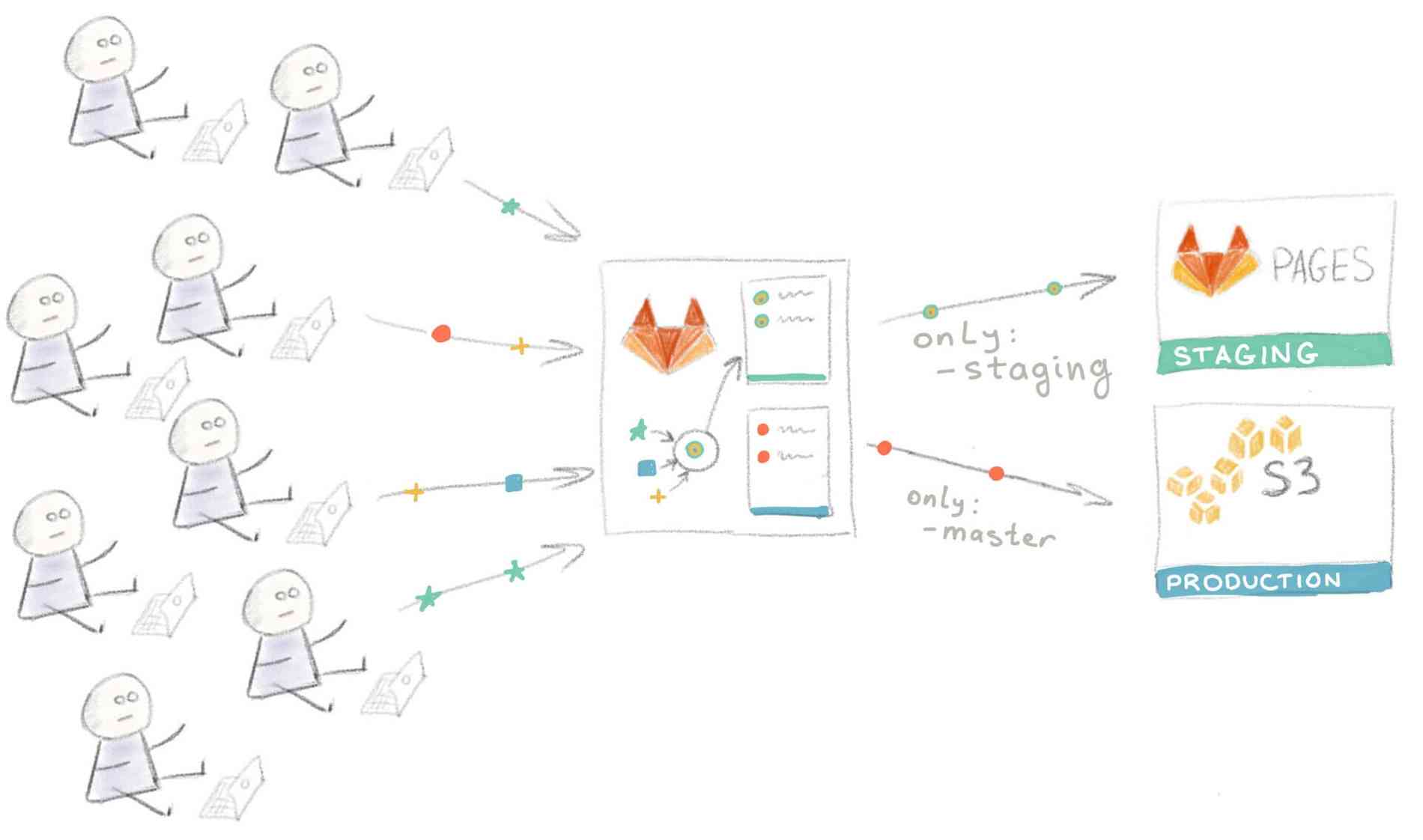

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy:
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
  only:
    - master

pages:
  image: alpine:latest
  script:
    - mkdir -p ./public
    - cp ./*.html ./public/
  artifacts:
    paths:
      - public
  except:
    - master
```

In total, it contains two jobs: one (deploy) deploys the site to S3 for your readers, and the other (pages) deploys it to GitLab Pages. Let's call them the "Production environment" and the "Staging environment", respectively.

All branches except master will be deployed to GitLab Pages.

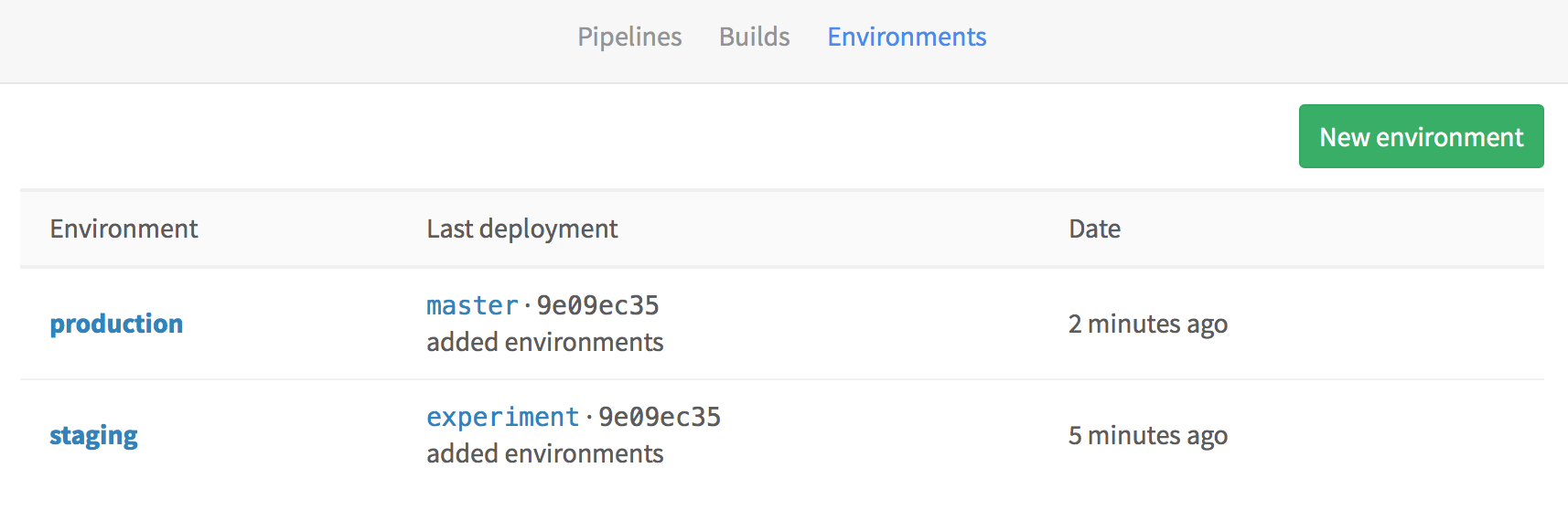

Deployment environments

GitLab supports deployment environments. All you need to do is assign the appropriate environment to each deployment job:

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy to production:
  environment: production
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
  only:
    - master

pages:
  image: alpine:latest
  environment: staging
  script:
    - mkdir -p ./public
    - cp ./*.html ./public/
  artifacts:
    paths:
      - public
  except:
    - master
```

GitLab keeps track of all your deployments, so you can always see what is currently deployed on your servers:

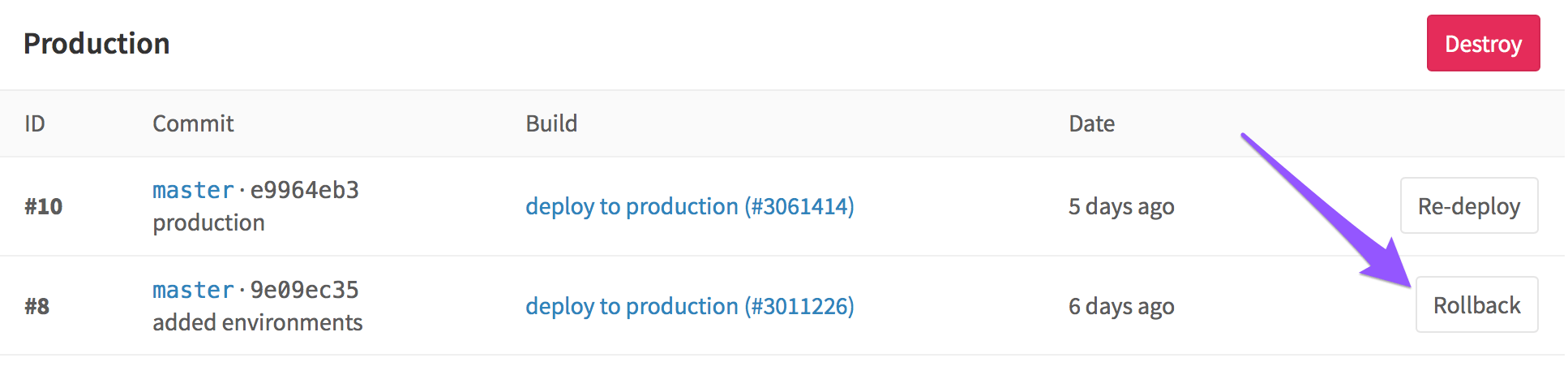

Also, GitLab keeps a complete history of all deployments for each environment.
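If an environment is given a URL, GitLab can also link to the deployed site from the environment pages. The sketch below uses the expanded environment syntax with name and url. The address is an assumption: it presumes the bucket is served as an S3 website endpoint in us-east-1, the same URL pattern this article uses later for the review bucket.

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy to production:
  image: python:latest
  environment:
    name: production
    # Assumption: adjust the region and bucket name to match your S3 website endpoint.
    url: http://yourbucket.s3-website-us-east-1.amazonaws.com/
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
  only:
    - master
```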

So we have optimized and configured everything we could, and we are ready for new challenges, which won't be long in coming.

Teamwork, part 2

It happened again. You had just pushed your feature branch for a preview when, a minute later, Patrick did the same and overwrote the contents of staging. #$@! Third time today!

Idea! Let's use Slack for deployment notifications, so that nobody pushes their changes right after someone else's have just been deployed.

Slack Alerts

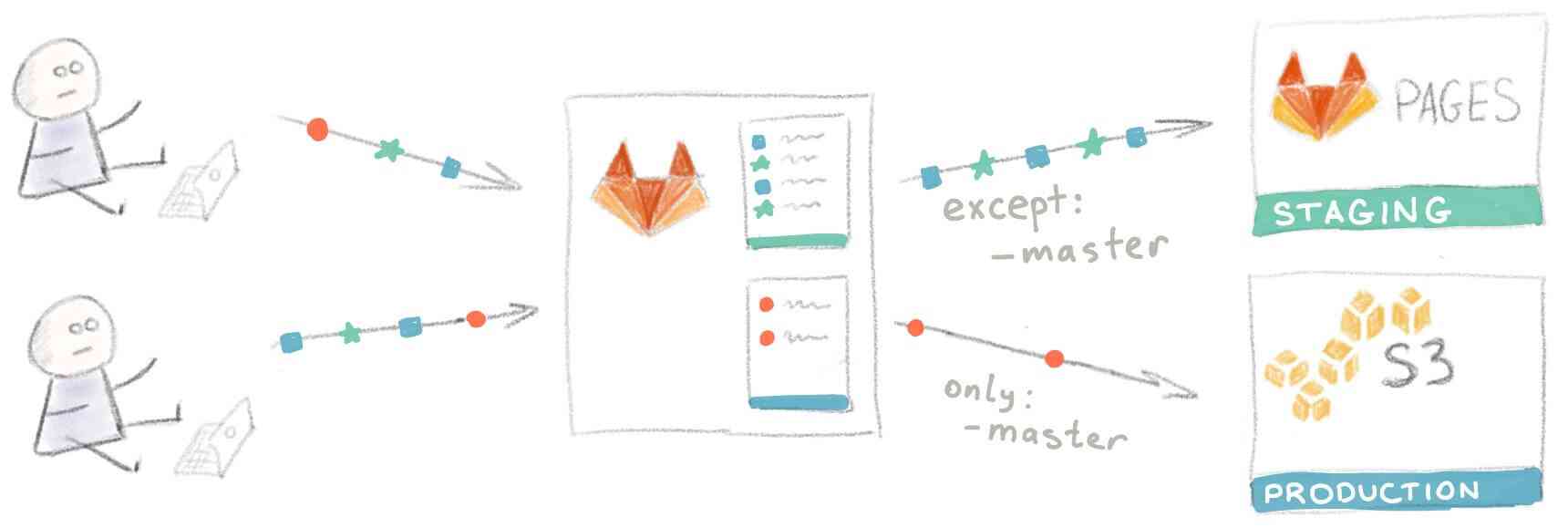

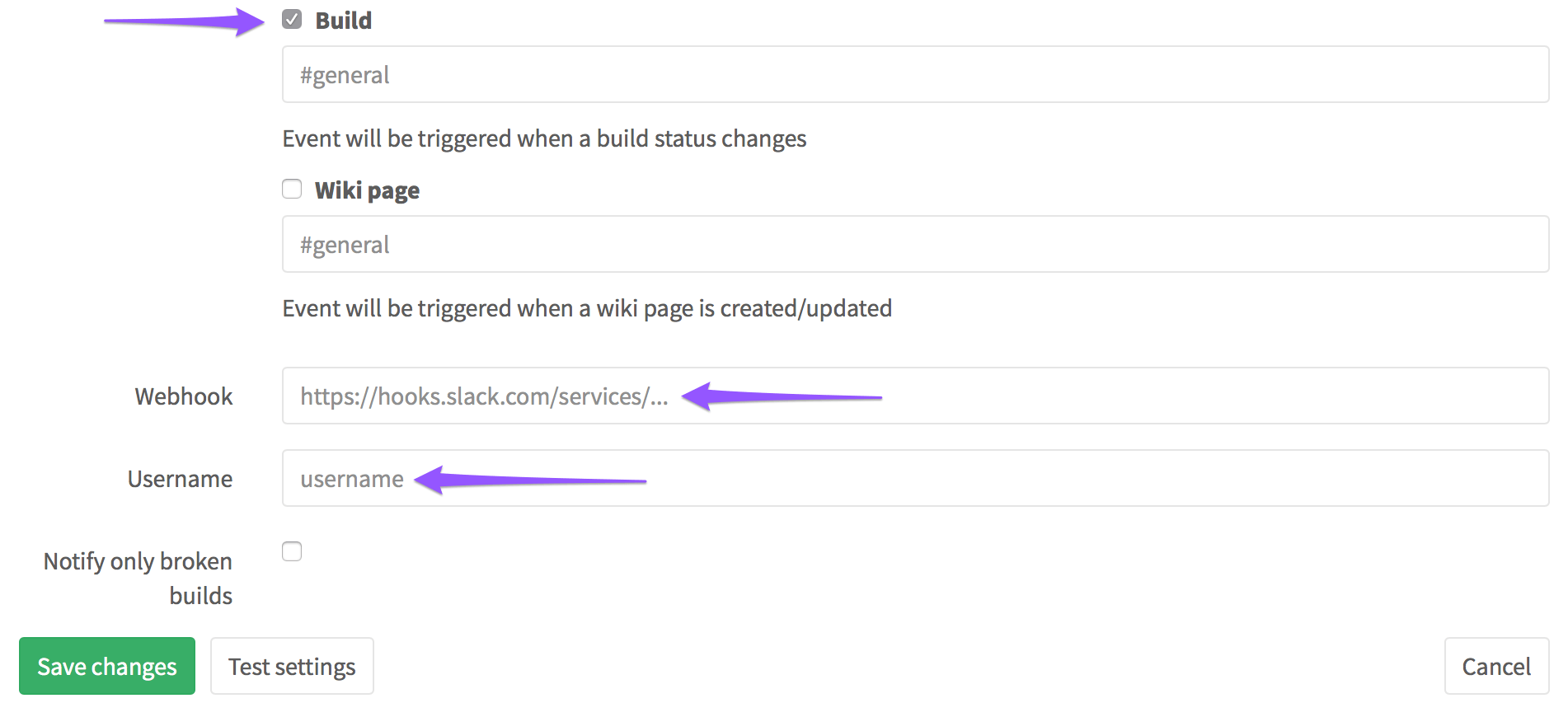

Setting up Slack notifications is a snap. We just need to take the incoming webhook URL from Slack...

...and paste it into Settings > Services > Slack along with your Slack username:

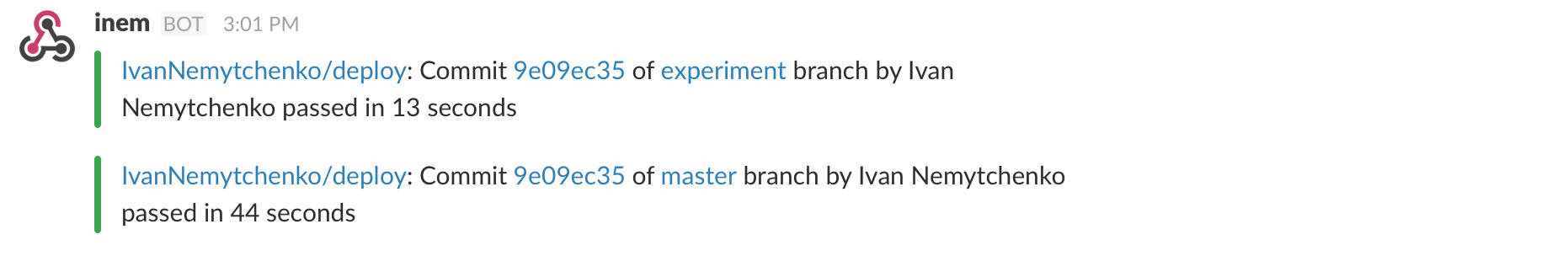

Since you only want to be notified about deployments, you can uncheck every item except "Build" in the settings shown above. That's all: now you will receive a notification about every deployment.

Working in a big team

Over time, your site has become very popular, and your team has grown from two to eight people. Development takes place in parallel, and people increasingly have to wait in line for a preview on Staging. The “Deploy each branch to Staging” approach no longer works.

It's time to rework the workflow. You and your team agree that to roll out changes to the staging server, you must first merge them into the "staging" branch.

To add this functionality, you only need to make a minor change to .gitlab-ci.yml:

```yaml
except:
  - master
```

becomes

```yaml
only:
  - staging
```

Developers have to merge their feature branches into staging before they can preview them.
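Applied to the pages job from the environments setup, the change might look like this. It is a sketch; only the final rule differs from the earlier version.

```yaml
pages:
  image: alpine:latest
  environment: staging
  script:
    - mkdir -p ./public
    - cp ./*.html ./public/
  artifacts:
    paths:
      - public
  # Previously "except: - master"; now only the shared staging branch is published.
  only:
    - staging
```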

Of course, this approach costs extra time and effort on merging, but everyone on the team agrees that it beats waiting in a queue.

Unforeseen circumstances

You can't control everything, and things do go wrong. For example, someone merged branches incorrectly and pushed the result straight to production just as your site was at the top of Hacker News. As a result, thousands of people saw a broken version of the site instead of your slick home page.

Fortunately, someone knew about the Rollback button, so the site was restored to its previous state just a minute after the problem was discovered.

Rollback relaunches an earlier job that was created in the past by a different commit.

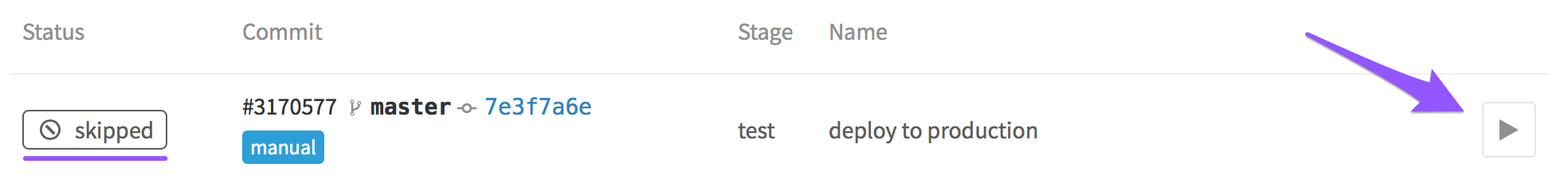

To avoid this in the future, you decide to disable automatic deployment to production and switch to manual deployment. To do this, add when: manual to the job.
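Here is a sketch of the production job with manual deployment enabled, based on the deploy to production job from the environments section:

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy to production:
  environment: production
  image: python:latest
  script:
    - pip install awscli
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
  only:
    - master
  # The job is still created for every master pipeline, but it waits until someone starts it.
  when: manual
```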

To start the deployment manually, go to the Pipelines > Builds tab and click the button that starts the manual job.

And so your company has become a corporation. Hundreds of people are working on the site, and some of the previous work practices are no longer well suited to new circumstances.

Review apps

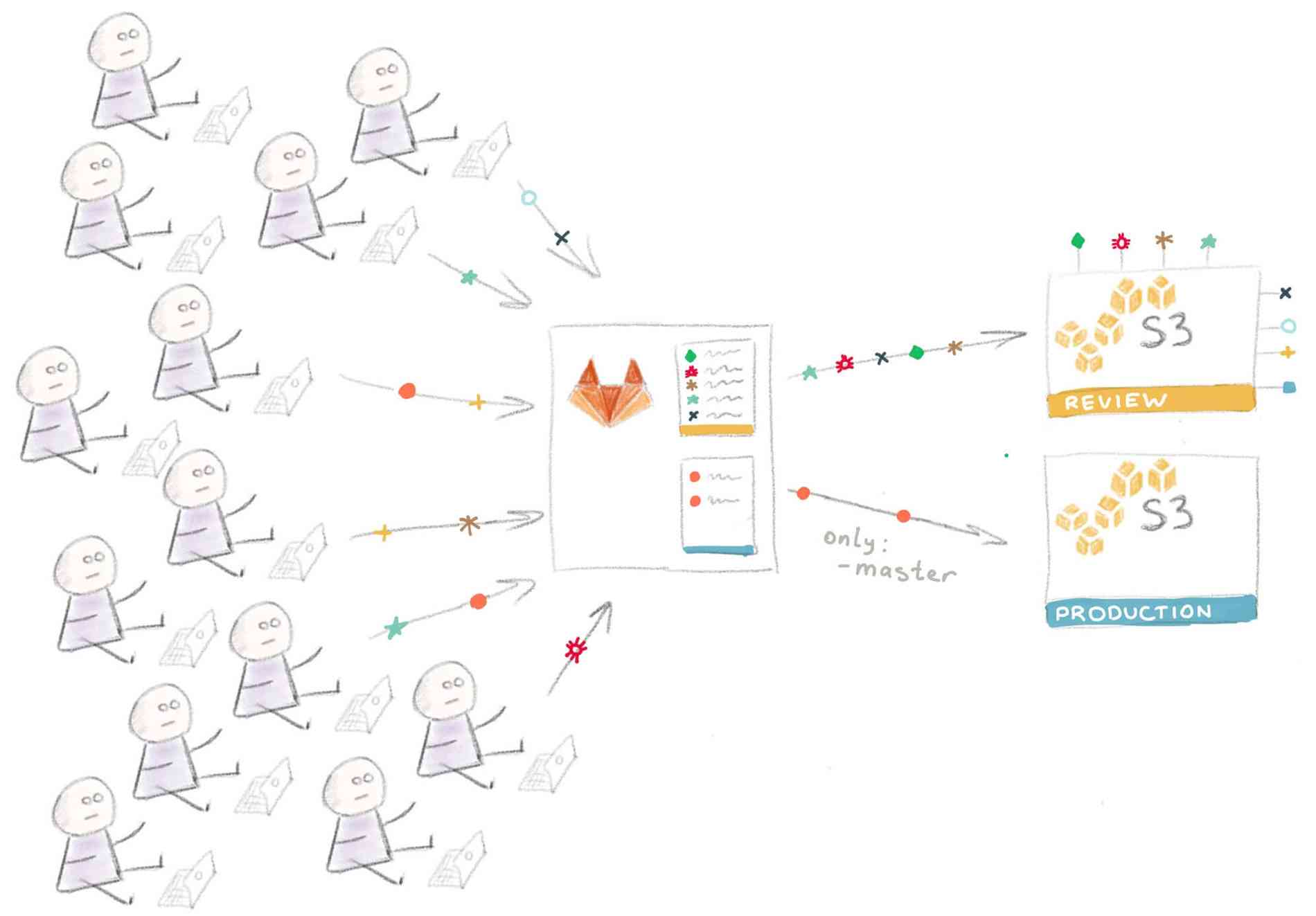

The next logical step is to make it possible to deploy a temporary instance of the application for each feature branch, for review.

In our case, this means setting up another S3 bucket. The only difference is that the site's contents are copied into a "folder" named after the branch, so the URL looks like this:

http://<REVIEW_S3_BUCKET_NAME>.s3-website-us-east-1.amazonaws.com/<branchname>/

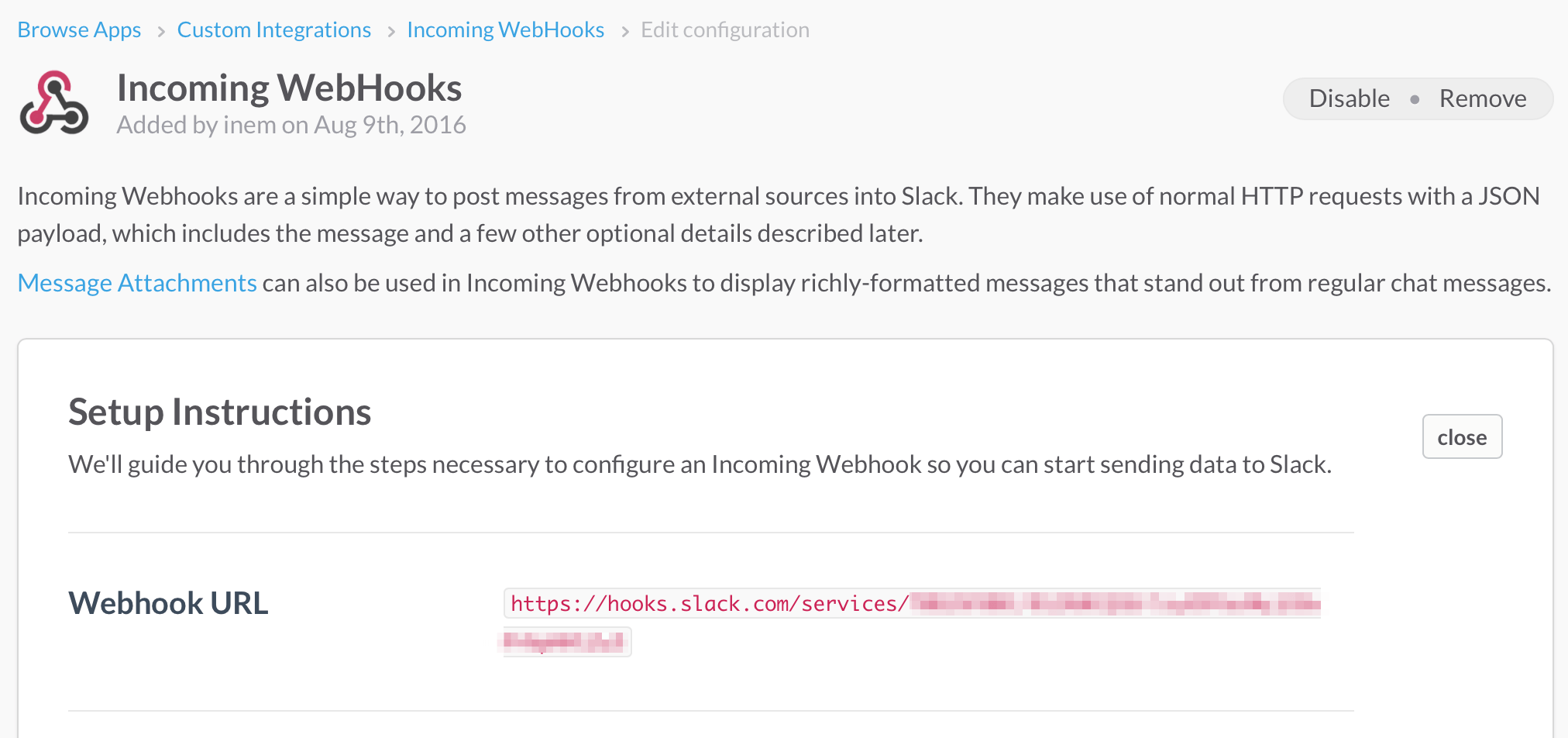

And this is what the job that replaces the pages job looks like:

```yaml
review apps:
  variables:
    S3_BUCKET_NAME: "reviewbucket"
  image: python:latest
  environment: review
  script:
    - pip install awscli
    - mkdir -p ./$CI_BUILD_REF_NAME
    - cp ./*.html ./$CI_BUILD_REF_NAME/
    - aws s3 cp ./ s3://$S3_BUCKET_NAME/ --recursive --exclude "*" --include "*.html"
```

The $CI_BUILD_REF_NAME variable comes from the list of predefined GitLab environment variables, which are available to all of your jobs. In GitLab 9.0 and later, the same value is provided as CI_COMMIT_REF_NAME.

Note that the S3_BUCKET_NAME variable is defined inside the job. This way you can override definitions made at a higher level.
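Below is a tighter variant of the same job, offered as a sketch under a few assumptions of our own. It uses CI_COMMIT_REF_NAME, the newer name of CI_BUILD_REF_NAME. It uploads only the branch folder rather than the whole working directory. It also skips master, which already goes to production; that except rule is our addition, not part of the original setup.

```yaml
review apps:
  variables:
    # Job-level value overrides any global S3_BUCKET_NAME.
    S3_BUCKET_NAME: "reviewbucket"
  image: python:latest
  environment: review
  script:
    - pip install awscli
    - mkdir -p ./$CI_COMMIT_REF_NAME
    - cp ./*.html ./$CI_COMMIT_REF_NAME/
    # Upload only the branch folder, into a bucket prefix with the same name.
    - aws s3 cp ./$CI_COMMIT_REF_NAME/ s3://$S3_BUCKET_NAME/$CI_COMMIT_REF_NAME/ --recursive --exclude "*" --include "*.html"
  except:
    - master
```

Branch names containing slashes become nested prefixes in the bucket. If you prefer URL-safe folder names, GitLab also provides CI_COMMIT_REF_SLUG.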


The technical details of implementing this approach vary greatly depending on the technologies used in your stack and on how your deployment process is arranged, which is beyond the scope of this article.

Real projects are usually much more complicated than our example of a static HTML site. For example, because the instances are temporary, it is much harder to provision them automatically, on the fly, with all the required services and software. It is doable, though, especially if you use Docker, or at least Chef or Ansible.

Deployment with Docker will be covered in another article. Honestly, I feel a little guilty for reducing the deployment process to simply copying HTML files and skipping the more hardcore scenarios entirely. If you're interested, I recommend reading the article "Building an Elixir Release into a Docker image using GitLab CI".

For now, let's discuss one last problem.

Deploy to various platforms

In reality, we are not limited to S3 and GitLab Pages: applications are deployed to all kinds of services.

Moreover, at some point you may decide to move to another platform, and then you will need to rewrite all your deployment scripts. In that situation, the dpl gem makes life much easier.

In the examples in this article, we used awscli as the tool for delivering code to Amazon S3. In fact, whatever tool you use and wherever you deliver the code, the principle is the same: you run a command with certain parameters and somehow pass it a secret key for authentication.

The dpl deployment tool follows this principle. It provides a unified interface to a set of plugins (providers) for deploying your code to different hosting services.

A production deployment job using dpl looks like this:

```yaml
variables:
  S3_BUCKET_NAME: "yourbucket"

deploy to production:
  environment: production
  image: ruby:latest
  script:
    - gem install dpl
    - dpl --provider=s3 --bucket=$S3_BUCKET_NAME
  only:
    - master
```

So if you deploy to different hosting services or change target platforms often, consider using dpl in your deployment scripts: it keeps them consistent.

Summing up

- Deployment is just the regular execution of a command (or set of commands), so it can be run as part of GitLab CI.

- In most cases, these commands need various secret keys. Store them in the Settings > Variables section.

- With GitLab CI, you can choose which branches to deploy.

- GitLab saves deployment history, allowing you to roll back to any previous version.

- You can switch to manual (rather than automatic) deployment for the most critical parts of your project's infrastructure.

Translated from English by the translation collective "Nadmozg and partners", http://nadmosq.ru. Translator: sgnl_05.


Source: https://habr.com/ru/post/310502/
