Do robots dance the tango?

Project Tango is a Google project to create mobile devices that can analyze the space around them in three dimensions. Thanks to the Device Lab project, I was able to experiment with one of these devices.

Article by Sergei Melekhin, written for the “Device Lab from Google” competition.

I thought it would be interesting to build a robot that uses Tango to orient itself in space and avoid colliding with obstacles.

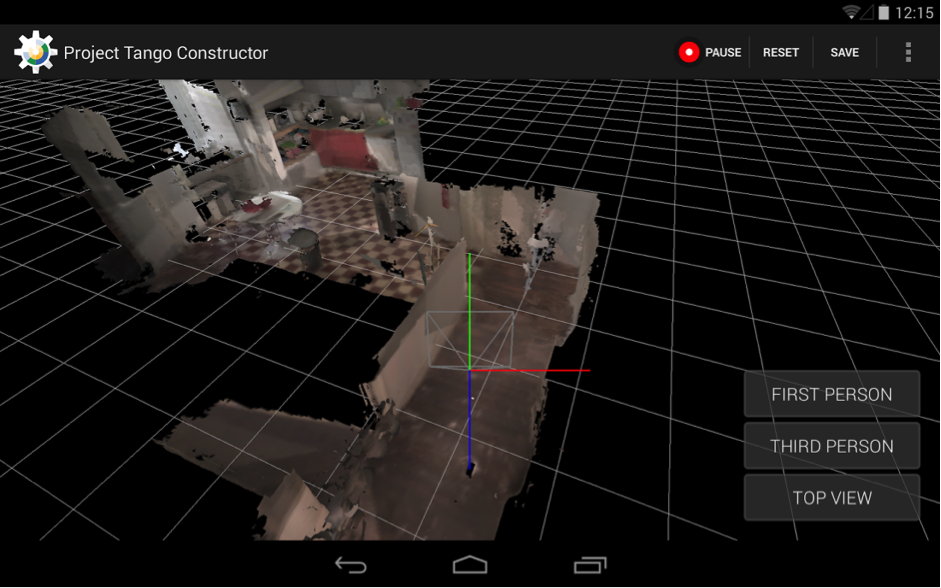

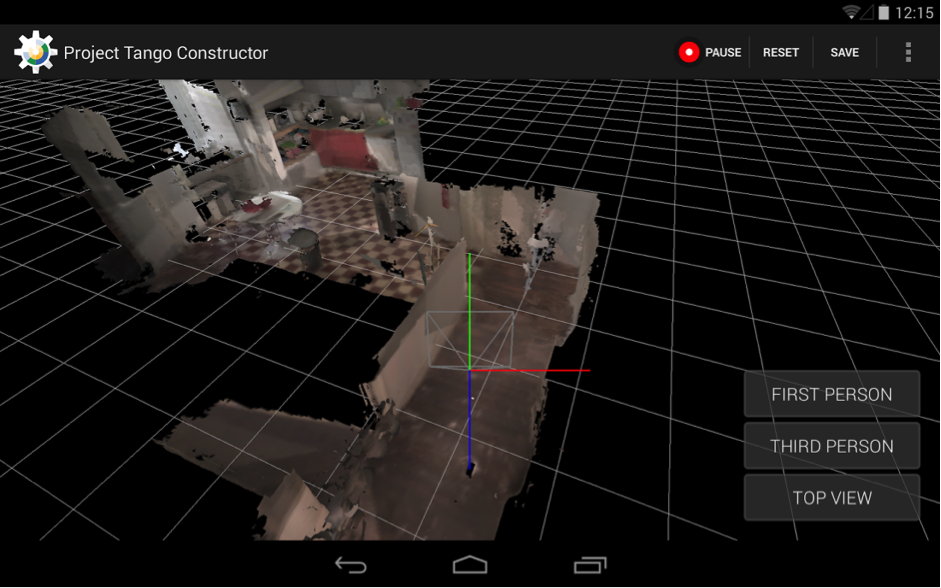

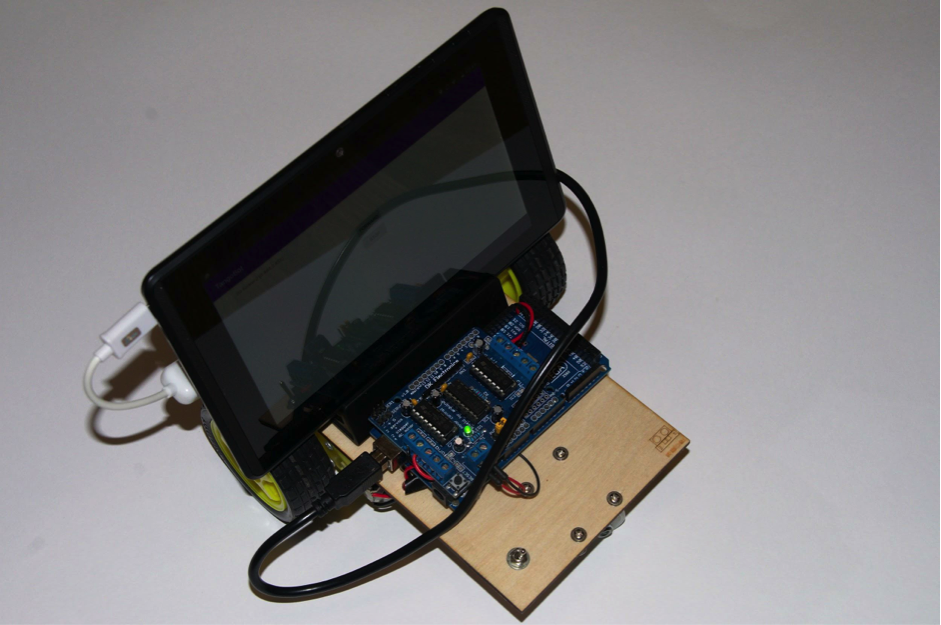

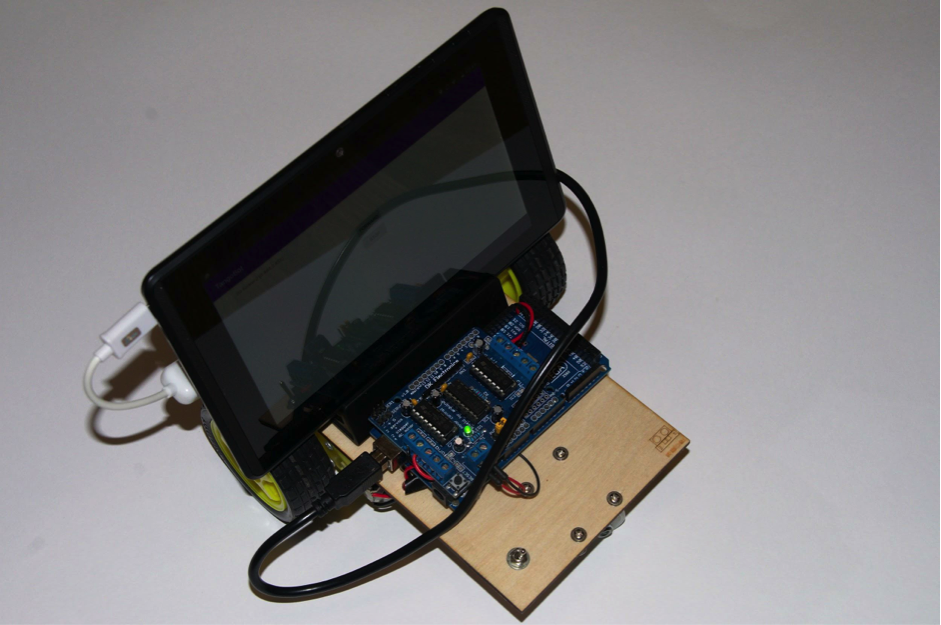

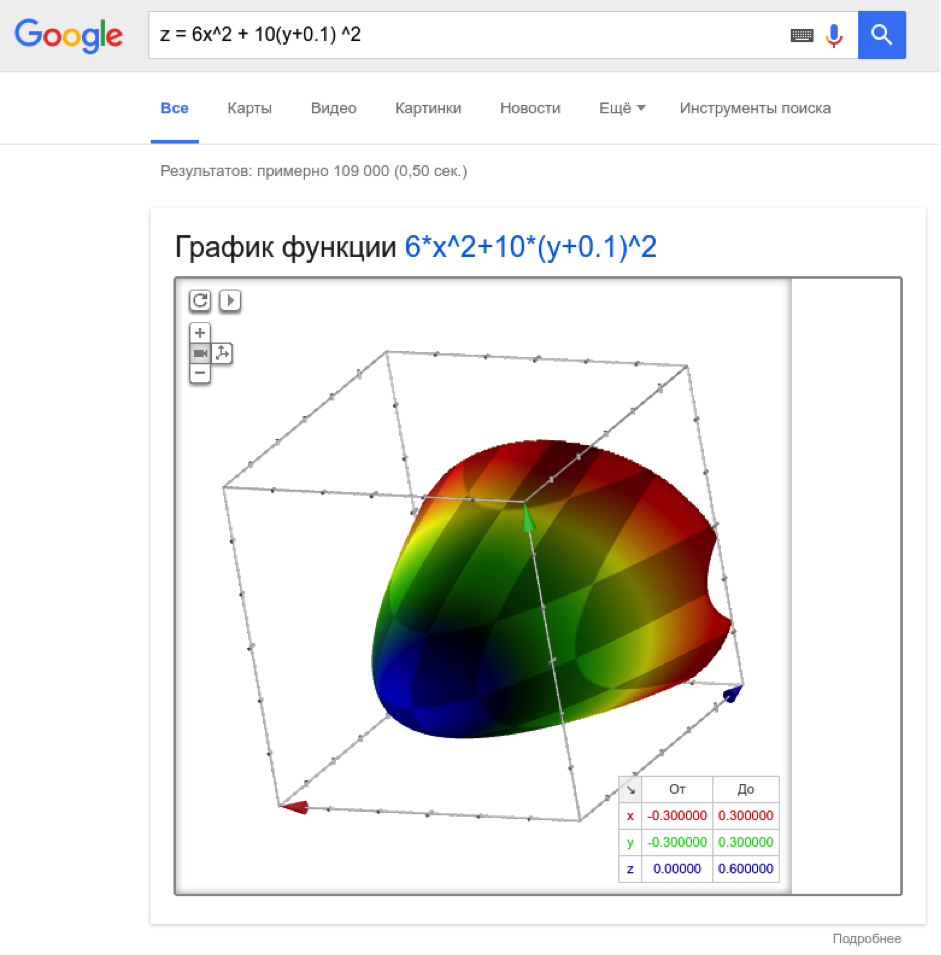

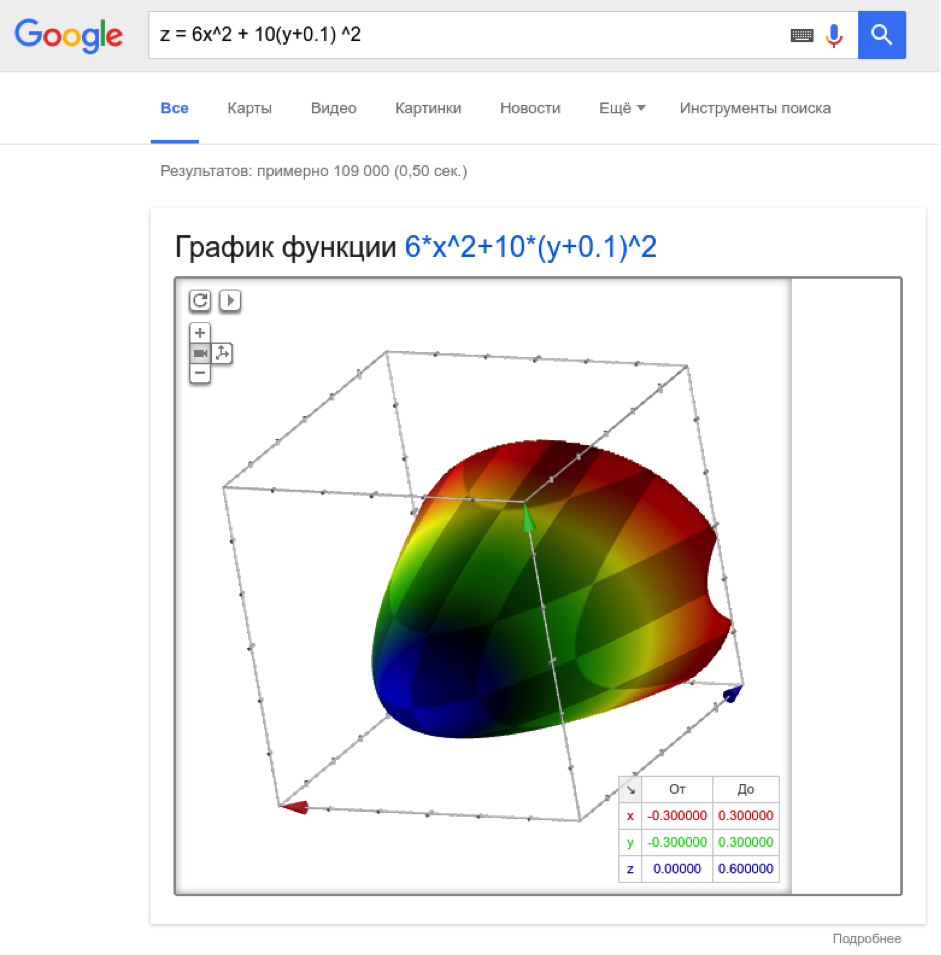

Three basic functions of Tango

Motion tracking

Tracks how the device's position changes in space.

All modern smartphones have an accelerometer and a gyroscope, which let you detect changes in the device's position. However, these sensors are not very accurate, so determining the device's absolute position with them alone is problematic.
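A small sketch shows why inertial sensors alone drift. It is an illustration and not part of the original project. Integrating acceleration twice turns even a constant accelerometer bias b into a position error of b * t^2 / 2, and that error keeps growing. The 0.01 m/s^2 bias used below is a hypothetical value, not a measured Tango figure. The class name `ImuDrift` is also hypothetical.

```java
/**
 * Hypothetical illustration: how a constant accelerometer bias turns into
 * position drift when acceleration is integrated twice (dead reckoning).
 */
final class ImuDrift {

    /** Closed-form position error for a constant bias (m/s^2) after the given time (s). */
    static double closedFormError(double biasMps2, double seconds) {
        return 0.5 * biasMps2 * seconds * seconds;
    }

    /**
     * Numerically integrates a device that is actually standing still but whose
     * accelerometer reports only the bias; returns the spurious displacement.
     */
    static double integratedError(double biasMps2, double seconds, double dt) {
        double velocity = 0.0;
        double position = 0.0;
        int steps = (int) Math.round(seconds / dt);
        for (int i = 0; i < steps; i++) {
            velocity += biasMps2 * dt;   // first integration: acceleration -> velocity
            position += velocity * dt;   // second integration: velocity -> position
        }
        return position;
    }

    public static void main(String[] args) {
        double bias = 0.01; // hypothetical bias of 0.01 m/s^2
        for (double t : new double[] {1, 10, 60}) {
            System.out.printf("t = %4.0f s  closed form %.3f m, numeric %.3f m%n",
                    t, closedFormError(bias, t), integratedError(bias, t, 0.001));
        }
    }
}
```

With the assumed bias, the error is 0.5 m after 10 s and 18 m after one minute. This illustrates why inertial sensors alone are a poor source of absolute position.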

Depth perception

Tango has special sensors: an infrared camera and an infrared laser that projects a two-dimensional grid onto the scene. With them it can capture a point cloud, that is, a three-dimensional image of the space in front of the device.

Area learning

Combine motion tracking with depth perception, then add some black algorithmic magic that stitches point clouds together in real time, panorama-style. The result is a complete model of the surrounding space in the device’s memory, and the device can then accurately determine its own position within that model.
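Merging point clouds requires expressing every frame's points in a single world coordinate frame, using the device pose at the moment of capture. The sketch below shows only that one step. It is a generic illustration, not Tango's internal Area Learning algorithm. It applies a pose, given as a unit quaternion plus a translation, to a single point. The helper `PoseTransform` is hypothetical, and the (x, y, z, w) quaternion order is an assumption.

```java
/** Hypothetical helper: maps a point from the device frame into a world frame using a pose. */
final class PoseTransform {

    /**
     * Rotates point p by unit quaternion q = (qx, qy, qz, qw) (order assumed),
     * then adds translation t. Uses p' = p + qw * u + (qv x u), with u = 2 * (qv x p).
     */
    static double[] toWorld(double[] p, double[] q, double[] t) {
        double qx = q[0], qy = q[1], qz = q[2], qw = q[3];
        // u = 2 * (qv x p), where qv is the vector part of the quaternion
        double ux = 2 * (qy * p[2] - qz * p[1]);
        double uy = 2 * (qz * p[0] - qx * p[2]);
        double uz = 2 * (qx * p[1] - qy * p[0]);
        // rotated point = p + qw * u + (qv x u); then translate into the world frame
        return new double[] {
            p[0] + qw * ux + (qy * uz - qz * uy) + t[0],
            p[1] + qw * uy + (qz * ux - qx * uz) + t[1],
            p[2] + qw * uz + (qx * uy - qy * ux) + t[2],
        };
    }

    public static void main(String[] args) {
        double s = Math.sqrt(0.5);
        double[] quarterTurnAboutZ = {0, 0, s, s}; // 90 degrees about the z axis
        double[] shift = {1, 0, 0};                 // device sits 1 m along world x
        double[] world = toWorld(new double[] {1, 0, 0}, quarterTurnAboutZ, shift);
        System.out.printf("(%.3f, %.3f, %.3f)%n", world[0], world[1], world[2]);
    }
}
```

In the example, a point 1 m ahead along the device's x axis is rotated a quarter turn and then shifted. It lands at (1, 1, 0) in the world frame. Doing this for every point of every frame lets clouds captured from different positions line up.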

Main applications

- Augmented reality (virtual objects truly “stick” to real surfaces instead of hovering above them or sinking into them, as happens without Tango)

- Accurate indoor navigation

- Games

- Room mapping

- Creating 3D models (still fairly rough, but it works)

In fact, the best way to understand what Tango can do without having a device is to look at the existing applications in the Play Market:

Tangobot

On Monday I received the long-awaited device. Of course, its battery was completely drained. I charged it to 100% and turned it on.

At first I tried everything that was already installed on the device, then downloaded every Tango app from the Play Market, one after another. Tango Constructor impressed me the most: it lets you scan the surrounding space and save it as a textured 3D model.

But that was enough playing around: I had only 3 days to build a robot and teach it to navigate in space.

First, the documentation. https://developers.google.com/tango/ has everything you need to start developing for Tango quickly. I started by studying the standard examples here.

For my project I decided not to dig into Area Learning and simply analyze the point cloud in real time. Area Learning would of course have been much more interesting, but I was afraid of missing the deadline.

For the robot's “chassis” I took an Arduino Mega with a Motor Shield and two motors. I programmed the Arduino to listen for and execute commands arriving over a serial port (emulated over USB). The platform was laser-cut from 3 mm plywood, which was fascinating in itself.

The Arduino sketch is available here. The Tango device itself runs Android 4.4, so the control program is an Android application. To communicate with the Arduino, I used the usb-serial-for-android library.

For communicating with the Arduino I chose the simplest possible protocol, with just 5 single-byte commands:

- F - go forward

- B - go back

- L - turn left

- R - turn right

- S - stop
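On the Android side, this protocol can be modeled as a small enum that maps each command to its ASCII byte. This is a minimal sketch, not the author's code. The names `RobotCommand` and `CommandLink` are hypothetical. A plain `java.io.OutputStream` stands in for the USB serial port; with usb-serial-for-android you would write the same single bytes to the opened port instead.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;

/** Hypothetical enum for the five single-byte commands understood by the Arduino sketch. */
enum RobotCommand {
    FORWARD('F'), BACK('B'), LEFT('L'), RIGHT('R'), STOP('S');

    final byte code;

    RobotCommand(char code) {
        this.code = (byte) code;
    }

    /** Decodes a received byte, as the Arduino side would; returns null for unknown bytes. */
    static RobotCommand fromByte(byte b) {
        for (RobotCommand c : values()) {
            if (c.code == b) {
                return c;
            }
        }
        return null;
    }
}

/** Hypothetical wrapper: sends exactly one byte per command over any output stream. */
final class CommandLink {
    private final OutputStream out;

    CommandLink(OutputStream out) {
        this.out = out;
    }

    void send(RobotCommand command) throws IOException {
        out.write(command.code); // one byte on the wire per command
        out.flush();
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream fakePort = new ByteArrayOutputStream(); // stands in for the serial port
        CommandLink link = new CommandLink(fakePort);
        link.send(RobotCommand.FORWARD);
        link.send(RobotCommand.LEFT);
        link.send(RobotCommand.STOP);
        System.out.println(fakePort.toString("US-ASCII")); // prints FLS
    }
}
```

Because every command is a single printable byte, the Arduino side only has to read one byte at a time and switch on it. No framing or parsing is needed.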

In the Tango configuration, I request the point cloud by enabling KEY_BOOLEAN_DEPTH:

```java
import com.google.atap.tangoservice.Tango;
import com.google.atap.tangoservice.TangoConfig;

// Inside the Activity that holds the Tango instance.
private TangoConfig setupTangoConfig(Tango tango) {
    // Start from the default Tango configuration.
    TangoConfig config = tango.getConfig(TangoConfig.CONFIG_TYPE_DEFAULT);
    // Enable the Depth Sensing API so that point clouds are delivered.
    config.putBoolean(TangoConfig.KEY_BOOLEAN_DEPTH, true);
    return config;
}
```

Tango returns the point cloud as a one-dimensional array of floats. The first element (index zero) is the x coordinate of the first point, the second is its y coordinate, and the third is its z coordinate. The fourth element is the x coordinate of the second point, and so on.
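Here is a minimal sketch of walking that flat array. It assumes three floats per point, as described above. The `stride` parameter is kept only in case a different per-point layout is encountered. `PointCloudReader` is a hypothetical helper, not part of the Tango API.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: splits a flat [x0, y0, z0, x1, y1, z1, ...] array into points. */
final class PointCloudReader {

    /** Returns one {x, y, z} array per point; stride is the number of floats per point. */
    static List<float[]> toPoints(float[] flat, int stride) {
        if (stride < 3) {
            throw new IllegalArgumentException("stride must be at least 3");
        }
        List<float[]> points = new ArrayList<>();
        // Point i starts at index i * stride: x, then y, then z.
        for (int base = 0; base + 2 < flat.length; base += stride) {
            points.add(new float[] {flat[base], flat[base + 1], flat[base + 2]});
        }
        return points;
    }

    public static void main(String[] args) {
        float[] flat = {0.1f, 0.2f, 0.9f, -0.3f, 0.0f, 1.5f}; // two points, three floats each
        for (float[] p : toPoints(flat, 3)) {
            System.out.printf("x=%.1f y=%.1f z=%.1f%n", p[0], p[1], p[2]);
        }
    }
}
```

In a real-time loop you would usually index the array directly instead of allocating a `float[]` per point. The list form is used here only to make the layout explicit.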

I am not strong in robotics or geometry, so I pulled the depth-map analysis algorithm out of thin air. Well, that is, I derived it empirically.

I treat as obstacles the points that fall into a parabolic region in front of the device, defined by a quadratic formula:

The coefficients are chosen so that the parabola is stretched in depth and in width (along y) and shifted slightly downward (by 10 cm).

In addition, these points must be closer than 50 cm. If there are at least five such points in two consecutive “frames” (to avoid false positives), the robot turns left. The algorithm is simple, but it works decently in spaces that are not too complex (an office, for example).
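The decision rule can be sketched as follows. This is an illustration, not the author's code. The article's actual quadratic formula and its coefficients are not reproduced here. The region test below is therefore a hypothetical placeholder: `REGION_OFFSET_M`, `REGION_CURVATURE` and the assumption that y points down are all invented. Only the 50 cm range, the five-point threshold and the two-frames-in-a-row rule come from the text.

```java
/**
 * Hypothetical sketch of the obstacle rule: count points that lie inside a region in
 * front of the device and are closer than 50 cm; if at least five such points are
 * seen in two consecutive frames, turn left ('L'), otherwise keep going ('F').
 */
final class ObstacleDetector {
    static final float MAX_DISTANCE_M = 0.5f; // "closer than 50 cm"
    static final int MIN_POINTS = 5;          // "at least five of these points"
    static final int MIN_FRAMES = 2;          // "two frames in a row"

    // HYPOTHETICAL region coefficients: a stand-in for the article's quadratic formula.
    static final float REGION_OFFSET_M = 0.10f;
    static final float REGION_CURVATURE = 4.0f;

    private int consecutiveHits = 0;

    /** Hypothetical parabolic region test; assumes y points down, as in a camera frame. */
    static boolean inRegion(float x, float y) {
        return y <= REGION_OFFSET_M - REGION_CURVATURE * x * x;
    }

    /** Processes one flat xyz point cloud and returns 'L' (turn left) or 'F' (go forward). */
    char onFrame(float[] xyz) {
        int hits = 0;
        for (int i = 0; i + 2 < xyz.length; i += 3) {
            float x = xyz[i], y = xyz[i + 1], z = xyz[i + 2];
            boolean close = x * x + y * y + z * z < MAX_DISTANCE_M * MAX_DISTANCE_M;
            if (close && inRegion(x, y)) {
                hits++;
            }
        }
        // Count consecutive frames with enough hits; any quiet frame resets the streak.
        consecutiveHits = (hits >= MIN_POINTS) ? consecutiveHits + 1 : 0;
        return consecutiveHits >= MIN_FRAMES ? 'L' : 'F';
    }

    public static void main(String[] args) {
        ObstacleDetector detector = new ObstacleDetector();
        float[] wall = new float[5 * 3]; // five points 30 cm straight ahead
        for (int i = 0; i < 5; i++) {
            wall[3 * i + 2] = 0.3f;
        }
        System.out.println(detector.onFrame(wall)); // first frame with hits: F
        System.out.println(detector.onFrame(wall)); // second frame in a row: L
    }
}
```

Requiring the condition in two consecutive frames trades a little reaction time for robustness. A single noisy frame cannot trigger a turn, which matches the false-positive concern in the text.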

To control the robot remotely, I added Firebase support to the project. When the stateString parameter changes on the server, the app sends the corresponding command to the Arduino.
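The step between the remote value and the serial port can be reduced to validation. This is a hypothetical sketch. It assumes `stateString` holds one of the single-letter commands, and it leaves out the Firebase listener itself. The class `RemoteControl` and its methods are invented for illustration.

```java
import java.util.Locale;
import java.util.Optional;

/** Hypothetical bridge from the remote stateString value to an Arduino command byte. */
final class RemoteControl {
    private static final String VALID_COMMANDS = "FBLRS";

    /** Returns the command byte for a value such as "F", or empty if it is not a known command. */
    static Optional<Byte> commandFor(String stateString) {
        if (stateString == null) {
            return Optional.empty();
        }
        String s = stateString.trim().toUpperCase(Locale.ROOT);
        if (s.length() != 1 || VALID_COMMANDS.indexOf(s.charAt(0)) < 0) {
            return Optional.empty();
        }
        return Optional.of((byte) s.charAt(0));
    }

    /** Unknown or missing values fall back to 'S' (stop). */
    static byte commandOrStop(String stateString) {
        return commandFor(stateString).orElse((byte) 'S');
    }
}
```

Falling back to stop is a design choice of this sketch. A malformed or deleted value on the server then halts the robot instead of leaving it running.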

The robot rolls around, dodges walls and furniture, and can be controlled remotely, which makes me very happy.

Here it is in action:

I was pleased with how simple development was. The software took me two days, even though I am not an Android or even a Java developer. Much of that time went into fighting Gradle and puzzling over why I couldn't iterate over an iterator.

So I can say the future has arrived: the barrier to entry for building Tango-based products is very low. The Tango SDK is simple and logical, and ordinary consumer devices with Tango support are appearing on the market.

The project could be made more interesting by using a model built with Area Learning instead of a raw point cloud. You could also try classifying surrounding objects with TensorFlow, since the device's 192 CUDA cores make that feasible.

Thank you for your attention, and may you meet fewer obstacles on your way!

Sources

- Source code of the program

- Source code for the “control panel”

- Sketch for Arduino

Source: https://habr.com/ru/post/310488/
