Removing Service Routing Mess With Docker

“It's not the load that breaks you down, it's the way you carry it.” - Lou Holtz

Co-authored by Emmet O'Grady (founder of NimbleCI and Docker Ninja)

In Franz Kafka's novella “The Metamorphosis”, a man wakes up one morning to discover that he has turned into a giant insect-like creature. As DevOps engineers, we have the same kind of surreal moments in our lives. We find exotic bugs swept “under the rug” (hidden in the most inaccessible places), or we are attacked by worms and other malicious software. If you have been doing this for long enough, sooner or later you will have a horror story or two of your own (share them with us!). At such a moment we cannot sit and wait for the crisis to pass; we must act quickly. Rushing to fix it as soon as possible, we must deploy a new instance and release a new version of our service that eliminates the problem.

How about another surreal moment: your app becomes popular in the media, in a crowded Slack channel or on your internal bulletin board. Users flock to your website at lightning speed, your service is overwhelmed, and the number of users reaches the limit and goes beyond it. In a furious race to fix the 502 errors, you must find servers, start new instances, and reconfigure your network and your load balancers. You are frantically trying to put all the pieces together. You feel as if you have brought a spoon to a knife fight. Dreamily you think: “This should be automated; maybe then I would have more time to relax and read the classics.”

You do not have to live through moments like these any more! Docker 1.12 will help you. This release comes with a set of new features that provide the following:

- Rapid deployment and rollback of service

- Automatic load balancing

- Scaling of services and of the infrastructure they run on

- Automatic recovery of services and failed nodes

And once you learn what Docker offers, it is easy to use.

Routing mesh

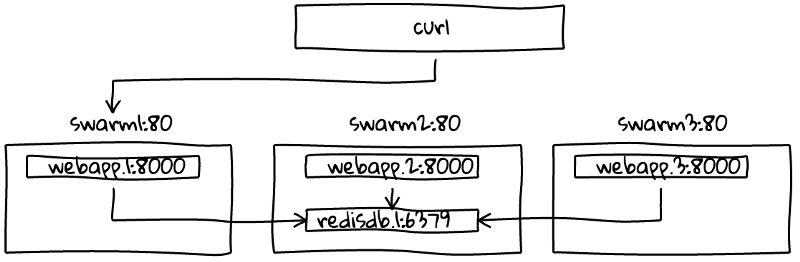

Docker 1.12 comes with a whole set of new concepts. One of them is a new feature called the “routing mesh”. Mesh network topologies and their routing algorithms are not new; the genius of the Docker engineering team was to use this approach to simplify the delivery of software changes and service discovery in a microservice architecture. The “mesh” is simply the new way of routing and load balancing traffic to containers in Docker 1.12. The new routing strategy makes a service reachable on the same port on every node in the Swarm, even if a node has no task of that service deployed on it. The routing mesh also transparently distributes requests across all available replicas of the service in the Swarm, detecting failed nodes.

The new approach makes it very easy to set up load balancing for services: imagine three Swarm nodes with seven different services running in the Swarm. From the outside, we can send a request to any node and it will automatically be routed to one of the containers of the requested service, or we can always send requests to a single node and Docker will balance them internally across the service's containers. Thus we get load balancing of services out of the box.

The routing mesh lets us treat containers in a truly transparent way, in the sense that we do not care how many containers are serving our application; the cluster handles all the networking and performs the load balancing. Where before we had to put a reverse proxy in front of our services to act as a load balancer, now we can relax and let Docker do the load balancing for us. Since Docker does all the dirty work, the difference between having one container and having 100 containers is now trivial; it is just a matter of having enough compute resources to scale and then running a single command to scale up. We no longer need to think through the architecture before scaling, since Docker takes care of it all. When the time comes to scale, we can relax, knowing that Docker will handle it transparently, for one container just as for 100.

You may be wondering why we talk about “services” rather than “containers”. This is something new in Docker 1.12; let's take a look.
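To make the idea concrete, here is a toy model written in JavaScript. It is only a sketch of the behaviour described above, not how Docker Engine implements the mesh: the names `createMesh` and `route` are invented for illustration, and the simple round-robin choice is an assumption of the sketch.

```javascript
// Toy model of the routing mesh: every node accepts traffic on the
// published port and forwards it to some task of the service, wherever
// that task happens to run. All names here are illustrative.
function createMesh(nodes, tasks) {
  let next = 0; // shared round-robin cursor (an assumption of this sketch)
  return {
    // entryNode: the node that received the request from outside
    route(entryNode) {
      if (!nodes.includes(entryNode)) {
        throw new Error('unknown node: ' + entryNode);
      }
      if (tasks.length === 0) {
        throw new Error('no running tasks');
      }
      const task = tasks[next % tasks.length];
      next += 1;
      return { entryNode, servedBy: task.id, onNode: task.node };
    },
  };
}

const mesh = createMesh(
  ['swarm-1', 'swarm-2', 'swarm-3'],
  [
    { id: 'webapp.1', node: 'swarm-1' },
    { id: 'webapp.2', node: 'swarm-3' },
  ]
);

// swarm-2 runs no task, yet a request sent to it is still answered.
const hits = [mesh.route('swarm-2'), mesh.route('swarm-2'), mesh.route('swarm-1')];
console.log(hits.map((h) => h.entryNode + ' -> ' + h.servedBy + '@' + h.onNode));
```

The point of the sketch is that the entry node and the node that serves the request are independent of each other, which is why a client can target any node in the cluster.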

Services and Tasks

Docker 1.12 introduces a new abstraction: “services”. A service defines the desired state of containers in the cluster. Internally, the engine uses this new concept to make sure that containers are running, to handle failures, and to route traffic to the containers.

Let's dive a little deeper and discuss the new concept of tasks. A service consists of tasks, each representing a single container instance that should be running. Swarm schedules these tasks across the nodes. A service mostly defines how many container instances (tasks) must be running at all times, how they should run (the configuration and flags for each container), and how to update them (for example, with rolling updates). All of this together represents the desired state of the service, and the Docker engine constantly monitors the current state of the cluster and makes changes to ensure that it matches the state you want.
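The idea of a desired state that is continuously compared with reality can be sketched as a small reconciliation function. This is a minimal illustration with hypothetical data shapes (`service.replicas`, task objects with `id` and `node`) and a naive "least loaded node" placement rule; it is not Docker's real scheduler.

```javascript
// Toy reconciliation step: compare the desired replica count with the
// tasks that are actually running and return the actions needed.
// Hypothetical data shapes; not Docker's real scheduler.
function reconcile(service, runningTasks, readyNodes) {
  // Tasks on nodes that are no longer ready count as lost.
  const alive = runningTasks.filter((t) => readyNodes.includes(t.node));
  const actions = [];

  // Count tasks per ready node so new tasks go to the least loaded one.
  const load = new Map(readyNodes.map((n) => [n, 0]));
  for (const t of alive) load.set(t.node, load.get(t.node) + 1);

  // Too few tasks: start replacements.
  for (let i = alive.length; i < service.replicas; i++) {
    if (readyNodes.length === 0) break; // nowhere to schedule
    const [node] = [...load.entries()].sort((a, b) => a[1] - b[1])[0];
    load.set(node, load.get(node) + 1);
    actions.push({ op: 'start', node });
  }
  // Too many tasks: stop the surplus.
  for (const t of alive.slice(service.replicas)) {
    actions.push({ op: 'stop', task: t.id });
  }
  return actions;
}

const desired = { name: 'webapp', replicas: 3 };
const running = [
  { id: 'webapp.1', node: 'swarm-1' },
  { id: 'webapp.2', node: 'swarm-2' },
  { id: 'webapp.3', node: 'swarm-3' },
];

// swarm-2 went away: one replacement task is needed on a surviving node.
const plan = reconcile(desired, running, ['swarm-1', 'swarm-3']);
console.log(plan);
```

Running the same function repeatedly against the observed state is what turns a declarative description ("three replicas") into self-healing behaviour: scaling up, scaling down and recovering from a lost node are all the same comparison.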

Here is a small preview of the redis service, which will be discussed later in this article. To run it, we need to execute a command like this:

    $ docker service create --name redisdb --replicas=3 redis:alpine

Routing mesh in action

Enough theory, let's see it all in action. We'll start with a small Node.js service that will help show the inner workings of the routing mesh. You will find the code on GitHub here. Let's take a look at the most important file, webapp.js:

    // A small web app that returns its version, hostname, IP addresses
    // and a visit counter stored in Redis
    var http = require('http');
    var os = require('os');
    var redis = require('redis');

    var server = http.createServer(function (request, response) {
      // Always answer "OK" as plain text
      response.writeHead(200, {'Content-Type': 'text/plain'});

      // Record to log: service name, hostname and version
      var version = '2.0';
      var log = {};
      log.header = 'webapp';
      log.name = os.hostname();
      log.version = version;

      // Collect all external IPv4 addresses of this container
      var interfaces = os.networkInterfaces();
      var addresses = [];
      for (var k in interfaces) {
        for (var k2 in interfaces[k]) {
          var address = interfaces[k][k2];
          if (address.family === 'IPv4' && !address.internal) {
            addresses.push(address.address);
          }
        }
      }
      log.nics = addresses;

      // Connect to the Redis service by its service name (redisdb)
      var client = redis.createClient('6379', 'redisdb');
      client.on('connect', function () {
        console.log('Connected to Redis server');
        client.incr('counter', function (err, reply) {
          if (err) {
            // The original called an undefined next(); report the error instead
            console.error(err);
            response.end('Redis error\n');
            return;
          }
          console.log('This page has been viewed ' + reply + ' times!');
          console.log(JSON.stringify(log));
          response.end(" Hello, I'm version " + log.version + ".My hostname is " + log.name + ", this page has been viewed " + reply + "\n and my ip addresses are " + log.nics + "\n");
          client.quit(); // release the connection opened for this request
        }); // client.incr
      }); // client.on
    }); // http.createServer

    // The HTTP server listens on port 8000
    server.listen(8000);

The service simply returns the hostname, the IP addresses of the container it runs in, and a counter with the number of visits.

That is our whole application. Before diving in, we must make sure that we have switched to Docker 1.12:

    $ docker version
    Client:
     Version:      1.12.0
     API version:  1.24
     Go version:   go1.6.3
     Git commit:   8eab29e
     Built:        Thu Jul 28 21:04:48 2016
     OS/Arch:      darwin/amd64
     Experimental: true
    Server:
     Version:      1.12.0
     API version:  1.24
     Go version:   go1.6.3
     Git commit:   8eab29e
     Built:        Thu Jul 28 21:04:48 2016
     OS/Arch:      linux/amd64
     Experimental: true

We will start by creating a cluster of 3 nodes using Docker Machine:

    $ docker-machine create --driver virtualbox swarm-1
    Running pre-create checks…
    Creating machine…
    …
    Setting Docker configuration on the remote daemon…
    Checking connection to Docker…
    Docker is up and running!
    …
    $ docker-machine create --driver virtualbox swarm-2
    …
    Docker is up and running!
    $ docker-machine create --driver virtualbox swarm-3
    …
    Docker is up and running!
    $ docker-machine ls
    NAME      ACTIVE   DRIVER       STATE     URL                         SWARM   DOCKER    ERRORS
    swarm-1   -        virtualbox   Running   tcp://192.168.99.106:2376           v1.12.0
    swarm-2   -        virtualbox   Running   tcp://192.168.99.107:2376           v1.12.0
    swarm-3   -        virtualbox   Running   tcp://192.168.99.108:2376           v1.12.0

For clarity, we have shortened the output of some commands. Next, we set up our swarm. Docker 1.12 makes this very easy:

    $ eval $(docker-machine env swarm-1)
    $ docker swarm init --advertise-addr $(docker-machine ip swarm-1)
    Swarm initialized: current node (bihyblm2kawbzd3keonc3bz0l) is now a manager.
    To add a worker to this swarm, run the following command:
        docker swarm join \
        --token SWMTKN-1-1n7gtfmvvrlwo91pv4r59vsdf73bwuwodj3saq0162vcsny89l-5zige8u81ug5adk3o4bsx32fi \
        192.168.99.106:2377
    …

Now we copy the “worker” join command from the output of the previous command and run it on the other two nodes:

    $ eval $(docker-machine env swarm-2)
    $ docker swarm join \
      --token SWMTKN-1-1n7gtfmvvrlwo91pv4r59vsdf73bwuwodj3saq0162vcsny89l-5zige8u81ug5adk3o4bsx32fi \
      192.168.99.106:2377
    This node joined a swarm as a worker.
    $ eval $(docker-machine env swarm-3)
    $ docker swarm join \
      --token SWMTKN-1-1n7gtfmvvrlwo91pv4r59vsdf73bwuwodj3saq0162vcsny89l-5zige8u81ug5adk3o4bsx32fi \
      192.168.99.106:2377
    …
    This node joined a swarm as a worker.

Note: when you initialize a new swarm, the command outputs two new commands that you can use to join other nodes to the swarm. Don't worry about losing these commands; you can get them again at any time by running “docker swarm join-token worker” or “docker swarm join-token manager”.

Before continuing, let's make sure that our swarm cluster is functioning. We point our Docker client at the manager node and check its status:

    $ eval $(docker-machine env swarm-1)
    $ docker node ls
    ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
    5jqsuf8hsemi7bfbwzfdxfcg5    swarm-2   Ready   Active
    93f0ghkpsh5ru7newtqm82330    swarm-3   Ready   Active
    b2womami9n2t8tti1acvz6v5j *  swarm-1   Ready   Active        Leader
    $ docker info
    …
    Swarm: active
     NodeID: b2womami9n2t8tti1acvz6v5j
     Is Manager: true
     ClusterID: bimg9n2ti2tnsprkdjqg07tdm
     Managers: 1
     Nodes: 3
     Orchestration:
      Task History Retention Limit: 5
     Raft:
      Snapshot interval: 10000
      Heartbeat tick: 1
      Election tick: 3
     Dispatcher:
      Heartbeat period: 5 seconds
     CA configuration:
      Expiry duration: 3 months
     Node Address: 192.168.99.106

Let's start by creating a new overlay network, which we will use for our examples:

    $ docker network create --driver overlay webnet
    a9j4al25hzhk2m7rdfv5bqt54

We are now ready to launch a new service on the Swarm. We'll start by running the redis database and the “lherrera/webapp” container, which stores page visits in redis and displays other interesting details, such as the hostname and the container IP addresses.

When we deploy our web application, it can connect to the Redis database using the Redis service name, “redisdb”. We do not need to use IP addresses, because Swarm has an internal DNS that automatically assigns a DNS record to each service. Everything is very simple!

We can only deploy services from manager nodes. As long as your Docker client still points to the manager node, we can just type “docker service create” on the command line:

    $ docker service create --name webapp --replicas=3 --network webnet --publish 80:8000 lherrera/webapp:1.0
    avq41soybmtw2jamdus0m3pg
    $ docker service create --name redisdb --network webnet --replicas 1 redis:alpine
    bmotumdr8bcewcfj6zqkws97x
    $ docker service ls
    ID            NAME     REPLICAS  IMAGE                COMMAND
    avq41soybmtw  webapp   3/3       lherrera/webapp:1.0
    bmotumdr8bce  redisdb  1/1       redis:alpine

In the service create command, we need to specify at least an image (in our case, lherrera/webapp). By convention, we also give the service a name, webapp. We can also specify a command to run inside the containers, placed right after the image name. In the previous command, we also specify that we want three replicas of the container running at any given time. Using the “--replicas” flag means that we do not care which node each replica lands on; if we want one task of the service per node, we can use the “--mode=global” flag instead.

You can see that we are using the “webnet” network that we created earlier. To be able to reach our service from outside the Swarm, we need to publish the port on which the Swarm listens for web requests. In our example, we “publish” port 8000 inside the service as port 80 on all nodes. Thanks to the routing mesh, port 80 is reserved on every node of the Swarm and the Docker Engine balances traffic across port 8000 of all container replicas.

Swarm will use this service description as the desired state for your application and will begin to deploy the application on the nodes to achieve and maintain that desired state. We could specify additional service parameters to complete our description, such as restart policies, memory and CPU resource limits, node constraints, etc. For a complete list of all available flags, see the help for the “docker service create” command (see here).

At this stage, we can verify that our web application is correctly connected to the webnet network:

    $ docker network ls
    NETWORK ID    NAME             DRIVER   SCOPE
    df19a5c87d90  bridge           bridge   local
    7c5762c8c6ff  docker_gwbridge  bridge   local
    eb0bd5a4920b  host             host     local
    bqqh4te5f5tf  ingress          overlay  swarm
    3e06a1616b7b  none             null     local
    a9j4al25hzhk  webnet           overlay  swarm
    $ docker service inspect webapp
    …
        "VirtualIPs": [
            {
                "NetworkID": "7i9t9uhtr7x0hybsvnsheh92u",
                "Addr": "10.255.0.6/16"
            },
            {
                "NetworkID": "a9j4al25hzhk2m7rdfv5bqt54",
                "Addr": "10.0.0.4/24"
            }
        ]
    },
    …

Now let's go back to our example and make sure that our service is up and running:

    $ docker service ps webapp
    ID                         NAME      IMAGE                NODE     DESIRED STATE  CURRENT STATE                 ERROR
    bb8n4iagxcjv4rn2oc3dj6cvy  webapp.1  lherrera/webapp:1.0  swarm-1  Running        Preparing about a minute ago
    chndeorlvglj45alr4vhy50vg  webapp.2  lherrera/webapp:1.0  swarm-3  Running        Preparing about a minute ago
    4txay0gj6p18iuiknacdoa91x  webapp.3  lherrera/webapp:1.0  swarm-2  Running        Preparing about a minute ago

One thing to be aware of: although the “docker service create” command returns quickly with an ID, the actual scaling of the service can take some time, especially if the images first have to be pulled onto the nodes. In that case, just run the “docker service ps webapp” command several times until all the replicas of the service are running.

When the services are running (it may take a few minutes, so grab a coffee in the meantime), we can check what our service returns in response to requests.

First, we need to get the IP of the first node:

    $ NODE1=$(docker-machine ip swarm-1)

Then we will target port 80 on that IP address, because we published the service on port 80 in the Swarm. We use curl in the example below, but you can also enter the IP in your browser to get the same result.

Now, we are ready to test our new service:

    $ curl $NODE1
     Hello, I'm version 1.0.My hostname is 5a557d3ed474, this page has been viewed 1
     and my ip addresses are 10.255.0.9,10.255.0.6,172.18.0.3,10.0.0.7,10.0.0.4
    $ curl $NODE1
     Hello, I'm version 1.0.My hostname is 4e128c8af4ae, this page has been viewed 2
     and my ip addresses are 10.255.0.7,10.255.0.6,172.18.0.3,10.0.0.5,10.0.0.4
    $ curl $NODE1
     Hello, I'm version 1.0.My hostname is eaa73f01996c, this page has been viewed 3
     and my ip addresses are 10.255.0.8,10.255.0.6,172.18.0.4,10.0.0.6,10.0.0.4

We can see that the first request was routed to container “5a557d3ed474”. If you hit “refresh” a few times or call “curl” again from the command line, you will see the requests being spread across all three container replicas we created.

To demonstrate another aspect of the routing mesh, try targeting port 80 on the IPs of the other two Docker nodes. You will see the same thing as before, which shows that it does not matter which node you send the request to; the request is always load balanced internally across the three containers.

Rolling Updates
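If you would rather count than eyeball the output, a few lines of JavaScript can tally which container answered each request. This is a small sketch: the function name `tallyHostnames` is made up for illustration, and the regular expression assumes the response format produced by webapp.js.

```javascript
// Count how many responses each container (hostname) produced.
// The regular expression assumes the response format of webapp.js.
function tallyHostnames(responses) {
  const counts = {};
  for (const body of responses) {
    const match = /My hostname is ([0-9a-f]+)/.exec(body);
    if (!match) continue; // ignore anything that is not a webapp reply
    counts[match[1]] = (counts[match[1]] || 0) + 1;
  }
  return counts;
}

// Sample replies in the format of the curl output shown in this article.
const sample = [
  "Hello, I'm version 1.0.My hostname is 5a557d3ed474, this page has been viewed 1",
  "Hello, I'm version 1.0.My hostname is 4e128c8af4ae, this page has been viewed 2",
  "Hello, I'm version 1.0.My hostname is eaa73f01996c, this page has been viewed 3",
  "Hello, I'm version 1.0.My hostname is 5a557d3ed474, this page has been viewed 4",
];
const tally = tallyHostnames(sample);
console.log(tally);
```

Fed with the bodies of real requests against any node, the tally should show every replica receiving a share of the traffic, whichever node was targeted.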

As part of the service description, you can set a strategy for updating your service. For example, you can use the --update-delay flag to configure the delay between updates of a service task (container) or set of tasks. Or you can use the --update-parallelism flag to change the default behavior of updating one container at a time. You can set all these flags when creating a service or later using the “docker service update” command, as we will see in the following example. The update command takes almost the same parameters as the “docker service create” command, but some flags have different names in the update command. Everything you can set in the create command you can change in the update command, so you have complete freedom to change any part of the service description at any time.

Let's update our webapp service to see how the “rolling update” mechanism works. The development team worked hard to improve the webapp and we released a new tag called “2.0”. Now we will update our service with this new image:
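How parallelism and delay interact can be illustrated with a toy schedule. This is only a sketch: `rollingSchedule` is a hypothetical helper, and it ignores the time each task needs to stop and start, which the real updater also has to wait for.

```javascript
// Toy schedule for a rolling update: tasks are replaced in batches of
// `parallelism`, and the updater waits `delaySeconds` between batches.
// It ignores the time a task needs to start (a simplifying assumption).
function rollingSchedule(taskIds, parallelism, delaySeconds) {
  if (parallelism < 1) throw new Error('parallelism must be >= 1');
  const batches = [];
  for (let i = 0; i < taskIds.length; i += parallelism) {
    batches.push({
      startsAt: (i / parallelism) * delaySeconds, // seconds from the start
      tasks: taskIds.slice(i, i + parallelism),
    });
  }
  return batches;
}

// Three tasks, one at a time, five seconds apart.
const schedule = rollingSchedule(['webapp.1', 'webapp.2', 'webapp.3'], 1, 5);
console.log(schedule);
```

With a parallelism of 1, at most one replica is being replaced at any moment, so the remaining replicas keep serving traffic; raising the parallelism shortens the update at the cost of taking more replicas out of rotation at once.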

    $ docker service update --update-parallelism 1 --update-delay 5s --image lherrera/webapp:2.0 webapp

Note that we also specified that we want the update to happen one task at a time, with an interval of 5 seconds between updates. This approach means that we will not have any downtime!

While the update is taking place, watch it in real time by running “docker service ps webapp” every few seconds. You will see the old containers being shut down one by one and replaced by new ones:

    $ docker service ps webapp
    ID                         NAME          IMAGE                NODE     DESIRED STATE  CURRENT STATE            ERROR
    7bdpfbbjok78zt32xd9e2mqlt  webapp.1      lherrera/webapp:2.0  swarm-3  Running        Running 43 seconds ago
    bb8n4iagxcjv4rn2oc3dj6cvy   \_ webapp.1  lherrera/webapp:1.0  swarm-1  Shutdown       Shutdown 44 seconds ago
    af2rlu0laqhrj3uz8brym0f8r  webapp.2      lherrera/webapp:2.0  swarm-2  Running        Running 30 seconds ago
    chndeorlvglj45alr4vhy50vg   \_ webapp.2  lherrera/webapp:1.0  swarm-3  Shutdown       Shutdown 30 seconds ago
    0xjt6gib1hql10iqj6866q4pe  webapp.3      lherrera/webapp:2.0  swarm-1  Running        Running 57 seconds ago
    4txay0gj6p18iuiknacdoa91x   \_ webapp.3  lherrera/webapp:1.0  swarm-2  Shutdown       Shutdown 57 seconds ago

Pretty incredible!

Since our application also prints the version of the image, another way to watch the update in real time is to hit the service endpoint repeatedly during the update. As the service is updated, you will gradually see more and more requests returning “version 2.0”, until the update is complete and all replicas return version 2.0.

    $ curl $NODE1
     Hello, I'm version 1.0.My hostname is 5a557d3ed474, this page has been viewed 4
     and my ip addresses are 10.255.0.9,10.255.0.6,172.18.0.3,10.0.0.7,10.0.0.4
    $ curl $NODE1
     Hello, I'm version 2.0.My hostname is e0899324d9df, this page has been viewed 5
     and my ip addresses are 10.255.0.10,10.255.0.6,172.18.0.4,10.0.0.8,10.0.0.4
    $ curl $NODE1
    ...

Note that the Redis counter keeps counting unaffected (the redisdb service is unchanged), since we did not change the Redis service, only the webapp image.

Solving growing pains

A swarm of honey bees is a familiar sight at the beginning of summer. Honey bees instinctively ensure the survival of the colony by swarming when it becomes overcrowded. We do the same in our container clusters.

Let's say that our nodes have reached their capacity limit and we need to add an additional node to our cluster. It is that simple: you can do it with just 4 commands! For clarity, we are going to use a few more to make sure that what is happening is well understood.

    $ docker-machine create -d virtualbox swarm-4
    …
    Docker is up and running!

Join the node to the swarm as a worker, as before:

    $ eval $(docker-machine env swarm-4)
    $ docker swarm join \
      --token SWMTKN-1-1n7gtfmvvrlwo91pv4r59vsdf73bwuwodj3saq0162vcsny89l-5zige8u81ug5adk3o4bsx32fi \
      192.168.99.106:2377
    This node joined a swarm as a worker.

Verify that both the new node and the webapp service are running:

    $ eval $(docker-machine env swarm-1)
    $ docker node ls
    ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
    93f0ghkpsh5ru7newtqm82330    swarm-3   Ready   Active
    e9rxhj0w1ga3gz89g927qvntd    swarm-4   Ready   Active
    5jqsuf8hsemi7bfbwzfdxfcg5    swarm-2   Ready   Active
    b2womami9n2t8tti1acvz6v5j *  swarm-1   Ready   Active        Leader
    $ docker service ps webapp
    ID                         NAME          IMAGE                NODE     DESIRED STATE  CURRENT STATE           ERROR
    7bdpfbbjok78zt32xd9e2mqlt  webapp.1      lherrera/webapp:2.0  swarm-3  Running        Running 5 minutes ago
    bb8n4iagxcjv4rn2oc3dj6cvy   \_ webapp.1  lherrera/webapp:1.0  swarm-1  Shutdown       Shutdown 5 minutes ago
    af2rlu0laqhrj3uz8brym0f8r  webapp.2      lherrera/webapp:2.0  swarm-2  Running        Running 5 minutes ago
    chndeorlvglj45alr4vhy50vg   \_ webapp.2  lherrera/webapp:1.0  swarm-3  Shutdown       Shutdown 5 minutes ago
    0xjt6gib1hql10iqj6866q4pe  webapp.3      lherrera/webapp:2.0  swarm-1  Running        Running 6 minutes ago
    4txay0gj6p18iuiknacdoa91x   \_ webapp.3  lherrera/webapp:1.0  swarm-2  Shutdown       Shutdown 6 minutes ago

Now let's scale our webapp service to four replicas:

    $ docker service update --replicas=4 webapp

We have to wait a few minutes for the image to be pulled onto the new node:

    $ docker service ps webapp
    ID                         NAME          IMAGE                NODE     DESIRED STATE  CURRENT STATE            ERROR
    7bdpfbbjok78zt32xd9e2mqlt  webapp.1      lherrera/webapp:2.0  swarm-3  Running        Running 10 minutes ago
    bb8n4iagxcjv4rn2oc3dj6cvy   \_ webapp.1  lherrera/webapp:1.0  swarm-1  Shutdown       Shutdown 10 minutes ago
    af2rlu0laqhrj3uz8brym0f8r  webapp.2      lherrera/webapp:2.0  swarm-2  Running        Running 10 minutes ago
    chndeorlvglj45alr4vhy50vg   \_ webapp.2  lherrera/webapp:1.0  swarm-3  Shutdown       Shutdown 10 minutes ago
    0xjt6gib1hql10iqj6866q4pe  webapp.3      lherrera/webapp:2.0  swarm-1  Running        Running 10 minutes ago
    4txay0gj6p18iuiknacdoa91x   \_ webapp.3  lherrera/webapp:1.0  swarm-2  Shutdown       Shutdown 10 minutes ago
    81xbk0j61tqg76wcdi35k1bxv  webapp.4      lherrera/webapp:2.0  swarm-4  Running        Running 20 seconds ago

Simple, right? Bees in a swarm are always in a good mood!

For global services (as opposed to replicated services, as in our example), Swarm automatically launches a new task of the service on each newly available node.

Routing stability

If something goes wrong, the cluster manages the state of these services (for example, the number of running replicas) and, in the event of a failure, restores the service to the desired state. In this scenario, containers and IP addresses come and go. Fortunately, the new built-in service discovery mechanism helps our services adapt to these situations, so that container failures, and now even a complete node failure, go unnoticed.

To see this in action, we will continue with our example and simulate the failure of a node in our small cluster.

Let's make sure our webapp is still running on our cluster:

    $ docker service ps webapp
    ID                         NAME          IMAGE                NODE     DESIRED STATE  CURRENT STATE            ERROR
    7bdpfbbjok78zt32xd9e2mqlt  webapp.1      lherrera/webapp:2.0  swarm-3  Running        Running 10 minutes ago
    bb8n4iagxcjv4rn2oc3dj6cvy   \_ webapp.1  lherrera/webapp:1.0  swarm-1  Shutdown       Shutdown 10 minutes ago
    af2rlu0laqhrj3uz8brym0f8r  webapp.2      lherrera/webapp:2.0  swarm-2  Running        Running 10 minutes ago
    chndeorlvglj45alr4vhy50vg   \_ webapp.2  lherrera/webapp:1.0  swarm-3  Shutdown       Shutdown 10 minutes ago
    0xjt6gib1hql10iqj6866q4pe  webapp.3      lherrera/webapp:2.0  swarm-1  Running        Running 10 minutes ago
    4txay0gj6p18iuiknacdoa91x   \_ webapp.3  lherrera/webapp:1.0  swarm-2  Shutdown       Shutdown 10 minutes ago
    81xbk0j61tqg76wcdi35k1bxv  webapp.4      lherrera/webapp:2.0  swarm-4  Running        Running 20 seconds ago

Nodes:

    $ docker node ls
    ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
    21z5m0vik76msi90icrf8prvf    swarm-3   Ready   Active
    7zyckxuwsruehcfosgymwiucm    swarm-4   Ready   Active
    9aso727d8c4vc59cxu0e8g778    swarm-2   Ready   Active
    bihyblm2kawbzd3keonc3bz0l *  swarm-1   Ready   Active        Leader

This is where the fun begins - who doesn't like to shut down working nodes to see what happens?

    $ docker-machine stop swarm-2

Let's see how our services responded:

    $ docker service ps webapp
    ID                         NAME          IMAGE                NODE     DESIRED STATE  CURRENT STATE               ERROR
    7bdpfbbjok78zt32xd9e2mqlt  webapp.1      lherrera/webapp:2.0  swarm-3  Running        Running 11 minutes ago
    bb8n4iagxcjv4rn2oc3dj6cvy   \_ webapp.1  lherrera/webapp:1.0  swarm-1  Shutdown       Shutdown 11 minutes ago
    af2rlu0laqhrj3uz8brym0f8r  webapp.2      lherrera/webapp:2.0  swarm-4  Running        Running 11 minutes ago
    chndeorlvglj45alr4vhy50vg   \_ webapp.2  lherrera/webapp:1.0  swarm-3  Shutdown       Shutdown 11 minutes ago
    0xjt6gib1hql10iqj6866q4pe  webapp.3      lherrera/webapp:2.0  swarm-1  Running        Running 11 minutes ago
    4txay0gj6p18iuiknacdoa91x   \_ webapp.3  lherrera/webapp:1.0  swarm-4  Shutdown       Shutdown 11 minutes ago
    15pxa5ccp9fqfbhdh76q78aza  webapp.4      lherrera/webapp:2.0  swarm-3  Running        Running 4 seconds ago
    81xbk0j61tqg76wcdi35k1bxv   \_ webapp.4  lherrera/webapp:2.0  swarm-2  Shutdown       Running about a minute ago

Great! Docker deployed a new container on the swarm-3 node after we “killed” the swarm-2 node. As you can see in the listing, we still have four running replicas (two of them on the “swarm-3” node) ...

    $ curl $NODE1
     Hello, I'm version 2.0.My hostname is 0d49c828b675, this page has been viewed 7
     and my ip addresses are 10.0.0.7,10.0.0.4,172.18.0.4,10.255.0.10,10.255.0.6
    $ curl $NODE1
     Hello, I'm version 2.0.My hostname is d60e1881ac86, this page has been viewed 8
     and my ip addresses are 10.0.0.7,10.0.0.4,172.18.0.4,10.255.0.10,10.255.0.6

And the traffic is automatically redirected to the newly created container!

Swarm growth and development

Software changes fast. Failures happen, and they inevitably happen when you least expect them. Docker 1.12 introduced the new “services” abstraction, which simplifies updates and helps prevent downtime and service failures. In addition, this new approach makes scheduling and maintaining long-running services, such as a web server, much easier. You can now deploy a replicated, distributed, load-balanced service to a Swarm of Docker Engines with a few commands. The cluster can manage the state of your services and even bring a service back to the desired state if a node or container has failed.

Solomon Hykes described well how we hope to make the life of developers simpler: “With the development of tooling, networking and security in our orchestration tools, Docker enables developers to build more complex applications that can scale from a desktop computer to the cloud, regardless of the underlying infrastructure.”

With the release of Docker 1.12, we think Docker has truly delivered on that promise. Docker makes things simpler, and we hope this is the point at which the surreal moments we mentioned at the beginning start to disappear. Do you have any other surreal DevOps moments? Share them with us!

More information and links to Docker 1.12

- https://www.youtube.com/watch?v=dooPhkXT9yI is an excellent in-depth look at the specifics of how Swarm nodes work and exchange information among themselves.

- https://www.youtube.com/watch?v=_F6PSP-qhdA is part 2 of the in-depth look, focused on how services are orchestrated and how failures are handled.


Source: https://habr.com/ru/post/310390/
