How the search is arranged

Andrei Aksenov ( shodan , developer of the search engine Sphinx)

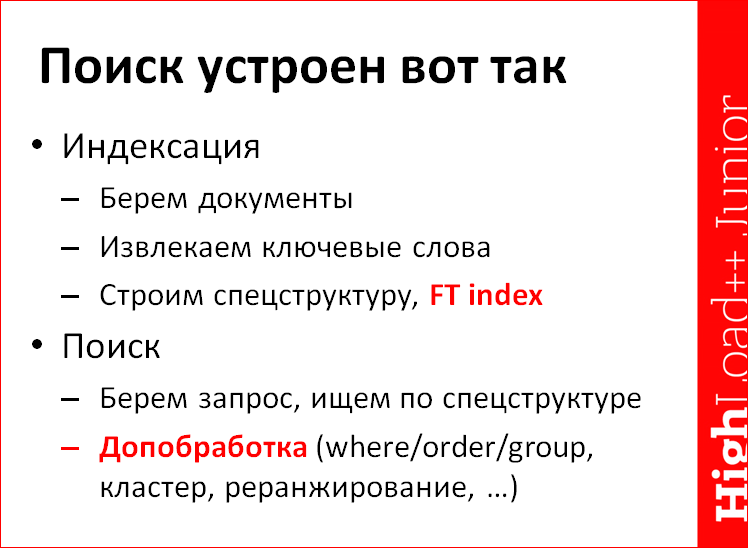

The search is arranged like this:

')

Indexing - by and large, nothing complicated. It is clear that in a small account, there is hidden in each of the three “details” not that a demon, but a whole somewhere herd, somewhere a legion, is not entirely clear. But the concept is always simple. It all starts with a small unpretentious patichka to Mnogocharya, and then you have been doing this garbage for 15 years.

You take documents, you collapse them into keywords. And just take and break up the document on the keywords “mom, soap, frame” - that you are not far from grep, because then all the same to sort out these keywords. We must build some kind of specials. structure - full-text index. Mankind invented quite a lot of options for building it, but, thank God, they were all abandoned in normal production systems, by and large, at the moment exactly one option has won. About him and I will talk. All the others are more likely to have historical significance, perhaps, and they are of no practical interest.

Then, when we have this special. The structure of the magic called "index" was built, it is not only necessary to make a search, unfortunately. Relatively speaking, search by text is not enough for you, in general, never already. Those. I can’t think of such a use case when you only need text data to do a search. Because if you are looking for some logs, then you need to find the key or mask, and probably either sort, or group, or do some additional operations on the data. You just do not text. In order to find, you still need at least some sort by date, or the number of files on the schedule to give out and group the search results by day, etc.

If you are a web search that is illusoryly simple ... Or, okay, a web search, some search on a local collection of legal documents, which, it would seem, was enough to find anything, rank it and - hooray, here it is. No shit like that. Also the text is not enough for this, because in the web search, in general, hell and holocaust. The ranking is based not only on the text of the documents, but also on dozens and hundreds of additional factors. And even in the sensible desktop search for a rather interesting collection, again, besides the text, there will be a whole lot more.

I spent a lot of precious seconds to explain that there is an extra. processing, and if there used to be ideas that it was not needed at all, today there are no such ideas. Everywhere and always you will definitely have some kind of extra. treatment. I, in principle, did not see a single query that would just do full-text matching and just sort it for some kind of relevant relevance. This, of course, is an exaggeration. It is clear that when you do any search on some forum, then you, most likely, the default mode is the same sort by the same relevance, if you don’t really bother. But you have to understand that in this case you will still have three matches for your divine 2000 posts for an average query and, as you do not sort them, you will still get three matches.

Data is everything. To understand how it is arranged, of course, it is necessary to dance from the data with which we work, from the device of that very special. structure of the magic called "full-text index."

The full-text index, in fact, the structure in the first approximation is completely dull, like a stick. Here he is:

The index looks like this. In fact, it looks completely wrong, in fact, everything is much more complicated. But, as soon as you go beyond the limits of the first draft, which was written on the knee, then all meta-information becomes attached to the dictionary. Lists of not only documents are needed, but also lists of positions, some morphological information is stored inside these lists of positions ... And then there is still a lot of joy, and the attribute to the side lies somewhere. But, I repeat, in the first approximation, here it is - the visualization of this structure.

A certain incomprehensible arrow operator (=>) was introduced here, which is not 2/3 of the comparison operator of two elements for sorting, which they push into all esoteric languages. This is a kind of link that, they say, we have a separate dictionary - the left column. If we block the right half, then we will have a dictionary and an arrow - this is also part of the dictionary. We can assume that this pointer is different for each word. He shows respectively on the list of documents. According to this dictionary, we can find all the documents where “abyrvalg” occurs - here, obviously, 123; or all the documents where Petya Petrov is - that’s obvious, these are documents number 8 and 2. For some reason, Vasya Vasechkin is not found anywhere. Vasya was unlucky.

In fact, the dictionary element is not just a word, there is typically still some additional stuffing. Here is an example of minced meat at the end. For example, the actual word, firstly, the shift to the list of documents, secondly, the shift to the list of positions, thirdly ... Then all this could be put in the lists themselves, in separate files where we show, and thus more compact dictionary to do. But then, by the dictionary itself it is not clear, but what is the frequency of this very word? And the frequency of this very word, or the frequency of positions, is needed in order to build a more optimal query plan on the one hand, plus give you statistics on keywords without jerking the main healthy index data on the other. And in addition, some additional stuffed information can be stored. This is how we in Sphinx store a field mask that was matched with this word in order to slightly accelerate the search on individual fields, if that can be done.

Again, things may turn out to be a little bit wrong, this is an example invented, if that. Those. in fact, in both open source systems in the world and accessible to the common man, everything is different. What is in Sphinx, that in Lucene, the dictionary is actually different, with other data, a slightly different format, etc. But conceptually it differs not much. Those. Yes, it’s not like that, just a few other fields, we don’t have a position offset, that's all.

Another interesting point that is probably worth mentioning is that the pointers may not always be stored, which means that the data is worth simply zainlaynit and the dictionary after this all have to shake. Those. not just such structures lie, as it were ...

How does a hipster implement a search? It takes such a garbage, json-document, and to disk, then in jQuery we load ...

But it does not work well enough, not efficiently enough. Therefore, it is necessary, firstly, not a json document with all these data, but, naturally, in a binary format, and secondly, in order to make it more or less compact and work well, this dictionary would still be a good shake. In order to have a quick search, we build a sorted vector. Ideally and in business, of course, it would be necessary for the search to build just a hash, well, naturally, a hash was built for all the words, and then an instant lookup of a word.

But with a hash, two problems:

- an instant lookup will be there, but then it is necessary to keep completely uncompressed elements in the dictionary, and it swells four times from it.

- In addition, if you have a hash, then there is no range search in the hash and, in general, on physical constraints ... Therefore, goodbye, search for substrings. In principle, we immediately hated you, but users constantly demand for some reason. Of course, I would have made a hash, but I don’t understand why the hell should look for substrings.

The main happiness of compression is in the dictionary ... Naturally basic, I will not give you specific numbers now, that "exactly 37% of all compression is due to this, and the remaining 69% is due to another", but the bulk of compression is in the dictionary after you sorted, in human, it means that a normal dictionary is achieved by prefixing the language. Those. people are quite stupid and limited creatures, unlike robots, and therefore the vocabulary of all human languages is at the same time extremely small. In fact, what lexicon was Pushkin? Our everything, by the way, is a monument, almost in every city, and the streets are for sure. A lexicon, God forbid, 30 thousand Leu. Well, how many of these 30 thousand lem can generate word forms? Do not sleep, communicate, a maximum of 200 thousand.

Let's take someone more academic than Pushkin, for example, the classical morphological dictionary of Zaliznyak and all that gradually grew out of it. The order in the Russian language is 100-200 thousand lem more or less running, and, of course, the Russian language is still quite mutable from the point of view of a programmer and inflectional from the point of view of a person who has not forgotten philology, if he knew right away. And this means that from each specific lem, from each concrete root, many different prefixes can be generated - running, running, running, running, running, etc. There are many such inclinations in Russian, therefore there are many word forms. Each word form in the limit is indexed as a separate word. But even them, even look at the whole dictionary, their miserable millions. It really is: 1. very few, and 2. they resemble each other like twins.

After you have sorted the lexicographical dictionary, you get it ... And there are some typos, of course. Humans - they, as it were, are stupid and limited, and this is manifested not only in the fact that the dictionary is rather limited, but also in the fact that they constantly strive somehow to write in a bydlyatsky way. They will come up with some kind of Albany, then they will think it, then they are just stupid and sealed up, as I, for example, in every second word, thank God, that the autocorrectors fix. And it turns out this: abyr, abyrrr, abyrvalg, etc.

There is no human opportunity to store an extra 8 bytes for every freaking byyr, because in Unicode it will be exactly 8 bytes. It is much more interesting to save once the prefix “abyr”, and then for “abyrr” to save, for example, the edit code is small in a few bit, that we are shorter, now we add a +1 character to the end, and save this character, and then we add two more characters at the end, and then we cut the two, and we keep the “valg”. Due to this simple trick the dictionary, I emphasize, human language is reduced very significantly.

Unfortunately, this does not help a damn bots. Bots hate fierce hatred, because it is because of the bots that generate every kind of url, all session_ID, utm_campaign, and all the other stuffing sessions - because of this, when you index, for example, url, you have a dictionary that swells into Hell-given, and especially nothing can be done about it. You have such a session_ID random completely and sparse, and there are no prefixes there. This filth subsides the dictionary.

For a normal language with a dictionary, everything is interesting. Here I complained about life that for all automatically gene-generated data with a dictionary everything is bad. Actually bad, but not bad-bad. A dictionary in some ugly case where you don’t have this text, but there are many, many automatically and randomly generated data, well, if you generate 100 million unique keywords randomly and index them, naturally, you will have most of the indexes in dictionary. You, by and large, the entire index will degenerate into a dictionary. But, fortunately, in those collections that are usually indexed, the data is more than meaningful, therefore, in addition to the dictionary, there are also, in fact, basic data, documents, and positions.

I have been talking about prefixes for a long time and forgot to tell about inlining. Inline is an extremely stupid thing. Why save an eight-bit offset per document if you have only one document and one position? This simple trick several years ago in Sphinx in one hit and one simple upgrade cut off the size of the index, in my opinion, either by 30% or 40%. And then in Lucene this idea was stolen from us, or came up independently, which is actually more likely.

The main part of the index, however, is documents and positions. These are just sorted lists. Always sorted, otherwise nothing. Otherwise, they cannot be effectively crossed at the moment when you search two keywords at the same time. They are probably trying not to sort them, but to sort them by some lightweight rank, etc. only two categories of persons. First of all, these are dudes who really need to defend their dissertation, or they will call the military commissar. And the second category of individuals is the dudes who need to defend their dissertation, because it means moving up the internal career ladder in Yandex and Google. I have not seen other scientific papers on this topic, i.e. there is no evidence that somehow you can deftly and efficiently put documents in an unnatural order, and not in a natural order sorted by ID. It is impossible for some lightweight rank to be put in the index in order to remove the top 1000 for this lightweight rank for one word, and not all 30 million documents and, accordingly, top 1000 for another word - it works quite well, but people have not the first decade of trying, no shit does not come out.

Positions Positions are needed at the moment when you are looking, firstly, for more than one keyword in order to make a ranking, and secondly, when you have a less trivial search operator than just “give me everything and back off”. It is clear that if you are looking for a phrase, then an exact match of the phrase, not to mention searching nearby, etc., you immediately need a position. Even if you then will not rank this data, just to put together a phrase, you will have to move positions. And if you need at least some ranking, the more or less sensible ranking also wants to look at the position, and to do it very slowly.

There is a lot of this data, it is a lot of them. Estimate yourself: for every occurrence of every word in every document we must somewhere save some internal document number. In fact, for a search engine it does not matter whether an external number or an internal number, but we must save it. Roughly speaking, such data are at least comparable in size with the original text, and then, if it is inaccurately stored and a lot of overheads, it is many times larger than the original text. There is no human ability to work with an index that is three times larger than the size of the original indexed text. This slowly, badly and, in general, memory eats. It is much better to shake everything up smartly so that it does not take up 300% of the size of the source text, but ideally 5%. When you have 60 times less data, naturally, any operation on this data is faster.

Compression. Compression is everything. Suddenly about the details of implementation in specific search engines.

In Lucene today, as I recall, the basic data that is stored, according to the full-text index, look like this. Separately, there is a stream with blocks of compressed ID documents. Separately, in addition to it, roughly speaking, in a separate file or for individual offsets are blocks of frequencies, i.e. not just the fact that we have a document 1, 2, 3 and 17, but also facts that in document No. 1 we had a frequency three times the word was met, in document No. 2 we had 17 times, and so on. Such a block of frequencies in a particular document. Naturally, this block of frequencies determines the length of the number of positions for the third megavector, in which specific positions are stored. There, respectively, how much tf is in a block, so much data will be in posting.

Accordingly, dudes keep it in three different streams. These data are not mixed up. We have them in the current version somewhat mixed up, i.e. docids, tfs, document frequencies, plus some minor additional meta-information, specifically, an offset to the list of postings and, in my opinion, there are some light tricks about the number of postings, the mask, which is not always there, etc. We have such a basic intestine for those who are interested, in the index file with the extension SPD, and separately lies the file in which all the positions lie.

Again, inlining works well. If you have one position, you need to save it, no need to store a pointer to it. In this case, if you have exactly one position, exactly one entry, it is also inline with us into a common megakishku documents.

How it will be arranged in the fresh version, which we have been preparing for a long time, and about which I recently began to tell, I do not know yet. Previously, the layout works pretty cool in the prototype, when we, in general, all the data is mixed, i.e. and docids and postings are one flat intestine. This is bad because when you only need document identifiers, and you, roughly speaking, search for one keyword, you absolutely do not care about the position and entry of this word in the document. You no longer have the opportunity to look at the comparatively small in this case list of document identifiers, throw out and not look at the position numbers at all. But on the other hand, in this way the total size of the index is specifically reduced, and the code is simplified. Accordingly, not yet decided. On the one hand, I would like to separate postings separately, just to optimize such single-word searches or boolean searches, where positions are generally not needed. On the other hand, it greatly inflates the index. Still need to think, benchmark, etc.

I think it will be especially funny and ironic if somewhere in the hall there is some messed-up Cossack from Google who smirks softly and thinks to himself that “everything is not so with us”. This is not the only method to make a format, and certainly not the only correct one. Experiments on how we can keep this data polovchee (lists of documents and lists of positions), a lot of them, they must be stored somehow efficiently, and then effectively read and work. The experiments will probably never cease.

I vaguely remember that once I read some piece of paper from Google. Google, in general, the well-known "open", "open source" company - neither a patch to the outside and not a single document, which is obsolete for less than five years, cannot be handed out. But I read, nevertheless, a document that is unclear how outdated, where briefly and briefly in two words it was mentioned that Google has an even more interesting storage format for all this. Instead of storing any separate lists of documents, tfs and others, they store one giant list of positions. Or was it some kind of experiment that was a google or battle index? An "open" company, nothing can be understood. But I remembered the following idea, in any case an interesting one - a gigantic general list of positions is kept, and a dense one. Type, here we have the first document, it has 1000 words, respectively, it occupies position numbers from the 1st to the 1000th, and here is the second document, it has 12 words, it occupies the positions from the 1001st to the 1012th, and here is the third document, etc. And, as it were, I casually read that the cool format, such as it became good, and the borders of the documents at the same time are determined by external metainformation, i.e. a small such gut lies separately, in which specific boundaries of documents are prescribed, that our first one started from position No. 1 and ended in No. 1000, the second - from No. 1001 and ended in No. 1012 inclusive, etc.

The data is like this - see the slide above. In Lucene this way, in Sphinx - this way, in Sphinx of the next version - it’s not clear how, and the “big uncles” also don’t understand how it changes regularly. Why am I telling all this at all? All the same do not care. But it’s good when you don’t care about the insides of what you are going to work with, especially when you came to a report on how it works inside, but unfortunately, this matter affects two important characteristics quite well - the speed of work of everything and more on volume.

Because even just the data encoding mechanism that you are going to add to the inside of the index changes the amount of this data and, at a minimum, the read speed of processing this data in a time that is slightly less than infinity. And about "a little less than infinity" - this is not a joke. Here is an example of a conditional frequency word. In fact, the word "what" is less frequent. The most frequent, in my opinion, in Russian, is not what you thought, but the preposition "and", but for example it will do. Suppose we have such a list of document identifiers [1,3,4,5,6, etc.]. It grows, and it has rather dense documents. Why do we need to store large numbers, which gradually, in general, will grow to a million, and they need a lot of bits? Let's calculate the deltas between each adjacent tsiferki. I counted, and I got [1,2,1,1,1,4 ... etc]. Perhaps, I was short-cut somewhere, but it doesn’t matter. It is important that the absolute order of tsiferok in the second vector, which is signed "varint", is significantly less. So, a simple move, let's take these little digits and encode them with a variable number of bytes. Seven-bit values - 8 bytes, 14-bit values - 16 bytes, etc. Some have already guessed how to do this, and in four hours they will write the implementation in php and throw it out.

Suddenly, instead of 32 bits, or God forbid, even 64 for each ID, we keep, save, Lord, 8, on average. And, of course, we occasionally encounter some ugly peaks, when here the jump in the main list is immediately from 11 million to 12 million. There will be 12 million minus 11 million, then 24 bits will be required for this value, for this delta, and it, all the same, is encoded in four bytes. At three it is not encoded, because you will have overheads encoding. But, on average, you will have one byte, not four.

Suddenly, the data collapsed four times, and this is the science of 20 years ago. So modern science (that is, just 10 years old) is funny block codes, which, firstly, subtract one more, because you have to have a delta - your numbers start with one, not from scratch. Subtracted one - saved bitik. It turns out such a thing and a sequence of these zeros and ones (occasionally triples), they can be encoded depending on how you approach the projectile, and sometimes 0 (zero) bits. I mean, when you have a long enough block, in which only zeros, i.e. You consistently have a lot of documents - a block, for example, from 128 documents in a row, 1,2,3, is a very frequent word. Or you simply robbed the posts of the same person from the blozhik and, obviously, his rattler is found in all these documents in a row. That also happens. Understandably, the deltas between adjacent ID documents are one by one, and all constants, and this fact can be shaken, relatively speaking, 0 bits per document, plus a small fixed overhead. We are writing one byte, that, say, the next 128 deltas are one. Such happiness in real data is extremely rare in fact. If I correctly remember my experiments with a codec, then such block coding didn’t give special joy to zero bits, but coding a sufficiently large block of documents into one, two or three bits compared to eight again collapses the size of the index several times . The effect, I hope, is clear - it’s one thing if we need to read 100 MB from the disk or memory and shovel it. If we didn’t press the data at all, varint shook 25 MB, the codec is more decent, it means it presses quite well. I believe that it is still important to at least imagine what is going on inside there, and why, in general, all these codecs are needed, how to tune, etc.

Suddenly we recall the nasty fact that, besides the text in the index, there are also those divine figures, i.e. some kind of meta-information attached to the document, an attribute in one form or another. Those. data that we do not index with a full-text indexer, but which, nevertheless, must also be present and consist, because with it additional operations are inevitable. Obvious - filtering, grouping, sorting. Less obvious is the ranking, on the one hand, and just the storage, on the other hand, at the moment when you suddenly make a database curve from the search.

Unfortunately, humanity has come up with a lot of concepts for the moment, and a million different storage methods, and one particular has not yet won. The data, we can say that we have them as if relational, with a rigidly predetermined scheme. You can, on the contrary, say that we want complete dynamism and debauchery, and therefore we have a complete schemaless. After that, you can also save data in different ways.

Non-relational can be crookedly saved, on the one hand, well, traditionally schemaless it is customary to store especially well, that is, at best, in some binary formatics.

In this regard, it does not cease to amaze me the history of PostgreSQL, which made support for json and, in the first iteration, stored this json, roughly speaking, just text. In general, without any additional attempts to somehow speed up work with this very json.

Thank God, even Lucene does not store data in such a terrible way, but as far as I know it (here I can be mistaken because I look at them every day). They have the same very flexible inside, but, accordingly, the braking structure, which I affectionately call Flexi-Brake, at least, such a data structure is used to store additional attributes in the default way. Those.when you save an attribute, it is not the schema that is naturally made there ala relational database with wildly fast access, but the json-document is stored, conditionally speaking. Or, rather, a bson document in some kind of binary format, where access is faster than text parsing. And so that all this is not so hellishly slowed down, everything is arranged with multi-level caches, so that after the first time access is fast, and the first time access is very slow.

In Sphinx, the solution is not to say that it is fundamentally better, but it works in some places, it seems to me, still more cheerful. We, on the contrary, have a hell of a relational approach, a giant table with a fixed scheme in memory, which, of course, is inconvenient when you want to fill in sparse json data there. But we probably don’t want to give it up, because I believe that a person should have a choice - either shoot himself in the head, or shoot himself in the artery on his leg and bleed out. Accordingly, the choice is the relational storage of attributes, if you know in advance that you have a “price” column in each document and it is flat. This is no longer a matter of entering the artery, but on two toes, in principle, pulls, with prices flattened. Nevertheless, if you know in advance that you have it in every document, get it into the scheme right away, it will be cool,efficiently stored, occupy four bytes per document, and access to it will be instant. There is no need to parse json, bson download, nor through a million caches through Lucene to wade. But, of course, the schemes sometimes change on the fly, and sometimes any exceptions occur, so json has not been canceled, we have support and an internal format.

I tried to make myself a good developer and steal someone's good implementation, but it turned out that I was a bad developer, and therefore all other people's implementations are even worse than I can write, I had to write my own.

In addition to storing attributes, it is not enough just to store them, it is also desirable to index them somehow. This, as far as I know, is more or less decently nobody is doing. I emphasize that the key word “more or less decently” is here.

It seems that in some places Lucene makes some kind of completely hellish stuffing with emulation of indices in columns, by and large, elements of a full-text index. This is on the one hand.And there are no native indices.

And Sphinx has a no less monumental solution. We have a tiny block index for individual blocks of record, so that if suddenly at some point a complete enumeration of all records has occurred, not every record should be sorted out, but first the upper level should be skipped and a certain number of blocks should be folded immediately.

However, as far as I know, in search engines there is no creative storage of attribute at the moment. Those.when you have a scheme, you can be creative - to store not just a stupid line-by-line matrix, but all sorts of compression there, poolonochnye and reaped ideas to do at least for individual columns. If you don’t even have a scheme, everything is also interesting - you can take this schemaless document, flatten it into a cloud with key-value tags and then search linearly for that cloud.

You can not do this, but, thank God, no one keeps the text. You can, forgive me, make not just a cloud of key-value tags, but at least put a hash on it for quick access. And you can not flatten it, you can keep an honest hierarchy, you can do key compression - a million different tricks, but they are still not very expensive to search for, at least, open source. We are working on something, but have not yet finalized it, I think it is still a bit early to boast.

Suddenly pro ranking.

With ranking, my current hypothesis is such that there are, by and large, two situations - either it is not there at all, or ideally you need it hard, but you get off easy. Those. if you don’t have a task to rank something at all, then everything is fine, Boolean matching, no heavy processing of documents is needed.

If you have, in principle, the quality of the search at least means something, if you wanted the ranking to be more interesting, but you don’t know how, you don’t know how or, in principle, “on the three results will come off”, then you get off with some light shnyaga of the type of built-in canonical BM25 ranking, invented 40 years ago and everywhere well described. This is a natural simple formula, it only looks scary, in fact, in it two variables, by and large, are integrated by all the matching words. TF (term frequency) is the frequency of the word in the document, and IDF (inverse document frequency) is the inverse frequency of the collection. This is a logarithmic metric, which, roughly speaking, is 0 (zero) for documents that are everywhere. Those.a document that is everywhere - in every document of the entire collection, it does not mean anything in terms of ranking. The fact that we found it is about nothing. And vice versa, the document that is in one document from the collection, here it has the maximum IDF - 1 (one). And the function there is nonlinear, there is a certain logarithm, so that life does not seem like honey. TF is linear, so TF smoothes. Here, instead of F, Q, I, D it is necessary, in fact, to write TF, roughly speaking. But since the fourth year the hands of Photoshop do not reach, in order to tint the formula from Wikipedia, it looks more frightening than it could.

Even in this formula there is a third factor, this factor is no longer a text one - it is the average length of the document. Look, avgdl in the formula, and avg_doc_length on the slide. This lightweight mathematics, which does something with the length of the document, is a rationing for the length of the document. The closer the document is to the average length, the better for this formula. It is well studied, it has been used by everyone for 100 years and gives quite good results, purely statistical, without taking into account the positions.

It does not take into account the position, and some kind of repack, which repeats all the key words a million times and powerfully spam the base according to the exact coincidence of the phrase “I feel you”, the song of the same name Depeche Mode pushes to about 112. This vile fact strained me another 12 -14 years ago, so we have a default runner right in the BM25 that mixes a component based on proximity, the degree of coincidence of the query phrase and the document. He is also quite a lightweight thing in the calculations, but looks at the position at least somehow.

If you need a hard qualitative ranking, then in fact everything is fun, because there are probably a lot of factors to do well. Never two. The case is not limited to BM25, more precisely, the BM25 does not go anywhere, but instead of the native BM25, you need to use all sorts of modifications, compose together many of these same BM25s, look at additional interesting factors, counted in the text, and what is even more interesting, look at the mass of factors that extra-text that are tied to a specific document. Actually, this is the main headache and machine learning from large search engines. The variables that are taken into account in these calculations are hundreds and thousands of them, i.e. 800 factors for each document, which is taken into account in the ranking - it is easy, it also happens. So the ranking is arranged in brief. It is clear thatthat it is possible to arrange a separate master class of the day for three different aspects, first about general sorts, about BM25, to talk about, then about all possible factors and machine learning to get into ...

Next is the next sudden move. We talked about a certain basic format, that ok, there is a word, there is a list of documents, etc., and searches sometimes pretend that they are real-time. How does it work inside? There should have been (but it is not) a picture that everyone knows, like this dude, “there is no spoon,” and he is like this: “not a damn philosopher”. There is no real-time in the full understanding of the term realtime, in fact, either. Those. full understanding - it would be as if the natural index structure was honestly updated in real-time.

There is no full-fledged real-time in nature, because the list of documents that is associated with every word is potentially large and it is compressed, it is inhuman to update it. To him it is possible, at best, to add something to the end — yes, this is possible and easy, and putting a document there in the middle is almost impossible. Therefore, in order for the full-text index to pretend that it is real-time, humanity has been pursuing many strange concepts. As a result, one won - the entire realtime is emulated in a simple way: when new documents arrive or new versions of old documents arrive, we create a new small nano-index with these new documents or new versions. In the event that these are replays, namely new versions, then in the old existing indices (they are nano or mega - it doesn’t matter), we populate some flags,that if you find this document, then you don’t find it, it really is no more. And we build cascades of such indices and constantly. To prevent them from becoming three million, we merge and, accordingly, at the very moment of merger, nano-indices physically wipe the records previously suppressed by flags from this index. Here is the canonical technology.

The word that was thought up in Lucene, and the whole world now knows, it is called a "segment." So we did not begin to invent our own terminology, we have the same segments, conceptually the same thing. Those.there is no realtimeness, in fact. When you pour new data into a realtime type system, it quickly creates an additional tiny index, so-called. a segment, old versions, perhaps suppresses a kill-list, masks or something else, it doesn’t matter, and sometime later, when the two segments merge into ecstasy, it will physically delete this data. All realtime is always arranged like this. At the moment, the new cool method is not invented. For example, compressed data, you go, inside the zip archive put another zip archive ...

More about different physics, about different physical differences, because they regularly ask the question: “Why not Lucene?”. I am particularly pleased when you write an article like “here we are making a bunch of improvements”, and the first comment in the style “nobody needs them, kill yourself against the wall”. No, we will kill, of course, sooner or later, people are all mortal, but the comments that, they say, no one for hell do not need to accelerate the indexing three times and some other nice buns are fun. Sometimes, it's not that every day, but the question is asked. The answer is so ambivalent, twofold. Conceptually, everything inside is the same. This structure with a dictionary, with lists, segments to ensure realtime and other pleasures of life - it is conceptually the same everywhere. Tried all sorts of different approaches, this works best. It is implemented everywhere.

There is, however, a subtle point called “implementation details, different formats, etc.”. Unfortunately, these very “implementation details, different formats, etc.” change everything in places, in places by orders of magnitude. Offhand, I came up with and wrote three differences on the slide. We have a common dictionary of all words for all fields of all documents that Lucene has, respectively, a separate dictionary for each word.

I still want to sometime arrange an interesting benchmark called “let's throw a million json documents there”, and in each field with a unique name. It is not difficult to do, I'm curious how it will behave after that when searching the entire collection at once. Or I don’t correctly read java-code, which, in principle, probably, but not because java is a complicated language, but because java provokes you to create 20 different factories, decorators and other nonsense in order to wrap four lines of code that doing actual work. It interferes. Accordingly, if I made my way through some decorator incorrectly, then maybe everything is not so bad, but it seems like this - if you make a lot of fields, then the poor thing will be bent forever.

We, on the contrary, do not turn around forever, but if you are looking for a low-frequency and high-selective field, i.e. you have a huge collection of documents, one meter each, and to them a tiny title, and you search for this title, there are two words out of a million. It is effective to have a separate index for these two words out of a million, for this separate field, and we have not implemented it. We have the best that is implemented - this is a mask in the dock list, but this is not good enough. Accordingly, we in this case proshmanaem the entire index, rather than a separate specific field, this, in turn, is ineffective.

Different yuzkeysy, different breaks in different places.

We have a memory attribute table, about all the details of the implementation of which you can talk for a long time, and the ability to simply json-documents in the form of attributes in the form of separate attributes. Lucene's relationals do not have this, they have documents on the disk. When we last benchmarked, they had a surprisingly slow cold access to a separate field of a separate document, but this is never visible, because they have a policy of “do you need 64 GB of memory on the server and give 62 of them to the cache”.

The physical level is different, the brightest differences, about which I know, they are like that. Surely there are some more.

In addition to physics, I still have to open and quickly close the topic called “what Sphinx is not Lucene, and how we can equip Russia”, I have such a general impression from the code that conceptually different approaches to the projectile are formed. Where we prefer not to do something than to do it badly, and if we do, then the concept of “let's all be able to quickly and quickly count, and the user himself caches at his level,” then Lucene has the opposite - “Let's spend a lot of memory, count at least somehow, and then inside the server or library at both levels (and within the library itself and within all servers) a million caches at different levels, sometimes even impossible to disable”.

In fact, both approaches are bad. In Sphinx, the hell you turn on some kind of cache that you would like to enable at the server level, and in Lucene it’s not good to make a distinct benchmark. You did it, he repeated them a thousand times on ten requests, well, great - you just measured the return rate from the cache. And, because the hell cuts off caches, you kind of even if you make as many as 20 of ten requests, you still measure the weather on Mars.

And then suddenly the story about Xapian. Builds a concept in the Absolute. A strange esoteric system, which has long been dead, is probably used by only one person in the world in the production that wrote it, called Xapian. I tried it once. I started some kind of test search, she gave me the result for 0.000. “No shit” - thought Russian men. I zhahnul a few more requests, she gave a few more answers out there and also to 0.000. I was completely surprised and had already begun to polish the wall, in order to suicide at this place, and then they engraved a portrait there, but nevertheless I guessed to conduct a second test and include something, whether sorting by attribute or search by phrase and .d Suddenly, the secret became clear - a stupid sheep, when searching for individual keywords, took out 10 documents,in advance for some kind of useless magic formula sorted for each word, Merdjila together these lists, considered the BM25 approximation and quickly gave a completely unnecessary result to the set. There was not even an exact number of matches in it, because the full lists of documents were not moved, the full match result set was not considered, and the approximation was considered. Type: “Here we have a million documents, this word is found in 1%, and this word in 3%. Suppose, and so come down. What are they morons, hahaha. As soon as you turn on something more interesting than this search by keyword, and it is rarely needed, and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.merdjila together these lists, considered the BM25 approximation and very quickly gave a completely unnecessary result to the set. There was not even an exact number of matches in it, because the full lists of documents were not moved, the full match result set was not considered, and the approximation was considered. Type: “Here we have a million documents, this word is found in 1%, and this word in 3%. Suppose, and so come down. What are they morons, hahaha. As soon as you turn on something more interesting than this search by keyword, and it is rarely needed, and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.merdjila together these lists, considered the BM25 approximation and very quickly gave a completely unnecessary result to the set. There was not even an exact number of matches in it, because the full lists of documents were not moved, the full match result set was not considered, and the approximation was considered. Type: “Here we have a million documents, this word is found in 1%, and this word in 3%. Suppose, and so come down. What are they morons, hahaha. As soon as you turn on something more interesting than this search by keyword, and it is rarely needed, and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.because the complete lists of documents were not moved, the full match result set was not considered, and approximation was considered. Type: “Here we have a million documents, this word is found in 1%, and this word in 3%. Suppose, and so come down. What are they morons, hahaha. As soon as you turn on something more interesting than this search by keyword, and it is rarely needed, and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.because the complete lists of documents were not moved, the full match result set was not considered, and approximation was considered. Type: “Here we have a million documents, this word is found in 1%, and this word in 3%. Suppose, and so come down. What are they morons, hahaha. As soon as you turn on something more interesting than this search by keyword, and it is rarely needed, and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.and rarely, when it gives good results, performance immediately drops somewhere 30 times lower than Sphinx. And on this I am a benchmark and finished my acquaintance with the system too.

Do not underestimate the power of just stupid bugs. Those.there are differences in the approach, there are still troubles in the benchmarks, but I would also like to draw attention to the fact that it is always inevitable in individual cases just stupid ass in implementations, in a particular system. Here, inevitable, and nothing here.

I remembered the story about prefixes, because it is cool and revealing. Benchmark once Lucene on the prefix search, no matter what, or direct prefix, or search for a substring, and wondered: "Why so slow?". There then it was customary for decorators to write 15 total, not 25 total, so they managed to get to the problem especially quickly. It turned out that in the prefix search, the implementation is “excellent” in Lucene. She simply went over the entire dictionary linearly, in general, the whole. Thank you for not regekspov engine. Well, a linear dictionary search of 10 million keywords was also a very good idea. I, as it were, was glad, and after that there was an episode when reality demonstrated that we should not rejoice when Sphinx otnegoed too, with the same case, with the search for substrings, but in a somewhat different situation.There is a dictionary index so that when searching for substrings we don’t go through the 10 million vocabulary, in general, everything is linear, we immediately had it, but a situation called “and if some bastard zhahnet a request like“ a * ”” not that they would not have foreseen, but on our tests, it more or less somehow adequately behaved. And then all of a sudden, the server in the production server drops the clients, and not just drops, but its button has to. And not with the button that is ctrl, alt, del, but the button that “pull out of the socket”. An autopsy revealed that the client had a little bit wrong in the settings, we had a little bit wrong in defaults, etc. and the query “Zhuravlev” with an asterisk (*) at the end replaced the letter “e” with a space, because the charset_table. After that, there was a query "in" with an asterisk at the end. And everything would be fine if the expansion limit was sane, not 175 thousand words. I do not rememberwhether it was in the config, it was a configuration error or an error of our defaults, it doesn’t matter, but in any case, if for these 175 thousand words (of which 174500 words were met once in one document), there would be no read buffer size in 4 MB for each word, the server would also be a little easier. But nothing, found out what it falls from, scratched a turnip, fixed it, now, that means everyone is fine. And Lucene corrected, and it dawned on them that brute force is not cool, and it dawned on us that the word that needs to be read three bytes of data does not have to be read through a 4 MB buffer. We are now spending only 8 kb on it. In fact, we don’t read it in general through the buffer, but this is a separate complicated story.if for these 175 thousand words (of which 174500 words were met once in one document) there were no read buffers of 4 MB per word, then the server would also be a little bit easier. But nothing, found out what it falls from, scratched a turnip, fixed it, now, that means everyone is fine. And Lucene corrected, and it dawned on them that brute force is not cool, and it dawned on us that the word that needs to be read three bytes of data does not have to be read through a 4 MB buffer. We are now spending only 8 kb on it. In fact, we don’t read it in general through the buffer, but this is a separate complicated story.if for these 175 thousand words (of which 174500 words were met once in one document) there were no read buffers of 4 MB per word, then the server would also be a little bit easier. But nothing, found out what it falls from, scratched a turnip, fixed it, now, that means everyone is fine. And Lucene corrected, and it dawned on them that brute force is not cool, and it dawned on us that the word that needs to be read three bytes of data does not have to be read through a 4 MB buffer. We are now spending only 8 kb on it. In fact, we don’t read it in general through the buffer, but this is a separate complicated story.scratched turnip, fixed, now, then, everyone is fine. And Lucene corrected, and it dawned on them that brute force is not cool, and it dawned on us that the word that needs to be read three bytes of data does not have to be read through a 4 MB buffer. We are now spending only 8 kb on it. In fact, we don’t read it in general through the buffer, but this is a separate complicated story.scratched turnip, fixed, now, then, everyone is fine. And Lucene corrected, and it dawned on them that brute force is not cool, and it dawned on us that the word that needs to be read three bytes of data does not have to be read through a 4 MB buffer. We are now spending only 8 kb on it. In fact, we don’t read it in general through the buffer, but this is a separate complicated story.

Suddenly about the benchmarks. Everyone knows that it is possible to properly benchmark, wrong benchmark, and in general. Everyone knows that it is necessary to compare approximately the same, watch the time, and then begin to think that sometimes there are caches, they knock something, etc. And ideally, you should look at the average time, even ideally they look at the histogram, count quantiles, medians ... Unfortunately, specifically in the case of search engines, and not with the database selects benchmark, this is the approach: The

same query, Karl, the same! It is in the search, unfortunately, due to the lack of a certain standardization, perhaps, or something else, this very concept of the same request, this same request is not the same shit. Very differently can be considered inside.

Here is a living example of how the full text part is considered.

Shpinx. Default - we want to find all the words, and this is relatively quickly calculated, because you take the rarest word, then additionally reduce all those found by the rarest word, filter out this case about more frequent, about more frequent, etc. It is clear that take the word that occurs in the three documents and then filter it, it will give you two documents at the exit and fire. Merging three documents + 1 million documents + 1 million documents on the second word + another 2 million on the third is relatively slow. But at the same time, we have a relatively heavy ranking. By default, the same ranking, which looks not only at the frequencies of keywords that were found there, but also looks at least a bit in the position, considers not a very difficult factor called proximity, proximity of the request and the document, but still considersand, I stress, positions for this, and this, roughly speaking, at least twice as much work at once.

Lucene uses a conditionally slow implementation, which is like OR'it, i.e. the base boolean matching is theoretically slower, but the ranking is much more lightweight. And suddenly this Xapian story is smiling and waving. We gave a pre-cached, roughly speaking, rezalt set for each keyword, which does not mean in the battle at all damn.

Here are three "identical" requests. And from the user's point of view, yes, they are exactly the same. We simply took the system and, without regaining consciousness, jumped to the text “mother, soap, frame” in each request.

And this is still in some way flowers, because there is a moment with internal caches, as written on the slide. Caches everywhere, just hell. We once spat benchmarked certain things, simply because we could not turn off all the caches. Those.You can interface a specific benchmark in Lucene and simply fill it with requests in the hope that these caches will run out. Insert a virtual box with 1 GB of memory and a 10 GB index, and delete it with queries. In no other way can we measure the performance of real code that was interesting to measure, and not the performance of the cache.

And my favorite example, about Marketing driven default 3, is snippets, such a completely separate sideways point, not always and not all important, but very significant. Many times, not every day, even, probably, not every week, but nevertheless repeatedly, they asked the question: “Why do you have such brake snippets? In Solr, in general, nyashka works like a scalded rabbit on amphetamines, and you have a torment? ”. An autopsy revealed that there are three main points:

- -, , Solr' , , 10 . Those. , , , . .

- – , , , , , 10 .

- – « 64 ». , , 4 ? , , Solr' , 64 «, ». Sphinx , - . - , -, , , .

These were all lamentations on a topic called “how to benchmark and what is the same request”. There is no identical request. How to benchmark is incomprehensible even to a full-text system developer. And the trouble. Therefore, in order to distinctly benchmark, unfortunately, you need to at least roughly imagine how everything is arranged inside, otherwise your benchmark at best will be somewhat incorrect on the one hand, in the worst case it will show in production suddenly characteristics exactly 100 times worse than were on tests from the other side. The type of caches will end, the hit rate of 99% will be 1%, and everything will be wrong.

Results

That search is arranged like this. I tried to tell how it is, in principle, arranged on the physical level - dictionaries, document sheets, suddenly compression, it is suddenly important.

There are exactly two open source systems and a myriad of all commercial systems. In fact, that those that others are conceptually arranged in exactly the same way, there is no breakthrough in commercial either. There are the same dictionaries, lists, lists, dictionaries, etc. Everything is exactly the same, however, the implementation details change. A number of implementation details also tried to highlight, they say, here Lucene does this, we do a little differently and in some places we are going to redo it, etc. And from these details of the implementation and the general approaches to the projectile, called “we want to honestly count, and they want to cache valiantly”, there are such strange problems that are specific today for a search called “fuck you mark me, I have such a cache inside, such a cache that you will always benchmark only that cache. ” Incredibly insulting, but the fact given to us in sensations,such.

Contacts

» Shodan@sphinxsearch.com

» shodan

This report is a transcript of one of the best speeches at the conference of developers of high-loaded HighLoad ++ systems. Now we are actively preparing for the conference in 2016 - this year HighLoad ++ will be held in Skolkovo on November 7 and 8.

Also, some of these materials are used by us in an online training course on the development of high-load systems HighLoad.Guide is a chain of specially selected letters, articles, materials, videos. Already, in our textbook more than 30 unique materials. Get connected!

Source: https://habr.com/ru/post/310208/

All Articles