Using R to deal with the claim “Who is to blame? IT, of course!”

This is a continuation of the previous publications “DataScience Tools as an Alternative to the Classical Integration of IT Systems”, “Ecosystem R as a tool for automating business tasks” and “Gentleman's set of R packages for automating business tasks”. This publication has two goals:

- Take a look at typical tasks that occur in business from a slightly different angle.

- Try to solve them, in part or in full, using the tools provided by R.

Who's the scapegoat?

It is no secret that IT staff, developers included, on the one hand, and business employees, the consumers of IT services, on the other, very often cannot find a common language. This may seem paradoxical, given that the IT environment, being digital, produces plenty of objective indicators. On closer inspection, though, it is quite logical: in 99% of cases the IT service is a “budget consumer” funded by the business. Accordingly, the budget holder feels entitled to demand satisfaction according to his own subjective perception rather than some figures and graphs.

Therefore, the answer to the question “Who is to blame?” is well known: IT, of course! But this is a dead end, because the cause of the problems is neither clarified nor eliminated, which means the same problems will come back again and again.

To make the question constructive, the first step is to reformulate it as “Which IT system is to blame?”.

The next step comes from the IT side, and it is a brilliant idea: let's monitor everything, measure everything, store it for a long time, and then feed it all to experts / neural networks / anyone else, so that they can tell us the cause of a failure. Many vendors jump at this, drawing up sky-high specifications with price tags comparable to the annual budget of an average city.

Naturally, after a year of activity it turns out that there is no money for such toys and there never will be, and everyone is again left with nothing.

Possible solution

Can anything be done about it? If you temper your ambitions, switch on your head and roll up your sleeves, you can, and quite well. The logic of the steps that lead out of this stalemate is roughly as follows:

- Let's be honest: IT already has plenty of monitoring tools (both free and commercial) that collect some data and store some of it. For a start, stop there. Since nothing more was bought, there was no clear evidence that much more was needed.

- Business users do not care at all about IT's internals, so don't even bring the topic up with them. They care about two things: that the application(s) they work with are available and that they are not slow. More precisely, not even the whole application, but only a limited set of windows and buttons. This small set of KPIs (in effect, business KPIs) must be monitored in addition to everything else and used as the basis for conversations with the business.

- Ideas of cross-correlating countless metrics, automatic dependency discovery and root cause search are fine for science fiction. In reality they do not work. First, a complex IT system always has an (n + 1)-th metric that was forgotten but turned out to be the cause. Second, it is strange to impose the idea of linear dependence on an essentially non-linear IT system whose structure is often known only “in general terms”. Third, without additional external knowledge it is impossible to tell cause from effect (see Spurious correlations), so a dependency search algorithm would have to be very complex and contain a model of the observed system. That takes a long time, costs a lot, and gives an unclear result.

- In any case, problems in IT systems fall into two classes: a) known ones, where, as soon as the symptoms appear, you walk up and “give the box a whack” in the top left corner; b) unknown ones, which have to be investigated and understood manually.
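To illustrate the third argument about spurious correlations, here is a minimal base R sketch on simulated data (the series names are hypothetical and nothing here comes from a real system). Two random walks generated independently of each other often show a sizable correlation coefficient, and the coefficient is symmetric, so on its own it cannot say which series is the cause.

```r
# Simulated data only: two random walks generated independently of each other.
set.seed(42)
n <- 500
business_latency <- cumsum(rnorm(n))  # hypothetical "business" metric
cpu_load         <- cumsum(rnorm(n))  # hypothetical "hardware" metric

# Pearson correlation between two series that share no causal link.
r_xy <- cor(business_latency, cpu_load)
# Swapping the arguments gives the same value: correlation has no direction.
r_yx <- cor(cpu_load, business_latency)

# Correlation of step-to-step changes is usually close to zero, a hint that
# any large correlation of the levels above is spurious.
r_diff <- cor(diff(business_latency), diff(cpu_load))

cat(sprintf("levels: %.2f, reversed: %.2f, differences: %.2f\n",
            r_xy, r_yx, r_diff))
```

Differencing before correlating is only one common sanity check; it does not establish causality either, which is exactly why external knowledge about the system is needed.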
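For the first class of problems, a “known symptom, known action” rulebook can be kept as plain data. The sketch below is hypothetical throughout: the rule names, metric names, thresholds and actions are illustrative assumptions, not taken from any real system. An empty result means the situation is unknown and needs a human.

```r
# Hypothetical rulebook: each known problem is a symptom check plus a fix.
# Metric names, thresholds and actions are illustrative assumptions.
known_problems <- list(
  list(name    = "app_pool_hung",
       symptom = function(m) m$http_5xx_rate > 0.05 && m$cpu_load < 0.1,
       action  = "restart application pool"),
  list(name    = "disk_full",
       symptom = function(m) m$disk_free_pct < 5,
       action  = "rotate and archive logs")
)

# Return the actions of all known problems whose symptoms match the snapshot;
# character(0) means "unknown problem, investigate manually".
diagnose <- function(snapshot, rules = known_problems) {
  hits <- Filter(function(rule) rule$symptom(snapshot), rules)
  vapply(hits, function(rule) rule$action, character(1))
}

snapshot <- list(http_5xx_rate = 0.12, cpu_load = 0.03, disk_free_pct = 40)
diagnose(snapshot)
```

Keeping rules as data makes it easy to grow the list as new problems move from the “unknown” class to the “known” one.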

Thus, the idea of metric correlation can be transformed into something that has a practical implementation:

- We automate known fixes for known problems and set them aside. It can't be helped: sometimes you have to take medication for life.

- We start collecting, preferably with existing tools, the values of the business metrics that matter to business users. These metrics (time series) serve as reference indicators.

- We switch on our heads and limit the hardware metrics to the hardware on which these business applications actually run.

- Instead of talking about neural networks, we first use the “visual correlation” approach: time series of business metrics and of technical metrics suspected of causing the problems are displayed on screen in a form convenient for comparison. IT specialists get a handy tool for changing the sets of metrics under analysis.

- Since the analysis is performed relatively rarely and does not require a real-time reaction, we do not build any intermediate repositories that unload all metrics from all systems. Data for analysis is collected on demand from the existing monitoring, management and diagnostic systems.

- After the first successful steps we can add further processing algorithms, including ones that take into account accumulated knowledge about possible causes and ways of refining the diagnosis.
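The business reference series from the steps above can be as simple as availability and a high percentile of response time per user-facing operation and per hour. The sketch below assumes a hypothetical operation log; the column names, operations and values are made up for illustration, and the 95th percentile is one reasonable choice, not a prescribed one.

```r
# Hypothetical log of user-facing operations ("windows and buttons").
# Column names and values are illustrative assumptions, not a real schema.
ops <- data.frame(
  time = as.POSIXct("2016-09-01 09:00:00", tz = "UTC") +
    c(0, 600, 1200, 3700, 4000, 4300),
  operation = c("open_order", "open_order", "save_order",
                "open_order", "save_order", "save_order"),
  duration_ms = c(800, 1200, 2500, 900, 3100, 2900),
  success = c(TRUE, TRUE, FALSE, TRUE, TRUE, TRUE)
)

# Bucket events into hourly intervals to obtain a reference time series.
ops$hour <- format(ops$time, "%Y-%m-%d %H:00", tz = "UTC")

# Availability: share of successful calls per operation and hour.
avail <- aggregate(success ~ operation + hour, data = ops, FUN = mean)
# Response time: 95th percentile of duration per operation and hour.
p95 <- aggregate(duration_ms ~ operation + hour, data = ops,
                 FUN = function(x) unname(quantile(x, 0.95)))

kpi <- merge(avail, p95, by = c("operation", "hour"))
names(kpi) <- c("operation", "hour", "availability", "p95_ms")
print(kpi)
```

A percentile is used rather than the mean because a few very slow calls are what users actually complain about, and the mean tends to hide them.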
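The “visual correlation” step boils down to stacking a business metric over a suspected technical metric on a shared time axis. Below is a minimal base R sketch with simulated data; the function name `plot_visual_correlation` and the series are hypothetical. It writes a PDF to stay self-contained, whereas the setup described here would render the same plots inside a Shiny dashboard, with the data fetched on demand from existing monitoring.

```r
# Simulated series for illustration; in practice these would be pulled on
# demand from the existing monitoring systems.
set.seed(1)
time_axis <- seq(as.POSIXct("2016-09-01 08:00", tz = "UTC"),
                 by = "5 min", length.out = 120)
cpu_load <- pmin(1, 0.3 + 0.4 * (seq_along(time_axis) %in% 60:80) +
                   rnorm(120, 0, 0.05))
response_ms <- 800 + 2000 * cpu_load + rnorm(120, 0, 100)

# Hypothetical helper: business metric on top, technical metric below,
# both on the same time axis so they can be compared by eye.
plot_visual_correlation <- function(t, business, technical, file,
                                    labels = c("Business metric",
                                               "Technical metric")) {
  pdf(file, width = 8, height = 6)
  on.exit(dev.off())
  # Two panels, one above the other; par() only affects this PDF device.
  par(mfrow = c(2, 1), mar = c(3, 4, 2, 1))
  plot(t, business, type = "l", xlab = "", ylab = labels[1], main = labels[1])
  plot(t, technical, type = "l", xlab = "", ylab = labels[2],
       main = labels[2], col = "firebrick")
  invisible(file)
}

out <- plot_visual_correlation(time_axis, response_ms, cpu_load,
                               tempfile(fileext = ".pdf"),
                               labels = c("Response time, ms", "CPU load"))
```

Swapping the technical series passed to the helper is the “handy tool for changing sets of metrics” in its simplest form; a Shiny input would play that role interactively.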

Will the West save us?

For such cases, Western vendors offer the concept of “umbrella systems”. For our task, however, it is redundant and expensive, since you never know in advance what can happen in IT and in business. Collecting everything possible and then loading it into one system is not an option: it is very expensive. “Marrying” a bunch of heterogeneous systems within the world model of the umbrella vendor also fails: the models in these umbrellas are too simple and consistent neither with each other nor with the original problem. There are a few more pitfalls:

- A Flash/Java GUI for the “umbrella”!

- No full support for the Russian language!

- Deep configuration is done with the mouse plus internal scripting languages, and the sacred knowledge of them costs tens of thousands of dollars.

- The built-in math ends at calculating the mean. Complex algorithms? Come on, that's professional services, get a suitcase of money ready!

- Can you get an answer on what to do with missing data? Data that arrives late, after the fact? Aperiodic time series? Time series with different periodicity? Forget it!
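In R these questions have straightforward answers. A minimal base R sketch, assuming two hypothetical metrics with irregular sampling, a missing value and out-of-order delivery, aligns both onto a common one-minute grid. Linear interpolation via `approx()` is one choice among several; `approx(..., method = "constant")` would carry the last observation forward instead.

```r
# Two hypothetical metrics with different, irregular sampling:
# a business metric sampled roughly every minute, with one missing value,
# and a hardware metric sampled less often and delivered out of order.
t0  <- as.POSIXct("2016-09-01 10:00:00", tz = "UTC")
biz <- data.frame(time  = t0 + c(0, 55, 130, 170, 245, 300),
                  value = c(1.2, 1.3, NA, 1.8, 2.5, 2.4))
hw  <- data.frame(time  = t0 + c(300, 0, 150),   # arrives out of order
                  value = c(0.90, 0.40, 0.60))

# Resample an irregular series onto a regular grid by linear interpolation.
# Missing values are dropped and late data is sorted into place first;
# grid points outside the observed range stay NA (rule = 1).
align_to_grid <- function(df, grid) {
  df <- df[!is.na(df$value), ]
  df <- df[order(df$time), ]
  approx(as.numeric(df$time), df$value,
         xout = as.numeric(grid), rule = 1)$y
}

grid <- seq(t0, by = "1 min", length.out = 6)
aligned <- data.frame(time     = grid,
                      business = align_to_grid(biz, grid),
                      hardware = align_to_grid(hw, grid))
print(aligned)
```

Once both series share one time index, they can be compared, plotted or correlated directly, whatever their original periodicity.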

Is it possible to solve this problem?

Practice has shown that this problem is entirely solvable within the R ecosystem. What we get as output:

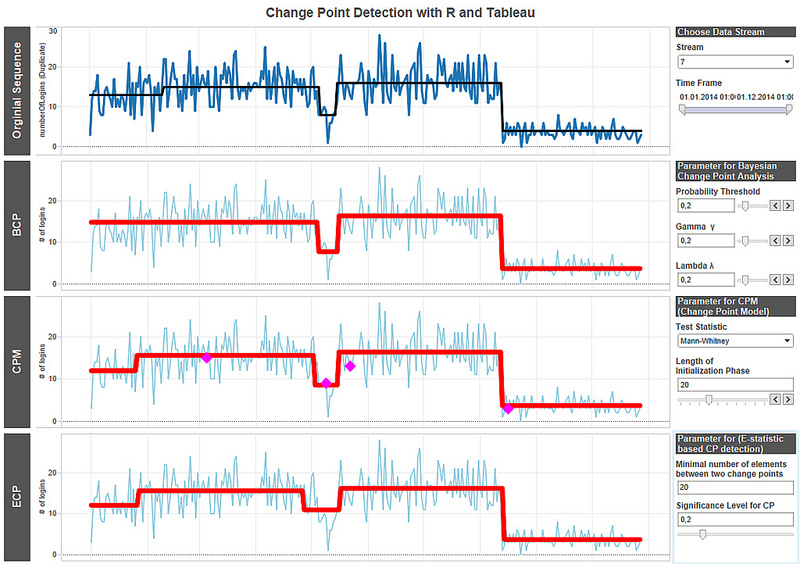

- An analytical console plus Shiny-based dashboards (HTML + JS + CSS). We can both show business “traffic lights” and interactively analyze metrics to search for possible causes without diving into the R console. Everything is in Russian. For certain reasons I cannot publish a screenshot yet, so as an example I link to an ideologically similar illustration (the picture shows R + Tableau, borrowed from here).

- Static ggplot graphics of any complexity (data overlays, transformations, grids, fonts, etc.) plus ample dynamic options via htmlwidgets.

- Mathematical processing of any complexity, limited only by the implementer's imagination. Neural networks and forecasting can be brought in.

- Support for working with time series to suit every taste.

- Convenient data processing (hadleyverse).

- Convenient and easy import of data from any other systems over any protocols. Import logic of any complexity is possible, including complex intermediate preprocessing and import procedures with two or more phases. Imports are easy to adapt when external systems change.

- Equally simple export.

- The ability to develop procedures that automatically make changes in external systems without leaving R.
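As a small end-to-end illustration of the list above (import, processing, business “traffic lights”, export), here is a base R sketch. The CSV content, column names and thresholds (2 s and 5 s) are hypothetical assumptions. In the setup described, the status column would feed a Shiny dashboard, and import would go through whatever protocol the source system offers.

```r
# Hypothetical KPI export from an existing monitoring system, as CSV text.
csv_in <- "operation,p95_ms
open_order,1180
save_order,3090
print_invoice,7400"

kpi <- read.csv(text = csv_in, stringsAsFactors = FALSE)

# Map response times onto business "traffic lights". The thresholds are
# illustrative assumptions; intervals are (-Inf, 2000], (2000, 5000], (5000, Inf).
kpi$status <- as.character(cut(kpi$p95_ms,
                               breaks = c(-Inf, 2000, 5000, Inf),
                               labels = c("green", "yellow", "red"),
                               right = TRUE))

# Export the result for another system or a dashboard.
out_file <- tempfile(fileext = ".csv")
write.csv(kpi, out_file, row.names = FALSE)
print(kpi)
```

Keeping thresholds in one `breaks` vector makes it easy to agree them with the business and change them without touching the rest of the pipeline.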

Conclusion

A possible question is “Why R?”. Approximate answers:

- Based on practical experience, I consider the R ecosystem well suited to “everyday” business tasks. That is why R was used in the problem statement.

- Mathematics + an integrated web interface without web programming + simple import/export and integration mechanisms + convenient ways of working with data.

- Speed and ease of getting the final result.

- I have not been able to find an acceptable analogue of this ecosystem in other languages, Python included, although I searched for a long time. If I missed something similar, please write in the comments.

P.S. It would be extremely interesting if colleagues who have tasks that could potentially be solved with R described them in the comments in anonymized form. Examples of various business problems automated with the R ecosystem, other than the widely discussed big-data recommender systems, have already been given in previous posts. That would give us an occasion to discuss specific issues and approaches.

Previous post: "Gentleman's set of R packages for automating business tasks"

Next post: "Is R not fast enough for you? Looking for hidden reserves"


Source: https://habr.com/ru/post/310108/
