PCI Express 4.0, cables and everything

Since the comments to our previous article touched on extending PCI Express and taking the bus outside the chassis, we should probably tell you a bit more about it.

Let's start from afar - with the history of PCI Express in general and the nuances associated with it. The specification of the first revision appeared back in 2003, while maintaining a speed of 2.5 GTs per lane and aggregation of up to 16 lanes per port (by the way, this is probably the only thing that has not changed - despite timid references and even official support in version 2.0 width in x32, as far as I know, no one of ports of such width really still supports this time). Note that the speed specified in GTs (transactions per second) is not data bits, the actual bit rate is lower due to 8b / 10b coding (for versions prior to 2.1 inclusive).

Over the next years, the evolutionary development of the standard began:

')

• 2005 - release of specification 1.1, containing minor improvements without increasing speed

• 2007 - standard version 2.0, speed on the lane doubled (to 5GTs or 4Gbps)

• 2009 - release of version 2.1, containing many improvements, essentially being a preparation for the transition to the third version

• 2010 - a significant leap, the transition to version 3.0, which, in addition to increasing the channel speed to 8 GTs, brought the transition to a new encoding ( 128b / 130b ), which significantly reduced the overhead of transferring the actual data. That is, if for version 2.0 at a speed of 5 GTs, the actual bit rate was only 4 Gbps, then for version 3.0 at a speed of 8 GTs, the bit rate is ~ 7.87 Gbps - the difference is palpable.

• 2014 - release of specification 3.1. It includes various improvements discussed in working groups.

• 2017 - release of the final specification 4.0 is expected.

Next will be a great digression. As can be seen from the schedule for the release of specifications, the growth of bus speed actually stopped for seven years, while the performance of the components of computing systems and interconnect networks did not even think to stand still. Here we must understand that PCI Express, although it is an independent standard, developed by a working group with a huge number of participants (PCI Special Interest Group - PCI-SIG , we are also members of this group), the direction of its development is nevertheless largely determined by opinion and Intel's position, simply because the vast majority of PCI Express devices are in ordinary home computers, laptops and low-end servers - the realm of x86 processors. And Intel is a large corporation, and it may have its own plans, including slightly going against the wishes of other market participants. And many of these participants were, to put it mildly, dissatisfied with the delay in increasing the speed (especially those involved in the creation of systems for High Performance Computing - HPC, or simply supercomputers). Mellanox, for example, has long rested on the development of InfiniBand in the bottleneck of PCI Express, NVIDIA also clearly suffered from the inconsistency of the PCI Express speed with the needs for data transfer between the GPU. Moreover, purely technical speed could be increased for a long time, but a lot rests on the need to maintain backward compatibility. What it all eventually led to:

• NVIDIA has created its own interconnect ( NVLINK , the first version has a speed of 20 GTs per lane, the second will already have 25 GTs) and declares its readiness to license it to everyone (unfortunately only the host part, end-point licensing is not expected yet)

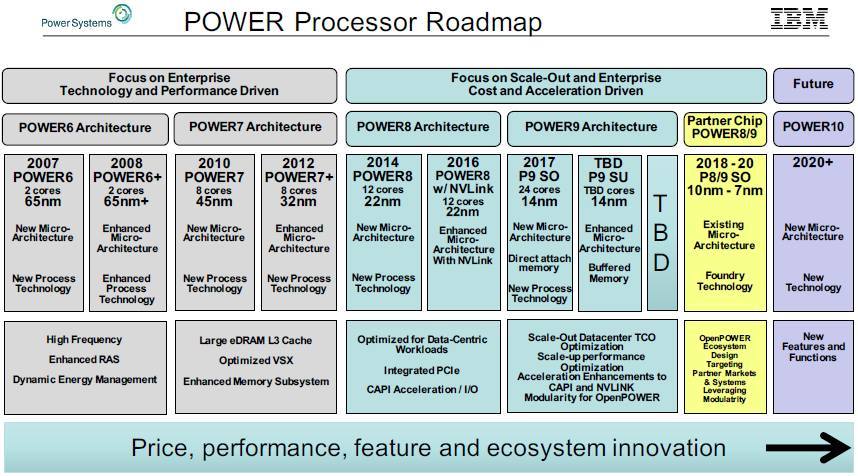

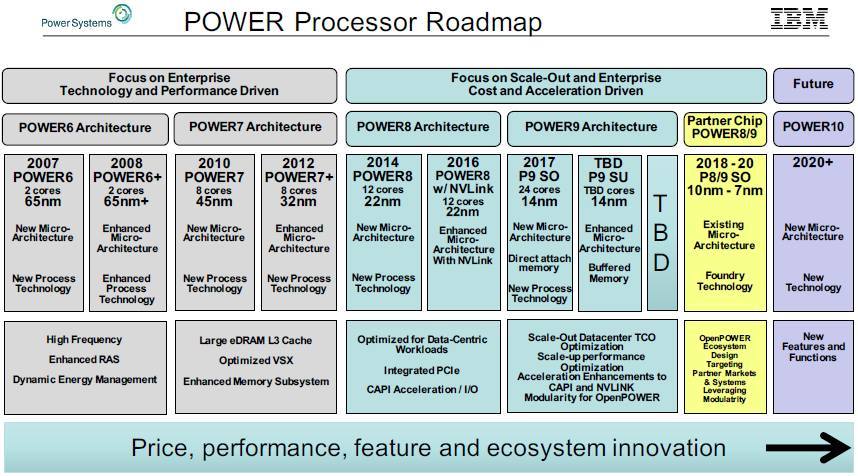

• IBM adds support for NVLINK 1.0 to POWER8 processors (already available on POWER8 + processors)

• In POWER9 (which will hit the market in 2017), IBM will support NVLINK 2.0, and you can use OpenCAPI, the coherent interface for connecting accelerators, on the same physical ports.

• In POWER9, IBM is implementing PCI Express at a speed of 16 GTs, which meets the draft specification 4.0 specification - that is, it looks like Intel will not be the first to support the new standard.

POWER Roadmap

By the way, there is a curious moment regarding the indication of speed. A common option for specifying the speed of operation of a PCI Express device is Gen1, Gen2, Gen3. In fact, this should be treated exactly as an indication of the supported speed, and not compliance with the standard of the corresponding version. That is, for example, no one forbids a device fully compliant with 3.1 standard not to be able to work at speeds above 5 GTs.

Actually, what is good about PCI Express from the point of view of the developer is that it is the most direct and unified (supported by a variety of platforms) way to connect anything to the central processor with minimal overhead. Of course, Intel processors still have QPIs - but this is a bus, access to which is given to a very (VERY!) Limited circle of particularly close companies. In IBM, POWER8 is X-Bus, A-Bus and, in the future (for POWER9), OpenCAPI, but it’s generally better not to think about the cost of licensing the first two, and there’s still no third one (although it should be open). And of course, direct connection via PCI Express is relevant only when you want a lot, quickly and with minimal delay. For all other cases, there is USB, SAS / SATA, Eth and their ilk.

Despite the fact that PCI Express was originally conceived precisely for connecting components inside a computer, the desire to connect something with a cable appeared quickly enough. In everyday life, this is usually not required (well, except for laptops, sometimes I would like to connect an external video card or something like that), but for servers, especially with the advent of PCI Express switches, this has become very relevant - the required number of slots is sometimes not so just fit inside a single chassis. And with the development of GPU and computing interconnect networks, especially with the advent of GPU Direct technology, the desire to have many devices connected via PCI Express to one host only increased.

The first options for cabling PCI Express were standardized in 2007 (specification 1.0), the second revision was released in 2012, keeping the type of connectors and cable unchanged. This is not to say that such a connection is very widespread (the niche is still quite narrow), but nevertheless several large vendors produced both connectors and cables, including active optics. One of the most well-known companies offering various expansion chassis options with a PCI Express bus connection cable is One Stop Systems.

PCI Express External Connection Cable

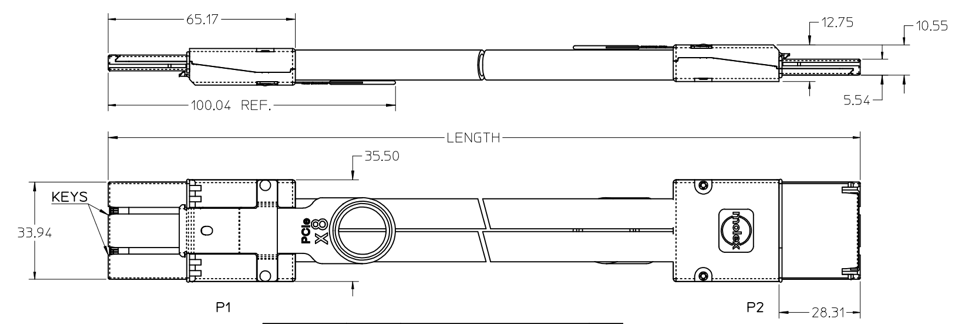

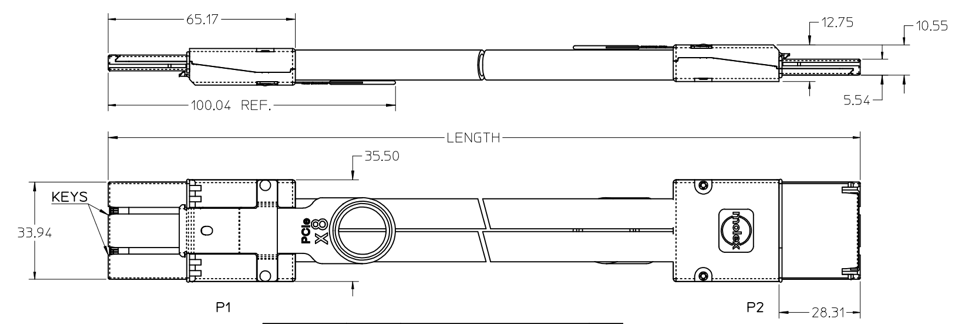

However, the cable (and connector), which were chosen initially, is not very convenient today. The first (and quite substantial) trouble is that it is impossible to place the number of connectors required to output an x16-wide port on one low-profile card (more precisely, a special connector for x16 can be used, but at the same time, universality is lost in terms of using smaller ports Yes, and this type of connector somehow did not stick). The second disadvantage comes from the fact that this type of cable is not used anywhere else.

Meanwhile, there is a standard in the industry with a long history of cable usage, namely SAS. And the current version of SAS 3.0 works at a speed of 12GTs, which is one and a half times higher than the speed of PCI Express Gen3, that is, SAS cables are also well suited for connecting PCI Express through them. In addition, Mini-SAS HD connectors are still very convenient because there is 4 lanes at once through a single cable, and there are assemblies for 2 and 4 connectors, which makes it possible to use x8 and x16 wide ports. The dimensions of the connectors are compact enough for an assembly of 4 connectors to fit on a low-profile map. An additional advantage of these cables is that the Tx and Rx signals are separated in the connector itself and in the cable assembly, this reduces their mutual influence. Accordingly, now more and more solutions, where it is necessary to bring the PCI Express cable out of the chassis, use Mini-SAS HD.

Mini-SAS HD cable and connectors

As a consequence of the above, as well as the fact that SAS 4.0 will be released in the near future, which will have a speed of 24GTs and retain the cables of the same form factor (Mini-SAS HD), the participants of PCI-SIG decided to standardize the use of this type of cables for external PCI Express connection (including revision 4.0 in the future).

Now a little about the nuances of using cables (any) and the problems that have to be addressed. The cables of interest to us are of two types - passive copper and active optical. With copper problems less, but for them you still need to consider the following points:

• when using passive cables, for reasons of signal integrity, it is necessary to install either red-drives or a PCI Express switch on the adapter board; a switch is needed if you want to be able to bifurcate the x16 port coming from the host to more ports (for example, 2x8 or 4x4), and also if the far side does not support working with a separate 100MHz Reference Clock or you need to provide an opaque bridge (NT Bridge a) between two hosts;

• if you need an aggregated link (x8, x16) you should pay particular attention to the allowable cable length variation of a particular manufacturer (it is especially large for long cables, and then you can get a significant length skew between the lanes of one port, which exceeds the tolerances set in the standard );

• please note that in the Mini-SAS HD cable only four diffs pass. the pairs and lines of the ground, that is, the entire set of sideband signals necessary for full-fledged PCI Express operation, cannot be stretched there; This may not be critical if the cable is used to connect two switches, but in the case when you just need to connect the endpoint remotely, you may have to use additional cables to loop through the reset signals and control the Hot Plug;

• It is probably unnecessary to mention that both sides must be grounded, otherwise a parasitic current may occur through the ground lines of the cable, which is completely undesirable.

The use of active optical cables makes it possible not to think about some of the issues that have to be solved when using passive copper (redrawers can be avoided, since the transceiver itself is the end point of the electric signal, grounding also ceases to be disturbed, since the two sides of the cable are galvanically isolated), at the same time, the optics not only does not solve the remaining problems inherent in passive cables, but also introduces new ones inherent only in it:

• in addition to the restriction on the same length of cables, there is also a restriction on their identity - it is highly undesirable to use cables from different manufacturers within the same port, since they may have different delay on the transceivers;

• optical transceivers do not support electrical idle status transmission;

• surprise - active transceivers are very hot, and they need to be cooled;

There may also be certain nuances related to the transceiver impedance, their signal levels and termination.

Of course, Mini-SAS HD is not the only type of cables and connectors that can be used to connect PCI Express. You can recall, for example, classic QSFP or CXP, which are quite suitable for this purpose, or think about more exotic options like installing an optical transceiver directly to the board and leaving the card immediately with optics (Avago Broadcom has many suitable options, well, or for example, Samtec FireFly ) - but all of these options are significantly more expensive or not very convenient based on the dimensions of the connectors.

In addition to the Mini-SAS HD standardization initiative mentioned above as a cable for external connections, PCI-SIG is also developing a new cable standard, which, although it is called OCuLink (Optical & Cuprum Link), will most likely mean only passive copper cables like for internal (within the chassis), and for external connections. Connectors and cables of this standard are quite compact, there are already serial products on the market that correspond to this standard that has not yet been released (Molex has it called NanoPitch , Amphenol also offers cables of this form factor, including active ones). Unfortunately, these connectors also do not imply placing four connectors on a low-profile card. Combined with the fact that none of the vendors with whom we communicate, is not planning to make active optics with such connectors, this is unlikely to promote the use of this type of cables for external connections. But as an option for internal cables, this is quite interesting, moreover, we have already seen projects using them and are going to use this type of cables in our server to connect the disk controller to the motherboard.

OCuLink internal cable

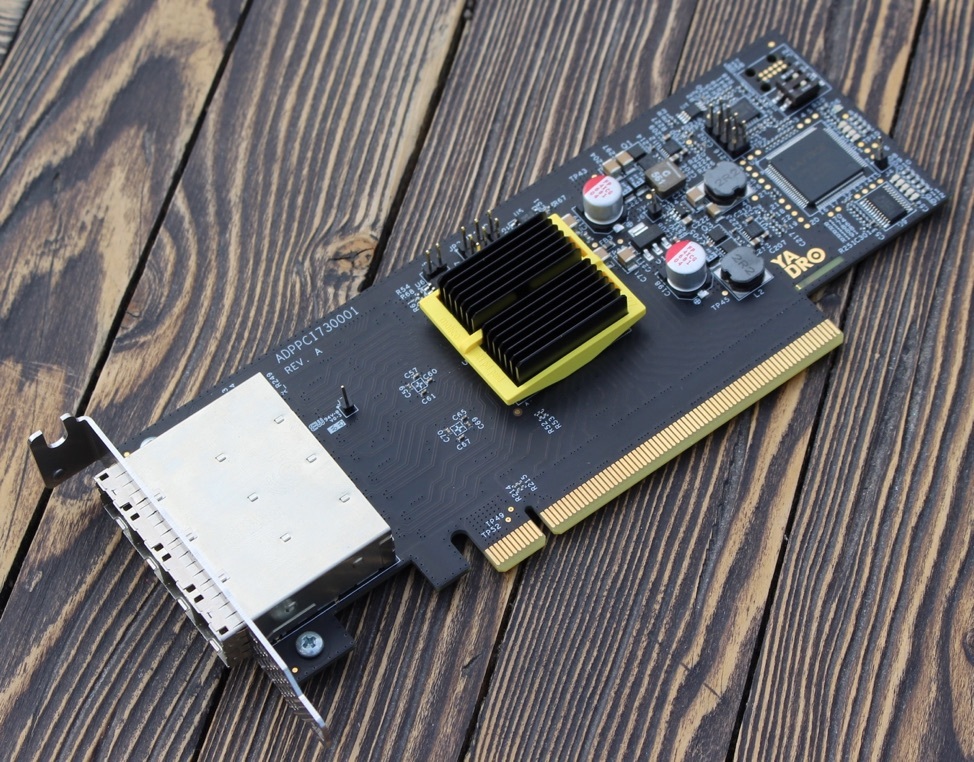

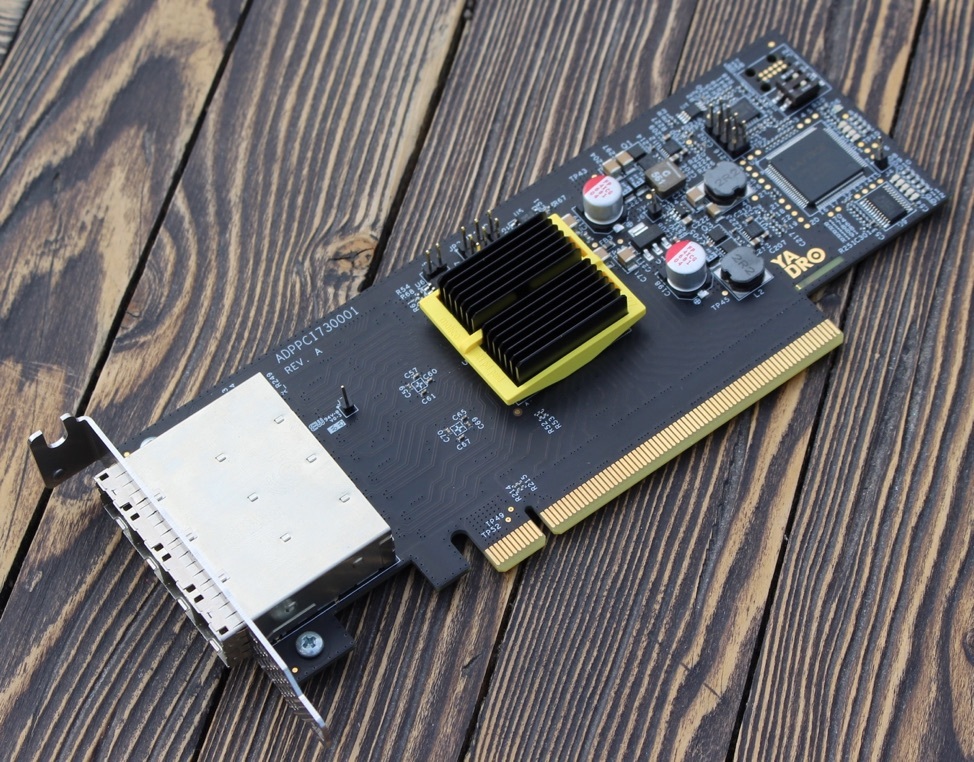

Now a little about our products. We have a project, we will probably also talk about it in more detail later, which involves creating a chassis with an advanced PCI Express switching topology and connecting it to several hosts. It is clear that in this case we cannot do without PCI Express output via cables, and for this we made an adapter card based on the PCI Express switch PLX (which was bought by Avago, which was also renamed Broadcom after it, too, bought it - In general, these acquisitions are already fed up, so we will still call it PLX). For our solution, we used Mini-SAS HD cables - yet this seems to us the best option, and judging by the direction of the PCI-SIG operation, we are not alone in this conviction.

Self-designed adapter for PCI Express cable output

After receiving and testing the first samples, we found with some surprise that using high-quality passive cables it is possible to ensure bus performance at a speed of 8GTs (Gen3) through a cable up to 10 meters long (we simply did not see longer than passive cables). And if more is needed, then we can work with active optical cables (checked - it works).

In fact, putting a fairly expensive PCI Express switch chip in our project only makes sense on one side — the host side — to allow bifurcation of port x16 to four ports on x4. At the other end of the cable, it is enough to put the adapter with the re-drivers, because in our version, everything will be on this side and so connect to the PCI Express switch, which can be programmed to the required port splitting.

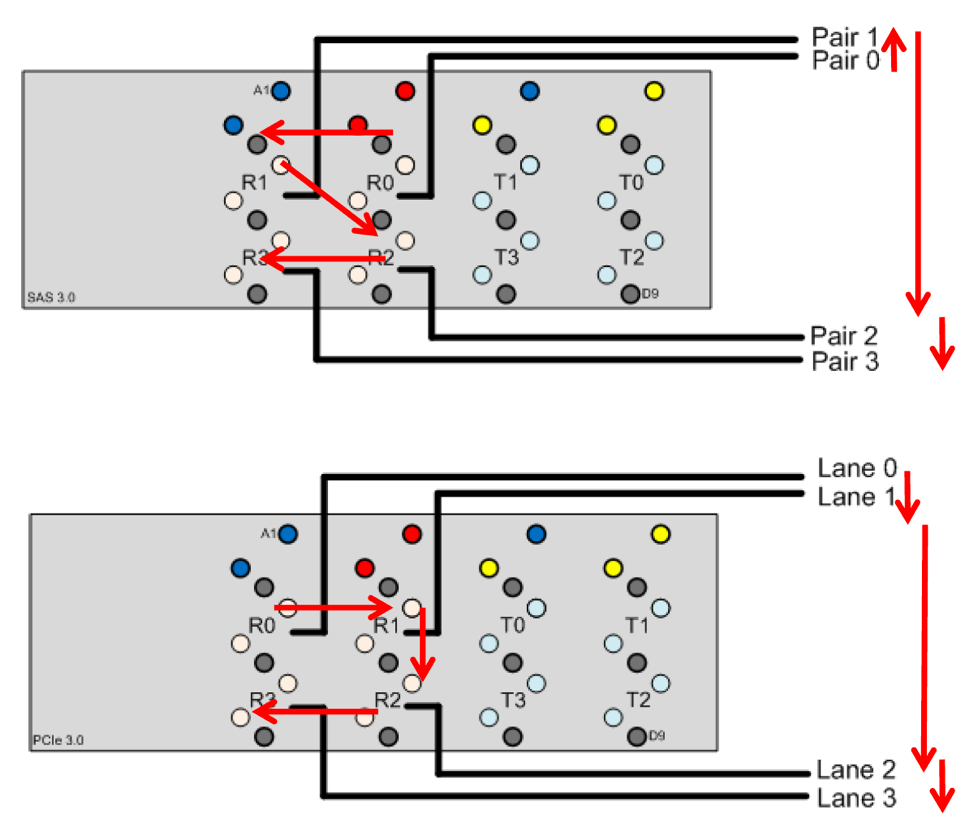

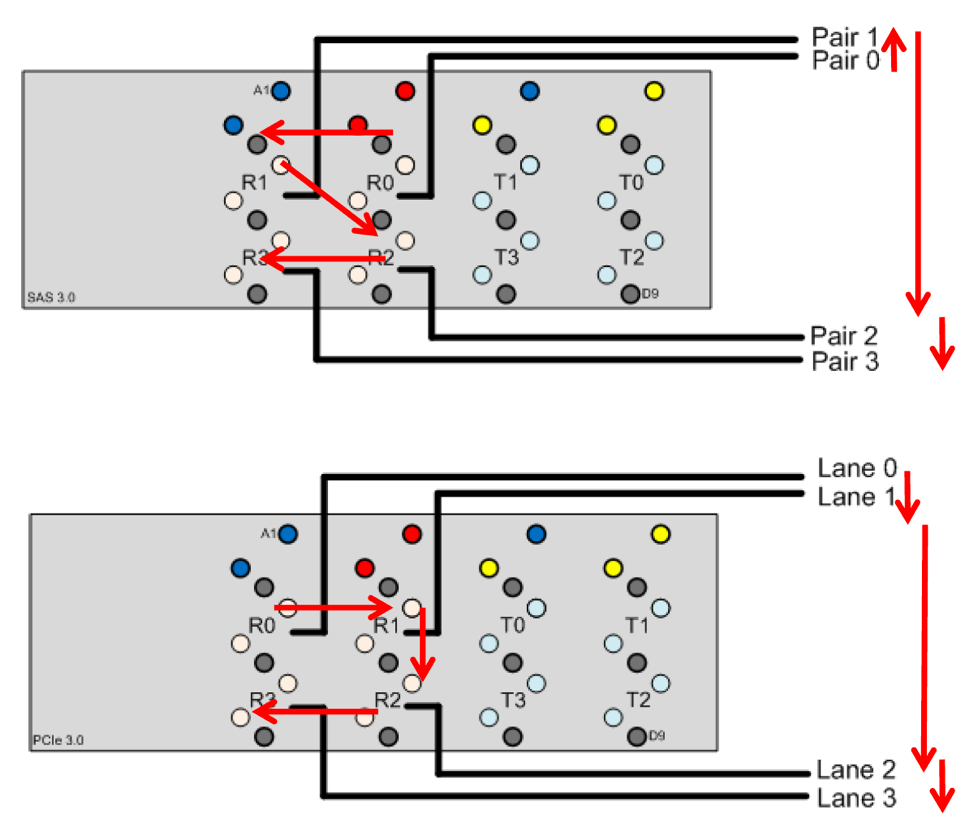

When using Mini-SAS HD for the transfer of PCI Express, you should pay attention to one more thing. The numbering of pairs in the connector, the estimated SAS, is not very convenient to breakout in the case of PCI Express. As long as you do not intend to work with third-party equipment, this is not critical - you can connect as you like at all. But if there is a desire to ensure compatibility with other products in the future, it is better to adhere to the PCI-SIG recommendation and change the order of connecting lanes.

Recommended for PCI Express pairing on the connector

A small disclaimer: everything mentioned below can be found with Google. Of course, we know some things in much more detail than we can share under the terms of our NDAs.

History

Let's start from the beginning, with the history of PCI Express in general and the nuances associated with it. The first revision of the specification appeared back in 2003, with a rate of 2.5 GT/s per lane and aggregation of up to 16 lanes per port. (By the way, the maximum port width is probably the only thing that has not changed since then: despite timid mentions, and even official support for x32 in version 2.0, as far as I know nobody actually implements ports that wide to this day.) Note that a rate given in GT/s (transfers per second) is not a data bit rate: the actual bit rate is lower because of 8b/10b coding (in versions up to and including 2.1).

Over the following years, the standard evolved step by step:


• 2005: specification 1.1, with minor improvements and no speed increase

• 2007: version 2.0; the per-lane rate doubled (to 5 GT/s, or 4 Gb/s of data)

• 2009: version 2.1, with many improvements, essentially preparing the transition to the third version

• 2010: a significant leap to version 3.0, which, in addition to raising the lane rate to 8 GT/s, introduced a new encoding (128b/130b) that greatly reduced the coding overhead. For version 2.0 at 5 GT/s the actual bit rate was only 4 Gb/s, whereas for version 3.0 at 8 GT/s it is about 7.88 Gb/s: a palpable difference.

• 2014: specification 3.1, incorporating various improvements discussed in the working groups.

• 2017: the final 4.0 specification is expected.
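To make the coding overhead concrete, here is a small Python sketch that turns the per-lane rates above into usable bandwidth. It is an illustration, not a reference calculator: it accounts only for line coding (8b/10b for Gen1/Gen2, 128b/130b from Gen3 on), the Gen4 entry assumes the 16 GT/s rate of the 4.0 draft, and protocol overhead (packet headers, DLLPs, flow control) is deliberately ignored, so real throughput is lower.

```python
"""Usable PCI Express bandwidth after line coding (protocol overhead ignored)."""

# GenN -> (line rate in GT/s, line-coding efficiency)
GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b
    2: (5.0, 8 / 10),      # 8b/10b
    3: (8.0, 128 / 130),   # 128b/130b
    4: (16.0, 128 / 130),  # 128b/130b, rate from the 4.0 draft
}


def lane_bit_rate_gbps(gen: int) -> float:
    """Data bit rate of a single lane in Gb/s, one direction."""
    rate_gt, efficiency = GENERATIONS[gen]
    return rate_gt * efficiency


def port_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Data bandwidth of an xN port in GB/s, one direction."""
    return lane_bit_rate_gbps(gen) * lanes / 8


if __name__ == "__main__":
    for gen in sorted(GENERATIONS):
        print(f"Gen{gen}: {lane_bit_rate_gbps(gen):.2f} Gb/s per lane, "
              f"x16 = {port_bandwidth_gbytes(gen, 16):.2f} GB/s")
```

Running it gives 4.00 Gb/s per lane for Gen2 and 7.88 Gb/s for Gen3, matching the figures above; an x16 Gen3 port comes out at roughly 15.75 GB/s in each direction before protocol overhead.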

Consequences

Now for a long digression. As the release schedule shows, the growth of bus speed effectively stalled for seven years, while the performance of computing components and interconnect networks kept rising. It should be understood that although PCI Express is an independent standard developed by a working group with a huge number of members (the PCI Special Interest Group, PCI-SIG; we are members too), the direction of its development is still largely determined by Intel's opinion and position, simply because the vast majority of PCI Express devices live in ordinary home computers, laptops and low-end servers: the realm of x86 processors. Intel is a large corporation with its own plans, which may run somewhat against the wishes of other market participants. Many of those participants were, to put it mildly, dissatisfied with the delay in raising the speed (especially those building High Performance Computing (HPC) systems, or simply supercomputers). Mellanox, for example, has long been held back by the PCI Express bottleneck in developing InfiniBand, and NVIDIA clearly suffered from the mismatch between PCI Express speed and the data transfer needs between GPUs. Moreover, from a purely technical standpoint the speed could have been raised long ago, but a lot hinges on the need to maintain backward compatibility. Here is what all of this eventually led to:

• NVIDIA has created its own interconnect ( NVLINK , the first version has a speed of 20 GTs per lane, the second will already have 25 GTs) and declares its readiness to license it to everyone (unfortunately only the host part, end-point licensing is not expected yet)

• IBM is adding NVLink 1.0 support to its POWER8 line (it is already available in POWER8+ processors)

• In POWER9 (due on the market in 2017), IBM will support NVLink 2.0, and the same physical ports can also be used for OpenCAPI, a coherent interface for attaching accelerators.

• Also in POWER9, IBM is implementing PCI Express at 16 GT/s, which matches the draft 4.0 specification; so it looks like Intel will not be the first to support the new standard.

POWER Roadmap

Remarks

By the way, there is a curious point about how speed is indicated. The speed of a PCI Express device is commonly given as Gen1, Gen2 or Gen3. This should be read strictly as the supported speed, not as compliance with the corresponding version of the standard. For example, nothing prevents a device fully compliant with the 3.1 standard from being unable to run faster than 5 GT/s.
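A minimal Python sketch of that distinction, assuming the simplified rule that a link trains to the highest rate both ends support (real link training also involves equalization and can fall back to a lower rate on a marginal channel). The `Port` class and its fields are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass

# Speed label -> per-lane rate in GT/s; the label says nothing about spec revision.
GEN_RATE_GT = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0}


@dataclass(frozen=True)
class Port:
    name: str
    spec_revision: str  # specification the device complies with, e.g. "3.1"
    max_gen: int        # highest speed the device actually supports


def negotiated_gen(a: Port, b: Port) -> int:
    """Highest common speed of two link partners (simplified link training)."""
    return min(a.max_gen, b.max_gen)


if __name__ == "__main__":
    root = Port("root port", spec_revision="3.1", max_gen=3)
    # Fully 3.1-compliant, yet limited to 5 GT/s: a "Gen2" device.
    card = Port("endpoint", spec_revision="3.1", max_gen=2)
    gen = negotiated_gen(root, card)
    print(f"{card.name} (spec {card.spec_revision}) links at Gen{gen}, "
          f"{GEN_RATE_GT[gen]} GT/s")
```

Both ends report revision 3.1, yet the link ends up at Gen2, which is exactly why the Gen label and the spec revision should be kept apart.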

Actually, what makes PCI Express good from a developer's point of view is that it is the most direct and unified (supported by a variety of platforms) way to connect anything to the CPU with minimal overhead. Of course, Intel processors also have QPI, but access to that bus is given only to a very (VERY!) limited circle of particularly close companies. IBM POWER8 has X-Bus and A-Bus and, in the future (for POWER9), OpenCAPI; but it is better not to think about the cost of licensing the first two, and the third does not exist yet (although it is supposed to be open). And of course, a direct PCI Express connection is relevant only when you want to move a lot of data, fast and with minimal latency. For everything else there are USB, SAS/SATA, Ethernet and the like.

Closer to cables

Although PCI Express was originally conceived for connecting components inside a computer, the desire to connect something over a cable appeared quickly enough. In everyday life this is usually unnecessary (except for laptops: sometimes you would like to attach an external video card or something like that), but for servers, especially with the advent of PCI Express switches, it has become very relevant: the required number of slots sometimes simply does not fit in a single chassis. And with the development of GPUs and computing interconnect networks, especially with the advent of GPUDirect technology, the desire to connect many devices to one host via PCI Express has only grown.

The first options for PCI Express cabling were standardized in 2007 (specification 1.0); the second revision was released in 2012, keeping the connector and cable types unchanged. Such connections cannot be called widespread (the niche is still quite narrow), but several large vendors nevertheless produced both connectors and cables, including active optical ones. One of the best-known companies offering various expansion chassis connected to the PCI Express bus by cable is One Stop Systems.

PCI Express External Connection Cable

However, the cable (and connector) chosen initially is not very convenient today. The first (and quite substantial) problem is that the number of connectors required to bring out an x16 port cannot be placed on one low-profile card. (More precisely, a special x16 connector can be used, but then the flexibility of using narrower ports is lost; besides, this connector type somehow never caught on.) The second drawback is that this type of cable is not used anywhere else.

Meanwhile, the industry has a standard with a long history of cable use, namely SAS. The current version, SAS 3.0, runs at 12 GT/s, one and a half times the PCI Express Gen3 rate, so SAS cables are also well suited to carrying PCI Express. In addition, Mini-SAS HD connectors are very convenient because a single cable carries four lanes at once, and there are assemblies of 2 and 4 connectors, which allow x8 and x16 ports. The connectors are compact enough for a 4-connector assembly to fit on a low-profile card. Another advantage of these cables is that the Tx and Rx signals are separated both in the connector itself and in the cable assembly, which reduces crosstalk between them. Consequently, more and more solutions that need to take PCI Express outside the chassis use Mini-SAS HD.

Mini-SAS HD cable and connectors

Because of all this, and because SAS 4.0, due soon, will run at 24 GT/s while keeping the same cable form factor (Mini-SAS HD), the PCI-SIG members decided to standardize this type of cable for external PCI Express connections (including revision 4.0 in the future).
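The connector count and rate headroom from the last few paragraphs reduce to simple arithmetic. A sketch in Python, using the SAS and PCI Express rates exactly as quoted above and assuming the usual 4-lane (x4) Mini-SAS HD connector; the names are illustrative, not from any specification.

```python
import math

LANES_PER_CONNECTOR = 4  # one x4 Mini-SAS HD connector carries four lanes

# (SAS line rate, PCIe line rate) in GT/s, as quoted in the text
RATE_PAIRS = {
    "SAS 3.0 vs PCIe Gen3": (12.0, 8.0),
    "SAS 4.0 vs PCIe Gen4": (24.0, 16.0),
}


def connectors_needed(port_width: int) -> int:
    """Number of Mini-SAS HD connectors needed for an xN port."""
    return math.ceil(port_width / LANES_PER_CONNECTOR)


def rate_headroom(sas_rate: float, pcie_rate: float) -> float:
    """How many times the SAS-rated rate exceeds the PCIe rate it must carry."""
    return sas_rate / pcie_rate


if __name__ == "__main__":
    for width in (4, 8, 16):
        print(f"x{width}: {connectors_needed(width)} connector(s)")
    for label, (sas, pcie) in RATE_PAIRS.items():
        print(f"{label}: {rate_headroom(sas, pcie):.1f}x headroom")
```

An x16 port needs four connectors, which is why fitting a 4-connector assembly on a low-profile bracket matters. The headroom is only a ratio of nominal rates; it says nothing about the loss budget of a particular cable.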

What to consider when transferring PCI Express over cable

Now a little about the nuances of using cables (of any kind) and the problems that have to be solved. The cables of interest to us come in two types: passive copper and active optical. Copper has fewer problems, but you still need to consider the following points:

• when using passive cables, signal integrity requires either redrivers or a PCI Express switch on the adapter board; a switch is needed if you want to bifurcate the x16 port coming from the host into several ports (for example, 2x8 or 4x4), if the far side does not support operation with a separate 100 MHz reference clock, or if you need a non-transparent bridge (NT bridge) between two hosts;

• if you need an aggregated link (x8, x16), pay particular attention to the length tolerance of a particular manufacturer's cables (it is especially large for long cables, and you can end up with a length skew between the lanes of one port that exceeds the tolerances set by the standard);

• note that a Mini-SAS HD cable carries only the lanes' differential pairs and ground lines, so the full set of sideband signals needed for complete PCI Express operation cannot be routed through it; this may not matter if the cable connects two switches, but if you just need to attach an endpoint remotely, you may have to use additional cables to carry the reset signals and Hot Plug control;

• it is probably unnecessary to mention that both sides must be grounded; otherwise a parasitic current may flow through the cable's ground lines, which is highly undesirable.
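The first point above is essentially a decision rule. Here is a Python sketch of it; the `LinkRequirements` fields are hypothetical names for the three conditions listed, and the channel loss budget, which ultimately decides how much conditioning is needed, is not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkRequirements:
    bifurcate: bool                 # split the host x16 port, e.g. into 2x8 or 4x4
    far_side_separate_refclk: bool  # far side can run with a separate 100 MHz refclk
    host_to_host: bool              # link joins two hosts and needs an NT bridge


def conditioning_for_passive_cable(req: LinkRequirements) -> str:
    """Choose redrivers or a PCIe switch for a passive-copper adapter board."""
    reasons = []
    if req.bifurcate:
        reasons.append("bifurcation")
    if not req.far_side_separate_refclk:
        reasons.append("no separate refclk support on far side")
    if req.host_to_host:
        reasons.append("NT bridge")
    if reasons:
        return "switch: " + ", ".join(reasons)
    # Nothing requires a switch, but signal integrity still calls for redrivers.
    return "redrivers"


if __name__ == "__main__":
    print(conditioning_for_passive_cable(LinkRequirements(True, True, False)))
    print(conditioning_for_passive_cable(LinkRequirements(False, True, False)))
```

Returning the reasons along with the choice makes it easy to see which single requirement forces the more expensive switch.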

Active optical cables remove some of the issues that have to be solved with passive copper: redrivers can be avoided, since the transceiver itself terminates the electrical signal, and grounding is no longer a concern, since the two ends of the cable are galvanically isolated. At the same time, optics not only fails to solve the remaining problems of passive cables but also introduces new ones of its own:

• besides the requirement that cables be of equal length, they must also be identical: mixing cables from different manufacturers within one port is highly undesirable, since their transceivers may have different delays;

• optical transceivers do not support transmitting the electrical idle state;

• surprise: active transceivers run very hot and need cooling.

There may also be nuances related to transceiver impedance, signal levels and termination.
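The matching rules for an aggregated port (equal lengths for copper and optics, plus a single manufacturer for optics) can be checked mechanically. The sketch below converts length mismatch into delay skew; the 5 ns/m propagation delay and the skew budget passed in are assumptions to be replaced with figures from the cable datasheet and the specification, and the `Cable` type and `check_port` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

NS_PER_METER = 5.0  # assumed propagation delay; take the real value from the datasheet


@dataclass(frozen=True)
class Cable:
    vendor: str
    length_m: float       # actual length, including manufacturing tolerance
    optical: bool = False


def check_port(cables: list[Cable], skew_budget_ns: float) -> list[str]:
    """List problems with the cables that together form one aggregated port."""
    problems = []
    lengths = [c.length_m for c in cables]
    skew_ns = (max(lengths) - min(lengths)) * NS_PER_METER
    if skew_ns > skew_budget_ns:
        problems.append(f"skew {skew_ns:.1f} ns exceeds budget {skew_budget_ns:.1f} ns")
    if any(c.optical for c in cables) and len({c.vendor for c in cables}) > 1:
        problems.append("optical cables from different vendors in one port")
    return problems


if __name__ == "__main__":
    # Hypothetical x16 port built from four x4 cables, one noticeably longer.
    port = [Cable("A", 5.00), Cable("A", 5.05), Cable("A", 4.98), Cable("A", 5.60)]
    print(check_port(port, skew_budget_ns=2.0))
```

In the example, the one cable that is about 0.6 m longer pushes the skew past the assumed budget. Skew between the pairs inside a single cable is not modelled here.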

Alternatives to Mini-SAS HD

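Of course, Mini-SAS HD is not the only type of cable and connector that can carry PCI Express. You can recall, for example, the classic QSFP or CXP, which are quite suitable for this purpose, or consider more exotic options, such as mounting an optical transceiver directly on the board so that the signal leaves the card as optics right away (Avago/Broadcom has many suitable options, or, for example, Samtec FireFly). But all of these options are significantly more expensive or not very convenient because of the connector dimensions.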

In addition to the Mini-SAS HD standardization initiative for external connections mentioned above, PCI-SIG is also developing a new cable standard. Although it is called OCuLink (Optical-Copper Link), it will most likely cover only passive copper cables, for both internal (within the chassis) and external connections. The connectors and cables of this standard are quite compact, and serial products corresponding to this not-yet-released standard are already on the market (Molex calls its version NanoPitch; Amphenol also offers cables of this form factor, including active ones). Unfortunately, these connectors also do not allow four connectors on a low-profile card. Combined with the fact that none of the vendors we talk to plans to make active optics with such connectors, this is unlikely to promote this type of cable for external connections. As an option for internal cables, however, it is quite interesting: we have already seen projects using them, and we are going to use this type of cable in our server to connect the disk controller to the motherboard.

OCuLink internal cable

What we do

Now a little about our products. We have a project (we will probably talk about it in more detail later) that involves building a chassis with an advanced PCI Express switching topology and connecting it to several hosts. Clearly, in this case we cannot do without bringing PCI Express out over cables, and for this we made an adapter card based on a PLX PCI Express switch (PLX was bought by Avago, which in turn renamed itself Broadcom after buying Broadcom; frankly, we are tired of these acquisitions, so we will keep calling it PLX). For our solution we used Mini-SAS HD cables: this still seems the best option to us, and judging by the direction of PCI-SIG's work, we are not alone in this conviction.

Self-designed adapter for PCI Express cable output

After receiving and testing the first samples, we were somewhat surprised to find that with high-quality passive cables the bus runs at 8 GT/s (Gen3) over a cable up to 10 meters long (we simply have not seen passive cables longer than that). And if more is needed, active optical cables work too (we checked).

In fact, putting a fairly expensive PCI Express switch chip in our project only makes sense on one side — the host side — to allow bifurcation of port x16 to four ports on x4. At the other end of the cable, it is enough to put the adapter with the re-drivers, because in our version, everything will be on this side and so connect to the PCI Express switch, which can be programmed to the required port splitting.
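On the host side, the switch presents four x4 downstream ports, one per connector of a 4-connector Mini-SAS HD assembly. A Python sketch of that lane and connector assignment; the contiguous lane grouping and the connector numbering are hypothetical, since the actual lane-to-port mapping is set in the switch configuration.

```python
from __future__ import annotations

LANES_PER_CONNECTOR = 4  # x4 Mini-SAS HD connector


def split_lanes(total_lanes: int, port_width: int) -> list[range]:
    """Partition a switch's downstream lanes into equal, contiguous ports."""
    if port_width <= 0 or total_lanes % port_width:
        raise ValueError("total_lanes must be a positive multiple of port_width")
    return [range(start, start + port_width)
            for start in range(0, total_lanes, port_width)]


def connectors_for_ports(ports: list[range]) -> list[range]:
    """Assign each port consecutive connectors, four lanes per connector."""
    assignments, next_connector = [], 0
    for lanes in ports:
        count = -(-len(lanes) // LANES_PER_CONNECTOR)  # ceiling division
        assignments.append(range(next_connector, next_connector + count))
        next_connector += count
    return assignments


if __name__ == "__main__":
    for width in (4, 8):  # 4x4 (as in our design) or 2x8
        ports = split_lanes(16, width)
        for port, conns in zip(ports, connectors_for_ports(ports)):
            print(f"x{width} port lanes {port.start}-{port.stop - 1} "
                  f"-> connector(s) {list(conns)}")
```

With a 4x4 split every port gets exactly one cable, so each remote chassis can be attached independently; a 2x8 split pairs the connectors instead.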

A bit about compatibility

When using Mini-SAS HD to carry PCI Express, you should pay attention to one more thing. The pair numbering in the connector, designed for SAS, is inconvenient for PCB breakout in the PCI Express case. As long as you do not intend to work with third-party equipment, this is not critical: you can wire it however you like. But if you want to ensure compatibility with other products in the future, it is better to follow the PCI-SIG recommendation and change the order in which the lanes are connected.

Recommended PCI Express pair assignment on the connector

Source: https://habr.com/ru/post/309898/
