Google Device Lab Winners: Explore Project Tango

Hello colleagues! Our small company, Vizerra, develops custom projects and its own products in augmented and virtual reality (AR/VR) for a variety of customers. Almost every project calls for some new and unexpected solution. The AR/VR market is very young, and many solutions are invented in the course of projects; this is how the field develops.

This article is by Alexander Lavrov, a winner of the "Device Lab from Google" competition.

For this reason, we constantly try new hardware with an eye to using it in projects. Today we want to talk about Project Tango, a device that is already famous but poorly covered on the Russian-speaking Internet. It was recently renamed simply Tango, marking its transition to the commercial stage, and devices for end users have begun to appear.

Our acquaintance with Project Tango began in the spring of this year, when we had the idea of trying markerless tracking for a number of our projects.

It turned out that getting a device for experiments is very difficult. The Skolkovo Foundation helped by introducing us to Google's Russian office. The people there were very responsive and lent us the much-desired device.

We quickly achieved the results we needed, showed our tests at Startup Village and, with a sense of accomplishment, returned the device to Google.

A couple of months ago we learned that Google Device Lab had started and decided to repay good with good by sharing our experience with other developers, so we sat down to write this article. Its concept changed several times along the way. As usual, we wanted to do something useful rather than describe yet another project of ours. In the end, we decided that the most useful thing would be a full Russian-language review of Project Tango that takes into account all the bumps we hit. We then combined disparate sources so that the reader comes away with a fairly complete picture.

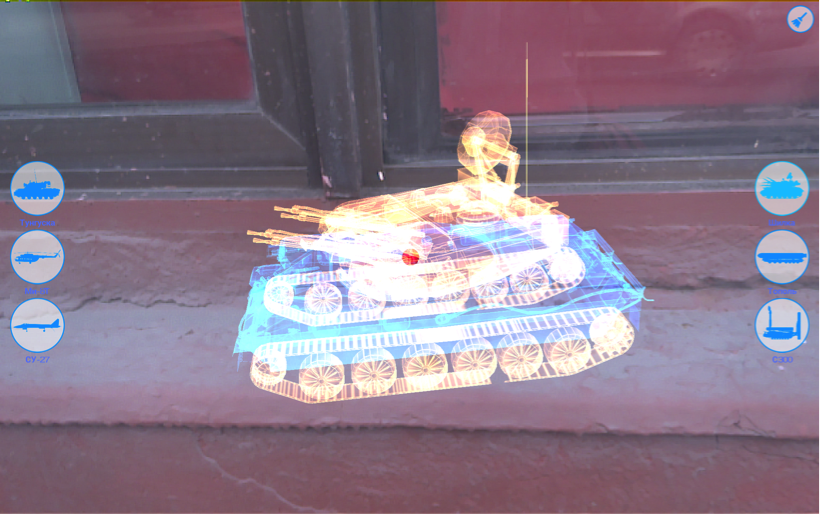

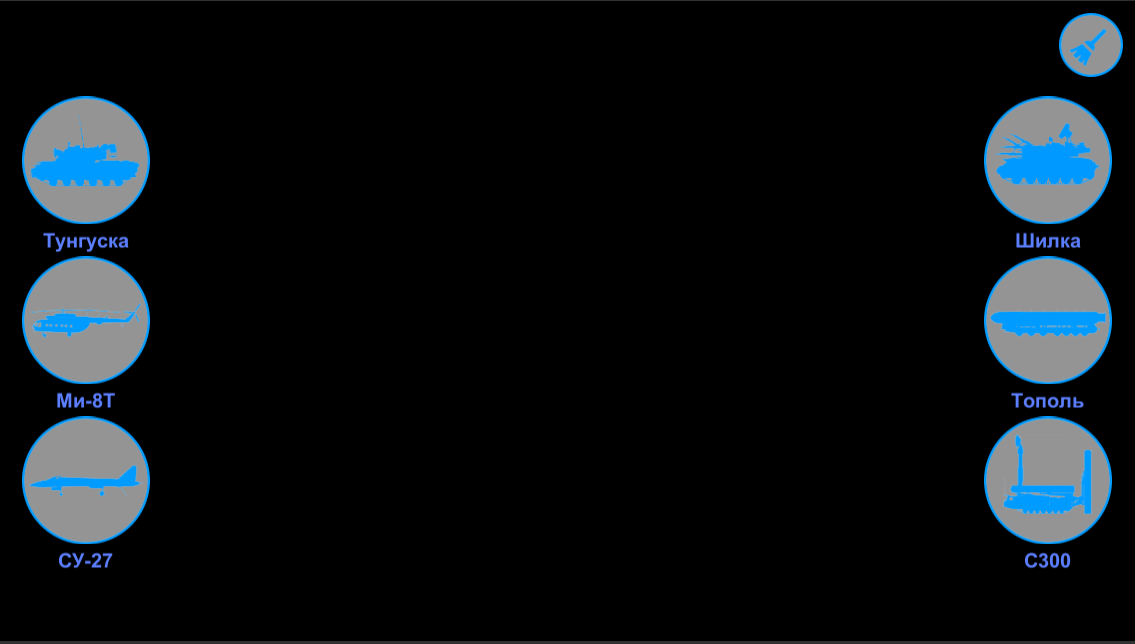

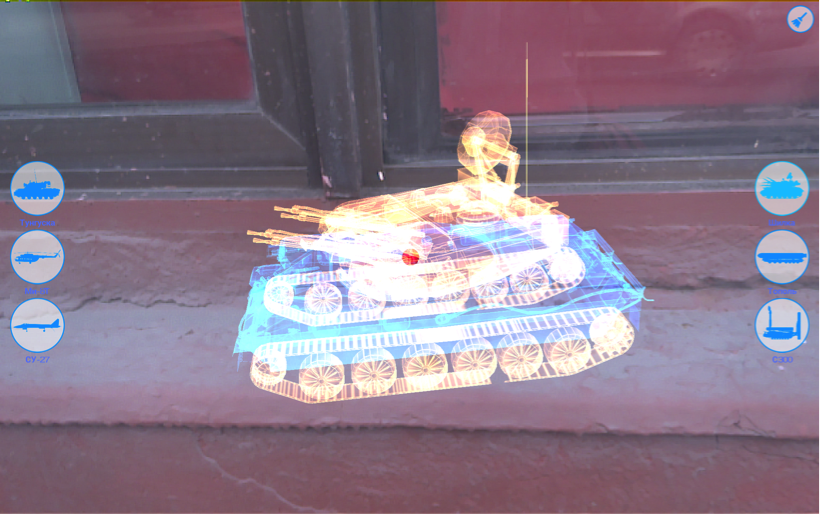

And to make this more fun, we used animated miniature models of military equipment from one of our past projects as content.

Hardware

First, let's look at the device itself.

At the time of testing, two variants were available:

- The "Yellowstone" tablet. When people talk about Tango, the vast majority picture this device.

- The "Peanut" phone. Honestly, we don't know anyone who has actually held one.

For our experiments we got a 7-inch Yellowstone tablet, which is part of the Project Tango Tablet Development Kit.

The tablet itself is, in principle, unremarkable. It has a 2.3 GHz quad-core Nvidia Tegra K1 processor, 128 GB of flash storage, a 4-megapixel camera, a 1920x1200 touch screen and 4G LTE.

The operating system is Android 4.4 (KitKat). We had already grown unaccustomed to versions below 5.0: over the past year, our customers' minimum requirement has been support for devices running Android 5.0.

Keep in mind that the device is part of a development kit, not a product for end users. For a prototype, it looks great and is even convenient to use. Its internals are not the most modern, but the device works perfectly. Clearly, manufacturers building production devices on the Tango platform can use the latest hardware and OS.

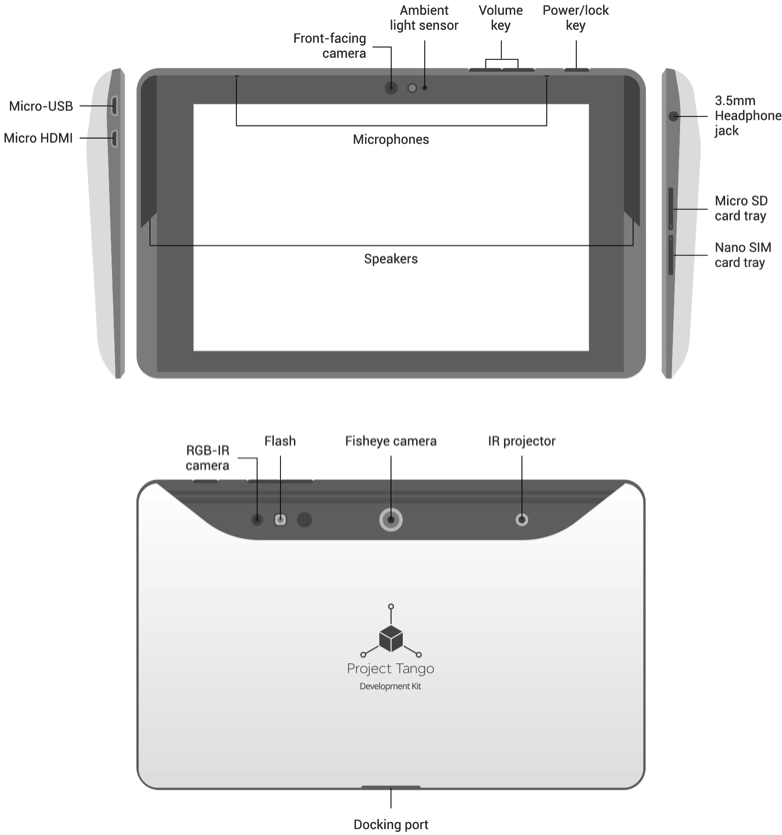

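Much more interesting is the set of built-in sensors; at the moment this seems to be the most sensor-packed tablet around. How exactly they are used is discussed later.

- Fisheye camera for motion tracking

- 3D depth sensors

- Accelerometer

- Ambient light sensor

- Barometer

- Compass

- GPS

- Gyroscope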

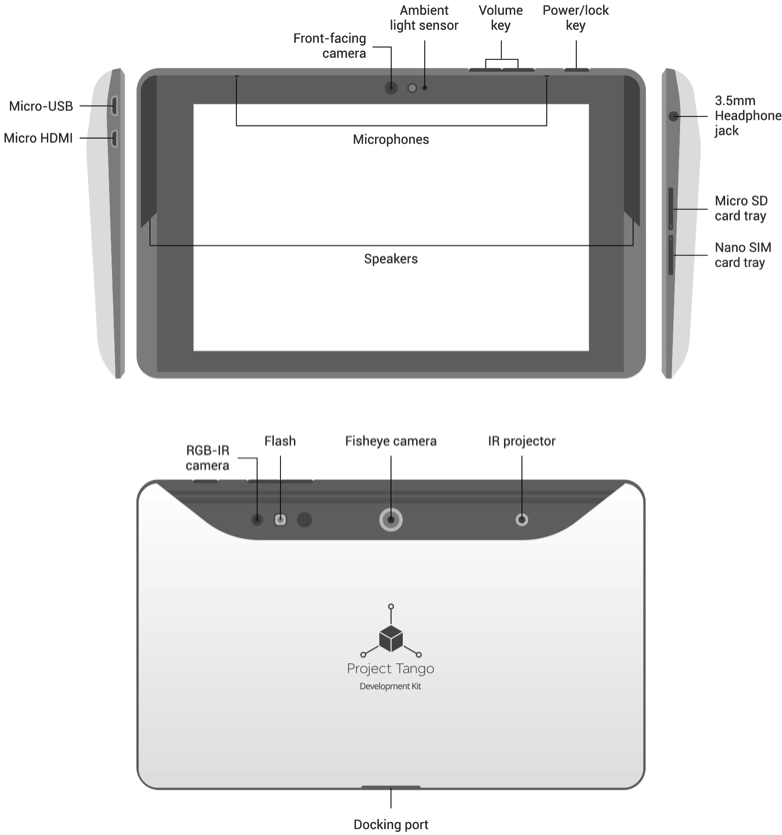

In the diagram below you can see the location of external sensors on the device.

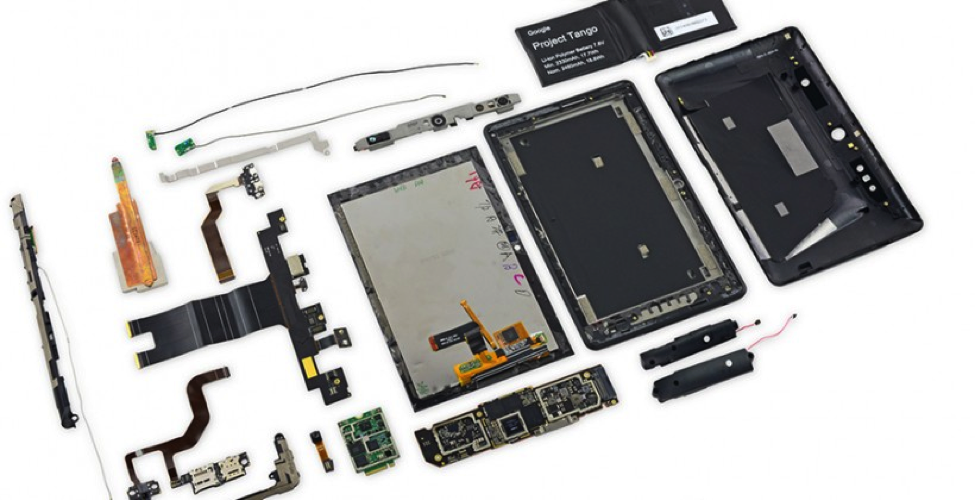

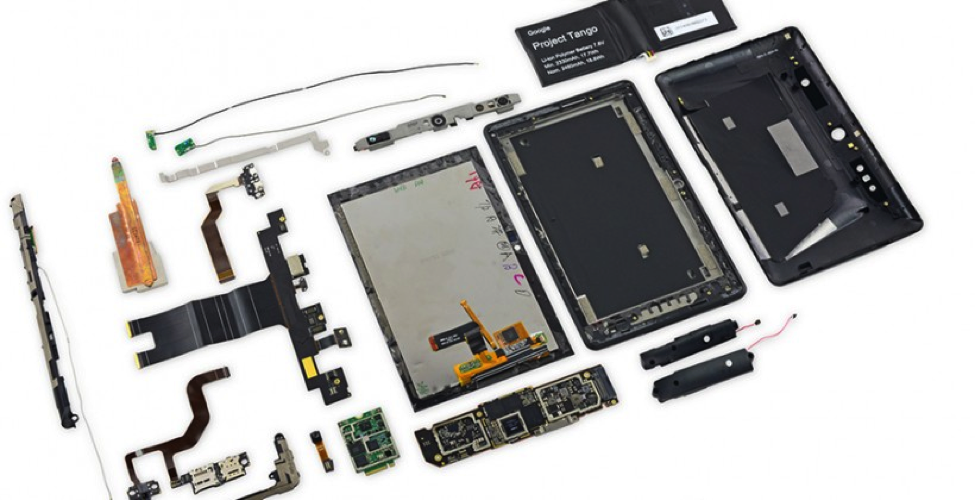

For those who like to see what is actually inside, here is a photo of the disassembled device. We did not take it apart ourselves; thanks to our colleagues at slashgear.com for the photo.

Google warns that Tango and Android are not hard real-time systems, mainly because the Android Linux kernel cannot guarantee program execution time on the device. For this reason, Project Tango should be considered a soft real-time system.

Principle of the device

To make the development part easier to follow, let's first look at how the device works.

First of all, Tango is not just an experimental tablet that gets demonstrated everywhere, but a new scalable platform that uses computer vision to let devices understand their position relative to the world around them. In other words, computer vision sensors are expected to join the usual sensors in a smartphone or tablet.

The device works much as we use our own vision to find our way into a room and then to know where we are in it and where the floor, walls and surrounding objects are. This understanding is an integral part of how we move through space in daily life.

Tango lets mobile devices determine their position in the surrounding world using three core technologies: Motion Tracking, Area Learning and Depth Perception. Each has its own capabilities and limitations. Together, they allow the device to be positioned accurately in the outside world.

Motion tracking


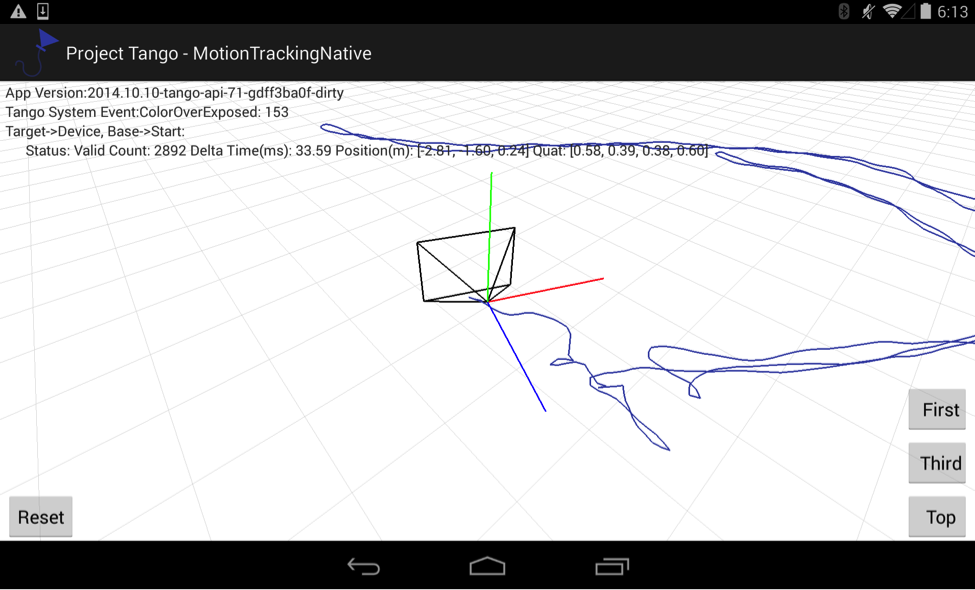

This technology lets the device track its own movement and orientation in 3D space. We can walk around with the device as we like, and it will report where it is and in which direction it is moving.

The output of this technology is the relative position and orientation of the device in space.

The key term in Motion Tracking is the pose: the combination of the device's position and orientation. The term sounds rather specific; Google probably chose it to emphasize its desire to improve the human-machine interface. Usually, a pose refers to the position taken by the human body, that is, the position of the torso, head and limbs relative to each other.

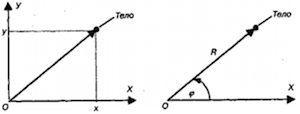

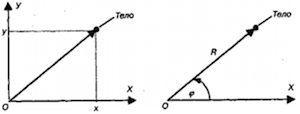

The Tango API provides the position and orientation of the user's device with six degrees of freedom. The data consists of two main parts: a translation vector in meters and a rotation quaternion. Poses are specified for a particular pair of frames of reference. A frame of reference here is the combination of a reference body, its associated coordinate system and a time reference, relative to which the motion of other bodies is considered.

In the context of Tango, this means that when requesting a pose, we must specify the target frame and the base frame relative to which it is measured.
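To make the "vector plus quaternion" description concrete, here is a minimal Python sketch of how such a pose maps a point from the target frame into the base frame. It is an illustration only and does not use the Tango API; the function names and the (x, y, z, w) quaternion component order are assumptions.

```python
import math


def quat_rotate(q, v):
    """Rotate vector v by the unit quaternion q, given as (x, y, z, w)."""
    x, y, z, w = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def apply_pose(translation, rotation, point):
    """Map a point from the target frame into the base frame."""
    rx, ry, rz = quat_rotate(rotation, point)
    return (rx + translation[0], ry + translation[1], rz + translation[2])


# Hypothetical pose: the device moved 1 m along X and turned 90 degrees about Z.
half_angle = math.radians(90.0) / 2.0
pose_rotation = (0.0, 0.0, math.sin(half_angle), math.cos(half_angle))
pose_translation = (1.0, 0.0, 0.0)

# A point 1 m along the device's own X axis, expressed in the base frame.
point_in_base = apply_pose(pose_translation, pose_rotation, (1.0, 0.0, 0.0))
```

With this pose, the point ends up at roughly (1, 1, 0) in the base frame: the rotation turns the device's X axis into the base frame's Y axis, and the translation then shifts the result by one meter along X.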


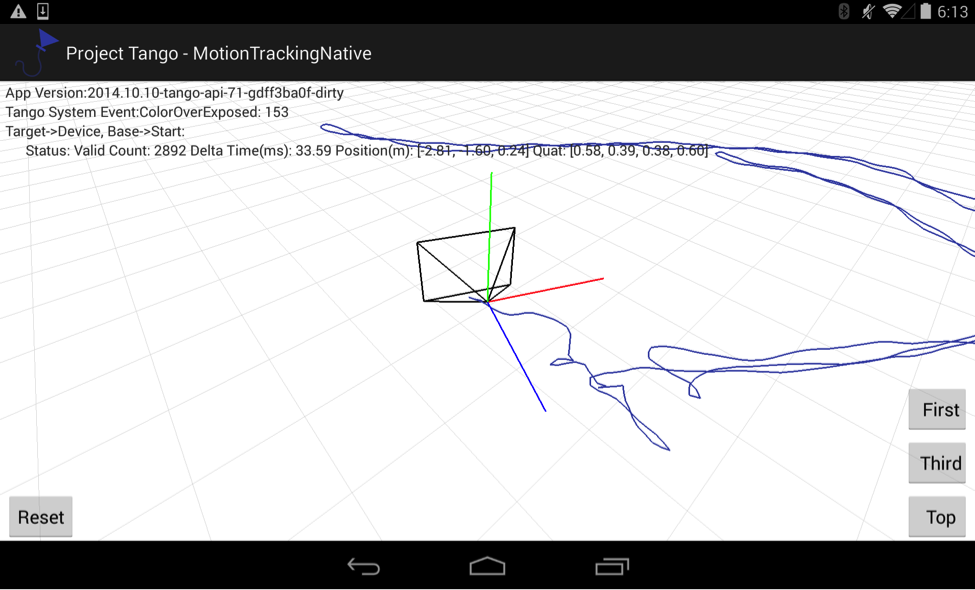

Tango implements Motion Tracking using visual-inertial odometry (VIO).

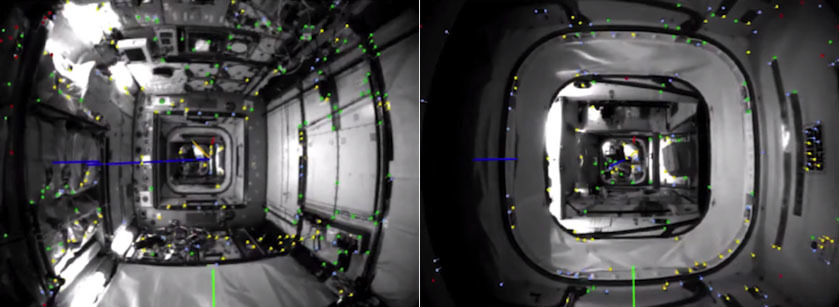

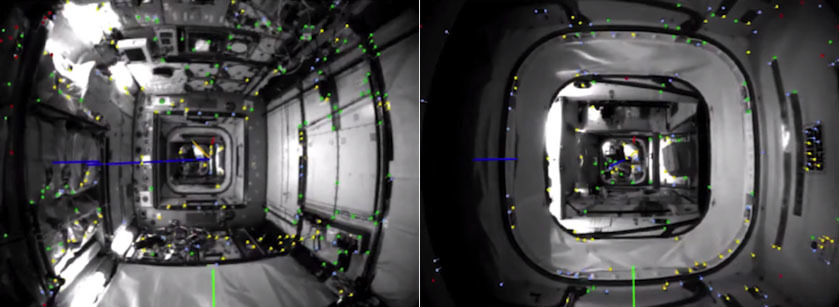

Visual odometry is a method of estimating the position and orientation of a robot or other device by analyzing a sequence of images taken by the camera (or cameras) mounted on it. Standard visual odometry determines the change in the device's position by tracking the relative positions of visual features across these images. For example, if you photograph a building from a distance and then photograph it again from closer up, you can calculate how far the camera moved from the scale and position of the building in the two photographs. The images show an example of such a search for visual features.

Images are the property of NASA.

Visual-inertial odometry complements visual odometry with inertial motion sensors that track the rotation of the device and its acceleration in space. This lets the device calculate its orientation and movement in 3D space with even greater accuracy. Unlike GPS, motion tracking with VIO also works indoors, which has prompted many ideas for relatively inexpensive indoor navigation systems.

Visual-inertial odometry determines orientation in space better than the standard Android Game Rotation Vector APIs, because it uses visual information that helps estimate rotation and linear acceleration more accurately. In effect, previously known technologies are successfully combined to cancel out each other's known problems.

All of this is needed so that, by combining rotation and position tracking, we can use the device as a virtual camera in the 3D space of our application.

Motion tracking is the basic technology. It is easy to use, but has several problems:

- The technology does not itself understand what surrounds the user. In Tango, other technologies handle that task: Area Learning and Depth Perception.

- Motion Tracking does not "remember" previous sessions. Each new session starts tracking afresh and reports position relative to the starting position of the current session.

- Judging by our experiments, shaking the device leads to loss of tracking. This was very noticeable when using it in a car.

- When the device moves over long distances and for long periods, small errors accumulate and can add up to large errors in absolute position. This is the so-called drift, another term borrowed from the real world: it usually means the deviation of a moving vessel from its course under the influence of wind or current, or the movement of ice carried by a current. Tango uses Area Learning to correct these errors, which we look at in detail in the next section.

<img src="habrastorage.org/files/72c/374/358/72c37435811a46ecbce09b4ce4952b6c.png" align="right" />

Area Learning

People recognize where they are by noticing familiar landmarks around them: a doorway, stairs, a table and so on. Tango gives a mobile device the same ability. With motion tracking alone, the device "sees" the visual features of the area through the camera but does not "remember" them. With area learning, the device not only "remembers" what it "saw" but can also save and reuse this information. The output of this technology is the absolute position and orientation of the device in a space it already knows.

Tango uses simultaneous localization and mapping (SLAM), a method used in autonomous mobile systems to build a map of an unknown space, or update a map of a known one, while simultaneously tracking the current location and the distance traveled.

Published approaches are already used in self-driving cars, unmanned aerial vehicles, autonomous underwater vehicles, planetary rovers, home robots and even inside the human body.

In the context of Tango, Area Learning solves two key tasks:

- Improving the accuracy of the trajectory obtained from motion tracking. This process is called Drift Correction.

- Orienting and positioning the device within a previously learned area. This process is called Localization.

Let's take a closer look at how these tasks are solved.

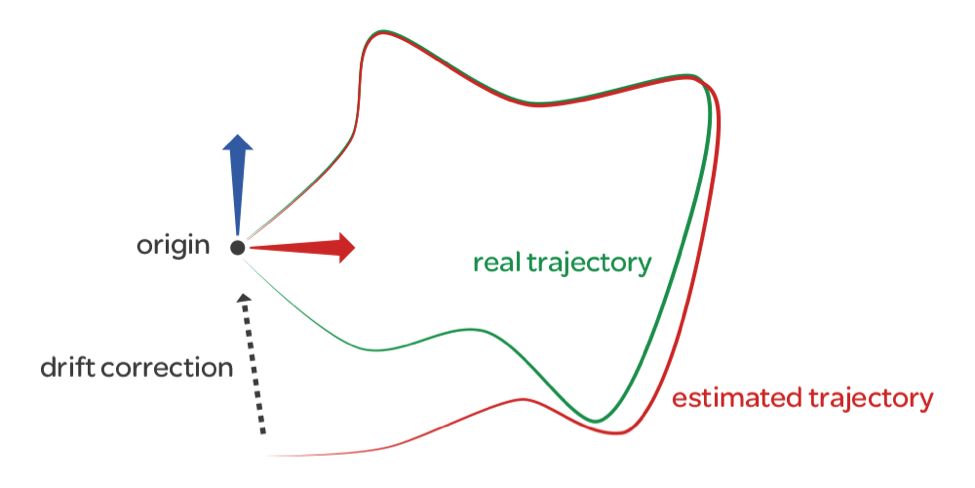

Drift correction

As mentioned earlier, area learning remembers visual features in the parts of the real world that the device has "seen" and uses them to correct errors in its position, orientation and movement. When the device sees a place it already saw earlier in the session, it recognizes it and adjusts its path to match its previous observations.

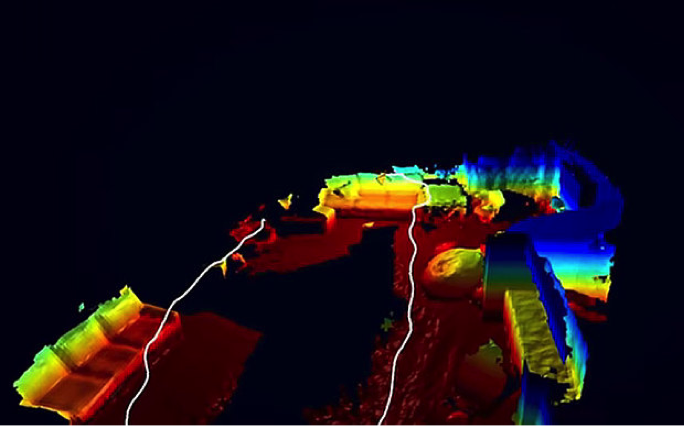

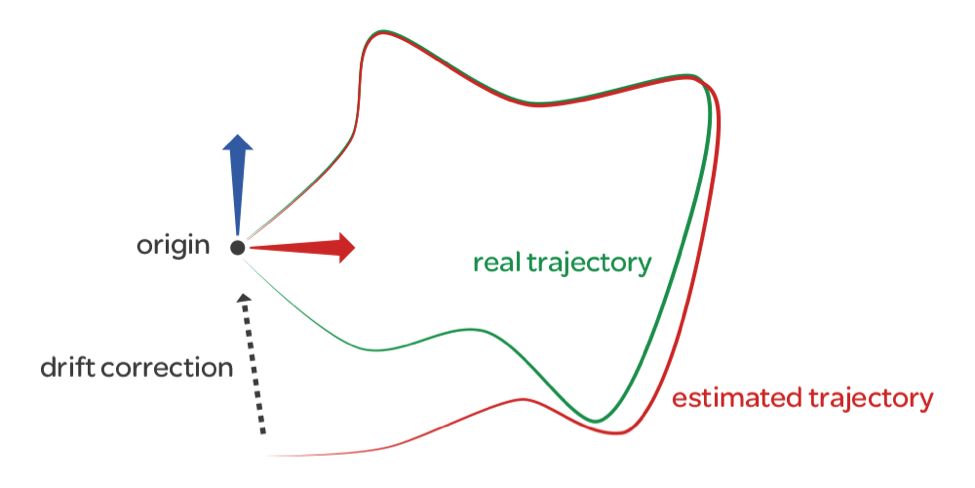

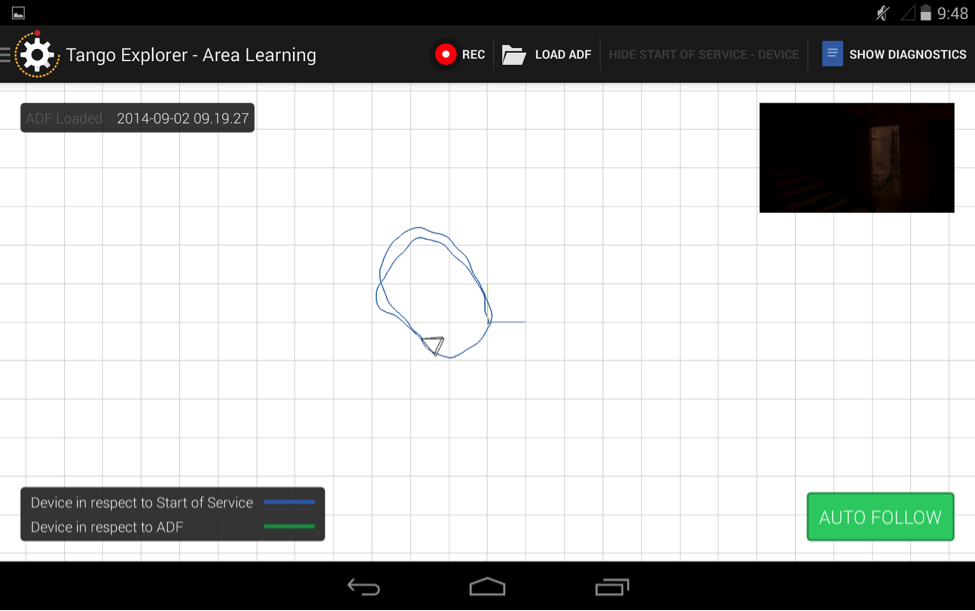

The figure shows an example of drift correction.

As soon as we start walking through an area known to Tango, we actually get two different trajectories at the same time:

- The path you actually follow (the real trajectory)

- The path calculated by the device (the calculated trajectory)

The green line is the real trajectory along which the device moves in real space; the red line shows how the calculated trajectory drifts away from the real one over time. When the device returns to the starting point and realizes that it has seen this area before, it corrects the drift errors and adjusts the calculated trajectory to better match the real one.

Without drift correction, a game or application that aligns a virtual 3D space with the real world may track movement inaccurately after prolonged use. For example, if a door in the game world corresponds to a door frame in the real world, accumulated errors can make the door appear in the middle of a wall instead of in the real door frame.
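The idea of correcting a drifted trajectory once a known place is recognized can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how Tango does it: real SLAM systems optimize the whole trajectory, whereas this sketch simply spreads the end-point error linearly along the path. The function name and the sample data are hypothetical.

```python
def correct_drift(path, true_end):
    """Spread the end-point error linearly over an estimated path.

    path     -- list of estimated positions (tuples), oldest first
    true_end -- where the last position should be, known from a recognized place
    """
    steps = len(path) - 1
    if steps < 1:
        return list(path)
    # Error between the recognized position and the drifted estimate.
    error = tuple(t - e for t, e in zip(true_end, path[-1]))
    corrected = []
    for i, position in enumerate(path):
        weight = i / steps  # 0 at the start, 1 at the end
        corrected.append(tuple(c + e * weight for c, e in zip(position, error)))
    return corrected


# The device walks a square and returns to the origin,
# but the estimate has drifted to (0.2, 0.1).
estimated = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.2, 0.1)]
corrected = correct_drift(estimated, (0.0, 0.0))
```

After the correction, the first point is unchanged, the last point coincides with the recognized starting position, and the intermediate points are shifted proportionally to how far along the path they are.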

Localization

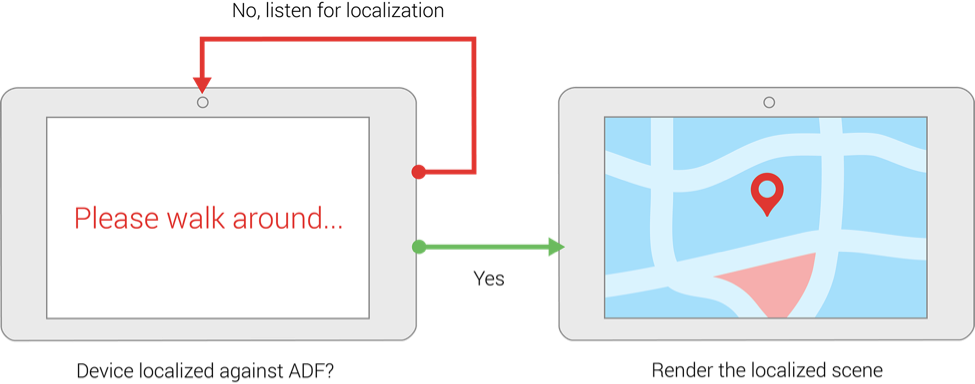

After walking through the area we need with area learning enabled, we can save what the device "saw" in an Area Description File (ADF).

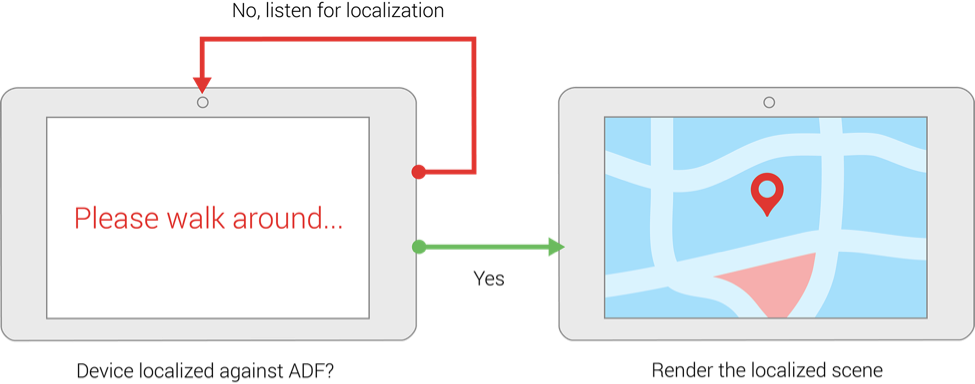

Learning an area and loading it as an ADF has several advantages. For example, you can use it to align the device's coordinate system with a previously existing one, so that you always appear in the same physical place in a game or application. The image shows the loop in which the device searches for an area it already knows.

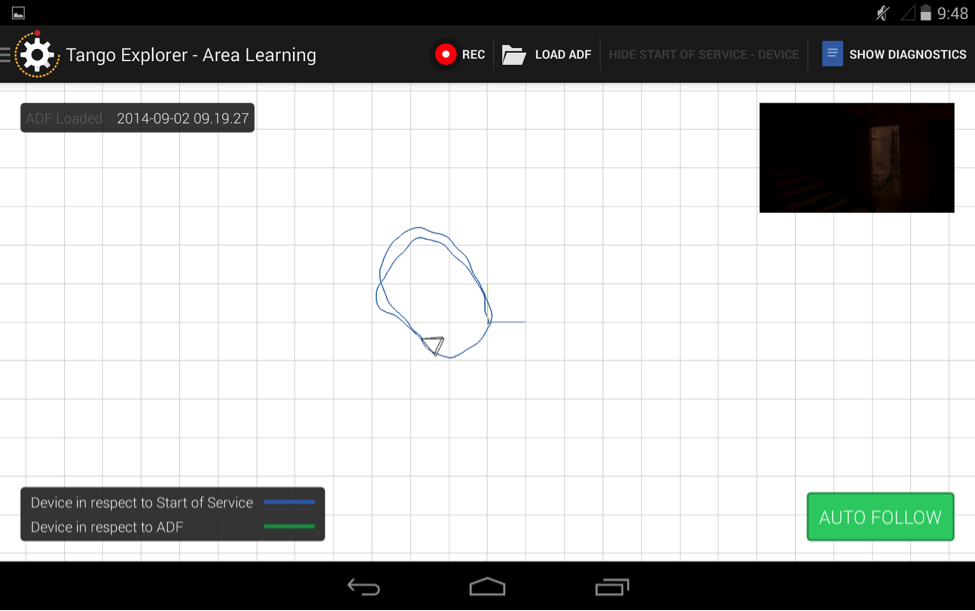

There are two ways to create an ADF. The easiest is to use the TangoExplorer application and then have your application load the resulting ADF.

We can also use the Tango API to learn the space and to save and load the ADF within our own application.

There are a number of points to keep in mind when using area learning:

Depth perception


With this technology, the device can understand the shape of our surroundings. This makes it possible to create augmented reality in which virtual objects are not only part of the real environment but can also interact with it. For example, many people already predict computer games played in real space.

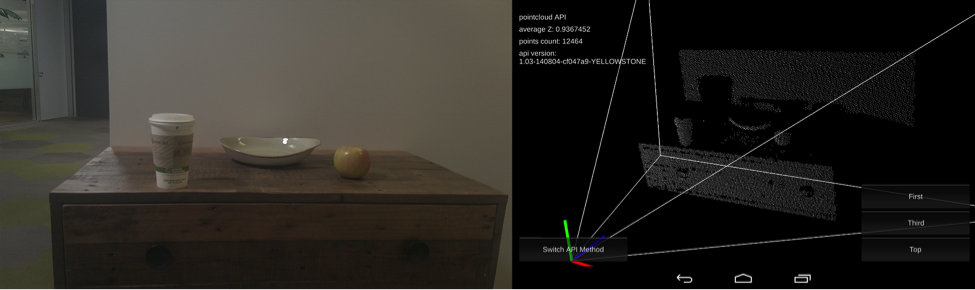

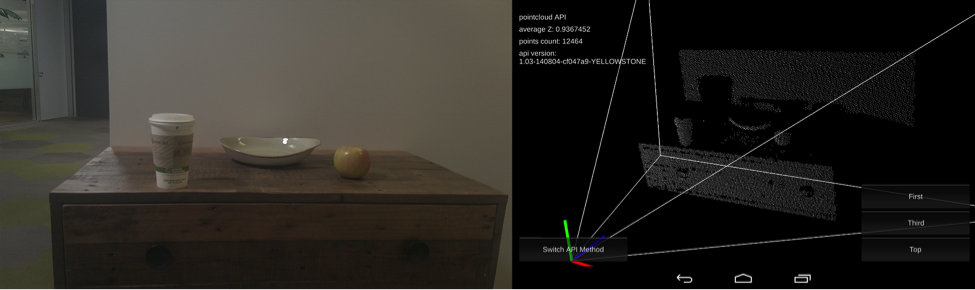

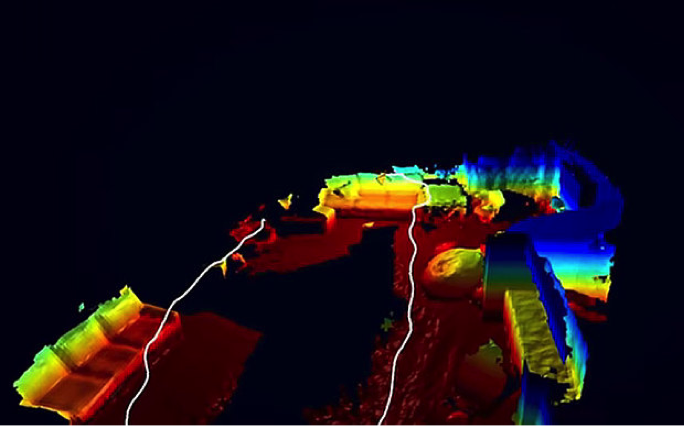

The Tango API provides the distance to objects seen by the camera in the form of a point cloud. This format gives (x, y, z) coordinates for as many points in the scene as can be calculated.

Each coordinate is a floating-point value giving the position of the point in meters in the frame of reference of the depth camera. The point cloud is associated with color information from the RGB camera. This association lets us use Tango as a 3D scanner and opens up many interesting possibilities for game developers and creative applications. Of course, the scanning accuracy cannot compare with dedicated 3D scanners, which are accurate to fractions of a millimeter. In our colleagues' experience, the best accuracy of the Intel RealSense R200 (used in Tango) is 2 millimeters, but it can be much worse, because it depends strongly on the distance to the subject, the shooting conditions, the reflective properties of the surface and movement. Also keep in mind that increasing the depth accuracy inevitably increases noise in the signal.

Still, the accuracy of the resulting 3D point cloud is quite sufficient for building collisions in a game, placing virtual furniture in a real apartment or measuring distances.
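As an illustration of working with such data, the following Python sketch measures the distance between two points of a cloud and the depth range of the scene. It is not Tango API code: the cloud is assumed to be a plain list of (x, y, z) tuples in meters, and treating Z as the depth axis of the camera frame is also an assumption.

```python
import math


def distance_between(p, q):
    """Euclidean distance in meters between two points of the cloud."""
    return math.dist(p, q)


def depth_range(cloud):
    """Nearest and farthest depth, assuming Z is the camera's depth axis."""
    depths = [point[2] for point in cloud]
    return min(depths), max(depths)


# Hypothetical cloud of three points, coordinates in meters.
cloud = [(0.0, 0.0, 1.0), (0.3, 0.0, 1.4), (0.0, 0.5, 2.0)]

width = distance_between(cloud[0], cloud[1])
nearest, farthest = depth_range(cloud)
```

Here the first two points are half a meter apart, and the scene spans depths from 1 to 2 meters. A real measuring application would first pick the two points from the user's touches.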

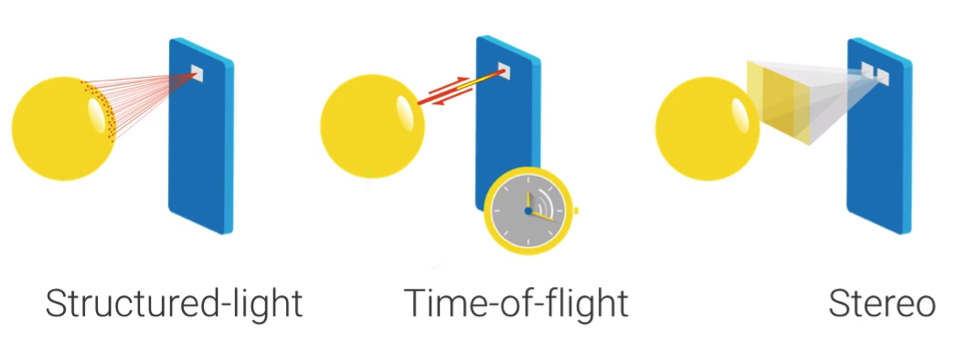

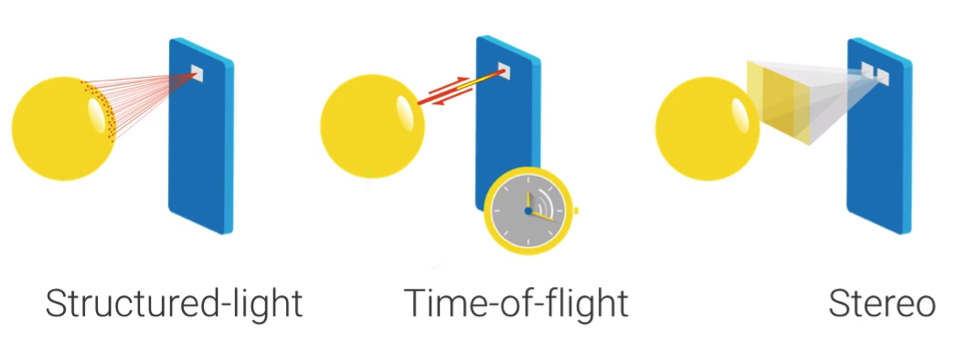

Depth perception can be implemented with any of the common depth-sensing technologies. Recall that Tango is a platform, and it covers three of them: Structured Light, Time of Flight and Stereo.

Each deserves a large article of its own. Let's go over them briefly to understand their role in Tango and the general principles of how they work.

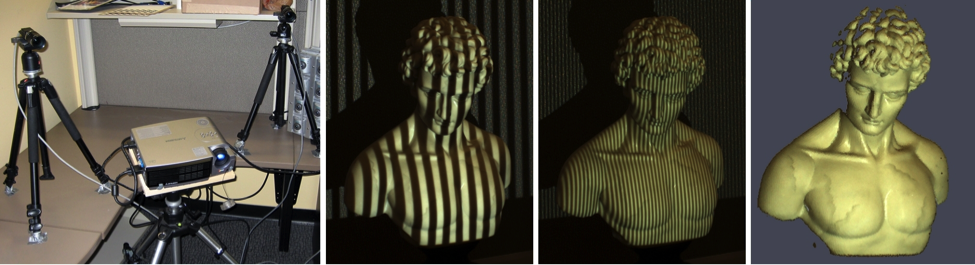

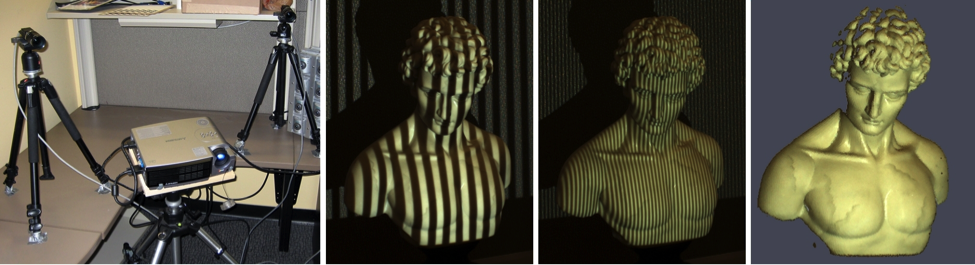

Structured Light

Structured light projects a known pattern (usually a grid or horizontal stripes) onto real objects. The way this pattern deforms on a surface lets the computer vision system obtain information about the depth and shape of objects in the scene relative to the device. A well-known example of structured light in use is 3D scanners.

Invisible structured light is used when a visible pattern would interfere with other computer vision methods in the system. This is the case with Tango, which uses an infrared emitter.

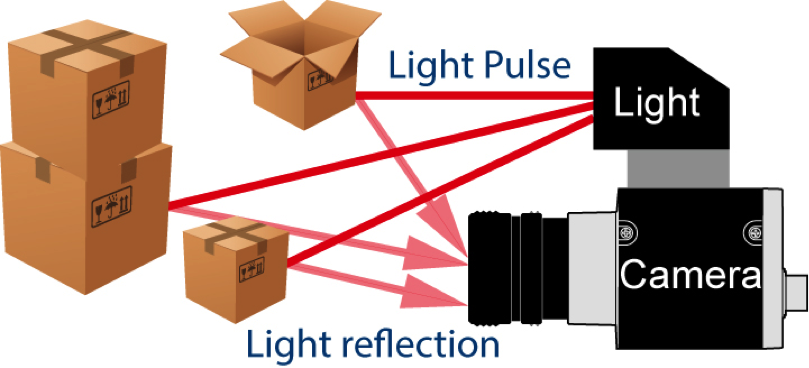

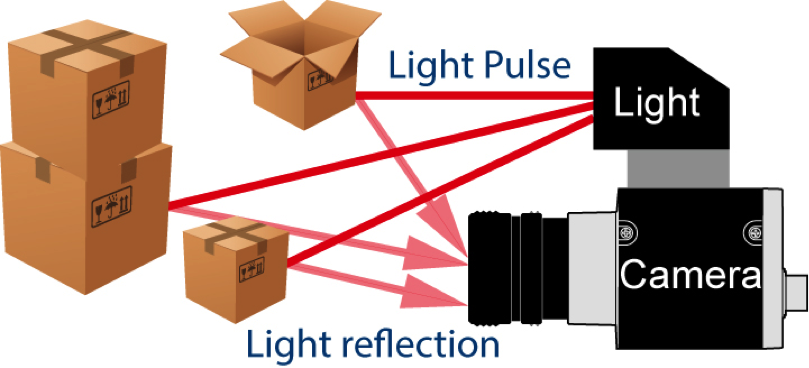

Time of flight

This technology measures the time it takes infrared light to travel from the emitter to the object and back to the camera. The farther away the surface, the longer it takes.
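The relationship is simple: the light covers the distance twice, so the distance is the speed of light times the round-trip time, divided by two. A minimal Python sketch (the function name is hypothetical, and real sensors typically measure phase shift rather than raw time):

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_distance(round_trip_seconds):
    """Distance to a surface from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


# A surface 2 m away returns the pulse after about 13.3 nanoseconds.
round_trip = 4.0 / SPEED_OF_LIGHT
surface_distance = tof_distance(round_trip)
```

The tiny time scale shows why this approach needs dedicated hardware: a one-centimeter change in distance shifts the round trip by only about 67 picoseconds.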

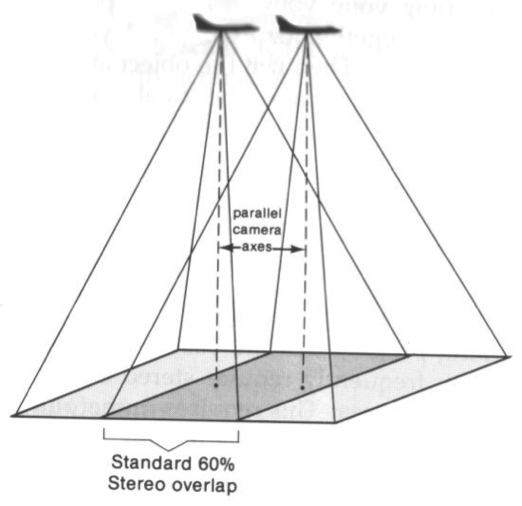

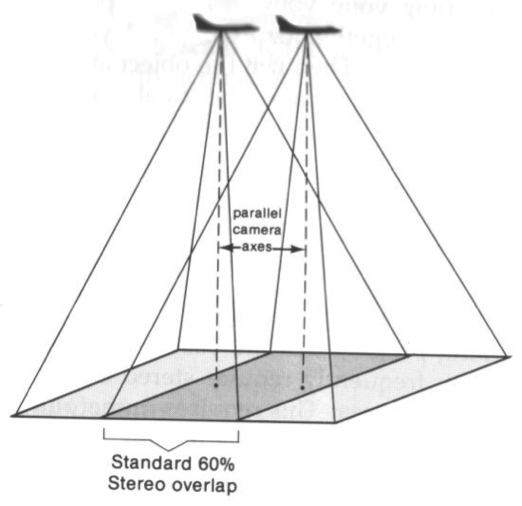

Stereo

We did not find precise information on how this technology works in Tango. Apparently the implementation is fairly standard, and the developers did not dwell on it. Two images, a so-called stereo pair, are taken at the same moment, and photogrammetry is then used to determine the distance to surfaces. This is how, for example, satellites measure the elevation of the Earth's terrain.
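For a standard rectified stereo pair, depth follows from the textbook relation Z = f * B / d, where f is the focal length in pixels, B is the distance between the cameras and d is the disparity (the horizontal shift of a feature between the two images). The Python sketch below illustrates that general relation only; it says nothing about Tango's actual implementation, and the numbers are hypothetical.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth in meters of a feature seen in both images of a stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 500 px focal length, cameras 10 cm apart,
# and a feature that shifts 25 px between the two images.
depth = stereo_depth(500.0, 0.1, 25.0)
```

With these numbers the feature is about 2 meters away. Since depth is inversely proportional to disparity, distant objects produce very small shifts, which is why stereo accuracy drops quickly with range.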

Keep in mind that Structured Light and Time of Flight require the device to have both an infrared (IR) emitter and an IR camera. Stereo needs only two cameras and no IR emitter.

Note that current devices are designed to work indoors at moderate distances from the scanned object (0.5 to 4 meters). This configuration gives good depth data at medium range without placing excessive demands on the power of the IR emitter or on the device's depth-processing performance. For this reason, do not count on scanning very close objects or recognizing gestures.

Remember also that a device that perceives depth with an infrared camera has difficulty working accurately in some situations.

We have covered the principles of the device in sufficient detail. Now let's move from theory to practice and create our first project.

Tango provides an SDK for the Unity3D game engine and supports OpenGL and other 3D systems via C or Java. We mostly work in Unity3D, so all further examples are built in its development environment.

Integration with Unity3D

To develop applications in Unity3D for Project Tango, we need:

Project Description

We decided to implement the placement of miniature models of military equipment using Tango. We planned the following simple sequence of actions:

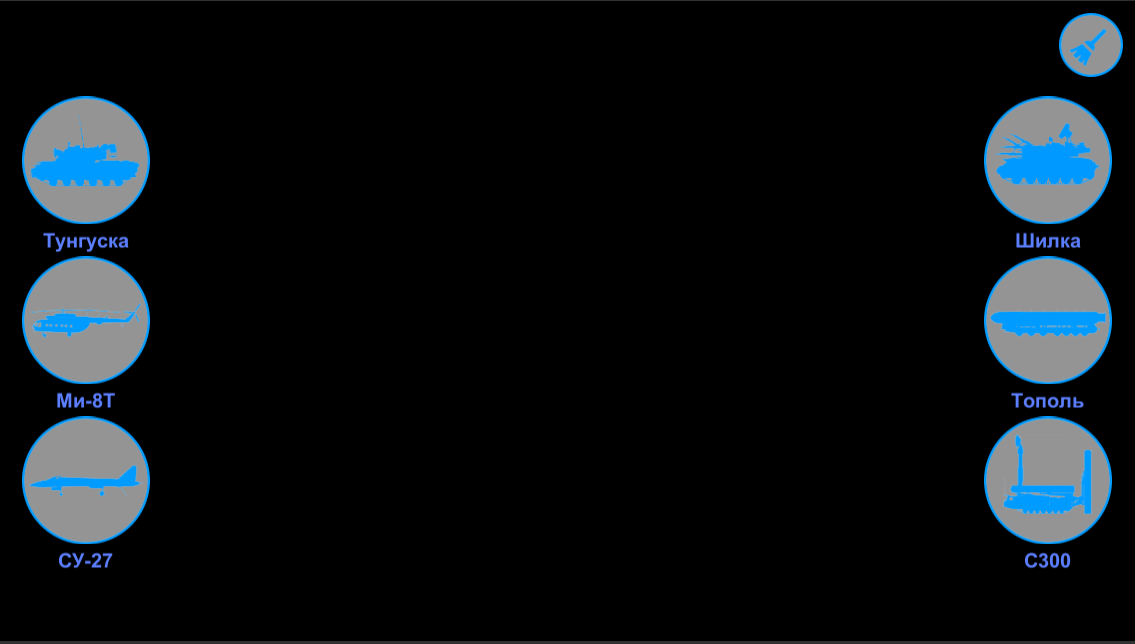

We decided to make the demo interface minimalist but clear to the user.

Implementation

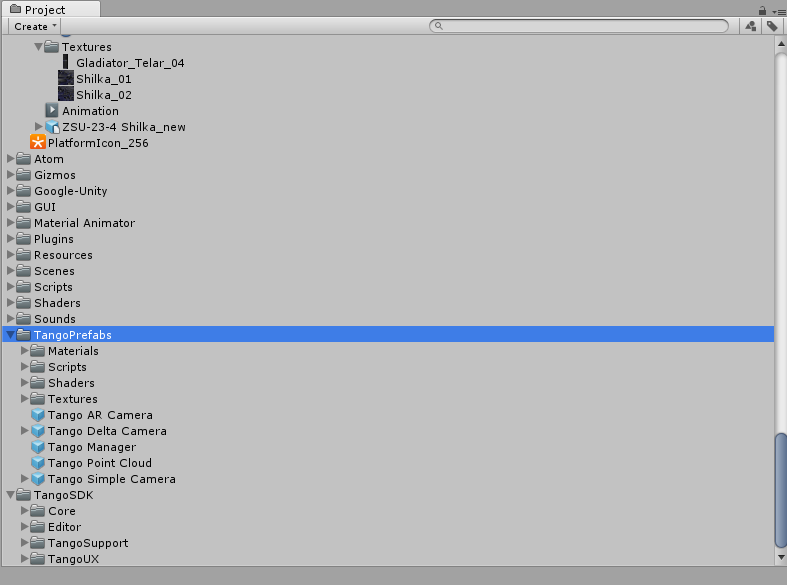

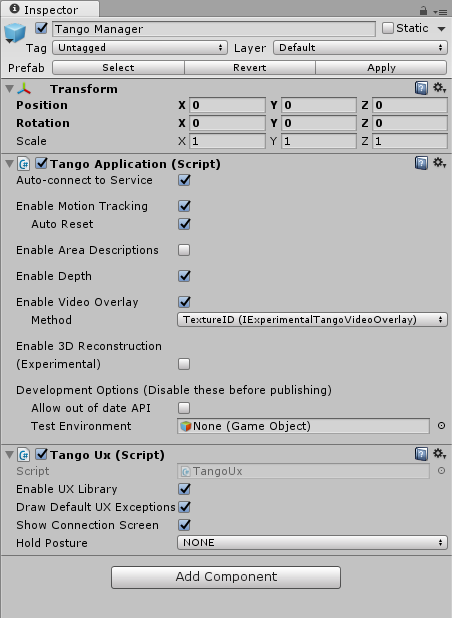

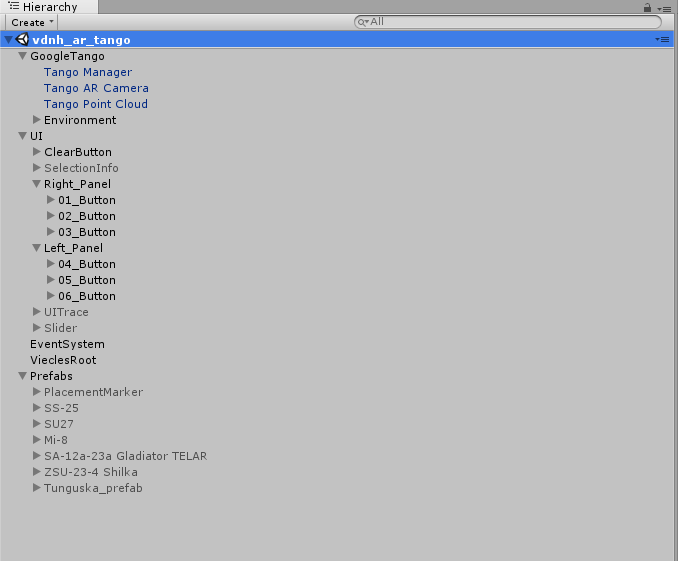

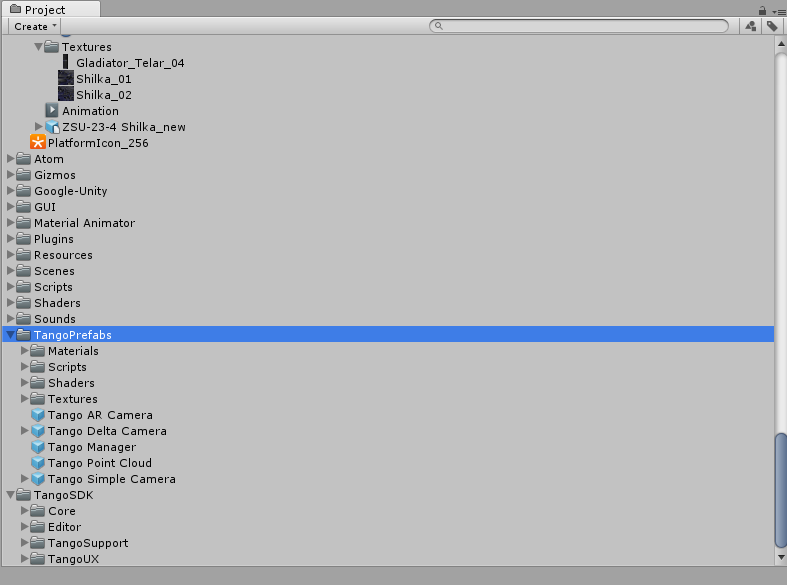

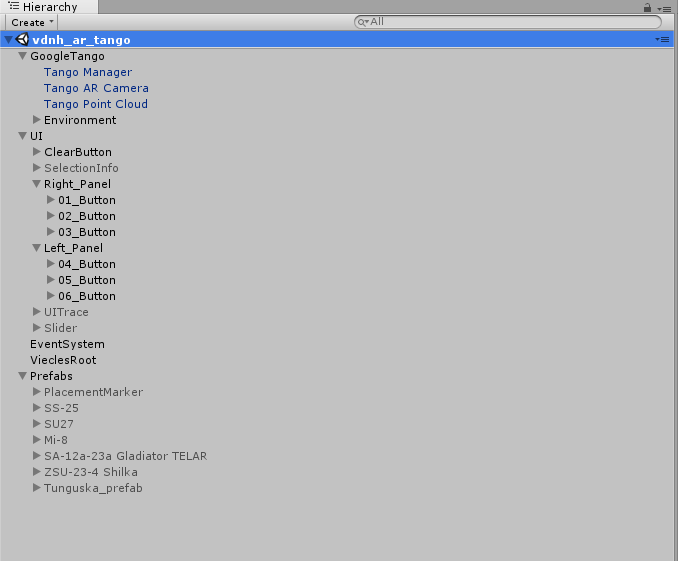

As a basis, we took the resources from TangoSDK / Examples / AugmentedReality on GitHub and our models from an already completed project.

Implementation description

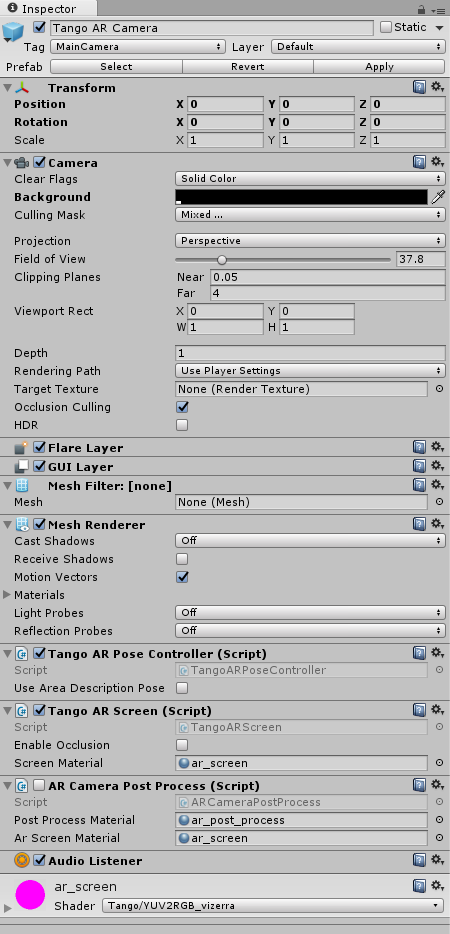

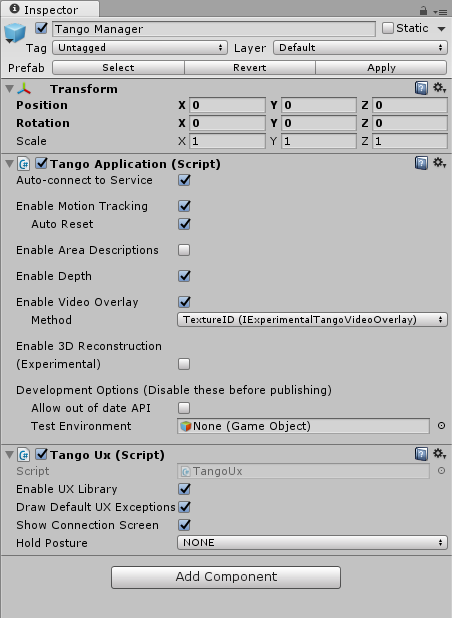

In order to realize our plans, we will describe our actions in steps:

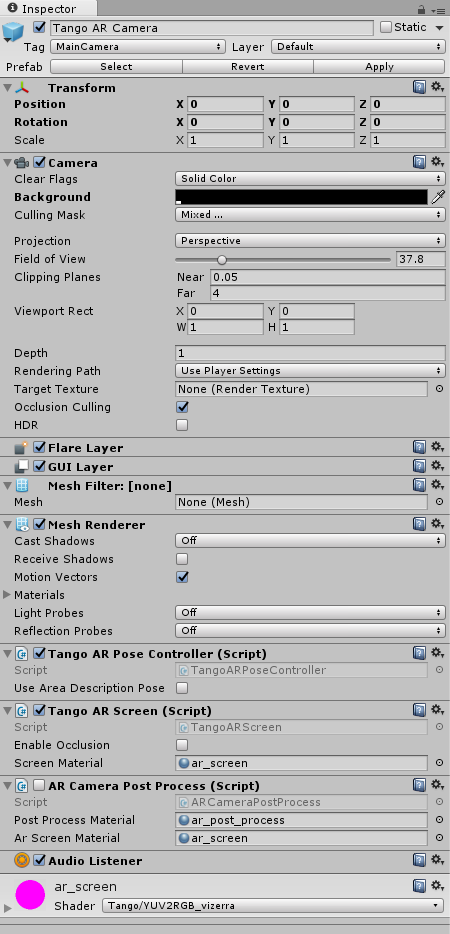

- Displaying the image from the camera of the device on the screen, this will help us Tango AR Camera

- Next, you need to ensure that the real movement of the device in the virtual scene is repeated. Motion Tracking Tango Application , Enable Motion Tracking .

- To place models on real surfaces, we need depth data; Tango Point Cloud helps with this.

The result of the trace can have the following values:

So that a model is placed on the surface when the button is pressed, in the class:

we create a function:

The function receives a prefab with the model to place. It checks whether placement is possible and, if so, creates the model at the given coordinates.

- Next, we need the ability to move a placed model. This function is responsible for moving the model:

Briefly, here is how it works. In the function, we get the touch data through

We look for an intersection with the objects placed in the scene and, if one is found, mark the object as:

We also pass the ray origin, which matches the position of the finger on the screen, to the function that computes the intersection with the point cloud.

Then, as the finger moves across the screen, the model is set to a new position if the trace result allows it. As a reminder, the trace is performed in the asynchronous method
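The general idea of tracing a ray from the touch position into a point cloud can be sketched outside Unity. The Python below is an illustration under stated assumptions, not the project's code and not the Tango SDK: the function name, the tolerance parameter and the sample cloud are all hypothetical. It returns the nearest cloud point that lies close enough to the ray, or None when the ray hits nothing.

```python
import math


def pick_point(origin, direction, cloud, max_offset=0.05):
    """Nearest cloud point within max_offset meters of a ray, or None."""
    length = math.sqrt(sum(c * c for c in direction))
    unit = tuple(c / length for c in direction)
    best, best_along = None, math.inf
    for point in cloud:
        rel = tuple(p - o for p, o in zip(point, origin))
        # Distance from the ray origin to the point's projection on the ray.
        along = sum(r * u for r, u in zip(rel, unit))
        if along <= 0:
            continue  # the point is behind the ray origin
        # Perpendicular distance from the point to the ray.
        offset = math.sqrt(max(0.0, sum(r * r for r in rel) - along * along))
        if offset <= max_offset and along < best_along:
            best, best_along = point, along
    return best


# Hypothetical cloud in meters; the ray looks straight ahead along Z.
cloud = [(0.01, 0.0, 2.0), (0.0, 0.0, 1.0), (0.5, 0.0, 0.5)]
hit = pick_point((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), cloud)
miss = pick_point((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), cloud)
```

In this example the forward ray picks the point one meter ahead, while the sideways ray finds nothing within tolerance, which corresponds to a trace result that does not allow placement.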

All that remains is to add a function for deleting placed models. With that, everything is ready to run!

More information can be found in the project code on GitHub.

Publishing apps that use the Tango framework requires one step in addition to the standard Android publishing process. For the application to be installable only on Tango-compatible devices, you must declare the Tango library as required in the application manifest file.

If you want a single APK for devices with and without Tango support, you can check for Tango support at run time and use its features in the application only when they are available.

So together we have gone from the theoretical foundations to publishing an application.

We can safely conclude that the platform is ready for commercial use. It works quite stably. Its technologies do not conflict with each other; they complement each other well and solve the stated problems.

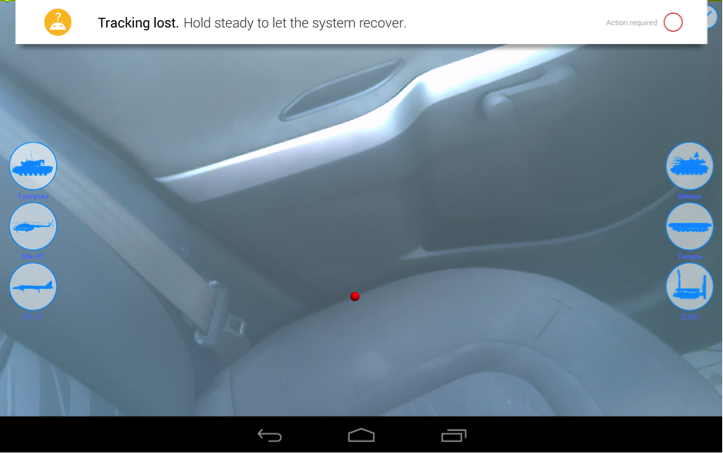

In addition, commercial devices from major vendors have begun to appear. The only fly in the ointment is that the device has problems working in bright light. We would like to believe that Google and Intel will solve this in the coming years, or perhaps are already solving it. Thank you for your attention; we hope you found the article interesting.

Article winning author Alexander Lavrov, in the framework of the competition "Device Lab from Google . "

In this regard, we constantly try new equipment with an eye to its use in projects. Today we want to talk about this already famous, but low-lit in the Russian-speaking Internet device such as Project Tango. Most recently, he simply became Tango, marking the transition to the commercial stage of his life and devices for end users began to appear.

Our acquaintance with Project Tango began in the spring of this year, when an idea arose to try marker-free tracking for a number of our projects.

')

It turned out that to get the device for experiments is very difficult. We were helped by the Skolkovo Foundation by introducing Google to the Russian office. The guys were very responsive and gave the much-desired device to their hands.

We were able to quickly achieve the results we needed, showed our tests at Startup Village, and with a sense of accomplishment, returned the device to Google.

A couple of months ago, we found out that Google Device Lab had started, and we decided that we needed to pay back good for the good — to share our experience with other developers and sat down to write this article. In the process of writing an article, its concept changed several times. I wanted to do something useful as usual, and not to describe our next project. In the end, after consulting we decided that the most useful would be to write a Russian-language full review of Project Tango, taking into account all the bumps we had. Then we began to combine disparate sources, so that the reader would have created a fairly complete picture after reading this article.

And to make it more fun to do this, we used animated miniature models of military equipment from one of our past projects as content.

Hardware

First, let's see what the device itself is.

At the time of testing, there were 2 available modifications:

- Tablet "Yellowstone." Usually when people talk about Tango, the vast majority imagine it is him.

- Telephone "Peanut". Honestly, we don’t know anyone who really held him in his hands.

We got for experiments a 7 inch Yellowstone tablet that is part of the Project Tango Tablet Development Kit.

The base tablet itself is not surprising in principle. The device contains a 2.3 GHz quad-core Nvidia Tegra K1 processor, 128GB flash memory, 4 megapixel camera, 1920x1200 resolution touch screen and 4G LTE.

The operating system uses Android 4.4 (KitKat). We have already lost the order of systems under 5.0. Last year, the minimum requirement for customers was support for devices with Android 5.0.

It should be remembered that the device is part of the Development Kit, and not a device for the end user. For the prototype, it looks great and even convenient to use! Filling the device is not the most modern, but the device works perfectly. Obviously, in serial devices, end-manufacturers using the Tango platform can easily use the latest hardware and OS.

Much more interesting is the set of built-in sensors. It seems that at the moment it is the most "pumped" tablet. How exactly they are used will be discussed later.

- Fisheye camera to track movement

- 3D depth sensors

- Accelerometer

- Ambient light sensor

- Barometer

- Compass

- GPS

- Gyroscope

In the diagram below you can see the location of external sensors on the device.

For those who like to see “what is actually inside it,” we attach a photo of the device in a disassembled form. Did not disassemble themselves. Thank you, colleagues from the portal slashgear.com for an interesting photo.

Google warns that Tango and Android are not real-time hardware systems. The main reason is that the Android Linux Kernel cannot provide guarantees of the program execution time on the device. For this reason, Project Tango can be considered a real-time software system.

Principle of the device

To make it clear what will be discussed later during development, let's understand the principle of the device.

Firstly, it should be understood that the Tango is not just an experimental tablet, which is demonstrated everywhere, but a new scalable platform that uses computer vision to enable devices to understand their position in relation to the world around them. That is, it is assumed that computer vision sensors will be added to the usual sensors in a smartphone or tablet.

The device works similarly to how we use our human visual apparatus to find our way into the room, and then to know where we are in the room, where the floor, walls and objects around us. These insights are an integral part of how we move through space in our daily lives.

Tango gives mobile devices the ability to determine their position in the world around them using three basic technologies: Motion Tracking, Area Learning (exploring areas of space) and Depth Perception (depth perception). Each of them individually has its own unique capabilities and limitations. All together, they allow you to accurately position the device in the outside world.

Motion tracking

Motion trackingThis technology allows the device to track its own movement and orientation in 3D space. We can walk with the device as we need and it will show us where it is and in what direction the movement takes place.

The result of this technology is the relative position and orientation of the device in space.

The key term in the Motion Tracking technology is Pose (posture) - a combination of the position and orientation of the device. The term sounds, quite frankly, quite specific and, probably, Google decided to emphasize to them its desire to improve the human-machine interface. Usually, posture refers to the position taken by the human body, the position of the body, head and limbs relative to each other.

The Tango API provides the position and orientation of a user device with six degrees of freedom. The data consists of two main parts: a vector in meters to move and a quaternion for rotation. Poses are indicated for a particular pair of trajectory points. In this case, a reference system is used - the totality of the reference body, the associated coordinate system and the time reference system , in relation to which the movement of some bodies is considered.

In the context of Tango, this means that when requesting a specific pose, we must indicate the target point to which we are moving relative to the base point of the movement.

In the context of Tango, this means that when requesting a specific pose, we must indicate the target point to which we are moving relative to the base point of the movement.Tango implements Motion Tracking using visual inertial odometry (visual-inertial odometry or VIO).

Odometry is a method of assessing the position and orientation of a robot or other device by analyzing the sequence of images taken by the camera (or cameras) mounted on it. Standard visual odometry uses images from the camera to determine the change in the position of the device, by searching for the relative position of the various visual features in these images. For example, if you took a photograph of a building from a distance, and then took another photograph from a closer distance, you can calculate the distance that the camera moves based on the zoom and position of the building in the photographs. Below you can see images with an example of such a search for visual signs.

Images are the property of NASA .

Visual inertial odometry complements visual odometry with inertial motion sensors capable of tracking the rotation of the device and its acceleration in space. This allows the device to calculate its orientation and movement in 3D space with even greater accuracy. Unlike GPS, motion tracking using the VIO also works indoors. What was the reason for the emergence of a large number of ideas to create relatively inexpensive indoor navigation systems.

Visual inertial odometry provides an improved definition of orientation in space compared to the standard Android Game Rotation Vector APIs. It uses visual information that helps to estimate the rotation and linear acceleration more accurately. Thus, we observe a successful combination of previously known technologies to eliminate their known problems.

All of the above is necessary so that when combining the rotation and position tracking in space, we can use the device as a virtual camera in the appropriate 3D space of your application.

Motion tracking is a basic technology. It is easy to use, but has several problems:

- The technology does not understand by itself what it is to be around the user. In Tango, other technologies are responsible for this task: Area Learning and Depth Perception.

- Motion Tracking does not "remember" previous sessions. Each time you start a new motion tracking session, the tracking begins again and reports its position in relation to its starting position in the current session.

- Also, judging by the experiments, shaking the device leads to loss of tracking. This was very clearly manifested when used in a car.

- When a device is moved over long distances and for long periods of time, small errors accumulate, which can lead to large errors in absolute position in space. There will be a so-called "drift" (drift). This is another term borrowed from the real world. Usually, this requirement means the deviation of a moving vessel from the course under the influence of wind or current, as well as the movement of ice carried by the current. To combat drift, Tango uses Area Learning to correct these errors. We will look at this aspect in detail in the next part of the article.

<img

src = " habrastorage.org/files/72c/374/358/72c37435811a46ecbce09b4ce4952b6c.png " align = "right" /> Area Learning

People can recognize where they are, noticing the signs they know around them: doorway, stairs, table, etc. Tango provides the mobile device with the same ability. With the help of motion tracking technology alone, the device “sees” through the camera the visual features of the area, but does not “remember” them. With the help of area learning, the device not only “remembers” what it “saw”, but can also save and use this information. The result of this technology is the absolute position and orientation of the device in the space already known to him.

Tango uses the simultaneous localization and mapping method (SLAM from Simultaneous Simultaneous Localization and Mapping) - a method used in mobile offline tools for building a map in an unknown space or for updating a map in a previously known space while simultaneously monitoring your current location and distance traveled.

Published approaches are already used in self-driving cars, unmanned aerial vehicles, autonomous underwater vehicles, planet rovers, home robots, and even inside the human body.

In the context of Tango Area learning solves 2 key tasks:

- Improving the accuracy of the trajectory obtained using motion tracking. This process is called "Drift Correction."

- The orientation and positioning of the device itself within the previously studied area. This process is called Localization.

Let's take a closer look at exactly how these tasks are solved.

Drift correction

As mentioned earlier, area learning remembers visual features in the areas of the real world that the device has "seen" and uses them to correct errors in its position, orientation, and movement. When the device sees a place that it saw earlier in the session, it recognizes this and adjusts its path to match its previous observations.

The figure below shows an example of drift correction.

As soon as we start walking through an area that Tango already knows, we actually get two different trajectories at the same time:

- The path you follow ("The real trajectory")

- Path calculated by the device ("Calculated Path")

The green line is the real trajectory along which the device moves in real space; the red line shows how the calculated trajectory has moved away from the real one over time. When the device returns to the origin and realizes that it has already seen this area, it corrects the drift errors and adjusts the calculated trajectory to better match the real one.

Without drift correction, a game or application that maps a virtual 3D space onto the real world may lose tracking accuracy after prolonged use. For example, if a door in the game world corresponds to a door frame in the real world, errors can cause the door to appear in the middle of a wall in the game rather than in the real door frame.
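To make the idea concrete, here is a deliberately simplified sketch of drift correction. It is not Tango's algorithm (real SLAM systems are far more sophisticated). It only assumes that the error accumulated evenly along the path and that, once a known place is recognized, we know where the calculated path should have ended. The class and method names are hypothetical, and `System.Numerics.Vector3` stands in for the `Vector3` type you would use in Unity.

```csharp
using System;
using System.Numerics;

// Simplified illustration of drift correction after a known place is recognized.
// This is an assumption-based sketch, not the method Tango actually uses.
public static class DriftCorrectionSketch
{
    // path: positions calculated by motion tracking, path[0] is the start.
    // recognizedEnd: where the last position should be according to area learning.
    public static Vector3[] Correct(Vector3[] path, Vector3 recognizedEnd)
    {
        var corrected = new Vector3[path.Length];
        if (path.Length == 0)
        {
            return corrected;
        }

        // Total drift: the gap between where we think we are and where we really are.
        Vector3 error = recognizedEnd - path[path.Length - 1];

        for (int i = 0; i < path.Length; i++)
        {
            // Assume drift grew linearly: position i receives i/(n-1) of the correction.
            float share = path.Length == 1 ? 1f : (float)i / (path.Length - 1);
            corrected[i] = path[i] + error * share;
        }
        return corrected;
    }
}
```

After the call, the start of the path is unchanged, the end coincides with the recognized position, and the points in between are shifted proportionally.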

Localization

After we have walked through the area we need with area learning enabled, we can save what the device "saw" in an Area Description File (ADF).

Exploring an area and loading it as an ADF has several advantages. For example, you can use it to align the device's coordinate system with a previously existing coordinate system, so that you always appear in the same physical place in a game or application. The image below shows the loop the device runs while searching for a known area.

There are two ways to create an ADF. The easiest is to use the TangoExplorer application and then load the resulting ADF in your own application.

We can also use the Tango API to explore the space and to save and load the ADF within our application.

There are a number of things to keep in mind when using area learning:

- Tango depends on the visual diversity of the area we are going to localize. If we are in a building with many identical rooms or in a completely empty room with empty walls, it will be difficult for the device to localize itself.

- The environment may look completely different from different points of view and positions, and may also change over time (furniture can be moved, lighting will vary depending on the time of day). Localization is more likely to succeed if localization conditions are similar to those that existed when the ADF was created.

- As the environment changes, we can create multiple ADFs for the same physical location under different conditions. This gives our users the ability to select the file that most closely matches their current conditions. You can also add multiple sessions to a single ADF to capture visual descriptions of the environment from each position, angle, and with each change in lighting or a change in environment.

Depth perception

With this technology, the device can understand the shape of our surroundings. This makes it possible to create "augmented reality" in which virtual objects are not only part of the real environment but can also interact with it. For example, many people already predict computer games played in real space.

The Tango API provides data on the distance to the objects in front of the camera in the form of a point cloud. This format gives (x, y, z) coordinates for as many points in the scene as can be calculated.

Each coordinate is a floating-point value giving the position of the point in meters in the reference frame of the depth camera. The point cloud can be associated with color information from the RGB camera. This association lets us use Tango as a 3D scanner and opens up many interesting opportunities for game developers and creative applications. Of course, the scanning accuracy cannot be compared with dedicated 3D scanners, which are accurate to fractions of a millimeter. According to the experience of colleagues, the best accuracy of the Intel RealSense R200 (used in Tango) is 2 millimeters, but it can be much worse, because it depends strongly on the distance to the subject, the shooting conditions, the reflective properties of the surface, and movement. Keep in mind, too, that increasing the accuracy of the depth image inevitably increases the noise in the signal.

However, for building collisions in a game, placing virtual furniture in a real apartment, or measuring distances, the current accuracy of the resulting 3D point cloud is quite sufficient.
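As a small illustration of working with such data, the sketch below measures distances in a point cloud. It assumes the cloud has already been copied into an array of (x, y, z) positions in meters in the depth camera's frame, so the camera sits at the origin. The helper names are hypothetical and are not part of the Tango SDK; `System.Numerics.Vector3` stands in for Unity's `Vector3`.

```csharp
using System;
using System.Numerics;

// Hypothetical helpers for a point cloud given in meters in the depth camera's frame.
public static class PointCloudMeasureSketch
{
    // Distance in meters between two points picked from the cloud.
    public static float DistanceMeters(Vector3 a, Vector3 b)
    {
        return Vector3.Distance(a, b);
    }

    // Distance in meters from the camera (the origin) to the closest point in the cloud.
    public static float NearestDistanceMeters(Vector3[] cloud)
    {
        if (cloud.Length == 0)
        {
            throw new ArgumentException("Point cloud is empty.", "cloud");
        }

        float nearest = float.MaxValue;
        foreach (Vector3 point in cloud)
        {
            nearest = Math.Min(nearest, point.Length());
        }
        return nearest;
    }
}
```

For instance, two points picked on opposite edges of a table give the table's width directly in meters, within the accuracy limits described above.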

Depth perception can be implemented with any of several common technologies. Recall that Tango is a platform, and it supports three of them.

Describing each of them is a topic for a separate large article. Let's go over them briefly to understand their role in Tango and the general principles by which they work.

Structured Light

Structured light projects a known pattern (usually a grid or horizontal stripes) onto real objects. Thus, the deformation of this known pattern when applied to the surface allows the computer vision system to obtain information about the depth and surface of objects in the scene relative to the device. A well-known example of using structured light is 3D scanners.

Invisible structured light is used when a visible pattern would interfere with other computer vision methods used in the system. This is the case with Tango, which uses an infrared emitter.

Time of flight

This technology is based on determining the time it takes infrared radiation to fly from the radiation source to the object and return to the camera. The farther the distance to the surface, the longer it takes.
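The underlying arithmetic is simple enough to sketch. The light travels to the surface and back, so the distance is half of the round-trip time multiplied by the speed of light. The class below is only an illustration of that formula with hypothetical names; it says nothing about how a real sensor measures the time.

```csharp
using System;

// Illustration of the time-of-flight principle: distance = speed of light * time / 2.
public static class TimeOfFlightSketch
{
    private const double SpeedOfLightMetersPerSecond = 299792458.0;

    // roundTripSeconds: time for the IR pulse to reach the surface and return.
    public static double DistanceMeters(double roundTripSeconds)
    {
        if (roundTripSeconds < 0.0)
        {
            throw new ArgumentOutOfRangeException("roundTripSeconds", "Time cannot be negative.");
        }
        // Divide by two because the pulse covers the distance twice.
        return SpeedOfLightMetersPerSecond * roundTripSeconds / 2.0;
    }
}
```

A round trip of 20 nanoseconds corresponds to a surface roughly 3 meters away, which shows how fine the time resolution has to be at room-scale distances.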

Stereo

We did not find accurate information on how this technology works in Tango. Apparently the implementation is quite standard, so the developers did not dwell on it. Two images, a so-called stereo pair, are taken at the same moment. From them, the distance to surfaces is determined using photogrammetry. This is how, for example, terrain elevation on Earth is measured from satellite imagery.
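Since the Tango-specific details are not available, the sketch below shows only the textbook relation for an idealized, rectified stereo pair: depth equals focal length times the distance between the cameras (the baseline), divided by the disparity, that is, the shift of the same point between the two images. Whether Tango devices use exactly this model is an assumption, and all names here are hypothetical.

```csharp
using System;

// Textbook depth-from-disparity formula for a rectified stereo pair: Z = f * B / d.
public static class StereoDepthSketch
{
    // focalLengthPixels: camera focal length expressed in pixels.
    // baselineMeters: distance between the two camera centers.
    // disparityPixels: horizontal shift of the same point between the two images.
    public static double DepthMeters(double focalLengthPixels, double baselineMeters, double disparityPixels)
    {
        if (disparityPixels <= 0.0)
        {
            throw new ArgumentOutOfRangeException("disparityPixels", "Disparity must be positive.");
        }
        return focalLengthPixels * baselineMeters / disparityPixels;
    }
}
```

With a focal length of 500 pixels, a baseline of 0.1 m, and a disparity of 25 pixels, the point is about 2 m away. The smaller the disparity, the farther the point and the less precise the estimate.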

It is important to keep in mind that Structured Light and Time of Flight require the device to have both an infrared (IR) emitter and an IR camera. Stereo needs only two cameras and does not require an infrared emitter.

Note that current devices are designed to work indoors at moderate distances from the scanned object (from 0.5 to 4 meters). This configuration gives reasonably good depth at medium range without placing excessive demands on the power of the IR emitter or on the device's performance when processing depth. For this reason, do not count on scanning very close objects or recognizing gestures.

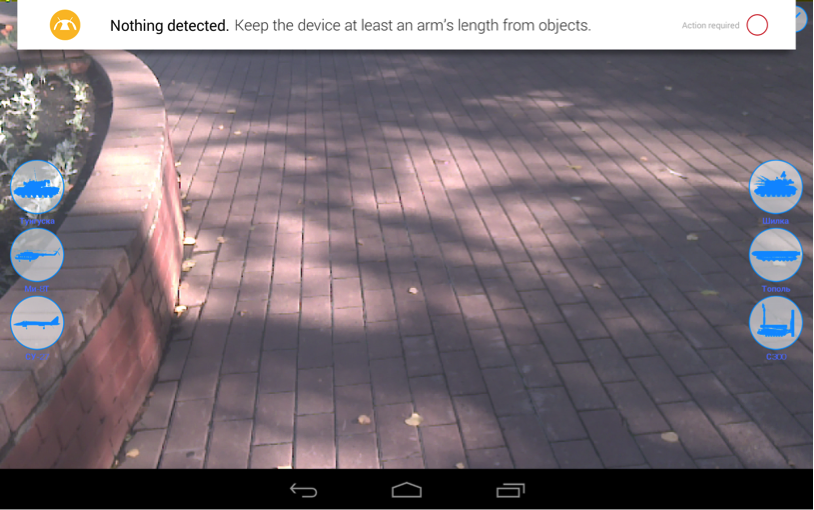

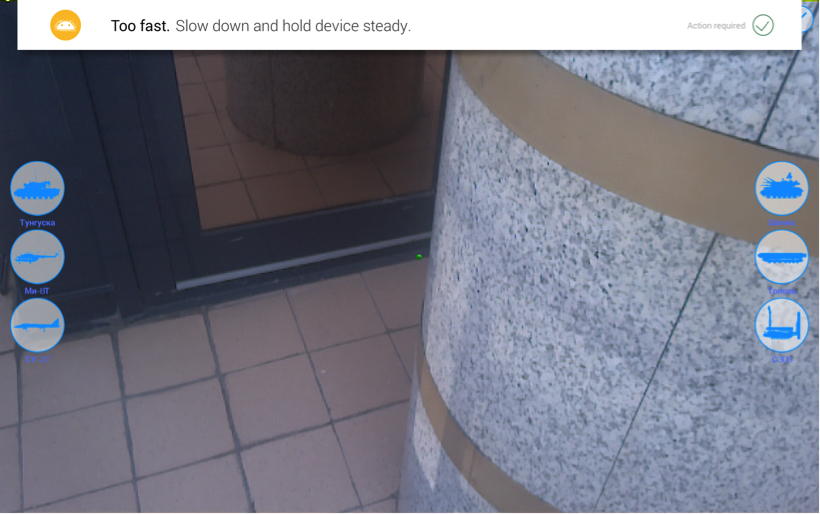

Remember that a device that perceives depth with an infrared camera has difficulty working accurately in some situations.

- When the device moves too fast, Tango may not have time to build depth and politely asks you to move more slowly.


Our project with Tango

We have reviewed the principles of operation of the device in sufficient detail. Now let's move from theory to practice and try to create our first project.

Tango provides an SDK for the Unity3D game engine and supports OpenGL and other 3D systems via C or Java. We mostly work in Unity3D, so all the examples that follow are created in its development environment.

Integration with Unity3D

To develop applications in Unity3D for Project Tango, we need:

- Installed Android SDK 17+

- Unity (5.2.1 or higher), with an environment tuned for Android development.

- Tango Unity SDK .

- For Windows, you should install the Google USB Driver if the device was not automatically recognized.

Project Description

We decided to implement the placement of miniature military vehicles using Tango. We planned the following straightforward sequence of actions:

- The user picks up the Tango tablet;

- A marker is drawn on the screen: green where a vehicle can be placed, red where it cannot;

- The following selection of military vehicles is offered:

- A placed vehicle can be moved with the touch interface in the plane of the screen, and it will move along the physical surface.

- A placed vehicle can be removed.

- There is a limit of 10 placed vehicles; with sufficiently optimized models, this number can be increased. If more than 10 models are placed, the one placed first is replaced, then the second, and so on in a cycle.
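The limit rule from the last item can be sketched as a small container that evicts the oldest entry once the limit is reached. This is our own minimal illustration, not the code from the project: the class name `LimitedPlacement` and the `onEvicted` callback are hypothetical. In Unity the callback would typically destroy the evicted object.

```csharp
using System;
using System.Collections.Generic;

// Keeps at most `limit` placed items; placing one more evicts the oldest.
// Hypothetical helper written for illustration, independent of Unity.
public class LimitedPlacement<T>
{
    private readonly List<T> placed = new List<T>();
    private readonly int limit;
    private readonly Action<T> onEvicted;

    public LimitedPlacement(int limit, Action<T> onEvicted)
    {
        if (limit < 1) throw new ArgumentOutOfRangeException("limit", "Limit must be at least 1.");
        this.limit = limit;
        this.onEvicted = onEvicted;
    }

    public int Count { get { return placed.Count; } }

    // Adds a new item, first removing the earliest placed one if the limit is reached.
    public void Place(T item)
    {
        if (placed.Count >= limit)
        {
            T oldest = placed[0];
            placed.RemoveAt(0);
            onEvicted(oldest);
        }
        placed.Add(item);
    }

    // Removes an item on the user's request; returns false if it was not placed.
    public bool Remove(T item)
    {
        return placed.Remove(item);
    }
}
```

With a limit of 10, placing an eleventh model evicts the first, a twelfth evicts the second, and so on, which matches the cycle described above.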

We decided to keep the interface for the demo minimalist but clear to the user.

Implementation

As a basis, we took the resources from TangoSDK / Examples / AugmentedReality on GitHub and our models from an already implemented project.

Implementation description

To carry out our plan, we will describe our actions step by step:

- Display the image from the device camera on the screen. Tango AR Camera helps us with this.

- Next, the real movement of the device must be repeated in the virtual scene. Motion Tracking is responsible for this: in Tango Application, turn on the Enable Motion Tracking option.

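- To work with surfaces, we also need depth data. In Tango it is provided by the Tango Point Cloud object.

- The interface is built with standard Unity tools: UI and EventSystem.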

- We also need the vehicle models themselves. We placed them in the scene, under the Prefabs object.

- We added the ViecleSpawnScript script to each button and set a reference to the model in the script. Now our buttons "know" which model is associated with them.

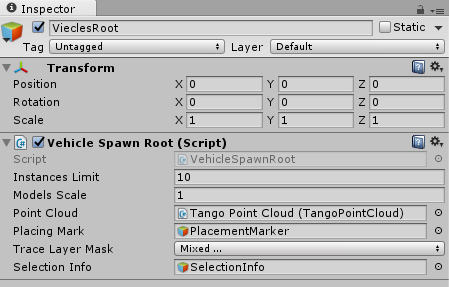

- Now we need to implement the logic for placing, moving, and deleting objects. We implemented this logic in the VehicleSpawnRoot class.

Below you can see its settings:

Instances Limit - the maximum number of placed models;

Model Scale - the scale applied to models when they are placed;

Point Cloud - link to the Tango Point Cloud object;

Placing Mark - a link to the marker prefab;

Trace Layer Mask - setting up layers for which placement is possible;

Selection Info - a link to an interface element for displaying the name of a vehicle that has been selected.

- While it is running, our application must constantly draw a marker. Normally the marker is located where a ray cast from the center of the screen hits the surface. The marker is represented by the PlacementMarker object in the scene.

- We need two pieces of data: the point where the ray intersects the surface and the result of the intersection test. We obtain the coordinates and the trace result asynchronously by starting a Coroutine with the LazyUpdate function.

This function determines where the ray intersects the Tango Point Cloud. Tango itself keeps the Tango Point Cloud up to date, so we always have a current point cloud and can take data from it.
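To show what such a trace involves, here is a minimal brute-force sketch. It is not the project's LazyUpdate code and does not use the Tango SDK: it assumes the point cloud is available as a plain array of positions and picks the point closest to the ray origin among those lying within a small distance of the ray. The names and the `maxOffsetMeters` tolerance are hypothetical, and `System.Numerics.Vector3` stands in for Unity's `Vector3`.

```csharp
using System;
using System.Numerics;

// Brute-force ray trace against a point cloud (illustrative sketch only).
public static class PointCloudTraceSketch
{
    // Returns true and the hit point if some cloud point lies within maxOffsetMeters
    // of the ray; among such points, the one nearest to the ray origin wins.
    // direction must be a non-zero vector.
    public static bool TryTrace(Vector3[] cloud, Vector3 origin, Vector3 direction,
                                float maxOffsetMeters, out Vector3 hit)
    {
        hit = Vector3.Zero;
        Vector3 dir = Vector3.Normalize(direction);
        float bestAlongRay = float.MaxValue;
        bool found = false;

        foreach (Vector3 point in cloud)
        {
            Vector3 toPoint = point - origin;
            // Length of the projection of the point onto the ray.
            float alongRay = Vector3.Dot(toPoint, dir);
            if (alongRay <= 0f)
            {
                continue; // the point is behind the ray origin or beside it
            }

            // Perpendicular distance from the point to the ray.
            float offset = (toPoint - dir * alongRay).Length();
            if (offset <= maxOffsetMeters && alongRay < bestAlongRay)
            {
                bestAlongRay = alongRay;
                hit = point;
                found = true;
            }
        }
        return found;
    }
}
```

A loop like this visits every point, which is one reason to run the trace outside the per-frame update, as the asynchronous approach described above does.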

The result of the trace can have the following values:

enum ETraceResults
{
    Invalid,
    NotFound,
    NotGround,
    ExistingObjectCrossing,
    Valid,
}

- To place a model on the surface when a button is pressed, we created the following function in the VehicleSpawnRoot class:

public void Spawn( GameObject prefab )

A prefab with the model to place is passed to the function. The function checks whether placement is possible and, if so, creates the model at the specified coordinates.
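The link between the trace result and the user feedback can be sketched as follows. This is a guess at the logic based on the description above (green marker where placement is possible, red where it is not), not the project's code. The enum is repeated so that the example is self-contained, and the helper names are hypothetical.

```csharp
using System;

// Repeated from the article so that the sketch compiles on its own.
public enum ETraceResults
{
    Invalid,
    NotFound,
    NotGround,
    ExistingObjectCrossing,
    Valid,
}

// Hypothetical helper: decides what the user sees for a given trace result.
public static class PlacementFeedbackSketch
{
    // Assumption: only a Valid trace result allows placing or moving a model.
    public static bool CanPlace(ETraceResults result)
    {
        return result == ETraceResults.Valid;
    }

    // Green marker where a model can be placed, red everywhere else.
    public static string MarkerColor(ETraceResults result)
    {
        return CanPlace(result) ? "green" : "red";
    }
}
```

Keeping this decision in one place means that the marker, the Spawn function, and the move logic all agree on when placement is allowed.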

- We need a way to move a placed model. The following function is responsible for moving it:

private void UpdateTouchesMove()

Here is briefly how it works. In the function, we get the touch data through

Input.GetTouch( 0 );

We then look for an intersection with the objects placed in the scene and, if one is found, mark that object as Selected. We also pass the starting position of the ray to the function that calculates the intersection with the point cloud; it coincides with the position of the finger on the screen.

Then, as the finger moves across the screen, the model is set to a new position whenever the trace result allows it. As a reminder, the trace is performed in the asynchronous LazyUpdate method.

- It remains to add a function for deleting placed models. After that, everything is ready and you can run the app!

More information can be found in the project code on GitHub.

Publishing the app to Google Play

Publishing apps that use the Tango framework requires one step in addition to the standard Android publishing process. For the application to be installed only on Tango-compatible devices, you must add the following lines to the application manifest file.

<application ...>
    ...
    <uses-library android:name="com.projecttango.libtango_device2" android:required="true" />
</application>

If you want a single APK for devices with and without Tango support, you can detect at runtime whether Tango features are available and use them within the application itself.

Summary

So, together we have gone all the way from the theoretical foundations to publishing the application.

We can safely conclude that the platform can already be used for commercial purposes. It works quite stably. The technologies it includes do not conflict with each other and complement each other well, solving the stated problems.

In addition, commercial devices from major vendors have started to appear. The only fly in the ointment is that the device has problems working in bright light. We would like to believe that Google and Intel will solve this problem in the coming years, or perhaps they are already working on it. Thank you for your attention; we hope the article was interesting for you.

Source: https://habr.com/ru/post/309876/
