How a frame of the new DOOM is rendered

Released in 1993, the first DOOM fundamentally changed game development and game mechanics; it became a worldwide hit and created new idols such as John Carmack and John Romero.

Today, 23 years later, id Software belongs to ZeniMax and all of its founders have left the company, but that did not prevent the id team from showing all its talent by releasing a great game.

The new DOOM is a perfect addition to the franchise. It uses the new id Tech 6 engine; after John Carmack's departure, his role was taken over by Tiago Sousa, a former rendering programmer at Crytek.


Traditionally, id Software has published the source code of its engines a few years after their release, which has often led to interesting remakes and analytical articles. It is not known whether id Tech 6 will continue this tradition, but we do not necessarily need the source code to appreciate the interesting graphics techniques used in the engine.

How a frame is rendered

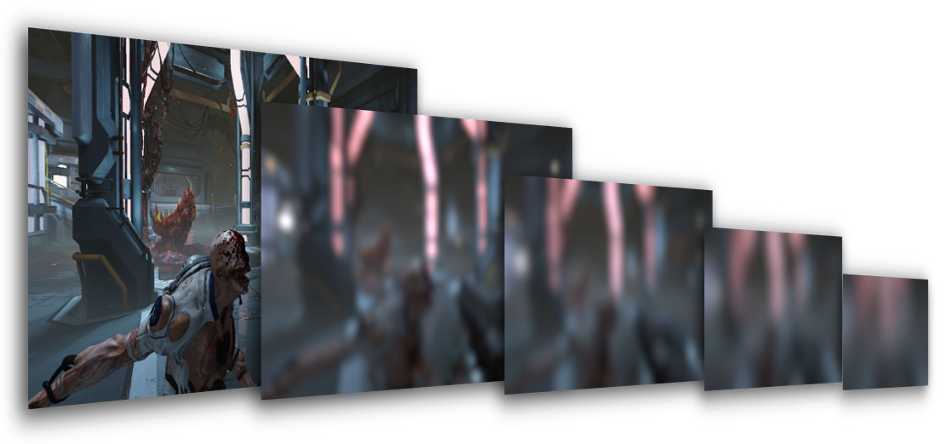

We will examine the scene in which the player attacks a Gore Nest guarded by several Possessed, right after obtaining the Praetor Suit at the beginning of the game.

Unlike most modern Windows games, DOOM does not use Direct3D; it uses OpenGL and Vulkan instead.

Vulkan is an exciting new technology, and Baldur Karlsson only recently added Vulkan support to RenderDoc, so it was hard to resist the temptation to look inside the DOOM engine. All the observations below were made with the game running on Vulkan on a GTX 980 with all settings on Ultra; some guesses are taken from the SIGGRAPH presentation by Tiago Sousa and Jean Geffroy.

MegaTexture Update

The first rendering stage is the mega-texture update. This technology, introduced in id Tech 5, was used in RAGE and is now used in the new DOOM.

In short, the idea is that a few huge textures (16k x 8k in DOOM) are allocated in GPU memory; each of them is a collection of 128 x 128 tiles.

128 x 128 pages stored in a 16k x 8k texture

All of these tiles are supposed to form the ideal set of actual textures at the right mip level, which will later be used by pixel shaders to render the scene that we see.

When a pixel shader reads from a “virtual texture”, it simply reads some of these 128 x 128 physical tiles.
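To get a feel for these numbers, the layout of one physical texture can be sketched as follows. This is only an illustration: it assumes that “16k x 8k” means 16384 x 8192 texels and that tiles are packed row by row without padding, and the function names are hypothetical rather than taken from the engine.

```python
TILE = 128                      # tile edge in texels (from the article)
ATLAS_W, ATLAS_H = 16384, 8192  # assumption: "16k x 8k" = 16384 x 8192 texels


def tiles_per_atlas(atlas_w=ATLAS_W, atlas_h=ATLAS_H, tile=TILE):
    """Number of physical tiles that fit in one atlas."""
    return (atlas_w // tile) * (atlas_h // tile)


def tile_origin(tile_index, atlas_w=ATLAS_W, tile=TILE):
    """Top-left texel of a tile, assuming simple row-major packing."""
    tiles_per_row = atlas_w // tile
    col = tile_index % tiles_per_row
    row = tile_index // tiles_per_row
    return col * tile, row * tile
```

Under these assumptions a single 16k x 8k texture holds 128 x 64 = 8192 tiles.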

Of course, depending on where the player is looking, this set of tiles changes: new models appear on screen and reference other virtual textures, so new tiles have to be streamed in and old ones evicted.

So, at the beginning of the frame, DOOM updates a few tiles using the vkCmdCopyBufferToImage command to write actual texture data into GPU memory.

Read more about mega-textures here and here.

Shadow Atlas

For each shadow-casting light, a unique depth map is generated and stored in one tile of a huge 8k x 8k texture atlas. However, not every depth map is computed every frame: DOOM heavily reuses the results of the previous frame and regenerates only the depth maps that need updating.

8k x 8k depth buffer (previous frame)

8k x 8k depth buffer (current frame)

When a light is static and casts shadows only on static objects, it makes sense to simply keep its depth map as is and skip unnecessary recomputation. However, if an enemy moves under that light, the depth map has to be generated again.

Depth map sizes can vary a lot depending on the distance from the light to the camera; in addition, regenerated depth maps do not have to stay in the same atlas tile.

DOOM uses certain optimizations here, such as caching the static part of a depth map, projecting only the dynamic meshes and then compositing the results.

Depth Pre-Pass

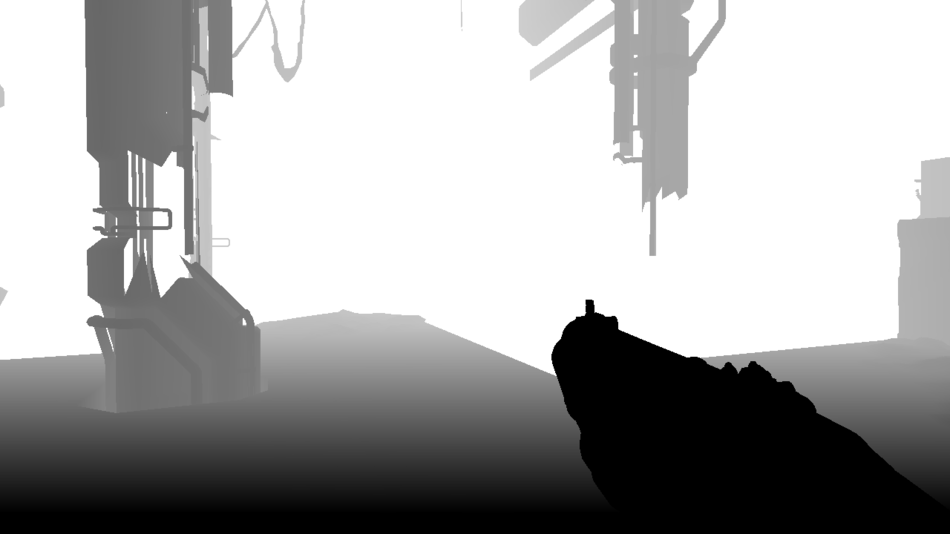

All the opaque meshes are now rendered and their depth information is written to a depth map. First comes the player's weapon, then static geometry, and finally dynamic geometry.

Depth map: 20% complete

Depth map: 40% complete

Depth map: 60% complete

Depth map: 80% complete

Depth map: 100% complete

But in fact, the depth pre-pass outputs more than just depth information.

When dynamic objects (the Possessed, cables, the player's weapon) are rendered to the depth map, their per-pixel velocity is also computed and written to another buffer to create a velocity map. This is done in the vertex shader by computing the difference in each vertex's position between the previous and the current frame.

Velocity map

Only 2 channels are needed to store velocity: red holds the horizontal velocity and green holds the vertical velocity.
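The per-vertex computation described above can be sketched as follows. This is a minimal illustration, not the game's shader: it assumes that the previous-frame and current-frame positions are already available in clip space, and the function names are hypothetical.

```python
def ndc_xy(clip):
    """Perspective divide: clip-space (x, y, z, w) to normalized screen x, y."""
    x, y, _z, w = clip
    return x / w, y / w


def screen_velocity(clip_prev, clip_curr):
    """Screen-space motion of one vertex between two frames.

    The first component would go to the red channel (horizontal),
    the second to the green channel (vertical).
    """
    px, py = ndc_xy(clip_prev)
    cx, cy = ndc_xy(clip_curr)
    return cx - px, cy - py
```

A vertex that does not move between the two frames yields (0, 0), which matches the black weapon in the velocity map.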

The Possessed is moving quickly toward the player (so it appears green), while the weapon is almost motionless (black).

And what about the yellow area (red and green both equal to 1)? This is actually the default clear color of the buffer, meaning that there are no dynamic meshes there: it is the “static mesh area”.

Why doesn't DOOM compute velocity for static meshes? Because the velocity of a static pixel can easily be derived from its depth and the new camera state compared with the previous frame; there is no need to compute it per mesh.
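Deriving the velocity of a static pixel from its depth and the camera change can be sketched as follows. This is a deliberately simplified model, not the engine's code: it assumes a pinhole camera that only translates (no rotation), a linear view-space depth and a hypothetical focal length parameter.

```python
def unproject(ndc_x, ndc_y, depth, cam_pos, focal=1.0):
    """World position of a pixel, given its screen position and depth."""
    cx, cy, cz = cam_pos
    return (cx + ndc_x * depth / focal,
            cy + ndc_y * depth / focal,
            cz + depth)


def project(world, cam_pos, focal=1.0):
    """Screen position of a world-space point for a given camera position."""
    dx = world[0] - cam_pos[0]
    dy = world[1] - cam_pos[1]
    dz = world[2] - cam_pos[2]
    return focal * dx / dz, focal * dy / dz


def static_velocity(ndc_x, ndc_y, depth, cam_curr, cam_prev, focal=1.0):
    """Screen-space motion of a static pixel caused only by camera movement."""
    world = unproject(ndc_x, ndc_y, depth, cam_curr, focal)
    prev_x, prev_y = project(world, cam_prev, focal)
    return ndc_x - prev_x, ndc_y - prev_y
```

With the camera moving one unit to the right, a static pixel at depth 2 in the center of the screen moves half a unit to the left. A real engine would use the full previous and current view-projection matrices instead of this translation-only camera.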

The velocity map comes in handy later when applying motion blur.

Occlusion Queries

We want to send as little geometry as possible to the GPU for rendering, and the best way to achieve this is to cull all the meshes that are invisible to the player. Most of the occlusion culling in DOOM is performed by the Umbra middleware, but the engine still issues GPU occlusion queries to trim down the visible set even further.

What is the idea behind GPU occlusion queries? The first step is to group several meshes of the world into a virtual bounding box encompassing all of them, and then ask the GPU to render this box against the current depth buffer. If none of the rasterized pixels passes the depth test, the box is completely occluded and all the world objects it contains can safely be skipped during rendering.
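The grouping and the visibility decision can be sketched on the CPU side as follows. This is only an illustration under stated assumptions: the enclosing volume is taken to be an axis-aligned box, the query result is taken to be a count of samples that passed the depth test, and all names are hypothetical.

```python
def union_aabb(boxes):
    """Smallest axis-aligned box enclosing all (min_xyz, max_xyz) boxes."""
    lo = tuple(min(b[0][i] for b in boxes) for i in range(3))
    hi = tuple(max(b[1][i] for b in boxes) for i in range(3))
    return lo, hi


def visible_meshes(groups, samples_passed):
    """Keep the meshes of every group whose box passed the depth test.

    groups:         {group name: [mesh names]}
    samples_passed: {group name: number of samples that passed}
    A group with no result yet is treated as visible (conservative choice).
    """
    visible = []
    for name, meshes in groups.items():
        if samples_passed.get(name, 1) > 0:
            visible.extend(meshes)
    return visible
```

Treating a group without a result as visible costs some extra draw calls but never hides an object that should be on screen.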

The problem, however, is that the results of these occlusion queries are not available right away, and we do not want the GPU pipeline to stall waiting for them. Usually, reading the results is deferred to subsequent frames, so a slightly more conservative algorithm is needed to avoid objects popping onto the screen.

Tested bounding boxes. Red: occluded, green: visible.

Clustered Forward Rendering of Opaque Objects

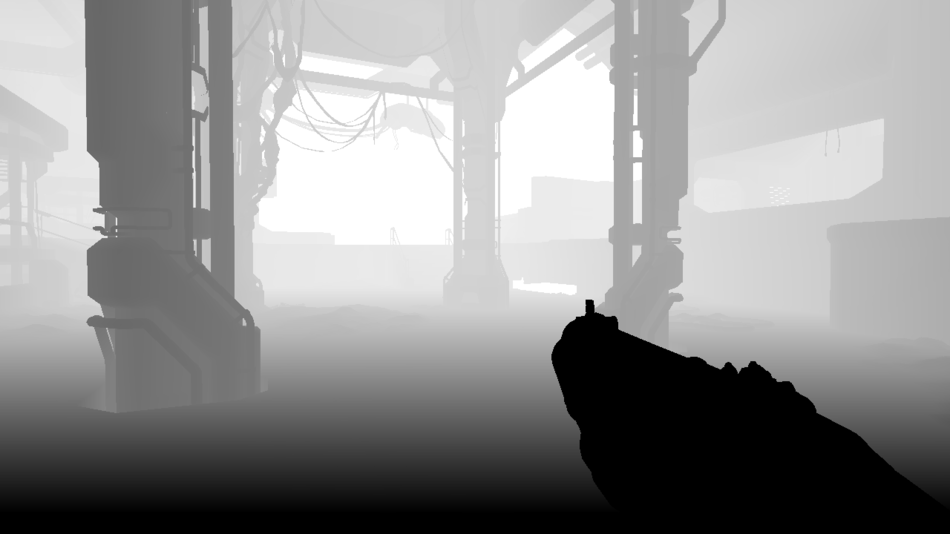

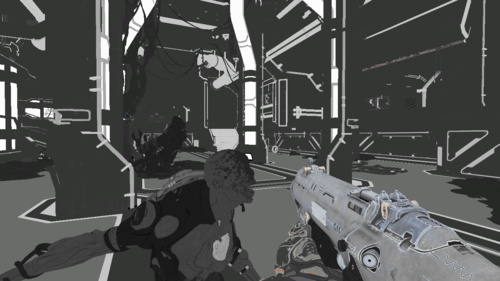

Now all opaque geometry and decals are rendered. Lighting information is stored in a floating-point HDR buffer:

25% of lighting

50% of lighting

75% of lighting

100% lighting

The depth test function is set to EQUAL to avoid unnecessary computation: thanks to the preceding depth pre-pass, we know exactly what depth value each pixel should have. Decals are also applied directly while the meshes are rendered; they are stored in a texture atlas.

The picture already looks good, but transparent materials such as glass and particles are still missing, and there are no environment reflections at all.

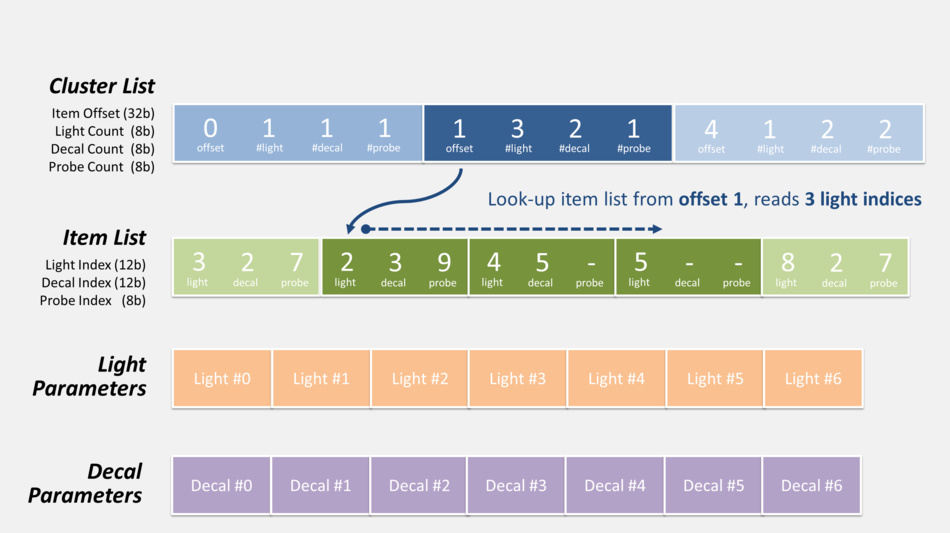

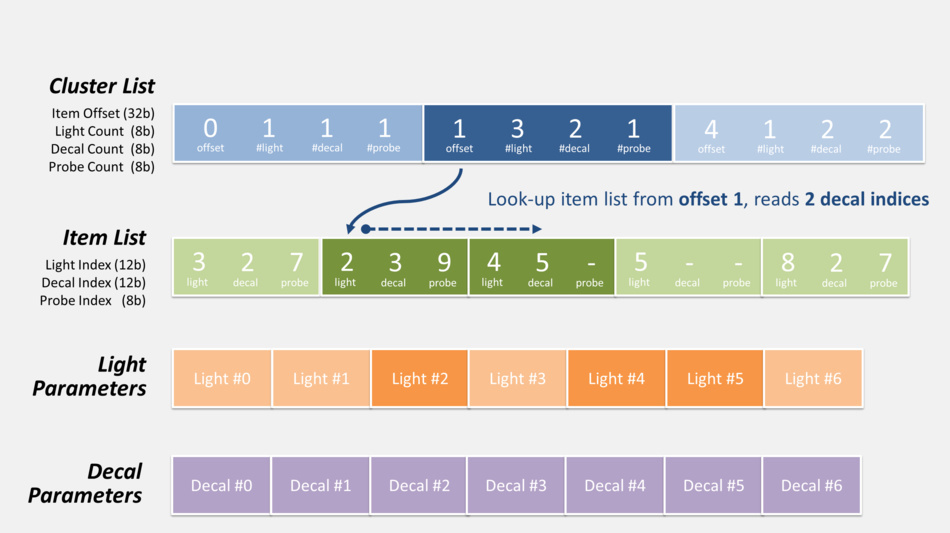

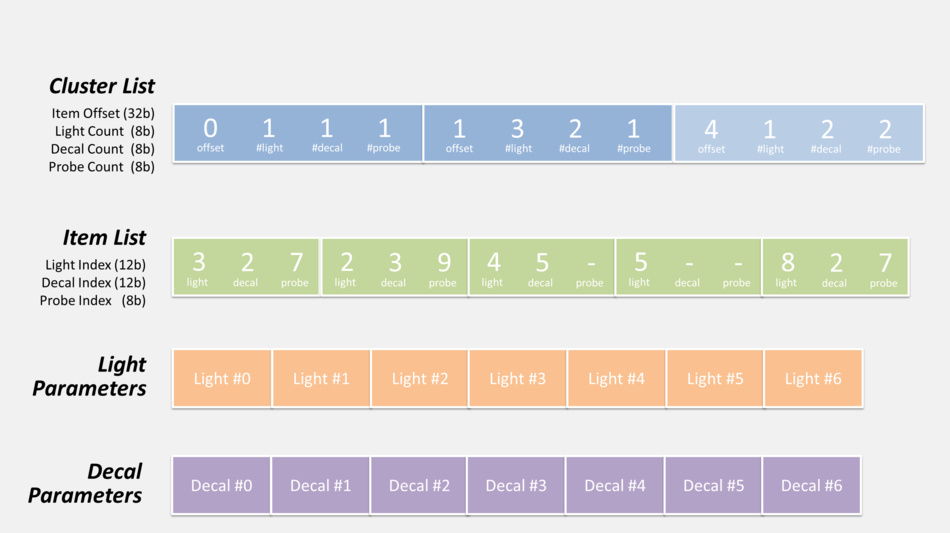

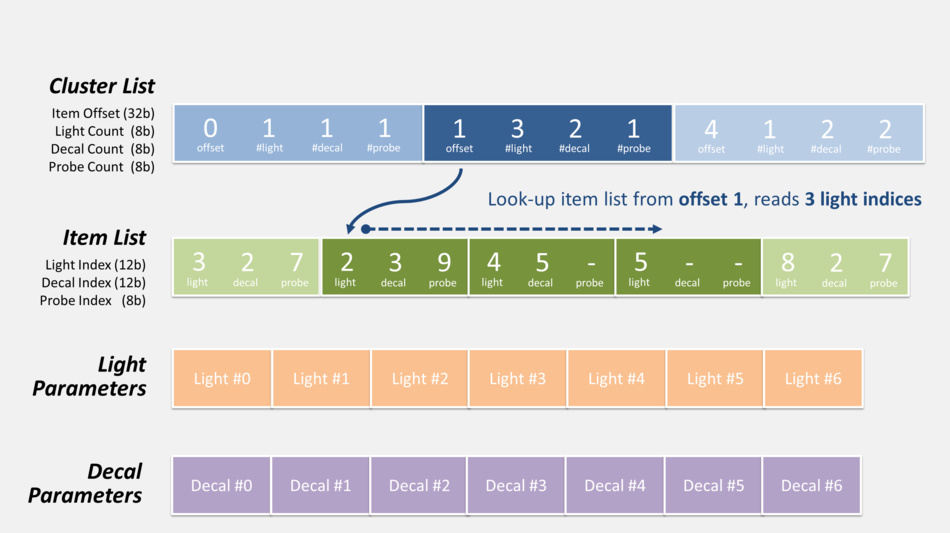

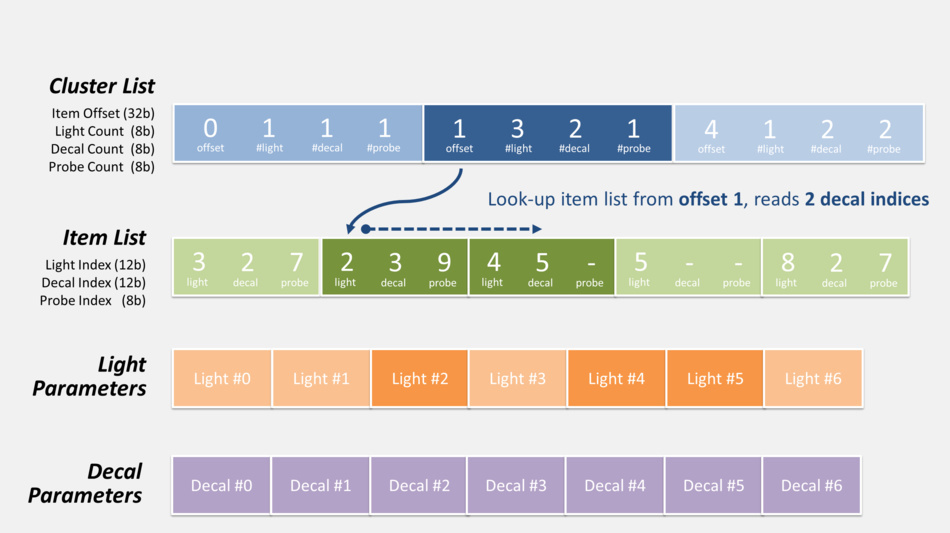

A few words about this pass: it uses a clustered forward renderer based on the work of Emil Persson and Ola Olsson.

The weak point of forward rendering has always been its inability to handle a large number of lights; this is much easier to do with deferred shading.

How does a clustered renderer work? First, the viewport is divided into tiles: DOOM creates 16 x 8 subdivisions. Some renderers stop here and compute a list of lights per tile, which reduces the amount of lighting computation but still has certain problems with edge cases.

Clustered rendering takes this concept further, moving from 2D to 3D: instead of stopping at a 2D subdivision of the viewport, it performs a 3D subdivision of the entire camera frustum by adding slices along the Z axis.

Each “block” is called a “cluster”; you could also call them “frustum-shaped voxels”.

Below is a simple 4 x 2 subdivision of the viewport; 5 depth slices divide the frustum into 40 clusters.

In DOOM, the camera frustum is divided into 3072 clusters (16 x 8 x 24), with the depth slices distributed logarithmically along the Z axis.

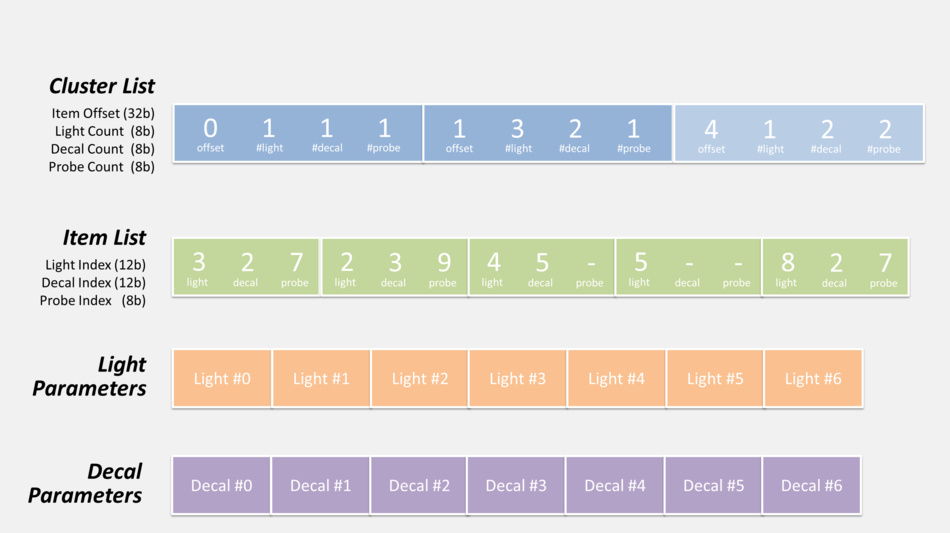

With a clustered renderer, a typical flow is:

- First, the CPU computes the list of items that affect the lighting in each cluster: lights, decals and cubemaps ...
  To do this, all these items are “voxelized” so that their area of influence can be tested for intersection with the clusters. The data is stored as indexed lists in GPU buffers so that shaders can access it. Each cluster can hold up to 256 lights, 256 decals and 256 cubemaps.

- Then, when the GPU renders a pixel:

  - the cluster it belongs to is computed from the pixel's coordinates and depth

  - the list of decals / lights for this cluster is fetched. This involves indirect addressing with an offset and a count (see the illustration below).

  - the code loops over all the decals / lights in the cluster, computing and accumulating their contributions.
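The per-pixel lookup in the steps above can be sketched as follows. This is a minimal illustration, not the engine's shader code: the 16 x 8 x 24 grid comes from the article, but the exact logarithmic slice formula, the near and far distances, the flat index order and the buffer layout are all assumptions, and the function names are hypothetical.

```python
import math

GRID_X, GRID_Y, GRID_Z = 16, 8, 24  # cluster grid from the article (3072 clusters)


def cluster_index(px, py, view_z, width, height, near, far):
    """Map a pixel and its view-space depth to (x, y, z) cluster coordinates."""
    cx = min(int(px * GRID_X / width), GRID_X - 1)
    cy = min(int(py * GRID_Y / height), GRID_Y - 1)
    z = min(max(view_z, near), far)
    # Assumed logarithmic distribution of the depth slices between near and far.
    cz = min(int(math.log(z / near) / math.log(far / near) * GRID_Z), GRID_Z - 1)
    return cx, cy, cz


def flat_index(cx, cy, cz):
    """Flatten cluster coordinates into one index (assumed x-major order)."""
    return (cz * GRID_Y + cy) * GRID_X + cx


def items_for_cluster(cluster_table, item_indices, index):
    """Fetch a cluster's items through an (offset, count) indirection."""
    offset, count = cluster_table[index]
    return item_indices[offset:offset + count]
```

A shader would then loop over the returned indices and accumulate the contribution of each light or decal. Keeping only an offset and a count per cluster lets clusters of very different sizes share one tightly packed index buffer.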

Using light sources and decals

Here is how a pixel shader can retrieve the list of lights and decals during this pass:

There is also a list of probes (not shown in the diagrams above), accessed in exactly the same way; however, it is not used in this pass and we will come back to it later.

The CPU cost of building the per-cluster item lists pays off through a significant reduction in the complexity of rendering computations on the GPU further down the pipeline.

Clustered forward rendering has been attracting attention lately: it handles more lights than basic forward rendering and is also faster than deferred shading, which has to write to and read from multiple G-buffers.

However, there is something I did not mention: this pass does not just write to the lighting buffer; using MRT, it also produces two thin G-buffers at the same time:

Normal map

Reflection map

The normal map is stored in an R16G16 floating-point format; the reflection (specular) map is stored in R8G8B8A8, with the alpha channel holding the smoothness factor. So DOOM actually uses a hybrid of forward and deferred rendering. These new G-buffers will come in handy for additional effects such as reflections.

I left out one more thing: at the same time, a 160 x 120 feedback buffer is produced for the mega-texture system. It contains information for the streaming system, telling it which textures and which mip levels need to be streamed in.

The mega-texture engine works reactively: if a rendering pass reports that some textures are missing, the engine loads them.

GPU Particles

Then a compute shader is launched to update the particle simulation: position, velocity, and lifetime.

It reads the current particle states along with the normal and depth buffers (for collision detection), runs a simulation step and stores the new states in buffers.

Screen Space Ambient Occlusion (SSAO)

At this stage, an SSAO map is generated.

Its purpose is to darken the color around narrow seams, creases and so on.

It is also used to apply specular occlusion, which avoids bright lighting artifacts appearing on occluded meshes.

The map is computed at half the original resolution in a pixel shader that reads from the depth buffer and from the normal and reflection maps.

The first result is quite noisy.

SSAO map

Screen Space Reflections

Now a pixel shader generates an SSR map. Using only the information present on screen, it ray-traces the reflections by bouncing a ray off each pixel of the viewport and reading the color of the pixels hit by the rays.

Inputs: depth, normals, reflection map, previous frame

SSR map

SSR is a good and fairly inexpensive technique for real-time dynamic reflections of the scene, with a constant cost; it greatly enhances the sense of immersion and realism.

But it has its own artifacts, since it works only in screen space and lacks “global” information. For example, you may see nice reflections in a scene, but as you start looking down the amount of reflection decreases, and when you look at your feet you see almost no reflections. I find that SSR is well integrated in DOOM: it improves image quality while being subtle enough that you will not notice the reflections disappearing unless you are specifically looking for it.

Static Cubemap Reflections

After computing all the dynamic reflections in the previous pass (with their limitations), it is time to create static reflections using IBL.

This technique relies on pre-generated 128 x 128 cubemaps that capture the environment lighting at various locations on the map (they are also called “environment probes”). Just like lights and decals during the frustum clustering stage, the probes are also indexed per cluster.

All of the level's cubemaps are stored in an array; there are dozens of them, but only 5 contribute to our scene (the cubemaps in this room):


Static reflection map

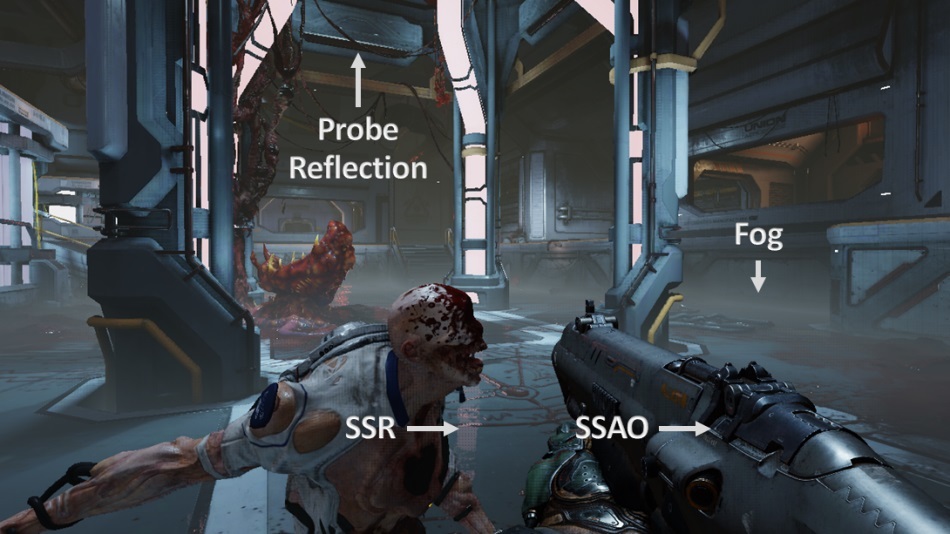

Blending the Maps

At this stage, a compute shader combines all the previously generated maps. It reads the depth and reflection maps and blends the lighting from the forward pass:

- with SSAO information

- with SSR for the pixel in question, when it is available

- if SSR information is missing, static reflection map data is used as a replacement

- fog effect is also calculated
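The fallback logic in the list above can be sketched for a single pixel as follows. This is a rough illustration only: the article does not give the actual blend formula, so the way occlusion, reflectance and fog are combined here is an assumption, values are scalars instead of RGB colors, and all names are hypothetical.

```python
def blend_pixel(lighting, ao, ssr, probe, reflectance, fog_color, fog_amount):
    """Combine the per-pixel maps into one value (assumed formula).

    lighting:    value from the forward pass
    ao:          SSAO factor, 1.0 means unoccluded
    ssr:         screen-space reflection value, or None when the ray found nothing
    probe:       static cubemap reflection, used only as a fallback
    reflectance: how reflective the surface is, from the reflection map
    """
    reflection = ssr if ssr is not None else probe
    lit = lighting * ao + reflection * reflectance
    return lit * (1.0 - fog_amount) + fog_color * fog_amount
```

The important part is the first line of the function body: the static probe is consulted only when no SSR value exists for the pixel.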

Blending and fog: before

Blending and fog: after


Particle lighting

Our scene contains smoke particles, and lighting is computed per sprite. Each sprite is rendered as if it were in world space: from its position we get the list of lights and their shadow maps, and then the lighting of the quad (the particle) is computed. The result is stored in a tile of a 4k atlas; tiles can have different sizes depending on the distance from the particle to the camera, the quality settings and so on. The atlas has dedicated regions for sprites of the same resolution; here is what the 64 x 64 sprites look like:

Particle Lighting Atlas

Only lighting information is stored at such a low resolution. Later, when the particle is actually drawn, its texture is used at full resolution, and the lighting quad is upscaled and blended with the texture.

Here, DOOM decouples the particle lighting computation from the main rendering of the game: whatever resolution you play at (720p, 1080p, 4k ...), particle lighting is always computed and stored in these small fixed-size tiles.

Downscale and Blur

The scene is downscaled several times, down to 40 pixels. The smallest downscale levels are blurred with separate vertical and horizontal passes (producing a “blur chain”).

Why perform the blur so early? This process usually happens at the end, during post-processing, to create a bloom effect in bright areas.

But all these different blur levels will be useful in the next pass for rendering refractions in glass.

Transparent objects

All transparent objects (glass, particles) are rendered on top of the scene:

Transparent objects: before

Transparent objects: after

Glass is rendered very nicely in DOOM, especially glass covered with frost or dirt: decals affect only part of the glass to make the refraction more or less blurry. The pixel shader computes a refraction “blurriness” coefficient and selects from the blur chain the two maps closest to that coefficient. It reads these two maps and linearly interpolates between the two values to approximate the final blurred refraction color. Thanks to this process, glass can produce nice refractions with a level of blur that varies per pixel.
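The selection and interpolation between two blur levels can be sketched as follows. This is a minimal illustration, assuming that the blurriness coefficient is expressed directly as a fractional level index and that each level has already been sampled at the pixel of interest; the function name is hypothetical.

```python
import math


def sample_blur_chain(levels, blur):
    """Linearly interpolate between the two blur levels closest to `blur`.

    levels: values of the same pixel at each blur level (0 = sharpest)
    blur:   fractional level index, e.g. 1.5 is halfway between levels 1 and 2
    """
    blur = min(max(blur, 0.0), len(levels) - 1)
    lo = int(math.floor(blur))
    hi = min(lo + 1, len(levels) - 1)
    t = blur - lo
    return levels[lo] * (1.0 - t) + levels[hi] * t
```

This works much like trilinear filtering between mip levels: a continuous range of blur amounts is obtained from a small, fixed number of pre-blurred images.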

Distortion map

Distortion map

Very hot areas can create heat distortion in the image. In our scene, the Gore Nest slightly distorts the image.

Distortion is rendered against the depth buffer to create a low-resolution distortion map.

The red and green channels hold the amount of distortion along the horizontal and vertical axes. The blue channel holds the amount of blur to apply.

This distortion effect is applied later, as post-processing, using the distortion map to displace the right pixels.

In this scene, the distortions are quite small and almost imperceptible.

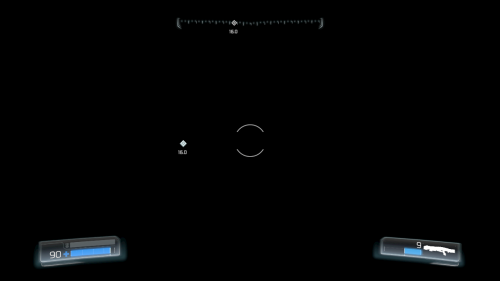

User interface

The UI is rendered into another render buffer in premultiplied alpha mode, stored in an LDR format.

The advantage of storing the whole UI in a separate buffer, instead of drawing directly on top of the finished frame, is that the game can apply filtering / post-processing such as chromatic aberration or optical distortion to all UI widgets in a single pass.

The rendering does not use any batching technique and simply draws the interface elements one by one, for about 120 draw calls.

In later passes, the UI buffer is blended on top of the game image to produce the final result.
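Blending a premultiplied-alpha buffer over the game image can be sketched as follows. This shows the standard “over” operator for premultiplied colors on plain tuples; the function name is hypothetical and the actual blend state used by the game is not shown in the article.

```python
def over_premultiplied(src, dst):
    """Composite a premultiplied-alpha pixel over an opaque background pixel.

    src: (r, g, b, a) with r, g, b already multiplied by a
    dst: (r, g, b) of the game image
    """
    sr, sg, sb, sa = src
    dr, dg, db = dst
    k = 1.0 - sa  # how much of the background remains visible
    return sr + dr * k, sg + dg * k, sb + db * k
```

Because the source color is already multiplied by its alpha, the UI contribution is simply added, and only the background has to be scaled.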

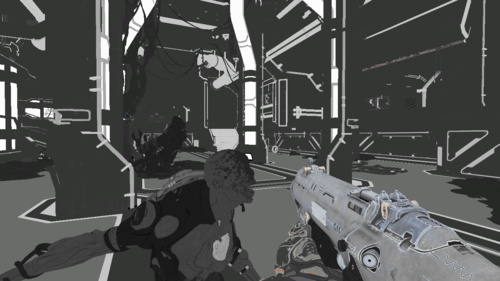

Temporal Anti-Aliasing (TAA) and Motion Blur

TAA and motion blur are applied using the velocity map and the rendering results of the previous frame.

Fragments can be tracked, so the pixel shader knows where the pixel currently being processed was located in the previous frame. Every other frame, the rendering slightly shifts the mesh projection by half a pixel: this helps eliminate sub-pixel aliasing artifacts.

TAA and motion blur: before

TAA and motion blur: after

The result is very good: not only do mesh edges become smooth, but specular aliasing (where individual bright pixels can pop up in the frame) is also reduced. The quality is much better than what could be achieved with FXAA post-processing.

Scene brightness

At this stage, the average luminance of the scene is computed; this is one of the parameters later passed to tone mapping.

The HDR lighting buffer is repeatedly downscaled to half its resolution until it becomes a 2 x 2 texture; at each iteration, a pixel's value is computed as the average luminance of its four “parent” pixels from the larger map.
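The repeated halving can be sketched as follows. This is a CPU-side illustration on a small list of lists, assuming a square luminance image whose size is a power of two; the real pass runs on the GPU on the HDR lighting buffer, and the function names are hypothetical.

```python
def downsample_half(img):
    """Halve an image: each output pixel averages its four parent pixels."""
    h, w = len(img), len(img[0])
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1] +
              img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w // 2)]
            for y in range(h // 2)]


def reduce_to_2x2(img):
    """Halve repeatedly until a 2 x 2 image is left (square power-of-two input)."""
    while len(img) > 2:
        img = downsample_half(img)
    return img


def average_luminance(img):
    """Average of the final 2 x 2 map, as sampled later for tone mapping."""
    small = reduce_to_2x2(img)
    return sum(sum(row) for row in small) / 4.0
```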

Bloom

Bloom

A bright-pass filter is applied to suppress the darkest areas of the image. The result of the bright-pass filter is then repeatedly downscaled and blurred in a manner similar to the one described above.

The layers are blurred with a Gaussian blur split into a vertical and a horizontal pass, in each of which the pixel shader computes a weighted average along one axis.
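A separable blur of this kind can be sketched as follows. This is a minimal CPU-side illustration: the 5-tap binomial weights are a common approximation of a Gaussian and are an assumption, as is clamping at the image borders; the kernel DOOM actually uses is not given in the article.

```python
# Assumed 5-tap binomial kernel approximating a Gaussian (weights sum to 1).
WEIGHTS = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def blur_axis(img, horizontal):
    """One pass: weighted average along a single axis, clamped at the borders."""
    h, w = len(img), len(img[0])
    r = len(WEIGHTS) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for k, weight in enumerate(WEIGHTS):
                if horizontal:
                    sx, sy = min(max(x + k - r, 0), w - 1), y
                else:
                    sx, sy = x, min(max(y + k - r, 0), h - 1)
                acc += weight * img[sy][sx]
            out[y][x] = acc
    return out


def gaussian_blur(img):
    """Full blur: horizontal pass followed by vertical pass."""
    return blur_axis(blur_axis(img, True), False)
```

Splitting the blur into two passes costs 5 + 5 samples per pixel here instead of the 25 a single 2D pass with the same footprint would need.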

Then the blurred layers are combined to create the bloom effect, which is an HDR texture at one quarter of the original resolution.

Final postprocessing

This entire stage is performed in a single pixel shader:

- heat distortion is applied using the distortion map data

- the bloom texture is added on top of the HDR lighting buffer

- effects such as vignetting and dirt / lens flares are applied

- the average luminance is obtained by sampling the 2 x 2 luminance map and, together with additional exposure parameters, is used to apply tone mapping and color grading.

Tone mapping: before

Tone mapping: after

Tone mapping takes the HDR lighting buffer, which contains colors spanning a wide range of luminance, and converts it to 8-bit-per-channel (LDR) color so that the frame can be displayed on a monitor.

A filmic tone mapping operator is used, based on the equation

(x(Ax+BC)+DE) / (x(Ax+B)+DF) - (E/F)

This is the Uncharted 2 tone mapping, which is also used in GTA V.

It should also be noted that the overall red tint of the scene comes from color correction.
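The equation above can be evaluated as follows. The constants A to F and the white point W are the example values John Hable published for the Uncharted 2 operator; the values DOOM actually uses are not visible in the capture, so they are an assumption here, as is the simple exposure multiplier.

```python
# Example constants published by John Hable for the Uncharted 2 operator.
# DOOM's own values are unknown; these are used for illustration only.
A, B, C, D, E, F = 0.15, 0.50, 0.10, 0.20, 0.02, 0.30
W = 11.2  # linear white point: the input value that maps to 1.0


def hable(x):
    """The filmic curve from the article's equation."""
    return (x * (A * x + B * C) + D * E) / (x * (A * x + B) + D * F) - E / F


def tone_map(x, exposure=1.0):
    """Apply exposure, then the curve, normalized so that W maps to 1.0."""
    return hable(x * exposure) / hable(W)
```

The curve maps 0 to 0, rises almost linearly for mid-tones and flattens smoothly toward white, which is what compresses the wide HDR range into displayable values.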

UI and film grain

Finally, the UI is composited on top of the game frame; at the same time, a subtle film grain effect is added.

UI and film grain: before

UI and film grain: after

With that, frame processing is complete and the frame is ready to be sent to the monitor for display; a lot of computation has been done, but it all happened in less than 16 milliseconds.

DOOM manages to produce a high-quality picture at a high frame rate because it wisely reuses old data computed in previous frames. In total, we had 1331 draw calls, 132 textures and 50 render buffers.

Bonus Information

More about glass

Glass rendering looks very good, and it is achieved with the fairly simple steps covered above: a chain of blur levels is built from the scene, and the glass shader interpolates between the two levels closest to its blur coefficient.

Depth of field

The frame we studied does not show any depth of field, so let's look at the following scene before and after it is applied:

Depth of field: before

Depth of field: after

Not all games apply depth of field correctly: the naive approach often consists of using a Gaussian blur and performing the blur in a single pass depending on pixel depth. This approach is simple and cheap, but it has some problems:

- A Gaussian blur is good for bloom, but it produces incorrect bokeh: you need a flat kernel for the light of a bright pixel to spread across an entire disk or hexagon. A Gaussian blur cannot create nice bokeh shapes.

- Applying depth of field in a single pixel shader pass can easily lead to bleeding artifacts at edges.

In DOOM, depth of field is done correctly; in my experience, the approach chosen is one of those that give the best results: a far-field image and a near-field image are created, with pixels selected depending on their depth and on the depth of field parameters.

- The near-field image (shallow depth) can be blurred heavily; the more it “bleeds” onto the pixels behind it, the better.

- The far-field image (large depth) is also blurred, but it does not read pixels from the in-focus / near-field area, so it avoids problems with foreground objects wrongly “bleeding” onto the background.

To create the bokeh blur, DOOM works at half resolution and performs a circular blur with 64 texture taps; every sample has the same weight, so brightness really spreads around, unlike with a Gaussian blur.

The diameter of the circle can vary per pixel, depending on the pixel's circle of confusion.

The bokeh is then extended further with a 16-tap blur, but this time no weighted average is computed: the sample values are accumulated and the highest value among the neighboring taps is kept. This not only expands the first blur but also fixes small artifacts (sampling gaps) of the first pass. This last part of the algorithm is taken from McIntosh.

This multi-pass technique produces very nice large blurs while remaining efficient in terms of performance; the number of texture taps per pixel stays quite small given the large radius of the final circular blur.

Far field

Far field with blur 1

Far field with blur 1 and 2

Finally, the far-field and near-field images are composited on top of the original scene with alpha blending to create the final depth of field effect. This pass is performed right before motion blur is applied.

Additional sources

If you want to dive deeper into id Tech 6, fortunately there are many talks and publications on the subject:

- The devil is in the details: idTech 666 (Siggraph 2016), Tiago Sousa and Jean Geffroy

- Tech Interview: Doom , Digital Foundry

- id Software Tech Interview , DSOGaming

- QuakeCon 2016: Doom Uncapped – Part 1, Part 2

- Doom: The definitive interview , VentureBeat

- Graphics Gems CryEngine 3 (Siggraph 2013), idTech 6.

Source: https://habr.com/ru/post/309844/
