Face Detection on iOS using Core Image

Core Image is a powerful API built into Cocoa Touch and an important part of the iOS SDK, yet it is often overlooked. In this article, we will look at its face detection capabilities and how to use them in your own iOS applications.

Face recognition in iOS appeared long ago, since the 5th release (approximately in 2011), but this feature was often overlooked. The face detection API allows developers not only to recognize faces, but also to check them for certain properties, such as the presence of a smile, whether a person closes his eyes, etc.

')

First, we will study the technology of face detection using the Core Image framework, creating an application that recognizes a face in a photo and draws a special frame around it. In the second example, we will consider a more detailed method of use, by creating an application that allows the user to take a picture, detect whether a face is present, and get the coordinates of the user's face. Thus, we are going to learn as much as possible about face recognition in iOS, as well as the principle of using a powerful API that is so often overlooked. So let's go!

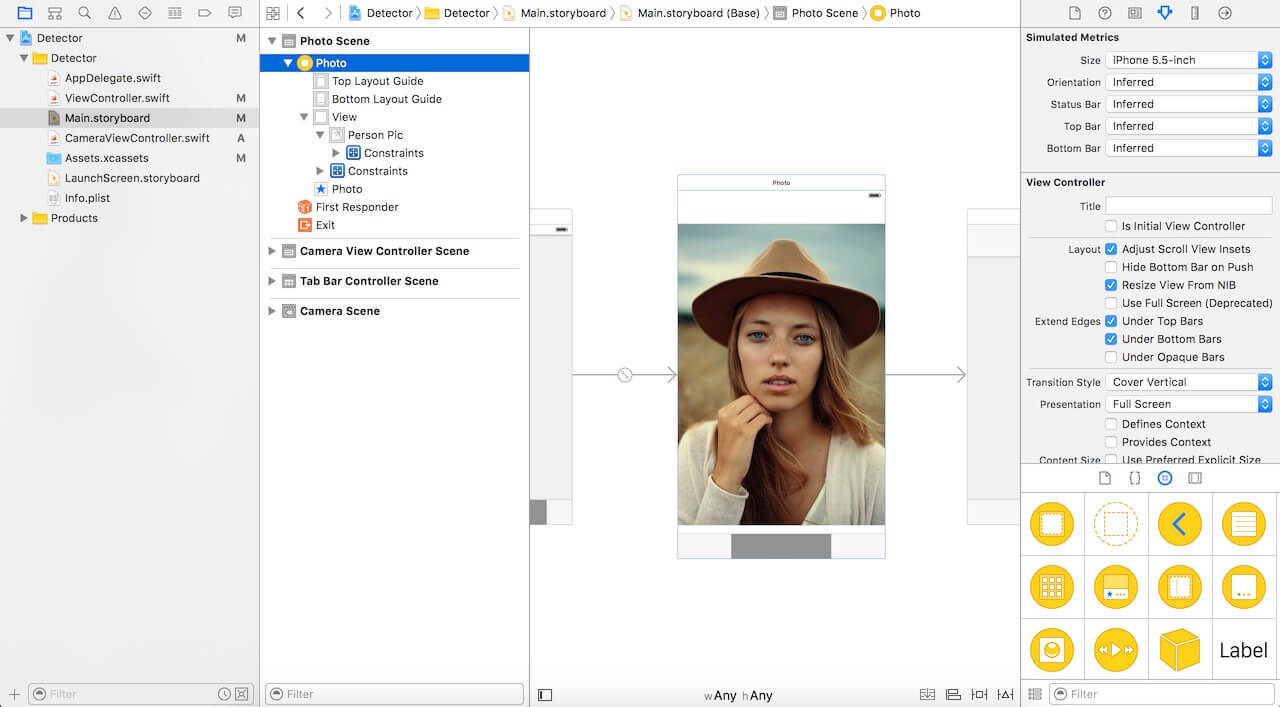

Download the starter project from this link here and open it in Xcode. As you can see, a very simple controller with an IBOutlet connected to an ImageView.

To start face detection using Core Image, you need to import the Core Image library. Go to the ViewController.swift file and paste the following code at the top:

In the start project, we have an ImageView connected to the code as an IBOutlet. Next, we need to implement the code for face recognition. Paste the following code into the project and consider it in more detail:

So what happens here:

Call the detect () method in viewDidLoad. So paste the following line of code into the method:

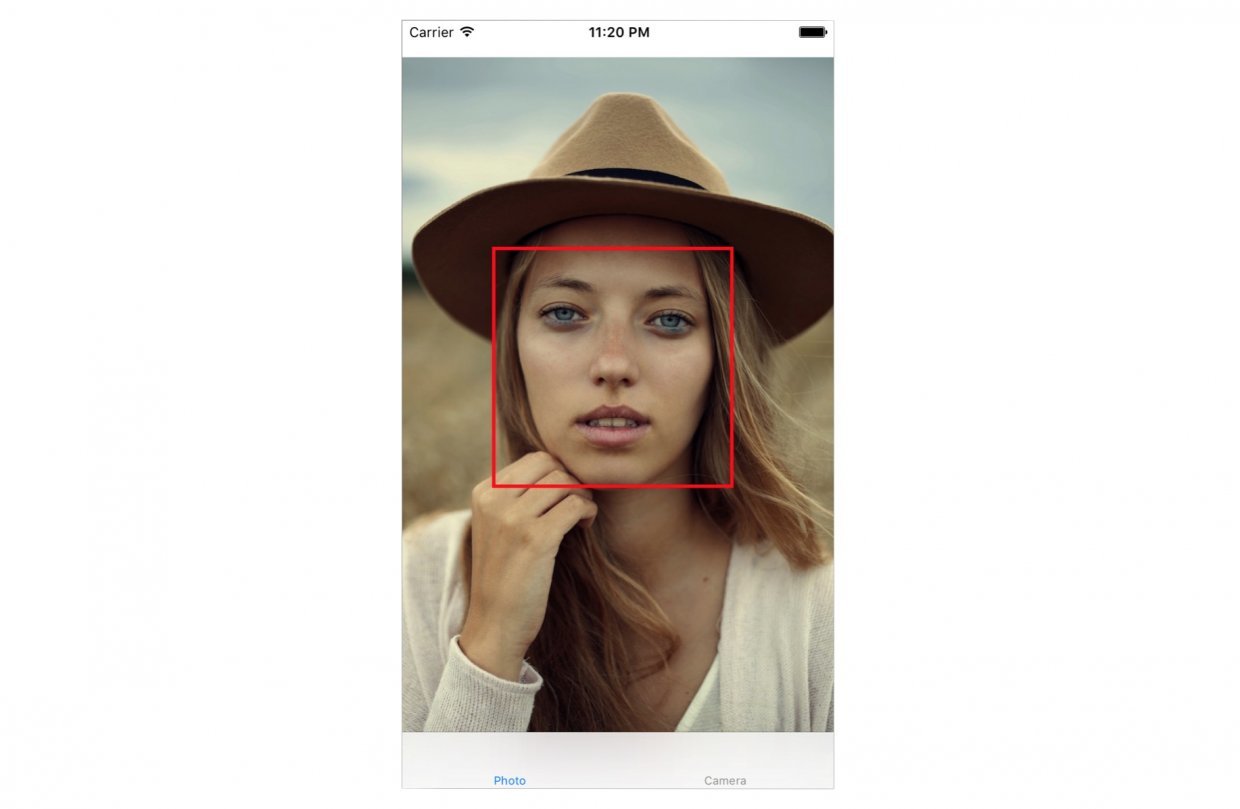

Run the application. You will see something like this:

In the xCode console, we see the coordinates of the detected face:

There are several nuances that we did not consider in the current example:

Now replace the detect () method with the following code:

The code changes above are highlighted by comments.

Let's imagine that we have a camera application that takes photos. As soon as a photo is taken, we want to launch face recognition to determine whether there is a face in the photo or not. If the face is present, we can classify this photo with some tags or something like that. To do this, we need integration with the UIImagePicker class and run our face recognition code after the snapshot is taken.

In the start project, I have already created the CameraViewController class. Update the code to implement the camera functions:

The first few lines of this function set the UIImagePicker delegate. In the didFinishPickingMediaWithInfo method (this is the UIImagePicker delegate method), we set the imageView for the image obtained in the method. Then we call dismiss for the piker and call the detect function.

We have not yet implemented the detect function. Paste the following code and take a closer look at it:

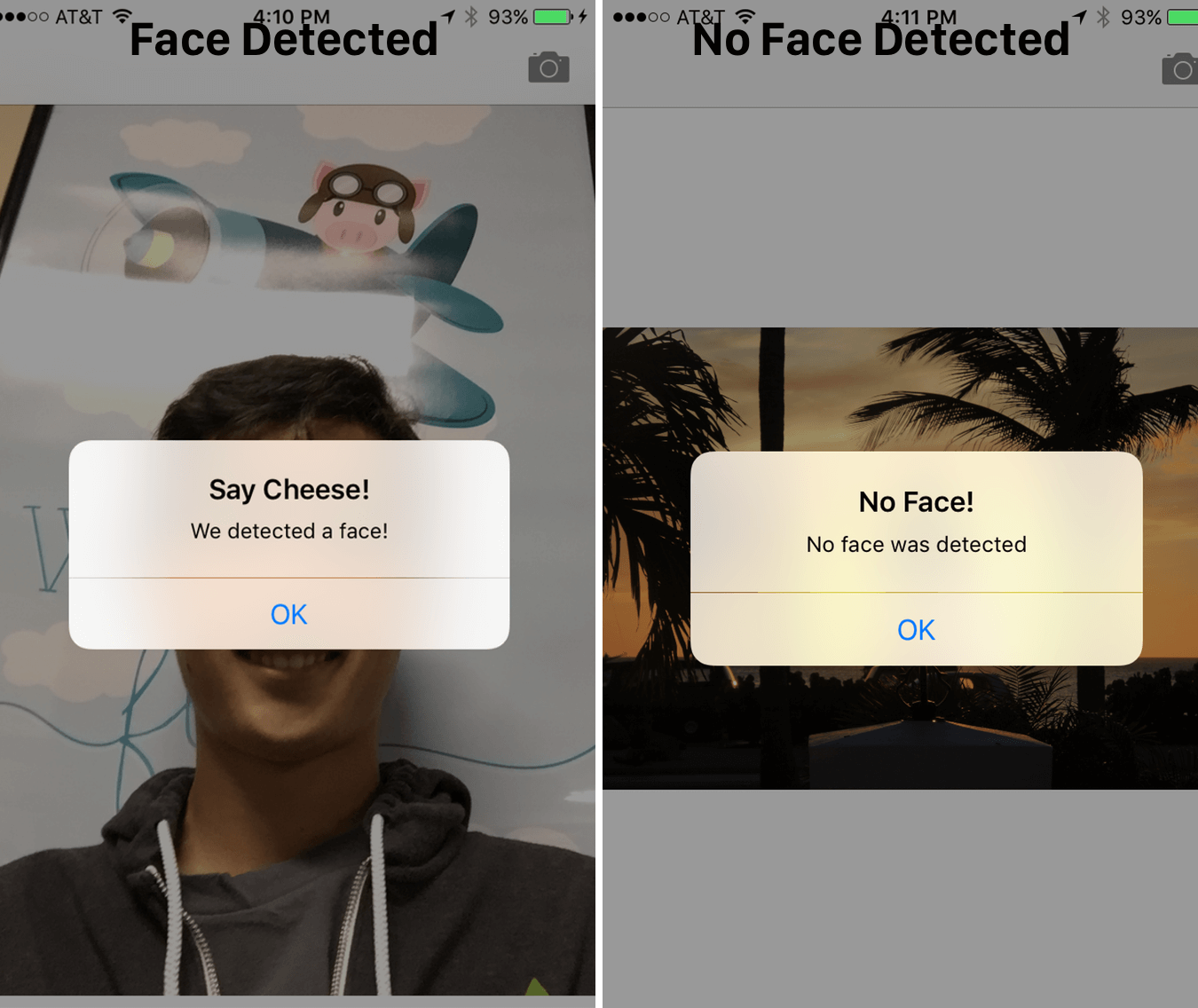

Our detect () function is very similar to its previous implementation. But this time, we use it on the captured image. When a face is detected, we display the warning message “We detected a face!” Otherwise, we display the message “No Face!”. Run the application and test the work.

CIFaceFeature has several properties and methods that I have already mentioned. For example, if you want to perform a detection, if a person smiles, you can call .hasSmile, which returns a boolean. For the experiment, you can call .hasLeftEyePosition to check if the left eye is present (hopefully so) or .hasRightEyePosition for the right eye, respectively.

We can also call hasMouthPosition to check if the mouth is present. If a mouth is present, we can access the coordinates with the mouthPosition property, as shown below:

As you can see, detecting facial features is very easy using Core Image. In addition to detecting the mouth, smile, or eye position, we can also check if the eye is open or closed by calling leftEyeClosed for the left eye and rightEyeClosed for the right eye, respectively.

In this article, we explored face detection functions using the Core Image API main image API and how to use them in an application with a camera. We installed the main UIImagePicker to capture a photo and detect the face that is present in the image.

As you can see, face detection using Core Image core image is a powerful API along with many applications! I hope this article will find this tutorial a useful and informative guide for this little-known API for iOS!

»You can download the final final draft here .

What will we do in this tutorial?

Face detection has been available in iOS for a long time, since iOS 5 (around 2011), but the feature has often been overlooked. The face detection API lets developers not only detect faces, but also check them for certain properties, such as whether the person is smiling or has their eyes closed.

First, we will explore face detection with the Core Image framework by building an application that detects a face in a photo and draws a frame around it. In the second example, we will look at a more practical use case: an application that lets the user take a picture, detects whether a face is present, and reads the coordinates of the user's face. Along the way, we will learn as much as possible about face detection in iOS and about how to use a powerful API that is so often overlooked. So let's go!

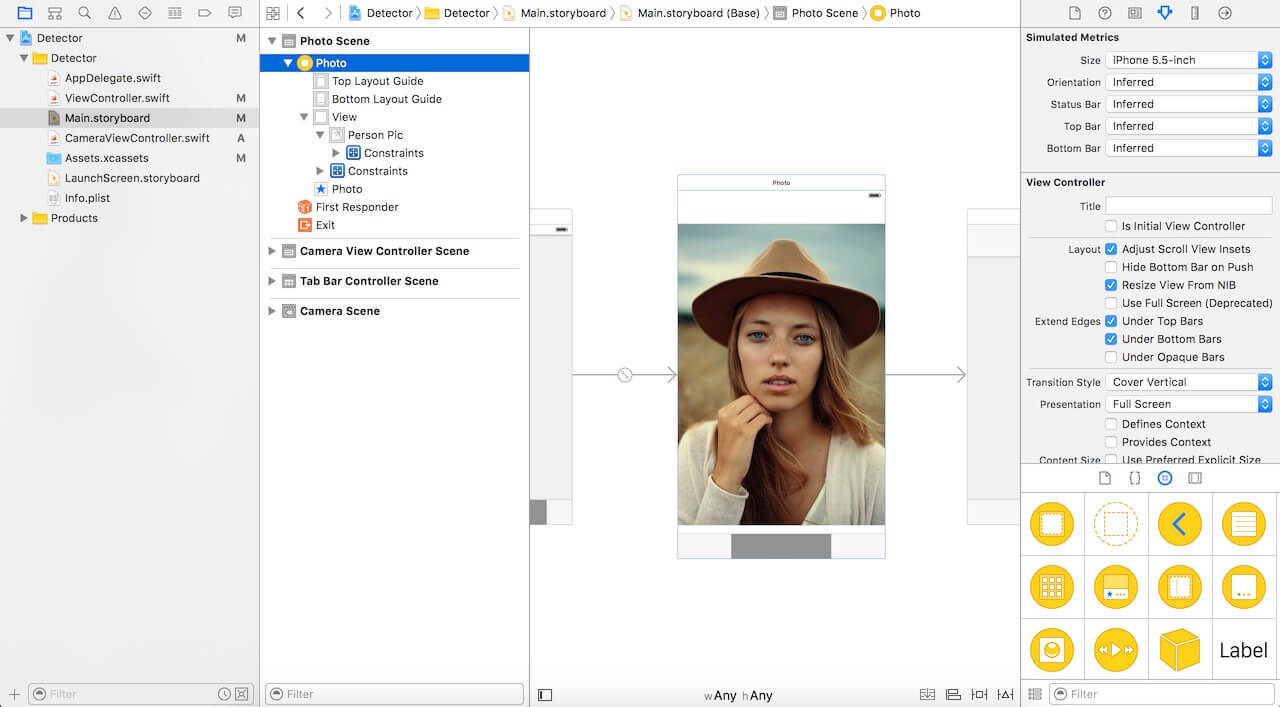

Project Setup

Download the starter project and open it in Xcode. As you can see, it contains a very simple view controller with an IBOutlet connected to an image view.

To start detecting faces with Core Image, you need to import the Core Image framework. Go to the ViewController.swift file and paste the following code at the top:

```swift
import CoreImage
```

Face Detection using Core Image

In the starter project, we have an image view connected to the code as an IBOutlet. Next, we need to implement the face detection code. Paste the following code into the project, and then we will look at it in more detail:

```swift
func detect() {
    // Convert the UIImage shown in the image view to a CIImage
    guard let personciImage = CIImage(image: personPic.image!) else {
        return
    }

    // Create a face detector configured for high accuracy
    let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
    let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)
    let faces = faceDetector.featuresInImage(personciImage)

    for face in faces as! [CIFaceFeature] {
        print("Found bounds are \(face.bounds)")

        // Draw a red frame around the detected face
        let faceBox = UIView(frame: face.bounds)
        faceBox.layer.borderWidth = 3
        faceBox.layer.borderColor = UIColor.redColor().CGColor
        faceBox.backgroundColor = UIColor.clearColor()
        personPic.addSubview(faceBox)

        if face.hasLeftEyePosition {
            print("Left eye bounds are \(face.leftEyePosition)")
        }

        if face.hasRightEyePosition {
            print("Right eye bounds are \(face.rightEyePosition)")
        }
    }
}
```

So what happens here:

- We create the personciImage variable by retrieving the UIImage from the UIImageView and converting it to a CIImage. A CIImage is required to work with Core Image;

- We create the accuracy variable and set it to CIDetectorAccuracyHigh. You can choose between CIDetectorAccuracyHigh (higher accuracy at a higher processing cost) and CIDetectorAccuracyLow (lower accuracy, but faster). We choose CIDetectorAccuracyHigh because we need high accuracy;

- We define the faceDetector variable as an instance of the CIDetector class and pass in the accuracy variable that we created earlier;

- Next, we get an array of faces: by calling the featuresInImage method, the detector finds the faces in the given image;

- We loop over the array of faces and cast each detected face to CIFaceFeature;

- We create a UIView called faceBox and set its frame to the bounds of the detected face (face.bounds). This is needed to draw a rectangle that highlights the detected face;

- We set the border width of faceBox to 3;

- We set the border color to red;

- We set the background color to clear, so the view has no visible background;

- Finally, we add faceBox to personPic;

- The API can detect not only the face but also the left and right eyes. We will not highlight the eyes in the image. Here I just want to show you the relevant properties of CIFaceFeature.
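The listing above uses the Swift 2 method names that were current when the article was written. As a rough sketch of the same steps with the names used by more recent SDKs (for example `features(in:)` instead of `featuresInImage`), the detection can be wrapped in a small function. The name `faceBounds(in:)` is a hypothetical helper, not part of the starter project, and the returned rectangles are still in Core Image coordinates:

```swift
import UIKit
import CoreImage

/// Hypothetical helper (not from the starter project): returns the bounds of
/// every detected face, expressed in Core Image coordinates.
func faceBounds(in image: UIImage) -> [CGRect] {
    // Core Image works with CIImage, so convert the UIImage first
    guard let ciImage = CIImage(image: image) else { return [] }

    // Ask for the high-accuracy detector, as in the article
    let options: [String: Any] = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
    guard let detector = CIDetector(ofType: CIDetectorTypeFace,
                                    context: nil,
                                    options: options) else { return [] }

    // Keep only face features and collect their bounds
    return detector.features(in: ciImage)
        .compactMap { ($0 as? CIFaceFeature)?.bounds }
}
```

Returning plain rectangles keeps the detection separate from the drawing code, which can make it easier to reuse in the camera example later on.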

Call the detect() method in viewDidLoad by adding the following line to that method:

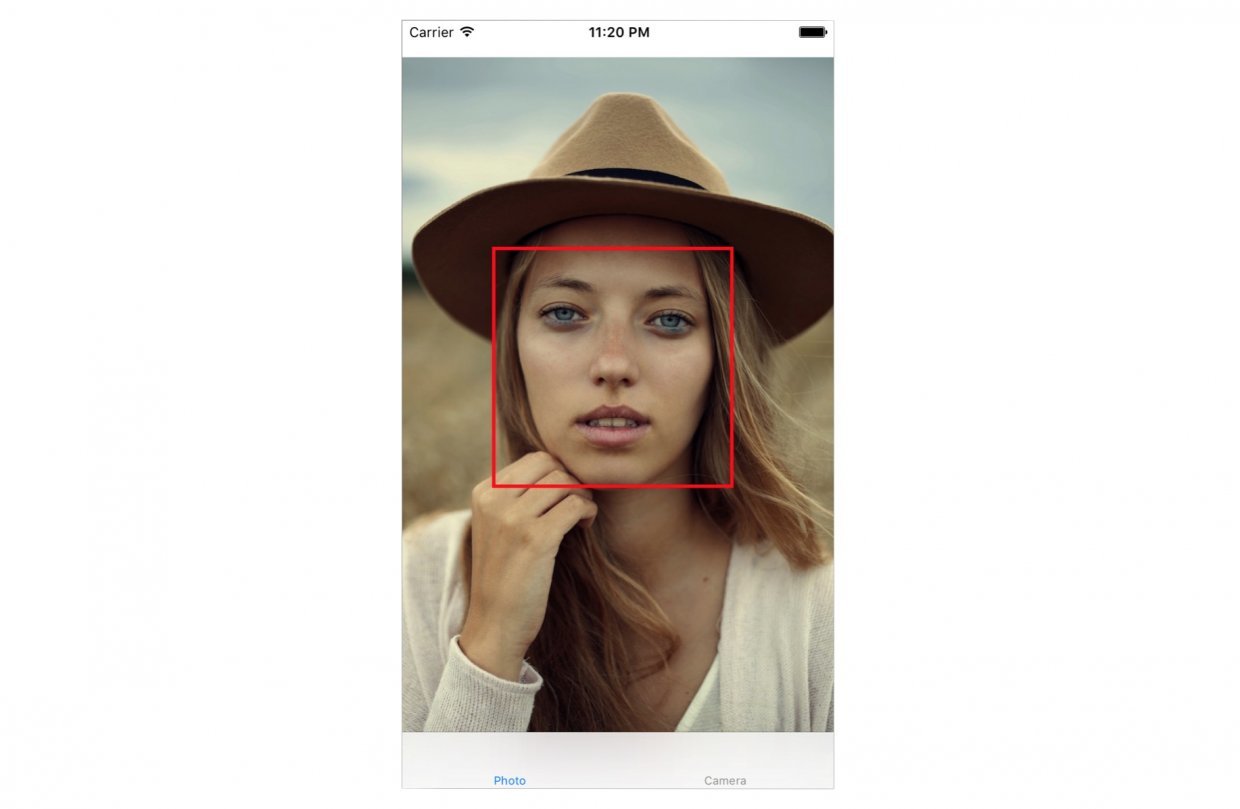

```swift
detect()
```

Run the application. You will see something like this:

In the Xcode console, we see the coordinates of the detected face:

```
Found bounds are (177.0, 415.0, 380.0, 380.0)
```

There are several nuances that we did not consider in the current example:

- Face detection is applied to the original image, which has a higher resolution than the image view. We set the content mode of personPic to aspect fit (the image is scaled while preserving its original aspect ratio). To draw the rectangle correctly, we must calculate the actual position and size of the face in the image view;

- In addition, Core Image and UIView (or UIKit) use two different coordinate systems (see figure below): Core Image places the origin at the bottom-left corner, while UIKit places it at the top-left. We must convert the Core Image coordinates to UIView coordinates.
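To make the second point concrete, here is a small worked sketch of the vertical flip applied to the bounds printed above. The image height of 1000 is a made-up value for illustration only, and the code uses the current Swift spelling of the Core Graphics calls rather than the Swift 2 function names:

```swift
import CoreGraphics

// Hypothetical image height, chosen only for this illustration
let imageHeight: CGFloat = 1000

// Flip the y axis, then shift by the image height: y' = imageHeight - y
let flip = CGAffineTransform(scaleX: 1, y: -1)
    .translatedBy(x: 0, y: -imageHeight)

// Face bounds as reported by Core Image (origin at the bottom-left)
let coreImageBounds = CGRect(x: 177, y: 415, width: 380, height: 380)

// The same rectangle with a top-left origin, as UIKit expects
let uiKitBounds = coreImageBounds.applying(flip)
print(uiKitBounds) // (177.0, 205.0, 380.0, 380.0)
```

The new y origin is 1000 - 415 - 380 = 205, because the top edge of the face in Core Image coordinates becomes the origin of the rectangle in UIKit coordinates.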

Now replace the detect() method with the following code:

```swift
func detect() {
    guard let personciImage = CIImage(image: personPic.image!) else {
        return
    }

    let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
    let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)
    let faces = faceDetector.featuresInImage(personciImage)

    // Convert Core Image coordinates to UIKit coordinates
    let ciImageSize = personciImage.extent.size
    var transform = CGAffineTransformMakeScale(1, -1)
    transform = CGAffineTransformTranslate(transform, 0, -ciImageSize.height)

    for face in faces as! [CIFaceFeature] {
        print("Found bounds are \(face.bounds)")

        // Calculate the actual position and size of faceBox in the image view
        var faceViewBounds = CGRectApplyAffineTransform(face.bounds, transform)

        let viewSize = personPic.bounds.size
        let scale = min(viewSize.width / ciImageSize.width,
                        viewSize.height / ciImageSize.height)
        let offsetX = (viewSize.width - ciImageSize.width * scale) / 2
        let offsetY = (viewSize.height - ciImageSize.height * scale) / 2

        faceViewBounds = CGRectApplyAffineTransform(faceViewBounds, CGAffineTransformMakeScale(scale, scale))
        faceViewBounds.origin.x += offsetX
        faceViewBounds.origin.y += offsetY

        let faceBox = UIView(frame: faceViewBounds)
        faceBox.layer.borderWidth = 3
        faceBox.layer.borderColor = UIColor.redColor().CGColor
        faceBox.backgroundColor = UIColor.clearColor()
        personPic.addSubview(faceBox)

        if face.hasLeftEyePosition {
            print("Left eye bounds are \(face.leftEyePosition)")
        }

        if face.hasRightEyePosition {
            print("Right eye bounds are \(face.rightEyePosition)")
        }
    }
}
```

The code changes above are marked with comments.

- First, we use an affine transformation to convert Core Image coordinates to UIKit coordinates;

- Second, we added code to calculate the actual position and size of our detected face frame.
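The aspect-fit part of that calculation can also be looked at in isolation. The sketch below assumes the rectangle has already been flipped to a top-left origin; `aspectFitRect(for:imageSize:viewSize:)` is a hypothetical helper and the sizes are invented for the example:

```swift
import CoreGraphics

/// Hypothetical helper: maps a rect given in image pixels (top-left origin)
/// into the coordinates of a view that displays the image with aspect fit.
func aspectFitRect(for rect: CGRect, imageSize: CGSize, viewSize: CGSize) -> CGRect {
    // Aspect fit uses the smaller of the two ratios so the whole image is visible
    let scale = min(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)

    // The scaled image is centered, leaving equal margins on both sides
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2

    return CGRect(x: rect.origin.x * scale + offsetX,
                  y: rect.origin.y * scale + offsetY,
                  width: rect.width * scale,
                  height: rect.height * scale)
}

// Made-up numbers: a 1000 x 2000 image shown in a 500 x 500 view
let mapped = aspectFitRect(for: CGRect(x: 100, y: 1400, width: 400, height: 400),
                           imageSize: CGSize(width: 1000, height: 2000),
                           viewSize: CGSize(width: 500, height: 500))
print(mapped) // (150.0, 350.0, 100.0, 100.0)
```

Here the scale is min(0.5, 0.25) = 0.25, so the image is drawn at 250 x 500 and centered with a horizontal margin of 125 points, which is why x becomes 100 * 0.25 + 125 = 150.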

Run the application again. You should now see a frame around the face. Congratulations, we have successfully detected a face using Core Image.

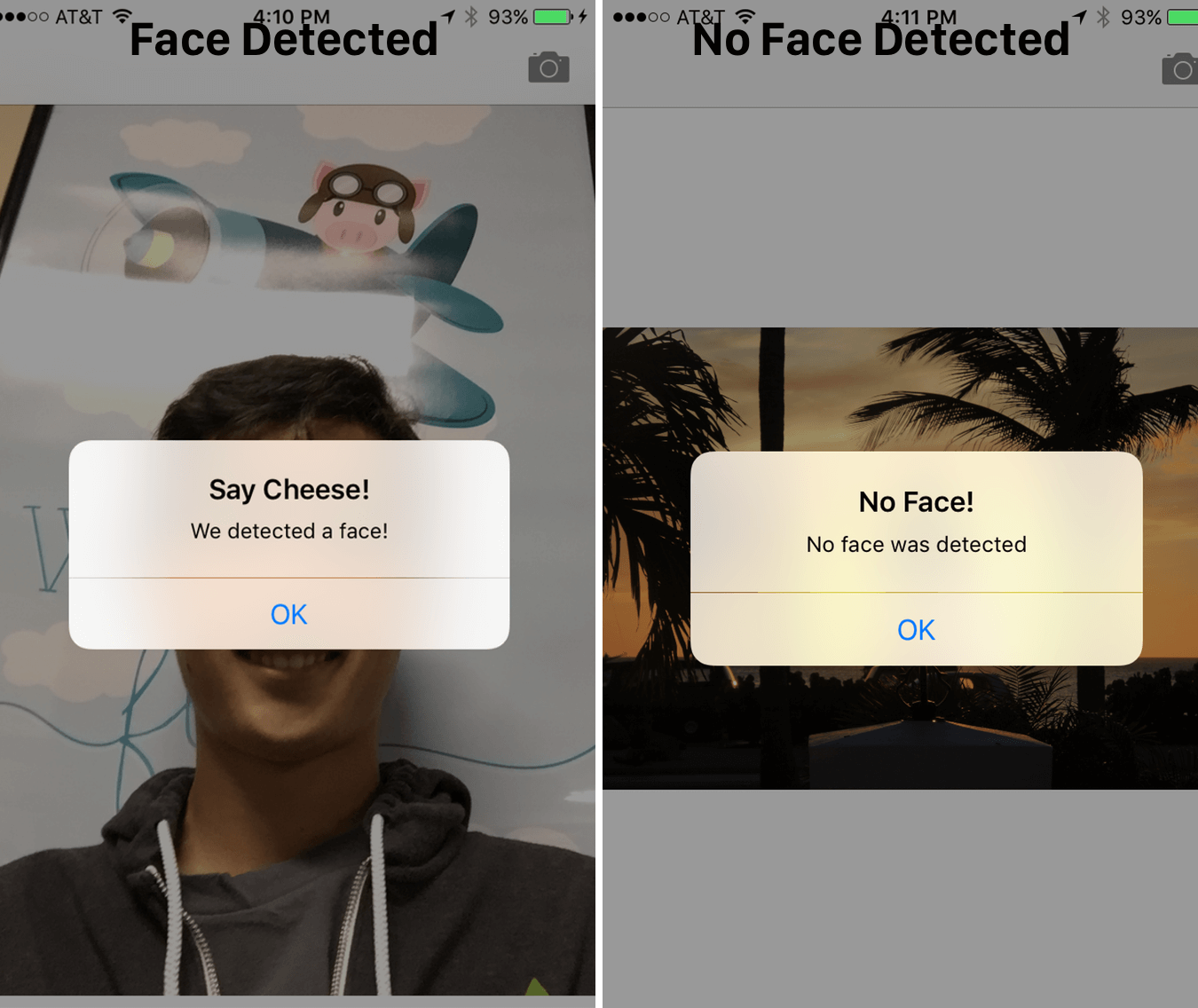

Creating a Camera App with Face Detection

Let's imagine that we have a camera application that takes photos. As soon as a photo is taken, we want to run face detection to determine whether there is a face in the photo. If a face is present, we could, for example, tag the photo accordingly. To do this, we need to integrate with the UIImagePickerController class and run our face detection code after the photo is taken.

In the starter project, I have already created the CameraViewController class. Update its code to implement the camera functions:

```swift
class CameraViewController: UIViewController, UIImagePickerControllerDelegate, UINavigationControllerDelegate {
    @IBOutlet var imageView: UIImageView!
    let imagePicker = UIImagePickerController()

    override func viewDidLoad() {
        super.viewDidLoad()

        imagePicker.delegate = self
    }

    @IBAction func takePhoto(sender: AnyObject) {
        // Do nothing if the device has no camera (for example, the simulator)
        if !UIImagePickerController.isSourceTypeAvailable(.Camera) {
            return
        }

        imagePicker.allowsEditing = false
        imagePicker.sourceType = .Camera

        presentViewController(imagePicker, animated: true, completion: nil)
    }

    func imagePickerController(picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : AnyObject]) {
        if let pickedImage = info[UIImagePickerControllerOriginalImage] as? UIImage {
            imageView.contentMode = .ScaleAspectFit
            imageView.image = pickedImage
        }

        dismissViewControllerAnimated(true, completion: nil)
        self.detect()
    }

    func imagePickerControllerDidCancel(picker: UIImagePickerController) {
        dismissViewControllerAnimated(true, completion: nil)
    }
}
```

The first few lines set the UIImagePickerController delegate. In the didFinishPickingMediaWithInfo method (a UIImagePickerController delegate method), we assign the captured image to imageView. Then we dismiss the picker and call the detect function.

We have not yet implemented the detect function. Paste the following code and take a closer look at it:

```swift
func detect() {
    // Pass the image orientation and request smile detection;
    // hasSmile is only evaluated when CIDetectorSmile is enabled
    let imageOptions: [String : AnyObject] = [
        CIDetectorImageOrientation: NSNumber(int: 5),
        CIDetectorSmile: NSNumber(bool: true)
    ]
    let personciImage = CIImage(CGImage: imageView.image!.CGImage!)
    let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
    let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)
    let faces = faceDetector.featuresInImage(personciImage, options: imageOptions)

    if let face = faces.first as? CIFaceFeature {
        print("found bounds are \(face.bounds)")

        let alert = UIAlertController(title: "Say Cheese!", message: "We detected a face!", preferredStyle: UIAlertControllerStyle.Alert)
        alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.Default, handler: nil))
        self.presentViewController(alert, animated: true, completion: nil)

        if face.hasSmile {
            print("face is smiling")
        }

        if face.hasLeftEyePosition {
            print("Left eye bounds are \(face.leftEyePosition)")
        }

        if face.hasRightEyePosition {
            print("Right eye bounds are \(face.rightEyePosition)")
        }
    } else {
        let alert = UIAlertController(title: "No Face!", message: "No face was detected", preferredStyle: UIAlertControllerStyle.Alert)
        alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.Default, handler: nil))
        self.presentViewController(alert, animated: true, completion: nil)
    }
}
```

Our detect() function is very similar to its previous implementation, but this time we use it on the captured image. When a face is detected, we display an alert with the message “We detected a face!” Otherwise, we display an alert saying that no face was detected. Run the application and test it.

CIFaceFeature has several properties, some of which I have already mentioned. For example, to check whether a person is smiling, you can read .hasSmile, which returns a Boolean. As an experiment, you can read .hasLeftEyePosition to check whether the left eye is present (hopefully it is), or .hasRightEyePosition for the right eye.

We can also read hasMouthPosition to check whether the mouth is present. If it is, we can access its coordinates through the mouthPosition property, as shown below:

```swift
if face.hasMouthPosition {
    print("Mouth detected at \(face.mouthPosition)")
}
```

As you can see, detecting facial features is very easy with Core Image. In addition to detecting the mouth, a smile, or the eye positions, we can also check whether an eye is open or closed by reading leftEyeClosed for the left eye and rightEyeClosed for the right eye.
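One detail worth keeping in mind: as far as I can tell from Apple's documentation, Core Image only evaluates smiles and blinks when the corresponding options (`CIDetectorSmile` and `CIDetectorEyeBlink`) are passed to the feature query; otherwise these properties simply stay false. The following is a minimal sketch in current Swift syntax, with `describeExpressions(in:)` as a hypothetical helper name:

```swift
import CoreImage

/// Hypothetical helper: returns one summary line per detected face,
/// including the smile and eye-blink state.
func describeExpressions(in ciImage: CIImage) -> [String] {
    guard let detector = CIDetector(ofType: CIDetectorTypeFace,
                                    context: nil,
                                    options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])
    else { return [] }

    // Request the extra processing for smiles and closed eyes
    let options: [String: Any] = [CIDetectorSmile: true,
                                  CIDetectorEyeBlink: true]

    return detector.features(in: ciImage, options: options)
        .compactMap { $0 as? CIFaceFeature }
        .map { face in
            "smile: \(face.hasSmile), " +
            "left eye closed: \(face.leftEyeClosed), " +
            "right eye closed: \(face.rightEyeClosed)"
        }
}
```

The extra analysis adds processing time, so it is probably best to request it only when the application actually uses these properties.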

In conclusion

In this article, we explored the face detection features of the Core Image API and how to use them in a camera application. We set up a basic UIImagePickerController to capture a photo and detect whether a face is present in the image.

As you can see, face detection with Core Image is a powerful API with many applications. I hope you found this tutorial a useful and informative guide to this little-known iOS API!

You can download the final project here.

Source: https://habr.com/ru/post/309802/
