How we looked for a trade-off between precision and recall in a specific ML task

I will walk through a practical example of how we formulated the requirements for a machine learning problem and chose a point on the precision/recall curve. While developing an automatic content moderation system, we had to choose a trade-off between precision and recall, and we solved the problem with a simple but extremely useful experiment: we collected assessor labels and measured how consistent they were.

At HeadHunter we use machine learning to build user-facing services. ML is "fashionable, stylish, youthful...", but in the end it is just one of the possible tools for solving business problems, and the tool has to be used properly.

Problem statement

Put very simply, developing a service is an investment of the company's money. The finished service should pay off (perhaps indirectly, for example by increasing user loyalty). Developers of machine learning models, as you know, measure the quality of their work in rather different terms (accuracy, ROC-AUC, and so on). So business requirements have to be translated somehow into requirements for model quality. Among other things, this keeps us from polishing the model where it is "not necessary". That is, from the accounting point of view we invest less, and from the product point of view we do what is really useful to users. Using one specific task, I will show how we set the requirements for model quality in a fairly simple way.


One part of our business is providing job seekers with a set of services for creating an electronic resume, and providing employers with convenient (mostly paid) ways of working with those resumes. So it is extremely important for us to keep the quality of the resume database high in the eyes of HR managers. For example, the database must not only be free of spam: every resume must also state the most recent place of work. That is why we have dedicated moderators who check the quality of each resume. The number of new resumes is constantly growing (which in itself makes us very happy), but the load on the moderators grows with it. We had a simple idea: historical data has accumulated, so let's train a model that can tell resumes that are acceptable for publication from those that need more work. Just in case, I will clarify that if a resume "needs more work", the user can use it only in a limited way, sees the reason for this, and can fix it.

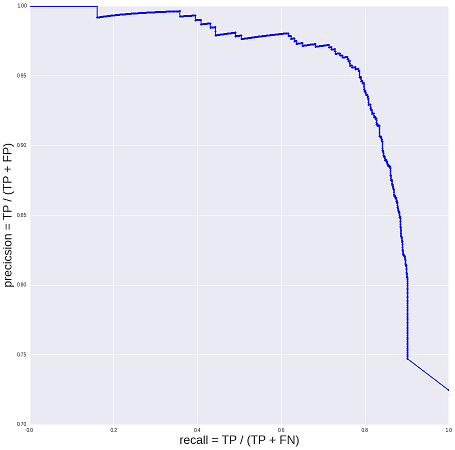

I will not describe the fascinating process of collecting the source data and building the model here. Perhaps the colleagues who did it will share their experience sooner or later. In any case, we ended up with a model of the following quality:

The vertical axis shows precision, that is, the share of resumes accepted by the model that really are acceptable for publication. The horizontal axis shows the corresponding recall, that is, the share of all "acceptable for publication" resumes that the model accepts. Everything the model does not accept goes to human moderators. The business has two opposing goals: a good database (the smallest possible share of "not good enough" resumes) and a low cost of moderation (accept as many resumes as possible automatically, and the cheaper the development, the better).
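To make the two axes concrete, here is a minimal sketch of how precision and recall could be computed for a single threshold. The function name `precision_recall` and the toy scores and labels are invented for illustration; this is not our production code or data.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall of auto-acceptance at a given score threshold.

    scores: model outputs in [0, 1]
    labels: 1 = acceptable for publication, 0 = needs more work
    """
    # Ground-truth labels of the resumes the model would accept automatically.
    accepted = [y for s, y in zip(scores, labels) if s >= threshold]
    true_accepts = sum(accepted)
    total_acceptable = sum(labels)
    # Convention (an assumption): precision is 1.0 when nothing is accepted.
    precision = true_accepts / len(accepted) if accepted else 1.0
    recall = true_accepts / total_acceptable if total_acceptable else 0.0
    return precision, recall


# Toy data, invented for illustration only.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 0, 1, 1, 0, 0]

for t in (0.85, 0.65, 0.35):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f}")
```

Sweeping the threshold over all score values gives the whole curve; lowering it usually buys recall at the price of precision, which is exactly the trade-off discussed here.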

Did the model turn out good or bad? Does it need to be improved? And if not, which threshold, that is, which point on the curve, should we choose: what trade-off between precision and recall suits the business? At first I answered this question with rather vague statements in the spirit of "we want 98% precision, and 40% recall would seem quite good". There was, of course, a rationale behind these "product requirements", but it was too flimsy to put in print. And the first version of the model was released in this form.
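Once a precision target is fixed, choosing the threshold becomes mechanical: scan the candidate thresholds and keep the one with the highest recall that still meets the target. Below is a rough sketch of that idea; `best_threshold` and the toy data are hypothetical, and the code assumes at least one resume in the data is acceptable.

```python
def best_threshold(scores, labels, min_precision):
    """Return (threshold, precision, recall) with the highest recall among
    thresholds whose precision is at least min_precision, or None."""
    total_acceptable = sum(labels)  # assumed to be > 0
    best = None
    # Every distinct score is a candidate threshold. Precision is not
    # monotonic in the threshold, so all candidates are checked.
    for t in sorted(set(scores), reverse=True):
        accepted = [y for s, y in zip(scores, labels) if s >= t]
        precision = sum(accepted) / len(accepted)
        recall = sum(accepted) / total_acceptable
        if precision >= min_precision and (best is None or recall > best[2]):
            best = (t, precision, recall)
    return best


# Toy data, invented for illustration only.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 0, 1, 1, 0, 0]

print(best_threshold(scores, labels, 0.98))  # strict precision target
print(best_threshold(scores, labels, 0.80))  # relaxed precision target
```

On this toy data the relaxed target admits a much lower threshold and therefore a higher recall. The hard part is not this search but justifying the target number itself.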

Experiment with assessors

Everyone was happy, and then came the next question: let the automatic system accept even more resumes! Obviously, this can be achieved in two ways: improve the model, or, for example, choose a different point on the curve above (give up some precision for the sake of recall). So what did we do to formulate the product requirements more deliberately?

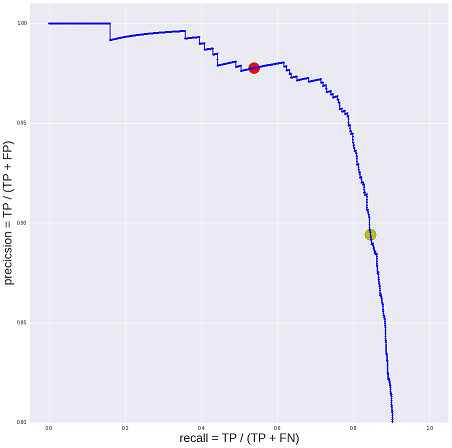

We assumed that people (the moderators) can also be wrong, and ran an experiment. Four randomly chosen moderators were asked to label the same sample of resumes on a test stand, independently of each other. The working process was reproduced completely (the experiment was no different from an ordinary working day). There was one trick in how the sample was built: for each moderator we took N random resumes that he or she had already processed, so the final sample size was 4N.
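The sampling trick can be sketched in a few lines. Everything here is hypothetical: the `build_sample` helper, the moderator names, and the resume ids are made up, and the sketch assumes that no resume was originally processed by more than one of the four moderators.

```python
import random


def build_sample(processed_by, n, seed=0):
    """Draw n random already-processed resumes per moderator and pool them.

    processed_by: dict mapping moderator -> list of resume ids he or she
    has already handled. Returns a shuffled list of
    (resume_id, original_moderator) pairs of size n * len(processed_by).
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    sample = []
    for moderator, resume_ids in processed_by.items():
        for resume_id in rng.sample(resume_ids, n):
            sample.append((resume_id, moderator))
    rng.shuffle(sample)  # hide who handled which resume originally
    return sample


# Made-up ids for four moderators.
processed_by = {
    "mod_a": [101, 102, 103, 104],
    "mod_b": [201, 202, 203, 204],
    "mod_c": [301, 302, 303, 304],
    "mod_d": [401, 402, 403, 404],
}
sample = build_sample(processed_by, n=2)
print(len(sample))  # 4 moderators * N = 8 resumes
```

Each of the four moderators then labels the whole pooled sample, so every resume has one original decision and fresh decisions from all four moderators.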

So for each resume we collected four independent moderator decisions (0 or 1), the model's decision (a real number from 0 to 1), and the original decision of one of those four moderators (again 0 or 1). The first thing we can do is calculate the average "self-consistency" of the moderators' decisions (it came out at about 90%). Next, we can estimate the "quality" of each resume ("publish" or "do not publish") more reliably, for example by majority vote. Our setup is as follows: we have the "original moderator decision" and the "original robot decision", plus three independent moderator decisions. With three votes there is always a majority (with four votes, a 2:2 tie would have to be broken at random). As a result, we can estimate the precision of the "average moderator", and it again comes out at about 90%. We put this point on our curve and see that the model provides the same expected precision at a recall of more than 80% (as a result, we began to process twice as many resumes automatically at minimal cost).
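The calculation can be sketched as follows. This is one possible reading of the procedure, not our exact code: consistency is taken here to be the average pairwise agreement between moderators, the reference label is the majority vote of the three moderators other than the original one, and all helper names and records are invented for illustration.

```python
from itertools import combinations


def pairwise_agreement(votes):
    """Share of moderator pairs that agree, pooled over all resumes.

    votes: one list of 0/1 decisions per resume.
    """
    agree = total = 0
    for row in votes:
        for a, b in combinations(row, 2):
            agree += a == b
            total += 1
    return agree / total


def majority(votes):
    """Majority decision of an odd number of 0/1 votes."""
    return int(sum(votes) * 2 > len(votes))


def precision_vs_reference(decisions, reference):
    """Share of accepted items (decision == 1) that the reference also accepts."""
    accepted = [ref for d, ref in zip(decisions, reference) if d == 1]
    return sum(accepted) / len(accepted)


# Invented records: (original moderator decision, votes of the three others).
records = [
    (1, [1, 1, 1]),
    (1, [1, 1, 0]),
    (1, [0, 0, 1]),
    (0, [0, 0, 0]),
    (1, [1, 1, 1]),
    (0, [1, 0, 0]),
]
reference = [majority(v) for _, v in records]
initial = [d for d, _ in records]

print(pairwise_agreement([v for _, v in records]))
print(precision_vs_reference(initial, reference))
```

The same `precision_vs_reference` function can be applied to the model's thresholded decisions, which puts the model and the "average moderator" on one scale.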

Conclusions and spoiler

In fact, while we were thinking about how to set up quality assurance for the automatic moderation system, we ran into several pitfalls, which I will try to describe next time. In the meantime, I hope this fairly simple example illustrates how useful assessor labeling is and how easy such experiments are to set up, even if you do not have Yandex.Toloka at hand, and also how unexpected the results can be. In this particular case, we found out that 90% precision is enough to solve the business problem. In other words, before improving the model, it is worth spending some time studying the real business processes.

Finally, I would like to thank Roman Poborchy (p0b0rchy) for advising our team during this work.

What to read:

- The question of training distribution distribution is an example of a similar problem statement.

- Modeling Amazon Mechanical Turk: about Amazon's system for collecting user ratings

- Maximum Likelihood Estimation of Observer Error-Rates Using the EM Algorithm: an iterative algorithm for estimating the true label (named after its authors: the Dawid-Skene algorithm)

Source: https://habr.com/ru/post/309792/
