Your Data is so big: An introduction to Spark in Java

Apache Spark is a general-purpose tool for processing big data. With it you can load data from various DBMSs into Hadoop, stream data from any source in real time and run complex processing on the data in parallel. You do all of this with a functional approach rather than with batch jobs, scripts and SQL queries.

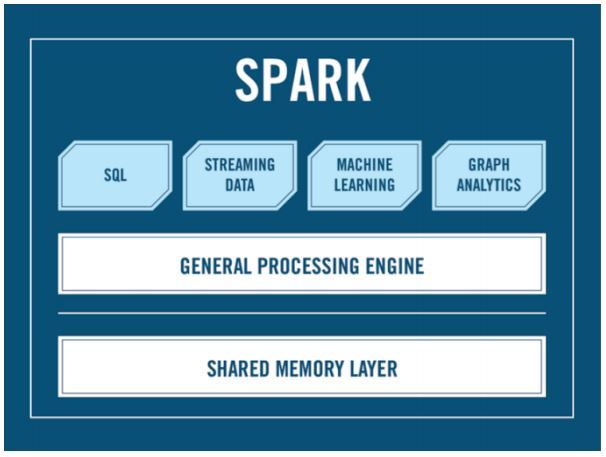

There are a few myths about Spark:

- Spark needs Hadoop: it doesn't!

- Spark needs Scala: it doesn't!

Why? Read on.

Surely you've heard of Spark, and you probably even know what it is and what it's good for. But unless you work with this framework professionally, you likely carry a few common stereotypes in your head, and they may keep you from ever getting to know it better.


Myth 1. Spark does not work without Hadoop

What is Hadoop? Roughly speaking, it is a distributed file system: a data store with a set of APIs for processing that very data. And, oddly enough, it would be more accurate to say that Hadoop needs Spark, not the other way around!

The point is that the standard Hadoop toolkit cannot process the data it stores at high speed, while Spark can. So does Spark actually need Hadoop? Let's take a look at what Spark is made of:

As you can see, Hadoop is not part of it. There is an API, SQL, streaming and much more, and Hadoop is optional.

But what about the cluster manager, you ask? Who will run your Spark on a cluster, Alexander Sergeevich Pushkin? This is exactly where the myth comes from. YARN, Hadoop's resource manager, is most often used to distribute Spark jobs across a cluster. However, there are alternatives, such as Apache Mesos, which you can use if for some reason you don't like Hadoop.
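To make this concrete, here is a minimal sketch of a Spark job that runs with no Hadoop cluster, no HDFS and no YARN. It assumes Spark's Java API (the spark-core artifact) is on the classpath. The master URL `local[*]` runs the driver and executors inside a single JVM. The class name `NoHadoopExample` and its helper methods are hypothetical names chosen for illustration. The spark-core library still depends on Hadoop client classes for its file-system layer, but nothing Hadoop-related has to be installed or running.

```java
import java.util.Arrays;
import java.util.List;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

// Hypothetical example class: Spark in local mode, no Hadoop cluster involved.
public class NoHadoopExample {

    // Creates a context with master "local[*]": the driver and the executors
    // run inside this JVM and use all available cores. No YARN, no HDFS.
    static JavaSparkContext localContext(String appName) {
        SparkConf conf = new SparkConf()
                .setAppName(appName)
                .setMaster("local[*]");
        return new JavaSparkContext(conf);
    }

    // Counts the lines longer than minLength in an in-memory dataset.
    // sc.textFile("file:///...") would read a plain local file the same way.
    static long countLongLines(JavaSparkContext sc, List<String> lines, int minLength) {
        JavaRDD<String> rdd = sc.parallelize(lines);
        return rdd.filter(line -> line.length() > minLength).count();
    }

    public static void main(String[] args) {
        JavaSparkContext sc = localContext("no-hadoop");
        try {
            List<String> lines = Arrays.asList("spark", "runs", "without hadoop");
            // "spark" (5) and "without hadoop" (14) are longer than 4 characters
            System.out.println(countLongLines(sc, lines, 4)); // prints 2
        } finally {
            sc.stop();
        }
    }
}
```

Moving the same code to a real cluster is mostly a matter of the master URL:

- `spark://host:7077` for Spark's own standalone cluster manager.
- `mesos://host:5050` for Mesos.
- `yarn` for YARN.

In practice the master is usually passed with `spark-submit --master` rather than hardcoded.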

Myth 2. Spark is written in Scala, so you have to write Spark code in Scala too

You can work with Spark in both Java and Scala, and many people consider Scala the better choice for several reasons:

- Scala is cool!

- More concise and convenient syntax.

- The Spark API is designed for Scala, and new features appear there before they reach the Java API.

Let's take these in order, starting with the first claim: that Scala is cool and fashionable. The counterargument is simple and fits in one line: you might be surprised, but most Java developers... know Java! And that is worth a lot. A team of seniors who move to Scala turns into a team of StackOverflow-driven juniors!

Syntax is a separate story. If you read any Java vs. Scala holy war, you will find examples roughly like these (the code simply sums the lengths of the lines in a file):

Scala

```scala
val lines = sc.textFile("data.txt")
val lineLengths = lines.map(_.length)
val totalLength = lineLengths.reduce(_ + _)
```

Java

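```java
// sc is an existing JavaSparkContext; Function and Function2 come from
// org.apache.spark.api.java.function
JavaRDD<String> lines = sc.textFile("data.txt");
// Map each line to its length with an anonymous Function<String, Integer>
JavaRDD<Integer> lineLengths = lines.map(new Function<String, Integer>() {
    @Override
    public Integer call(String line) throws Exception {
        return line.length();
    }
});
// Sum all lengths with an anonymous Function2<Integer, Integer, Integer>
Integer totalLength = lineLengths.reduce(new Function2<Integer, Integer, Integer>() {
    @Override
    public Integer call(Integer a, Integer b) throws Exception {
        return a + b;
    }
});
```

A year ago, even the examples in the Spark documentation looked exactly like this. Now let's look at the same code in Java 8: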

Java 8

```java
// sc is an existing JavaSparkContext
JavaRDD<String> lines = sc.textFile("data.txt");
JavaRDD<Integer> lineLengths = lines.map(String::length);
int totalLength = lineLengths.reduce((a, b) -> a + b);
```

Looks quite good, right? Also keep in mind that Java is a familiar world for us: Spring, design patterns, well-known concepts and more. A Java developer moving to Scala has to face a completely different world. It is worth considering whether you or your customer are ready to take that risk.
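The snippets above assume an existing context named `sc`. Below is a sketch of the Java 8 version wrapped into a self-contained program. It assumes spark-core on the classpath and local mode, and `LineLengths` is a hypothetical class name. The pipeline itself is unchanged: `map(String::length)` followed by `reduce((a, b) -> a + b)`.

```java
import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

// Hypothetical class wrapping the Java 8 line-length example.
public class LineLengths {

    // Maps each line to its length, then sums the lengths with reduce.
    static int totalLength(JavaRDD<String> lines) {
        JavaRDD<Integer> lineLengths = lines.map(String::length);
        return lineLengths.reduce((a, b) -> a + b);
    }

    public static void main(String[] args) {
        SparkConf conf = new SparkConf()
                .setAppName("line-lengths")
                .setMaster("local[*]");
        JavaSparkContext sc = new JavaSparkContext(conf);
        try {
            // With a file argument, read it with sc.textFile as in the article;
            // otherwise fall back to a small in-memory sample.
            JavaRDD<String> lines = args.length > 0
                    ? sc.textFile(args[0])
                    : sc.parallelize(Arrays.asList("Your", "Data", "is so big"));
            System.out.println(totalLength(lines));
        } finally {
            sc.stop();
        }
    }
}
```

Run without arguments, the program prints 17 (4 + 4 + 9). Note that `reduce` throws an exception on an empty RDD. If empty input is possible, `fold(0, (a, b) -> a + b)` returns 0 instead.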

All examples are taken from Evgeny Borisov's (EvgenyBorisov) talk about Spark at JPoint 2016, which, by the way, was rated the best talk of the conference. Want more: RDDs, testing, examples and live coding? Watch the video:

More Spark for the BigData God

And if, after watching Evgeny's talk, you experienced an existential catharsis and realized you need to get to know Spark better, you can do it in person with Evgeny in a month:

On October 12-13, St. Petersburg will host a large two-day training course, “Welcome to Spark”.

We will discuss the problems that developers new to Spark face at first, and how to solve them. We will go through the syntax and all sorts of tricks. Most importantly, we will look at how to write Spark in Java using frameworks, tools and concepts you already know, such as Inversion of Control, design patterns, the Spring Framework, Maven/Gradle and JUnit. All of them can help make your Spark application more elegant, readable and familiar.

There will be lots of exercises and live coding, and you will leave the training with enough knowledge to start working with Spark on your own in the familiar Java world.

There is no need to lay out the detailed program here; anyone interested can find it on the training page.

EUGENE BORISOV

Naya Technologies

Evgeny Borisov has been developing in Java since 2001 and has taken part in a large number of enterprise projects. He went from a simple programmer to an architect, then grew tired of the routine and became a freelancer. Today he writes and teaches courses, seminars and master classes for a wide range of audiences:

- live J2EE courses for Israeli army officers;
- Spring via WebEx for Romanians;
- Hibernate via GoToMeeting for Canadians;
- Troubleshooting and Design Patterns for Ukrainians.

P.S. Taking this opportunity, I wish everyone a happy Programmer's Day!

Source: https://habr.com/ru/post/309776/
