Sites of the future: automatic assembly based on AI, and more

Our technical director* believes that artificial intelligence will be created roughly by the middle of this century, and that within about fifty years we are quite likely to reach something close to a singularity, with virtualization, AI, and all that.

But for a bright tomorrow to come, today we need to solve practical problems associated with it. So we took up the technology that will make sites for people. No, not for the specialists who create complex and high-loaded systems. And for the guys with a “business card site for 3000” - because the AI, at least, will not be lost for a month after the prepayment.

')

The beauty is this: launching a site builder with a neural network and an algorithmic design ** is not a matter of fifty, but of just a couple of years. This is the future that can be felt today.

Since we have an applied task, in the first two chapters we will consider who will apply it in practice and how, and then proceed to the technical aspects of its solution.

A year ago, we launched a simple site builder uKit. Its users are often those who are not very clear “how”, and sometimes there is no time to collect a page for themselves even in a simple system. They repair cars, repair apartments, make haircuts, teach children taekwondo, feed people with borscht and pastries, and so on.

They want customers. In search of clients offline business came to the Internet. They do not need a website, social network, advertising service or any other platform per se. They need to be found and addressed to them.

They want simpler. So fast, beautiful and work. In fact, people still want the magic button: “context - tune in”, “site - create” and so on.

The world is very different. It is clear why the algorithmic design does not quite fit Mail.ru Group *** - this is the world of “advanced users”.

It is clear, than the algorithmic design is beneficial for an ordinary Internet user who has opened a conditional bakery. He often does not fully know what he wants from the site. More precisely, the idea is in my head. But transferring a mental image to a web page is more difficult. Of course, this problem is partially solved by freelancers.

But the same designers do not lose popularity, not because people want to do everything themselves (this is rare) and without programming skills (warmer, but not the main thing). Constructor choose to save. And do not give your money and nerves to the same freelancer.

Today everything has come together. We have the technology. Customers have a demand. And, finally, there is an array of data on which it is possible to train the technology to bring the site for the client to the state of “only correct details”.

Over the past couple of years, at least two “world's first AI systems have been announced, collecting websites for you based on knowledge of your profession (“ I am a cook ”) and preferences in color schemes (“ red-blue is better than black and white ”).

Two years ago, a video from The Grid came out - and so far, in fact, we have seen nothing except the video:

This spring, a more well-known site builder, Wix, opened his designs:

The technology from Wix can be tested - but for now it is hardly different from our converter of Facebook pages to sites where the algorithm is simply calibrated by a council of developers.

It is rather a smart technology: smart wizards. Although they are created as expert systems, they do not accumulate their own experience and operate in a fully deterministic and transparent way for a programmer. For comparison and terminological reconciliation, this is what we mean by AI in an online service:

The same engine on which Prisma and others are assembled

AI begins when there is a neural network, or another mechanism with learning and synthesis. From what is shown by both teams, it is not yet obvious where and how neural networks are used or a similar technology, which could be called "AI".

Surely when reading or watching a video, you remembered this picture:

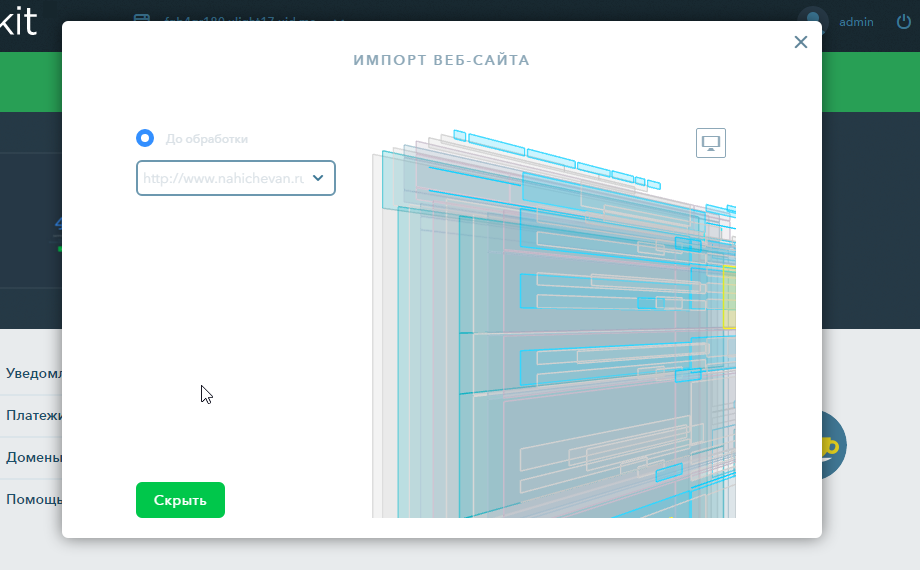

But. Not less interesting, but practically even more useful task will be not “a site from scratch”, but importing information from an already existing, but outdated site, to a new, modern template. This is what we do in the first place.

The reasons for the user to turn to the service “make a new site from the old one” may be a lot. For example, the old site is made on an outdated platform or without it at all, the owner is dissatisfied with the quality of the layout, design, conversion, complex CMS or lack of adaptability.

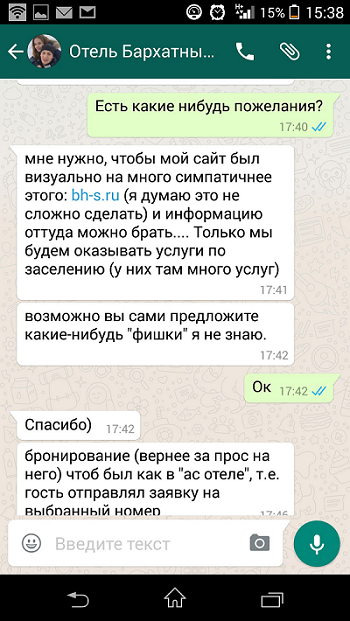

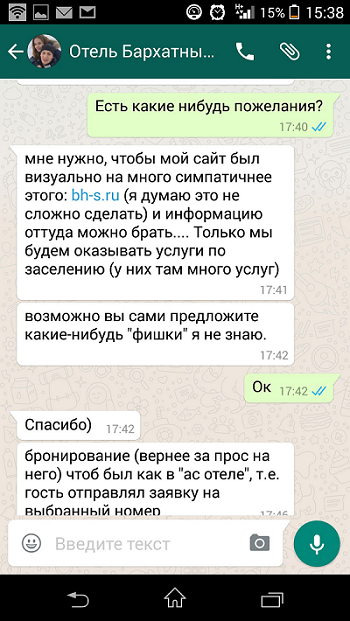

The motivation “to be no worse than that of a competitor” and “what people think” is a very strong engine of progress for a small entrepreneur. Here is an example. The man lived, lived with the site of his canteens:

And then, when they wrote about him in the media, he urgently ordered a rework in our “ Uber for Sites ” service, where that same freelancer collects the site in 3K and 10 days, and we guarantee the time limit and the result.

Now we want to achieve a similar transformation forces of the machine.

After all, when a builder, auto mechanic or owner of a public catering point thinks about a new site, it usually pops up in his head: “expensive”, “how and on what?”, “I can't”, “I don't know how”, “I don't trust”, “No time”, “again all these circles of hell! for what?".

At the same time, he most likely (especially if the business is successful) has accumulated a sufficient amount of information, on the basis of which we could offer one or several versions of the site. And in the future - and other content.

Technically, we need to transfer information from any possible representation to the format that most closely matches the structural elements (widgets) of our designer. Simply put, if we see an image, we design it as a “picture” widget, if we see a large block of text, we use a “text” widget, and so on.

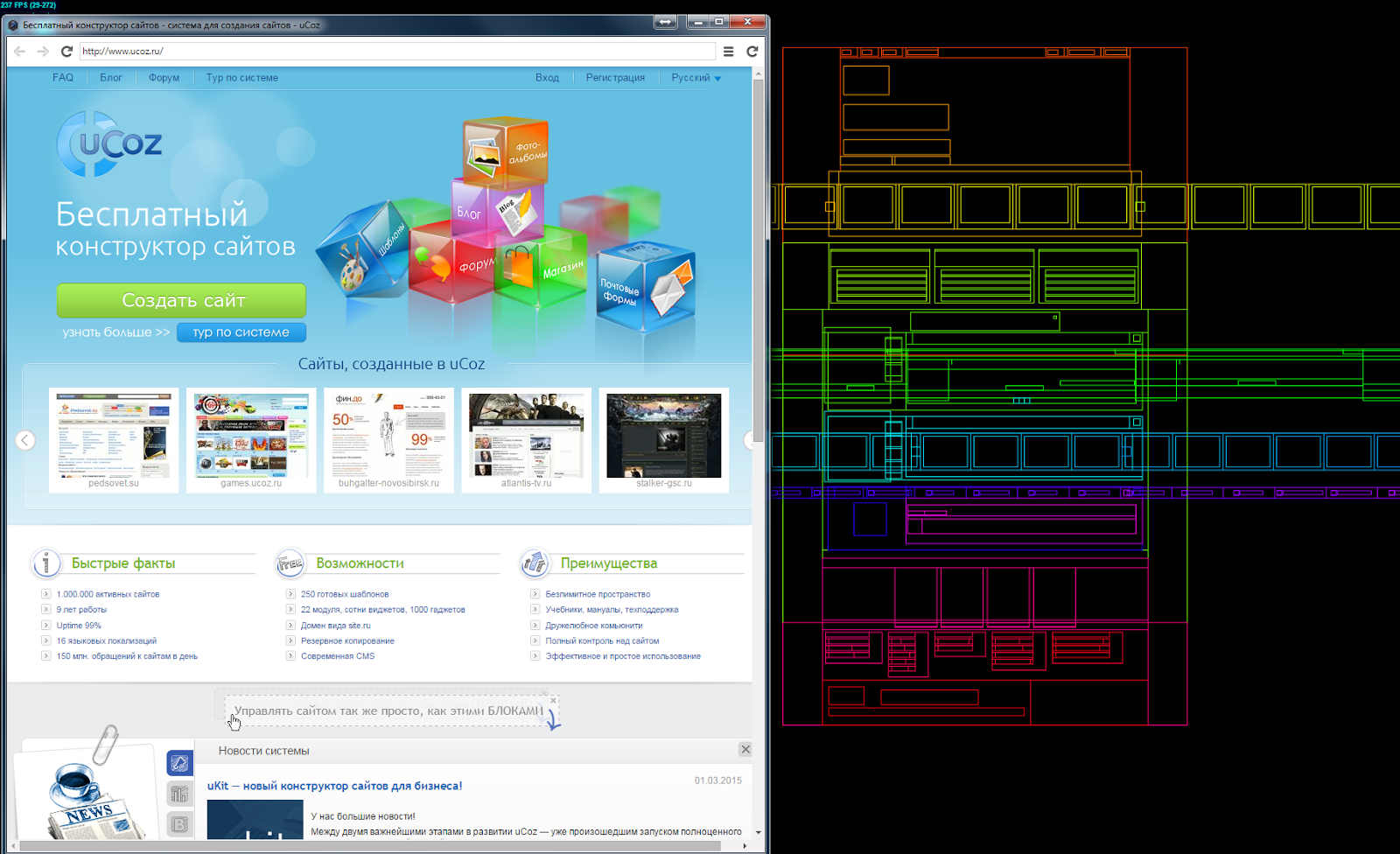

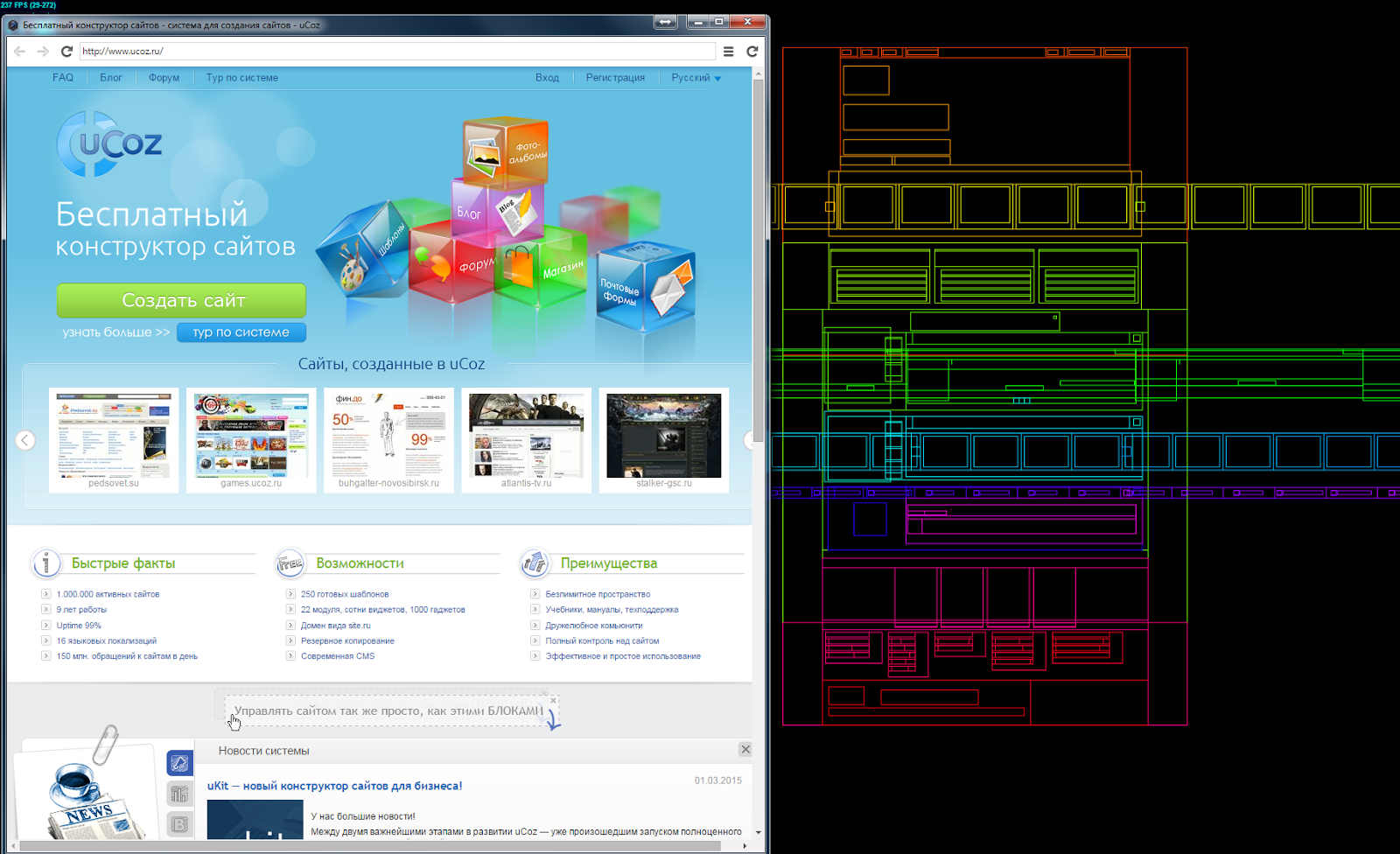

Roughly speaking, any site can be represented as a list of rectangles nested and partially intersecting in space, containing text, graphic and, in some cases, specialized information (maps, video, etc.) information.

An example of an experiment with a web archive, the details of which you will find in the final part of the post

Next - you need to look at the site at the same time as if through the user's eyes (browser), and understand its structure ( DOM tree), taking into account the executable code. That is, to separate aspects: “how it is done” and “how it looks”. And most importantly - "what kind of meaning it carries."

What is the result. We can highlight unique content for each page and re-link it to meet modern design requirements. We can recognize repeatable elements - and create a menu. Choose a template of the desired subject and a combination of colors and fonts. Put on a prominent place contact information (work schedule, address of the organization), make the phone a clickable, etc.

Here are a few problems we encountered after writing the first version of the service. I think, again, we are not alone in the universe.

Anyone who developed business cards in the 2000s, probably at least once tried to make a site with a side menu - to place more points and dropouts. You can also find sites where access to certain pages is only from the side panels.

Today - including, for the sake of mobile users - we make only a horizontal or collapsible (“hamburger”) menu at the top. We faced the question of how not to lose information in the case when the old site has a sidebar.

Decision. In simple cases, we can offer a different solution for the number of pages and widget elements: for example, instead of going through the news widget from the side, like on the old site, make a separate menu item “News”.

In more complex situations (well, ideally) - we need to carry out a “smart” re-arrangement of pages and individual content elements into a more presentable structure. To do this, it is necessary to at least apply the algorithms that make up the core of the heuristic system to rearrange the content. The same step will allow us to preserve the reference value.

In the system, everything should be subject to the rule - you need to try to make the result no worse than the original, even in individual details. Otherwise, it will cause rejection of the user, who decided to experiment with the system. This applies not only to the visual component.

When we made our social buttons, we learned how to count the number of reposts in the entire history of the site - so that by changing one button to another, the site owner was not afraid to reset the repost counters. Now we had to do the same with SEO-settings.

The solution is divided into two parts. In uKit there is a search promotion module for each page where we can transfer metadata: title, keywords, and so on.

The second part is a question about the internal optimization of the site. In parallel with the recognition, we import the page relink graph with each other. The system contains a situation where some, but not all, pages of the imported site contain general information - and based on the relevance of these blocks, it can judge the relationship of the pages.

Let's talk about the stages we are going to begin.

We will create a learning model and a hybrid assessment system, as well as create a database of sites for training. These will be the old pages from our uCoz and People, as well as new ones from uKit. And one more trick - a selection of Web.Archive, where you can see site changes by year. On this good, and will learn neural network.

First, the teacher will be a man. Then the neural network tries to express “what is a good website,” and we will allow the system to experiment with itself - including, at some stage, letting it do A / B tests of redesigns, showing them to real people.

Sites often contain links to social networks, video hosting and so on. And this gives us an additional field for experimentation - if the content on the old site is small or it is bad, you can try to get the missing information from external sources.

Modern data mining capabilities, theoretically, make it possible to collect information about an object from several sources and intelligently group it within a site. That is, we can supply the service with a system of multi-import of content - from Instagram, folders on the hard disk of the computer, Youtube channel, and so on.

One of the existing calibrated proto-examples

Systems that collect a site from several sources in social media also exist in their infancy: they are partially described here . However, the use of AI in them is no question.

We will be happy to answer your questions and discuss about sites that collect themselves.

======

* CTO uKit AI is pavel_kudinov . In a profile on Habré it is possible to look at its side-projects connected with neural networks. Ask questions in the comments. ( up )

** Recommended links and videos on the topic of algorithmic design ( top ):

- uKit AI blog about generative design and more

- Article “Algorithmic Design Tools”

- Short video demonstration: Rene 'system selects buttons for the site

*** Publication on algorithmic design in Mail.ru Group harablog ( top )

But for that bright tomorrow to arrive, we need to solve the practical problems that lead to it today. So we took on a technology that will build websites in place of people. No, not in place of the specialists who create complex, high-load systems, but in place of the guys offering a “business-card site for 3000”: an AI, at the very least, won't disappear for a month after taking the prepayment.

The beauty of it is that launching a site builder with a neural network and algorithmic design** is a matter not of fifty years but of just a couple. This is a future you can touch today.

Since our task is an applied one, the first two sections look at who will use this in practice and how; after that we move on to the technical side of the solution.

People do not want a site, they want profit

A year ago we launched uKit, a simple site builder. Its users are often people who are not quite sure “how”, and who sometimes don't have time to put together a page even in a simple system. They repair cars and apartments, cut hair, teach children taekwondo, feed people borscht and pastries, and so on.

They want customers. Offline businesses came to the Internet looking for clients. They do not need a website, a social network, an advertising service, or any other platform per se. They need to be found and contacted.

They want it simple: fast, good-looking, and working. In effect, people still want a magic button: “contextual ads: set up”, “site: create”, and so on.

But does the world need a magic “make it beautiful” button?

The world is very diverse. It is clear why algorithmic design is not quite a fit for Mail.ru Group***: theirs is a world of “advanced users”.

It is equally clear how algorithmic design benefits an ordinary Internet user who has opened, say, a bakery. He often does not fully know what he wants from a site. More precisely, he has an idea in his head, but turning a mental image into a web page is harder. Freelancers partly solve this problem, of course.

Yet site builders keep their popularity not because people want to do everything themselves (that is rare), nor because they can do it without programming skills (closer, but not the main reason). People choose a builder to save money, and to avoid handing their money and nerves over to that same freelancer.

Today everything has come together. We have the technology. Customers have the demand. And, finally, there is a body of data on which the technology can be trained to bring a client's site to the point where only the details need correcting.

We are not alone in the universe: the current state of such developments

Over the past couple of years, at least two “world's first” AI systems have been announced that build websites for you based on your profession (“I am a cook”) and your color-scheme preferences (“red and blue are better than black and white”).

Two years ago The Grid released a video, and so far we have in fact seen nothing beyond that video.

This spring Wix, a better-known site builder, unveiled its own developments.

Wix's technology can be tried out, but for now it is hardly different from our Facebook-page-to-site converter, where the algorithm is simply calibrated by a panel of developers.

This is smart technology rather than AI: clever wizards. Although they are built as expert systems, they do not accumulate experience of their own, and they operate in a fully deterministic way that is transparent to a programmer. For comparison, and to align our terminology, here is what we mean by AI in an online service:

The same kind of engine that Prisma and other apps are built on

AI begins where there is a neural network or another mechanism capable of learning and synthesis. From what both teams have shown, it is not yet clear where and how they use neural networks, or any similar technology that could be called “AI”.

How it works now: calibrated algorithms

Surely when reading or watching a video, you remembered this picture:

Approximate translation (just in case)

Machine, please make a website. Adaptive. With big pictures. Use my favorite fonts. With a cool menu that makes “vzhzhzhzh.” And so the site loaded quickly.

Thank you, your man.

PS And so without bugs :)

Thank you, your man.

PS And so without bugs :)

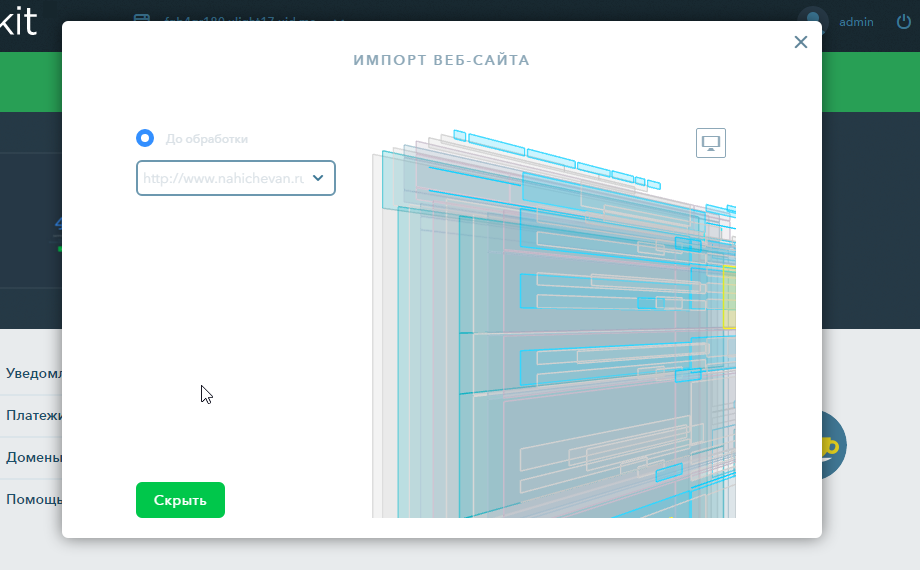

But an equally interesting and, in practice, even more useful task is not “a site from scratch” but importing information from an existing, outdated site into a new, modern template. This is what we are tackling first.

The reasons for the user to turn to the service “make a new site from the old one” may be a lot. For example, the old site is made on an outdated platform or without it at all, the owner is dissatisfied with the quality of the layout, design, conversion, complex CMS or lack of adaptability.

The motivations “to be no worse than a competitor” and “what will people think” are very strong engines of progress for a small business owner. Here is an example. For years, a man got along fine with the site for his canteens.

Then, when the media wrote about him, he urgently ordered a redesign through our “Uber for sites” service, where that same freelancer builds a site for 3K in 10 days, and we guarantee the deadline and the result.

Now we want to achieve a similar transformation with the machine doing the work.

After all, when a builder, a car mechanic, or the owner of a café thinks about a new site, what usually pops into his head is: “expensive”, “how, and on what platform?”, “I can't”, “I don't know how”, “I don't trust it”, “no time”, “all these circles of hell again! What for?”.

At the same time, he has most likely (especially if the business is doing well) accumulated enough information for us to offer one or several versions of a site based on it, and, in the future, other content as well.

Technically, we need to transfer information from any possible representation to the format that most closely matches the structural elements (widgets) of our designer. Simply put, if we see an image, we design it as a “picture” widget, if we see a large block of text, we use a “text” widget, and so on.
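As a rough illustration of this rule, here is a minimal Python sketch. The `Node` class, the widget names, and the length threshold are hypothetical stand-ins chosen for the example, not uKit's real data model:

```python
from dataclasses import dataclass, field

# Hypothetical threshold: text blocks at least this long become a "text" widget.
LONG_TEXT_CHARS = 200


@dataclass
class Node:
    """Simplified stand-in for a source page element (illustrative only)."""
    tag: str
    text: str = ""
    attrs: dict = field(default_factory=dict)


def widget_type(node: Node) -> str:
    """Pick the builder widget that most closely matches one source element."""
    if node.tag == "img":
        return "picture"
    if node.tag == "iframe":
        src = node.attrs.get("src", "")
        if "youtube.com" in src or "youtu.be" in src:
            return "video"
        if "/maps" in src or "/map-widget" in src:
            return "map"
    if node.tag in {"h1", "h2", "h3"}:
        return "heading"
    if len(node.text.strip()) >= LONG_TEXT_CHARS:
        return "text"
    return "unknown"  # left for a later, smarter pass
```

A real recognizer would also weigh position, size, and styling; the `"unknown"` result marks elements that such simple rules cannot place.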

Roughly speaking, any site can be represented as a list of rectangles, nested in and partially overlapping one another, that contain text, graphics and, in some cases, specialized information (maps, video, etc.).

An example of an experiment with the Web Archive; details are in the final part of the post
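The rectangle model can be sketched as a small data structure. This is a minimal example under our own assumptions (the `Rect` class and `build_tree` helper are hypothetical): it recovers which box is nested in which, and detects partial overlaps that a strict tree cannot express.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """An axis-aligned box on the rendered page, plus what it contains."""
    x: float
    y: float
    w: float
    h: float
    kind: str = "block"  # e.g. "text", "image", "map", "video"

    @property
    def right(self) -> float:
        return self.x + self.w

    @property
    def bottom(self) -> float:
        return self.y + self.h

    @property
    def area(self) -> float:
        return self.w * self.h


def contains(outer: Rect, inner: Rect) -> bool:
    """True if inner lies entirely within outer (edges may touch)."""
    return (outer.x <= inner.x and outer.y <= inner.y
            and inner.right <= outer.right and inner.bottom <= outer.bottom)


def intersects(a: Rect, b: Rect) -> bool:
    """True if the two boxes overlap with a non-zero area."""
    return a.x < b.right and b.x < a.right and a.y < b.bottom and b.y < a.bottom


def build_tree(rects: list[Rect]) -> dict[int, int | None]:
    """Map each rectangle index to its tightest enclosing rectangle (or None)."""
    parents: dict[int, int | None] = {}
    for i, r in enumerate(rects):
        best = None
        for j, o in enumerate(rects):
            if i != j and o.area > r.area and contains(o, r):
                if best is None or o.area < rects[best].area:
                    best = j
        parents[i] = best
    return parents
```

In practice the coordinates would come from a browser's layout engine; partial overlaps found by `intersects` are exactly the cases a plain nesting tree misses.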

Next, we need to look at the site both through the user's eyes (the browser) and at its structure (the DOM tree), taking the executed code into account. In other words, we need to separate the aspects of “how it is built” and “how it looks”, and, most importantly, “what meaning it carries”.
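One way to combine the two views is to prune the DOM tree using the geometry a headless browser reports after scripts have run (for instance, via the DOM's `getBoundingClientRect()`). The sketch below assumes that layout data has already been collected into a dictionary keyed by a hypothetical node id; it keeps only what a visitor actually sees:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DomNode:
    """How the page is built: one element of the DOM tree."""
    node_id: str
    tag: str
    children: list[DomNode] = field(default_factory=list)


@dataclass
class Box:
    """How the page looks: rendered geometry reported after scripts ran (assumed shape)."""
    width: float
    height: float
    visible: bool = True


def visible_tree(node: DomNode, layout: dict[str, Box]) -> DomNode | None:
    """Copy the DOM tree, keeping only elements a visitor can actually see."""
    box = layout.get(node.node_id)
    if box is None or not box.visible or box.width == 0 or box.height == 0:
        return None
    kept = []
    for child in node.children:
        pruned = visible_tree(child, layout)
        if pruned is not None:
            kept.append(pruned)
    return DomNode(node.node_id, node.tag, kept)
```

Hidden pop-ups and zero-size tracking pixels drop out, while elements created by scripts survive as long as the browser rendered them.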

What do we get as a result? We can extract the unique content of each page and re-link it to meet modern design requirements. We can recognize repeated elements and build a menu from them. We can choose a template on the right topic and a combination of colors and fonts, put contact information (opening hours, the organization's address) in a prominent place, make the phone number clickable, and so on.
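Recognizing repeated elements can start with something as simple as counting which links recur across pages. A minimal sketch, assuming each page has already been reduced to a list of `(anchor text, href)` pairs; the function name and the 80% threshold are illustrative choices:

```python
from collections import Counter

Link = tuple[str, str]  # (anchor text, href)


def detect_menu(pages: dict[str, list[Link]], min_share: float = 0.8) -> list[Link]:
    """Links repeated on at least `min_share` of all pages are treated as site navigation."""
    counts: Counter = Counter()
    for links in pages.values():
        counts.update(set(links))  # count each link at most once per page
    threshold = min_share * len(pages)
    menu: list[Link] = []
    for links in pages.values():  # keep the order in which links first appear
        for link in links:
            if counts[link] >= threshold and link not in menu:
                menu.append(link)
    return menu
```

Links that appear on only a few pages stay in the page content, where they can later be re-linked.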

It sounds clear, but it is not so simple

Here are a few problems we ran into after writing the first version of the service. I suspect that here, too, we are not alone in the universe.

Left-hand menus and sidebars

Anyone who built business-card sites in the 2000s has probably tried at least once to make a site with a side menu, to fit in more items and drop-downs. You can also find sites where certain pages are reachable only from the sidebars.

Today, partly for the sake of mobile users, we make only a horizontal or collapsible (“hamburger”) menu at the top. So we faced the question of how not to lose information when the old site has a sidebar.

Solution. In simple cases we can propose a different arrangement of pages and widgets: for example, instead of reaching the news through a sidebar widget, as on the old site, we make a separate “News” menu item.
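The simple case can be expressed as a merge rule. In this hypothetical sketch, sidebar links that are missing from the top menu become top-level items while there is room; the rest go into an overflow submenu. The `max_items` limit and the “More” label are assumptions for the example:

```python
def merge_sidebar(top_menu: list[tuple[str, str]],
                  sidebar: list[tuple[str, str]],
                  max_items: int = 6) -> list[dict]:
    """Fold sidebar-only links into a single top menu without losing any page."""
    seen = {href for _, href in top_menu}
    extra = []
    for title, href in sidebar:
        if href not in seen:  # skip links the top menu already has
            extra.append((title, href))
            seen.add(href)
    if len(top_menu) + len(extra) <= max_items:
        promoted, overflow = extra, []
    else:
        room = max(0, max_items - len(top_menu) - 1)  # keep one slot for "More"
        promoted, overflow = extra[:room], extra[room:]
    menu = [{"title": t, "href": h} for t, h in top_menu + promoted]
    if overflow:
        # Hypothetical overflow submenu so that every old page stays reachable.
        menu.append({"title": "More",
                     "children": [{"title": t, "href": h} for t, h in overflow]})
    return menu
```

For example, a sidebar “News” link on a two-item site simply becomes a third top-level item.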

In more complex cases (and, ideally, in all of them) we need a “smart” rearrangement of pages and individual content elements into a more presentable structure. This requires, at the very least, applying the algorithms that form the core of the heuristic system for rearranging content. The same step lets us preserve link equity.

Ensuring referential integrity

Everything in the system must obey one rule: the result should be no worse than the original, even in individual details. Otherwise a user who decided to experiment with the system will reject it. This applies not only to the visual side.

When we built our social buttons, we learned to count reposts over the site's entire history, so that the owner would not have to fear resetting the repost counters when swapping one button for another. Now we had to do the same for SEO settings.

The solution has two parts. First, uKit has a per-page search optimization (SEO) module into which we can transfer metadata: the title, keywords, and so on.
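Carrying metadata over starts with reading it from the old page. A minimal sketch using Python's standard `html.parser`; which tags to transfer, and the shape of the resulting dictionary, are our assumptions rather than the fields of uKit's SEO module:

```python
from html.parser import HTMLParser


class SeoMetaParser(HTMLParser):
    """Collects <title> and the description/keywords <meta> tags of one page."""

    def __init__(self) -> None:
        super().__init__()
        self.title_parts: list[str] = []
        self.meta: dict[str, str] = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (attributes.get("name") or "").lower()
            content = attributes.get("content")
            if name in {"description", "keywords"} and content:
                self.meta[name] = content.strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def extract_seo(html: str) -> dict[str, str]:
    """Return the metadata to carry over into the new page's SEO settings."""
    parser = SeoMetaParser()
    parser.feed(html)
    parser.close()
    result = {"title": " ".join("".join(parser.title_parts).split())}
    result.update(parser.meta)
    return result
```

The parser lowercases tag and attribute names, so `<META NAME="Keywords">` is handled the same way as its lowercase form.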

The second part concerns the site's internal optimization. In parallel with recognition, we import the graph of links between pages. The system accounts for cases where some, but not all, pages of the imported site contain shared blocks of information, and based on the relevance of these blocks it can judge how the pages are related.
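The “shared blocks” idea can be approximated by fingerprinting each page's content blocks and comparing pages pairwise. This hypothetical sketch ignores blocks found on nearly every page (a footer, say) and scores the rest with Jaccard similarity; importing the link graph itself is not shown:

```python
import hashlib
from collections import Counter
from itertools import combinations


def fingerprint(block_text: str) -> str:
    """Hash of whitespace- and case-normalized block text."""
    normalized = " ".join(block_text.lower().split())
    return hashlib.sha1(normalized.encode("utf-8")).hexdigest()


def page_relatedness(pages: dict[str, list[str]],
                     sitewide_share: float = 0.9) -> dict[tuple[str, str], float]:
    """Jaccard similarity of the non-site-wide blocks shared by each pair of pages."""
    prints = {url: {fingerprint(b) for b in blocks} for url, blocks in pages.items()}
    counts = Counter(fp for fps in prints.values() for fp in fps)
    # Blocks on (almost) every page say nothing about how two particular pages relate.
    sitewide = {fp for fp, c in counts.items() if c >= sitewide_share * len(pages)}
    scores = {}
    for a, b in combinations(sorted(prints), 2):
        sa, sb = prints[a] - sitewide, prints[b] - sitewide
        union = sa | sb
        scores[(a, b)] = len(sa & sb) / len(union) if union else 0.0
    return scores
```

A high score between two pages that are not linked to each other is a hint that they belong in the same menu section.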

Let's look into the near future: how it will work with a neural network

Let's talk about the stages we are about to start on.

Machine learning

We will build a learning model and a hybrid evaluation system, and assemble a database of sites for training: old pages from our uCoz and Narod, as well as new ones from uKit. One more trick is a sample from the Web Archive, where you can see how sites changed year by year. This is the material the neural network will learn from.
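For the Web Archive sample, the Wayback Machine's CDX server can list captures of a site. The sketch below only builds the query and parses the JSON reply (no network calls); requesting one successful capture per year via `collapse=timestamp:4` is our assumption about a sensible sampling, not the authors' pipeline:

```python
import json
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def yearly_snapshots_query(site: str, year_from: int, year_to: int) -> str:
    """Build a CDX query returning at most one successful capture per year."""
    params = {
        "url": site,
        "output": "json",
        "from": str(year_from),
        "to": str(year_to),
        "filter": "statuscode:200",
        # Collapse on the first 4 timestamp digits (the year): one row per year.
        "collapse": "timestamp:4",
        "fl": "timestamp,original",
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def parse_cdx_json(body: str) -> list[dict[str, str]]:
    """The JSON output is a list of rows whose first row holds the field names."""
    rows = json.loads(body)
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def snapshot_url(timestamp: str, original: str) -> str:
    """Address of an archived copy of the page as it looked at `timestamp`."""
    return f"https://web.archive.org/web/{timestamp}/{original}"
```

Pairs of consecutive yearly snapshots of the same site then give “before and after” examples of how real owners changed their pages.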

At first the teacher will be a human. Then the neural network will try to express “what a good website is”, and we will let the system experiment on its own, including, at some stage, running A/B tests of redesigns on real people.
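When redesigns are shown to real people, the system has to decide whether a difference in, say, conversion rate is real or just noise. A common choice, assumed here, is a two-proportion z-test; the function name and the use of conversion as the metric are illustrative:

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of variants A and B; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all: nothing to distinguish
    z = (p_b - p_a) / se
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With 100 of 1000 visitors converting on the old design and 150 of 1000 on the new one, z is about 3.4, well past the usual significance thresholds.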

Data mining

Sites often contain links to social networks, video hosting services, and so on. This gives us another field for experimentation: if the old site has little content, or the content is poor, we can try to pull the missing information from external sources.
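Finding those external sources can begin with classifying a site's outbound links by domain. A minimal sketch; the domain-to-source table is a hypothetical example and would need extending:

```python
from urllib.parse import urlparse

# Hypothetical lookup table: domain -> content source to import from.
KNOWN_SOURCES = {
    "instagram.com": "instagram",
    "youtube.com": "youtube",
    "youtu.be": "youtube",
    "facebook.com": "facebook",
    "vk.com": "vk",
}


def external_sources(hrefs: list[str]) -> dict[str, list[str]]:
    """Group a page's links by the external service they point to."""
    found: dict[str, list[str]] = {}
    for href in hrefs:
        host = urlparse(href).hostname or ""  # relative links have no host
        for domain, source in KNOWN_SOURCES.items():
            # Match the domain itself or any subdomain (www., m., ...), nothing else.
            if host == domain or host.endswith("." + domain):
                found.setdefault(source, []).append(href)
                break
    return found
```

Matching on the host rather than on substrings keeps look-alike domains such as `notinstagram.com` out of the results.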

In theory, modern data mining makes it possible to collect information about an entity from several sources and group it intelligently within a site. That is, we can equip the service with multi-source content import: from Instagram, from folders on the computer's hard drive, from a YouTube channel, and so on.

One of the existing calibrated proto-examples
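A multi-import pipeline then has to merge items from different sources without duplicates. This hypothetical sketch assumes each imported item already carries a content fingerprint (`key`) and a kind, and groups items into site sections; the section names are illustrative:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    source: str      # where it came from: "instagram", "folder", "youtube", ...
    kind: str        # what it is: "photo", "video", "text"
    key: str         # content fingerprint, e.g. a file hash (assumed precomputed)
    title: str = ""


# Hypothetical mapping from content kind to a section of the generated site.
SECTION_FOR_KIND = {"photo": "Gallery", "video": "Videos", "text": "About"}


def group_into_sections(items: list[Item]) -> dict[str, list[Item]]:
    """Drop duplicates imported from several places and group the rest by section."""
    sections: dict[str, list[Item]] = defaultdict(list)
    seen: set[str] = set()
    for item in items:
        if item.key in seen:
            continue  # e.g. the same photo found on Instagram and on disk
        seen.add(item.key)
        sections[SECTION_FOR_KIND.get(item.kind, "Other")].append(item)
    return dict(sections)
```

The first source listed wins when duplicates collide, so ordering the inputs by trust (say, the owner's own folders first) is a simple way to prefer better copies.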

Systems that assemble a site from several social media sources also exist in embryonic form; some of them are described here. However, there is no question of AI in them yet.

Thanks!

We will be happy to answer your questions and discuss sites that build themselves.

======

* The CTO of uKit AI is pavel_kudinov. His Habr profile lists his side projects involving neural networks. Ask questions in the comments.

** Recommended links and videos on algorithmic design:

- uKit AI blog about generative design and more

- Article “Algorithmic Design Tools”

- Short video demonstration: the René system selects buttons for a site

*** Publication on algorithmic design in the Mail.ru Group blog on Habr

Source: https://habr.com/ru/post/309516/

All Articles