Choosing hardware for corporate cloud storage

Data is the foundation of any business. If the place where it is stored is not reliable enough or cannot provide constant access, practically all of the company's activity is at risk.

Of course, the safety and availability of information can and should be ensured by choosing the right server software and configuring it properly. But the hardware that stores and processes the data is no less important. If it does not meet the company's needs, no software will make it sufficiently reliable and fault tolerant.


In this article we look at one approach to choosing hardware for corporate cloud storage.

Why cloud?

Cloud infrastructure has several advantages:

- The ability to scale quickly. Storage capacity and computing power are increased by connecting additional servers and storage systems. This is especially relevant for companies whose cloud load is expected to be irregular.

- Cost reduction. The cloud lets you create a single center where all computation takes place, and to add disk space it is enough to buy drives, with no need to install new servers.

- Simpler business processes. Unlike local storage, cloud storage implies constant access: you can work with files at any time of day and from anywhere. Employees get the information they need more easily and quickly, and remote workplaces become possible.

- Increased fault tolerance. Data stored on several servers is better protected against technical failures than data kept on a single machine.

Why your own?

There are now many public cloud services on the market. For many small and medium-sized companies they are indeed a good choice, especially when you pay only for the resources you use or when you are testing a service. However, your own cloud storage also has several advantages. It will come in handy if:

- The company's activity restricts where servers may be located. Russian government institutions, as well as organizations that process personal data, are required by law to store all their information within the Russian Federation. Renting foreign servers is therefore not an option for them, and entrusting sensitive information to contractors is undesirable in general. Private storage lets you take full advantage of the cloud without breaking the law.

- Full control over security policy is required. You cannot know for certain how data protection is arranged in Microsoft or Amazon services. Only in your own cloud can you protect information exactly as you see fit.

- The hardware must be tailored to your needs. When renting, you have to work with what the provider gives you. With your own servers, you can configure them for the specific tasks of your business and use software your IT team knows well.

Equipment selection

When acquiring equipment for cloud storage, the question often arises: rent a machine or buy your own? Above we covered the cases in which your own server is irreplaceable. However, you can also build the cloud on third-party services. This is especially relevant for small companies: they cannot always allocate the budget to buy servers or set up their own server infrastructure, and with rented machines they do not have to pay for maintenance.

If you do decide to buy your own servers for cloud storage, bear in mind that changing the equipment later will be difficult and costly. Too little capacity is not a big problem: you can always add another machine to the cluster. But if you buy more performance than you need, the money is already spent. So approach the choice as follows.

Clearly define what the server will be used for. In our case it is file storage, so the drives matter most. Here is what to look at when choosing them.

Capacity. It depends on how many employees will use the storage, what types of files they will upload, how much existing data is waiting to be moved to the cloud, and how much the volume of working data grows each year.

For text files, presentations, PDFs and a small number of images you need 10-15 GB per employee on average. For large volumes of high-quality images and photos, plan for at least 50-100 GB or more. Staff who process video and audio may need several terabytes per person. In some cases, for example when large corporate software packages with versioning are used, the figure can reach 10 TB per cloud user. Do not forget to add capacity for backups and for the company's unforeseen needs.
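The per-employee figures above can be turned into a rough estimate. The sketch below is only an illustration: the profile names, the 3 TB figure for media staff, the headcount and the 30% reserve for backups and unforeseen needs are all assumptions, not values from the article.

```python
# Per-employee storage needs in GB, taking the upper bound of each range above.
PROFILE_GB = {
    "office": 15,    # text files, presentations, PDFs, a few images
    "images": 100,   # large volumes of high-quality images and photos
    "media": 3000,   # video/audio staff: "several terabytes" (3 TB is an assumption)
}


def estimate_capacity_gb(headcount, existing_gb=0, reserve=0.3):
    """Return a rough capacity estimate in GB.

    headcount   -- mapping of profile name to number of employees
    existing_gb -- data already waiting to be moved to the cloud
    reserve     -- extra share for backups and unforeseen needs (assumed value)
    """
    working = sum(PROFILE_GB[profile] * n for profile, n in headcount.items())
    return (working + existing_gb) * (1 + reserve)


# Hypothetical company: 40 office workers, 5 designers, 1 video editor.
total = estimate_capacity_gb({"office": 40, "images": 5, "media": 1}, existing_gb=500)
print(f"Plan for roughly {round(total)} GB")
```

Replace the assumed numbers with your own headcount and measured file sizes before relying on the result.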

As for RAID controllers, it is better not to use onboard solutions in a corporate cloud: their performance may not be enough to handle a large number of requests at an acceptable speed. Choose discrete models from the lower or middle price range instead.

You also need to decide on computing power. If you build cloud storage on several servers, choose identical or very similar configurations; this simplifies load balancing. In general, it is better not to rely on one powerful machine with an expensive processor and a lot of RAM, but to buy 2-3 cheaper machines. Why?

If your storage will only accept and serve static files, without running them, processor power is not very important. So do not chase core count; choose a model with a good clock speed. Among low-cost options, the 4-core Intel Xeon E56XX series will work well; among more expensive ones, we can recommend machines based on the Intel Core i5.

Examples of server models

If you would rather buy ready-to-use equipment than build the server yourself, consider the following models, which are suitable for file storage.

Dell PowerEdge T110. The server has an Intel Core i3 2120 processor with only two cores, but each runs at a good 3.3 GHz clock speed, which matters more for our cloud. The base RAM configuration is modest, 4 GB, but it can be expanded to 32 GB. The server comes in two configurations: without a pre-installed hard drive, or with a 1 TB SATA HDD.

Lenovo ThinkServer RS140. It has a powerful quad-core Intel Xeon E3 processor running at 3.3 GHz. It ships with 4 GB of RAM and has four more slots for expansion. Two 1 TB SATA hard drives are included.

HP ProLiant ML10 Gen9. In many ways similar to the previous model: the same Intel Xeon E3 and two 1 TB HDDs. The main difference is the RAM: the HP server has two 4 GB modules.

Is there enough storage space for files?

Storage capacity is the cornerstone of a file server. Even after estimating the volume of stored data and its growth rate, you may find six months later that the forecast was wrong and the data is growing faster than planned.
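To see how sensitive such a forecast is, you can project compound growth. This is a minimal sketch with made-up numbers: the function name, the 2 TB of used space, the 4 TB volume and the monthly growth rates are all hypothetical.

```python
def months_until_full(used_gb, capacity_gb, monthly_growth):
    """Return the number of months after which used space exceeds capacity,
    assuming the data grows by a fixed fraction every month."""
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")
    months = 0
    while used_gb <= capacity_gb:
        used_gb *= 1 + monthly_growth
        months += 1
    return months


# Hypothetical volume: 2 TB used out of 4 TB.
planned = months_until_full(2000, 4000, 0.05)  # forecast: 5% growth per month
actual = months_until_full(2000, 4000, 0.10)   # reality: 10% growth per month
print(planned, actual)
```

In this example, doubling the growth rate cuts the time until the volume is full roughly in half, which is why it is worth checking the forecast against real usage regularly.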

With virtualized storage you will almost always (given sensible storage planning) be able to expand the virtual machine's disk subsystem or enlarge the LUN. With a physical file server and local drives, your options are much more modest. Even if the server has free slots for additional drives, you may have trouble finding drives that suit your RAID array.

But before solving the problem by simply adding capacity, remember the technical tools that help fight a shortage of space.

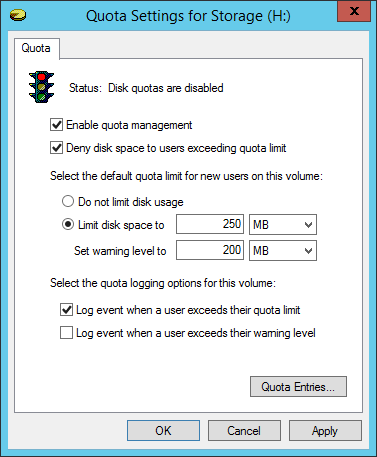

NTFS disk quotas

One of the oldest and most reliable mechanisms for limiting users is file system quotas.

By enabling quotas on a volume, you can limit the amount of data each user can store. A user's quota counts the files that he or she owns at the NTFS level. The mechanism has two main drawbacks: it is not easy to determine which files belong to a particular user, and files created by administrators are not counted against the quota. Quotas are rarely used in practice; they have been almost completely replaced by the File Server Resource Manager (FSRM), which first appeared in Windows Server 2003 R2.

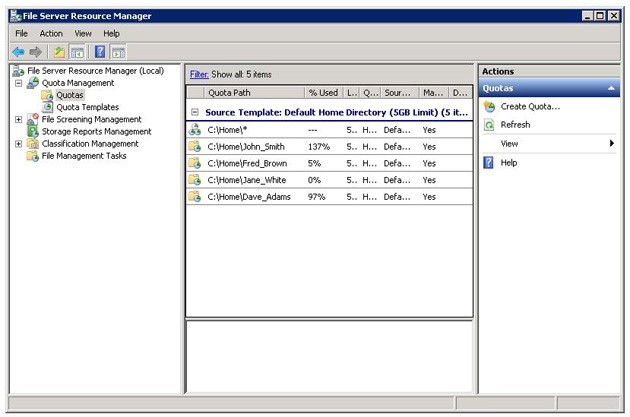

File Server Resource Manager

This Windows Server component lets you allocate disk space at the folder level. If your file server hosts personal home directories as well as dedicated folders for shared department documents, FSRM is the best choice.

Of course, quotas by themselves do not increase the capacity of the file storage. But users generally accept a fair (equal) division of resources, and when they run short they are willing to go through a small bureaucratic procedure to get more disk space.

Quotas also protect the server from filling up when a user accidentally uploads a large amount of data. At the very least, this will not affect other employees or departments.

In addition, FSRM includes a screening (filtering) mechanism for the files that may be stored on the server. If you are sure that mp3 and avi files have no place on the file server, you can use FSRM to prevent them from being saved.

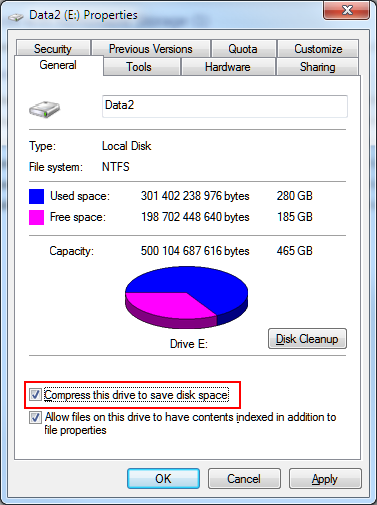

NTFS compression

Ordinary files compress well with NTFS, and given the performance of modern processors, the server has enough resources for this operation. If space is short, you can safely enable compression for a volume or for individual folders. However, Windows Server 2012 introduced a more sophisticated mechanism that makes NTFS compression on file servers a thing of the past for most scenarios.

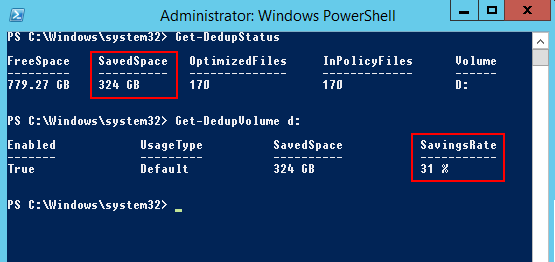

Deduplication

Windows Server 2012 can deduplicate data on an NTFS volume. This is a fairly advanced and flexible mechanism that combines variable-length block deduplication with effective compression of the stored blocks. The mechanism can use different compression algorithms for different data types, and it skips compression where it is ineffective. Traditional NTFS compression offers no such subtleties.
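The idea behind block-level deduplication can be illustrated with a toy example. Note that this sketch uses fixed-size blocks and SHA-256 hashes for simplicity; it is not the variable-length algorithm that Windows Server uses, and the function name and block size are assumptions.

```python
import hashlib


def dedup_savings(files, block_size=4096):
    """Return the share of space saved if every distinct block is stored once.

    files -- iterable of bytes objects standing in for file contents
    """
    seen = set()   # hashes of the blocks already stored
    total = 0      # bytes before deduplication
    stored = 0     # bytes after deduplication
    for data in files:
        for offset in range(0, len(data), block_size):
            block = data[offset:offset + block_size]
            total += len(block)
            digest = hashlib.sha256(block).digest()
            if digest not in seen:
                seen.add(digest)
                stored += len(block)
    return 1 - stored / total if total else 0.0


# Two hypothetical files that share most of their content.
report_v1 = b"A" * 8192
report_v2 = b"A" * 8192 + b"B" * 4096
print(f"Saved: {dedup_savings([report_v1, report_v2]):.0%}")
```

Real file servers benefit for the same reason: many documents are copies or slightly edited versions of one another, so a large share of their blocks is identical.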

In addition, deduplication does not optimize files that users have worked with in the last 30 days (this interval is configurable), so that work with frequently changing data is not slowed down.

You can estimate the potential gain in free space with the ddpeval utility. On a typical file server the savings are 30-50%.

File Server Performance

As we noted earlier, a file server is not the most resource-hungry service, but the disk and network subsystems still deserve careful configuration.

Disk subsystem

Linear read and write speed does not play a decisive role for a file server. Any modern hard disk has high linear read/write speeds, but they matter only when a user copies a large file to a local disk or, conversely, uploads one to the server.

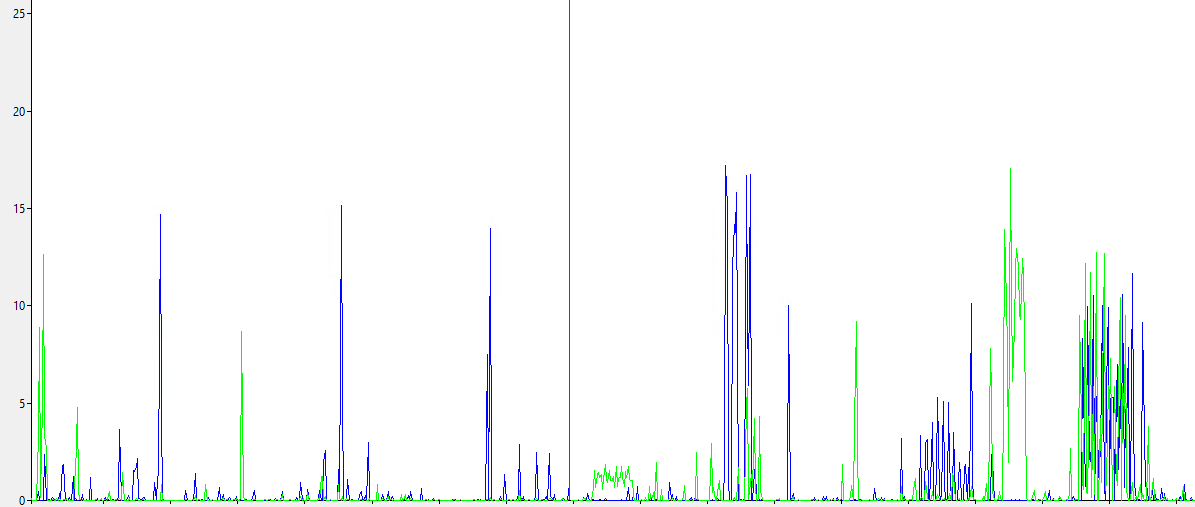

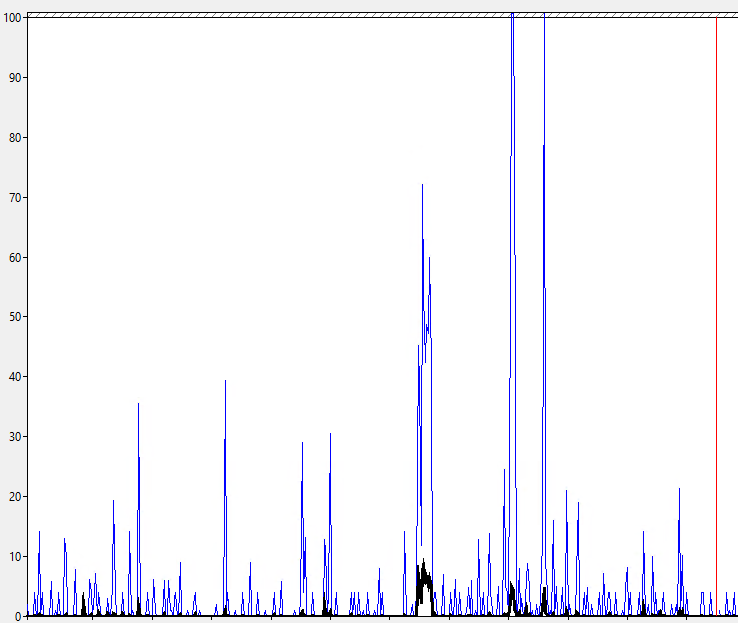

If you look at Perfmon statistics, the average read/write speed for 150-200 users is quite low, only a few megabytes per second. Peak values are more interesting. Keep in mind, though, that these peaks are limited by the speed of the network interface, which for an ordinary server is 1 Gbit/s (that is, about 100 MB/s of exchange with the disk subsystem).

In normal operation, file access is non-linear: arbitrary blocks are read from and written to the disk. Disk performance in random access operations, that is, the maximum IOPS, is therefore more critical.

For 150-200 employees the figures are quite modest: 10-20 input/output operations per second with a disk queue of 1-2.

Any array of standard SATA drives will satisfy these requirements.

For 500-1000 active users, the number of operations jumps to 250-300 and the disk queue reaches 5-10. When the queue reaches this length, users may notice that the file server is "slowing down".

In practice, to reach 300 IOPS you already need an array of at least 3-4 typical SATA drives.

Take into account not only the raw performance but also the overhead that RAID adds to write operations, the so-called RAID penalty. This topic is clearly explained in the article https://habrahabr.ru/post/164325/ .

To determine the required number of disks, use the formula:

Total number of disks required = (Total Read IOPS + (Total Write IOPS * RAID Penalty)) / Disk Speed IOPS

For example, for RAID-5 with a write penalty of 4 operations, a profile of 50% reads and 50% writes, a disk speed of 75 IOPS and a target performance of 300 IOPS:

(300 * 0.5 + (300 * 0.5 * 4)) / 75 = 10 disks.

If you have many active users, you will need a server that holds more drives or faster disks, such as SAS drives with a rotation speed of 10,000 RPM.
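The same calculation can be wrapped in a small helper so that you can try other RAID levels and load profiles. The function and parameter names are illustrative; the write penalty of 2 for RAID-10 in the second call is the commonly cited value and is not taken from this article.

```python
import math


def disks_required(target_iops, read_share, raid_penalty, disk_iops):
    """Apply the formula above and round up to a whole number of disks.

    target_iops  -- IOPS the array must deliver to clients
    read_share   -- fraction of operations that are reads (0.0 to 1.0)
    raid_penalty -- back-end operations caused by one write
    disk_iops    -- IOPS a single disk can sustain
    """
    read_iops = target_iops * read_share
    write_iops = target_iops * (1 - read_share)
    backend_iops = read_iops + write_iops * raid_penalty
    return math.ceil(backend_iops / disk_iops)


raid5 = disks_required(300, 0.5, raid_penalty=4, disk_iops=75)
raid10 = disks_required(300, 0.5, raid_penalty=2, disk_iops=75)
print(raid5, raid10)
```

With the same load profile, the lower write penalty of RAID-10 reduces the number of disks needed for performance, at the cost of less usable capacity per disk.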

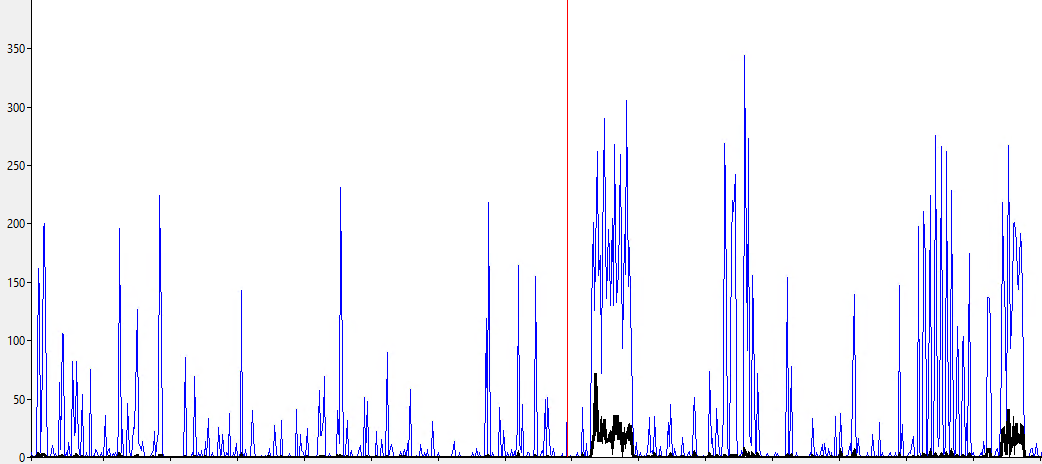

Network interface speed

A slow network interface is one of the causes of delays when working with a file server. In 2016, a server with a 100 Mbit/s network card makes no sense.

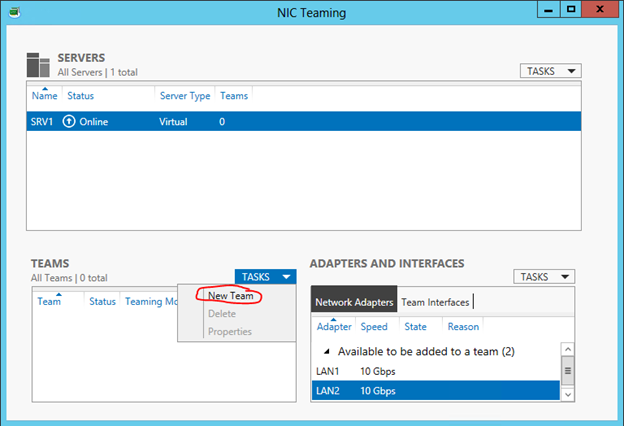

A typical server is equipped with a 1 Gbit/s network card, but this also caps the exchange rate with the disks at about 100 MB/s. If the server has several network cards, you can combine (aggregate) them into one logical interface to increase both the performance and the availability of the cloud. The good news is that aggregation works well for the file server pattern of many clients accessing a single server.
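The relationship between link speed and disk traffic is simple arithmetic. In the sketch below, the 0.8 efficiency factor is an assumption chosen to match the "about 100 MB/s" figure above; real protocol overhead varies. The aggregated figure is a total across many clients, since a single client connection typically still uses one link.

```python
def usable_mb_per_s(link_gbit, links=1, efficiency=0.8):
    """Estimate usable throughput in MB/s for one or more aggregated links.

    link_gbit  -- nominal speed of one link in Gbit/s
    links      -- number of aggregated network cards
    efficiency -- share of nominal speed left after protocol overhead (assumed)
    """
    raw_mb_per_s = link_gbit * 1000 / 8  # 1 Gbit/s = 125 MB/s before overhead
    return raw_mb_per_s * links * efficiency


single = usable_mb_per_s(1)           # one 1 Gbit/s card
teamed = usable_mb_per_s(1, links=2)  # two cards in one logical interface
print(round(single), round(teamed))
```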

HP server owners can use the proprietary HP Network Configuration Utility.

If you are using Windows Server 2012, the built-in NIC Teaming tool is easier and more reliable.

You can learn more about this setup and the nuances of using it in a Hyper-V environment from this article.

Source: https://habr.com/ru/post/309390/
