Elixir: Preparing parsing correctly - yecc and leex

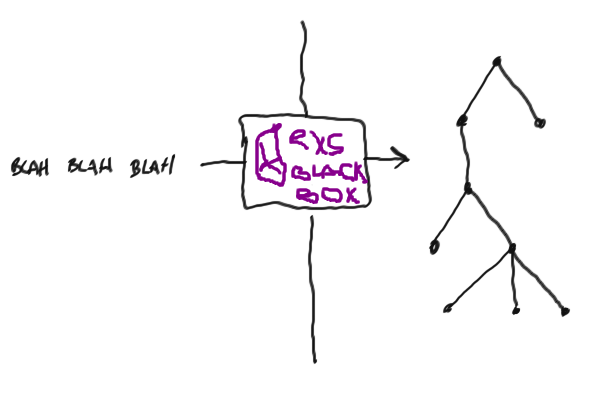

Lexical analysis (tokenization) and parsing are among the most important concepts in computer science and programming. These concepts are based on a huge amount of theoretical knowledge, but today we will not talk about them, because there are really a lot of them. In addition, the approach to parsing through "science" can cause severe disgust and scare. Meanwhile, the practical application is very simple and straightforward. If you want to know more about the theory - go to Wikipedia ( lexical analysis and parsing ), or read the delightful book of the dragon (recommended for reading in general to all programmers).

An ordinary person is afraid to use lexers and parsers, and instead he writes a bicycle in regular expressions. It seems to me that the apparent complexity is the cause. In this post, I will try to debunk her!

Why?

To begin with, we note that lexers and parsers are usually used together, but this is generally not necessary. You can use a lexer, tokenize some string into a set of tokens. Or you can use the parser to understand the grammar of anything.

I said that people often use regulars for "parsing" and understanding the text. Despite the fact that it works on simple tasks for parsing, in most cases the bike is very fragile and does not ride at all. In addition, the regulars are very limited in the grammars that you are trying to describe with them (for example, try parsing html with regulars) ( translator : not really. But you can also write a cluster application in assembler. The scale of the problem is approximately the same). Therefore, sometimes you need something more powerful.

Say hi leex and yecc

Two modules are built into erlang that make parsing great: leex and yecc . Corresponding to the name, leex is a lexer: it reads a file with a specific syntax and generates an erlang module (as a *.erl file), which can then be compiled and used for tokenization. yecc basically behaves the same way, only it generates not a lexer, but a parser.

Since the modules are available in the form of erlang batteries (somewhere in the Parsing tools group), then, in theory, they can be great to use in all places where they can solve the problem.

A small, frail and implausible example.

Each article that explains something requires examples, so let's do it: we will stream and parse the list of the Elixir language, which can consist only of numbers and atoms, which is simply written in a string. The final goal is to get the original Elixir list from this line:

iex> ListParser.parse("[1, 2, [:foo, [:bar]]]") [1, 2, [:foo, [:bar]]] Since it is absolutely pointless - take as an excellent example.

So: lexer

First of all, we need to tokenize the line: tokenization simply means turning the string into a list of tokens, which in fact are not very structured compared to the usual list of characters (string).

For example, one of the tokens could be a number, for example 4917 : the number 4917 bit more structure than the list of characters [?4, ?9, ?1, ?7] because we can consider it as one.

Tokenize our list is generally very simple - we separately tokenize:

- brackets (left

[and right]), - commas

- numbers

- atoms.

For simplicity, we will deal only with simple atoms, such as :foo or :foo_bar , and with hard ones :'foo and bar' or "hello world" will not be dealt with.

It is very easy and quick to make your own tokenizer for such a simple syntax, but leex will greatly simplify our work by allowing you to write a lexer with a very simple syntax. Basically, we set tokens with regulars, and associate them with Erlang expressions that represent this token. I mentioned that regulars are evil for such work: yes, they are bad for parsing because of the usually recursive data structure, but they are excellent for splitting rows into one-dimensional structures.

The syntax of these rules is simple:

Regular expression: Erlang code.

Here in Erlang code you need to return the {:token, value} tuple if we want the lexer to generate a token for us (actually {token, Value} tuple if you write to Erlang and not Elixir ).

Our lexer looks very simple:

Rules. [0-9]+ : {token, {int, TokenLine, TokenChars}}. :[a-z_]+ : {token, {atom, TokenLine, TokenChars}}. \[ : {token, {'[', TokenLine}}. \] : {token, {']', TokenLine}}. , : {token, {',', TokenLine}}. We return {:token, Value} to indicate leex that we need to get matching tokens (therefore the first element of the tuple is :token ), and we want to add this to the result of lexical analysis.

TokenLine and TokenChars are variables that leex substitutes for Erlang expressions that follow every regular schedule. These variables contain the string of the associated token and the contents of the associated token as a list of characters.

We will use two- or three-element tuples as token values, because this format will therefore need yecc . Sometimes we are interested in the value of the token, so we use a three-element tuple. But sometimes, the value of the token itself is not important (for example, if it is a comma), so a two-element tuple is enough. This type of token is required. In this case, yecc can easily give us a clear error message.

We don’t have to save all the tokens that you found; you can easily drop them. To do this, pass :skip_token to the tuple. The most typical application is to eliminate gaps:

[\s\t\n\r]+ : skip_token. Regulars can very easily become nauseous, but we can simply define them using the form

ALIAS = REGEX

Such definitions are placed at the beginning of the file, before the list of rules. To use them in regular definitions, you can wrap them in {} :

Definitions. INT = [0-9]+ ATOM = :[a-z_]+ WHITESPACE = [\s\t\n\r] Rules. {INT} : {token, {int, TokenLine, TokenChars}}. {ATOM} : {token, {atom, TokenLine, TokenChars}}. \[ : {token, {'[', TokenLine}}. \] : {token, {']', TokenLine}}. , : {token, {',', TokenLine}}. {WHITESPACE}+ : skip_token. We are quite ready to try our lexer. First, we need to write a file with the .xrl extension. Then, we will turn this file into an erlang module as a .erl file using :leex.file/1 . Finally, we can compile the newly generated Erlang module. Remember that most erlang modules accept a list of characters instead of binary strings, so you need to wrap in single and not double quotes. (Note: Erlang uses single quotes for complex atoms, such as 'foo bar' . These atoms cannot be represented through a regular schedule, but do you remember that?)

iex> :leex.file('list_lexer.xrl') iex> c("list_lexer.erl") iex> {:ok, tokens, _} = :list_lexer.string('[1, [:foo]]') iex> tokens {:"[", 1}, {:int, 1, '1'}, {:",", 1}, {:"[", 1}, {:atom, 1, ':foo'}, {:"]", 1}, {:"]", 1}] Crunchy! leex also provides the ability to determine the erlang code that will be associated with the lexer. This is implemented using the Erlang code. section Erlang code. at the very end of the .xrl file. We can use this advantage to convert atomic tokens to directly atoms.

... {INT} : {token, {int, TokenLine, list_to_integer(TokenChars)}}. {ATOM} : {token, {atom, TokenLine, to_atom(TokenChars)}}. ... Erlang code. to_atom([$:|Chars]) -> list_to_atom(Chars). to_atom/1 simply to_atom/1 first character of the token of an atom (which is a colon, $: in the world of Erlang ), and converts everything else into an atom. Also use list_to_integer/1 to convert the token number to the direct number.

Lexer completely looks like this:

Definitions. INT = [0-9]+ ATOM = :[a-z_]+ WHITESPACE = [\s\t\n\r] Rules. {INT} : {token, {int, TokenLine, list_to_integer(TokenChars)}}. {ATOM} : {token, {atom, TokenLine, to_atom(TokenChars)}}. \[ : {token, {'[', TokenLine}}. \] : {token, {']', TokenLine}}. , : {token, {',', TokenLine}}. {WHITESPACE}+ : skip_token. Erlang code. to_atom([$:|Chars]) -> list_to_atom(Chars). Everything works as expected:

iex> {:ok, tokens, _} = :list_lexer.string('[1, :foo]') iex> tokens [{:"[", 1}, {:int, 1, 1}, {:",", 1}, {:atom, 1, :foo}, {:"]", 1}] Parser

Now we have a flat list token. We want to give them some kind of structure, and then turn them into an Elixir list: we need to pair the list of tokens. The work of the parser is based on grammar, which is a list of rules that describe how tokens should be structured.

Of course, we can write our own parser (although it is much more complicated than our own lexer), it is easier to use yecc : it allows you to describe the rules of grammar in a very declarative way, and besides, it is very easy to use with leex .

A small digression: at this stage you may think that the names of the parser and lexer do not make sense. In fact, it is not. Ob are named after two very famous programs: lex lexer and yacc parser. Looks like the guys from Erlang are not that crazy?

Let's go back to yecc . The main element of the syntax is the rules that are described in the following form:

Left-hand side -> Right-hand side: Erlang expressions.

To the left is the token category, to the right is the token list category. The category of tokens can be of two types - deadlock and non- deadlock ( terminal and non-terminal ). Dead-end - these are tokens that do not contain anything inside; non-dead-end, respectively.

For example,: :"[" or {atom, Atom} token - deadlock. A non-peak list can be presented through a list of stub elements:

list -> '[' ']'. % or... list -> '[' elems ']'. % , '%' Erlang. As you can see, you can select several conditions for each category: a category can take any of the listed values (think of them as OR ).

elems not a dead end itself. We can represent it as a single element, or an element + a comma + a list of elements:

elems -> elem. elems -> elem ',' elems. Accordingly, elems can be elem , or elem, elem and so on.

elem itself is also not a dead-end: it represents a number, an atom, or a list. Notice how elegantly we can present the list nesting to the list:

elem -> int. elem -> atom. elem -> list. Krasava!

All non-dead-end elements must at some stage be revealed in dead-end: it is impossible to have non-dead-end elements that do not open up to anything. yecc also requires users to determine which categories are terminal and which ones are not at the beginning of the file:

Terminals '[' ']' ',' int atom. Nonterminals list elems elem. You can also define the root element, which will be the starting non-stub element that generates the original grammar. In our case, this is the list:

Rootsymbol list. We are almost done! It remains only to turn the lists that have just been parsed into Elixir lists. We will do this in the Erlang code associated with each parsing rule. In these expressions, we have a couple of special atoms: $1 , $2 , $3 and so on. yecc substitutes in them the result of Erlang code, which is associated with a category with the same index as in the right part of the rule. Now I can hear you thinking, "Shield?" You are right, without an example you will not understand:

list -> '[' ']' : []. % an empty list translate to, well, an empty list list -> '[' elems ']' : '$2'. % the list is formed by its elements elems -> elem : ['$1']. % single-element list (and base case for the recursion) elems -> elem ',' elems : ['$1'|'$3']. % '$3' will be replaced recursively elem -> int : extract_token('$1'). elem -> atom : extract_token('$1'). elem -> list : '$1'. % , Erlang. Erlang code. extract_token({_Token, _Line, Value}) -> Value. All is ready! Here is the final version of our parser:

Nonterminals list elems elem. Terminals '[' ']' ',' int atom. Rootsymbol list. list -> '[' ']' : []. list -> '[' elems ']' : '$2'. elems -> elem : ['$1']. elems -> elem ',' elems : ['$1'|'$3']. elem -> int : extract_token('$1'). elem -> atom : extract_token('$1'). elem -> list : '$1'. Erlang code. extract_token({_Token, _Line, Value}) -> Value. Now we can create an Erlang file from a yecc file (which has the extension .yrl ), just like we did with leex :

iex> :yecc.file('list_parser.yrl') iex> c("list_parser.erl") iex> :list_parser.parse([{:"[", 1}, {:atom, 1, :foo}, {:"]", 1}]) {:ok, [:foo]} Works!

Sticking tanchik

We can throw the result of the lexer immediately into the parser and-ii:

iex> source = "[:foo, [1], [:bar, [2, 3]]]" iex> {:ok, tokens, _} = source |> String.to_char_list |> :list_lexer.string iex> :list_parser.parse(tokens) {:ok, [:foo, [1], [:bar, [2, 3]]]} Amazing!

I do not understand, but where is Elixir?

Manually generating Erlang files from .xrl and .yrl files, and then compiling these files is already very annoying. By the way, Mix can do everything for us!

Mix supports the concept of "compilers": they do just what you can assume - compile. Mix provides compilers for Erlang (they simply compile .erl files through the installed Erlang ), another compiler for Elixir, but there are also compilers for leex and yecc . They are actually even included by default, you can check this by calling the Mix.compilers/0 function inside the Mix project ( translator : and not only. By the way, really by default, check now!):

iex> Mix.compilers() [:yecc, :leex, :erlang, :elixir, :app] The last thing that should be done to make it all work perfectly in the Mix project is to put the .xrl and .yrl in the src/ project directory, and voila - the modules are visible in your code after compilation.

mix new list_parser mkdir list_parser/src mv ./list_parser.yrl ./list_lexer.xrl ./list_parser/src/ And we will add a small wrapper:

# ./list_parser/lib/list_parser.ex defmodule ListParser do @spec parse(binary) :: list def parse(str) do {:ok, tokens, _} = str |> to_char_list |> :list_lexer.string {:ok, list} = :list_parser.parse(tokens) list end end Where's geshaft?

All of the above may look very abstract, but I am sure that leex and yecc have a billion uses. For example, I recently wrote a parser for PO files as part of the GNU gettext binding development for Elixir . I used yecc to describe the parser: it all resulted in a very declarative, clean and easy-to-understand grammar ( look here ), and in general I am super-keen and happy. We did not use leex , mainly because it seemed to us to be too powerful a tool for such a simple task, and we wrote our own lexer (as I said, this is also possible).

Another example? Well, there is this: have you ever heard of the Elixir language anywhere? The language is very bad, built at the top of Erlang VM , focused on parallel programming, pad resistance ... Yes, it parses yecc :)

We summarize

We easily made a lexer and a parser for converting Elixir list-dump lines into real Elixir lists. We used leex to generate lexer and yecc to generate parser.

We considered only the most elementary capabilities of these tools: they can be more complicated ( yecc generates LALR parsers, if you know what it is). But for this - welcome to the documentation .

')

Source: https://habr.com/ru/post/309382/

All Articles