Learning OpenGL ES2 for Android. Lesson №4. Textures

Before you start

If you are new to OpenGL ES, I recommend first studying the previous three lessons: one / two / three.

The code in this article is based on examples from here and here.

The result of this lesson will be a dolphin jumping over the surface of the sea.

A little about textures

A texture is a raster image applied to the surface of a polygonal model to give it color, coloring, or the illusion of relief. Texturing is easy to picture as a drawing on the surface of a sculpture.

Textures also make it possible to reproduce small surface details that would be far too expensive to model with polygons: scars on skin, folds in clothing, small stones and other objects on walls and the ground.

The quality of a textured surface is determined by its texels (texture elements, the individual pixels of the texture image), in particular by how many texels fall on each unit of the surface. Since a texture is itself an image, its resolution and format play a large role, and they affect both the speed and the quality of graphics in the application.

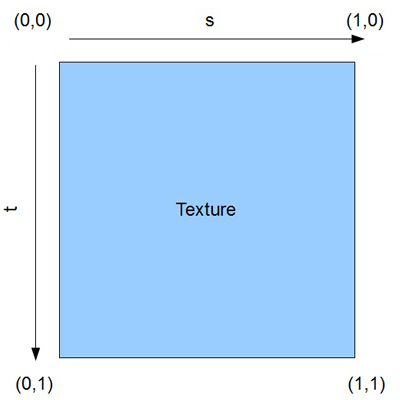

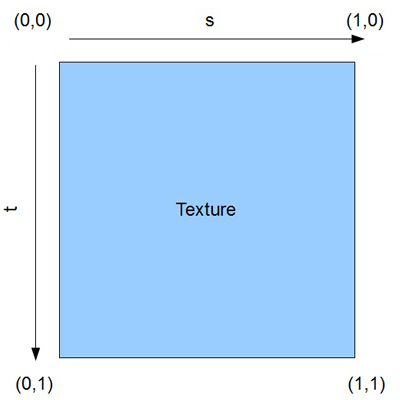

Texture coordinates

In OpenGL, texture coordinates are usually written as (s, t) or (u, v) instead of (x, y). A pair (s, t) addresses a point (texel) of the texture, which is then mapped onto the polygon.

In most computer image coordinate systems the Y axis points down and the X axis points right, so the upper left corner of the image is at (0, 0).

Keep in mind that some Android devices only work with textures whose sides are powers of two (2^n pixels). So aim for texture atlases with suitable pixel sizes, such as 512x512 or 1024x512. Also, if you do not use a POT texture (POT stands for Power Of Two), you will not be able to use tiling or automatic mipmap generation; tiling here means repeating a single texture many times across a surface. Remember that the bottom right corner of a texture always has coordinates (1, 1), even if its width is twice its height. These are called normalized coordinates.
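A small check can catch a non-power-of-two atlas before it is uploaded. The sketch below is illustrative and the class name `TextureSizeCheck` is hypothetical. It reflects the core OpenGL ES 2.0 rule (without extensions) that a non-power-of-two texture must use `GL_CLAMP_TO_EDGE` wrapping and a minification filter without mipmaps, which is exactly why tiling and mipmap generation are lost.

```java
final class TextureSizeCheck {
    private TextureSizeCheck() {}

    /** True if n is a positive power of two (1, 2, 4, ..., 512, 1024, ...). */
    static boolean isPowerOfTwo(int n) {
        // A power of two has exactly one bit set; n & (n - 1) clears that bit, leaving zero.
        return n > 0 && (n & (n - 1)) == 0;
    }

    /** True if both sides are powers of two, so GL_REPEAT tiling and mipmaps are allowed in plain ES 2.0. */
    static boolean isPotTexture(int width, int height) {
        return isPowerOfTwo(width) && isPowerOfTwo(height);
    }

    public static void main(String[] args) {
        System.out.println(isPotTexture(1024, 512)); // true
        System.out.println(isPotTexture(1000, 512)); // false
    }
}
```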

Applications often use many small textures, and switching from one texture to another is relatively slow. In such cases it is better to use one large image instead of many small ones. Such an image is called a texture atlas. Sub-textures are mapped onto an object with a UV transform, and the coordinates within the atlas determine which part of the image is used.

Since our application uses three textures (sky, sea and dolphin), they are combined into a single 1024x1024 PNG atlas.

As you can see, I added another dolphin image (in the lower right corner), so you can experiment by using it instead of the one on the left. This atlas is poorly packed, since a lot of space is left unused. There are algorithms and programs that pack images optimally, for example, like the one in the picture.
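Turning a sub-image's pixel rectangle into normalized coordinates is just a division by the atlas size. A minimal sketch, assuming each image occupies a 512x512 quarter of the 1024x1024 atlas (which is what the texture coordinates used later in this lesson suggest); `AtlasRegion` is a hypothetical helper, not part of the lesson's sources.

```java
final class AtlasRegion {
    // Normalized corners: top-left (s0, t0) and bottom-right (s1, t1).
    final float s0, t0, s1, t1;

    private AtlasRegion(float s0, float t0, float s1, float t1) {
        this.s0 = s0; this.t0 = t0; this.s1 = s1; this.t1 = t1;
    }

    /**
     * Converts a pixel rectangle (x, y, width, height) inside an atlas of
     * atlasWidth x atlasHeight pixels into normalized texture coordinates.
     * y is measured downward from the top edge, as in the image itself.
     */
    static AtlasRegion fromPixels(int x, int y, int width, int height,
                                  int atlasWidth, int atlasHeight) {
        return new AtlasRegion(
                (float) x / atlasWidth,
                (float) y / atlasHeight,
                (float) (x + width) / atlasWidth,
                (float) (y + height) / atlasHeight);
    }

    @Override
    public String toString() {
        return "(" + s0 + ", " + t0 + ") - (" + s1 + ", " + t1 + ")";
    }

    public static void main(String[] args) {
        // Sky: assumed to be the top-left quarter of the atlas.
        System.out.println(fromPixels(0, 0, 512, 512, 1024, 1024));     // (0.0, 0.0) - (0.5, 0.5)
        // Second dolphin: assumed to be the bottom-right quarter.
        System.out.println(fromPixels(512, 512, 512, 512, 1024, 1024)); // (0.5, 0.5) - (1.0, 1.0)
    }
}
```

Swapping dolphins then amounts to replacing the dolphin's texture coordinates with the second region's corners.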

The weight (memory footprint) of a texture is its width in pixels times its height in pixels times the number of bytes per pixel. So a 32-bit (4 bytes per pixel) texture of 1024 by 1024 takes 4 * 1024 * 1024 = 4,194,304 bytes, i.e. 4 MB.

A 16-bit 1024x1024 texture takes only 2 MB, so it is worth considering whether you really need 32-bit images.
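The same arithmetic as a tiny helper (hypothetical, for illustration only). It counts a single uncompressed level without mipmaps; `RGBA_8888` and `RGB_565` in the comments are the Android `Bitmap.Config` formats with 32 and 16 bits per pixel.

```java
final class TextureMemory {
    private TextureMemory() {}

    /** Bytes taken by one uncompressed texture level: width * height * bytes per pixel. */
    static long sizeInBytes(int width, int height, int bitsPerPixel) {
        // long arithmetic so that very large atlases cannot overflow an int.
        return (long) width * height * (bitsPerPixel / 8);
    }

    public static void main(String[] args) {
        System.out.println(sizeInBytes(1024, 1024, 32)); // 4194304 (4 MB), e.g. RGBA_8888
        System.out.println(sizeInBytes(1024, 1024, 16)); // 2097152 (2 MB), e.g. RGB_565
    }
}
```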

There is also hardware texture compression, which usually reduces texture size about fourfold. However, these questions are not central to this lesson; they are just food for thought.

In this lesson we will use only the GL_TEXTURE_2D target, which lets us put a texture on a plane. (There is also GL_TEXTURE_CUBE_MAP, which works with a texture made of the 6 square faces of an unfolded cube.)

How do we put a texture on a shape?

Before applying a texture, let's decide what we are applying it to.

One rectangle (made of two triangles) will lie in the x0y plane, and we will put the sky texture on it. To do this, in the private void prepareData() method of the OpenGLRenderer class, we create the coordinate array float[] vertices, in which we enter not only the coordinates of the triangle vertices but also the corresponding texture coordinates.

```java
//coordinates for sky
-2, 4, 0,   0, 0,
-2, 0, 0,   0, 0.5f,
 2, 4, 0,   0.5f, 0,
 2, 0, 0,   0.5f, 0.5f,
```

The first three numbers of each line are the vertex coordinates; in the first line this is the upper left corner of the sky (-2, 4, 0). The next two numbers are the texture coordinates (0, 0) that correspond to this vertex. Look at the second point (second line), which is the lower left corner of the sky (-2, 0, 0): its texture coordinates are (0, 0.5f), i.e. s = 0 (the left edge of the texture) and t = 0.5, because the sky image takes up only half of the atlas vertically (and half horizontally, since s only goes up to 0.5). Then we set the third point (the upper right corner of the sky) and the fourth point (the lower right corner) so that two triangles are drawn with the GL_TRIANGLE_STRIP method (see the previous lesson).
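To see why four vertices are enough for the rectangle, here is a sketch that lists which vertices GL_TRIANGLE_STRIP groups into triangles: every new vertex forms a triangle with the two vertices before it. `TriangleStrip` is a hypothetical helper, and the sketch ignores winding order (front and back facing).

```java
import java.util.ArrayList;
import java.util.List;

final class TriangleStrip {
    private TriangleStrip() {}

    /** Vertex-index triples a strip of vertexCount vertices produces (winding order ignored). */
    static List<int[]> triangles(int vertexCount) {
        List<int[]> result = new ArrayList<>();
        // Each vertex from the third one onward closes a triangle with its two predecessors.
        for (int i = 0; i + 2 < vertexCount; i++) {
            result.add(new int[] {i, i + 1, i + 2});
        }
        return result;
    }

    public static void main(String[] args) {
        // Sky quad: 0 = top-left, 1 = bottom-left, 2 = top-right, 3 = bottom-right.
        for (int[] t : triangles(4)) {
            System.out.println(t[0] + " " + t[1] + " " + t[2]);
        }
        // prints "0 1 2" and "1 2 3": the two triangles that cover the rectangle
    }
}
```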

At first I made the second plane (the sea) perpendicular to the first (the sky), but then I slightly increased the angle between them, lowering the front edge of the sea so that the view on the device looks nicer.

```java
//coordinates for sea
-2, 0, 0,   0.5f, 0,
-2,-1, 2,   0.5f, 0.5f,
 2, 0, 0,   1, 0,
 2,-1, 2,   1, 0.5f,
```

Notice how the texture coordinates that cut the sea out of the atlas have changed: s now runs from 0.5 to 1, so the sea comes from the upper right quarter of the atlas. I placed the dolphin image on a plane that is parallel to the sky and shifted toward us along the 0Z axis by 0.5 units.

```java
//coordinates for dolphin
-1, 1, 0.5f,   0, 0.5f,
-1,-1, 0.5f,   0, 1,
 1, 1, 0.5f,   0.5f, 0.5f,
 1,-1, 0.5f,   0.5f, 1,
```

If you want to swap the dolphin for the other one in the atlas, this is where to do it. With that, we have completed the first step: we have matched the vertices of the triangles to points of the texture.
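The three blocks above are parts of the single array float[] vertices, five floats per vertex (x, y, z, s, t). A sketch of how such interleaved data is commonly packed for OpenGL ES, assuming the lesson's order sky, sea, dolphin; `VertexLayout` and the attribute-location names in the comments are hypothetical. The runnable part uses only java.nio; the GLES20 calls are left as comments because they need an Android GL context.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

final class VertexLayout {
    static final int POSITION_COUNT = 3;  // x, y, z
    static final int TEXTURE_COUNT = 2;   // s, t
    static final int BYTES_PER_FLOAT = 4;
    // Bytes from the start of one vertex to the start of the next.
    static final int STRIDE = (POSITION_COUNT + TEXTURE_COUNT) * BYTES_PER_FLOAT;

    // Sky, sea and dolphin quads from the lesson, 4 vertices each.
    static final float[] VERTICES = {
            -2, 4, 0,      0, 0,
            -2, 0, 0,      0, 0.5f,
             2, 4, 0,      0.5f, 0,
             2, 0, 0,      0.5f, 0.5f,

            -2, 0, 0,      0.5f, 0,
            -2, -1, 2,     0.5f, 0.5f,
             2, 0, 0,      1, 0,
             2, -1, 2,     1, 0.5f,

            -1, 1, 0.5f,   0, 0.5f,
            -1, -1, 0.5f,  0, 1,
             1, 1, 0.5f,   0.5f, 0.5f,
             1, -1, 0.5f,  0.5f, 1,
    };

    private VertexLayout() {}

    /** Copies the array into a direct buffer in native byte order, the form OpenGL ES reads. */
    static FloatBuffer toBuffer(float[] vertices) {
        FloatBuffer buffer = ByteBuffer
                .allocateDirect(vertices.length * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(vertices);
        buffer.position(0);
        return buffer;
    }

    public static void main(String[] args) {
        FloatBuffer data = toBuffer(VERTICES);
        int vertexCount = VERTICES.length / (POSITION_COUNT + TEXTURE_COUNT);
        System.out.println("stride = " + STRIDE + " bytes, vertices = " + vertexCount);
        // prints "stride = 20 bytes, vertices = 12"

        // On Android the attributes would then be described like this (not runnable here):
        // data.position(0);
        // GLES20.glVertexAttribPointer(aPositionLocation, POSITION_COUNT, GLES20.GL_FLOAT, false, STRIDE, data);
        // data.position(POSITION_COUNT);
        // GLES20.glVertexAttribPointer(aTextureLocation, TEXTURE_COUNT, GLES20.GL_FLOAT, false, STRIDE, data);
        // and each quad drawn with glDrawArrays(GL_TRIANGLE_STRIP, first, 4), first = 0, 4, 8.
    }
}
```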

Step two, or how textures are loaded

Before describing how textures are loaded, we need to introduce the concept of a texture slot (called a texture unit in the OpenGL documentation). A texture is attached to a slot, and through the slot we can manipulate the texture and change its parameters.

You select the current (active) slot like this:

```java
GLES20.glActiveTexture(GLES20.GL_TEXTUREx);
```

where GLES20.GL_TEXTUREx is the number of the selected slot, for example GLES20.GL_TEXTURE0. Constants are defined for 32 slots, up to GL_TEXTURE31.

To attach a texture to the slot, call

```java
GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, texture_id);
```

The first parameter is the texture target (its type), the second is the texture ID.

This call attaches the texture to the current slot, the one previously selected with GLES20.glActiveTexture().

So, to attach a texture to a specific slot, you make two calls:

```java
GLES20.glActiveTexture(GLES20.GL_TEXTUREx);
GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, texture_id);
```

It is important to keep track of which texture is bound to which slot. Each slot holds one 2D texture at a time, so binding a new texture to a slot replaces the previous one there; binding a texture to one slot does not, however, unbind it from other slots. If a shader samples from a slot that has no complete texture bound, OpenGL ES 2.0 returns opaque black (0, 0, 0, 1) instead of the image you expect.
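Slot constants are consecutive, so a slot number can be turned into the glActiveTexture() argument arithmetically. The sketch below is hypothetical; it hard-codes the value of GL_TEXTURE0 (0x84C0) so that it runs without Android. Keep in mind that although constants exist up to GL_TEXTURE31, a device may support fewer slots: OpenGL ES 2.0 guarantees only 8, and the real number can be queried with glGetIntegerv(GL_MAX_COMBINED_TEXTURE_IMAGE_UNITS, ...).

```java
final class TextureUnits {
    // Value of GL_TEXTURE0 in the OpenGL ES 2.0 headers (GLES20.GL_TEXTURE0 on Android);
    // GL_TEXTURE1 ... GL_TEXTURE31 follow it consecutively.
    static final int GL_TEXTURE0 = 0x84C0;

    private TextureUnits() {}

    /**
     * Returns the value to pass to glActiveTexture() for the given slot number.
     * maxUnits is the device's GL_MAX_COMBINED_TEXTURE_IMAGE_UNITS (at least 8 in ES 2.0).
     */
    static int activeTextureEnum(int slot, int maxUnits) {
        if (slot < 0 || slot >= maxUnits) {
            throw new IllegalArgumentException(
                    "slot " + slot + " is outside 0.." + (maxUnits - 1));
        }
        return GL_TEXTURE0 + slot;
    }

    public static void main(String[] args) {
        System.out.println(Integer.toHexString(activeTextureEnum(0, 8)));   // 84c0
        System.out.println(Integer.toHexString(activeTextureEnum(31, 32))); // 84df, i.e. GL_TEXTURE31
    }
}
```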

As soon as we put our image file texture.png into the project's drawable resource folder, the build system assigned it an ID: an integer that refers to this resource (here R.drawable.texture). Resource IDs are stored in the generated R.java file.

The TextureUtils class has a loadTexture method. It takes the resource ID of an image as input and returns the ID of the created texture object that will hold this image.

First, the method receives the context and the resource ID of the image file as arguments:

```java
public static int loadTexture(Context context, int resourceId) {
```

Then we create an empty one-element array called textureIds. OpenGL ES will write a free texture name (ID) into it:

```java
final int[] textureIds = new int[1];
```

Then we generate a free name for the texture, which will be written to textureIds[0]:

```java
glGenTextures(1, textureIds, 0);
```

The first parameter sets how many texture objects we want to create; usually just one. The second is the array into which OpenGL ES writes the IDs of the generated texture objects. The last parameter tells OpenGL ES at which position in the array to start writing IDs.

If nothing was written (the ID is still 0), we return zero:

```java
if (textureIds[0] == 0) {
    return 0;
}
```

The inScaled flag is enabled by default, which makes Android scale the bitmap to the screen density. We disable it because we need the image at its original size; scaling could, for example, turn a 1024x1024 atlas into a size that is not a power of two:

```java
final BitmapFactory.Options options = new BitmapFactory.Options();
options.inScaled = false;
```

We load the image from the resource into a Bitmap:

```java
final Bitmap bitmap = BitmapFactory.decodeResource(
        context.getResources(), resourceId, options);
```

The texture object is still empty: it has no image data yet. To load our bitmap into it, we first need to bind the texture. In OpenGL ES, binding an object means that OpenGL ES will use this particular object for all subsequent calls until the binding is changed again. Here we want to bind the texture object, so we use the glBindTexture() method. Once the texture is bound, we can manage its properties, such as its image data.

Select the active texture slot:

```java
glActiveTexture(GL_TEXTURE0);
```

Make the texture named textureIds[0] current:

```java
glBindTexture(GL_TEXTURE_2D, textureIds[0]);
```

Enable transparency. Without these two lines, our dolphin will sit on a black opaque background, as in the screenshot above:

```java
GLES20.glBlendFunc(GLES20.GL_SRC_ALPHA, GLES20.GL_ONE_MINUS_SRC_ALPHA);
GLES20.glEnable(GLES20.GL_BLEND);
```

Note that blending is global rendering state rather than a property of the texture, so these calls affect everything drawn afterwards, not just this texture.

There is one more thing to set before we can use the texture object. A triangle can cover more or fewer pixels on the screen than there are texels in the corresponding region of the texture. When it covers more screen pixels than the texture region has texels, the texture has to be enlarged: this is magnification. When it covers fewer, the texture has to be shrunk: this is minification. In each case we need to tell OpenGL ES how to enlarge or shrink the texture; in OpenGL ES terminology, these mechanisms are the magnification and minification filters. Like the image data, the filters are properties of the texture object, so the texture object must be bound with glBindTexture() before they are set. Then we set them as follows:

```java
GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_LINEAR);
GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
```

You can read more about how filters affect the image here.
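To make the difference between the two common filters concrete, here is a one-dimensional model of GL_NEAREST and GL_LINEAR. It is a simplification: the real GL_LINEAR blends four texels in 2D, while this sketch uses one row and clamps at the edges; `FilterDemo` is hypothetical. Setting GL_TEXTURE_MIN_FILTER matters for another reason too: its default in OpenGL ES 2.0 is GL_NEAREST_MIPMAP_LINEAR, so a texture without mipmaps that keeps the default is incomplete and renders black.

```java
final class FilterDemo {
    private FilterDemo() {}

    /** GL_NEAREST-style lookup: take the single texel whose area contains s. */
    static float sampleNearest(float[] texels, float s) {
        int n = texels.length;
        int i = (int) Math.floor(s * n);
        return texels[clamp(i, 0, n - 1)];
    }

    /** GL_LINEAR-style lookup: blend the two texels whose centers surround s. */
    static float sampleLinear(float[] texels, float s) {
        int n = texels.length;
        float u = s * n - 0.5f;              // texel centers sit at (i + 0.5) / n
        int i0 = (int) Math.floor(u);
        float frac = u - i0;                 // weight of the right-hand texel
        float a = texels[clamp(i0, 0, n - 1)];
        float b = texels[clamp(i0 + 1, 0, n - 1)];
        return a + (b - a) * frac;
    }

    private static int clamp(int v, int lo, int hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    public static void main(String[] args) {
        float[] row = {0f, 100f};                     // a 2-texel "texture"
        System.out.println(sampleNearest(row, 0.5f)); // 100.0
        System.out.println(sampleLinear(row, 0.5f));  // 50.0
    }
}
```

Under magnification GL_NEAREST produces visible blocks, while GL_LINEAR produces smooth gradients between texels.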

Copy the Bitmap into video memory:

```java
GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);
```

Release the Bitmap, since the image has already been copied to video memory:

```java
bitmap.recycle();
```

Finally, we call glBindTexture again, this time passing 0 for the GL_TEXTURE_2D target of the current slot. This unbinds our texture object from the slot. The method then returns the texture ID:

```java
    glBindTexture(GL_TEXTURE_2D, 0);
    return textureIds[0];
}
```

To recap: we first bound the texture object to the GL_TEXTURE_2D target of the slot,

```java
glBindTexture(GL_TEXTURE_2D, textureIds[0]);
```

then performed all the operations with it, and then freed the slot. As a result, the texture object is configured, ready to use, and not bound to any texture slot.

Shader texture access

In previous lessons we wrote the shaders directly in the program code as string literals. It is more convenient to move them into separate resources, as suggested here. So we create a raw folder inside the project's res folder and put two files in it: vertex_shader.glsl and fragment_shader.glsl. Here are their contents.

vertex_shader.glsl

```glsl
attribute vec4 a_Position;
uniform mat4 u_Matrix;
attribute vec2 a_Texture;
varying vec2 v_Texture;

void main() {
    gl_Position = u_Matrix * a_Position;
    v_Texture = a_Texture;
}
```

Here, as before, we compute the final position (gl_Position) of each vertex using the matrix. The a_Texture attribute receives the texture coordinates, which we immediately write to the varying variable v_Texture. This gives the fragment shader interpolated texture coordinates.
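The interpolation of v_Texture can be modeled on the CPU: the value a fragment receives is a weighted blend of the three vertex values, with weights that sum to 1. A simplified sketch, using the sky's texture coordinates; `VaryingInterpolation` is hypothetical, and real GPUs additionally apply perspective correction, which is omitted here.

```java
final class VaryingInterpolation {
    private VaryingInterpolation() {}

    /**
     * Blends per-vertex texture coordinates (s, t) of a triangle with barycentric
     * weights w0 + w1 + w2 = 1, as happens to v_Texture between the two shaders
     * (screen-space linear only; no perspective correction).
     */
    static float[] interpolate(float[] st0, float[] st1, float[] st2,
                               float w0, float w1, float w2) {
        return new float[] {
                w0 * st0[0] + w1 * st1[0] + w2 * st2[0],
                w0 * st0[1] + w1 * st1[1] + w2 * st2[1]
        };
    }

    public static void main(String[] args) {
        // Sky texture coordinates at its top-left, bottom-left and top-right vertices.
        float[] topLeft = {0f, 0f};
        float[] bottomLeft = {0f, 0.5f};
        float[] topRight = {0.5f, 0f};
        // A fragment halfway down the sky's left edge:
        float[] st = interpolate(topLeft, bottomLeft, topRight, 0.5f, 0.5f, 0f);
        System.out.println(st[0] + ", " + st[1]); // 0.0, 0.25
    }
}
```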

fragment_shader.glsl

```glsl
precision mediump float;
uniform sampler2D u_TextureUnit;
varying vec2 v_Texture;

void main() {
    gl_FragColor = texture2D(u_TextureUnit, v_Texture);
}
```

First, we set medium precision for floating-point calculations. In a GLSL ES fragment shader, float has no default precision, so this line is required:

```glsl
precision mediump float;
```

There is a special kind of uniform in GLSL called sampler2D. Samplers are normally used in the fragment shader. (An OpenGL ES 2.0 vertex shader may also declare them, but a device is allowed to support zero texture units in the vertex stage.)

```glsl
uniform sampler2D u_TextureUnit;
```

This declares the uniform variable u_TextureUnit, which receives the number of the slot that holds the texture we need. Note the type of the variable: let me remind you that from the application we passed 0 to it as an integer. That is, the number passed to the shader (0 in our case) tells it which texture slot to read.

The varying variable v_Texture receives interpolated texture coordinates from the vertex shader, so the shader knows which point of the texture to display at the current point of the triangle.

It remains to combine the texture coordinates and the texture itself to get the final fragment. The texture2D function does this, and gl_FragColor receives the color of the corresponding point of the texture.

You can download the sources here. Good luck and all the best!

Main sources:

» www.opengl.org/sdk/docs/

» startandroid.ru

» andmonahov.blogspot.com

» developer.android.com/reference

» www.opengl.org

» www.learnopengles.com

» w3bsit3-dns.com/forum/lofiversion

» developer.android.com/guide

Source: https://habr.com/ru/post/309138/
