Sort a huge file with an array with a known data dictionary

Hi Habr! Recently came an interesting assignment:

The laziest way to sort can be using "external sorting with merge", but this is a very long and hard method. In this publication I will tell you what method occurred to me - I could not help but share it.

So, I was confused by the data dictionary: from 1 to 10,000 and at the same time, limited resources. Decided to do the following. To begin with, we will generate the input file we need, without writing it with our hands. For this, I used the good old rnd:

Next, the sorting itself:

')

1. Create an array int [10000]. In RAM, it will take 40 MB. In C # (a little more in other languages). I will call it a RAM array, the original array is an IN array , and the output array is an OUT array .

Why 10,000? Because the data dictionary we have in the range from 1 to 10,000.

2. Read the source file element by element (line by line in my case) and do the following with each record:

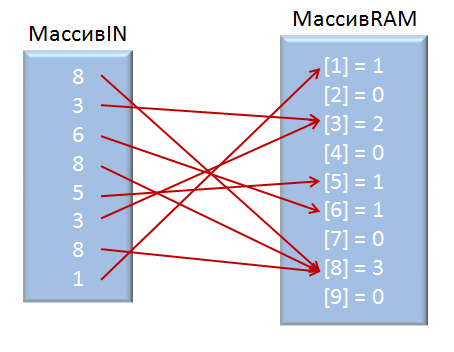

That is, in the element of the RAM array with the index equal to the value read from the array, we make +1. As a result, the RAM array will contain the number of repetitions of each element from the array IN .

He visually tried to portray in the figure:

3. And the last stage we run through our RAM array and write down in turn all the indices of the RAM array elements by the number of their repetitions:

Thus, the file size of 575MB with 100,000,000 entries was sorted and recorded in 7.53 seconds on the machine: AMD A10, 6GB, SATA. Without greed to memory and resources.

Finally I want to say that you need to use this method carefully , avoiding type overflow. That is, if we take an array of 5,000,000,000 values, even with the same data dictionary, and assume that all the values in it are the same number, then an overflow of the int type will occur, and the program will throw an exception.

I think it makes no sense to lay out the whole project, but if you have any wishes, I’ll post it. Successful development!)

There is a multi-gigabyte file containing an array of integers from 1 to 10,000. The elements are arranged chaotically with repetitions. It is necessary to sort it. Take into account resource constraints.

The laziest way to sort can be using "external sorting with merge", but this is a very long and hard method. In this publication I will tell you what method occurred to me - I could not help but share it.

So, I was confused by the data dictionary: from 1 to 10,000 and at the same time, limited resources. Decided to do the following. To begin with, we will generate the input file we need, without writing it with our hands. For this, I used the good old rnd:

System.IO.StreamWriter file = new System.IO.StreamWriter(@"C:\1\Nabor.txt", true); // Random rnd = new Random(); this.progressBar1.Maximum = Convert.ToInt32(this.textBox1.Text); // int value; for (int i = 0;i<Convert.ToInt32(this.textBox1.Text);i++) // { value = rnd.Next(0, 10000); file.WriteLine(value); // this.progressBar1.Value++; } file.Close(); Next, the sorting itself:

')

1. Create an array int [10000]. In RAM, it will take 40 MB. In C # (a little more in other languages). I will call it a RAM array, the original array is an IN array , and the output array is an OUT array .

Why 10,000? Because the data dictionary we have in the range from 1 to 10,000.

2. Read the source file element by element (line by line in my case) and do the following with each record:

string line; // int[] arr; arr = new int[10000]; // System.IO.StreamReader file2 = new System.IO.StreamReader(@"C:\1\Nabor.txt"); while ((line = file2.ReadLine()) != null) { arr[Convert.ToInt32(line)]++; // } file2.Close(); That is, in the element of the RAM array with the index equal to the value read from the array, we make +1. As a result, the RAM array will contain the number of repetitions of each element from the array IN .

He visually tried to portray in the figure:

3. And the last stage we run through our RAM array and write down in turn all the indices of the RAM array elements by the number of their repetitions:

System.IO.StreamWriter file3 = new System.IO.StreamWriter(@"C:\1\Sort.txt", true); int i, j; for (i=0;i<10000;i++) { if (arr[i]>0) // { for (j = 0; j < arr[i]; j++) // , = RAM { file3.WriteLine(i); // , } } } file3.Close(); Thus, the file size of 575MB with 100,000,000 entries was sorted and recorded in 7.53 seconds on the machine: AMD A10, 6GB, SATA. Without greed to memory and resources.

Finally I want to say that you need to use this method carefully , avoiding type overflow. That is, if we take an array of 5,000,000,000 values, even with the same data dictionary, and assume that all the values in it are the same number, then an overflow of the int type will occur, and the program will throw an exception.

I think it makes no sense to lay out the whole project, but if you have any wishes, I’ll post it. Successful development!)

Source: https://habr.com/ru/post/308978/

All Articles