Refactoring a bank's IT infrastructure, and how we got the IT team and the information security team to be friends

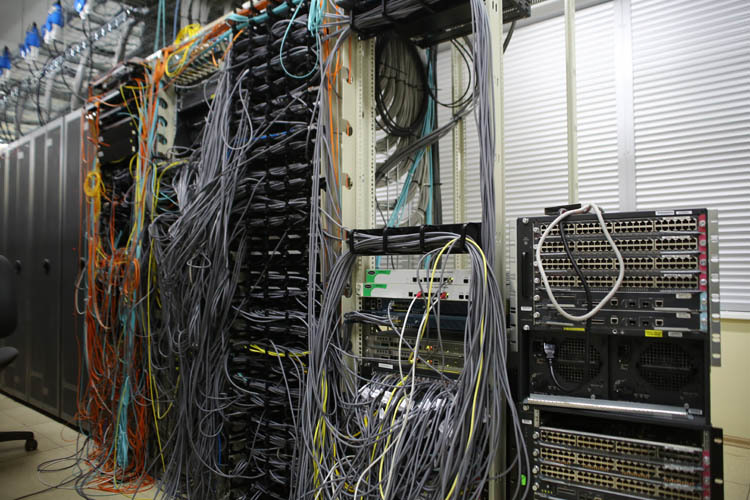

A bank, the Russian branch of a large European banking group, set us the task of segmenting its network. Its servers were hosted in our data centers. When the work began, the customer had a separate infrastructure for the financial transactions themselves and, physically separated from it, a large flat (unsegmented) user network spread across a couple of large sites and many branches. The first ran like clockwork; the second was troublesome. On top of that, an internal reorganization had begun to meet the requirements of Federal Law 152-FZ on personal data.

To work out how best to organize the new network, we asked for a mapping of IP addresses to the services running on them. More precisely, what we ultimately needed was a map of what traffic goes where, so that it would be clear what to allow and what to block.


At this point the security team said they could not provide such documents. They suggested instead that we build the traffic map ourselves: first, they were genuinely interested in the actual picture of what was happening on the network, and second, a description of the traffic and applications on the network existed only in their plans. Simply put, our arrival was a great opportunity for them to bring the network's traffic map up to date. It seems it had often happened that IT connected something and forgot to tell information security (IS) about it.

So we set about picking apart all of the network traffic.

The first stage: "friend or foe"

We had brought in the equipment that would separate the "good" traffic from the "stray" traffic a little in advance and connected it in mirroring mode. This is a standard feature that lets you check beforehand, on a full copy of the traffic, whether the settings work correctly. In this mode you can see what would be cut off and what would not. In our case there was nothing to cut off yet: first we had to identify the applications, collect statistics, and label the transactions.

We agreed to go live once 95% of the traffic over a two-week period was covered by the rules. The remainder (as a rule, small one-off transactions of a few kilobytes) numbered in the thousands and was hard to classify. One could spend a lifetime studying this traffic: it behaved like an infinite non-repeating decimal, changing constantly. The assumption was that if something went wrong with these odds and ends (in the user subnet, remember), it would simply need a new firewall rule. All of the described traffic was added to the firewall's allow rules. Anything new that appeared would be blocked by default and handed to the IT team and the information security specialists for analysis.
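
The go-live criterion and the default-deny behavior described above can be sketched roughly as follows. This is a minimal illustration, not the project's code: the `Flow` record, the rule key `(src, dst, proto, port)`, the function names, and the choice to measure coverage in bytes are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One observed connection (hypothetical record layout)."""
    src: str
    dst: str
    proto: str
    port: int
    nbytes: int


def is_allowed(flow, allow_rules):
    """Default deny: a flow passes only if an explicit allow rule matches."""
    return (flow.src, flow.dst, flow.proto, flow.port) in allow_rules


def coverage(flows, allow_rules):
    """Fraction of observed traffic volume (in bytes) covered by allow rules."""
    total = sum(f.nbytes for f in flows)
    if total == 0:
        return 0.0
    covered = sum(f.nbytes for f in flows if is_allowed(f, allow_rules))
    return covered / total


def ready_for_cutover(flows, allow_rules, threshold=0.95):
    """True once the agreed share of traffic is described by the rules."""
    return coverage(flows, allow_rules) >= threshold
```

Anything that `is_allowed` rejects is exactly the "new" traffic that would be blocked and passed to IT and IS for review.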

We started collecting information about who talks to whom and over which protocols. By the end of the first week we had a table of IP addresses and the traffic volumes for them. We began tracking typical connections and, in general, building a complete profile of the network. Each connection that we identified as the behavior of some application was then labeled by the IT team (at our request) as a particular known service. In 99% of cases this was done successfully and correctly.
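
A table of this kind can be built by summing volumes per conversation. The sketch below assumes the mirrored traffic has already been reduced to hypothetical `(src, dst, proto, port, nbytes)` records; how the records are obtained from the firewall is not shown and is not described in the article.

```python
from collections import Counter


def traffic_table(records):
    """Sum bytes per (src, dst, proto, port); heaviest conversations first."""
    volumes = Counter()
    for src, dst, proto, port, nbytes in records:
        volumes[(src, dst, proto, port)] += nbytes
    return volumes.most_common()
```

Sorting by volume puts the conversations that matter most for coverage at the top, which is where labeling by the IT team pays off first.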

The zoo held many surprises. More importantly, even large volumes of traffic behaved non-periodically, and some services did not show up right away. Only after 4 weeks did the table reach 95% coverage of a week's traffic, and after three months we got to the point where new weeks brought nothing unexpected.

In a week, roughly a terabit of traffic passed through the firewall. Its character changed fairly often, and as traffic was identified, new sets of rules were constantly needed. For example, for several weeks we saw no traffic to one group of IP addresses, and then it suddenly appeared. It turned out to be a server with video surveillance data: most likely someone somewhere in our vast country had messed up, and the central office spent a few weeks searching through the footage. Naturally, this put a load on the network that we had not seen before. Another branch dumped its file storage to backup once every few weeks, so a single week of observation could easily miss this operation, important as it was to the branch.

The security officers divided the services into critical and less critical ones, that is, they set the risk priorities.
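
One way to see when a profile like this has stabilized is to list, week by week, the flows that have never been seen before. The sketch below is an illustration under assumptions: each week is given as a collection of hashable flow keys, and the function name is hypothetical.

```python
def new_flows_per_week(weekly_flows):
    """For each week, return the flow keys never seen in any earlier week."""
    seen = set()
    report = []
    for week in weekly_flows:
        current = set(week)
        report.append(current - seen)
        seen |= current
    return report
```

Rare events such as a backup that runs every few weeks show up as a late non-empty entry, which is why a single week of observation is not enough.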

The second stage: how to divide

The result was a large matrix that in effect contained the firewall rules. Incidentally, we wrote a very handy script that took the XLS file, once the information security and IT teams had signed off on what was what, and generated a rule set ready to be applied on the firewall. If they put a plus in the Excel spreadsheet, the traffic was allowed; if they put a minus, we blocked it.

When the predicted 95% traffic coverage began to match the actual figure (on the mirrored connection), it became clear that the traffic identification work was done. The security team received a set of documents describing what goes where (and seemed very glad that someone had sorted it all out for them). The IT department was also very pleased that the target had been reached in a reasonable time.
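
The article does not show the script, so the following is only a sketch of the idea. To stay within the standard library it assumes the spreadsheet has been exported to CSV, with destinations in the header row, sources in the first column, and "+" or "-" in each cell. The layout, the function name, and the `(source, destination, action)` output format are assumptions, not the real firewall syntax.

```python
import csv
import io


def matrix_to_rules(csv_text):
    """Turn a +/- matrix into a list of (source, destination, action) rules.

    Assumed layout: the header row names destinations, the first column
    names sources, and each cell holds "+" (allow) or "-" (deny).
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    destinations = rows[0][1:]
    rules = []
    for row in rows[1:]:
        source, marks = row[0], row[1:]
        for destination, mark in zip(destinations, marks):
            mark = mark.strip()
            if mark not in ("+", "-"):
                # Refuse to guess: every cell must be explicitly signed off.
                raise ValueError(
                    f"unsigned cell for {source} -> {destination}: {mark!r}"
                )
            action = "allow" if mark == "+" else "deny"
            rules.append((source, destination, action))
    return rules
```

Rejecting cells that are neither "+" nor "-" keeps the sign-off explicit: a rule only exists if someone actually decided on it.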

Now the network had to be divided. There are several basic approaches in such cases:

- Classic, by geography: each office has its own subnet, these are combined into regional subnets, which in turn join the company-wide network.

- The legacy approach, that is, "following the existing network design". Sometimes this is rational, but for large companies it usually means a sea of kludges. Historically it may turn out, for example, that floors one through four of the Moscow office and St. Petersburg are one network, while the fifth floor and Yekaterinburg are another. And the third floor is allowed to use the fifth floor's printers.

- Simplified segmentation: top management and everything critical in one subnet, users in another.

- Atomic segmentation, where each subgroup gets its own subnet. For example, 2-3 people sit in a room and maintain only one thing: that is one subnet. Their neighbors are a second subnet, and so on. This is essentially paradise for the security team, since it makes controlling people as convenient as possible, but hell for those who have to support it.

- Functional segmentation: users are grouped by what they may and may not do (regardless of geography), which in practice yields 6-10 key roles.

The legacy and geographic approaches were dropped almost immediately, since the network naturally allowed addressing to be independent of the city, so that devices "saw" each other as if they were nearby. Simplified segmentation was too simple. That left the functional (role-based) approach and its extreme case, the atomic one. Naturally, the security team chose the maximum division into subnets. We ran an experiment and showed by how many hundreds of times the config would grow and what supporting it would take. They went away to think and came back with approval for the role-based scheme.
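
A back-of-the-envelope estimate shows why the config grows so fast. The sketch below is not the experiment from the project: it simply assumes the worst case, in which every ordered pair of segments needs its own policy decision, and the function names are made up for the illustration.

```python
def pair_count(segments):
    """Ordered (source, destination) segment pairs that may need a decision."""
    return segments * (segments - 1)


def growth_factor(role_segments, atomic_segments):
    """How many times the worst-case policy matrix grows from roles to atoms."""
    return pair_count(atomic_segments) / pair_count(role_segments)
```

With purely illustrative numbers, 8 roles give 56 pairs and 200 atomic subnets give 39,800, a growth of roughly 710 times.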

We brought in the HR department, which provided the staff list. Together with IT and IS they defined the role of each employee and assigned each a subnet, and this again went into the rules for the "big box". Then for another couple of weeks we watched the mirrored traffic to check that everything was handled correctly.

In addition to the "human" roles we had others: for example, printers in the "printers" group, servers in the "servers" group, and so on. Each resource was also assessed for its criticality to business processes. For example, file shares, DNS and RADIUS servers were rated normal, while servers whose failure would "bring down" accounting, say, were rated critical.
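
The mapping from an address to its group and criticality can be sketched with Python's standard `ipaddress` module. The group names, subnets and labels below are hypothetical, since the real addressing plan is not given in the article.

```python
import ipaddress

# Hypothetical role subnets and criticality labels (not from the article).
GROUPS = {
    "users-frontoffice": ("10.10.0.0/24", "normal"),
    "printers": ("10.20.0.0/24", "normal"),
    "servers-accounting": ("10.30.0.0/28", "critical"),
}


def classify(address, groups=GROUPS):
    """Return (group, criticality) for an IP address, or None if unassigned."""
    ip = ipaddress.ip_address(address)
    for name, (cidr, criticality) in groups.items():
        if ip in ipaddress.ip_network(cidr):
            return name, criticality
    return None
```

An address that falls into no group returns `None`, which in a role-based scheme is itself worth investigating.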

The third stage: implementation

Since every time we received new information we simply ran it through our miracle script from the human-readable XLS into firewall rules, we always had the most current version of the network policies. The firewall still sat in mirror mode, that is, it quietly let all traffic through but showed us, IT and IS, on the copy, what it would cut off in production mode. Once everyone involved was satisfied with everything, the IT team gave us a maintenance window over the weekend. Why the weekend? So that any surprise would not end in a press release.

There was no surprise: the cutover went as planned. We wrote the as-built documentation with the settings and handed the system over to operation.

Going back a little, there was only one small difficulty at this stage: the IT team considered the firewall their network node and believed they should be in charge of it. The security team saw it as an access control point (something like a physical access control system, but for data) and believed the device was entirely theirs. Fortunately, we configured both roles on the box: the security team does not touch the network configs, and the IT team cannot add new permissions without IS knowing. (In fact, it was exactly such cases that had left the security team without an up-to-date traffic map: many things were done "as a quick beta" behind their backs and stayed in production forever.)
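
The idea of mirror mode, reporting what would be dropped without dropping anything, can be illustrated as below. This is a sketch under assumptions, not the firewall's own mechanism: flows are hypothetical `(src_group, dst_group, nbytes)` tuples and the allow rules are a set of group pairs.

```python
from collections import Counter


def dry_run(flows, allow_rules):
    """Report what would be dropped in enforcing mode; nothing is blocked.

    flows: iterable of (src_group, dst_group, nbytes) from the mirrored copy.
    allow_rules: set of (src_group, dst_group) pairs that are permitted.
    """
    would_drop = Counter()
    for src, dst, nbytes in flows:
        if (src, dst) not in allow_rules:
            would_drop[(src, dst)] += nbytes
    return would_drop
```

An empty report over a representative period is the signal that switching to enforcing mode should hold no surprises.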

The final schedule is:

| Stage | Duration | Comment |
| --- | --- | --- |
| Preparation of technical and commercial proposals | 1 week | This is very fast for an integrator. |
| Signing the contract | 1 week | This is very fast for a bank. |
| Development of project documentation | 1 month | + time for coordination with the customer (this is |
| Installation and commissioning of equipment | 3 weeks | The work was carried out at 4 customer sites in agreed maintenance windows, which is why it took this long. |
| Trial operation | 3 months | Reaching the targets required an iterative process of tuning the rules on the firewall. |
| Final tests, commissioning | 1 day | Cutover |

Final architecture:

The main piece of hardware is the Palo Alto 3020. There are 6 of them across 4 sites: two in each large office (joined in a failover cluster) and one in each data center (these are our two data centers, which host the bank's IT infrastructure). In the data centers the firewalls are connected in transparent mode rather than clustered, since the architecture of the two data centers is in essence one large cluster already.

References:

- Refactoring of the Aeroexpress network (a good example for fast-growing companies)

- Tales of our team

- My email: AVrublevsky@croc.ru

The code of our script for converting customer requirements into network rules has since been passed around among our own engineers: a handy thing that saves time.

Source: https://habr.com/ru/post/308886/
