Path to http / 2

From the translator: here is a brief overview of the HTTP protocol and its history - from version 0.9 to version 2.

HTTP is a web threading protocol. Every web developer is obliged to know it. Understanding how HTTP works will help you make better web applications.

In this article we will discuss what HTTP is, and how it became exactly as we see it today.

What is HTTP?

So, to begin with, what is HTTP? HTTP is an application layer protocol implemented on top of the TCP / IP protocol. HTTP defines how the client and server interact with each other, how content is requested and transmitted over the Internet. By the application layer protocol, I understand that this is just an abstraction that standardizes how network nodes (clients and servers) interact with each other. HTTP itself depends on the TCP / IP protocol, which allows sending and sending requests between the client and the server. The default is TCP port 80, but others can be used. HTTPS, for example, uses port 443.

HTTP / 0.9 - the first standard (1991)

The first documented version of HTTP was HTTP / 0.9 , released in 1991. It was the simplest protocol in the world, with a single method - GET. If the client needed to receive any page on the server, he made a request:

GET /index.html And from the server came about this answer:

(response body) (connection closed) That's all. The server receives the request, sends the HTML in response, and as soon as all the content is transferred, closes the connection. There are no headers in HTTP / 0.9, the only method is GET, and the answer comes in HTML.

So, HTTP / 0.9 was the first step in all further history.

HTTP / 1.0 - 1996

Unlike HTTP / 0.9, which is designed only for HTML responses, HTTP / 1.0 handles other formats as well: images, videos, text, and other types of content. New methods have been added (such as POST and HEAD). The request / response format has changed. HTTP headers were added to requests and responses. Added status codes to distinguish between different server responses. Introduced support for encodings. Added composite data types (multi-part types), authorization, caching, various content encodings and much more.

Here is what a simple HTTP / 1.0 request and response looked like:

GET / HTTP/1.0 Host: kamranahmed.info User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_5) Accept: */* In addition to the request, the client sent personal information, the required type of response, etc. In HTTP / 0.9, the client would not send such information, since the headers simply did not exist.

An example of a response to a similar request:

HTTP/1.0 200 OK Content-Type: text/plain Content-Length: 137582 Expires: Thu, 05 Dec 1997 16:00:00 GMT Last-Modified: Wed, 5 August 1996 15:55:28 GMT Server: Apache 0.84 (response body) (connection closed) The beginning of the response is HTTP / 1.0 (HTTP and version number), then the status code is 200, then the description of the status code.

In the new version, the request and response headers were encoded in ASCII (HTTP / 0.9 was all encoded in ASCII), but the response body could be of any content type — image, video, HTML, plain text, etc. Now the server could send any the type of content to the client, so the phrase “Hyper Text” in the abbreviation of HTTP has become a distortion. HMTP, or Hypermedia Transfer Protocol, would probably become a more appropriate name, but by that time everyone was accustomed to HTTP.

One of the main disadvantages of HTTP / 1.0 is that you cannot send multiple requests during the same connection. If the client needs to get something from the server, it needs to open a new TCP connection, and as soon as the request is completed, the connection will be closed. For each subsequent request, you need to create a new connection.

Why is that bad? Let's assume that you open a page containing 10 images, 5 style files and 5 javascript files. In total, when requesting this page you need to get 20 resources - and this means that the server will have to create 20 separate connections. This number of connections significantly affects performance, as each new TCP connection requires a “triple handshake” followed by a slow start .

Triple handshake

"Triple handshake" is an exchange of a sequence of packets between the client and the server, allowing you to establish a TCP connection to start data transfer.

- SYN - The client generates a random number, for example, x , and sends it to the server.

- SYN ACK - The server confirms this request by sending back an ACK packet consisting of a random number selected by the server (say, y ) and the number x + 1 , where x is the number received from the client.

- ACK - the client increases the number of y received from the server and sends y + 1 to the server.

Translator's note : SYN - synchronization of sequence numbers, ( eng. Synchronize sequence numbers). ACK - the “Confirmation Number” field is enabled ( English Acknowledgment field is significant).

Only after the completion of the triple handshake begins the transfer of data between the client and the server. It is worth noting that the client can send data immediately after sending the last ACK packet, but the server still expects an ACK packet to complete the request.

Nevertheless, some HTTP / 1.0 implementations tried to overcome this problem by adding a new Connection: keep-alive header that would tell the server "Hey, buddy, don't close this connection, it will still come in handy for us." However, this possibility was not widespread, so the problem remained relevant.

In addition to the fact that HTTP is a connectionless protocol, it also does not provide state support. In other words, the server does not store information about the client, so each request has to include all the information the server needs, regardless of past requests. And this only adds fuel to the fire: in addition to the huge number of connections that the client opens, he also sends repeated data, overloading the network unnecessarily.

HTTP / 1.1 - 1999

Three years have passed since the days of HTTP / 1.0, and in 1999 a new version of the protocol was released - HTTP / 1.1, including many improvements:

- New HTTP methods - PUT, PATCH, HEAD, OPTIONS, DELETE.

- Identification of hosts. In HTTP / 1.0, the Host header was optional, and HTTP / 1.1 made it so.

- Permanent connections. As mentioned above, in HTTP / 1.0 one connection processed only one request and then immediately closed after that, which caused serious performance problems and problems with delays. Permanent connections appeared in HTTP / 1.1, i.e. connections that did not close by default, while remaining open to multiple consecutive requests. To close the connection, it was necessary to add the Connection: close header when prompted. Clients typically sent this header in the last request to the server to safely close the connection.

- Streaming data, in which the client can send multiple requests to the server within a connection without waiting for responses, and the server sends responses in the same sequence in which requests are received. But, you might ask, how does a client know when one answer ends and another begins? To resolve this task, a Content-Length header is set, with which the client determines where one response ends and the next one can be expected.

Note that in order to experience the benefits of persistent connections or streaming data, the Content-Length header must be available in the response. This will allow the client to understand when the transfer is complete and it will be possible to send the next request (in the case of normal sequential requests) or start waiting for the next answer (in the case of streaming).

But there were still problems with this approach. What if the data is dynamic and the server cannot find out the length of the content before sending it? It turns out, in this case, we can not use permanent connections? To solve this problem, HTTP / 1.1 introduced cunked encoding — a mechanism for breaking up information into small parts (chunks) and their transmission.

- Chunked Transfers, if the content is built dynamically and the server cannot determine the Content-Length at the beginning of the transfer, it begins to send the content in parts one after another, and add a Content-Length to each transmitted part. When all parts are sent, an empty packet is sent with the Content-Length header set to 0, signaling to the client that the transfer is complete. To tell the client that the transfer will be done in parts, the server adds a Transfer-Encoding header : chunked .

- Unlike basic authentication in HTTP / 1.0, digest authentication and proxy authentication have been added to HTTP / 1.1.

- Caching

- Byte ranges.

- Encodings

- Content negotiation .

- Client cookies .

- Improved compression support .

- Other...

HTTP / 1.1 features are a separate topic for conversation, and in this article I will not linger on it for a long time. You can find a huge amount of materials on this topic. I recommend reading Key differences between HTTP / 1.0 and HTTP / 1.1 and, for superheroes, a reference to the RFC .

HTTP / 1.1 appeared in 1999 and has been a standard for many years. And, although it was much better than its predecessor, it eventually began to become obsolete. The web is constantly growing and changing, and every day the loading of web pages requires more resources. Today, a standard web page has to open more than 30 connections. You will say: "But ... because ... in HTTP / 1.1 there are persistent connections ...". However, the fact is that HTTP / 1.1 supports only one external connection at a time. HTTP / 1.1 tried to fix this by streaming data, but this did not completely solve the problem. There was a problem of blocking the beginning of the queue ( head-of-line blocking ) - when a slow or large request blocked all subsequent ones (after all, they were executed in the order of the queue). To overcome these HTTP / 1.1 flaws, developers have devised workarounds. An example of this is the sprites encoded in CSS images, the concatenation of CSS and JS files, domain sharding and others.

SPDY - 2009

Google went further and began experimenting with alternative protocols, setting a goal to make the web faster and improve security by reducing the time it takes to delay web pages. In 2009, they introduced the SPDY protocol.

It seemed that if we continue to increase the network bandwidth, it will increase its performance. However, it turned out that from a certain point on throughput growth ceases to affect performance. On the other hand, if you operate with a delay value, that is, reduce the response time, the performance gain will be constant. That was the main idea of SPDY.

It should be clarified what the difference is: the delay time is a value that indicates how long the data transmission from the sender to the receiver will take (in milliseconds), and the throughput capacity is the amount of data transmitted per second (bits per second).

SPDY included multiplexing, compression, prioritization, security, etc ... I do not want to dive into the story about SPDY, because in the next section we will examine the typical HTTP / 2 properties, and HTTP / 2 learned a lot from SPDY.

SPDY did not try to replace HTTP with itself. It was a transitional level above HTTP that existed at the application level and modified the request before sending it over the wires. He began to become the standard de facto, and most browsers began to support it.

In 2015, Google decided that there should not be two competing standards, and combined SPDY with HTTP, giving rise to HTTP / 2.

HTTP / 2 - 2015

I think you have already seen that we need a new version of the HTTP protocol. HTTP / 2 was designed to transport content with low latency. The main differences from HTTP / 1.1:

- binary instead of text

- multiplexing - sending several asynchronous HTTP requests over a single TCP connection

- HPACK header compression

- Server Push - multiple responses to one request

- prioritize requests

- security

1. Binary protocol

HTTP / 2 tries to solve the increased delay problem that existed in HTTP / 1.x by switching to a binary format. Binary messages are faster sorted automatically, but, unlike HTTP / 1.x, they are not convenient for people to read. The main components of HTTP / 2 are frames (Frames) and streams (Streams).

Frames and threads.

Now HTTP messages consist of one or more frames. For metadata, the HEADERS frame is used, for basic data, the DATA frame is used, and there are other types of frames (RST_STREAM, SETTINGS, PRIORITY, etc.) that can be found in the HTTP / 2 specification .

Each HTTP / 2 request and response receives a unique stream identifier and is divided into frames. Frames are simply binary parts of the data. The frame collection is called a stream. Each frame contains a stream identifier indicating which thread it belongs to, and each frame contains a common header. Also, besides the fact that the stream identifier is unique, it is worth mentioning that each client request uses odd id, and the response from the server is even.

In addition to HEADERS and DATA, RST_STREAM is also worth mentioning - a special type of frame used to interrupt threads. The client can send this frame to the server, signaling that it no longer needs this stream. In HTTP / 1.1, the only way to stop the server from sending responses was to close the connection, which increased the delay time, because you had to open a new connection for any further requests. And in HTTP / 2, the client can send RST_STREAM and stop receiving a certain stream. At the same time, the connection will remain open, which allows other threads to work.

2. Multiplexing

Since HTTP / 2 is a binary protocol that uses frames and threads for requests and responses, as mentioned above, all streams are sent on a single TCP connection, without creating additional ones. The server, in turn, responds in a similar asynchronous manner. This means that the response has no order, and the client uses the flow identifier to figure out which flow a particular packet belongs to. This solves the problem of blocking the beginning of the queue ( head-of-line blocking ) - the client does not have to stand idle while waiting for the processing of a long request, because the rest of the requests can be processed while waiting.

3. HPACK header compression

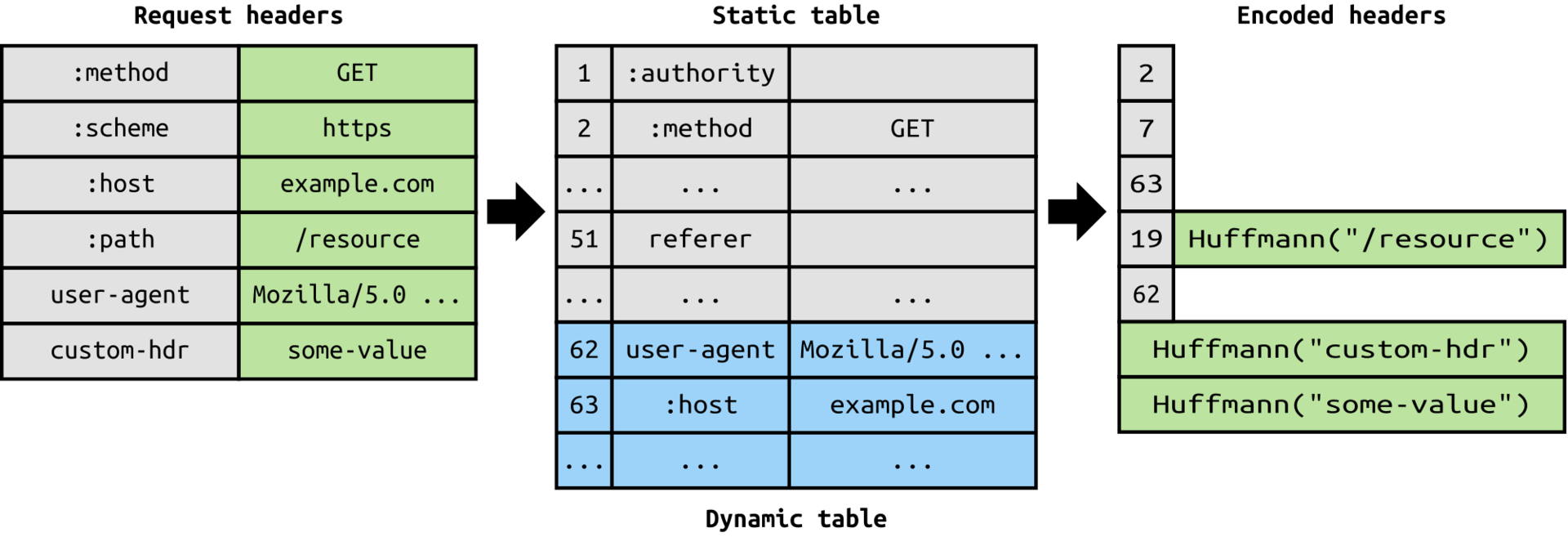

This was part of a separate RFC specifically aimed at optimizing the headers being sent. It was based on the fact that if we constantly access the server from the same client, a huge amount of duplicate data is sent in the headers time after time. And sometimes cookies are added to this, inflating the size of the headers, which reduces network bandwidth and increases latency. To solve this problem, header compression appeared in HTTP / 2.

Unlike requests and responses, headers are not compressed into gzip or similar formats. For compression, a different mechanism is used — literal values are compressed using the Huffman algorithm , and the client and server support a single header table. Duplicate headers (for example, the user agent) are omitted upon repeated requests and refer to their position in the header table.

Since we are talking about headers, let me add that they do not differ from HTTP / 1.1, except that several pseudo-headings have been added, such as: method,: scheme,: host,: path, and others.

4. Server Push

Server push is another awesome HTTP / 2 feature. The server, knowing that the client is going to request a specific resource, can send it without waiting for the request. For example, when it will be useful: the browser loads the web page, it parses it and finds what other content needs to be downloaded from the server, and then sends the corresponding requests.

Server push allows the server to reduce the number of additional requests. If he knows that the client is going to request data, he immediately sends it. The server sends a special frame PUSH_PROMISE, telling the client: “Hey, buddy, now I’ll send you this resource. You don’t need to be bothered again. ”The PUSH_PROMISE frame is associated with the thread that caused the push to be sent, and contains the thread identifier on which the server sends the desired resource.

5. Prioritize requests

The client can assign priority to the stream by adding priority information to the HEADERS frame, which opens the stream. At any other time, the client can send a PRIORITY frame that changes the priority of the stream.

If no priority information is specified, the server processes the request asynchronously, i.e. without any order. If a priority is assigned, then, based on the priority information, the server decides how much resources are allocated for processing a stream.

6. Security

Whether security (transmission over the TLS protocol) should be mandatory for HTTP / 2 or not should have developed an extensive discussion. In the end, it was decided not to make this mandatory. However, most browser manufacturers have stated that they will support HTTP / 2 only when it will be used over TLS. Thus, although the specification does not require encryption for HTTP / 2, it will still be mandatory by default. At the same time, HTTP / 2, implemented on top of TLS, imposes some restrictions: TLS version 1.2 or higher is required, there are restrictions on the minimum key size, ephemeral keys are required, and so on.

Conclusion

HTTP / 2 is already here, and has already bypassed SPDY in support , which is gradually growing. For anyone interested in learning more, here is a link to the specification and a link to a demo showing the advantages of the HTTP / 2 speed.

HTTP / 2 has a lot to offer to increase performance, and it seems like a good time to start using it.

Original: Journey to HTTP / 2 by Kamran Ahmed.

Translation: Andrei Alekseev , edited by Anatoly Tutov, Jabher , Igor Pelekhan, Natalya Yorkina, Timofey Marinin, Chaika Chursina, Yana Kriklivaya.

')

Source: https://habr.com/ru/post/308846/

All Articles