Mesos. Container Cluster Management System

Apache Mesos is a centralized, fault-tolerant cluster management system. It is designed for distributed computing environments, to provide resource isolation and convenient management of clusters of worker nodes (Mesos slaves). It is a new and effective way to manage server infrastructure but, like any technical solution, it is not a silver bullet.

In a sense, the idea behind it is the opposite of traditional virtualization: instead of dividing one physical machine into many virtual ones, Mesos combines many machines into a single whole, a single virtual resource.


Mesos allocates the cluster's CPU and memory to tasks in much the same way as the Linux kernel allocates hardware resources among local processes.

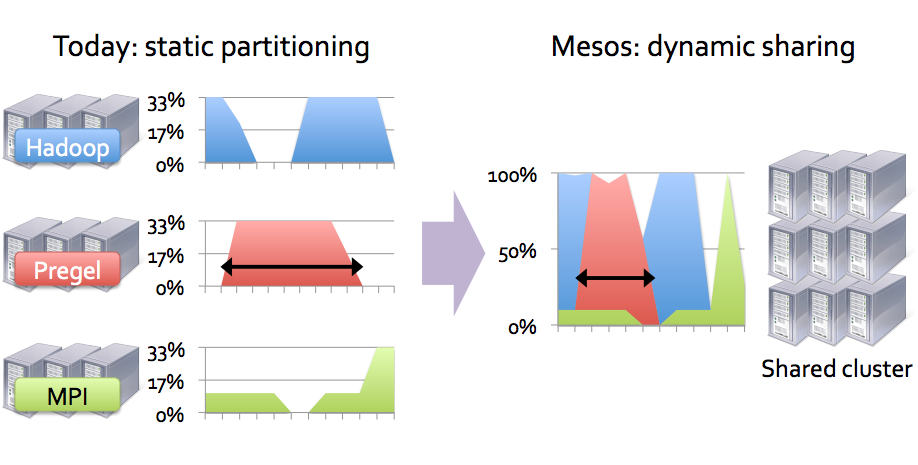

Imagine that you need to run several different types of tasks. You could allocate separate virtual machines (a separate cluster) to each type. These virtual machines will probably not be fully loaded and will sit idle part of the time, so they will not be used efficiently. If the virtual machines for all tasks are merged into a single cluster, resources are used more efficiently and tasks can also finish faster (if the tasks are short-lived or the virtual machines are not fully loaded all the time). I hope the following picture clarifies this:

But that's not all. A Mesos cluster (together with a framework) can recreate individual resources if they fail, scale resources manually or automatically under certain conditions, and so on.

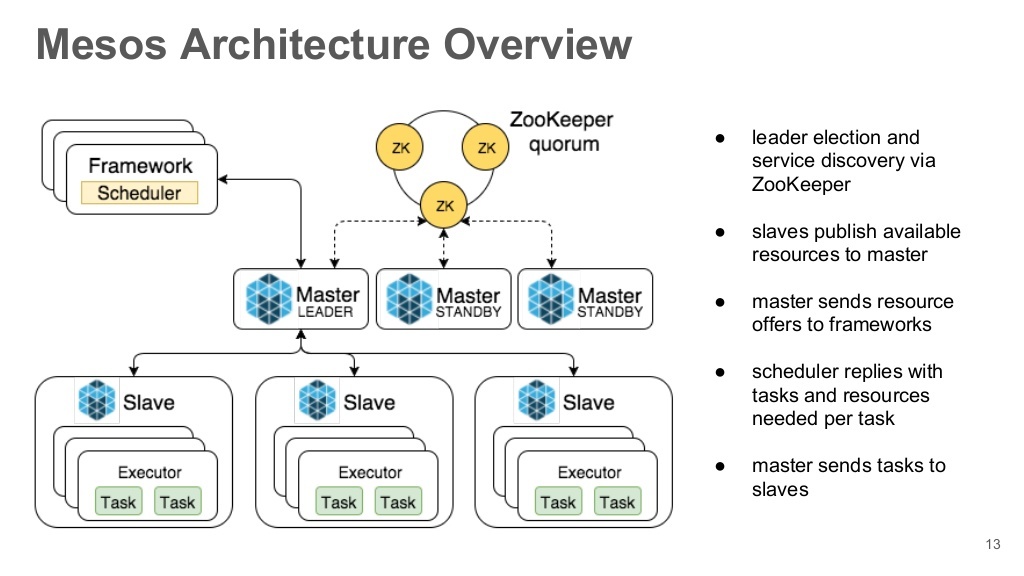

Let's walk through the components of the Mesos-cluster.

Mesos masters

The main controlling servers of the cluster. They are responsible for providing resources and distributing tasks among the available Mesos slaves. For high availability there should be several of them: preferably an odd number and, of course, more than one. This has to do with the quorum, discussed below. Only one server can be the active master (leader) at a time.

Mesos slaves

Servers (nodes) that provide the capacity for running tasks. Tasks can run either in Mesos's own containers or in Docker.

Frameworks

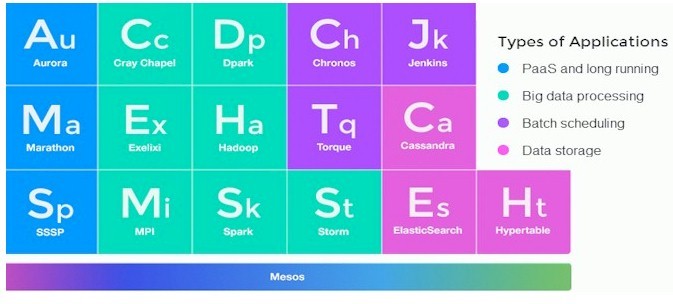

Mesos itself is only the "heart" of the cluster: it just provides the environment in which tasks run. All the logic of launching tasks, monitoring them, scaling and so on is implemented by frameworks. By analogy with Linux, a framework is something like the init/upstart system for running processes. We will look at the Marathon framework, which is designed mainly for long-running tasks (such as servers), although it can run short-lived ones too. To run tasks on a schedule, use another framework, Chronos (similar to cron).
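Mesos does not itself decide what runs where. The master offers free resources on the slaves to frameworks, and each framework accepts the offers it can use. The sketch below is a deliberately simplified model of that decision: a greedy first-fit over one round of offers, not Marathon's actual scheduler. The `Offer`/`Task` classes and `place_tasks` are hypothetical names. The CPU and memory sizes match tasks used later in this article.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Offer:
    agent: str   # address of the Mesos slave making the offer
    cpus: float  # free CPUs on that slave
    mem: int     # free memory, MB


@dataclass
class Task:
    name: str
    cpus: float
    mem: int     # MB


def place_tasks(offers: List[Offer], tasks: List[Task]) -> Dict[str, str]:
    """Greedy first-fit: put each task on the first offer that still has room.

    Returns {task name: agent}. Tasks that fit nowhere are left out; a real
    framework would wait for the next round of offers instead."""
    remaining = [Offer(o.agent, o.cpus, o.mem) for o in offers]  # don't mutate the input
    placement: Dict[str, str] = {}
    for task in tasks:
        for offer in remaining:
            if offer.cpus >= task.cpus and offer.mem >= task.mem:
                offer.cpus -= task.cpus
                offer.mem -= task.mem
                placement[task.name] = offer.agent
                break
    return placement


offers = [Offer("10.0.3.51", 0.5, 64), Offer("10.0.3.52", 2.0, 1024)]
tasks = [Task("hello2", 0.1, 16), Task("ubuntu", 0.5, 128), Task("huge", 8.0, 16)]
print(place_tasks(offers, tasks))  # {'hello2': '10.0.3.51', 'ubuntu': '10.0.3.52'}
```

The "huge" task gets no placement in this round because no single offer is large enough. In Mesos the framework, not the master, makes that call.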

There are quite a few frameworks; here are some of the best known:

Aurora (can run both scheduled and long-running tasks). Developed by Twitter.

Hadoop

Jenkins

Spark

Torque

ZooKeeper

The daemon that coordinates the Mesos master nodes. It elects the leading master, provided a quorum is present. Other nodes in the cluster get the address of the current master by querying the ZooKeeper ensemble with a URL of the form zk://master-node1:2181,master-node2:2181,master-node3:2181/mesos. Mesos slaves likewise use such a URL to find the current master and connect only to it. In this tutorial, ZooKeeper will be installed on the same nodes as the Mesos masters, but it can also run separately.
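The zk:// URL is simply a comma-separated list of ZooKeeper host:port pairs followed by a znode path. Below is a small sketch for building and splitting such URLs. The helper names are hypothetical, and the optional user:password@ prefix that ZooKeeper URLs may carry is not handled. Marathon later uses the same host list with the path /marathon.

```python
from typing import List, Tuple

Host = Tuple[str, int]


def build_zk_url(hosts: List[Host], path: str) -> str:
    """zk://h1:p1,h2:p2,.../path"""
    ensemble = ",".join(f"{host}:{port}" for host, port in hosts)
    return f"zk://{ensemble}/{path.lstrip('/')}"


def parse_zk_url(url: str) -> Tuple[List[Host], str]:
    """Split a zk:// URL into its (host, port) pairs and the znode path."""
    prefix = "zk://"
    if not url.startswith(prefix):
        raise ValueError(f"not a zk:// URL: {url}")
    ensemble, _, path = url[len(prefix):].partition("/")
    hosts = []
    for item in ensemble.split(","):
        host, _, port = item.strip().rpartition(":")
        hosts.append((host, int(port)))
    return hosts, "/" + path


masters = [("10.0.3.11", 2181), ("10.0.3.12", 2181), ("10.0.3.13", 2181)]
print(build_zk_url(masters, "/mesos"))
# zk://10.0.3.11:2181,10.0.3.12:2181,10.0.3.13:2181/mesos
```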

This article is practical: by following along, you will end up with a working Mesos cluster.

I chose the following addresses for the future nodes:

    mesos-master1  10.0.3.11
    mesos-master2  10.0.3.12
    mesos-master3  10.0.3.13
    ---
    mesos-slave1   10.0.3.51
    mesos-slave2   10.0.3.52
    mesos-slave3   10.0.3.53

That is, 3 masters and 3 slaves. The masters will also run the Marathon framework, which can be moved to a separate node if desired.

For testing, it is better to use virtual machines on hardware virtualization platforms (VirtualBox, Xen, KVM). Installing Docker inside an LXC container, for example, is currently difficult or not possible at all. We will use Docker to isolate tasks running on the Mesos slaves.

Thus, having 6 ready servers (virtual machines) with Ubuntu 14.04, we are ready for battle.

MESOS MASTERS / MARATHON INSTALLATION

Perform identical steps on all 3 Mesos master servers.

    apt-get install software-properties-common

Add the Mesos/Marathon repositories:

    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv E56151BF
    DISTRO=$(lsb_release -is | tr '[:upper:]' '[:lower:]')
    CODENAME=$(lsb_release -cs)
    echo "deb http://repos.mesosphere.com/${DISTRO} ${CODENAME} main" | \
      sudo tee /etc/apt/sources.list.d/mesosphere.list

Mesos and Marathon need a Java virtual machine, so we install the latest one from Oracle:

    add-apt-repository ppa:webupd8team/java
    apt-get update
    apt-get install oracle-java8-installer

Check that Java works:

    java -version
    java version "1.8.0_91"
    Java(TM) SE Runtime Environment (build 1.8.0_91-b14)
    Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

That said, OpenJDK also seems to work.

Install Mesos and Marathon on each of the future masters:

    apt-get -y install mesos marathon

By the way, the Marathon framework is written in Scala.

ZooKeeper is installed as a dependency. Point Mesos at the ZooKeeper ensemble on our master nodes:

    vim /etc/mesos/zk
    zk://10.0.3.11:2181,10.0.3.12:2181,10.0.3.13:2181/mesos

2181 is the port ZooKeeper listens on for client connections.

Choose a unique ID for each master node:

    vim /etc/zookeeper/conf/myid
    1

For the second and third servers I chose 2 and 3, respectively. IDs can be anywhere from 1 to 255.

Edit /etc/zookeeper/conf/zoo.cfg:

    vim /etc/zookeeper/conf/zoo.cfg
    server.1=10.0.3.11:2888:3888
    server.2=10.0.3.12:2888:3888
    server.3=10.0.3.13:2888:3888

1, 2 and 3 are the IDs we put in /etc/zookeeper/conf/myid on each server.

2888 is the port the ZooKeeper followers use to connect to the elected leader. 3888 is used for leader election when something happens to the current leader.
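zoo.cfg is the same on every master and only myid differs, so both can be derived from one ordered list of master IPs. The sketch below returns, per host, the myid contents and the server.N lines for zoo.cfg. The function name is hypothetical, the other zoo.cfg settings are untouched, and writing the files and restarting ZooKeeper is left out.

```python
from typing import Dict, List


def zookeeper_files(ips: List[str], peer_port: int = 2888,
                    election_port: int = 3888) -> Dict[str, Dict[str, str]]:
    """Return {ip: {"myid": ..., "servers": ...}} for each ensemble member.

    The server.N lines are identical everywhere; N must match that host's myid."""
    if not 1 <= len(ips) <= 255:
        raise ValueError("myid values range from 1 to 255")
    servers = "".join(
        f"server.{n}={ip}:{peer_port}:{election_port}\n"
        for n, ip in enumerate(ips, start=1)
    )
    return {ip: {"myid": f"{n}\n", "servers": servers}
            for n, ip in enumerate(ips, start=1)}


files = zookeeper_files(["10.0.3.11", "10.0.3.12", "10.0.3.13"])
print(files["10.0.3.12"]["servers"], end="")
# server.1=10.0.3.11:2888:3888
# server.2=10.0.3.12:2888:3888
# server.3=10.0.3.13:2888:3888
```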

Now for the quorum setting. A quorum is the minimum number of working nodes required to elect a new leader. This setting prevents a split-brain in the cluster. Imagine a cluster of only 2 servers with a quorum of 1. Then a single working server is enough to elect a leader, so in effect each server can elect its own leader. If one of the servers crashes, this behavior is perfectly logical. But what happens if only the network connection between them breaks? Right: each server may well consider itself the leader and start taking the main traffic. If such a cluster consists of databases, data loss is quite possible, and it will not even be clear which copy to recover from.

So 3 servers is the minimum for high availability. The quorum in this case is 2. If one server becomes unavailable, the other 2 can elect a leader among themselves. If two servers fail, or the nodes cannot see each other over the network, the group will not elect a new leader at all, to preserve data integrity. It waits until the quorum is restored, that is, until one of the servers reappears on the network.

Why is there little point in choosing 4 servers (or any even number)? Because, just as with 3 servers, the cluster can only tolerate the loss of one server. With a quorum of 3, losing a second server leaves 2 nodes, which is not enough to elect a leader, so the cluster stops. With 5 servers (and a quorum of 3), the cluster survives the loss of 2 servers. So it is best to use clusters of 5 or more nodes: while one of them is down for maintenance, who knows what can happen to another.
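The numbers above follow from the majority rule. A quorum is the smallest majority of the ensemble, floor(n/2) + 1, and the cluster tolerates the loss of n minus that many nodes. The short sketch below (the function names are illustrative) reproduces the numbers from the text. It also shows that only the larger side of a network split can elect a leader.

```python
def quorum(n: int) -> int:
    """Smallest majority of n voting nodes."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many nodes can be lost while a leader can still be elected."""
    return n - quorum(n)


def can_elect(reachable: int, n: int) -> bool:
    """True if a group of `reachable` nodes out of n may elect a leader."""
    return reachable >= quorum(n)


for n in range(1, 7):
    print(f"{n} nodes: quorum {quorum(n)}, survives {tolerated_failures(n)} failure(s)")
# 1 nodes: quorum 1, survives 0 failure(s)
# 2 nodes: quorum 2, survives 0 failure(s)
# 3 nodes: quorum 2, survives 1 failure(s)
# 4 nodes: quorum 3, survives 1 failure(s)
# 5 nodes: quorum 3, survives 2 failure(s)
# 6 nodes: quorum 4, survives 2 failure(s)

# A 3-node cluster split 2/1 by the network: only the larger side can elect.
print(can_elect(2, 3), can_elect(1, 3))  # True False
```

A quorum is always more than half of n, so two disjoint groups can never both reach it. This is what rules out split-brain.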

If ZooKeeper is deployed separately, the number of Mesos masters can be arbitrary, including even.

Set the same quorum value on all masters:

    echo "2" > /etc/mesos-master/quorum

Specify the IP address of each node accordingly:

    echo 10.0.3.11 | tee /etc/mesos-master/ip

If the servers do not have domain names, copy the IP address to use it as the hostname:

    cp /etc/mesos-master/ip /etc/mesos-master/hostname

Do the same on 10.0.3.12 and 10.0.3.13.

Configure the Marathon framework. Create a directory for its configuration files and copy the hostname into it:

    mkdir -p /etc/marathon/conf
    cp /etc/mesos-master/hostname /etc/marathon/conf

Copy the ZooKeeper settings for Marathon:

    cp /etc/mesos/zk /etc/marathon/conf/master
    cp /etc/marathon/conf/master /etc/marathon/conf/zk

Edit the last one so that Marathon keeps its own state under /marathon:

    vim /etc/marathon/conf/zk
    zk://10.0.3.11:2181,10.0.3.12:2181,10.0.3.13:2181/marathon

Finally, disable the mesos-slave daemon on the masters:

    echo manual | sudo tee /etc/init/mesos-slave.override
    stop mesos-slave

Restart the services on all master nodes:

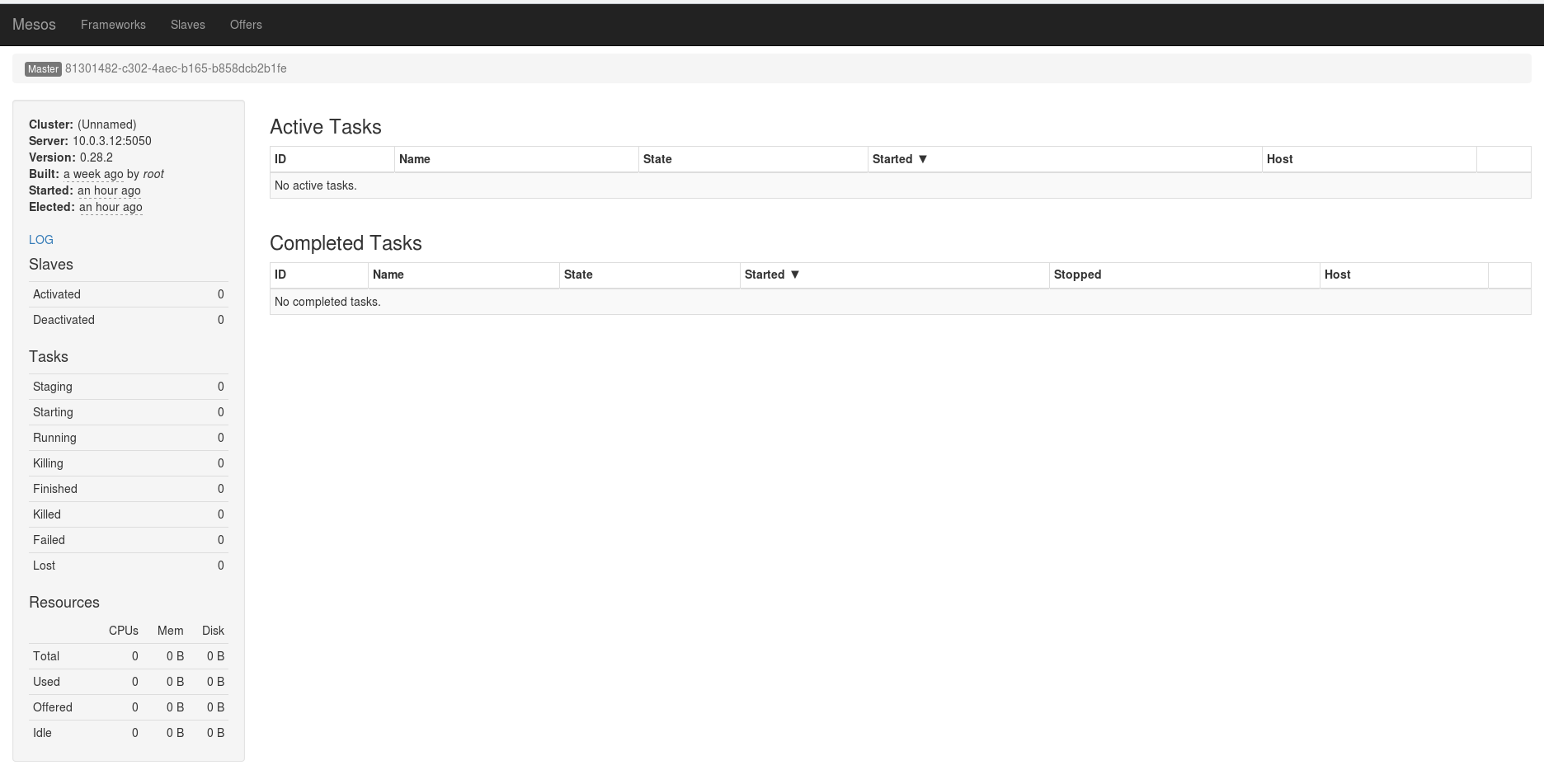

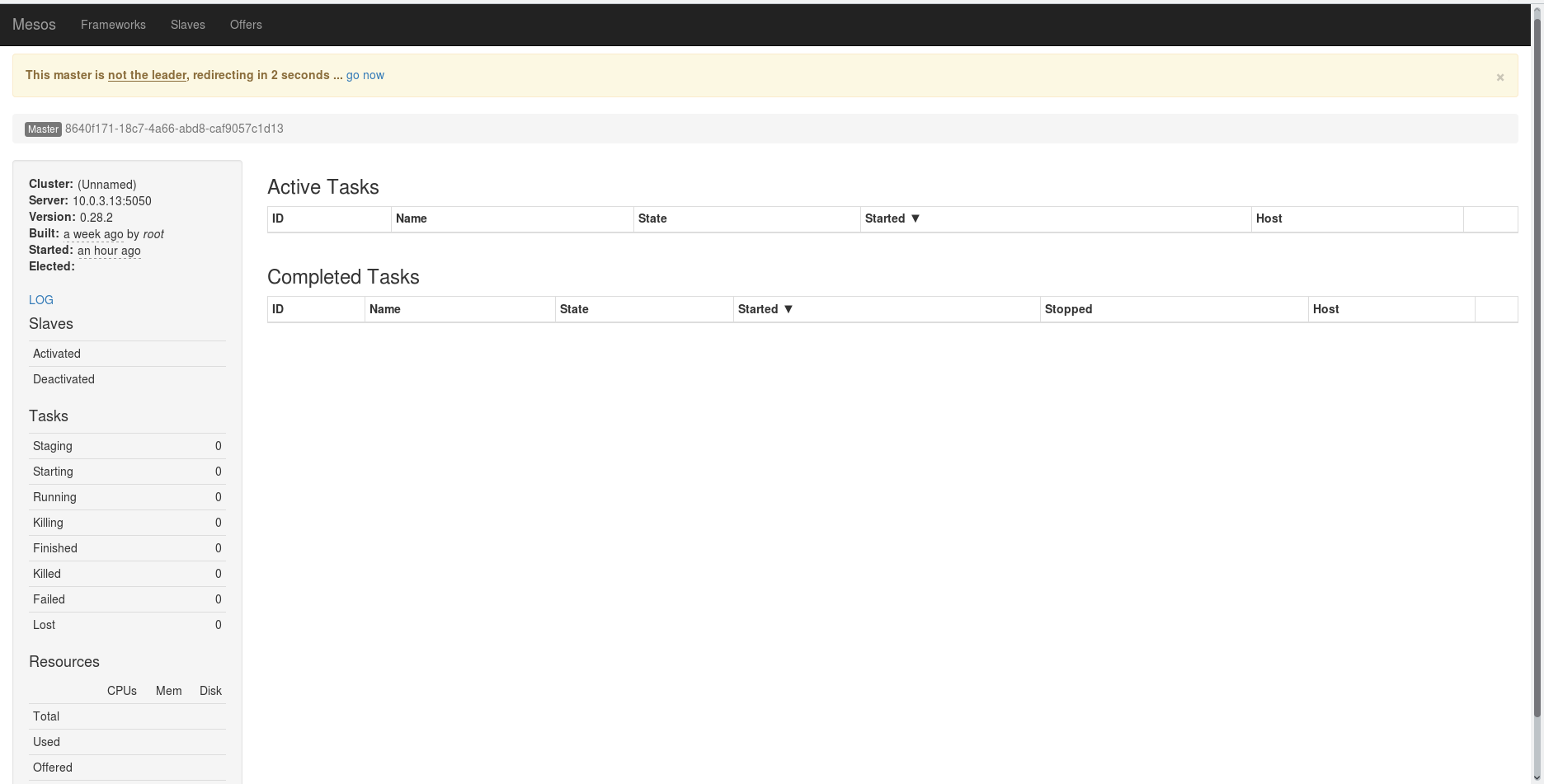

    restart mesos-master
    restart marathon

Open the Mesos web interface on port 5050:

If a different server is currently the leader, you will be redirected to it:

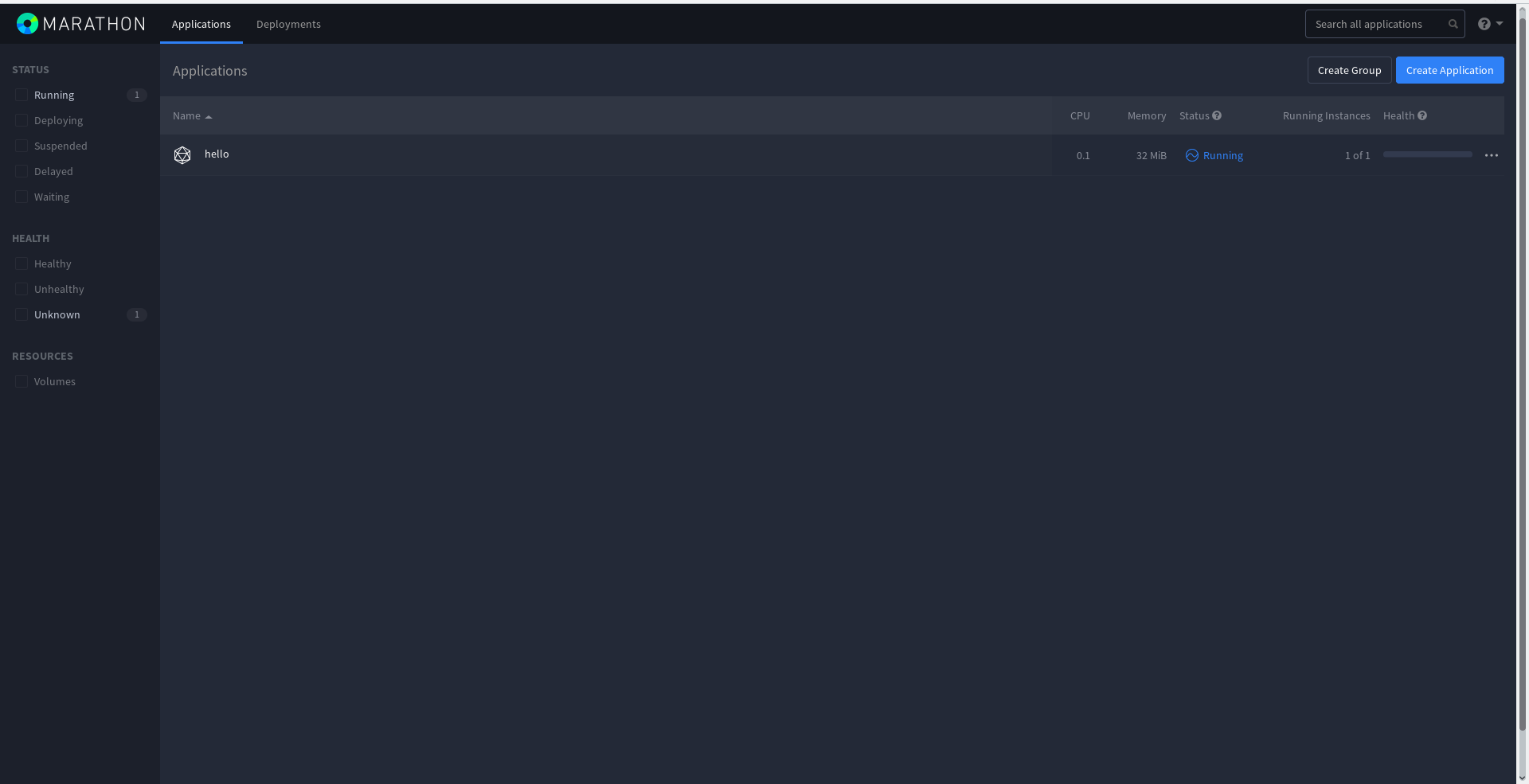

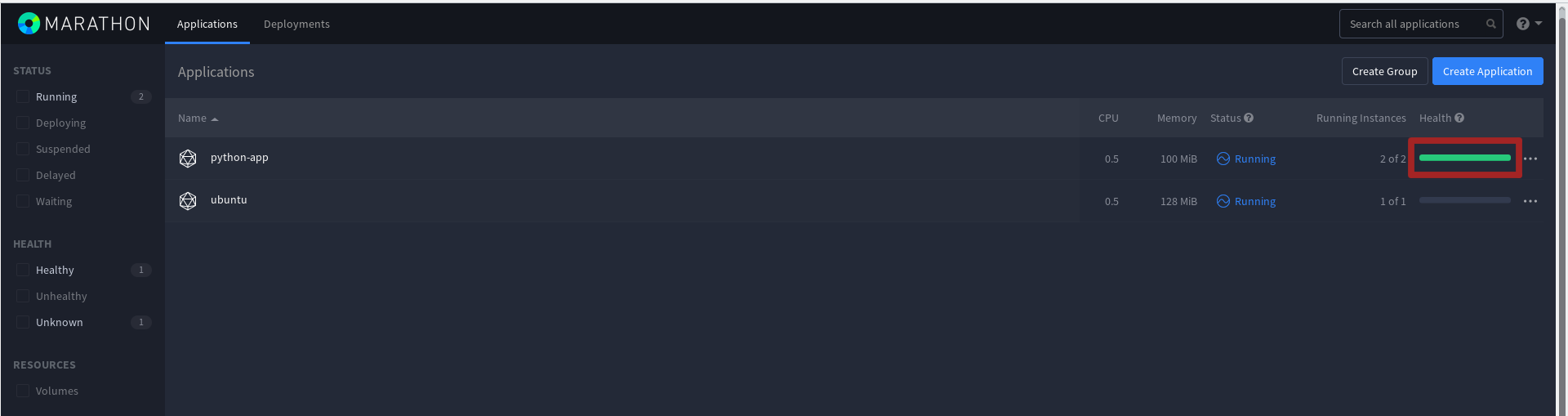

Marathon has a nice dark interface and it works on port 8080:

MESOS SLAVES INSTALLATION

As with the Mesos masters, add the repositories and install the necessary packages:

    apt-get install software-properties-common
    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv E56151BF
    DISTRO=$(lsb_release -is | tr '[:upper:]' '[:lower:]')
    CODENAME=$(lsb_release -cs)
    echo "deb http://repos.mesosphere.com/${DISTRO} ${CODENAME} main" | \
      tee /etc/apt/sources.list.d/mesosphere.list
    add-apt-repository ppa:webupd8team/java
    apt-get update
    apt-get install oracle-java8-installer
    apt-get -y install mesos

Check that Java was installed correctly:

    java -version
    java version "1.8.0_91"
    Java(TM) SE Runtime Environment (build 1.8.0_91-b14)
    Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

Also disable the ZooKeeper and Mesos master processes, since they are not needed here:

    echo manual | sudo tee /etc/init/zookeeper.override
    echo manual | sudo tee /etc/init/mesos-master.override
    stop zookeeper
    stop mesos-master

Set the IP address and hostname of the slave:

    echo 10.0.3.51 | tee /etc/mesos-slave/ip
    cp /etc/mesos-slave/ip /etc/mesos-slave/hostname

Do the same on 10.0.3.52 and 10.0.3.53 (each with its own address, of course).

And list all the masters in /etc/mesos/zk:

    vim /etc/mesos/zk
    zk://10.0.3.11:2181,10.0.3.12:2181,10.0.3.13:2181/mesos

The slave looks up the current leader in ZooKeeper and connects to it, offering its resources.

During slave startup, the following error may occur:

    Failed to create a containerizer: Could not create MesosContainerizer: Failed to create launcher: Failed to create Linux launcher: Failed to mount cgroups hierarchy at '/sys/fs/cgroup/freezer': 'freezer' is already attached to another hierarchy

In this case, edit /etc/default/mesos-slave:

    vim /etc/default/mesos-slave
    ...
    MESOS_LAUNCHER=posix
    ...

And start mesos-slave again:

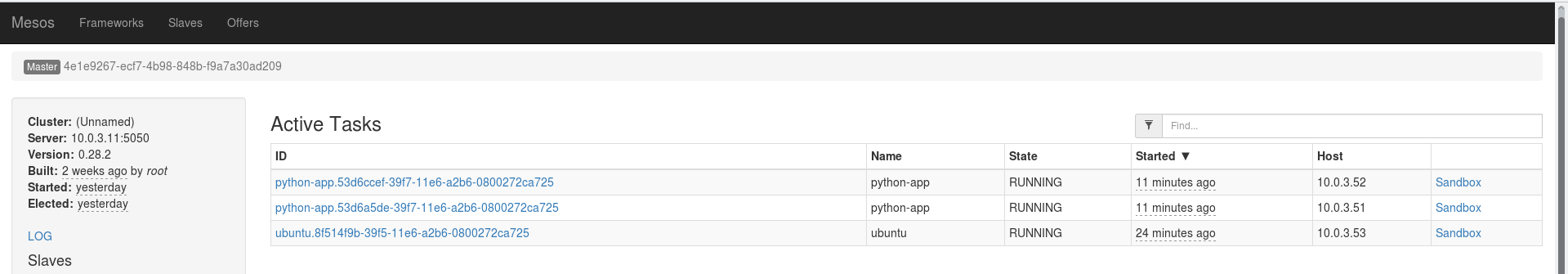

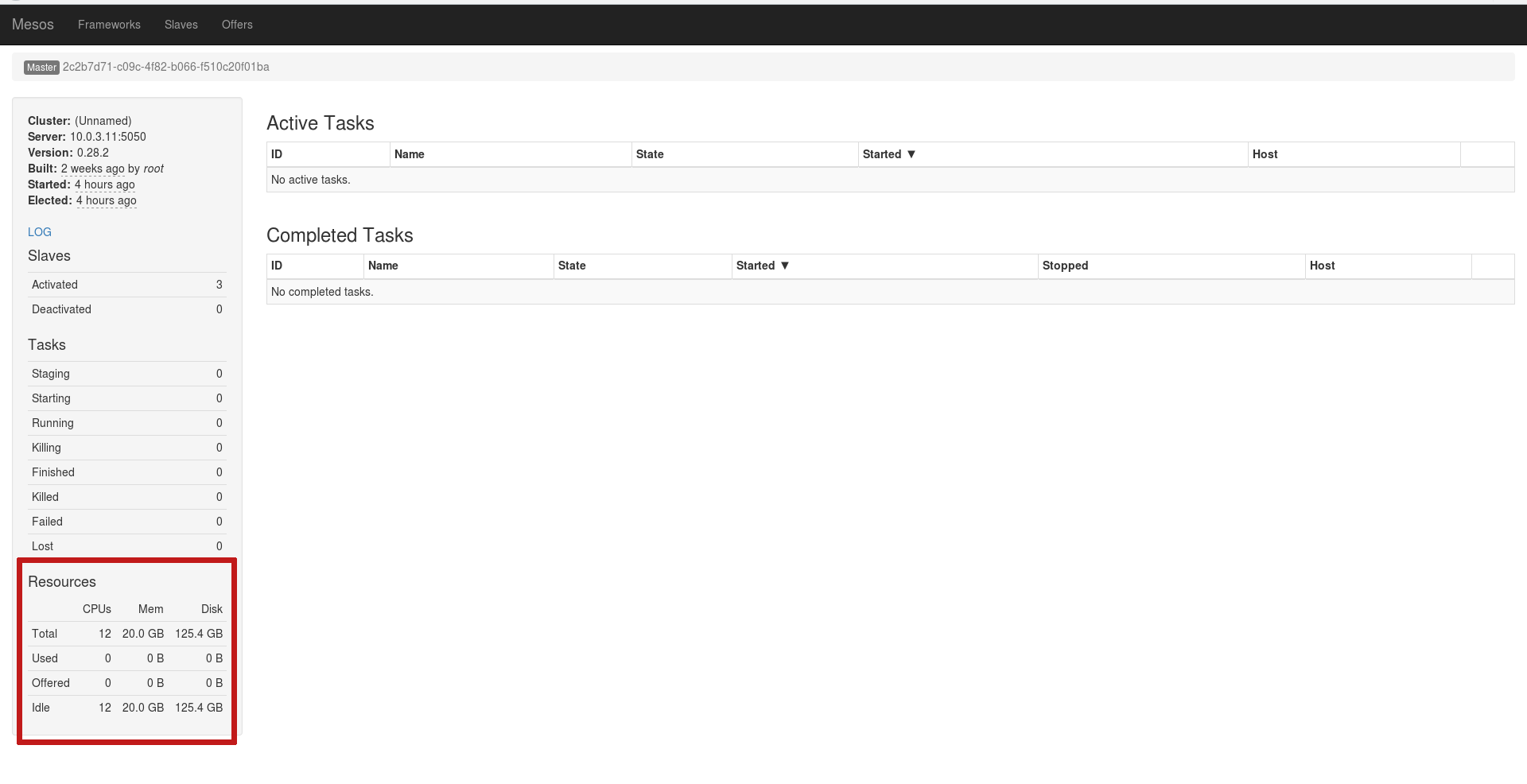

    start mesos-slave

If everything is done correctly, the slaves will connect to the current leader and offer their resources:

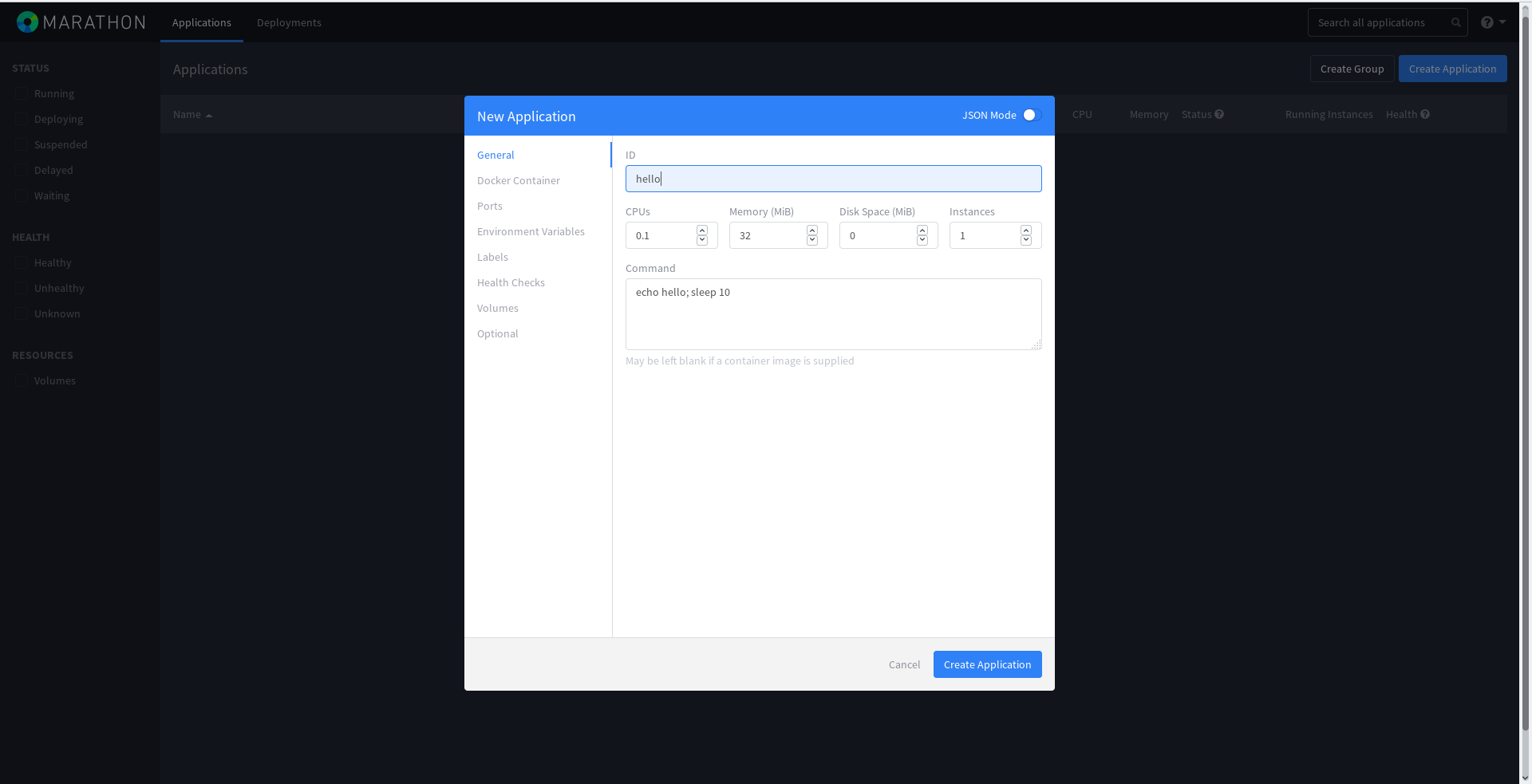

The cluster is ready! Let's run a task in Marathon. Open Marathon on any master node, click the blue Create Application button and fill everything in as shown:

The task has started running:

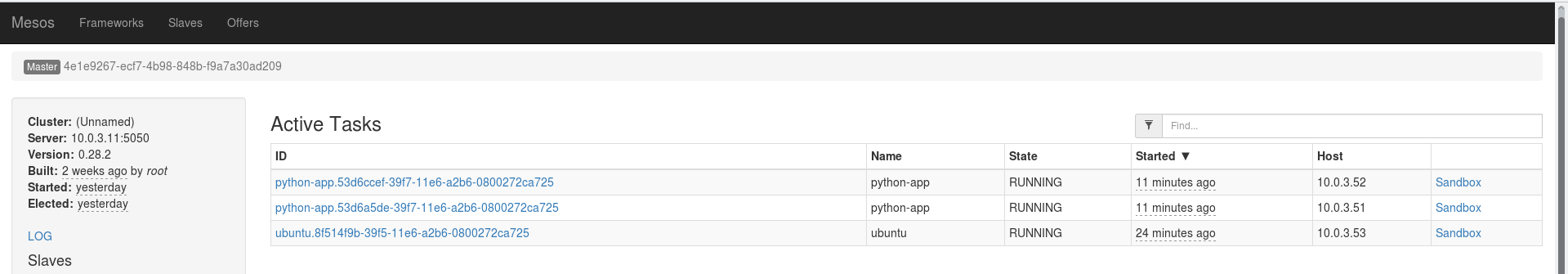

The Mesos web interface immediately shows the active task launched by the framework, as well as the history of completed tasks. This task is short-lived: it finishes and is then started again. That is why the Completed Tasks list contains all of its previous runs:

The same task can be performed using the Marathon API, describing it in JSON format:

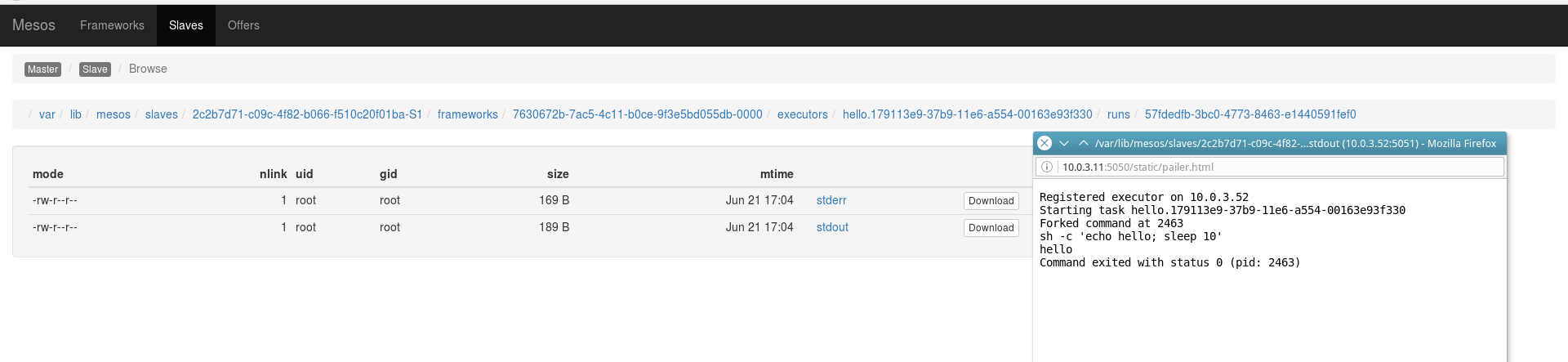

    cd /tmp
    vim hello2.json
    {
      "id": "hello2",
      "cmd": "echo hello; sleep 10",
      "mem": 16,
      "cpus": 0.1,
      "instances": 1,
      "disk": 0.0,
      "ports": [0]
    }

    curl -i -H 'Content-Type: application/json' -d@hello2.json 10.0.3.11:8080/v2/apps
    HTTP/1.1 201 Created
    Date: Tue, 21 Jun 2016 14:21:31 GMT
    X-Marathon-Leader: http://10.0.3.11:8080
    Cache-Control: no-cache, no-store, must-revalidate
    Pragma: no-cache
    Expires: 0
    Location: http://10.0.3.11:8080/v2/apps/hello2
    Content-Type: application/json; qs=2
    Transfer-Encoding: chunked
    Server: Jetty(9.3.z-SNAPSHOT)

    {"id":"/hello2","cmd":"echo hello; sleep 10","args":null,"user":null,"env":{},"instances":1,"cpus":0.1,"mem":16,"disk":0,"executor":"","constraints":[],"uris":[],"fetch":[],"storeUrls":[],"ports":[0],"portDefinitions":[{"port":0,"protocol":"tcp","labels":{}}],"requirePorts":false,"backoffSeconds":1,"backoffFactor":1.15,"maxLaunchDelaySeconds":3600,"container":null,"healthChecks":[],"readinessChecks":[],"dependencies":[],"upgradeStrategy":{"minimumHealthCapacity":1,"maximumOverCapacity":1},"labels":{},"acceptedResourceRoles":null,"ipAddress":null,"version":"2016-06-21T14:21:31.665Z","residency":null,"tasksStaged":0,"tasksRunning":0,"tasksHealthy":0,"tasksUnhealthy":0,"deployments":[{"id":"13bea032-9120-45e7-b082-c7d3b7d0ad01"}],"tasks":[]}

In this (and the previous) example, the task prints hello, waits 10 seconds and exits. Marathon then starts it again, so it runs in a loop. The task is allocated 16 MB of memory and 0.1 CPU.
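The same POST can be sent from a script. The sketch below uses only Python's standard library (urllib) to build the request for the hello2 definition above. The actual send is commented out because it needs a reachable Marathon, and the helper name is hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def marathon_app_request(marathon_url: str, app: Dict[str, Any]) -> urllib.request.Request:
    """Build (without sending) a POST /v2/apps request for an app definition."""
    return urllib.request.Request(
        marathon_url.rstrip("/") + "/v2/apps",
        data=json.dumps(app).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


hello2 = {"id": "hello2", "cmd": "echo hello; sleep 10", "mem": 16, "cpus": 0.1,
          "instances": 1, "disk": 0.0, "ports": [0]}
req = marathon_app_request("http://10.0.3.11:8080", hello2)
print(req.get_method(), req.full_url)  # POST http://10.0.3.11:8080/v2/apps

# Sending it requires a reachable Marathon; a 201 response means the app was created:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status, resp.headers["Location"])
```

As in the curl output above, a 201 Created status with a Location header pointing at /v2/apps/hello2 indicates that Marathon accepted the app.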

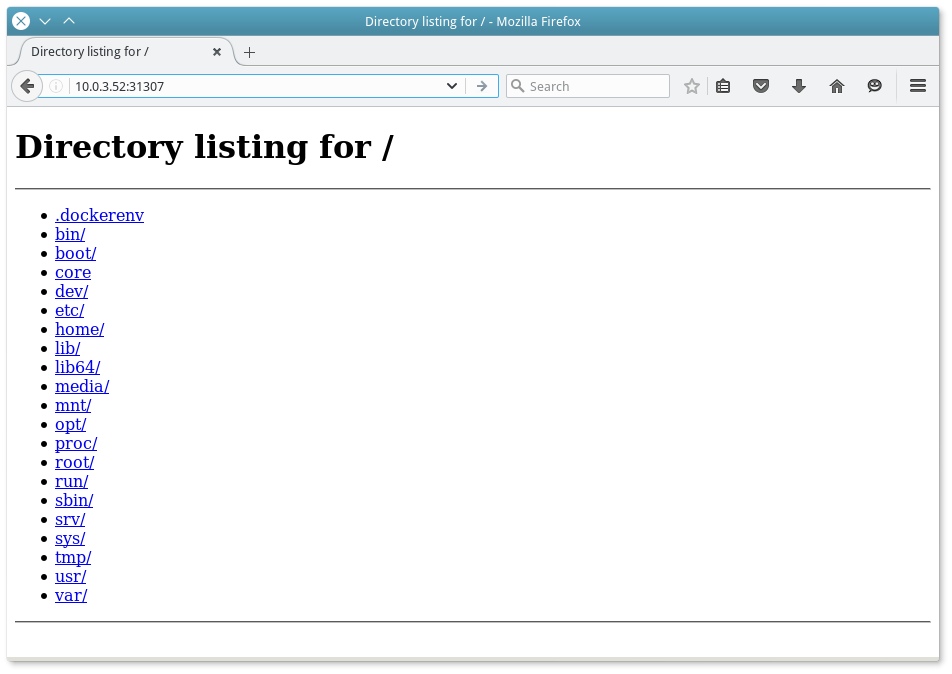

You can watch the output of running tasks by clicking the Sandbox link in the last column of the main page of the current Mesos master:

DOCKER

For better isolation and additional features, Mesos has integrated Docker support. If you are not familiar with Docker yet, I recommend getting to know it first.

Enabling Docker in Mesos is not particularly difficult either. First, install Docker itself on all slaves in the cluster:

    apt-get install apt-transport-https ca-certificates
    apt-key adv --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys 58118E89F3A912897C070ADBF76221572C52609D
    echo "deb https://apt.dockerproject.org/repo ubuntu-trusty main" | tee /etc/apt/sources.list.d/docker.list
    apt-get update
    apt-get install docker-engine

To check that the installation works, run the hello-world test container:

    docker run hello-world

Enable the Docker containerizer on the Mesos slaves, alongside the native Mesos one:

    echo "docker,mesos" | sudo tee /etc/mesos-slave/containerizers

Starting a container whose image is not yet in the local cache can take a while, because the image has to be downloaded first. So we raise the timeout for registering a new executor:

    echo "5mins" | sudo tee /etc/mesos-slave/executor_registration_timeout

And, as usual, restart the service after these changes:

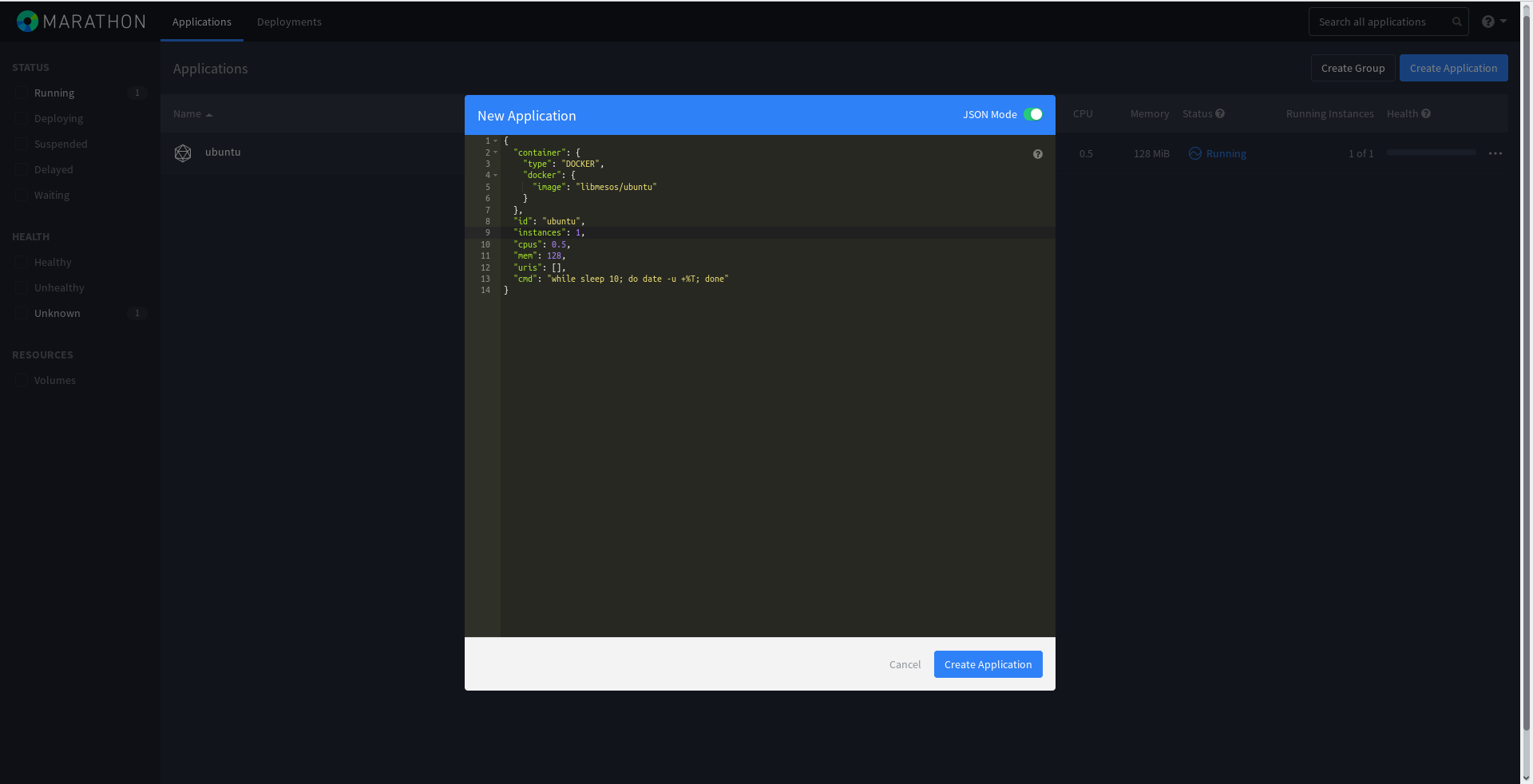

    service mesos-slave restart

Now create a new task in Marathon that runs in a Docker container. Its JSON looks like this:

    vim /tmp/Docker.json
    {
      "container": {
        "type": "DOCKER",
        "docker": {
          "image": "libmesos/ubuntu"
        }
      },
      "id": "ubuntu",
      "cpus": 0.5,
      "mem": 128,
      "uris": [],
      "cmd": "while sleep 10; do date -u +%T; done"
    }

That is, the container runs an endless while loop that prints the current UTC time every 10 seconds. Easy, right?

Docker.json can be pasted directly into the Marathon web interface by turning on the JSON switch when creating a new task:

Or, if you prefer, fill in the individual fields:

After a while (depending on the speed of your Internet connection), the container will start. You can see it on the Mesos slave that received the task:

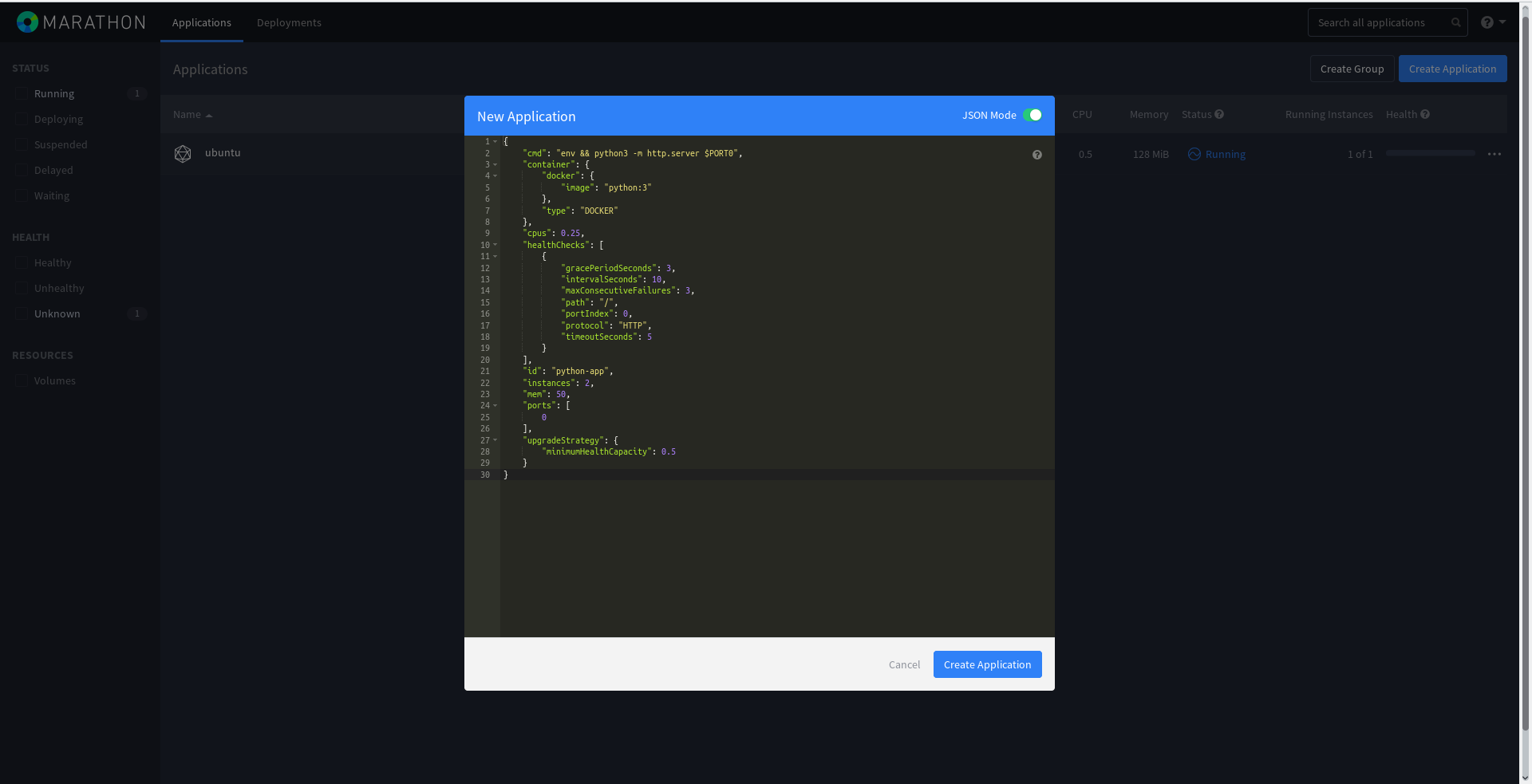

    root@mesos-slave1:~# docker ps
    CONTAINER ID   IMAGE             COMMAND                  CREATED         STATUS         PORTS   NAMES
    81f39fc7474a   libmesos/ubuntu   "/bin/sh -c 'while sl"   2 minutes ago   Up 2 minutes           mesos-4e1e9267-ecf7-4b98-848b-f9a7a30ad209-S2.5c1831a6-f856-48f9-aea2-74e5cb5f067f

Let's create a slightly more complex Marathon task, this time with a health check. If the check fails, the framework recreates the container:

    {
      "cmd": "env && python3 -m http.server $PORT0",
      "container": {
        "docker": {
          "image": "python:3"
        },
        "type": "DOCKER"
      },
      "cpus": 0.25,
      "healthChecks": [
        {
          "gracePeriodSeconds": 3,
          "intervalSeconds": 10,
          "maxConsecutiveFailures": 3,
          "path": "/",
          "portIndex": 0,
          "protocol": "HTTP",
          "timeoutSeconds": 5
        }
      ],
      "id": "python-app",
      "instances": 2,
      "mem": 50,
      "ports": [0],
      "upgradeStrategy": {
        "minimumHealthCapacity": 0.5
      }
    }

Now two full python-app instances will be running, that is, 2 Python web servers, each on a dynamically assigned port ($PORT0).
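Marathon ignores failed checks during gracePeriodSeconds, as long as the task has not yet been healthy once. It kills the task after maxConsecutiveFailures failures in a row, and a value of 0 disables killing. The sketch below is a simplified model of that rule, not Marathon's code. It assumes one check every intervalSeconds starting at t = 0 and ignores timeoutSeconds. `kill_time` is a hypothetical name.

```python
from typing import List, Optional


def kill_time(results: List[bool], interval: int, grace: int,
              max_failures: int) -> Optional[int]:
    """Seconds after task start at which the task would be killed, or None.

    results[i] is the outcome of the check run at t = i * interval."""
    if max_failures == 0:
        return None  # 0 means: never kill because of failed checks
    consecutive = 0
    healthy_once = False
    for i, ok in enumerate(results):
        t = i * interval
        if ok:
            healthy_once = True
            consecutive = 0
        elif not healthy_once and t < grace:
            continue  # failure inside the grace period is ignored
        else:
            consecutive += 1
            if consecutive >= max_failures:
                return t
    return None


# python-app settings: intervalSeconds 10, gracePeriodSeconds 3, maxConsecutiveFailures 3
print(kill_time([True, False, False, False], 10, 3, 3))  # 30
print(kill_time([True, False, False, True], 10, 3, 3))   # None
```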

Add python-app through the Marathon web interface as well:

The Marathon interface now shows the results of the instances' health checks:

New long-running tasks have appeared on the Mesos master:

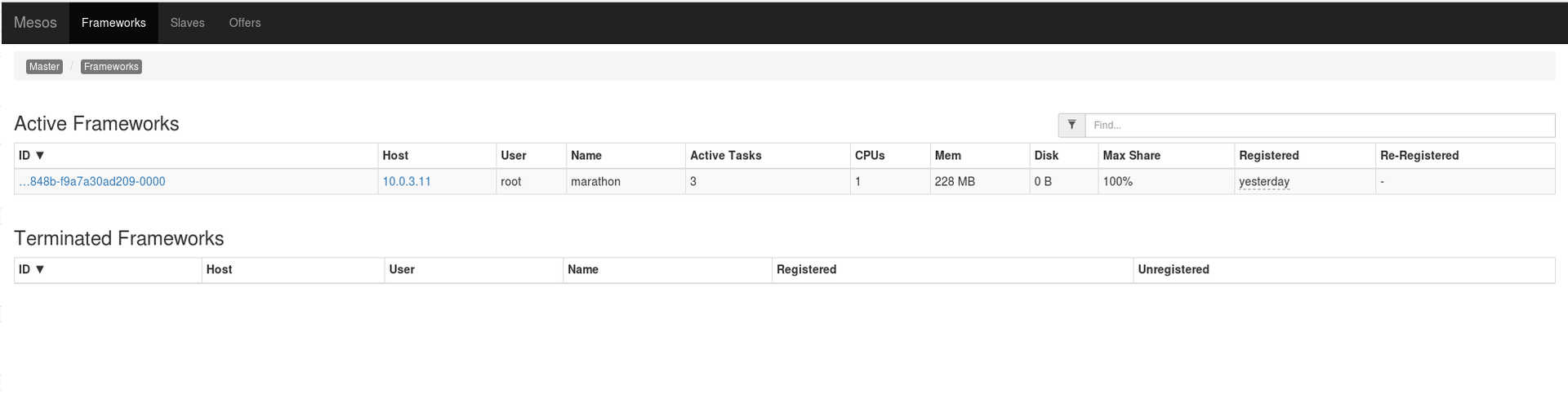

You can also see which framework has set them to run:

But how do you find out which ports the new Python web servers were assigned? There are several ways; one of them is a request to the Marathon API:

    curl -X GET -H "Content-Type: application/json" 10.0.3.12:8080/v2/tasks | python -m json.tool
    ...
    {
        ...
        {
            "appId": "/python-app",
            "healthCheckResults": [
                {
                    "alive": true,
                    "consecutiveFailures": 0,
                    "firstSuccess": "2016-06-24T10:35:20.785Z",
                    "lastFailure": null,
                    "lastFailureCause": null,
                    "lastSuccess": "2016-06-24T12:53:31.372Z",
                    "taskId": "python-app.53d6ccef-39f7-11e6-a2b6-0800272ca725"
                }
            ],
            "host": "10.0.3.51",
            "id": "python-app.53d6ccef-39f7-11e6-a2b6-0800272ca725",
            "ipAddresses": [
                {
                    "ipAddress": "10.0.3.51",
                    "protocol": "IPv4"
                }
            ],
            "ports": [31319],
            "servicePorts": [10001],
            "slaveId": "4e1e9267-ecf7-4b98-848b-f9a7a30ad209-S2",
            "stagedAt": "2016-06-24T10:35:10.767Z",
            "startedAt": "2016-06-24T10:35:11.788Z",
            "version": "2016-06-24T10:35:10.702Z"
        },
        {
            "appId": "/python-app",
            "healthCheckResults": [
                {
                    "alive": true,
                    "consecutiveFailures": 0,
                    "firstSuccess": "2016-06-24T10:35:20.789Z",
                    "lastFailure": null,
                    "lastFailureCause": null,
                    "lastSuccess": "2016-06-24T12:53:31.371Z",
                    "taskId": "python-app.53d6a5de-39f7-11e6-a2b6-0800272ca725"
                }
            ],
            "host": "10.0.3.52",
            "id": "python-app.53d6a5de-39f7-11e6-a2b6-0800272ca725",
            "ipAddresses": [
                {
                    "ipAddress": "10.0.3.52",
                    "protocol": "IPv4"
                }
            ],
            "ports": [31307],
            "servicePorts": [10001],
            "slaveId": "4e1e9267-ecf7-4b98-848b-f9a7a30ad209-S2",
            "stagedAt": "2016-06-24T10:35:10.766Z",
            "startedAt": "2016-06-24T10:35:11.784Z",
            "version": "2016-06-24T10:35:10.702Z"
        }
    ]
    }

The new servers accept connections at http://10.0.3.52:31307 and http://10.0.3.51:31319:

Similarly, the port numbers can be found in the Marathon panel.
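The same lookup can be scripted. The sketch below takes the JSON returned by GET /v2/tasks, here a trimmed copy of the response above given as a string. It lists http://host:port for every task of an app whose health checks are passing. The function name is hypothetical, and a real script would first fetch the JSON from Marathon.

```python
import json
from typing import List

SAMPLE = """{"tasks": [
  {"appId": "/python-app", "host": "10.0.3.51", "ports": [31319],
   "healthCheckResults": [{"alive": true}]},
  {"appId": "/python-app", "host": "10.0.3.52", "ports": [31307],
   "healthCheckResults": [{"alive": true}]}
]}"""


def app_endpoints(tasks_json: str, app_id: str) -> List[str]:
    """http://host:port (first allocated port) for each healthy task of app_id."""
    endpoints = []
    for task in json.loads(tasks_json)["tasks"]:
        if task["appId"] != app_id or not task["ports"]:
            continue
        results = task.get("healthCheckResults", [])
        if results and not all(r.get("alive") for r in results):
            continue  # skip tasks that are currently failing a health check
        endpoints.append(f"http://{task['host']}:{task['ports'][0]}")
    return endpoints


print(app_endpoints(SAMPLE, "/python-app"))
# ['http://10.0.3.51:31319', 'http://10.0.3.52:31307']
```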

New containers on the slaves:

    root@mesos-slave1:~# docker ps
    CONTAINER ID   IMAGE      COMMAND                  CREATED       STATUS       PORTS   NAMES
    d5e439d61456   python:3   "/bin/sh -c 'env && p"   2 hours ago   Up 2 hours           mesos-4e1e9267-ecf7-4b98-848b-f9a7a30ad209-S2.150ac995-bf3c-4ecc-a79c-afc1c617afe2
    ...
    root@mesos-slave2:~# docker ps
    CONTAINER ID   IMAGE      COMMAND                  CREATED       STATUS       PORTS   NAMES
    1fa55f8cb759   python:3   "/bin/sh -c 'env && p"   2 hours ago   Up 2 hours           mesos-4e1e9267-ecf7-4b98-848b-f9a7a30ad209-S2.9392fec2-23c1-4c05-a576-60e9350b9b20
    ...

It is also possible to assign static ports to a Marathon task. This is done with the bridged network mode (Bridged Network Mode). The correct JSON for such a task looks like this:

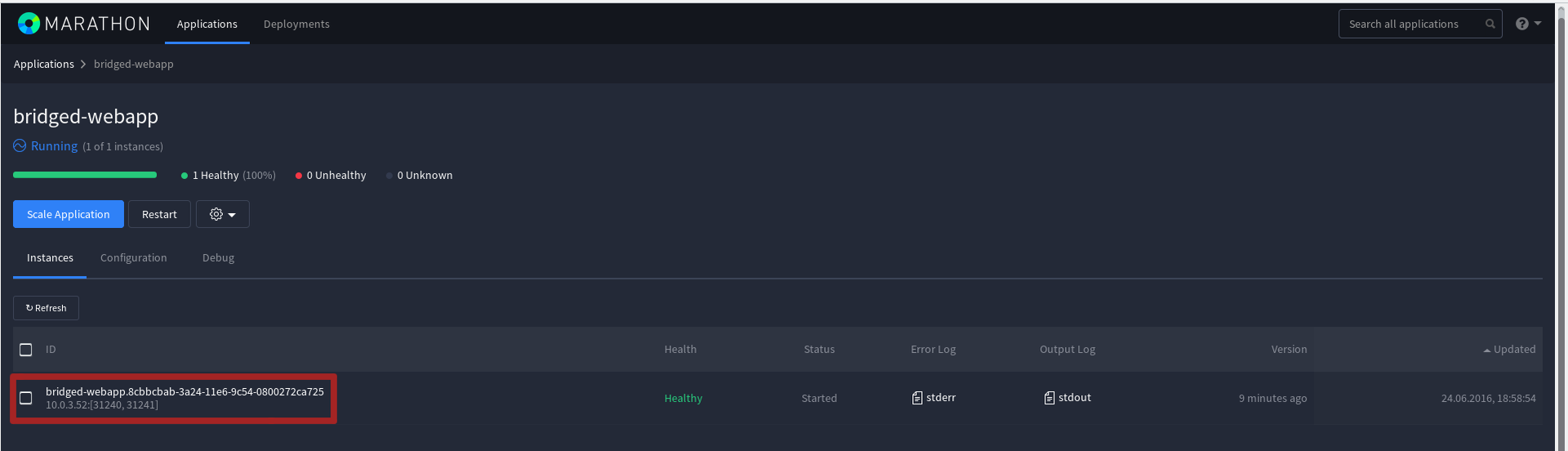

    {
      "id": "bridged-webapp",
      "cmd": "python3 -m http.server 8080",
      "cpus": 0.5,
      "mem": 64,
      "disk": 0,
      "instances": 1,
      "container": {
        "type": "DOCKER",
        "volumes": [],
        "docker": {
          "image": "python:3",
          "network": "BRIDGE",
          "portMappings": [
            { "containerPort": 8080, "hostPort": 31240, "servicePort": 9000, "protocol": "tcp", "labels": {} },
            { "containerPort": 161, "hostPort": 31241, "servicePort": 10000, "protocol": "udp", "labels": {} }
          ],
          "privileged": false,
          "parameters": [],
          "forcePullImage": false
        }
      },
      "healthChecks": [
        {
          "path": "/",
          "protocol": "HTTP",
          "portIndex": 0,
          "gracePeriodSeconds": 5,
          "intervalSeconds": 20,
          "timeoutSeconds": 20,
          "maxConsecutiveFailures": 3,
          "ignoreHttp1xx": false
        }
      ],
      "portDefinitions": [
        { "port": 9000, "protocol": "tcp", "labels": {} },
        { "port": 10000, "protocol": "tcp", "labels": {} }
      ]
    }

Here the container's TCP port 8080 (containerPort) is mapped to port 31240 (hostPort) on the slave machine. Similarly, UDP port 161 is mapped to 31241. Of course, in this case there is no reason to forward UDP at all; it is included only as an example.
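A static hostPort only works if the slave actually offers that port. By default, Mesos slaves offer ports 31000-32000, which is why the example uses 31240 and 31241, and hostPort 0 lets Marathon pick a free port. The sketch below summarizes a portMappings list and rejects static ports outside that default range. `describe_mappings` is a hypothetical helper, and a slave started with a different ports resource would need a different range.

```python
from typing import Dict, List

DEFAULT_AGENT_PORTS = range(31000, 32001)  # Mesos default ports resource: [31000-32000]


def describe_mappings(port_mappings: List[Dict]) -> List[str]:
    """One human-readable line per mapping; raises on unusable static host ports."""
    lines = []
    for m in port_mappings:
        host_port = m["hostPort"]
        if host_port != 0 and host_port not in DEFAULT_AGENT_PORTS:
            raise ValueError(f"hostPort {host_port} is outside {DEFAULT_AGENT_PORTS}")
        proto = m.get("protocol", "tcp")
        target = "dynamic" if host_port == 0 else str(host_port)
        lines.append(f"slave {target}/{proto} -> container {m['containerPort']}/{proto}"
                     f" (servicePort {m['servicePort']})")
    return lines


mappings = [
    {"containerPort": 8080, "hostPort": 31240, "servicePort": 9000, "protocol": "tcp"},
    {"containerPort": 161, "hostPort": 31241, "servicePort": 10000, "protocol": "udp"},
]
for line in describe_mappings(mappings):
    print(line)
# slave 31240/tcp -> container 8080/tcp (servicePort 9000)
# slave 31241/udp -> container 161/udp (servicePort 10000)
```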

MESOS-DNS

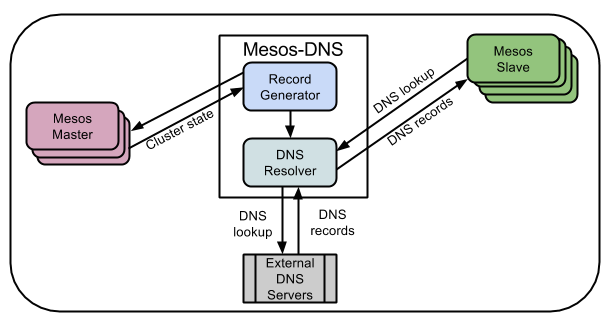

Obviously, addressing services by IP address is not very convenient. Moreover, the slave on which each new task container is launched is not known in advance. So it would be useful to bind DNS names to containers automatically.

Mesos-DNS can help with this. It is a DNS server for a Mesos cluster that uses the Mesos master API to get the names of running tasks and the IP addresses of the slaves they run on.

By default, names are formed as follows: the task name in Mesos + .marathon.mesos. Mesos-DNS serves only this zone; queries for all other names are forwarded to regular DNS servers.
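The naming rule is simple enough to express directly. The sketch below (a hypothetical helper) builds the A and SRV names that Mesos-DNS publishes for a Marathon task under the default domain. It assumes the task name is already a valid DNS label and does not reproduce Mesos-DNS's own name sanitizing.

```python
import re
from typing import Tuple

# RFC 1123 host name label: letters, digits and hyphens, no leading/trailing hyphen.
LABEL = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def mesos_dns_names(task: str, framework: str = "marathon",
                    domain: str = "mesos", protocol: str = "tcp") -> Tuple[str, str]:
    """Return (A record name, SRV record name) for a task."""
    task = task.lower()
    if not LABEL.match(task):
        raise ValueError(f"{task!r} is not a valid DNS label")
    return (f"{task}.{framework}.{domain}.",
            f"_{task}._{protocol}.{framework}.{domain}.")


print(mesos_dns_names("nginx"))
# ('nginx.marathon.mesos.', '_nginx._tcp.marathon.mesos.')
```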

Mesos-DNS is written in Go and is distributed as a ready-made compiled binary. Ideally, you should write an init or systemd script for it, depending on the distribution version. Ready-made examples are available at https://github.com/mesosphere/mesos-dns-pkg/tree/master/common.

To test Mesos-DNS, I created a separate server with the address 10.0.3.60, although it could just as well run in a container launched by Marathon.

Download the latest Mesos-DNS release into /usr/sbin on the new server and rename the binary:

    cd /usr/sbin
    wget https://github.com/mesosphere/mesos-dns/releases/download/v0.5.2/mesos-dns-v0.5.2-linux-amd64
    mv mesos-dns-v0.5.2-linux-amd64 mesos-dns
    chmod +x mesos-dns

Create a configuration file:

    mkdir -p /etc/mesos-dns
    vim /etc/mesos-dns/config.json
    {
      "zk": "zk://10.0.3.11:2181,10.0.3.12:2181,10.0.3.13:2181/mesos",
      "masters": ["10.0.3.11:5050", "10.0.3.12:5050", "10.0.3.13:5050"],
      "refreshSeconds": 60,
      "ttl": 60,
      "domain": "mesos",
      "port": 53,
      "resolvers": ["8.8.8.8", "8.8.4.4"],
      "timeout": 5,
      "email": "root.mesos-dns.mesos"
    }

That is, Mesos-DNS finds the current master through ZooKeeper (zk) and polls it once a minute (refreshSeconds). Queries for all domains other than the mesos zone are forwarded to Google's DNS servers (the resolvers parameter). The service listens on the standard port 53, like any other DNS server.

The masters option is optional. Mesos-DNS first looks for the leader through ZooKeeper and, only if ZooKeeper is unavailable, goes through the list of servers given in masters.
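The lookup order just described can be modeled in a few lines. This is a simplified sketch of the behaviour stated above, not Mesos-DNS's Go code. `zk_lookup` and `is_leader` are hypothetical callables that stand in for the ZooKeeper query and for probing a master from the masters list.

```python
from typing import Callable, List, Optional


def find_leader(zk_lookup: Callable[[], Optional[str]],
                masters: List[str],
                is_leader: Callable[[str], bool]) -> Optional[str]:
    """Ask ZooKeeper first; fall back to probing the static masters list."""
    try:
        leader = zk_lookup()
    except ConnectionError:
        leader = None  # ZooKeeper unreachable
    if leader:
        return leader
    for master in masters:
        if is_leader(master):
            return master
    return None


def zk_down() -> Optional[str]:
    raise ConnectionError("ZooKeeper unreachable")


masters = ["10.0.3.11:5050", "10.0.3.12:5050", "10.0.3.13:5050"]
print(find_leader(lambda: "10.0.3.12:5050", masters, lambda m: False))  # 10.0.3.12:5050
print(find_leader(zk_down, masters, lambda m: m == "10.0.3.13:5050"))   # 10.0.3.13:5050
```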

All available options are described here: http://mesosphere.github.io/mesos-dns/docs/configuration-parameters.html

That is enough, so let's start Mesos-DNS:

    /usr/sbin/mesos-dns -config=/etc/mesos-dns/config.json
    2016/07/01 11:58:23 Connected to 10.0.3.11:2181
    2016/07/01 11:58:23 Authenticated: id=96155239082295306, timeout=40000

All Mesos cluster nodes, and any other nodes that will access services in containers, should list the Mesos-DNS server in /etc/resolv.conf as the primary nameserver, in the first position:

    vim /etc/resolv.conf
    nameserver 10.0.3.60
    nameserver 8.8.8.8
    nameserver 8.8.4.4

After changing resolv.conf, make sure that names are now resolved through 10.0.3.60:

    dig i.ua

    ; <<>> DiG 9.9.5-3ubuntu0.8-Ubuntu <<>> i.ua
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 24579
    ;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

    ;; OPT PSEUDOSECTION:
    ; EDNS: version: 0, flags:; udp: 512
    ;; QUESTION SECTION:
    ;i.ua.                  IN      A

    ;; ANSWER SECTION:
    i.ua.           2403    IN      A       91.198.36.14

    ;; Query time: 58 msec
    ;; SERVER: 10.0.3.60#53(10.0.3.60)
    ;; WHEN: Mon Jun 27 16:20:12 CEST 2016
    ;; MSG SIZE  rcvd: 49

To demonstrate Mesos-DNS in action, run the following task via Marathon:

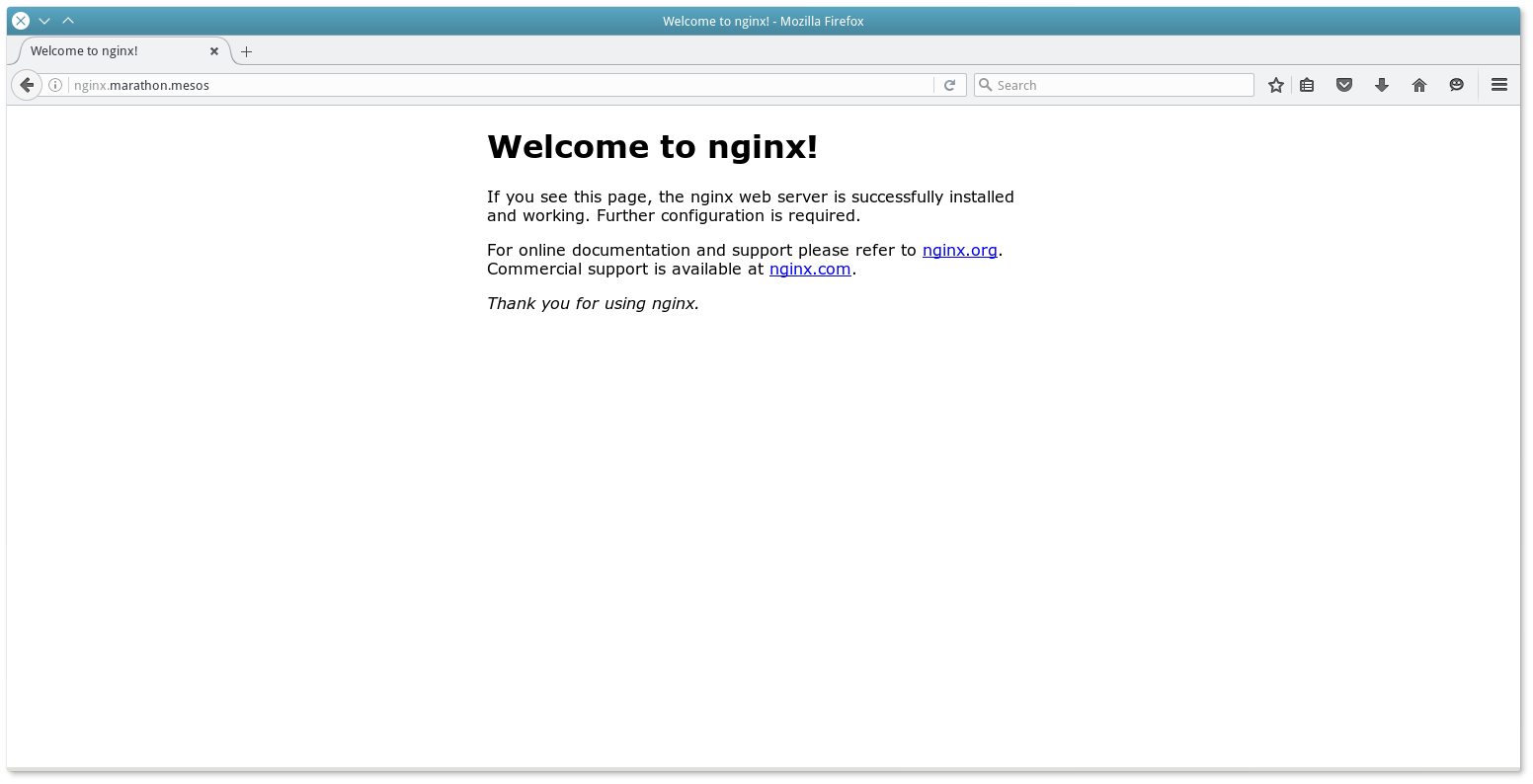

    cat nginx.json
    {
      "id": "nginx",
      "container": {
        "type": "DOCKER",
        "docker": {
          "image": "nginx:1.7.7",
          "network": "HOST"
        }
      },
      "instances": 1,
      "cpus": 0.1,
      "mem": 60,
      "constraints": [
        ["hostname", "UNIQUE"]
      ]
    }

    curl -X POST -H "Content-Type: application/json" http://10.0.3.11:8080/v2/apps -d@nginx.json

This task starts a container with Nginx, and Mesos-DNS registers the name nginx.marathon.mesos for it:

    dig +short nginx.marathon.mesos
    10.0.3.53

When scaling the nginx task (that is, when creating additional instances), Mesos-DNS recognizes this and creates additional A-records for the same domain:

    dig +short nginx.marathon.mesos
    10.0.3.53
    10.0.3.51

This gives simple DNS-level load balancing between the two nodes.
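On the client side, using several A records means resolving all of them and spreading requests across the addresses. The sketch below uses Python's standard library. `socket.getaddrinfo` returns every address the system resolver knows for the name (here, Mesos-DNS via resolv.conf), and `itertools.cycle` rotates through them. The helper names are hypothetical.

```python
import itertools
import socket
from typing import Iterator, List


def a_records(name: str, port: int = 80) -> List[str]:
    """Distinct IPv4 addresses for name, in the order the resolver returned them."""
    addrs: List[str] = []
    for *_, sockaddr in socket.getaddrinfo(name, port, socket.AF_INET, socket.SOCK_STREAM):
        if sockaddr[0] not in addrs:
            addrs.append(sockaddr[0])
    return addrs


def round_robin(addrs: List[str]) -> Iterator[str]:
    """Endless iterator that hands out the addresses in turn."""
    return itertools.cycle(addrs)


# On a cluster node: rr = round_robin(a_records("nginx.marathon.mesos"))
rr = round_robin(["10.0.3.53", "10.0.3.51"])  # the two records from the dig output above
print([next(rr) for _ in range(3)])  # ['10.0.3.53', '10.0.3.51', '10.0.3.53']
```

Many clients simply take the first address instead of rotating. This is one reason DNS balancing is only approximate.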

In addition to A records, Mesos-DNS also creates an SRV record for each task (container). An SRV record maps a service name to the host name and port where the service is available. Let's check the SRV record for the nginx task we launched earlier, before it was scaled to two instances:

    dig _nginx._tcp.marathon.mesos SRV

    ; <<>> DiG 9.9.5-3ubuntu0.8-Ubuntu <<>> _nginx._tcp.marathon.mesos SRV
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 11956
    ;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

    ;; QUESTION SECTION:
    ;_nginx._tcp.marathon.mesos.    IN      SRV

    ;; ANSWER SECTION:
    _nginx._tcp.marathon.mesos. 60  IN      SRV     0 0 31514 nginx-9g7b9-s0.marathon.mesos.

    ;; ADDITIONAL SECTION:
    nginx-9g7b9-s0.marathon.mesos. 60 IN    A       10.0.3.51

    ;; Query time: 2 msec
    ;; SERVER: 10.0.3.60#53(10.0.3.60)
    ;; WHEN: Fri Jul 01 12:03:37 CEST 2016
    ;; MSG SIZE  rcvd: 124

To avoid editing resolv.conf on every server, you can change your internal DNS infrastructure instead. With Bind9, the changes look like this:

    vim /etc/bind/named.conf.local
    zone "mesos" {
        type forward;
        forward only;
        forwarders { 192.168.0.100 port 8053; };
    };

In the Mesos-DNS config (suppose Mesos-DNS now runs on 192.168.0.100), make the following changes:

    vim /etc/mesos-dns/config.json
    ...
    "externalOn": false,
    "port": 8053,
    ...

"externalOn": false means that Mesos-DNS does not forward queries for names outside its zone to external resolvers. It answers only for the mesos domain, and Bind handles everything else.

After the changes are made, you must restart Bind and Mesos-DNS.

Mesos-DNS also has an HTTP API (https://docs.mesosphere.com/1.7/usage/service-discovery/mesos-dns/http-interface/) that can be useful for automation.

Besides records for running tasks, Mesos-DNS automatically creates A and SRV records for the Mesos slaves, the Mesos masters (with a separate record for the leader) and the frameworks. All for our convenience.
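Unlike an A record, an SRV answer carries the port, so a client can get both host and port from DNS alone. The sketch below (a hypothetical helper) parses one line of dig's ANSWER SECTION, such as the _nginx._tcp.marathon.mesos answer shown earlier. It then combines the result with the address from the ADDITIONAL SECTION. A real client would use an SRV-aware resolver library rather than parse dig output.

```python
from typing import NamedTuple


class SrvRecord(NamedTuple):
    name: str
    ttl: int
    priority: int
    weight: int
    port: int
    target: str


def parse_srv(line: str) -> SrvRecord:
    """Parse 'name ttl IN SRV priority weight port target' as printed by dig."""
    name, ttl, rclass, rtype, priority, weight, port, target = line.split()
    if (rclass, rtype) != ("IN", "SRV"):
        raise ValueError(f"not an IN SRV record: {line}")
    return SrvRecord(name, int(ttl), int(priority), int(weight), int(port), target)


answer = "_nginx._tcp.marathon.mesos. 60 IN SRV 0 0 31514 nginx-9g7b9-s0.marathon.mesos."
rec = parse_srv(answer)
additional = {"nginx-9g7b9-s0.marathon.mesos.": "10.0.3.51"}  # from the ADDITIONAL SECTION
print(f"{additional[rec.target]}:{rec.port}")  # 10.0.3.51:31514
```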

MARATHON-LB

Despite its advantages, Mesos-DNS has certain limitations, including:

- DNS does not convey the ports on which services in containers are running. Ports must either be chosen statically when defining the task (and keeping track of which ones are in use may not be easy), or looked up through Marathon every time you need to reach the service. Mesos-DNS can also publish SRV records (with the target hostname and port), but not all programs can use them out of the box.

- DNS does not provide fast failover.

- Records can stay in local DNS caches for a long time (at least for the TTL), even though Mesos-DNS itself polls the Mesos master API quite often.

- Health checks of the services in the containers are not taken into account. With several instances, the failure of one of them goes unnoticed by Mesos-DNS until Marathon recreates it.

- Some programs and libraries do not work correctly with multiple A records, which seriously limits the scaling of tasks.

That is, most problems arise because of the very nature of the DNS.

The Marathon-lb subproject was created to address these shortcomings. Marathon-lb is a Python script that polls the Marathon API. From the data it receives (the address of the Mesos slave that actually runs the container, and the service port), it generates an HAproxy configuration file and reloads HAproxy.

Note, however, that unlike Mesos-DNS, Marathon-lb works only with Marathon. With other frameworks, you will need to look for other solutions.
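The core of the loop described above can be sketched as: filter apps by group, render a config, and reload only when it changed. This is a heavily simplified, hypothetical stand-in for marathon-lb, not its code. Apps are plain dicts with made-up keys (`id`, `servicePort`, `labels`, `tasks`), and the real tool renders much richer HAproxy templates and checks the config before reloading. The group filter mirrors the --group flag and HAPROXY_GROUP label that appear later in this section.

```python
from typing import Dict, List, Tuple


def render_config(apps: List[Dict], group: str) -> str:
    """Render frontend/backend sections for the apps that belong to `group`."""
    out: List[str] = []
    for app in sorted(apps, key=lambda a: a["id"]):
        if app.get("labels", {}).get("HAPROXY_GROUP") != group:
            continue  # app belongs to another balancer pool
        name = f"{app['id'].strip('/')}_{app['servicePort']}"
        out += [f"frontend {name}",
                f"  bind *:{app['servicePort']}",
                f"  use_backend {name}",
                f"backend {name}",
                "  balance roundrobin"]
        for host, port in app["tasks"]:
            out.append(f"  server {host.replace('.', '_')}_{port} {host}:{port} check")
    return "\n".join(out) + "\n"


def sync(apps: List[Dict], group: str, running: str) -> Tuple[str, bool]:
    """Return the generated config and whether HAproxy needs a reload."""
    generated = render_config(apps, group)
    return generated, generated != running


apps = [
    {"id": "/nginx-internal", "servicePort": 10001,
     "labels": {"HAPROXY_GROUP": "internal"}, "tasks": [("10.0.3.52", 31187)]},
    {"id": "/nginx-external", "servicePort": 10000,
     "labels": {"HAPROXY_GROUP": "external"}, "tasks": [("10.0.3.53", 31980)]},
]
config, reload_needed = sync(apps, "internal", running="")
print(reload_needed)                      # True: first run
print(sync(apps, "internal", config)[1])  # False: nothing changed
```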

The figure shows requests being balanced by two pools of balancers: Internal (for access from the internal network) and External (for access from the Internet). Each balancer, except ELB, is HAproxy plus Marathon-lb. ELB is the load balancer in the Amazon AWS infrastructure.

To set up Marathon-lb, I used a separate virtual machine at 10.0.3.61. The official documentation also shows how to run Marathon-lb in a Docker container, as a task launched from Marathon.

Setting up Marathon-lb and HAproxy is quite easy. We need the latest stable release of HAproxy; for Ubuntu 14.04 that is version 1.6:

    apt-get install software-properties-common
    add-apt-repository ppa:vbernat/haproxy-1.6
    apt-get update
    apt-get install haproxy

Download the Marathon-lb code:

    mkdir /marathon-lb/
    cd /marathon-lb/
    git clone https://github.com/mesosphere/marathon-lb .

Install the Python packages it needs:

    apt install python3-pip
    pip3 install -r requirements.txt

Generate the key and certificate that marathon-lb expects by default:

    cd /etc/ssl
    openssl req -x509 -newkey rsa:2048 -keyout key.pem -out cert.pem -days 365 -nodes
    cat key.pem >> mesosphere.com.pem
    cat cert.pem >> mesosphere.com.pem

Run marathon-lb for the first time:

    /marathon-lb/marathon_lb.py --marathon http://my_marathon_ip:8080 --group internal

Now add a test application to Marathon:

    {
      "id": "nginx-internal",
      "container": {
        "type": "DOCKER",
        "docker": {
          "image": "nginx:1.7.7",
          "network": "BRIDGE",
          "portMappings": [
            { "hostPort": 0, "containerPort": 80, "servicePort": 10001 }
          ],
          "forcePullImage": true
        }
      },
      "instances": 1,
      "cpus": 0.1,
      "mem": 65,
      "healthChecks": [{
        "protocol": "HTTP",
        "path": "/",
        "portIndex": 0,
        "timeoutSeconds": 10,
        "gracePeriodSeconds": 10,
        "intervalSeconds": 2,
        "maxConsecutiveFailures": 10
      }],
      "labels": {
        "HAPROXY_GROUP": "internal"
      }
    }

Run marathon_lb.py again once the application is running in Marathon:

    /marathon-lb/marathon_lb.py --marathon http://10.0.3.11:8080 --group internal
    marathon_lb: fetching apps
    marathon_lb: GET http://10.0.3.11:8080/v2/apps?embed=apps.tasks
    marathon_lb: got apps ['/nginx-internal']
    marathon_lb: setting default value for HAPROXY_BACKEND_REDIRECT_HTTP_TO_HTTPS
    ...
    marathon_lb: setting default value for HAPROXY_BACKEND_HTTP_HEALTHCHECK_OPTIONS
    marathon_lb: generating config
    marathon_lb: HAProxy dir is /etc/haproxy
    marathon_lb: configuring app /nginx-internal
    marathon_lb: frontend at *:10001 with backend nginx-internal_10001
    marathon_lb: adding virtual host for app with id /nginx-internal
    marathon_lb: backend server 10.0.3.52:31187 on 10.0.3.52
    marathon_lb: reading running config from /etc/haproxy/haproxy.cfg
    marathon_lb: running config is different from generated config - reloading
    marathon_lb: writing config to temp file /tmp/tmp02nxplxl
    marathon_lb: checking config with command: ['haproxy', '-f', '/tmp/tmp02nxplxl', '-c']
    Configuration file is valid
    marathon_lb: moving temp file /tmp/tmp02nxplxl to /etc/haproxy/haproxy.cfg
    marathon_lb: No reload command provided, trying to find out how to reload the configuration
    marathon_lb: we seem to be running on a sysvinit based system
    marathon_lb: reloading using /etc/init.d/haproxy reload
     * Reloading haproxy haproxy
    marathon_lb: reload finished, took 0.02593827247619629 seconds

Note the HAPROXY_GROUP label: marathon-lb only configures applications whose label matches the --group it was started with.

As a result, haproxy.cfg now contains a frontend on port 10001 that forwards requests to 10.0.3.52:31187.

HAproxy is now listening on this port:

root@mesos-lb# netstat -tulpn
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
...
tcp 0 0 0.0.0.0:10001 0.0.0.0:* LISTEN 10285/haproxy
...

The corresponding part of haproxy.cfg:

cat /etc/haproxy/haproxy.cfg
...
frontend nginx-internal_10001
  bind *:10001
  mode http
  use_backend nginx-internal_10001

backend nginx-internal_10001
  balance roundrobin
  mode http
  option forwardfor
  http-request set-header X-Forwarded-Port %[dst_port]
  http-request add-header X-Forwarded-Proto https if { ssl_fc }
  option httpchk GET /
  timeout check 10s
  server 10_0_3_52_31187 10.0.3.52:31187 check inter 2s fall 11

If the number of instances is increased to 2 in Marathon, backend nginx-internal_10001 will list 2 servers.
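The link between the app definition and these entries can be sketched in Python.

- The frontend and backend name (nginx-internal_10001) is the app id plus the servicePort.
- Each running task adds one server line.
- `check inter 2s fall 11` lines up with intervalSeconds 2 and maxConsecutiveFailures 10. Judging by the output above, fall is one more than the failure count.

Both helpers, render_backend and scale_request, are hypothetical. render_backend reproduces only a subset of the lines shown above and is not marathon-lb's template engine. scale_request builds a request for Marathon's PUT /v2/apps/<app-id> endpoint.

```python
import json
import urllib.request


def render_backend(app_id, service_port, tasks, interval_s, max_failures):
    """Render a frontend/backend pair similar to the haproxy.cfg listing above.

    tasks is a list of (host, port) tuples, one per running task.
    """
    name = f"{app_id.lstrip('/')}_{service_port}"
    lines = [
        f"frontend {name}",
        f"  bind *:{service_port}",
        "  mode http",
        f"  use_backend {name}",
        f"backend {name}",
        "  balance roundrobin",
        "  mode http",
        "  option httpchk GET /",
    ]
    for host, port in tasks:
        # Server name: dots in the IP become underscores, then the port is appended.
        server = f"{host.replace('.', '_')}_{port}"
        # Assumption: 'fall' = maxConsecutiveFailures + 1 (10 -> 11 in the listing above).
        lines.append(
            f"  server {server} {host}:{port} check inter {interval_s}s fall {max_failures + 1}"
        )
    return "\n".join(lines)


def scale_request(marathon_url, app_id, instances):
    """Build (without sending) a Marathon request that changes an app's instance count."""
    return urllib.request.Request(
        url=f"{marathon_url.rstrip('/')}/v2/apps/{app_id.lstrip('/')}",
        data=json.dumps({"instances": instances}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# One task, as in the listing above.
print(render_backend("/nginx-internal", 10001, [("10.0.3.52", 31187)], 2, 10))

# Scale to 2 instances; uncomment urlopen to send it to a real Marathon.
req = scale_request("http://10.0.3.11:8080", "/nginx-internal", 2)
# urllib.request.urlopen(req)

# The second task's address below is hypothetical: two tasks give two server lines.
two = render_backend("/nginx-internal", 10001,
                     [("10.0.3.52", 31187), ("10.0.3.53", 31245)], 2, 10)
```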

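Internal services, then, are reached through the load balancer on the application's service port. For external applications a different mechanism is more convenient: virtual host mapping in HAproxy, where HAproxy chooses the backend by the host name in the request.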

The scheme looks like this:

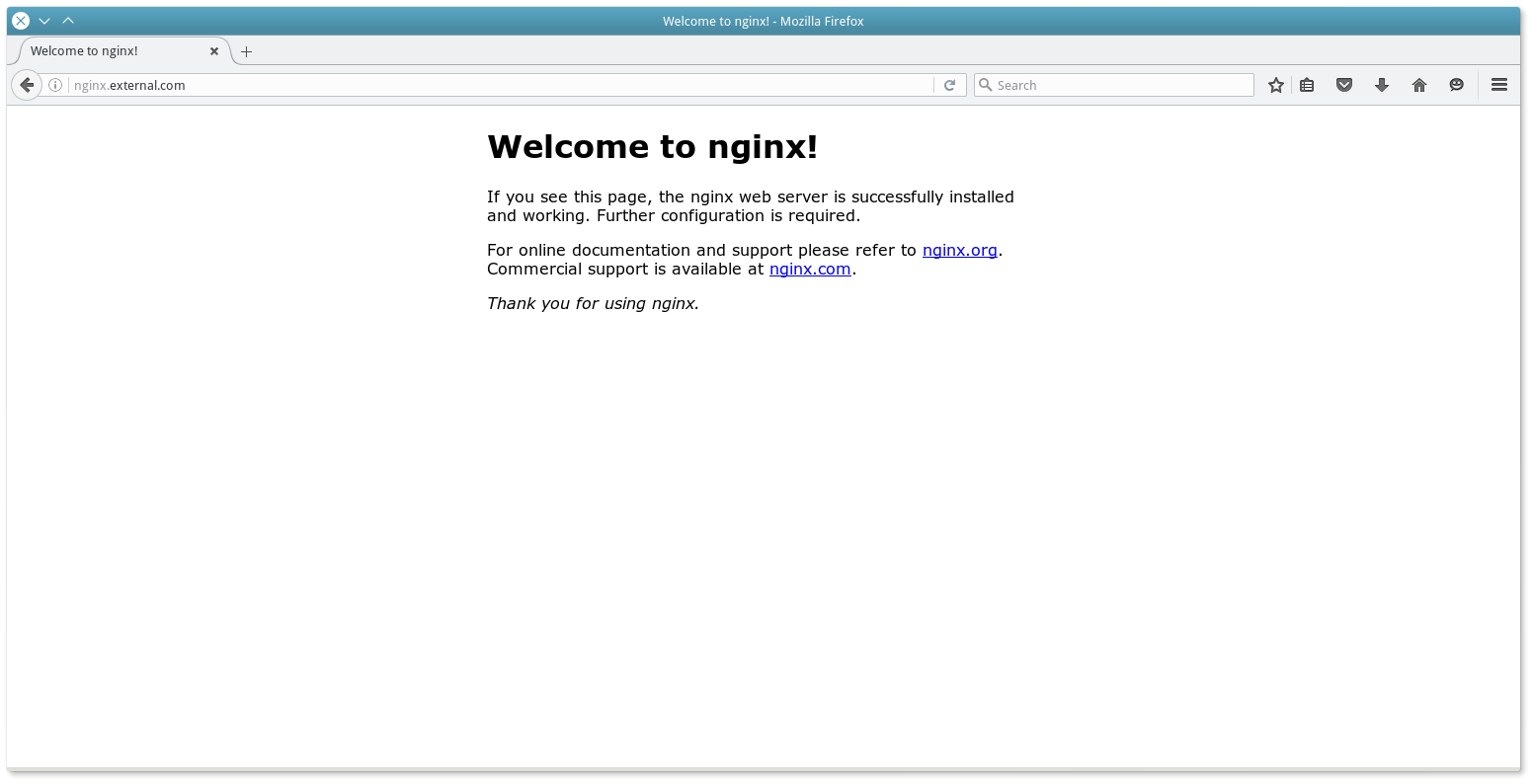

{
  "id": "nginx-external",
  "container": {
    "type": "DOCKER",
    "docker": {
      "image": "nginx:1.7.7",
      "network": "BRIDGE",
      "portMappings": [
        { "hostPort": 0, "containerPort": 80, "servicePort": 10000 }
      ],
      "forcePullImage": true
    }
  },
  "instances": 1,
  "cpus": 0.1,
  "mem": 65,
  "healthChecks": [{
    "protocol": "HTTP",
    "path": "/",
    "portIndex": 0,
    "timeoutSeconds": 10,
    "gracePeriodSeconds": 10,
    "intervalSeconds": 2,
    "maxConsecutiveFailures": 10
  }],
  "labels": {
    "HAPROXY_GROUP": "external",
    "HAPROXY_0_VHOST": "nginx.external.com"
  }
}

The HAPROXY_0_VHOST label sets the virtual host name under which HAproxy will serve the application.
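How the HAPROXY_0_VHOST label becomes routing rules can be sketched in Python. The ACL and backend names are copied from the marathon_http_in frontend in the configuration shown further on: dots in the host name become underscores, followed by the app id. The "0" refers to the app's first service port. The function vhost_rules is hypothetical and handles only that single-port case.

```python
def vhost_rules(app):
    """Return the marathon_http_in ACL lines for an app's HAPROXY_0_VHOST label.

    The '0' in HAPROXY_0_VHOST refers to portMappings[0]; other ports are
    ignored in this simplified sketch.
    """
    vhost = app.get("labels", {}).get("HAPROXY_0_VHOST")
    if not vhost:
        return []  # no virtual host requested: only the service-port frontend exists
    app_name = app["id"].lstrip("/")
    service_port = app["container"]["docker"]["portMappings"][0]["servicePort"]
    backend = f"{app_name}_{service_port}"
    acl = f"host_{vhost.replace('.', '_')}_{app_name}"
    return [
        f"acl {acl} hdr(host) -i {vhost}",
        f"use_backend {backend} if {acl}",
    ]


# Trimmed version of the nginx-external definition above.
app = {
    "id": "nginx-external",
    "container": {"docker": {"portMappings": [{"servicePort": 10000}]}},
    "labels": {"HAPROXY_GROUP": "external", "HAPROXY_0_VHOST": "nginx.external.com"},
}
for line in vhost_rules(app):
    print(line)
```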

Now run marathon_lb.py again, this time for the external group:

/marathon-lb/marathon_lb.py --marathon http://10.0.3.11:8080 --group external
marathon_lb: fetching apps
marathon_lb: GET http://10.0.3.11:8080/v2/apps?embed=apps.tasks
marathon_lb: got apps ['/nginx-internal', '/nginx-external']
marathon_lb: setting default value for HAPROXY_HTTP_FRONTEND_ACL_WITH_AUTH
...
marathon_lb: generating config
marathon_lb: HAProxy dir is /etc/haproxy
marathon_lb: configuring app /nginx-external
marathon_lb: frontend at *:10000 with backend nginx-external_10000
marathon_lb: adding virtual host for app with hostname nginx.external.com
marathon_lb: adding virtual host for app with id /nginx-external
marathon_lb: backend server 10.0.3.53:31980 on 10.0.3.53
marathon_lb: reading running config from /etc/haproxy/haproxy.cfg
marathon_lb: running config is different from generated config - reloading
marathon_lb: writing config to temp file /tmp/tmpcqyorq8x
marathon_lb: checking config with command: ['haproxy', '-f', '/tmp/tmpcqyorq8x', '-c']
Configuration file is valid
marathon_lb: moving temp file /tmp/tmpcqyorq8x to /etc/haproxy/haproxy.cfg
marathon_lb: No reload command provided, trying to find out how to reload the configuration
marathon_lb: we seem to be running on a sysvinit based system
marathon_lb: reloading using /etc/init.d/haproxy reload
 * Reloading haproxy haproxy
marathon_lb: reload finished, took 0.02756667137145996 seconds

The resulting HAproxy configuration:

cat /etc/haproxy/haproxy.cfg
...
frontend marathon_http_in
  bind *:80
  mode http
  acl host_nginx_external_com_nginx-external hdr(host) -i nginx.external.com
  use_backend nginx-external_10000 if host_nginx_external_com_nginx-external

frontend marathon_http_appid_in
  bind *:9091
  mode http
  acl app__nginx-external hdr(x-marathon-app-id) -i /nginx-external
  use_backend nginx-external_10000 if app__nginx-external

frontend marathon_https_in
  bind *:443 ssl crt /etc/ssl/mesosphere.com.pem
  mode http
  use_backend nginx-external_10000 if { ssl_fc_sni nginx.external.com }

frontend nginx-external_10000
  bind *:10000
  mode http
  use_backend nginx-external_10000

backend nginx-external_10000
  balance roundrobin
  mode http
  option forwardfor
  http-request set-header X-Forwarded-Port %[dst_port]
  http-request add-header X-Forwarded-Proto https if { ssl_fc }
  option httpchk GET /
  timeout check 10s
  server 10_0_3_53_31980 10.0.3.53:31980 check inter 2s fall 11

Requests for nginx.external.com that reach HAproxy are thus forwarded to the nginx application running in the Mesos cluster.
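The four frontends above can be modeled in a few lines of Python:

- port 80 matches on the Host header;
- port 9091 matches on X-Marathon-App-Id;
- port 443 matches on the TLS SNI name;
- the service port 10000 forwards everything.

This is a simplified illustration. It assumes each ACL is an exact string comparison, case-insensitive where the config uses -i. pick_backend and the routing tables are hypothetical, and real HAproxy matching supports far more than this.

```python
from typing import Optional

BACKEND = "nginx-external_10000"
# Tables reproduced from the haproxy.cfg above (keys already lower-case).
VHOSTS = {"nginx.external.com": BACKEND}  # frontend marathon_http_in (:80)
APP_IDS = {"/nginx-external": BACKEND}    # frontend marathon_http_appid_in (:9091)


def pick_backend(port: int, headers: dict, sni: Optional[str] = None) -> Optional[str]:
    """Return the backend HAproxy would choose, or None if no rule matches."""
    # HTTP header names are case-insensitive, so normalise them first.
    h = {name.lower(): value for name, value in headers.items()}
    if port == 80:
        # hdr(host) -i: case-insensitive comparison of the Host header value.
        return VHOSTS.get(h.get("host", "").lower())
    if port == 9091:
        # hdr(x-marathon-app-id) -i: the same idea, keyed on the Marathon app id.
        return APP_IDS.get(h.get("x-marathon-app-id", "").lower())
    if port == 443:
        # { ssl_fc_sni nginx.external.com }: compare the SNI name (no -i flag).
        return VHOSTS.get(sni) if sni else None
    if port == 10000:
        # The per-app frontend sends everything to the app's backend.
        return BACKEND
    return None


print(pick_backend(80, {"Host": "nginx.external.com"}))  # nginx-external_10000
print(pick_backend(80, {"Host": "example.org"}))         # None
```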

Check the result in the browser:

HAproxy labels are also reflected in Marathon:

In addition, marathon-lb supports SSL configuration for HAproxy and sticky sessions. It can also obtain the data for building haproxy.cfg by subscribing to Marathon's event bus instead of polling the Marathon API, among other features.
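Marathon publishes its event bus as a Server-Sent Events stream at /v2/events, requested with `Accept: text/event-stream`. The sketch below is a minimal, hypothetical SSE parser in Python that turns such a stream into (event type, JSON payload) pairs. It is not marathon-lb's implementation. status_update_event is one of Marathon's event types, but the sample payload is trimmed for illustration.

```python
import json


def parse_sse(lines):
    """Yield (event_type, payload) pairs from an iterable of text/event-stream lines.

    Minimal sketch: only the 'event' and 'data' fields are handled, and every
    data payload is assumed to be JSON, as Marathon's events are.
    """
    event_type, data_lines = "message", []  # "message" is the SSE default type
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates an event.
            if data_lines:
                yield event_type, json.loads("\n".join(data_lines))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        elif line.startswith("event:"):
            event_type = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].lstrip())
    # An unterminated event at the end of the stream is discarded, as SSE requires.


sample = [
    "event: status_update_event\n",
    'data: {"appId": "/nginx-external", "taskStatus": "TASK_RUNNING"}\n',
    "\n",
]
for event_type, payload in parse_sse(sample):
    print(event_type, payload["appId"])

# Against a real Marathon (address taken from the logs above):
# import urllib.request
# req = urllib.request.Request("http://10.0.3.11:8080/v2/events",
#                              headers={"Accept": "text/event-stream"})
# with urllib.request.urlopen(req) as resp:
#     for event_type, payload in parse_sse(chunk.decode("utf-8") for chunk in resp):
#         print(event_type)
```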

AUTOSCALING

Anyone who has worked with Amazon AWS knows its handy ability to increase or decrease the number of virtual machines depending on load. A Mesos cluster can do this too.

The first implementation is marathon-autoscale, a script that uses the Marathon API to monitor the CPU and memory usage of an application's tasks and adjust the number of instances according to the specified conditions.
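The decision step of a CPU/memory autoscaler can be sketched in Python. All thresholds, the scaling factor, and the instance limits below are hypothetical defaults, not marathon-autoscale's actual parameters. The resulting count would then be sent to Marathon with a PUT /v2/apps/<app-id> request carrying {"instances": N}.

```python
import math


def desired_instances(current, avg_cpu, avg_mem,
                      max_cpu=80.0, max_mem=80.0,  # % usage that triggers scale-up (hypothetical)
                      min_cpu=20.0, min_mem=20.0,  # % usage that allows scale-down (hypothetical)
                      factor=1.5, min_instances=1, max_instances=10):
    """Return a new instance count from an app's average CPU and memory usage (%)."""
    if avg_cpu > max_cpu or avg_mem > max_mem:
        # Either resource is overloaded: grow by `factor`, rounding up.
        target = math.ceil(current * factor)
    elif avg_cpu < min_cpu and avg_mem < min_mem:
        # Both resources are mostly idle: shrink by `factor`, rounding down.
        target = math.floor(current / factor)
    else:
        target = current
    # Never leave the configured [min_instances, max_instances] range.
    return max(min_instances, min(max_instances, target))


print(desired_instances(2, avg_cpu=90.0, avg_mem=30.0))  # 3
```

Scaling up on *either* resource but down only when *both* are idle is a deliberately conservative choice in this sketch. It avoids removing instances an app still needs for one of the two resources.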

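The second implementation is marathon-lb-autoscale, which scales applications by the number of requests per second. It takes the request statistics from Marathon-lb (that is, from HAproxy) and changes the number of instances through Marathon.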
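RPS-based scaling comes down to two steps:

1. Derive the request rate from two readings of a cumulative request counter (HAproxy's statistics provide such counters).
2. Divide that rate by the rate one instance is expected to handle.

The per-instance target, the limits, and both function names are hypothetical. This sketch illustrates the idea and is not marathon-lb-autoscale's code.

```python
import math


def rps_from_samples(count_then, count_now, seconds):
    """Requests per second between two readings of a cumulative request counter."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # A counter that went backwards (e.g. after an HAproxy reload) counts as 0 requests.
    return max(0, count_now - count_then) / seconds


def instances_for_rps(rps, target_rps_per_instance, min_instances=1, max_instances=20):
    """Instances needed so that each one serves at most target_rps_per_instance."""
    if target_rps_per_instance <= 0:
        raise ValueError("target_rps_per_instance must be positive")
    needed = math.ceil(rps / target_rps_per_instance)
    return max(min_instances, min(max_instances, needed))


rps = rps_from_samples(count_then=1000, count_now=1600, seconds=10)  # 60.0
print(instances_for_rps(rps, target_rps_per_instance=25))          # 3
```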


http://blog.ipeacocks.info/2016/06/mesos-cluster-management.html

References:

Getting Started

https://www.digitalocean.com/community/tutorials/how-to-configure-a-production-ready-mesosphere-cluster-on-ubuntu-14-04

https://open.mesosphere.com/advanced-course/introduction/

https://open.mesosphere.com/getting-started/install/

http://iankent.uk/blog/a-quick-introduction-to-apache-mesos/

http://frankhinek.com/tag/mesos/

https://mesosphere.github.io/marathon/docs/service-discovery-load-balancing.html

https://mesosphere.github.io/marathon/

https://mesosphere.github.io/marathon/docs/ports.html

https://beingasysadmin.wordpress.com/2014/06/27/managing-docker-clusters-using-mesos-and-marathon/

http://mesos.readthedocs.io/en/latest/

Docker

http://mesos.apache.org/documentation/latest/containerizer/#Composing

http://mesos.apache.org/documentation/latest/docker-containerizer/

https://mesosphere.github.io/marathon/docs/native-docker.html

Mesos-DNS

https://tech.plista.com/devops/mesos-dns/

http://programmableinfrastructure.com/guides/service-discovery/mesos-dns-haproxy-marathon/

http://mesosphere.github.io/mesos-dns/docs/tutorial-systemd.html

http://mesosphere.github.io/mesos-dns/docs/configuration-parameters.html

https://mesosphere.github.io/mesos-dns/docs/tutorial.html

https://mesosphere.github.io/mesos-dns/docs/tutorial-forward.html

https://github.com/mesosphere/mesos-dns

Marathon-lb

https://mesosphere.com/blog/2015/12/04/dcos-marathon-lb/

https://mesosphere.com/blog/2015/12/13/service-discovery-and-load-balancing-with-dcos-and-marathon-lb-part-2/

https://docs.mesosphere.com/1.7/usage/service-discovery/marathon-lb/

https://github.com/mesosphere/marathon-lb

https://dcos.io/docs/1.7/usage/service-discovery/marathon-lb/usage/

Autoscaling

https://docs.mesosphere.com/1.7/usage/tutorials/autoscaling/

https://docs.mesosphere.com/1.7/usage/tutorials/autoscaling/cpu-memory/

https://docs.mesosphere.com/1.7/usage/tutorials/autoscaling/requests-second/

https://github.com/mesosphere/marathon-autoscale

Other

https://clusterhq.com/2016/04/15/resilient-riak-mesos/

https://github.com/CiscoCloud/mesos-consul/blob/master/README.md#comparisons-to-other-discovery-software

http://programmableinfrastructure.com/guides/load-balancing/traefik/

https://opensource.com/business/14/8/interview-chris-aniszczyk-twitter-apache-mesos

http://www.slideshare.net/akirillov/data-processing-platforms-architectures-with-spark-mesos-akka-cassandra-and-kafka

http://www.slideshare.net/mesosphere/scaling-like-twitter-with-apache-mesos

http://www.slideshare.net/subicura/mesos-on-coreos

https://www.youtube.com/watch?v=RciM1U_zltM

http://www.slideshare.net/JuliaMateo1/deploying-a-dockerized-distributed-application-in-mesos

Source: https://habr.com/ru/post/308812/