The future of computer technology: an overview of current trends

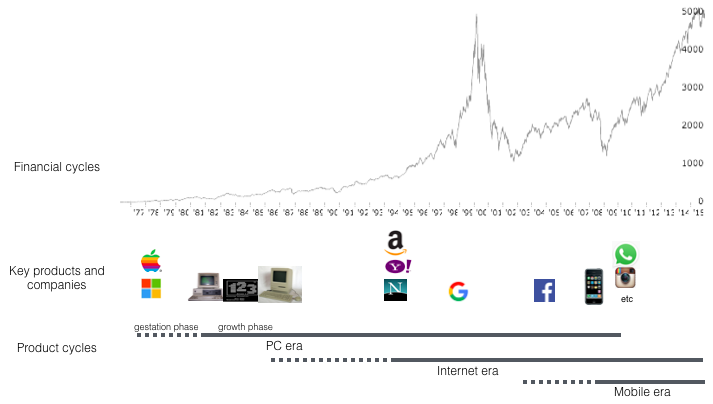

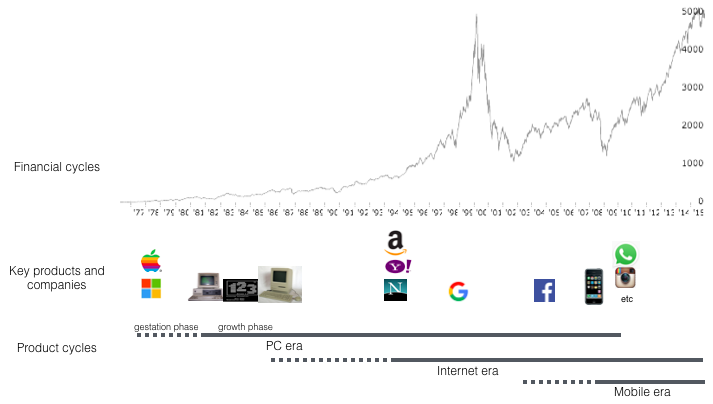

Information technology develops in two largely independent cycles: a product cycle and a financial cycle. Lately there has been no end of debate about which stage of the financial cycle we are in; a great deal of attention goes to financial markets, which sometimes behave unpredictably and fluctuate wildly. Product cycles, by contrast, receive relatively little attention, even though they are what actually moves information technology forward. By analyzing past experience, however, we can try to understand the current product cycle and predict how technology will develop.

Product cycles in high technology are driven by the interaction of platforms and applications: new platforms enable new applications, which in turn make those platforms more valuable, closing a positive feedback loop.

Small product cycles repeat all the time, but historically a big cycle has begun roughly once every 10–15 years - an era that completely changes the face of IT.

Financial and product cycles develop largely independently of each other.

The arrival of the PC prompted entrepreneurs to create the first word processors, spreadsheets, and many other desktop applications. The Internet brought search engines, online commerce, e-mail, social networks, SaaS business applications, and many other services. Smartphones spurred the development of mobile social networks and messengers, along with new kinds of services such as ride sharing. We are living in the middle of the mobile era, and many more interesting innovations are likely still to come.

Each era can be divided into two phases: 1) the formation phase, when the platform first appears on the market but is expensive, immature, and/or difficult to use; 2) the active phase, when a new product overcomes those shortcomings and sets off a period of rapid growth.

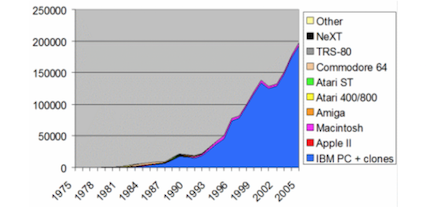

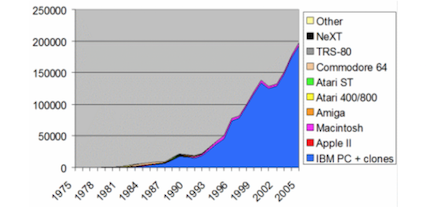

The Apple II computer was released in 1977, and the Altair 8800 in 1975, but the active phase of the PC era began with the release of the IBM PC in 1981.

PC sales per year (thousands)

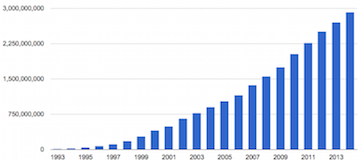

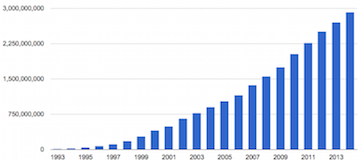

The formation phase of the Internet spanned the 1980s and early 1990s, when it was essentially a text-sharing tool used by scientists and the government. The release of NCSA Mosaic, the first widely used graphical browser, in 1993 marked the start of the Internet's active phase, which continues to this day.

The number of Internet users worldwide

Mobile phones already existed in the 1990s, and the first smartphones appeared in the early 2000s, but smartphones went mainstream in 2007–2008 with the release of the first iPhone and then the arrival of the Android platform. Since then the number of smartphone users has skyrocketed to about two billion, and by 2020 some 80% of the world's population is expected to have a smartphone.

Smartphone sales worldwide (millions)

If each cycle does last 10–15 years, the active phase of the next computing era will begin in just a few years, which means the new technology is already in its formation phase. Several major hardware and software trends already shed some light on the next era. In this article I want to discuss these trends and offer a few assumptions about what our future might look like.
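The timing argument here is simple arithmetic, and a few lines of Python make it explicit. The start years are the ones this article gives (IBM PC in 1981, NCSA Mosaic in 1993, the first iPhone in 2007). The function names and the 10–15 year window parameters are illustrative assumptions, not a forecasting model.

```python
# Start of the active phase of each era, as dated in the article.
ACTIVE_PHASE_START = {"PC": 1981, "Internet": 1993, "Mobile": 2007}


def gaps_between_eras(starts: dict[str, int]) -> list[int]:
    """Years between consecutive active-phase starts, in chronological order."""
    years = sorted(starts.values())
    return [later - earlier for earlier, later in zip(years, years[1:])]


def next_active_phase_window(
    last_start: int, min_len: int = 10, max_len: int = 15
) -> tuple[int, int]:
    """Earliest and latest start year of the next era, assuming a 10-15 year cycle."""
    return last_start + min_len, last_start + max_len


if __name__ == "__main__":
    print(gaps_between_eras(ACTIVE_PHASE_START))
    print(next_active_phase_window(ACTIVE_PHASE_START["Mobile"]))
```

With these dates the gaps between eras are 12 and 14 years, and a 10–15 year cycle would put the start of the next active phase somewhere between 2017 and 2022.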

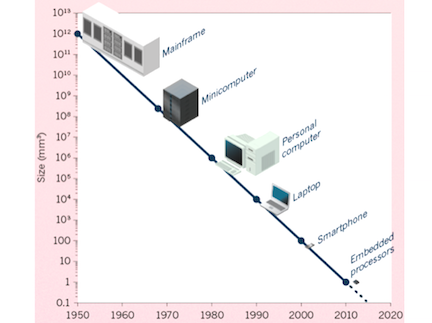

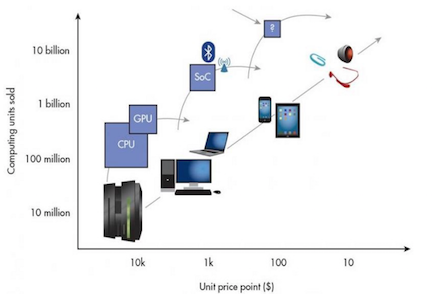

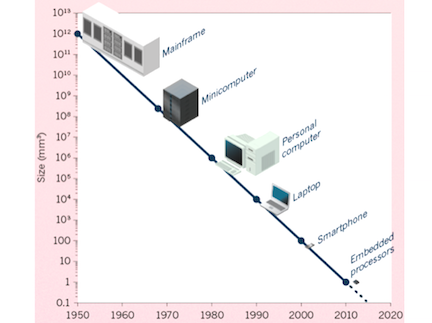

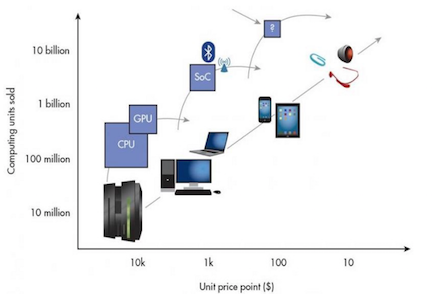

Hardware: compact, cheap and versatile

In the mainframe era, only large organizations could afford a computer. Minicomputers became affordable for smaller organizations, and personal computers for homes and offices.

Computers keep getting smaller at a steady pace.

We are now on the verge of a new era in which processors and sensors are becoming so cheap and compact that computers will soon outnumber people.

Two factors contribute to this. The first is the steady progress in semiconductor manufacturing over the past 50 years (Moore's Law). The second is what Chris Anderson calls the “peace dividend of the smartphone war”: the runaway success of smartphones has driven large investments in processors and sensors. Look inside a modern quadcopter, a VR headset, or almost any Internet of Things device and what will you see? That's right: mostly smartphone components.
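To get a feel for how much 50 years of compounding adds up to, here is a minimal sketch. It assumes the common formulation of Moore's Law in which transistor density doubles every two years. The article gives no doubling period, so `doubling_period_years` is an assumption, and the function name is hypothetical.

```python
def moore_growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years`, assuming a fixed doubling period."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    factor = moore_growth_factor(50)
    print(f"Growth over 50 years: about {factor:,.0f}x")
```

Under that assumption, 50 years is 25 doublings, a factor of roughly 33 million. Changing the doubling period changes the number dramatically, which is why the figure should be read as an order-of-magnitude illustration only.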

In the modern semiconductor era, attention has shifted from standalone processors to bundles of specialized chips known as systems-on-a-chip (SoCs).

Computer prices are steadily declining

A typical system-on-a-chip combines an energy-efficient ARM processor with a dedicated graphics processor, along with communications, power management, video processing, and other components.

Raspberry Pi Zero: a $5 Linux computer with a 1 GHz processor

This architecture has cut the minimum cost of a basic computing system from about $100 to about $10. A great example is the Raspberry Pi Zero, the first $5 Linux computer, which runs at 1 GHz. For about the same money you can buy a Wi-Fi-enabled microcontroller that runs a version of Python. Very soon such chips will cost less than a dollar, and it will be easy to embed them almost anywhere.

Even more significant progress is being made at the high end of the processor market. Graphics processors (GPUs), the best of which are made by NVIDIA, deserve particular attention. GPUs are useful not only for graphics but also for machine learning algorithms and for virtual and augmented reality devices. NVIDIA representatives also promise further significant GPU performance improvements in the near future.

The wildcard for the whole field of information technology remains quantum computing, which so far exists mainly in laboratories. If quantum computers become commercially viable, they could deliver a tremendous increase in performance, above all in fields such as biology and artificial intelligence.

Google's quantum computer

Software: the golden age of artificial intelligence

A lot of interesting things are happening in software today. Distributed systems are a good example. They emerged because the number of devices has grown manyfold in recent years, creating the need to parallelize tasks across multiple machines, exchange data between devices, and coordinate their work. Distributed systems such as Hadoop and Spark, designed for working with large data sets, deserve special attention. Also worth mentioning is blockchain technology, which secures data and assets and was first implemented in the Bitcoin cryptocurrency.

But perhaps the most exciting discoveries today are being made in artificial intelligence (AI), a field with a long history of ups and downs. Alan Turing himself predicted that by 2000 machines would be able to imitate humans. That prediction has not yet come true, but there are good reasons to believe that AI is finally entering its golden age.

“Machine learning is a core, transformative way by which we’re rethinking everything we’re doing,” - Google CEO Sundar Pichai.

The greatest excitement in AI centers on deep learning, a method popularized by a well-known Google project launched in 2012. The project used a large cluster of computers that learned to recognize cats in YouTube videos. Deep learning is based on artificial neural networks, a technology that dates back to the 1940s. It has recently become relevant again thanks to several factors: new algorithms, cheaper parallel computing, and the wide availability of large data sets.

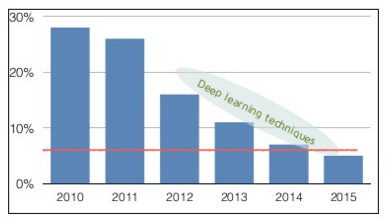

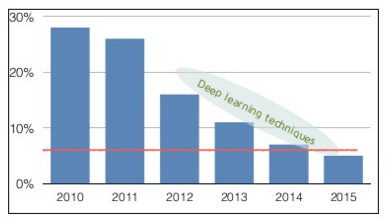

Error rates in the ImageNet contest (the red line corresponds to human performance)

It would be easy to dismiss deep learning as just another Silicon Valley buzzword. However, the interest in it is backed by impressive theoretical and practical results. For example, before deep learning was introduced, the winning entries in ImageNet, a well-known computer vision competition, had error rates of 20–30%. Once it was applied, accuracy improved steadily, and in 2015 machine performance surpassed human performance.

Many papers, data sets, and software tools related to deep learning are publicly available, which allows individuals and small organizations to build their own high-performance applications. WhatsApp needed only 50 developers to build a popular messenger serving 900 million users. By comparison, earlier generations of messengers required more than a thousand (and sometimes several thousand) developers. Something similar is now happening in AI: software such as Theano and TensorFlow, combined with cloud data centers for training and inexpensive GPUs for computation, lets small teams build innovative AI systems.

For example, here is a small project by a single programmer who used TensorFlow to colorize black-and-white photos:

From left to right: black-and-white photo, colorized photo, original color photo. (Source)

And here is a small startup's application that classifies objects in real time:

The Teradeep application identifies objects in real time

Hmm, I have seen this somewhere before:

A scene from the movie Terminator 2: Judgment Day (1991)

One of the first deep learning applications released by a large company was the amazingly smart image search in Google Photos:

Searching photos (without metadata) for the phrase "big ben"

Soon we will see AI make significant gains across all kinds of software and hardware: voice assistants, search engines, chatbots, 3D scanners, language translators, cars, drones, medical imaging systems, and much more.

“The business plans of the next 10,000 startups are easy to forecast: Take X and add AI,” - Kevin Kelly.

Startups building AI-focused products should stay sharply focused on specific applications in order to compete with the large companies for which AI is a top priority. AI systems get better as more data is collected for them, which creates a flywheel driven by the so-called data network effect (more users → more data → better products → more users). The mapping service Waze, for example, used the data network effect to produce better maps than its far more established competitors. Anyone who intends to build a startup around AI should follow a similar strategy.
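The data network effect loop (more users → more data → better products → more users) can be illustrated with a toy simulation. This is only a sketch: the saturating quality curve, the growth rule, and every parameter name and value (`data_per_user`, `half_quality_data`, `max_growth_rate`) are assumptions made up for illustration, not measurements from any real product.

```python
def simulate_flywheel(
    users: float,
    rounds: int,
    data_per_user: float = 1.0,
    half_quality_data: float = 1_000.0,
    max_growth_rate: float = 0.10,
) -> list[tuple[float, float, float]]:
    """Return (users, data, quality) after each round of a toy feedback loop."""
    data = 0.0
    history = []
    for _ in range(rounds):
        # More users -> more data collected this round.
        data += users * data_per_user
        # More data -> better product; quality rises toward 1.0 but never reaches it.
        quality = data / (data + half_quality_data)
        # Better product -> more users; growth is proportional to quality.
        users += users * max_growth_rate * quality
        history.append((users, data, quality))
    return history


if __name__ == "__main__":
    small = simulate_flywheel(users=100, rounds=20)
    large = simulate_flywheel(users=10_000, rounds=20)
    print(f"small start: quality {small[-1][2]:.2f}, users {small[-1][0]:,.0f}")
    print(f"large start: quality {large[-1][2]:.2f}, users {large[-1][0]:,.0f}")
```

In this toy model the product that starts with more users reaches high quality almost immediately, while the smaller one needs many rounds to catch up. That is one way to read the advice above: a startup that concentrates on a narrow application can accumulate the relevant data faster within that niche.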

Software + hardware: new computers

A number of promising platforms are currently in the formation phase and may soon move into the active phase as they incorporate the latest advances in software and hardware. Although these platforms look different and are packaged differently, they have one thing in common: they rely on the latest advances in smart virtualization. Let us look at some of them:

Cars. Large technology companies such as Google, Apple, Uber, and Tesla are investing heavily in autonomous (self-driving) cars. Semi-autonomous cars such as the Tesla Model S are already on the market, and updated, more advanced models are expected soon. Building a fully autonomous car will take some time, but there is reason to believe the wait will be no more than five years. In fact, there are already prototypes of fully autonomous cars that drive no worse than humans. However, for cultural and regulatory reasons, such cars will have to drive much better than humans before they are widely adopted.

A self-driving car maps its environment

Investment in self-driving cars will undoubtedly keep growing. In addition to technology companies, the major car manufacturers have also started to take autonomy seriously. Plenty of interesting startup products are still to come. Deep learning software has become so effective that today a single developer is able to build a semi-autonomous car.

A homemade self-driving car

Drones. Modern drones are built from up-to-date hardware (mostly smartphone components plus mechanical parts) but run relatively simple software. Improved models equipped with computer vision and other forms of AI will appear soon, making drones safer, easier to operate, and more useful. Drone photography and video will be popular not only with hobbyists but, more importantly, will find commercial applications. In addition, there are many dangerous jobs, including work at height, that would be much safer to do with drones.

Fully autonomous drone flight

Internet of Things. The most basic benefits of IoT devices are energy efficiency, security, and convenience. Nest and Dropcam products are good examples of the first two. As for convenience, consider the Amazon Echo.

Most people think the Echo is just a gimmick until they use it once and are surprised by how convenient it is. It brilliantly demonstrates how effective voice control can be as the basis of a user interface. Of course, it will be a while before we see robots with general intelligence that can carry on a full conversation. But, as the Echo shows, computers can already handle fairly complex voice commands. As deep learning improves, computers will get better at understanding language.

Three main advantages: energy efficiency, security, convenience

IoT devices will also find use in business. For example, devices with sensors and network connectivity are widely used to monitor industrial equipment.

Wearable technology. Today, what wearable computers can do is limited by several factors: battery capacity, communications, and processing power. The most successful devices usually have a very narrow application, such as fitness tracking. As hardware components improve, wearables will, like smartphones, become more capable and open up opportunities for new applications. As with the Internet of Things, voice is expected to become the main user interface for wearable devices.

A miniature earpiece with artificial intelligence, from the film "Her"

Virtual reality. 2016 will be a very interesting year for VR: the release of the Oculus Rift and HTC Vive headsets (and possibly PlayStation VR) means that comfortable, immersive VR systems will finally become publicly available. VR developers will have to work hard to keep users out of the so-called “uncanny valley”, the effect in which a robot or other artificial object that looks almost, but not quite, real provokes revulsion in human observers.

Building a high-quality VR system requires high-quality displays (high resolution, high refresh rate, and low persistence), powerful graphics cards, and the ability to track the user's exact position (previous generations of VR systems could only track head rotation). This year, thanks to the new devices, users will for the first time be able to experience true presence: the senses are deceived so convincingly that the user feels completely immersed in the virtual world.

Oculus Rift Toybox demo

VR headsets will undoubtedly continue to evolve and eventually become more affordable. Developers still have work to do on new tools for presenting generated and/or captured VR content, on better machine vision for tracking the user's position and retrieving data about them directly from a phone or headset, and on distributed server systems for hosting large-scale virtual environments.

Creating a 3D virtual world with a VR headset

Augmented reality. AR will most likely mature only after VR, because full-fledged augmented reality requires all of VR's capabilities plus additional new technologies. For example, to merge real and virtual objects seamlessly in a single interactive scene, AR tools will need advanced, low-latency machine vision.

An augmented reality device, from the movie "Kingsman: The Secret Service"

Still, the era of augmented reality will probably arrive sooner than you think. This demo was filmed directly through the Magic Leap AR device:

Magic Leap demonstration: a virtual character in a real environment

The demo was filmed directly through the Magic Leap device on October 14, 2015. No special effects or compositing were used to create it.

Perhaps the 10–15 year cycles will not repeat and the mobile era will be the last of them. Or perhaps the next era will be shorter, or only a subset of the technologies discussed above will end up really mattering.

I prefer to think that we are at the intersection of several eras. The “peace dividend of the smartphone war” has been the rapid emergence of new devices and of advances in software, especially artificial intelligence, that can make those devices even smarter and more useful.

Some observers note that most of the new devices are still in their “awkward adolescence”: they may be imperfect and somewhat ridiculous, precisely because they have not yet entered the active phase. As with personal computers in the 1970s, the Internet in the 1980s, and smartphones in the early 2000s, we are seeing not the complete picture but only fragments of what today's technologies will become. Either way, the future is near: markets fluctuate, fashions come and go, but progress keeps moving confidently forward.

The development of product cycles in the field of high technology is due to the interaction of platforms and applications: new platforms allow you to create new applications that, in turn, increase the value of these platforms, thus closing the chain of positive feedback.

')

Small product cycles are repeated all the time, but historically it happens that once in 10–15 years another big cycle begins - an era that completely changes the face of IT.

Financial and product cycles develop mainly independently of each other.

Once the emergence of computers prompted entrepreneurs to create the first text editors, spreadsheets and many other PC applications. With the advent of the Internet, the world has seen search engines, online commerce, e-mail, social networks, business applications of the SaaS model, and many other services. Smartphones gave impetus to the development of mobile social networks and instant messengers, as well as the emergence of new types of services like carpooling. We live in the midst of the mobile era, and, apparently, we will have many more interesting innovations.

Each epoch can be divided into 2 phases: 1) the formation phase - when the platform first appears on the market, but is expensive, crude and / or difficult to handle; 2) the active phase - when a new product solves the mentioned disadvantages of the platform, thereby starting the period of its rapid development.

The Apple II computer was released in 1977, and the Altair 8800 in 1975, but the active phase of the PC era began with the release of the IBM PC in 1981.

PC sales per year (thous.)

The Internet formation phase began in the 1980s and early 1990s , when, in essence, it was a text-sharing tool used by scientists and the government. The release of the first browser, NCSA Mosaic, in 1993 marked the beginning of the intensive development phase of the Internet, which has not ended to this day.

The number of Internet users worldwide

In the 90s, mobile phones already existed, and the first smartphones appeared at the dawn of zero, but the widespread production of smartphones began in 2007–2008 with the release of the first iPhone, and then with the advent of the Android platform. Since then, the number of smartphone users has skyrocketed, and now their number has already reached about two billion. And by 2020, 80% of the world's population will have smartphones .

Sales of smartphones worldwide (million)

If the duration of each cycle is indeed 10–15 years, the active phase of the new computer era will begin in just a few years. It turns out that the new technology is already in the formation phase. To date, there are several major trends in the areas of hardware and software that allow us to partially shed light on the next era. In this article I want to discuss these trends and put forward several assumptions about how our future might look.

Hardware: compact, cheap and versatile

In the mainframe era, only large organizations could afford a computer. Mini-computers were available for smaller organizations, and computers for homes and offices.

The size of computers decreases with constant speed.

Now we are on the verge of a new era in which processors and sensors become so cheap and compact that there will soon be more computers than people.

Two factors contribute to this. First, the steady progress in semiconductor manufacturing over the past 50 years ( Moore's Law ). Secondly, what Chris Anderson calls the “peace dividends from the war of smartphones”: the dizzying success of smartphones has contributed to large investments in the development of processors and sensors. Look inside the modern quadcopter, virtual reality glasses or any device of the Internet of things - what will you see? That's right - mainly the components of the smartphone.

But in the modern era of semiconductors, all attention has shifted from individual processors to entire assemblies of special microcircuits, known as single-chip systems.

Prices for computers are steadily declining

An ordinary single-chip system combines an energy-efficient ARM processor and a special graphics processor, as well as information sharing devices, power management, video signal processing, and so on.

Raspberry Pi Zero: $ 5 Linux Computer with 1 GHz processor

This innovative architecture has reduced the minimum cost of basic computing systems from $ 100 to $ 10 per unit. A great example would be the Raspberry Pi Zero - the first 5-dollar computer on Linux with a frequency of 1 GHz. For the same money, you can purchase a Wi-Fi microcontroller that supports one of the versions of Python. Very soon, these microprocessors will cost less than a dollar, and we can easily embed them almost everywhere.

But more serious achievements are taking place today in the world of high-quality microprocessors. Separate attention deserve graphics processors , the best of which are manufactured by NVIDIA. Graphic processors are useful not only for graphics processing, but also when working with machine learning algorithms, as well as with virtual and augmented reality devices. However, NVIDIA representatives promise more significant performance improvements for GPUs in the near future.

The trump card of the whole sphere of information technology is still quantum computers, which still exist mainly in laboratories. But it is worth making them commercially attractive, and this will lead to a tremendous increase in productivity, primarily in the field of biology and artificial intelligence.

Quantum google computer

Software: the golden age of artificial intelligence

There are a lot of interesting things happening in the software world today. A good example is distributed systems. Their appearance is due to the repeated increase in the number of devices in recent years, which has caused the need to parallelize tasks on several machines, to establish the exchange of data between devices and to coordinate their work. Separate systems such as Hadoop or Spark , designed to work with large data sets , deserve special attention. It is also worth mentioning the blockchain technology, which ensures the security of data and resources and was first implemented in Bitcoin cryptocurrency.

But perhaps the most exciting discoveries are being made today in the field of artificial intelligence (AI), which has a long history of ups and downs. Even Alan Turing himself predicted that by 2000 cars would be able to imitate people. And although this prediction has not yet been realized, there are good reasons to believe that AI is finally entering a golden age of its development.

“Machine learning is a key, revolutionary way to rethink everything we do,” - Google CEO Sundar Pichai .

The greatest excitement in the field of AI is centered around the so-called in-depth training - a method that was widely covered in a well-known project of Google, launched in 2012. This project involved a high-performance network of computers, the purpose of which was to learn how to recognize cats on YouTube videos. The method of deep learning is based on artificial neural networks - a technology that originated in the 40s of the last century. Recently, this technology has become relevant again due to many factors : the emergence of new algorithms, reduction in the cost of parallel computing and the wide dissemination of large data sets.

The percentage of errors in the ImageNet contest (the red line corresponds to the person’s performance)

It is hoped that in-depth training will not be just another fashionable term in Silicon Valley. However, interest in this method of teaching is supported by impressive theoretical and practical results. For example, before the introduction of in-depth training, the admissible percentage of errors for the winners of ImageNet, a well-known computer vision competition, was 20–30%. But after its application, the correctness of the algorithms steadily grew, and in 2015, the performance of the machines exceeded the human performance.

Many documents, data packages, and software tools related to in-depth training are publicly available, allowing individuals and small organizations to create their own high-performance applications. WhatsApp Inc. it took only 50 developers to create a popular messenger for 900 million users . For comparison, the creation of messengers of previous generations required the involvement of over a thousand (and sometimes several thousand) developers. Something similar is happening now in the field of AI: software like Theano and TensorFlow in combination with cloud-based training data centers and inexpensive video cards for computing allow small development teams to create innovative AI systems.

For example, below is a small project of one programmer using TensorFlow to convert black and white photos into color:

From left to right: black and white photo, converted photo, color original photo. ( Source )

But a small startup application for the classification of items in real time:

Teradeep application identifies items in real time

Hmm, but somewhere I already saw it:

Fragment from movie Terminator 2: Judgment Day (1991)

One of the first applications from the large-scale company with the in-depth training was an amazingly smart Google Photos image search application:

Search for photos (without metadata) with the keyword phrase "big ben"

Soon we will see a significant increase in the productivity of AI in all areas of software and hardware: voice assistants, search engines, chat bots , 3D scanners , language translators, cars, drones, diagnostic imaging systems, and much more.

“It’s easy to predict the ideas of the next 10,000 startups: take X and add artificial intelligence,” Kevin Kelly .

Start-ups that create products with an emphasis on AI should remain extremely focused on certain applications in order to maintain competition with large companies for which AI is a top priority. AI systems become more efficient as the amount of data collected for them increases. It turns out something like a flywheel, constantly rotating due to the so-called data network effect (more users → more data → better products → more users). For example, the Wase map service team used the effect of a data network to make the quality of the maps provided better than their more venerable competitors. Anyone who intends to use AI for their startup should stick to a similar strategy.

Software + hardware: new computers

Now a number of promising platforms are at the stage of formation, which soon may well go on to the stage of development, as they combine the latest developments from the fields of software and hardware. And although these platforms may look different or have different configurations, they have one thing in common: the use of the latest advanced features of smart virtualization. Consider some of these platforms:

Cars. Large information technology companies like Google, Apple, Uber and Tesla invest a lot in the development of autonomous or unmanned vehicles. Semi-autonomous cars Tesla Model S are already on the market and the release of updated and more advanced models is expected soon. Creating a fully autonomous car will take some time, but there is reason to believe that there will be no more than five years to wait. In fact, there are already developments of fully autonomous cars that drive no worse than under human control. However, due to many aspects of a cultural and regulatory nature, such cars have to drive much better than human driven vehicles in order to be widely used.

Unmanned vehicle charts its environment

Undoubtedly, the volume of investment in unmanned vehicles will only grow. In addition to information technology companies, major car manufacturers have also begun to think about autonomy. We are still waiting for a lot of interesting startup products. Software depth training have become so effective that today a single developer the strength to make a semi-autonomous car.

Homemade unmanned vehicle

Drones Modern drones are equipped with the latest technology (mainly components of smartphones and mechanical parts), but have relatively simple software. Soon, improved models will appear, equipped with computer vision and other types of AI, which will make them safer, easier to operate and useful. Photo and video from drones will be popular not only among amateurs, but, more importantly, will find commercial application. In addition, there are many dangerous types of work, including high-altitude, for which it would be much safer to use drones.

Fully autonomous drone flight

Internet of things. The most basic advantages of IoT devices are their energy efficiency, safety and convenience. Good examples of the first two characteristics are the Nest and Dropcam products. As for convenience, pay attention to the Amazon Echo device.

Most people think that Echo is just another marketing ploy, but when they use it at least once, they are surprised at how convenient this device is. It brilliantly demonstrates the effectiveness of voice control as the basis of the user interface. Of course, we will not soon see robots with universal intelligence capable of supporting a full-fledged conversation. But, as Echo shows, computers are already able to cope with more or less complex voice commands. As the depth learning method improves, computers will learn to understand the language better.

3 main advantages: energy efficiency, safety, convenience

IoT devices will also find use in the business segment. For example, devices with sensors and network connectivity are widely used for the operational control of industrial equipment.

Wearable technology. Today, the functionality of wearable computers varies depending on several factors: battery capacity, means of communication and data processing. The most successful devices usually have a very narrow scope of application: for example, fitness tracking. As hardware components improve, wearables will, like smartphones, expand their functionality, thereby opening up opportunities for new applications. As in the case of the Internet of Things, it is assumed that the voice will become the main user interface for managing wearable devices.

Miniature earpiece with artificial intelligence, a still from the film "Her"

Virtual reality. The year 2016 will be very interesting for the development of VR: the release of the Oculus Rift and HTC Vive headsets (and, possibly, PlayStation VR) means that comfortable and immersive VR systems will finally become publicly available. The developers of VR devices will have to work hard to keep users from experiencing the so-called “uncanny valley” effect, in which an artificial object that looks almost, but not quite, real causes unease in human observers.

Creating high-quality VR systems requires high-quality screens (with high resolution, high refresh rates and low persistence), powerful graphics cards and the ability to track the exact position of the user (previous generations of VR systems could only track the rotation of the user's head). This year, thanks to new devices, users will for the first time be able to experience a full sense of presence: the senses are deceived so convincingly that the user feels completely immersed in the virtual world.

Oculus Rift Toybox demo

Undoubtedly, VR headsets will continue to evolve and will eventually become more affordable. Developers still have work to do in areas such as new tools for creating rendered and/or filmed VR content, machine vision for tracking and scanning directly from phones and headsets, and distributed server systems for hosting large-scale virtual environments.

Creating a virtual world in 3D with VR glasses

Augmented reality. AR will most likely mature only after VR, because full-fledged augmented reality requires most of what VR requires plus additional new technologies. For example, to merge real and virtual objects convincingly in one interactive scene, AR devices will need advanced machine vision with low latency.

Augmented reality device, a still from the movie "Kingsman: The Secret Service"

But, most likely, the era of augmented reality will arrive sooner than you think. This demo was filmed directly through Magic Leap's AR device:

Demonstration of Magic Leap: a virtual character in a real environment

This demo was filmed directly through the Magic Leap device on October 14, 2015. When creating it, neither special effects nor compositing were used.

What's next?

Perhaps the 10–15 year cycles will not repeat, and the mobile era will be the last of them. Or maybe the next era will be shorter, or only a subset of the technologies discussed above will turn out to be really important.

I prefer to think that we are now at the intersection of several eras. The “peace dividend of the smartphone war” has been the rapid emergence of new devices and of software advances, especially in artificial intelligence, that can make these devices even smarter and more useful.

Some researchers note that most of the new devices are still in an “awkward adolescence”: they may be imperfect and even somewhat ridiculous, because they have not yet entered the active phase. As with personal computers in the 70s, the Internet in the 80s and smartphones in the early 2000s, we see not the complete picture but only fragments of what current technologies will turn into. In any case, the future is near: markets fluctuate, fashions come and go, but progress keeps moving steadily forward.

Source: https://habr.com/ru/post/308776/
