Questionnaire errors. Error 1: sample bias. 8 ways to attract the right respondents

In this series of articles, I have tried to collect my own mistakes and discoveries related to inconspicuous traps in research. When research is taught, a lot of attention is usually paid to the choice of methodology, data collection technique and statistical processing, but almost no one talks about the organizational nuances that can distort the results or make a study fail completely. Many of them will seem obvious once you read about them, but it sometimes takes years to notice them and start taking them into account in your own research. I have been conducting, teaching and leading research for more than 15 years. Having often encountered business research in IT companies and seen it from the inside, I have become convinced that even experienced researchers rarely take these traps into account.

The material will therefore be useful to those who research users (customers, employees, students) with questionnaires, whether they do it themselves or commission such research. Professional researchers will find this article less interesting than amateurs will.

Can user needs be researched with surveys?

Sometimes a company needs to get users' opinions about a product (or employees' opinions about the company) to find out their needs and hidden objections. A simple solution is usually chosen: a questionnaire with seemingly logical and simple questions is compiled and sent to users. The returned questionnaires are treated as a representative sample (reflecting the views of typical users), processed with statistical methods and visualized, and a report is prepared for decision-making and strategy development. Often it is an erroneous and useless report.

One of the most insidious problems of such research is that needs are very difficult to investigate, yet it almost always seems that the research was successful.

In this and the following articles I will talk about several common mistakes in researching user needs and about ways to reduce their impact on the results.

Error 1. Sample bias, or who are all these people and why do they answer you?

Everyone who has ever worked with questionnaires knows that only a small share of them come back, sometimes less than 10%.

Many publications are devoted to ways of reducing this evil, including advice on statistical sampling. Strictly or not, the returned part of the questionnaires is often accepted as a representative sample of the general population (the entire set of users whose opinions the researcher wants to study), and researchers work with what they have. Sometimes this selectivity is completely invisible, for example, when a survey invitation is sent out untargeted and whoever happened to respond is treated as the sample.

Example. A large IT company (several thousand employees) conducted a survey of employee satisfaction with the work of the HR service. We were invited to help only at the stage of processing the results. Before that, the department's staff had compiled the questionnaire themselves and launched the survey by sending invitations to employees via corporate mail. They did not take care of participant motivation or of “administrative leverage” (almost the most important part of any organizational research) and simply counted on a good response from the staff. As a result, only 15% of the questionnaires came back. They were answered mainly by new or inexperienced employees, who had almost no experience with this department yet but filled out the questionnaires willingly and, as a rule, gave inflated scores. The most important group, the heads of departments, was represented by only three people out of several dozen, the most loyal ones. The chance to draw attention to the survey had been missed, and a repeat survey was impossible (the organizers would have been accused of spamming). So they chose an “elegant” solution: they presented the results to management as percentages for each group of employees, without stating the number of participants. According to the report, the heads of departments were extremely satisfied with the unit's work. Such a report lives until a manager asks how many people from each group actually took part in the survey. But such questions are rarely asked, and organizational research that none of the employees pays attention to flourishes.
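The trap in this example disappears as soon as every percentage is shown together with the number of people behind it. Below is a minimal Python sketch of such a report, not the tool used in the story: it assumes each answer is stored as a hypothetical (group, satisfied) pair and that the number of invited employees per group is known. The `min_n=10` threshold is an arbitrary illustration, not a statistical rule.

```python
from dataclasses import dataclass


@dataclass
class GroupSummary:
    group: str
    invited: int
    responded: int
    satisfied_share: float  # share of this group's respondents who said they were satisfied

    @property
    def response_rate(self) -> float:
        return self.responded / self.invited if self.invited else 0.0


def summarize(invited: dict[str, int], answers: list[tuple[str, bool]]) -> list[GroupSummary]:
    """Aggregate (group, satisfied) answers per group, keeping the raw counts."""
    rows = []
    for group, n_invited in invited.items():
        group_answers = [satisfied for g, satisfied in answers if g == group]
        n = len(group_answers)
        share = sum(group_answers) / n if n else 0.0
        rows.append(GroupSummary(group, n_invited, n, share))
    return rows


def format_row(row: GroupSummary, min_n: int = 10) -> str:
    """Render one line of the report; always print n next to the percentage."""
    warning = "  <- too few answers to generalize" if row.responded < min_n else ""
    return (f"{row.group}: {row.satisfied_share:.0%} satisfied "
            f"(n={row.responded} of {row.invited}, "
            f"response rate {row.response_rate:.0%}){warning}")


if __name__ == "__main__":
    invited = {"heads of departments": 40, "new employees": 200}
    answers = [("heads of departments", True)] * 3 + [("new employees", True)] * 20
    for row in summarize(invited, answers):
        print(format_row(row))
```

With three satisfied heads out of forty invited, the line for that group still says 100% satisfied, but the n and the warning next to it make the real weight of that figure obvious.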

Let us examine three reasons for sample bias:

1) the wrong choice of channel for finding participants,

2) responsiveness,

3) mercenary motives.

1. Wrong choice of channel for finding participants

In almost any statistics textbook you will find a warning about the sample bias that arises if you survey, say, the members of only one Internet forum (while the user population is much wider), survey employees in the canteen during working hours, or poll the most accessible group of users (for example, those who came to the presentation of a new product): do their opinions match those of other users, or are they a specific group? These warnings are classic advice for researchers, and many surely heed them.

But I want to draw attention to two other sources of sample bias: responsiveness and mercenary motives.

2. Responsive enemies of the researcher

Answer honestly: when did you last enjoy filling out a questionnaire? Most likely, you agreed out of solidarity, to help a colleague. In that case your answers should be excluded from the sample as irrelevant: you answered as a loyal friend or a sympathetic colleague, not as a “naive” user. In classic marketing research, after the initial analysis of the sample, it is customary to exclude all representatives of related professions, including all advertisers and marketers, since their opinion is considered professionally deformed. Who is left then?

Who are all these people who answer our polls and find time for them? Who, out of the crowd near a supermarket, walks up to the sociologist, opens the apartment door, or fills out an online survey form? Obviously, those who are personally concerned with the topic of the survey, or those who have plenty of time, a developed need to help and communicate, and perhaps not many other interesting things to do.

Remember the Soviet film “The Most Charming and Attractive”: the main character wants to get married with the help of a friend who is a sociologist specializing in family relations. Together they try to study the heroine's potential suitors and run into their busyness and unwillingness to answer questions. There is, however, one sympathetic colleague who is happy to answer any question, and he is of no interest at all to either the bride or the researcher. It is the same in real life: in a mass survey, we may not notice that behind the numerous responses of responsive but irrelevant users, we have not received a single response from our target customers.

An old study by J. M. Darley and C. D. Batson (1973) showed that even seminary students helped a person in need much less often when they were busy and in a hurry (for example, when they were on their way to give a talk on the parable of the Good Samaritan).

Source: Heckhausen, H. Motivation and Activity. In 2 vols. Vol. 2. Moscow: Pedagogika, 1986. Pp. 234-248; Darley, J. M., & Batson, C. D. (1973). “From Jerusalem to Jericho”: A study of situational and dispositional variables in helping behavior. Journal of Personality and Social Psychology, 27, 100-108.

Do we need answers from such sympathetic people with time on their hands? Will their answers reflect the views of typical users about our product? Will they praise the product to look good or, conversely, criticize it to attract attention?

Example. A few years ago, while studying employee loyalty in a medium-sized company, we noticed the suspiciously positive, “monotonous” answers of several employees. They were the first to send in fully completed questionnaires, spoke well and at length about the company, offered ideas and were, in general, ideal survey participants. This made us suspicious, and we were able to clarify the picture in in-depth interviews. It turned out that this over-responsiveness came only from salespeople who felt their position was precarious ahead of the upcoming reporting period. They also gave only positive feedback about the company and management (had we analyzed averages, these responses would have noticeably shifted the overall picture of the results). During the interview, one of the salespeople praised the company and sincerely admitted that he felt he owed it, because he had not shown any results for a year. When we cautiously presented the report to management, we learned that the “loyal” employee had beaten us to it: the day before, he had submitted his resignation letter.
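The pattern noticed here by eye, fully completed questionnaires with uniformly top scores, can also be pre-screened automatically before the interviews. A minimal sketch, assuming answers are stored per respondent on a 1-5 scale; the thresholds `max_spread` and `min_mean` are illustrative guesses to be tuned. A flag only marks someone for a follow-up conversation, as in the story above; it is not proof of insincere answers.

```python
from statistics import pstdev


def flag_uniform_responders(responses: dict[str, list[int]],
                            max_spread: float = 0.5,
                            min_mean: float = 4.5) -> list[str]:
    """Return ids of respondents whose 1-5 answers are nearly identical and high."""
    flagged = []
    for respondent_id, answers in responses.items():
        if len(answers) < 2:
            continue  # too few answers to judge the spread
        spread = pstdev(answers)           # population standard deviation of the answers
        mean = sum(answers) / len(answers)
        if spread <= max_spread and mean >= min_mean:
            flagged.append(respondent_id)
    return flagged


if __name__ == "__main__":
    sample = {
        "r1": [5, 5, 5, 5, 5],  # uniformly top scores
        "r2": [5, 4, 2, 5, 3],  # varied answers
        "r3": [5, 5, 5, 4, 5],  # almost uniform
    }
    print(flag_uniform_responders(sample))
```

The flagged list is a shortlist for in-depth interviews; looking at it next to the submission time would reproduce the other signal mentioned above, being the first to send the questionnaire back.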

If the portrait of your user is “a person with a lot of free time and a developed need to help and communicate”, then yes, you can safely use the results of such surveys. If your user is a busy and rational person, you need to think separately about how to involve him in the research. Below I describe what I have found works for attracting such people.

3. Mercenary enemies of the researcher

The second source of sample bias and erroneous data is the participation of “money seekers” in surveys.

The question of external versus internal motivation of survey participants is also often discussed: is it worth paying for their effort and offering extra rewards and pleasant bonuses to customers who answer the questionnaire? In such debates there is a clear position: do not introduce external motivation (money, free access, gifts, time off, etc.) as long as you can work with internal motivation. And it is not about greed. External motivation is almost always irreversible, so it should be considered a last, desperate step.

But shifting a sample of real or potential customers toward those who want to be paid for participating is still the lesser evil. A greater evil, and a very topical one in today's research market, is when people who want to earn money pose as your real or potential customers without being such.

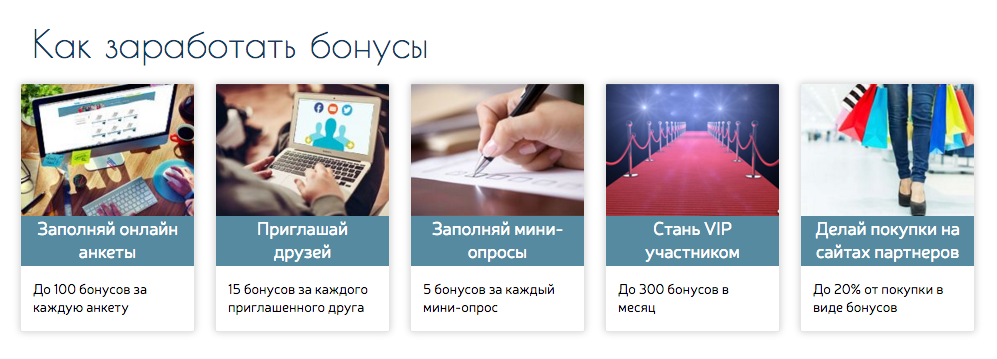

Many online survey services now offer access to a panel of paid respondents. They recruit and pay research participants in different ways, and it remains an open question how scrupulous they are about targeting and how precise such targeting can be at all.

In the picture: a screenshot of one of the sites inviting people to take part in various polls.

Doubts about the purity of targeting are reinforced by a parallel wave of activity among the participants of such surveys, who share tips on how to make money on online surveys and increase the chances of receiving an invitation (getting into the sample). For example, when filling out the screening questionnaire, they advise stating that you are of average age, have an average or above-average income, are married, have a driver's license and a child, regularly consume certain products (whichever ones the questionnaire asks about, agree to them) and do not belong to a certain profession (whichever one the questionnaire asks about, deny it). All these tips help people pass for a typical user, get into a study and get paid for participating. Imagine what kind of people earn money from such research and what answers they will give to your questions.

On Russian-language sites, profit seekers are advised to pose as average Europeans or Americans and then answer at random, even without knowing the foreign language. Are the product strategies of Western brands being shaped by our unemployed compatriots and their random answers?
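One common countermeasure against the “agree with whatever is asked” tip, not described in this article and shown here only as an illustration, is to list a fictitious decoy brand among real ones in the screener and to flag respondents who claim to use it or who claim to use almost everything on the list. A minimal sketch under those assumptions; the `Screener` structure, the decoy name “Zorvex” and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Screener:
    respondent_id: str
    brands_used: set[str] = field(default_factory=set)  # brands the respondent ticked


def flag_screeners(screeners: list[Screener], offered: list[str], decoys: set[str],
                   max_share: float = 0.8) -> dict[str, str]:
    """Map respondent id -> reason, for every screener that looks gamed."""
    reasons = {}
    for s in screeners:
        claimed = s.brands_used & set(offered)
        if claimed & decoys:
            reasons[s.respondent_id] = "claims to use a non-existent brand"
        elif len(claimed) / len(offered) > max_share:
            reasons[s.respondent_id] = "claims to use almost every brand listed"
    return reasons


if __name__ == "__main__":
    offered = ["A", "B", "C", "D", "E", "Zorvex"]  # "Zorvex" is the made-up decoy
    screeners = [
        Screener("s1", {"A", "Zorvex"}),
        Screener("s2", {"A", "B", "C", "D", "E"}),
        Screener("s3", {"B"}),
    ]
    print(flag_screeners(screeners, offered, decoys={"Zorvex"}))
```

Such a filter only raises the cost of gaming a screener; it does not replace knowing where the panel's respondents come from.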

We can ignore sample bias only in rare cases: when it does not matter at all which user we are talking about, and we need, for example, psychophysical indicators that are characteristic of every normally developing person regardless of age and sex; or when we have just started testing a product, and at this stage absolutely any feedback and ideas are important to us, regardless of the portrait of a typical user. In the latter case, it only makes sense to collect “raw data” in order to prepare a more thorough survey on a relevant sample.

How to attract the right respondents?

To avoid working with a “good” sample of the wrong respondents, we sometimes use the following psychological techniques to attract a relevant sample.

Method 1. Looking for busy and rational people? We find meaning for them.

For a person to take the time to fill out a questionnaire, he needs to see meaning in it. In such cases, we formulate a “legend”: an invitation to the research written so that a busy person who has no wish to help us becomes convinced of the importance of the survey and agrees that it is worth spending time on.

1. Important to him. Sometimes the nature of the study allows you to choose incentives that matter to the respondent personally.

Example 1. Several years ago we needed to pilot a large psychological questionnaire. Reporting its results to participants was not allowed until the questionnaire had been validated. To attract people, we added two well-established psychological tests that served to validate the questionnaire. After the survey, each participant received a detailed individual interpretation of these tests, which was a good incentive to take part. We brought printed results to some regional participants or even sent them paper letters, because they did not have e-mail. Once, on New Year's Day, I even received a postcard from one participant with greetings and thanks for the results.

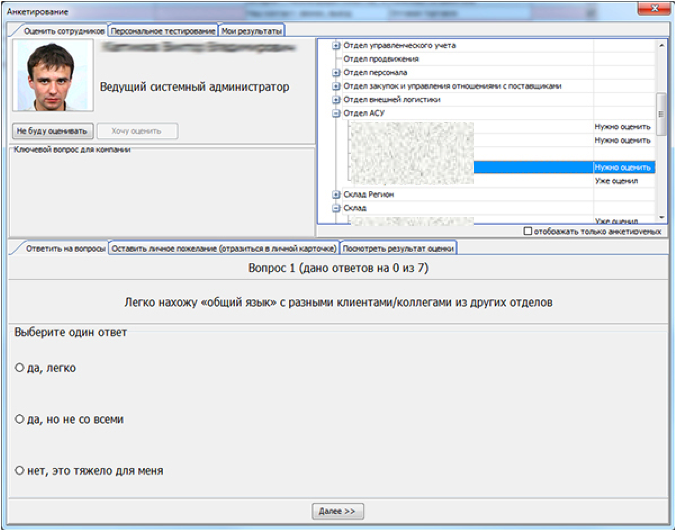

Example 2. We developed the methodology for, and automated, the annual internal staff assessment at one of our clients' companies. Before proposing an assessment methodology, we ran a brief survey and learned from the staff that what frustrated them most in previous assessments was the lack of feedback on the results. They were told their overall rating or the bonuses they had earned, but no one offered a detailed breakdown. Automation helped us make feedback instant, detailed and aimed at the development of each employee.

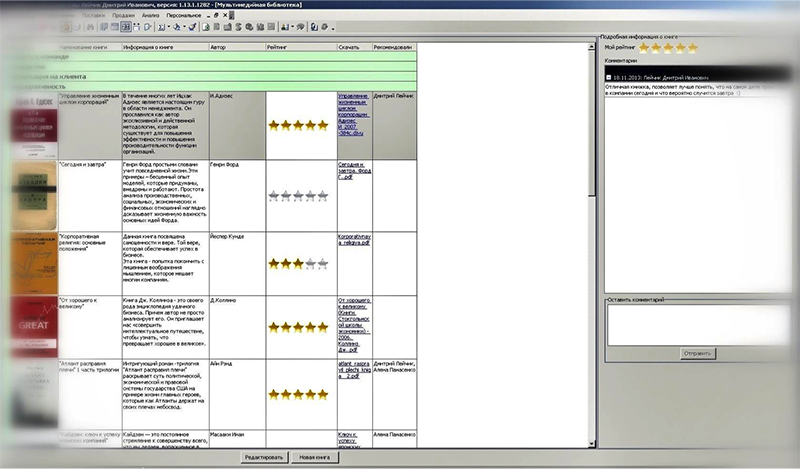

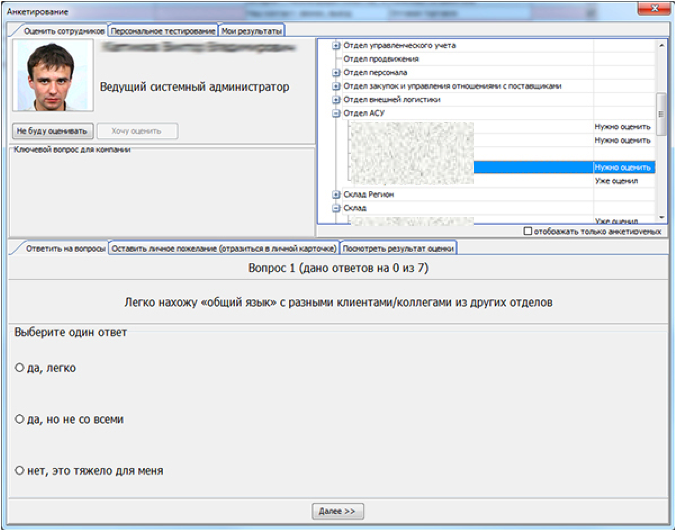

First, after completing the questionnaire about themselves and their colleagues (one of the stages of the 360-degree assessment), each participant could see how colleagues and management had rated him. The ratings were visualized as a spider diagram, which made the results quite clear (see the figure).

Second, after receiving the final assessment of personal qualities, the employee could read a description of each quality, find out what exactly his score meant, and read personal recommendations for developing competencies: depending on the score, he was offered tips on behavior with colleagues and management, or special trainings and seminars (see the figure).

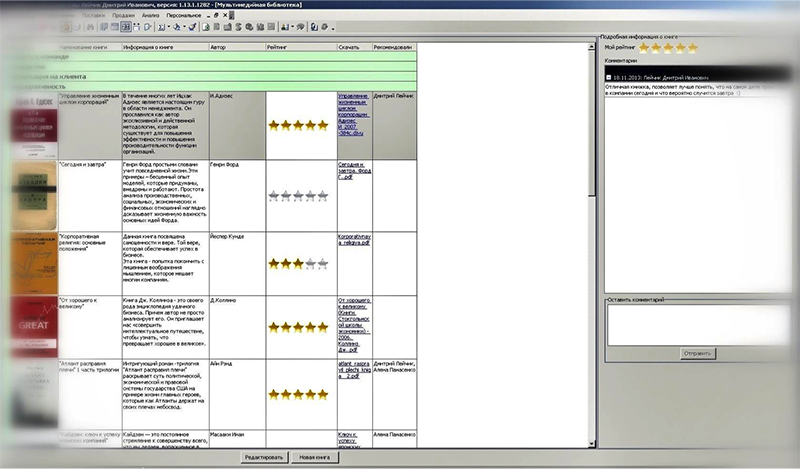

Third, after reading the recommendations, the participant could see which books he could read to develop the qualities that had received low ratings. The links led to the corporate online library, where employees could rate the books they had read and leave comments (see the figure).

In this way we managed to involve the staff in the assessment process, get the online questionnaires filled out quickly, and substantially liven up a formal assessment procedure.

2. Important for society. When the respondent cannot be interested personally, we explain in the invitation why the research is needed, what threatens society (nature, culture, science, etc.) and why it is important to get answers from exactly this kind of person in order to understand how to change the situation. Of course, such explanations suit only large-scale research or socially significant projects.

3. Important to you. Perhaps the weakest argument: researchers' self-interest often irritates people. But there are also successful examples in which a researcher explains his predicament and evokes sympathy.

Example 1. I have come across appeals like this one, and they usually work: “I got my dream job, but I won't pass the probation period unless I conduct good customer research. (I am writing a thesis / I am trying to win a study grant.) I am trying very hard to make it a high-quality study, but I just can't gather enough experts for the survey. Please help me keep my dream job. You only need to answer a couple of questions, and this will give me a huge chance to do something worthwhile.”

Example 2. Once I was genuinely won over by a call from a well-known MLM company selling cosmetics. The caller began with these words: “We have noticed that our company's reputation has recently started to decline, and we decided to conduct a public opinion survey to understand what our customers did not like. Could you help us by answering a few questions, so that we can improve our reputation and please our customers again?” I was moved by their problem and ready to answer her questions in detail. Unfortunately, after this introduction she went back to the usual telephone sales script, and I quickly ended the conversation.

Method 2. Tell them what it will lead to.

One of the most unpleasant experiences for a person is the sense of futile effort. In our studies, the chance that questionnaires get filled out usually increases if we explain to participants how their answers will be used. Sometimes we honestly say that we are choosing a product development strategy right now and will base our choice on our customers' answers. Or we announce that the research will result in a published report with recommendations for all manufacturers of such goods. Study participants have the right to know where the results of their work will be used, so that they can decide whether to take part.

Method 3. Guess the “inner” motive.

What personal motives of customers can affect whether they fill out the questionnaire? What joy can we bring them?

Here is an incomplete list of needs that can be taken into account in invitations and thank-you messages for survey participants. We never use all the options at once: the type of survey and the characteristics of the target audience determine how to attract respondents. Some moves require extra effort from us; some of them help attract participants, while others make them loyal so that they come back when their opinion is needed again.

The need for development and self-knowledge. Here we guarantee each participant a report with the results of the study, or a link to its publication in the press. If we have the resources and the participants are very valuable to us, we prepare a comparative report for each of them: how his answers differed from those of most other participants. Technically this is not hard to organize, and the effect is good.

Example. Once this move helped us involve directors of state enterprises in our research. Their wish to find out how their opinion differed from that of other directors in the industry was the only thing that motivated them to take part. We received a rich specialized sample and spent only one extra day of work by an inexpensive assistant and a bit of paper per participant.

The need for power. Here we emphasize that the participant's answers will be specifically taken into account when deciding on changes to the product or company policy, and we always promise to send a report on those changes. The most important thing about such promises is to make the extra effort to keep them. This way we increase loyalty to the product and secure participants for the next study.

The need for belonging. Here you can create a community of testers / friends / evangelists of the product, provide a platform for their communication, and give them privileges such as exclusive information, early access or testing of new features. It is important to choose a feature by which the participant can be assigned to a small group of users similar to him: state in the invitation that this person was selected for the group of the most active (or the youngest, big-city, minivan-owning, etc.) users of the product.

The need for new knowledge (curiosity). This need is easy to work with if experts or fans of the product take part in the survey. The survey can be made educational: it can offer unique testing of product features, share product facts or important details, combine the final report with a feature article, or promise detailed material on the topic when the research is completed. It is important to avoid sample bias here: an article or a promise of exclusive material will attract only experts in the field. If you need the opinions of inexperienced users, promise them something non-specialized and entertaining.

The need for admiration (vanity). We use this need when surveying a limited number of experts. In such cases, we promise to mention the participant's company or name (where appropriate) when publishing the results, to include participants among the evangelists or reviewers in a club, etc. If there are many participants, we can still take the time to send each of them a personalized thank-you letter a few days after the study, emphasizing once again how important his participation was.

The need to help. This is a fairly strong motivator that can be used in almost any survey. When we use it, we emphasize the difficult situation the developers are in and describe the difficulties in more detail (the number of failures has increased, users are dissatisfied with the product, but we cannot understand what they dislike or do not know how to do better, and therefore need advice). It is important not to be shy about appearing in a weak position. Some companies even invent difficulties to engage sympathetic users in unpaid work. Research participants prefer to feel like experts rather than test subjects, so the position of “asking for help” will not weaken you but will attract more motivated participants.
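The comparative report described above under the need for development and self-knowledge (how one participant's answers differ from those of most others) can be computed per question as the difference from the other participants' median. A minimal sketch, assuming answers are numeric scale values keyed by hypothetical respondent and question ids; the median is one reasonable reading of “most other participants”, not the author's stated method.

```python
from statistics import median


def comparative_report(answers: dict[str, dict[str, float]], respondent: str) -> dict[str, float]:
    """For each question: this respondent's answer minus the median answer of everyone else."""
    own = answers[respondent]
    report = {}
    for question, value in own.items():
        others = [a[question] for rid, a in answers.items()
                  if rid != respondent and question in a]
        if others:  # skip questions nobody else answered
            report[question] = value - median(others)
    return report


if __name__ == "__main__":
    answers = {
        "director_1": {"q1": 5, "q2": 2},
        "director_2": {"q1": 3, "q2": 4},
        "director_3": {"q1": 4, "q2": 4},
    }
    print(comparative_report(answers, "director_1"))
```

Positive values mean the participant rated the item higher than the typical colleague; a short textual comment next to each number is usually enough to turn this into the one-page report mentioned in the example.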

Method 4. We invent an intangible “external” motive.

Discounts, exclusive use of the product for users, time off for employees, etc. are still risky incentives: they can shift participants' interest from filling out the questionnaire to receiving the reward. A non-material reward can be a gift such as a link to an interesting video, a joke at the end of the questionnaire, or an unexpected souvenir.

If you need to involve experts to get professional feedback, a common approach is to give them unique access to a new version of the product; authors of books and applications send them free copies for testing and review. There are entire campaigns to attract early product testers. All of this also serves the marketing goal of promotion.

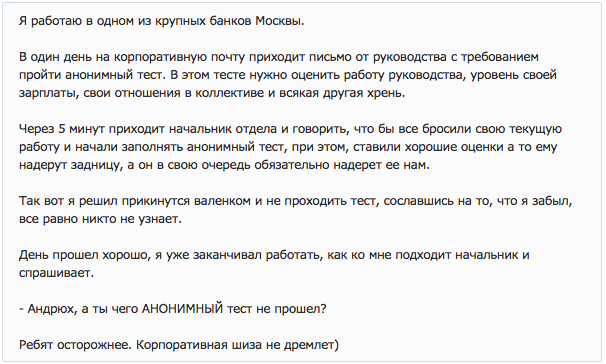

Method 5. We try not to play games with anonymity.

I often hear employees of various companies complain about internal surveys: “They assure us the survey is anonymous, but they ask us to send the answers by e-mail and ask for my position, gender and age! As soon as I saw that, I refused to fill out their dishonest questionnaire.”

Example:

Source: pikabu

This “lie” by research organizers usually comes not from malice but from the wish to enrich the data, segment the participants and make the study more detailed. Still, it is better not to promise anonymity if respondents can be identified from their answers.

In such cases, we either use the most secure ways of collecting responses (envelope surveys, an anonymous survey via a link) or promise only the level of data protection we can actually provide ourselves.

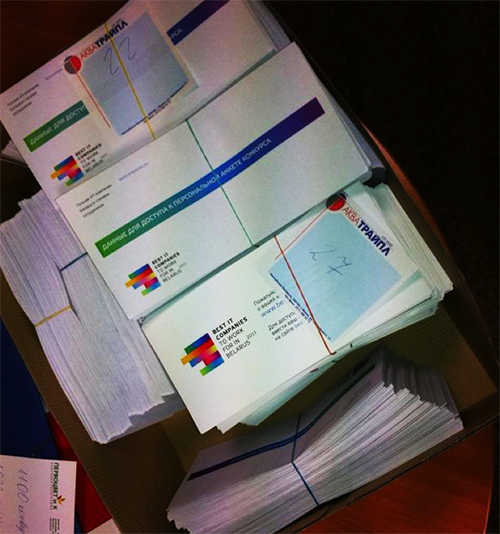

In the photo: a box of anonymous envelopes for study participants.

When anonymity is very hard to achieve, you can guarantee that the data will be kept safe and that only the research team will have access to it.

An example of wording for the invitation: “We guarantee that your answers will be analyzed together with the answers of the other study participants and will not be examined individually, disclosed or passed to third parties not involved in conducting this study.”
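Whether respondents “can be identified from their answers” can be checked before promising anonymity: count how many respondents share each combination of demographic answers, and treat any combination held by very few people as identifying. This is the idea behind k-anonymity, named here as a general technique rather than the author's procedure. A minimal sketch; the field names and `k=5` are illustrative assumptions.

```python
from collections import Counter


def small_cells(respondents: list[dict[str, str]],
                fields: tuple[str, ...],
                k: int = 5) -> dict[tuple[str, ...], int]:
    """Return combinations of the given fields that fewer than k respondents share."""
    counts = Counter(tuple(r[f] for f in fields) for r in respondents)
    return {combo: n for combo, n in counts.items() if n < k}


if __name__ == "__main__":
    respondents = [{"position": "engineer", "gender": "m", "age": "30-39"}] * 5
    respondents.append({"position": "head of department", "gender": "f", "age": "40-49"})
    print(small_cells(respondents, ("position", "gender", "age")))
```

If this returns anything non-empty, either drop or coarsen the questions involved (for example, broader age bands) or promise only confidentiality instead of anonymity.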

Method 6. Where possible, ease worries about time.

A questionnaire becomes more attractive if it is short and if the number of questions and the expected completion time are visible right in the introduction. If you review the questionnaire meticulously and reformulate the questions, you can usually cut it in half. Another third of the questions, almost the entire “passport” (demographic) section, you can fill in yourself (if the study is not anonymous and you know the participant's data), or you can hand out a pre-prepared version of the questionnaire (separate forms / links for women and men, for different age and status groups, etc.). All this reduces respondents' objections to taking part in the survey.
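Handing out separate links per group, or links that already carry the data you know, can be sketched as building a query string for each respondent or segment. The base URL and parameter names below are hypothetical; whether a given survey tool accepts prefilled values through URL parameters, and under which names, has to be checked in that tool's documentation.

```python
from urllib.parse import urlencode

BASE_URL = "https://survey.example.com/form"  # hypothetical survey address


def prefilled_link(known: dict[str, str]) -> str:
    """Build a survey link carrying answers we already know, so the respondent skips them."""
    return f"{BASE_URL}?{urlencode(known)}"


if __name__ == "__main__":
    # One link per segment instead of asking every respondent about gender and age.
    for segment in ({"segment": "women", "age_group": "25-34"},
                    {"segment": "men", "age_group": "25-34"}):
        print(prefilled_link(segment))
```

Note that a link carrying personal data makes the survey non-anonymous, so this fits only the non-anonymous case described above.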

1. We promise simplicity right in the introduction.

Example: "The questionnaire contains 10 simple questions, and filling it out will not take you more than 5 minutes."

2. If the questionnaire has branching and the participant cannot immediately see where it ends, we show progress; this increases the chance that the questionnaire will be completed.

Example: “Question 1 of 19”, “Question 2 of 19”, etc. If the questionnaire adapts to previous answers, write in the section headings: “Part 1 of 4”, “Part 2 of 4”.

3. A little trick. If a participant has already filled out most of the questionnaire, he regrets the time spent and will most likely finish it. So at the beginning of the questionnaire we usually put easy questions that draw the participant in as much as possible (questions about the participant himself and his tastes, but not personal questions and not complex comparisons that could scare him off right away).
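The two progress formats from item 2 can be generated as below. A minimal sketch with hypothetical function names: numbering by question works only when the total is fixed, while numbering by part stays correct under branching, because the number of parts does not change even when the questions inside them do.

```python
def question_labels(total: int) -> list[str]:
    """Labels for a linear questionnaire: 'Question i of N'."""
    return [f"Question {i} of {total}" for i in range(1, total + 1)]


def part_labels(parts: list[str]) -> dict[str, str]:
    """Labels for a branching questionnaire: number the sections, not the questions."""
    total = len(parts)
    return {name: f"Part {i} of {total}" for i, name in enumerate(parts, start=1)}


if __name__ == "__main__":
    print(question_labels(19)[:2])
    print(part_labels(["About you", "Product usage", "Pricing", "Final questions"]))
```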

Method 7. Set time limits.

People often put off filling out a questionnaire “for later” and forget about it completely. We consider it a success if we manage to get participants to fill it out right away. This works well in organizational research, when participants can be invited to a separate room during working hours, or when a manager gives the staff time to fill out the questionnaire.

In online surveys, quick completion is achieved when participation is an impulse and the survey is brief. In other cases, we additionally set a time limit and ask participants to send the completed questionnaire, for example, by 22:00 today or by Friday. Sometimes, if the participants are loyal enough, we send reminders as the deadline approaches.
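Sending reminders “closer to the expiration date” comes down to a simple rule: remind only those who have not finished, and only when the deadline is near. A minimal sketch, assuming completion status is tracked per hypothetical participant id; the 24-hour window is an illustrative choice, and the decision whether the audience is loyal enough for reminders at all stays with the researcher.

```python
from datetime import datetime, timedelta


def due_for_reminder(completed: dict[str, bool], deadline: datetime, now: datetime,
                     window: timedelta = timedelta(hours=24)) -> list[str]:
    """Ids of participants who have not finished, if the deadline falls within the window."""
    if not (now < deadline <= now + window):
        return []  # too early to remind, or the survey is already closed
    return [pid for pid, done in completed.items() if not done]


if __name__ == "__main__":
    deadline = datetime(2024, 5, 17, 22, 0)  # e.g. "by 22:00 on Friday"
    status = {"p1": True, "p2": False, "p3": False}
    print(due_for_reminder(status, deadline, now=datetime(2024, 5, 17, 10, 0)))
```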

Method 8. We entertain and hold attention.

If the questionnaire is still long (more than 10 questions), you can add pictures, videos or fun facts to some of the questions. We try to alternate complex and simple questions and remove tables, comparisons, complex lists and ranking questions: researchers love them, and respondents strongly dislike them.

When we have more resources, we try to make the survey game-like and as visual as possible. This is a separate topic, the gamification of surveys.

So, in this article I have described the methods we use in our research to involve the most relevant participants and prevent sample bias.

Let me summarize this part as a brief checklist for compiling a questionnaire:

1. Define the portrait of the target respondent and think about what could attract such a person to take part in the survey.

2. In the survey invitation and in the introductory and final parts of the questionnaire, make participants an offer that is meaningful for their personal needs (the needs for power, curiosity, self-knowledge, belonging, helping, admiration).

3. Do not use external motivation for participation in the survey if you can use internal motivation.

4. Promise only the level of anonymity that you can provide.

5. Shorten the questionnaire as much as possible. Leave participants only the fields that you cannot fill in for them.

6. Try not to include complex tasks and comparisons in the questionnaire. Tell participants in the introduction that the questionnaire is simple.

7. Set a clear time frame.

8. Think about entertaining participants during and after completing the questionnaire.

In the following parts:

Error 2: questionnaire wording. 13 cases of misunderstanding and manipulation in surveys (part 1)

Error 2: questionnaire wording. 13 cases of misunderstanding and manipulation in surveys (part 2)

Error 3. Types of lies in polls: why do you believe the answers?

Error 4. Opinion is not behavior: are you really asking what you want to know?

Error 5. Types of polls: do you need to find out or to confirm?

Error 6. Segment and enrich the sample: averages do not help you understand anything.

Error 7. The notorious Net Promoter Score is NOT an elegant solution.

This means that the material will be useful to those who conduct research on users (customers, employees, students) using questionnaires, who do it themselves, or order such research. For professional researchers, this article will be of less interest than for amateurs.

')

Is it possible to research user needs for surveys?

Sometimes a company needs to get the user's opinion about the product (or the employee’s opinion about the company), to find out its needs and hidden objections. A simple solution is usually chosen. A questionnaire is compiled with seemingly logical and simple questions and is sent to users. The returned part of the questionnaires are processed by mathematical methods and taken as a representative sample (reflecting the views of typical users), analyzed with the help of statistics, visualized and prepared a report for decision-making and strategy development. Often an erroneous and useless report.

One of the most insidious problems of such research: the needs are very difficult to investigate, but it almost always seems that the research was successful.

In this and the next article I will talk about a few common mistakes in researching user needs and ways that can reduce their impact on research results.

Error 1. Sample bias, or who are all these people and why do they answer you?

Everyone who has ever met with questionnaires knows that only a small part of the questionnaires returned. Sometimes - less than 10%.

A lot of publications are devoted to ways to reduce this evil. Including, with tips on statistical sampling. Strictly or not strictly, but the returned part of the questionnaires is often recognized as an adjectival sample from the general population (the entire set of users whose opinions the researcher wants to study) and work with what they have. Sometimes this “selectiveness” is completely invisible - for example, when an invitation to a survey is sent without address, and the responded users are considered to be a sample.

Example. A large IT company (with a staff of several thousand) conducted a survey of employee satisfaction with the work of the HR service. We were invited to help only at the stage of processing the results. Prior to this, the department staff themselves compiled a questionnaire and themselves launched a survey, sending out invitations to employees via corporate mail. They did not take care of the motivation of survey participants and the “administrative leverage” (almost the most important part of any organizational research) and simply counted on a good response of the staff. As a result, the questionnaires returned with a 15% return. The questionnaires were mainly answered by new or completely inexperienced workers (they still had almost no experience with the activities of this division, but they filled out the questionnaires willingly and, as a rule, exaggerated the overestimated points). The most important group - heads of departments - was represented by only three (out of several dozen) the most loyal participants. The opportunity to draw attention to the survey was missed, it was also impossible to re-survey (the organizers would be accused of spam). As a result, they chose an elegant solution - they presented the results to the management as a percentage of each group of employees, without indicating the number of participants. The report turned out that the heads of departments are extremely satisfied with the work of the unit. The life of such a report is until the manager finds out how many people from each group actually took part in the survey. But such questions are rarely asked, and organizational research, to which none of the employees pays attention, flourishes.

Let us examine three causes of sample bias:

1) the wrong choice of channel for finding participants,

2) responsiveness,

3) mercantile spirit.

1. Wrong choice of channel for finding participants

In almost any statistics textbook you will find a warning about the sample bias that arises if you survey, say, the members of only one Internet forum (when the user population is much wider), survey employees in the canteen during working hours, or poll the most accessible group of users (for example, those who came to a new product's presentation): do their opinions match those of other users, or are they a specific group? These warnings are classic advice for researchers, and many surely take them into account.

But I want to draw attention to two other dangers of sample bias: responsiveness and mercantile spirit.

2. Responsive enemies of the researcher

Answer honestly: when did you last fill out a questionnaire gladly? Most likely, you agreed out of solidarity, trying to help a colleague. Then your answers should be excluded from the sample as irrelevant (you answered as a loyal friend or a sympathetic colleague, not as a "naive" user). In classical marketing research, after the initial analysis of the sample, it was customary to exclude all representatives of related professions, including all advertisers and marketers, since their opinion is considered professionally deformed. Who will be left then?

Who are all these people who answer our surveys and find time for them? Who in the crowd near a supermarket walks up to a sociologist, opens the apartment door to an interviewer, or fills out an online form? Obviously, those who are personally concerned with the survey topic, or those who have plenty of time, a developed need to help and communicate, and perhaps not enough other interesting things to do.

Remember the Soviet film "The Most Charming and Attractive": the heroine wants to get married with the help of a sociologist friend, a specialist in family relations. Together they try to study the heroine's potential suitors and run into their busyness and unwillingness to answer questions. But there is one sympathetic employee who happily answers any question, and that respondent is of no interest at all to either the bride or the researcher. So it is in life: in a mass survey we may fail to notice that behind the numerous responses of responsive but irrelevant users, we have not received a single response from our target customers.

An old study by J. M. Darley and C. D. Batson (1973) showed that even seminary students, when someone needed help, gave it much less often if they were busy and in a hurry (for example, even when they were on their way to give a talk on the Good Samaritan).

Source: Heckhausen H. Motivation and Activity: in 2 vols. Vol. 2. Moscow: Pedagogika, 1986. pp. 234-248; Darley, J. M., and Batson, C. D. "From Jerusalem to Jericho": A Study of Situational and Dispositional Variables in Helping Behavior. JPSP, 1973, 27, 100-108.

Do we need answers from such sympathetic and unoccupied people? Will their answers reflect typical users' views of our product? Will they praise the product, wanting to seem nice, or, on the contrary, criticize it to attract attention?

Example. A few years ago, while studying employee loyalty at a medium-sized company, we noticed suspiciously positive, "monotonous" answers from several employees. They were the first to send in fully completed questionnaires, spoke well and at length about the company, offered ideas and were, in general, ideal survey participants. This made us suspicious, and we were able to clarify matters in in-depth interviews. It turned out that the over-responsiveness came only from salespeople who felt their position was precarious ahead of the upcoming reporting period. They also gave only positive feedback about the company and management (had we analyzed averages, these responses would have noticeably shifted the overall picture). During the interview, one of the salespeople praised the company and sincerely admitted that he felt he owed it, because he had not shown results for a year. When we brought management a report with a note of caution, we learned that this "loyal" employee had beaten us to it: the day before, he had submitted his resignation letter.
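A small illustration of the remark about averages, using hypothetical 1-5 loyalty scores: twenty ordinary respondents plus five over-responsive ones who all give the top score. Comparing the mean with the median is only a cheap warning sign; it does not repair a biased sample, which in the example above took in-depth interviews to uncover.

```python
from statistics import mean, median


def summarize(scores: list[int]) -> tuple[float, float]:
    """Return (mean, median) of a list of scores."""
    return mean(scores), median(scores)


# Hypothetical 1-5 loyalty scores, for illustration only.
regular = [2, 3, 3, 2, 4, 3, 2, 3, 4, 2, 3, 3, 2, 4, 3, 2, 3, 3, 4, 2]
over_responsive = [5, 5, 5, 5, 5]  # the "ideal" participants who answered first
combined = regular + over_responsive

for label, scores in [("regular only", regular), ("combined", combined)]:
    m, med = summarize(scores)
    print(f"{label}: n={len(scores)}, mean={m:.2f}, median={med:.1f}")
```

Here five answers out of twenty-five move the mean from 2.85 to 3.28, while the median stays at 3.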

If your user's portrait is "a person with a lot of free time and a developed need to help and communicate", then yes, you can safely use the results of such surveys. If your user is a busy and rational person, you need to think separately about how to involve him in the research. Below I will share my findings on how to attract such people.

3. Mercantile enemies of the researcher

The second cause of sampling bias and erroneous data is the participation of "money seekers" in surveys.

The question of external and internal motivation of survey participants is also often discussed: is it worth paying for their work, offering additional rewards and pleasant bonuses to customers who answer the questionnaire? In such debates there is a clear position: do not introduce external motivation (money, free access, gifts, time off, etc.) as long as you can work with internal motivation. And it's not about greed. External motivation is almost always irreversible (once introduced, it is hard to withdraw), so it should be considered a last, desperate step.

But shifting the sample of real users or potential customers toward those who are interested in being paid for participation is still the lesser evil. A greater evil, and a very relevant one on today's research market, is when people, in order to earn money, present themselves as your real or potential customers without actually being them.

Many online survey services now offer access to a panel of paid respondents. They recruit and pay research participants using different methods, and it remains an open question how scrupulous they are about targeting, and how far such targeting is possible at all.

In the picture: a screenshot of one of the sites inviting people to take an active part in various polls.

Doubts about the purity of targeting are reinforced by a parallel wave of activity among the participants of such surveys, who post tips on how to make money on online surveys and increase the chance of receiving an invitation (getting into the sample). For example, when filling out a screening questionnaire, they advise saying that you are of average age, have an average or above-average income, are married, have a driver's license and a child, are a regular consumer of certain products (whichever ones the questionnaire asks about, agree) and are not a member of a certain profession (whichever one the questionnaire asks about, deny it). All these tips help people pass as typical users, get into studies and get paid for participating. Imagine what kind of people earn money from such research, and what answers they will give to your questions.

On Russian-language sites, profit seekers are advised to pose as average Europeans or Americans and then answer however they like, even without knowing the foreign language. Could it be that the product strategies of Western brands are being shaped by our unemployed compatriots and their random answers?

We can ignore sample bias only in rare cases: if it does not matter at all which user we are talking about and we need, for example, some psychophysical indicators characteristic of any normally developing person regardless of age and sex; or if we have just started testing the product and at this stage any feedback and ideas are important to us, regardless of the typical user's portrait. In the latter case, it only makes sense to collect "raw data" in order to prepare a more thorough survey on a relevant sample.

How to attract the right respondents?

So as not to end up with a "good" sample of unsuitable respondents, we sometimes use the following psychological methods to attract a relevant sample.

Method 1. Looking for busy, rational people? We find meaning for them.

For a person to spend time on a questionnaire, he needs to see meaning in it. In such cases, we formulate a "legend": an invitation to the research worded so that a busy person with no wish to help us becomes convinced of the survey's importance and agrees that it is worth the time.

1. Important to him. Sometimes the nature of the study lets you choose incentives that concern the respondent personally.

Example 1. Several years ago we needed to pilot a large psychological questionnaire. We were not allowed to report its results until the questionnaire had been validated. To attract people, we attached to it two well-established psychological tests that served to validate the questionnaire. After the survey, each participant received a detailed individual interpretation of these tests, which was a good incentive to take part. We delivered printed results to some regional participants or even sent paper letters, because they had no e-mail. Once, on New Year's Day, I even received a postcard from one participant with greetings and thanks for the results.

Example 2. We developed the methodology for the annual internal staff assessment in one of our clients' companies and automated it. Before proposing the methodology, we ran a brief survey and found out from the staff that what frustrated them most in previous assessments was the lack of feedback on the results. They were told their overall rating or the bonuses accrued, but no one offered a detailed result. Automation helped us make feedback instant, detailed and aimed at each employee's development.

First, after answering the questionnaire about themselves and their colleagues (one stage of a 360-degree assessment), each participant could see how colleagues and management had rated them (the ratings were visualized in a spider diagram, which gave quite a clear picture); see the drawing.

Second, after receiving the final assessment of personal qualities, the employee could read a description of each quality, find out what exactly his score meant, and read personal recommendations for developing competencies: depending on the points received, he was offered tips on behavior with colleagues and management, or special trainings and seminars (see drawing).

Third, after reading the recommendations, the participant could see which books he could read to develop the qualities that had received lower ratings. The references led to the corporate online library, where employees could rate the books they had read and leave comments (see figure).
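The three steps above amount to rule-based feedback: a score for each competency selects a type of recommendation and, for lower scores, a reading list. The sketch below is a minimal illustration under its own assumptions: the 1-5 scale, the thresholds and the book title are all hypothetical, since the article does not describe the client's actual scheme.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds on a hypothetical 1-5 scale.
TRAINING_BELOW = 3.0  # below this: trainings and seminars
TIPS_BELOW = 4.0      # below this: behavioral tips


@dataclass
class Feedback:
    competency: str
    score: float
    recommendation: str
    reading: list[str] = field(default_factory=list)


def recommend(competency: str, score: float,
              library: dict[str, list[str]]) -> Feedback:
    """Map one competency score to a recommendation and optional reading."""
    if score < TRAINING_BELOW:
        recommendation = "special trainings and seminars"
    elif score < TIPS_BELOW:
        recommendation = "tips on working with colleagues and management"
    else:
        recommendation = "a strength: keep it up"
    # Literature is suggested only for qualities rated below the top band.
    reading = list(library.get(competency, [])) if score < TIPS_BELOW else []
    return Feedback(competency, score, recommendation, reading)


# Hypothetical corporate-library entries.
library = {"Delegation": ["Book on delegation (hypothetical title)"]}
for name, score in [("Delegation", 2.6), ("Communication", 3.4), ("Planning", 4.5)]:
    fb = recommend(name, score, library)
    print(f"{fb.competency} ({fb.score}): {fb.recommendation}; "
          f"reading: {fb.reading or 'none'}")
```

Keeping the rules in one small function makes it easy to show employees exactly why they received a given recommendation.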

In this way we managed to involve the staff in the assessment, get the online questionnaires filled out quickly and considerably enliven a formal procedure.

2. Important for society. When the respondent cannot be interested personally, the invitation explains why the research was needed, what threatens society (nature, culture, science, etc.) and why it is important to get the answers of exactly this kind of person in order to understand how to change the situation. Of course, such explanations suit only large-scale research or socially significant projects.

3. Important to you. Perhaps the weakest argument: researchers' egoism often irritates people. But there are also successful examples, when a researcher explains his predicament and evokes sympathy.

Example 1: I have come across appeals like this, and they usually work: "I got my dream job, but I can't pass the probation period unless I conduct a good customer study (or: I am writing a thesis / I am trying to win a training grant). I am trying very hard to make the study high-quality, but I just can't gather enough experts for the survey. Please help me get my dream job. You only need to answer a couple of questions, and this will give me a huge chance to do something worthwhile."

Example 2: Once I was genuinely won over by a call from a well-known MLM company selling cosmetics. The girl began the conversation with these words: "We have noticed that our company's reputation has recently begun to fall, and we decided to conduct a public opinion survey to understand what our customers didn't like. Could you help us by answering a few questions, so that we can improve our reputation and please our customers again?" I was moved by their problem and ready to answer her questions in detail. Unfortunately, after this introductory phrase the girl went back to the usual telephone sales script, and I quickly ended the conversation.

Method 2. Tell them what it will lead to.

One of the most unpleasant experiences for a person is the sense that his effort was futile. In our studies, questionnaires are usually more likely to be completed if we explain to participants how their answers will be used. Sometimes we honestly say that we are choosing a product development strategy right now and will base our choice on our customers' answers. Or we say that the research will result in a published report with recommendations for all manufacturers of such goods. Study participants have the right to know where the results of their work will be used, so that they can decide whether to take part.

Method 3. Guess the "inner" motive.

What personal motives of customers can influence whether they fill out a questionnaire? What joy can we bring them?

Here is an incomplete list of needs that can be taken into account in invitations and thank-you messages to survey participants. We never use all the options at once: the type of survey and the characteristics of the target audience determine how we attract respondents. Some moves require extra effort from us; some help attract participants, while others make them loyal and willing to come back when their opinion is needed again.

The need for development and self-knowledge: here we guarantee each participant a report with the study results, or a link to its publication in the press. If we have the resources and the participants are very valuable to us, we prepare a comparative report for each of them: how his answers differed from those of most other participants. Technically this is not hard to organize, but the effect is good.

Example. Once this move helped us involve directors of state enterprises in our research. Their wish to find out how their opinion differed from that of other directors in the industry was the only motivator for taking part in our survey. We got a rich specialized sample and spent only an extra day of an inexpensive assistant's work and a bit of paper per participant.
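The comparative report described above can be produced by counting, for each question, how many of the other participants chose the same answer. A minimal sketch, assuming single-choice questions and hypothetical participant IDs and answers; it shows each participant only shares of the others, not their individual answers (with very few participants, even shares can be revealing).

```python
from collections import Counter


def comparative_report(answers: dict[str, dict[str, str]],
                       participant: str) -> dict[str, str]:
    """For each question, describe how the participant's answer compares with the others'."""
    own = answers[participant]
    others = [a for pid, a in answers.items() if pid != participant]
    report = {}
    for question, choice in own.items():
        counts = Counter(a[question] for a in others if question in a)
        total = sum(counts.values())
        if total == 0:
            report[question] = "no other answers to compare with"
            continue
        same = counts[choice] / total  # Counter returns 0 for unseen answers
        top_answer, top_count = counts.most_common(1)[0]
        if counts[choice] == top_count:  # the participant is with the (tied) majority
            report[question] = f"you chose '{choice}', like {same:.0%} of other participants"
        else:
            report[question] = (f"you chose '{choice}' ({same:.0%} of others did too); "
                                f"most others chose '{top_answer}'")
    return report


# Hypothetical answers, for illustration only.
answers = {
    "dir_A": {"q1": "agree", "q2": "no"},
    "dir_B": {"q1": "agree", "q2": "yes"},
    "dir_C": {"q1": "disagree", "q2": "yes"},
    "dir_D": {"q1": "agree", "q2": "yes"},
}
for question, text in comparative_report(answers, "dir_A").items():
    print(question, text)
```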

The need for power: here we emphasize that the participant's answers will be specifically taken into account when deciding on changes to the product or company policy, and we make sure to promise a report on those changes. The most important thing about such promises is to make the extra effort to keep them. This increases loyalty to the product and secures participants for future research.

The need for belonging: here you can create a community of testers / friends / evangelists of the product, build a platform for their communication, and give them privileges such as exclusive information, early access and testing of new features. It is important to choose a characteristic by which the participant can be assigned to a small group of users like him: say in the invitation that this person was selected for the group of the most active (or the youngest, the metropolitan, the minivan-owning, etc.) product users.

The need for new knowledge (curiosity): this need is easy to work with if experts or product fans take part in the survey. You can make the survey educational, offer unique testing of product features, share product facts or important details, combine the final report with a feature article, or promise to send detailed material on the topic when the research is complete. It is important to avoid sampling bias here: an article or a promise of exclusive material will attract only experts in the field. If you need the opinions of inexperienced users, promise them something non-specialist and entertaining.

The need for admiration (vanity): we use this need when surveying a limited number of experts. In such cases, we promise to mention the participant's company or name (if appropriate) when publishing the report, to include them in a club of evangelists or reviewers, etc. If there are many participants, we can still spend time on personalized thank-you letters to each of them a few days after the study, emphasizing once again how important his participation was.

The need to help: quite a strong motivator that can be used in almost any survey. When we use it, we emphasize the difficult situation the developers are in and describe the difficulties in more detail (the number of failures has grown, users are dissatisfied with the product, but we cannot understand what they dislike or do not know how to do it better, and therefore need advice). It is important not to be shy about appearing in a weak position. Some companies even invent difficulties in order to engage sympathetic users in free work. Research participants find it more pleasant to feel like experts than like test subjects, so the position of "asking for help" will not weaken you but will attract more motivated participants.

Method 4. We invent an intangible "external" motive.

Discounts, exclusive product use for customers, time off for employees and the like are still risky incentives: they can shift interest from filling out the questionnaire to receiving the reward. A non-material reward might be a gift in the form of a link to an interesting video, a joke at the end of the questionnaire, or an unexpected souvenir.

If you need to involve experts to get professional feedback, a common approach is to give them unique access to a new version of the product; authors of books and applications send them free copies for testing and review. There are entire campaigns to attract early product testers. All of this also serves the marketing goal of promotion.

Method 5. We try not to play games with anonymity.

I often hear employees of different companies complain about internal organizational surveys: "They assure us the survey is anonymous, but they ask us to send the answers by e-mail and ask for my position, gender and age! As soon as I saw that, I refused to fill out their dishonest questionnaire."

Example:

Source: pikabu

Often this "lie" by the organizers does not come from malice, but from a desire to enrich the data, segment the participants and make the study more detailed. Still, it is better not to promise anonymity if respondents can be identified from their answers.

In such cases, we either use the most reliable ways of collecting responses (envelope surveys, an anonymous survey via a link), or we promise only the level of data protection that we can actually provide ourselves.
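One way to test the warning above before promising anonymity is to count how many respondents share each combination of "passport" fields such as position, gender and age band. This is the idea behind k-anonymity: any combination held by fewer than k people can point to specific individuals. A minimal sketch; the field names, the data and k = 5 are hypothetical choices, and passing the check does not guarantee anonymity, since free-text answers, timestamps or the e-mail used to submit can still identify someone.

```python
from collections import Counter


def small_groups(rows: list[dict[str, str]], quasi_identifiers: list[str],
                 k: int = 5) -> dict[tuple[str, ...], int]:
    """Return value combinations of the given fields held by fewer than k respondents."""
    combos = Counter(tuple(row[f] for f in quasi_identifiers) for row in rows)
    return {combo: n for combo, n in combos.items() if n < k}


# Hypothetical "passport" data from an internal survey
# (list repetition reuses the same dict, which is fine for read-only rows).
rows = (
    [{"position": "developer", "gender": "male", "age": "25-34"}] * 12
    + [{"position": "developer", "gender": "female", "age": "25-34"}] * 6
    + [{"position": "head of department", "gender": "female", "age": "45-54"}]
)

risky = small_groups(rows, ["position", "gender", "age"], k=5)
print(risky)
```

If the check flags a combination, you can merge categories (wider age bands, no gender question for small units) or drop the promise of anonymity and offer only the data protection described below.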


In the photo: a box with anonymous envelopes for study participants.

When anonymity is very hard to achieve, you can guarantee that the data will be kept safe and accessible only to the research team.

An example of wording for the invitation: "We guarantee that your answers will be analyzed together with those of the other study participants and will not be examined separately, disclosed or passed on to third parties not involved in conducting this study."

Method 6. If possible, relieve worries about time.

A questionnaire becomes more attractive if it is short and the number of questions and expected completion time are visible right in the introduction. If you go through the questionnaire meticulously and reword the questions, you can usually cut it in half. Another third of the questions, almost the entire "passport" (demographic) section, you can fill in yourself (if the study is not anonymous and you know the participant's data), or you can hand out pre-prepared questionnaires right away (separate forms/links for women and men, for different age and status groups, etc.). All this will reduce respondents' objections to taking part in the survey.

1. We promise simplicity right in the introduction.

Example: "The questionnaire contains 10 simple questions, and filling it out will not take you more than 5 minutes."

2. If the questionnaire branches and the participant cannot immediately see where it ends, we show progress: this increases the likelihood that the questionnaire will be completed.

Example: "Question 1 of 19, Question 2 of 19", etc. If the questionnaire adapts to previous answers, write in the question titles: "Part 1 of 4, Part 2 of 4".
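In a branching questionnaire the total number of questions depends on earlier answers, which is why counting parts works better there. A minimal sketch of both labelling schemes; the questionnaire structure, question IDs and skip conditions are hypothetical, and for simplicity the conditions are evaluated against a dictionary of answers already given.

```python
from typing import Callable

Answers = dict[str, str]
# A part is a list of (question_id, condition); the condition decides from the
# answers given so far whether the question is shown. Hypothetical structure.
Part = list[tuple[str, Callable[[Answers], bool]]]


def always(_: Answers) -> bool:
    return True


def fixed_labels(question_ids: list[str]) -> list[str]:
    """Fixed-length questionnaire: 'Question i of N'."""
    total = len(question_ids)
    return [f"Question {i} of {total}" for i in range(1, total + 1)]


def progress_labels(parts: list[Part], answers: Answers) -> list[tuple[str, str]]:
    """Branching questionnaire: (question_id, 'Part p of P') for each shown question."""
    shown = []
    for part_no, part in enumerate(parts, start=1):
        for qid, condition in part:
            if condition(answers):
                shown.append((qid, f"Part {part_no} of {len(parts)}"))
    return shown


parts: list[Part] = [
    [("q1_uses_product", always)],
    [("q2_how_often", lambda a: a.get("q1_uses_product") == "yes"),
     ("q3_why_not", lambda a: a.get("q1_uses_product") == "no")],
    [("q4_age_band", always)],
]
for qid, label in progress_labels(parts, {"q1_uses_product": "yes"}):
    print(label, qid)
```

The part count stays true whichever branch the participant takes, whereas a "Question i of N" counter would either jump or lie.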

3. A little trick. If the participant has already filled out most of the questionnaire, he regrets the time already invested and will most likely finish it. Therefore, at the beginning of the questionnaire we usually put easy questions that draw the participant in as much as possible (questions about the participant himself and his tastes, but not personal questions and not complicated comparisons that can scare him off right away).

Method 7. Set time limits.

People often put off filling out a questionnaire "for later" and then forget about it completely. We consider it a success if we manage to get participants to fill it out immediately. This works well in organizational research, when participants can be invited to a separate room during working hours, or when a manager gives staff time to fill out the questionnaire.

In online surveys, quick completion happens when participation is an impulse: a brief survey filled out on the spot. In other cases, we additionally set a deadline and ask participants to send the completed questionnaire, for example, by 22:00 that day or by Friday. Sometimes, if the participants are loyal enough, we send reminders closer to the deadline.

Method 8. We entertain and hold attention.

If the questionnaire is still long (more than 10 questions), you can add pictures, videos or fun facts to some questions. We try to alternate complex and simple questions and remove tables, comparisons, complex lists and ranking questions: researchers love them, and respondents hate them.

When we have more resources, we try to make the survey game-like and as visual as possible. This is a separate topic, the gamification of surveys; here I will give only a couple of examples from my experience.


So, in this article I described the methods we use in our research to involve the most relevant participants and prevent sample bias.

Let me summarize this part as a brief checklist for compiling questionnaires:

1. Define the portrait of the target respondent and think about what might attract such a person to take part in the survey.

2. In the survey invitation and in the introductory and closing parts of the questionnaire, make participants an offer that is meaningful for their personal needs (power, curiosity, self-knowledge, belonging, helping, admiration).

3. Do not use external motivation to participate in the survey if you can use internal motivation.

4. Promise only the level of anonymity that you can provide.

5. Shorten the questionnaire as much as possible. Leave participants only the fields you cannot fill in for them.

6. Try not to include complex tasks and comparisons in the questionnaire. Tell participants in the introduction how simple it is.

7. Set a clear time frame.

8. Think about entertaining participants during and after completing the questionnaire.

In the following parts:

Error 2. The wording of the questionnaire: 13 cases of misunderstanding and manipulation in surveys (part 1)

Error 2. The wording of the questionnaire: 13 cases of misunderstanding and manipulation in surveys (part 2)

Error 3. Types of lies in polls: why do you believe the answers?

Error 4. Opinion is not the same as behavior: are you really asking what you want to know?

Error 5. Types of polls: do you need to find out or to confirm?

Error 6. Split and saturate the sample: the average does not help you understand anything.

Error 7. The notorious "Net Promoter Score" is NOT an elegant solution.

Source: https://habr.com/ru/post/307620/
