When should you think about optimizing your IT infrastructure?

It’s never too early or too late to think about optimization. Even the most modern IT infrastructure may be unable to cope tomorrow with all the tasks assigned to it. In addition, optimization does not always mean acquiring new assets: it also includes moving services to public clouds, optimizing IT processes, and fine-tuning the performance of existing components.

Optimization tasks

The main task of optimization is to keep business processes running reliably and without interruption while minimizing the cost of supporting the IT infrastructure. It helps, however, to break this goal down so that it is clear how the desired results can be achieved.

First of all, an IT infrastructure is upgraded to solve four main tasks:

• ensuring continuity of service;

• increasing performance (computing power);

• reducing support costs;

• ensuring the information security of the company.

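These tasks are solved by a fairly wide range of methods that can be conventionally combined into groups, each discussed in its own section later in this article: fault tolerance, disaster tolerance, virtualization, private clouds, and hybrid IT infrastructure.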

However, before proceeding to a more detailed analysis of the above approaches, it is necessary to make one important remark.

It is not always necessary to upgrade all parts of the IT infrastructure. First of all, it is necessary to evaluate the possible benefits of the planned innovations and understand how they relate to the planned costs.

Depending on your business and circumstances, it may make sense to leave some components unchanged for a while and simply accept the risk of their failure. Typically, this approach is applied to “supporting” hardware and software, for which even relatively long downtime (up to several days) will not cause significant losses for the core business.

Why do I need a survey?

The figure below shows a classic diagram of the dependence of business processes on the IT infrastructure.

As you can see, business processes do not depend on the infrastructure directly, since they are automated with the help of various management software (for example, ERP and CRM systems). These software systems, in turn, depend directly on the existing infrastructure.

For one system, the most critical resource may be, for example, the data network; for another, the amount of memory and disk space allocated to a virtual server; for a third, not so much the amount of allocated memory as its speed.

That is, each automated system depends on the underlying hardware in its own way. Therefore, the first key task in optimizing the IT infrastructure is to understand the existing relationships between specific components of the computing systems and the business applications they serve.
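The relationships described above can be recorded as a simple dependency map. The following is a minimal sketch, not a prescribed tool: the process, system, and component names are hypothetical and only illustrate how one might look up which business processes are exposed when a given component degrades.

```python
# Hypothetical dependency map (all names are illustrative assumptions):
# business process -> automated systems -> infrastructure components.
PROCESS_TO_SYSTEMS = {
    "order handling": ["CRM", "ERP"],
    "accounting": ["ERP"],
    "internal news": ["intranet portal"],
}

SYSTEM_TO_COMPONENTS = {
    "CRM": ["data network", "vm-crm memory"],
    "ERP": ["data network", "storage array"],
    "intranet portal": ["vm-portal disk"],
}


def affected_processes(component):
    """Return the business processes that depend on `component`, sorted by name."""
    # Systems that use the component directly.
    hit_systems = {
        system
        for system, components in SYSTEM_TO_COMPONENTS.items()
        if component in components
    }
    # Processes automated by at least one of those systems.
    return sorted(
        process
        for process, systems in PROCESS_TO_SYSTEMS.items()
        if hit_systems.intersection(systems)
    )


print(affected_processes("data network"))  # ['accounting', 'order handling']
```

Even a small table like this makes it visible that, in this made-up example, the data network matters to two business processes while the portal's disk matters to only one.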

It is great when these relationships are known and constantly kept up to date, but in most cases the situation is still far from ideal. Often, decisions about automating a business process are made at different times by different people, and, as a rule, little or no analysis is done of whether the existing IT infrastructure meets the new requirements.

At a specific point in time, the task may be to optimize the performance of just one of the automated systems in use; accordingly, requirements will increase only for some components of the enterprise's computing capacity.

Now that we’ve figured out the main relationships and outlined ways to optimize as efficiently as possible, let's get back to discussing approaches to achieve our goals.

Approaches for solving problems of IT infrastructure optimization

Fault tolerance

The goal is to maintain the continuity of all automated business processes, so the failure of any component of the IT infrastructure should not lead to their degradation or complete stop. This, in turn, means that there should be no single points of failure (SPOF) in the IT infrastructure.
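As a rough illustration of looking for single points of failure, the sketch below scans an inventory and reports every role that is served by fewer than two instances. The inventory contents and the function name are hypothetical; a real survey would also have to account for shared dependencies such as power or cabling.

```python
# Hypothetical inventory: role in the infrastructure -> instances able to perform it.
INVENTORY = {
    "internet uplink": ["isp-a"],
    "core switch": ["sw-1", "sw-2"],
    "database server": ["db-1"],
    "hypervisor host": ["hv-1", "hv-2", "hv-3"],
}


def single_points_of_failure(inventory):
    """Return roles served by fewer than two instances, sorted by name."""
    return sorted(
        role for role, instances in inventory.items() if len(instances) < 2
    )


print(single_points_of_failure(INVENTORY))  # ['database server', 'internet uplink']
```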

In most cases, fault tolerance is achieved through redundancy. We are, in fact, duplicating some component in case of failure of the main one, which means additional capital costs are required to purchase new equipment (or to expand the functionality of existing equipment through the acquisition of licenses with extended functionality).
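The effect of duplication can be estimated with simple arithmetic. The sketch below assumes that the redundant components fail independently of each other, which is an idealization (shared power, software bugs, or operator errors break that assumption), and the function names are illustrative.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def combined_availability(single, copies):
    """Availability of `copies` redundant components, assuming independent failures.

    The group is down only when every copy is down at the same time.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("at least one component is required")
    return 1.0 - (1.0 - single) ** copies


def downtime_hours_per_year(availability):
    """Expected downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


one = combined_availability(0.99, 1)
two = combined_availability(0.99, 2)
print(round(downtime_hours_per_year(one), 2))  # 87.6
print(round(downtime_hours_per_year(two), 2))  # 0.88
```

Under these assumptions, duplicating a component with 99% availability cuts the expected downtime from about 87.6 hours to under one hour per year, which is the figure to weigh against the cost of the second unit.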

Thus, the resiliency of each component should be increased in proportion to how critical its impact is on the operation of the automated systems and, accordingly, on the business processes themselves.

If, for example, the lion’s share of your sales goes through a website hosted on your own computing platform, and your Internet connection is not redundant, then line failures will lead to serious losses for your business. Increasing the resiliency of your Internet connection will therefore be a high priority for you.

If your site is for informational purposes only, there are no financial transactions tied to it, and your e-mail does not require an instant response to incoming messages, the need for a backup Internet line looks very doubtful. Even if you plan to rely on global networks more heavily in the future, there is no need to spend resources on this redundancy right now.

Disaster tolerance

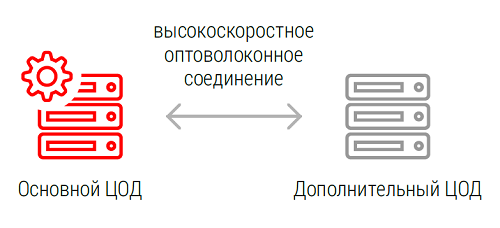

It often happens that a fault-tolerant infrastructure is not enough for a business to run continuously. If, for example, your main computing cluster is located in an earthquake-prone region, and the hardware and software complex built on it manages geographically distributed production, then the data center itself becomes a single point of failure.

Thus, you need to create a backup site that, in the event of force majeure at the primary facility, takes over all the necessary business processes.

Creating a backup data center always involves serious investment and high operating costs, so you need to clearly understand how these investments compare with the potential losses from downtime of the hardware and software complex at the main facility. For this reason, computing capacity leased from specialized service providers is very often used as the backup data center, and only the most essential services and applications are actually protected from possible disasters.
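The comparison between the cost of a backup site and the potential losses can be sketched as an expected-loss calculation. This is a simplified model under stated assumptions: the outage probability, outage duration, and daily loss are inputs you would have to estimate yourself, the figures below are made up, and the function names are illustrative.

```python
def expected_annual_loss(outage_probability_per_year, outage_days, loss_per_day):
    """Expected yearly loss from a full outage of the primary site.

    Simplified model: at most one outage per year, with a fixed duration
    and a fixed loss for every day the business processes are stopped.
    """
    return outage_probability_per_year * outage_days * loss_per_day


def backup_site_pays_off(annual_site_cost, outage_probability_per_year,
                         outage_days, loss_per_day):
    """True if the expected yearly loss exceeds the yearly cost of the backup site."""
    loss = expected_annual_loss(outage_probability_per_year, outage_days, loss_per_day)
    return loss > annual_site_cost


# Made-up figures: 5% yearly chance of a 10-day outage costing 200,000 per day.
print(expected_annual_loss(0.05, 10, 200_000))           # 100000.0
print(backup_site_pays_off(150_000, 0.05, 10, 200_000))  # False
print(backup_site_pays_off(60_000, 0.05, 10, 200_000))   # True
```

With these invented numbers, a full backup site at 150,000 per year would cost more than the loss it is expected to prevent, while a leased site protecting only the essential services at 60,000 per year would not.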

IT infrastructure virtualization

In most cases, modern software does not have any specific hardware requirements, which makes it possible to rely heavily on virtual machines to provide the necessary computing power.

Previously, distributing tasks across servers could be quite expensive (a separate physical server was allocated for each task); with virtualization technologies, this problem is solved much more easily and elegantly. Modern server hardware allows you to run dozens (and sometimes hundreds) of virtual machines with sufficient performance for any applications that are not too resource-intensive (domain controllers, WSUS servers, monitoring systems, etc.). In addition to reducing the cost of adding computing resources, virtualization technologies have a number of other advantages:

• improving resiliency and disaster resistance;

• service isolation;

• the possibility of flexible allocation of resources between services;

• use of operating systems that are best suited for specific tasks;

• saving space in racks;

• reduction of energy consumption and heat generation;

• simplified administration;

• extensive automation of server deployment and management;

• reduction of forced and planned system downtime due to failover clusters and live migration.

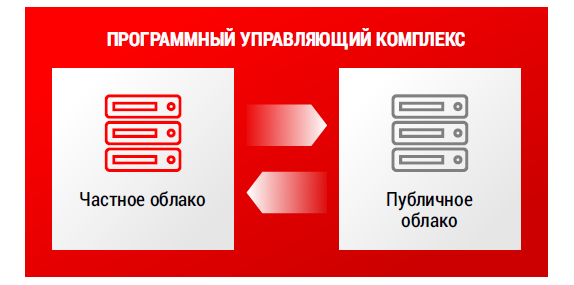

The organization of "private clouds"

A further development of virtualization technologies is the concept of the “private cloud”, in which servers are combined into a pool, and computing resources that are not tied to specific physical servers are allocated from it for specific tasks. Such clouds are built on the company's own data center, which makes IT services even more “flexible”. At the same time, unlike with public cloud services, the data in a private cloud stays inside the company, so the security and confidentiality concerns associated with external providers do not arise.

Hybrid IT Infrastructure

You can still rely on your own infrastructure, but resources based on public clouds are becoming more attractive regardless of who provides them. Transferring a part of computing power to the “cloud” or expanding local capacity at the expense of the resources of the “cloud” providers has ceased to be something exotic and is now completely standard practice.

The main advantage of this approach is the ability to save (sometimes quite significantly) on the development of your own IT infrastructure.

In modern realities, it is sometimes very difficult to predict the amount of resources actually needed, especially if your business is subject to seasonal activity peaks. On the one hand, you can purchase the necessary amount of equipment and cover all needs with your own resources. On the other hand, you will have to accept that some of these resources will stand idle most of the time (from peak to peak), creating an additional financial burden on the company's cash flows.

Using the resources of a public cloud allows you to pay only for the resources you actually need, and only after you have used them, much as you pay for water or electricity in your apartment.
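The trade-off between idle owned capacity and pay-per-use resources can be illustrated with a small calculation. This is a sketch under simplifying assumptions: demand is expressed in abstract capacity units per month, owned hardware is reduced to a flat monthly cost per unit, and all prices and function names are invented for the example.

```python
def own_hardware_cost(monthly_demand, cost_per_unit_month):
    """Yearly cost of owning capacity sized for the peak month.

    Capacity that sits idle between peaks is still paid for.
    """
    return max(monthly_demand) * cost_per_unit_month * len(monthly_demand)


def pay_per_use_cost(monthly_demand, price_per_unit_month):
    """Yearly cost when every month is billed only for the capacity used."""
    return sum(monthly_demand) * price_per_unit_month


def hybrid_cost(monthly_demand, baseline, cost_per_unit_month, price_per_unit_month):
    """Own a fixed baseline and rent only the excess over it from a public cloud."""
    owned = baseline * cost_per_unit_month * len(monthly_demand)
    rented = sum(max(0, demand - baseline) for demand in monthly_demand)
    return owned + rented * price_per_unit_month


# Made-up demand: 10 units for eleven months, 40 units in the peak month.
demand = [10] * 11 + [40]
print(own_hardware_cost(demand, 100))     # 48000
print(pay_per_use_cost(demand, 150))      # 22500
print(hybrid_cost(demand, 10, 100, 150))  # 16500
```

In this invented scenario the cloud unit price is 50% higher than the owned one, yet sizing owned hardware for the peak is still the most expensive option, and owning the baseline while renting the peak is the cheapest.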

A classic example of the successful use of public cloud resources is a retailer renting additional computing power during the pre-New Year shopping rush. Another example: instead of buying your own computing power, you can use a ready-made cloud service to set up an anti-spam solution.


Source: https://habr.com/ru/post/307542/
