Network Evolution to SDN & NFV

Why are the technological concepts behind data transmission networks changing, what is driving this shift, and how will adopting these modern trends change the life of service providers?

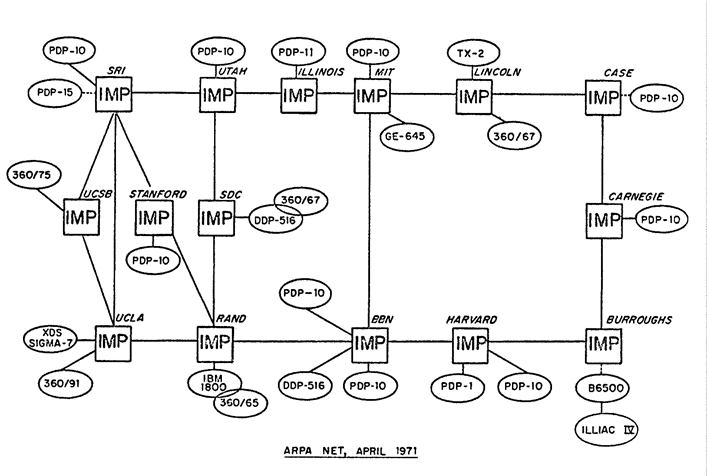

The development of transmission network technologies since 1969 (ARPANET) was aimed at ensuring that each hardware component was independent of other components. The interaction protocols worked on the principle of the exchange of control information, which was used by algorithms to analyze the topology and build models of interaction between devices.

')

There is a version that this approach was specifically designed to increase the chance of survival of data networks in a military conflict, in which some of the devices or communication lines may fail, and the remaining network components will have to decide for themselves which topology remains holistic and workable. That is, the decentralization of the network was laid as a goal.

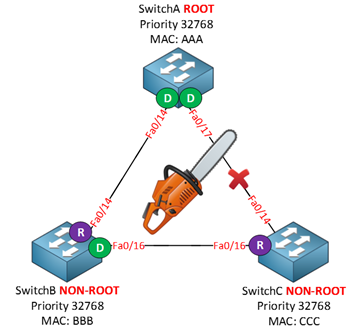

All developed dynamic routing protocols (RIP, OSPF, IGRP, BGP) and the branching tree protocol (STP) were built on this principle - devices as equitable exchange existing information about their connections with neighbors and self-organize into a common topology that works as a single network.

Reaching the actual at the time goal - autonomy, over time, when the load on the network increased, it became apparent how current connections are used inefficiently. The network at the second level of OSI began to lead to the shutdown of redundant connections, thereby reducing the overall utilization of the data network. Network operation on the third level of OSI began to lead to asymmetric routing, which could cause inconsistent packet delivery, which led to an increase in the occupied buffer volume on receiving devices.

Another problem was the fact that network functions (Firewall, Load Balancing, Mirroring) that were implemented in a certain physical location of the data network could be located far in the topology from the place where traffic was generated. Since the traffic had to be delivered to the hardware device and only there could be, for example, filtered, this led to greater utilization and reduced efficiency than if the traffic had been filtered at the place of traffic generation.

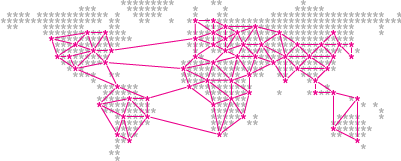

A lot of time has passed since then, and although the threat of military conflicts has remained, the technological leaders decided that the goal of centralization now meets modern needs. This approach led to the need to revise the model of the data network in the direction of separation of control functions and data transfer functions. It was decided to concentrate all management functions at a single point - a controller that would collect information from traffic transmission devices and decide how to manage this traffic.

Centralization allows to increase the efficiency of the workload of all connections and utilize the resources of hardware devices to the maximum. The developed protocol Openflow solved the problem of controlling the traffic plane by programming the switches with actions that should be performed with the traffic. In this case, the model of deciding how traffic should be transmitted within the controller of the control plane could be implemented using completely different mathematical models than those on which the known dynamic routing protocols are based. The difference is that modern routing protocols are based on the principle of information exchange between points about the topology. But if the entire topology is stored in one place, then there is no need for information exchange algorithms. There is only the need to use topology information in order to direct traffic from point A to point B. With a centralized approach, decisions about traffic transfer may not be universal. That is, there is no need to transfer all traffic between two points along the same path. One part of the traffic may follow one path, while the other may use an alternative path in the same topology. This approach improves efficiency by maximizing the utilization of your existing network infrastructure.

Another advantage is that, having information about which policies should be applied to different types of traffic at different points of entry of traffic into the network, it is possible to apply the network function directly at this point of the network. If we imagine that hundreds of network functions can be separated from the hardware platform and implemented as a program that receives control information (which policies need to be applied to traffic) as input data and specify the incoming and outgoing interface for traffic, then this network function can be run on standard operating systems on x86 servers. By running these programs at the points of generation or exchange of traffic, the central controller can program the switches to transfer the necessary type of traffic inside the network function. The traffic, after being processed by the network function, is returned to the data transmission plane and transmitted to the final recipient.

One of the arguments for adopting such a model is to reduce the cost of the data transmission network due to the fact that specific network functions implemented on a specialized hardware platform are offered at a higher price than the implementation of the same functions on a universal computing platform. The performance of specialized platforms has always been higher and this was important in the era of not very productive universal processors, but modern trends leveled this difference and now even on common CPUs, due to the increasing performance, it became possible to perform these network functions with the required performance.

Another advantage is that, given the opportunity to perform a network function anywhere in a data network, there is no need for giant performance in one device, because you can distribute the required performance across all points of traffic generation or exchange. This fact allows you to quickly scale the performance of the network function with a sharp increase in traffic. This is regularly observed during significant events in the life of all mankind, for example, a football championship, the holding of the Olympics or the broadcast of the wedding of the English prince. At such moments, people record video and take photos, upload everything to the “cloud” and exchange links. All this leads to an explosive growth in traffic volumes, which need to be able to redirect to the right points.

Network functions of real-time traffic compression (Real-Time Compression, WAN Optimization), forwarding requests for the nearest points (CDN), load balancing between the server farm (Load Balancing) need to be deployed automatically and at the desired scale and provide to a certain type traffic. This type of traffic can be determined, virtual machines with the required function can be started and the MANO system can help to redirect traffic to them with auto-provisioning, auto-scaling, automatic setting of traffic redirection.

The orchestrator (management and operation) of this process should have a whole set of abilities:

1) Manage the physical infrastructure: transmission networks, data warehouses, servers;

2) Using virtualization platforms, create the necessary instances on the basis of the physical infrastructure: virtual machines, virtual LUNs, virtual networks;

3) Accept telemetry from current network devices to analyze and decide on the provision of additional network functions;

4) Run the required network functions from the templates and load the configuration corresponding to the required task to them;

5) Analyze the status of running network functions for making operational decisions in case of failures, accidents or overloads;

6) Eliminate running network functions in the case when there is no longer any need for their work and do it on the basis of automatic data from telemetry or at the request of the administrator;

7) Transfer information about the resources used for billing calculations to the IT system business.

The implementation of this functionality will dramatically accelerate the provision of services from service operators to customers and consumers. Reducing the time it takes to enter the market will increase the monetization of the infrastructure and lead to additional profits. User experience from smoother and faster consumption of content will improve the consumers' emotional perception of the service, which, ultimately, will increase the attractiveness of telecom operators as customer-oriented providers.

Changing the work of providers with the provision of conventional communication channels ("pipes") to the creation of a set of value-added services will continue to provide highly profitable services, and in the long term will not lead to a decrease in revenue due to increased competition in the market of infrastructure providers.

Another opportunity to increase profits for service providers is the smart end-point transition (CPE) model. The transition from digital modems to managed routers allows you to achieve several goals. At the moment, to connect a remote branch office of a customer of a service provider’s services, a device is required that connects the branch’s local network to the operator’s network in the required dedicated network organized either by MPLS technology or, in the case of legacy providers, by ATM technology. In this case, the need to perform various operations with the customer’s traffic (to provide additional network functions) led to the need to deliver this traffic from the client to the operator’s network core, in which devices realizing such a network function are located, and already on the network core to process traffic according to specified policies. This led to increased utilization of the “last mile” and the operator’s mainline by the traffic that could be processed closer to the generation source. On the other hand, the endpoint devices were merely either analog-to-digital converters or fairly simple IP devices, without the possibility of proactive monitoring or remote configuration.

Replacing such devices with CPEs of the new generation will solve several problems. Remote management of new CPEs will allow zero-touch provisioning using a two-factor method using activation URLs. Thus, it will be possible to send devices directly to end customers directly from the manufacturing plant, and on-site to activate them with the necessary configuration, including unqualified personnel. In addition, implementation on the CPE using a virtualization layer of containers or virtual machines with network-friendly network functions that allow providing value-added services directly at the endpoint will enable customers in the app store to activate for their connection point the functionality they need : anti-virus protection, spam filtering, parental control and so on. The operator, charging an additional fee for these functions, will receive a wide opportunity to increase revenue through the rapid and seamless implementation of new SDN applications.

For corporate customers, the rapid deployment of new offices at the Internet presence will become a reality - you do not need to reconfigure multiple IPSec VPN tunnels to the new addressing if the CPE automatically builds superimposed tunnels using multiple CPEs and a central data center using VXLAN or MPLSoGRE technologies. Centralized management of remote offices will allow you to quickly change the software on the CPE and centrally store configuration data. Information security tools can be activated from a single management console at once for the entire organization. The information security administrator will appreciate the lack of ability of employees in the branch to have access to the console of the router, because the policies can be configured only on the SDN controller or the orchestrator.

Using the mentioned trends - switching to a centralized control plane, replacing specialized devices with network functions to x86 servers with software NF, creating a virtual resource management system with transmitting control signals to various components of the OSS \ BSS system, as well as automating and virtualizing terminal connection devices to increase the speed of service delivery to the market, create an ecosystem for the provision of high value-added services and increase the provider’s appeal to the final cli ntov.

Historical background

Since ARPANET in 1969, data network technologies were developed so that each hardware component would operate independently of the others. The protocols devices used to interact were based on exchanging control information. Algorithms then used this information to analyze the topology and build a model of how the devices were interconnected.


One theory holds that this approach was deliberately chosen to improve the survivability of data networks in a military conflict. If some devices or links fail, the remaining network components must decide on their own which parts of the topology are still intact and operational. In other words, decentralization was a design goal.

The dynamic routing protocols (RIP, OSPF, IGRP, BGP) and the Spanning Tree Protocol (STP) were all built on this principle. Devices act as equal peers, exchange information about their links to neighbors, and self-organize into a common topology that operates as a single network.
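To make the decentralized principle concrete, here is a minimal Python sketch of a distance-vector exchange in the spirit of RIP. It is a simplified simulation, not RIP itself. The topology, the router names and the synchronous exchange rounds are assumptions made for illustration. Each router starts out knowing only its directly connected neighbors and learns the rest of the network solely from the vectors its neighbors advertise.

```python
from typing import Dict

# Hypothetical topology: link costs between directly connected routers.
LINKS = {("A", "B"): 1, ("B", "C"): 1, ("C", "D"): 1, ("A", "D"): 5}

def neighbors(links: Dict[tuple, int]) -> Dict[str, Dict[str, int]]:
    """Build each router's local view: only its own links and their costs."""
    view: Dict[str, Dict[str, int]] = {}
    for (a, b), cost in links.items():
        view.setdefault(a, {})[b] = cost
        view.setdefault(b, {})[a] = cost
    return view

def converge(links: Dict[tuple, int]) -> Dict[str, Dict[str, int]]:
    """Exchange distance vectors in synchronous rounds until nothing changes."""
    local = neighbors(links)
    # Each router initially knows only itself (cost 0) and its neighbors.
    tables = {r: {r: 0, **nbrs} for r, nbrs in local.items()}
    changed = True
    while changed:
        changed = False
        # Snapshot the vectors every router advertises in this round.
        adverts = {r: dict(t) for r, t in tables.items()}
        for router, nbrs in local.items():
            for nbr, link_cost in nbrs.items():
                for dest, dist in adverts[nbr].items():
                    candidate = link_cost + dist
                    if candidate < tables[router].get(dest, float("inf")):
                        tables[router][dest] = candidate
                        changed = True
    return tables

if __name__ == "__main__":
    for router, table in sorted(converge(LINKS).items()):
        print(router, dict(sorted(table.items())))
```

No router ever sees the whole map. Router A, for example, ends up reaching D at cost 3 through B and C instead of over its direct cost-5 link. It learns this only after the information has travelled hop by hop.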

This achieved the goal that mattered at the time, autonomy. Over time, however, network load grew, and it became clear how inefficiently the available links were being used. At OSI Layer 2, loop prevention meant shutting down (blocking) redundant links, which reduced the overall utilization of the network. At Layer 3, routing could become asymmetric, so packets could arrive out of order. This in turn increased the buffer space occupied on receiving devices.
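The loss of capacity at Layer 2 shows up clearly in a simplified model of spanning tree. The bridge with the lowest ID becomes the root, every other bridge keeps one path toward the root, and all remaining links are blocked. The sketch below uses breadth-first search with equal link costs and breaks ties by bridge ID. It ignores port costs, port IDs and timers. Treat it as an illustration of the effect, not as an implementation of IEEE 802.1D.

```python
from collections import deque
from typing import List, Set, Tuple

Link = Tuple[int, int]

def spanning_tree(bridges: List[int], links: List[Link]) -> Tuple[Set[Link], Set[Link]]:
    """Return (forwarding, blocked) links for a simplified STP-like tree."""
    adj = {b: [] for b in bridges}
    for a, b in links:
        adj[a].append(b)
        adj[b].append(a)
    root = min(bridges)  # the lowest bridge ID wins the root election
    parent = {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        # Visit neighbors in ID order so ties are broken deterministically.
        for nbr in sorted(adj[node]):
            if nbr not in parent:
                parent[nbr] = node
                queue.append(nbr)
    forwarding = {tuple(sorted((child, par))) for child, par in parent.items() if par is not None}
    blocked = {tuple(sorted(link)) for link in links} - forwarding
    return forwarding, blocked

if __name__ == "__main__":
    # A full mesh of four switches: six physical links.
    bridges = [1, 2, 3, 4]
    links = [(1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4)]
    fwd, blk = spanning_tree(bridges, links)
    print(f"forwarding: {sorted(fwd)}")
    print(f"blocked:    {sorted(blk)}  ({len(blk)} of {len(links)} links idle)")
```

In this four-switch mesh, half of the physical links carry no traffic at all while the tree is stable.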

Another problem was that network functions (firewalling, load balancing, traffic mirroring) were implemented at a specific physical location. That location could be far, in topological terms, from where the traffic was generated. Traffic had to be carried to the hardware appliance before it could be, for example, filtered. As a result, links were loaded more heavily and less efficiently than if the traffic had been filtered where it originated.
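The cost of placing a function at one fixed spot can be estimated by counting hops. The sketch below compares the shortest path between two branches with the path forced through a firewall at a central site. The topology and node names are hypothetical, and hop count stands in for link utilization.

```python
from collections import deque
from typing import Dict, List

# Hypothetical topology: two branches connected to each other; the firewall sits at "core".
TOPOLOGY: Dict[str, List[str]] = {
    "branch1": ["edge1"],
    "branch2": ["edge2"],
    "edge1": ["branch1", "edge2", "agg1"],
    "edge2": ["branch2", "edge1", "agg2"],
    "agg1": ["edge1", "core"],
    "agg2": ["edge2", "core"],
    "core": ["agg1", "agg2"],
}

def hops(graph: Dict[str, List[str]], src: str, dst: str) -> int:
    """Breadth-first search: number of links on the shortest path."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return dist[node]
        for nbr in graph[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    raise ValueError(f"{dst} unreachable from {src}")

direct = hops(TOPOLOGY, "branch1", "branch2")
via_fw = hops(TOPOLOGY, "branch1", "core") + hops(TOPOLOGY, "core", "branch2")
print(f"direct: {direct} links, through central firewall: {via_fw} links")
```

In this toy network, the detour through the central firewall doubles the number of links each filtered packet crosses.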

SDN

Much has changed since then. The threat of military conflict has not gone away, but technology leaders concluded that centralization better serves today's needs. This required rethinking the network model so that control functions are separated from data forwarding functions. All control functions were to be concentrated at a single point: a controller that collects information from the forwarding devices and decides how traffic should be handled.
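The following minimal sketch shows that separation. A controller object holds the whole topology, computes a path, and installs one forwarding entry per switch along it. The class names, method names and rule format are hypothetical. They stand in for a real southbound protocol such as OpenFlow and are not its API. Here the switches simply store the rules they are given.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Switch:
    """Data plane only: forwards by looking up rules installed from outside."""
    name: str
    rules: Dict[str, str] = field(default_factory=dict)  # destination -> next hop

    def next_hop(self, dst: str) -> str:
        return self.rules[dst]

class Controller:
    """Control plane: knows the full topology and programs every switch."""

    def __init__(self, links: List[Tuple[str, str]]):
        self.adj: Dict[str, List[str]] = {}
        for a, b in links:
            self.adj.setdefault(a, []).append(b)
            self.adj.setdefault(b, []).append(a)
        self.switches = {n: Switch(n) for n in self.adj}

    def path(self, src: str, dst: str) -> List[str]:
        """Shortest path by BFS over the global view; no routing protocol needed."""
        prev = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            for nbr in self.adj[node]:
                if nbr not in prev:
                    prev[nbr] = node
                    queue.append(nbr)
        hops, node = [], dst
        while node is not None:
            hops.append(node)
            node = prev[node]
        return hops[::-1]

    def install(self, src: str, dst: str) -> List[str]:
        """Push a rule for `dst` into every switch along the computed path."""
        route = self.path(src, dst)
        for here, nxt in zip(route, route[1:]):
            self.switches[here].rules[dst] = nxt
        return route

ctl = Controller([("s1", "s2"), ("s2", "s3"), ("s1", "s4"), ("s4", "s3")])
print(ctl.install("s1", "s3"))
```

The switches never exchange topology with each other. Every forwarding decision they make comes from a rule the controller wrote.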

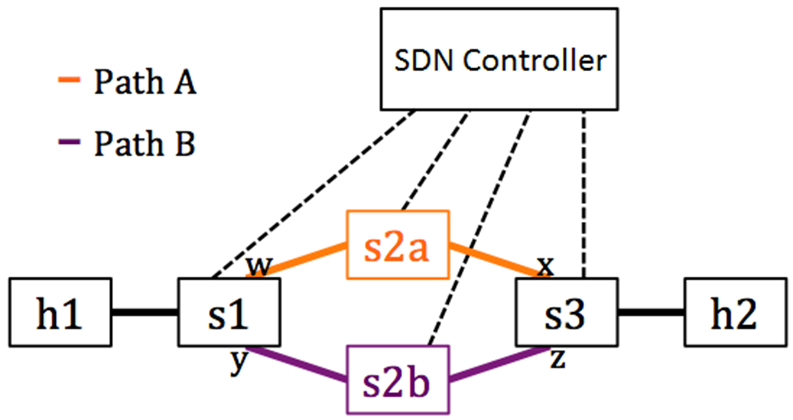

Centralization makes it possible to load all links more evenly and use hardware resources to the fullest. The OpenFlow protocol was developed to control the data plane: the controller programs switches with the actions they should apply to traffic. The control-plane logic that decides how traffic is forwarded can then rest on mathematical models entirely different from those behind the familiar dynamic routing protocols. Those protocols are based on routers exchanging topology information with one another. If the entire topology is stored in one place, no such exchange algorithm is needed. All that remains is to use the topology to steer traffic from point A to point B. With a centralized approach, forwarding decisions also need not be uniform: not all traffic between two points has to follow the same path. Part of it can take one path while the rest uses an alternative path through the same topology. This raises efficiency by making fuller use of the existing network infrastructure.
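Because the controller sees every path, it can split traffic between two points instead of sending all of it along one route. A common way to do this without reordering packets within a flow is to hash each flow's 5-tuple and map the hash onto the available paths. Packets of one flow then always stay on the same path. The sketch below assumes two precomputed paths and a weight per path. The weights, addresses and path names are illustrative.

```python
import hashlib
from typing import List, Tuple

FiveTuple = Tuple[str, str, int, int, str]  # src IP, dst IP, src port, dst port, protocol

def pick_path(flow: FiveTuple, paths: List[List[str]], weights: List[int]) -> List[str]:
    """Map a flow to one path, roughly in proportion to the weights, stably per flow."""
    key = "|".join(map(str, flow)).encode()
    # A stable hash (unlike Python's salted hash()) keeps the choice identical across runs.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % sum(weights)
    for path, weight in zip(paths, weights):
        if bucket < weight:
            return path
        bucket -= weight
    raise AssertionError("unreachable: bucket is always below sum(weights)")

PATHS = [["s1", "s2", "s3"], ["s1", "s4", "s3"]]
WEIGHTS = [3, 1]  # e.g. the upper path has three times the spare capacity

flows = [("10.0.0.1", "10.0.1.1", 40000 + i, 443, "tcp") for i in range(1000)]
upper = sum(pick_path(f, PATHS, WEIGHTS) == PATHS[0] for f in flows)
print(f"{upper} flows on {PATHS[0]}, {len(flows) - upper} on {PATHS[1]}")
```

Splitting per flow rather than per packet is a design choice: it gives up some balancing precision to avoid the out-of-order delivery described in the previous section.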

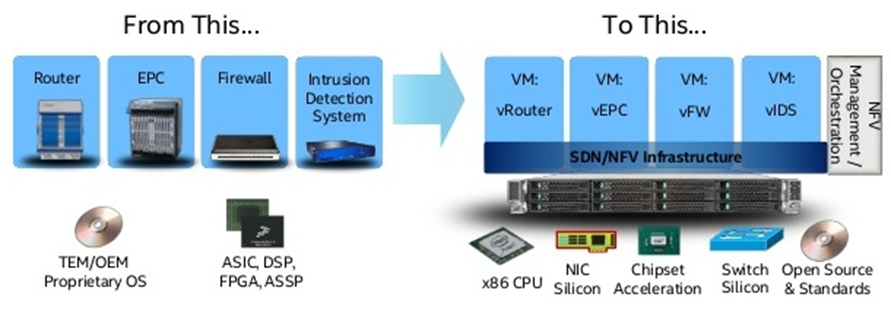

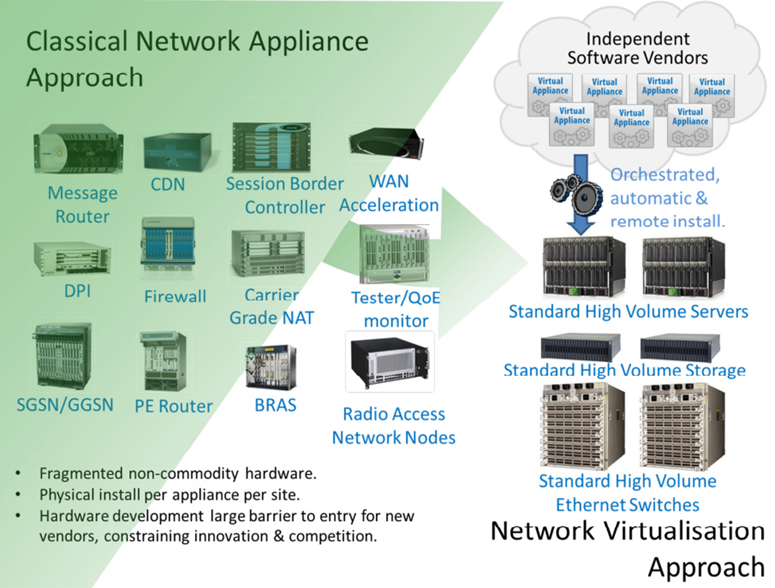

NFV

A second advantage follows from knowing which policies must be applied to each type of traffic at each point where traffic enters the network: the network function can be applied right at that point. Suppose that hundreds of network functions are separated from their hardware platforms and implemented as programs. Each program takes control information (which policies to apply to the traffic) as input and is told which interfaces the traffic enters and leaves through. Such a network function can then run on a standard operating system on an x86 server. With these programs running where traffic is generated or exchanged, the central controller can program the switches to steer the relevant type of traffic into the network function. Once processed, the traffic is returned to the data plane and delivered to its final recipient.
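The following is a toy model of this steering. The `steer` function plays the role of the switch rules the controller installs. It diverts one type of traffic (here assumed to be web traffic on TCP port 80) into a software network function and passes everything else straight through. The packet format, port number and blocked-host list are hypothetical. The network function is an ordinary Python function, which is the point of running it on a general-purpose server.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dst_port: int

# A software network function: takes a packet, returns it (forward) or None (drop).
NetworkFunction = Callable[[Packet], Optional[Packet]]

def make_firewall(blocked_hosts: set) -> NetworkFunction:
    def firewall(pkt: Packet) -> Optional[Packet]:
        return None if pkt.dst in blocked_hosts else pkt
    return firewall

def steer(packets: List[Packet], match_port: int, nf: NetworkFunction) -> List[Packet]:
    """Divert matching traffic through the NF, then return it to the data plane."""
    delivered = []
    for pkt in packets:
        if pkt.dst_port == match_port:
            pkt = nf(pkt)  # detour into the network function
            if pkt is None:
                continue   # dropped by policy
        delivered.append(pkt)  # forwarded toward the final recipient
    return delivered

traffic = [
    Packet("10.0.0.5", "203.0.113.7", 80),
    Packet("10.0.0.5", "198.51.100.9", 80),
    Packet("10.0.0.5", "203.0.113.7", 53),
]
out = steer(traffic, 80, make_firewall({"203.0.113.7"}))
print([(p.dst, p.dst_port) for p in out])
```

Only the matched traffic type passes through the function. The DNS packet to the same blocked host is untouched because the steering rule does not select it.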

One argument for adopting this model is lower network cost. Network functions implemented on specialized hardware are sold at a higher price than the same functions running on a general-purpose computing platform. Specialized platforms have always delivered higher performance, which mattered when general-purpose processors were comparatively slow. Current trends have narrowed this gap, however, and growing CPU performance now makes it possible to run these network functions on commodity CPUs at the required throughput.

A further advantage is that a network function can run anywhere in the network, so no single device has to deliver enormous performance. The required capacity can be distributed across all points where traffic is generated or exchanged. This makes it possible to scale a network function quickly when traffic surges, as regularly happens during events of worldwide interest such as a football championship, the Olympic Games or the broadcast of a British prince's wedding. At such moments people record video, take photos, upload everything to the “cloud” and share links. All of this causes explosive growth in traffic, which must be redirected to the right points.
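When a function is distributed across many points, capacity planning becomes simple arithmetic. The number of instances needed at a site is the site's load divided by the throughput of one instance, rounded up. The per-instance figure, site names and traffic numbers below are hypothetical. They only show how a traffic spike translates into extra instances at each point rather than a bigger central box.

```python
import math
from typing import Dict

INSTANCE_GBPS = 10.0  # assumed throughput of one software NF instance on an x86 server

def instances_needed(load_gbps: Dict[str, float], per_instance: float = INSTANCE_GBPS) -> Dict[str, int]:
    """Instances per site, rounded up; at least one so the function stays in the path."""
    return {site: max(1, math.ceil(load / per_instance)) for site, load in load_gbps.items()}

normal = {"pop-a": 12.0, "pop-b": 7.5, "pop-c": 18.0}
# During a major event, uploads at every site jump; here they are assumed to triple.
spike = {site: load * 3 for site, load in normal.items()}

print(instances_needed(normal))
print(instances_needed(spike))
```

Under these assumptions the spike takes the fleet from 5 to 13 small instances spread over three sites. No single device has to absorb the full 112.5 Gbit/s.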

Several network functions need to be deployed automatically, at the required scale, and applied to specific types of traffic. Examples are real-time traffic compression (Real-Time Compression, WAN Optimization), redirecting requests to the nearest point of presence (CDN) and load balancing across a server farm (Load Balancing). Once the relevant traffic is identified, virtual machines running the required function can be started. The MANO (Management and Orchestration) system can then redirect traffic to them through automatic provisioning, automatic scaling and automatic configuration of traffic redirection.
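The auto-scaling part can be sketched as a control loop that compares telemetry against thresholds. The thresholds, the one-instance step and the gap between them are assumptions for illustration, not values from any particular MANO product. Keeping the scale-out threshold well above the scale-in threshold (hysteresis) stops the system from flapping when load hovers around a single value.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ScalingPolicy:
    scale_out_at: float = 0.80  # average utilization that triggers a new instance
    scale_in_at: float = 0.30   # utilization below which one instance is removed
    min_instances: int = 1
    max_instances: int = 10

def decide(instances: int, utilization: float, policy: ScalingPolicy) -> int:
    """Return the new instance count for one telemetry sample."""
    if utilization > policy.scale_out_at and instances < policy.max_instances:
        return instances + 1
    if utilization < policy.scale_in_at and instances > policy.min_instances:
        return instances - 1
    return instances  # inside the hysteresis band: leave things alone

def replay(samples: List[float], policy: ScalingPolicy, start: int = 1) -> List[int]:
    """Apply the policy to a series of average-utilization samples."""
    counts, n = [], start
    for u in samples:
        n = decide(n, u, policy)
        counts.append(n)
    return counts

# Hypothetical telemetry: a traffic surge followed by a quiet period.
print(replay([0.50, 0.85, 0.90, 0.82, 0.60, 0.25, 0.20, 0.55], ScalingPolicy()))
```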

MANO

The orchestrator that manages and runs this process needs a whole set of capabilities:

1) Manage the physical infrastructure: data networks, storage systems, servers;

2) Use virtualization platforms to create the required instances on top of the physical infrastructure: virtual machines, virtual LUNs, virtual networks;

3) Receive telemetry from existing network devices in order to analyze it and decide whether additional network functions should be provisioned;

4) Launch the required network functions from templates and load them with the configuration that matches the task;

5) Analyze the status of running network functions to make operational decisions in case of failures, incidents or overloads;

6) Shut down running network functions when they are no longer needed, either automatically based on telemetry data or at the administrator's request;

7) Pass information about the resources used to the business IT systems for billing.
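The seven capabilities listed above can be read as the lifecycle of a single network function instance. The sketch below models that lifecycle as a small state machine that also produces a usage record for billing. The template name, states, transitions and record fields are hypothetical. They are not taken from the ETSI NFV MANO specifications or from any orchestrator's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Allowed transitions in the hypothetical lifecycle.
TRANSITIONS = {
    "instantiated": {"configured"},
    "configured": {"running"},
    "running": {"healing", "terminated"},
    "healing": {"running", "terminated"},
}

@dataclass
class VnfInstance:
    template: str                  # e.g. a firewall image plus default config (step 4)
    state: str = "instantiated"    # assumed already placed on virtual infrastructure (steps 1-2)
    config: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=lambda: ["instantiated"])
    running_seconds: int = 0

    def _move(self, new_state: str) -> None:
        if new_state not in TRANSITIONS.get(self.state, set()):
            raise ValueError(f"cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)

    def configure(self, config: Dict[str, str]) -> None:
        self.config = dict(config)   # load task-specific configuration (step 4)
        self._move("configured")
        self._move("running")

    def report_health(self, healthy: bool, seconds: int) -> None:
        """Telemetry sample (steps 3 and 5): account usage, heal on failure."""
        if self.state == "running":
            self.running_seconds += seconds
        if not healthy and self.state == "running":
            self._move("healing")
        elif healthy and self.state == "healing":
            self._move("running")

    def terminate(self) -> Dict[str, object]:
        """Step 6: tear down; step 7: hand a usage record to billing."""
        self._move("terminated")
        return {"template": self.template, "seconds": self.running_seconds}

vnf = VnfInstance("firewall-v1")
vnf.configure({"policy": "block-malware"})
vnf.report_health(True, 3600)
vnf.report_health(False, 60)
vnf.report_health(True, 0)
print(vnf.terminate(), vnf.history)
```

An explicit transition table makes illegal operations fail loudly, such as reconfiguring a terminated instance. An orchestrator acting on telemetry without human review needs exactly that kind of guard.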

Implementing this functionality will dramatically speed up the delivery of services from operators to their customers and consumers. Shorter time to market will increase the monetization of the infrastructure and bring additional profit. Smoother and faster content consumption will improve how users perceive the service. Ultimately, this will make telecom operators more attractive as customer-oriented providers.

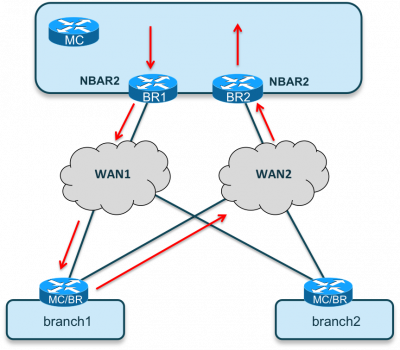

Enterprise CPE virtualization

Providers can shift their business from selling conventional communication channels ("pipes") to building a portfolio of value-added services. This lets them keep offering high-margin services and, in the long term, avoid the revenue decline that growing competition among infrastructure providers would otherwise cause.

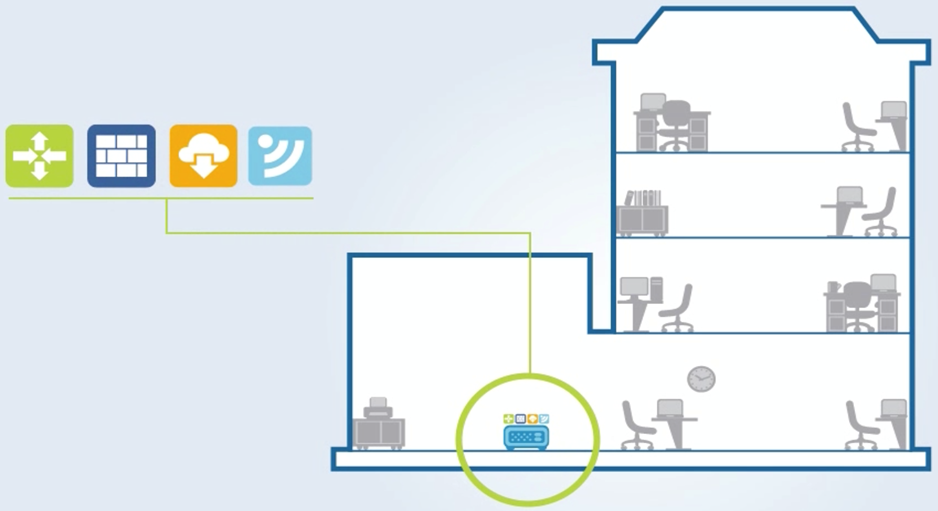

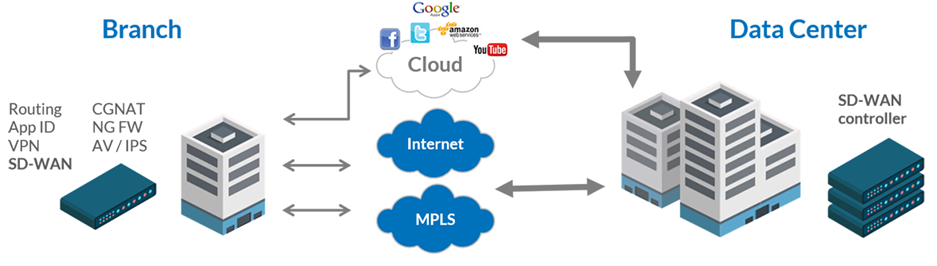

Another way for service providers to increase profits is to move to a smart customer premises equipment (CPE) model. Replacing digital modems with managed routers achieves several goals. Today, connecting a customer's remote branch office requires a device that links the branch's local network to the operator's network. The connection runs inside a dedicated network built with MPLS or, at legacy providers, ATM. When additional network functions had to be applied to the customer's traffic, that traffic had to be carried from the client to the core of the operator's network. It was processed there according to the specified policies, because that is where the devices implementing those functions were located. As a result, the “last mile” and the operator's backbone were loaded with traffic that could have been processed closer to its source. Moreover, the endpoint devices were merely analog-to-digital converters or fairly simple IP devices, with no proactive monitoring or remote configuration.

Replacing these devices with a new generation of CPEs solves several problems. Remote management of the new CPEs enables zero-touch provisioning with two-factor activation via activation URLs. Devices can therefore be shipped to end customers directly from the factory and activated on site with the required configuration, even by unqualified personnel. In addition, the CPE can carry a virtualization layer of containers or virtual machines that hosts network functions. These functions deliver value-added services directly at the endpoint, so customers can use an app store to enable the features they need for their site: anti-virus protection, spam filtering, parental control and so on. By charging an additional fee for these functions, the operator gains broad opportunities to increase revenue through the fast and seamless rollout of new SDN applications.
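The two-factor activation could work roughly as follows. The sketch is built on stated assumptions, and real zero-touch provisioning schemes differ between vendors. The operator's portal issues a one-time activation code bound to the CPE's serial number. The code reaches the on-site person through a separate channel, for example an email containing an activation URL. The provisioning server releases the configuration only if both the serial reported by the device and the code match. The HMAC-based code format, the secret, the serial number and the function names are all hypothetical.

```python
import hashlib
import hmac
import secrets

SERVER_SECRET = secrets.token_bytes(32)  # held only by the provisioning server
CONFIGS = {"CPE-000123": {"customer": "acme-branch-7", "vnfs": ["firewall"]}}
_used_codes = set()

def issue_code(serial: str) -> str:
    """Factor 1: a code derived from the serial, sent to the installer out of band."""
    nonce = secrets.token_hex(4)
    tag = hmac.new(SERVER_SECRET, f"{serial}:{nonce}".encode(), hashlib.sha256).hexdigest()[:16]
    return f"{nonce}-{tag}"

def activate(serial: str, code: str) -> dict:
    """Factor 2: the device itself reports its serial; both must match."""
    nonce, _, tag = code.partition("-")
    expected = hmac.new(SERVER_SECRET, f"{serial}:{nonce}".encode(), hashlib.sha256).hexdigest()[:16]
    if not hmac.compare_digest(tag, expected) or code in _used_codes:
        raise PermissionError("activation rejected")
    _used_codes.add(code)       # one-time use
    return CONFIGS[serial]      # configuration pushed to the CPE

code = issue_code("CPE-000123")
print(activate("CPE-000123", code))
```

In this design a leaked activation code is useless without the matching device, and a stolen device is useless without a fresh code. Neither factor requires the installer to touch the router's configuration.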

For corporate customers, quickly bringing new offices online wherever Internet access is available becomes a reality. There is no need to reconfigure multiple IPsec VPN tunnels for new addressing if the CPE automatically builds overlay tunnels between multiple CPEs and a central data center using VXLAN or MPLSoGRE. Centralized management of remote offices makes it possible to update CPE software quickly and to store configuration data centrally. Security tools can be enabled from a single management console for the entire organization at once. Information security administrators will appreciate that branch employees have no access to the router console, since policies can be configured only on the SDN controller or the orchestrator.
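The automatic overlay can be sketched as a controller that keeps a registry of sites. When a new CPE registers, the controller computes every tunnel the site needs (to the central data center and to each existing branch) and tags the customer's traffic with a VXLAN Network Identifier (VNI). The class, the full-mesh choice and the addresses are assumptions for illustration. The only fixed fact the sketch relies on is that a VXLAN VNI is a 24-bit value (RFC 7348).

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Tunnel = Tuple[str, str, int]  # (local endpoint, remote endpoint, VNI)

@dataclass
class OverlayController:
    hub: str                              # underlay address of the central data center
    vni: int                              # one VXLAN segment per corporate customer
    sites: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not 0 <= self.vni < 2**24:
            raise ValueError("a VXLAN VNI is a 24-bit value")

    def register(self, site: str) -> List[Tunnel]:
        """Add a branch CPE and return the tunnels to build; nothing is configured by hand."""
        new = [(site, self.hub, self.vni)]
        new += [(site, peer, self.vni) for peer in self.sites]  # full mesh to existing branches
        self.sites.append(site)
        return new

    def tunnel_count(self) -> int:
        n = len(self.sites)
        return n + n * (n - 1) // 2  # hub-and-spoke links plus branch-to-branch mesh

ctl = OverlayController(hub="192.0.2.1", vni=5001)
for addr in ["198.51.100.10", "198.51.100.20", "198.51.100.30"]:
    ctl.register(addr)
print(ctl.register("203.0.113.40"))
print(ctl.tunnel_count())
```

Adding the fourth office produces four new tunnels, and the total grows to ten. That growth is exactly what becomes tedious and error-prone when every tunnel is an IPsec configuration edited by hand.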

Conclusions

Together, these trends speed up time to market for services, create an ecosystem for delivering high value-added services and make the provider more attractive to end customers. They are: moving to a centralized control plane, replacing specialized network appliances with software network functions on x86 servers, building a virtual resource management system that passes control signals to the various components of the OSS/BSS, and automating and virtualizing customer premises equipment.

Source: https://habr.com/ru/post/307356/
