Learning OpenGL ES 2.0 for Android. Lesson 3: Lighting


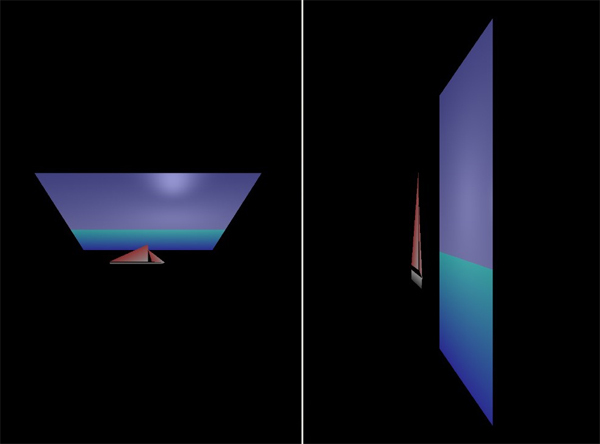


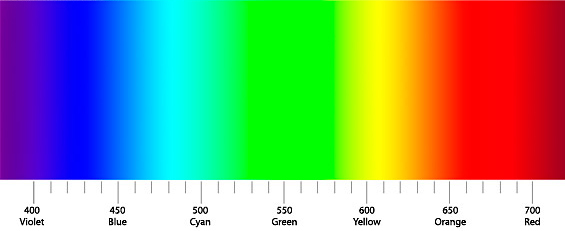

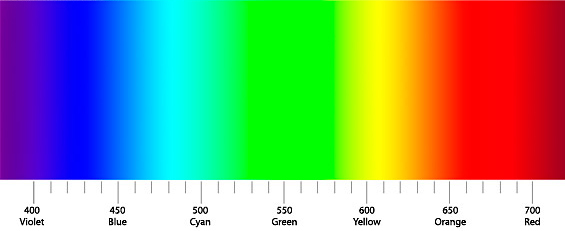


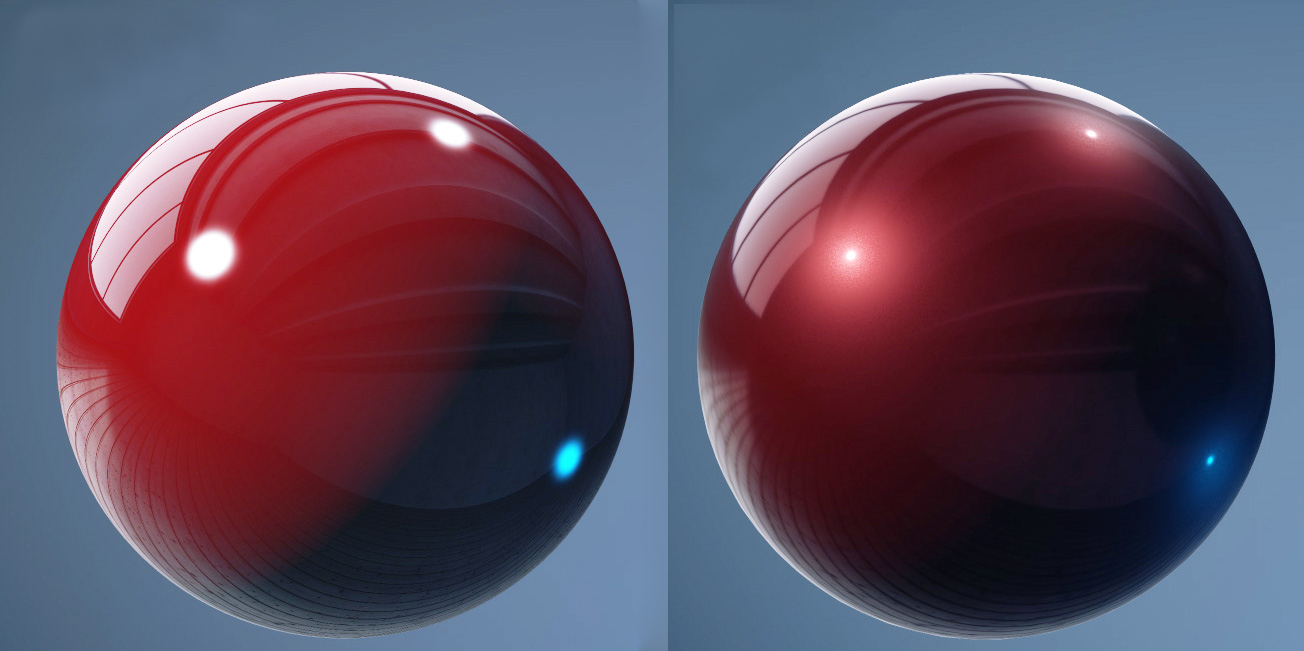

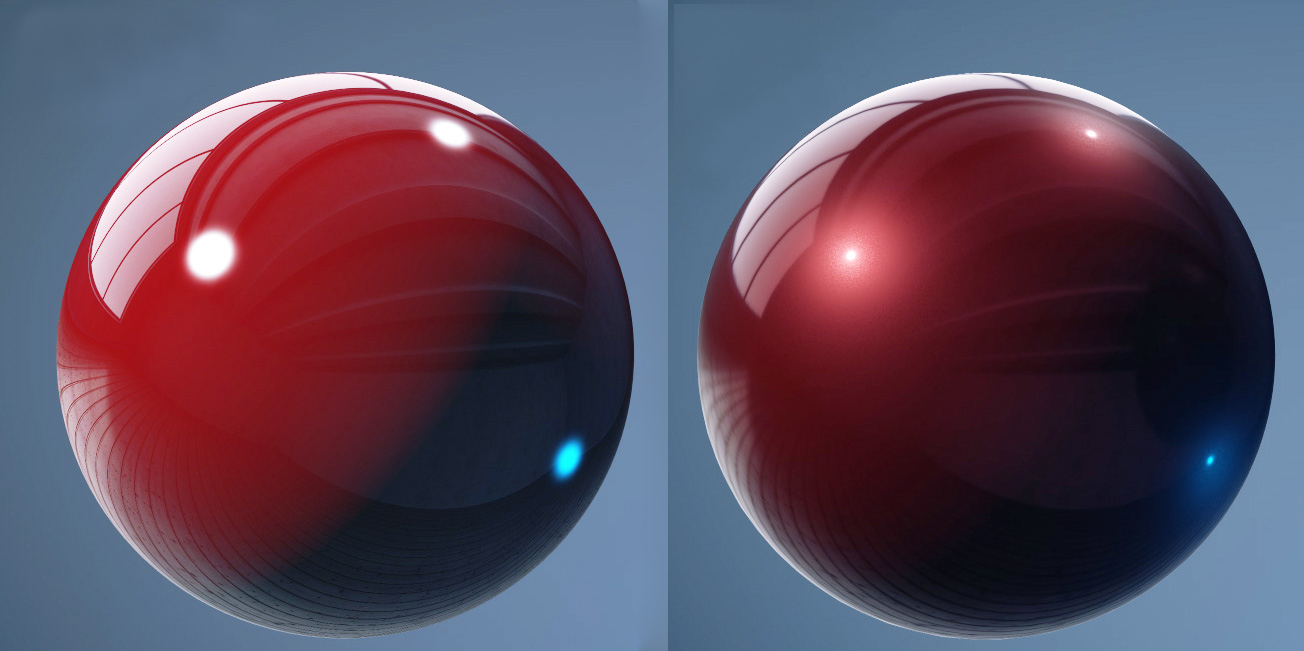


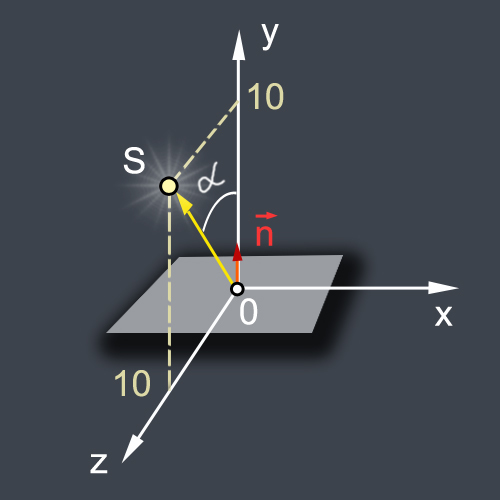

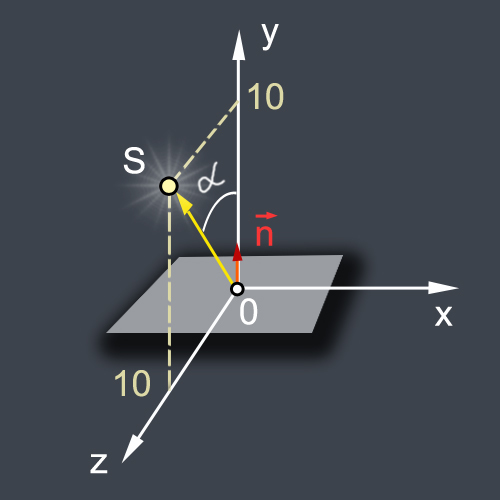

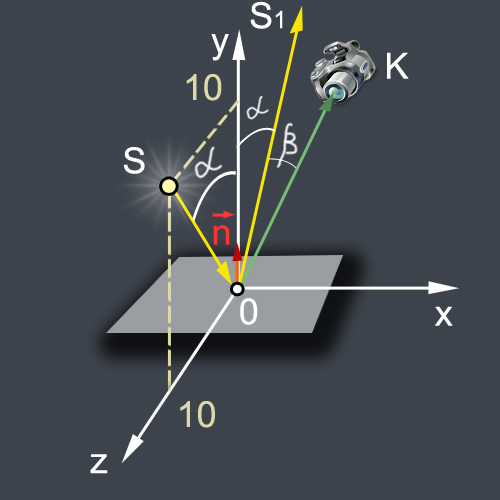


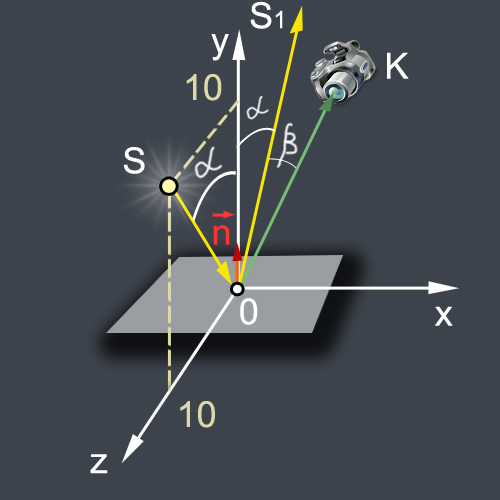


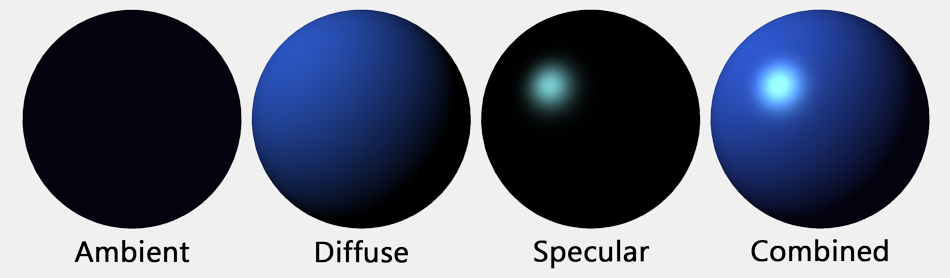


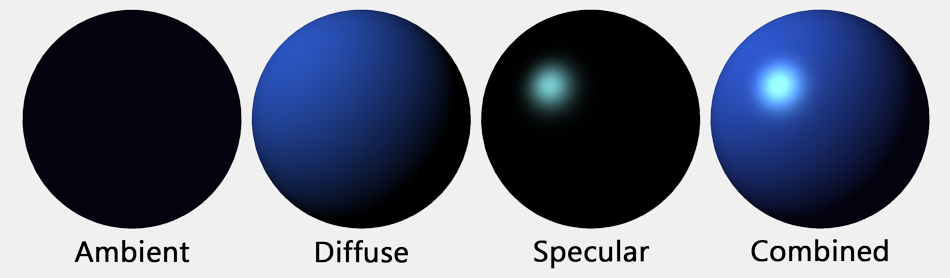

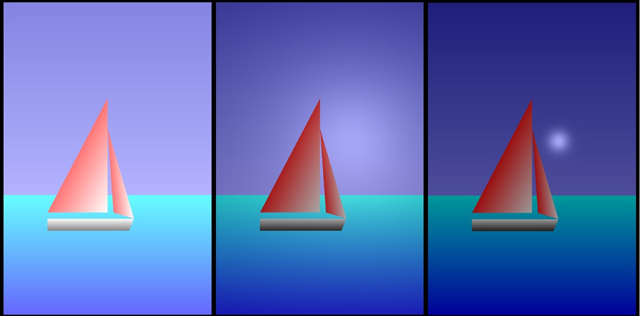

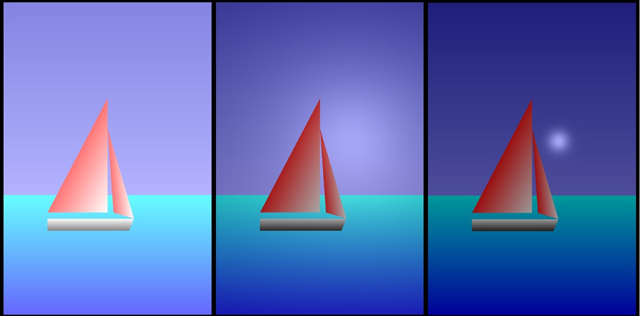


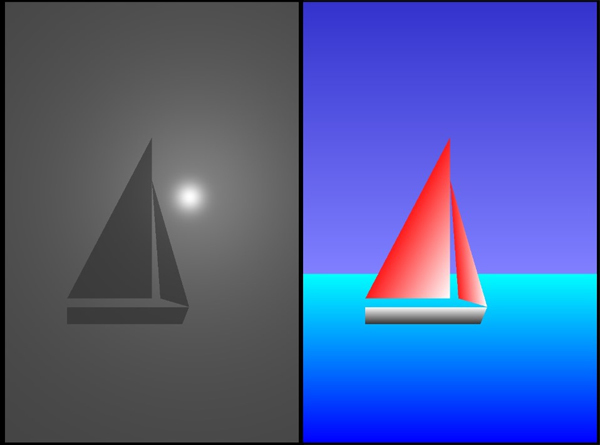

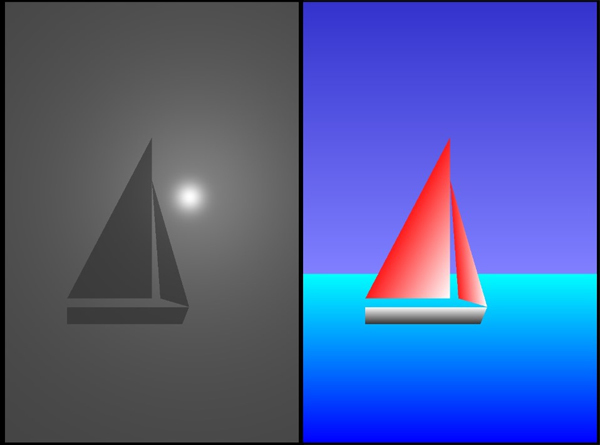


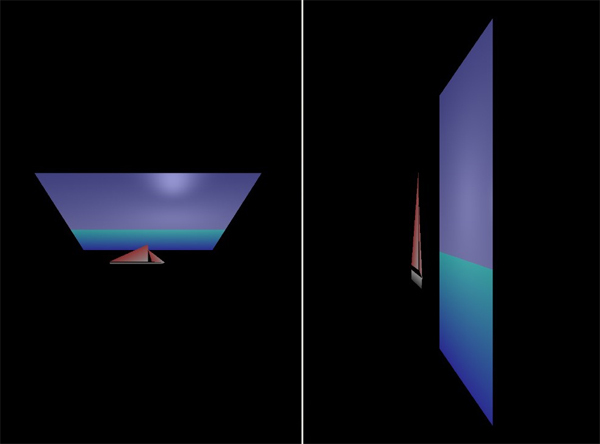


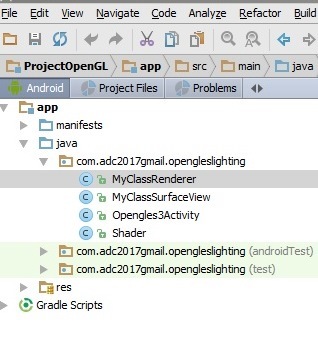

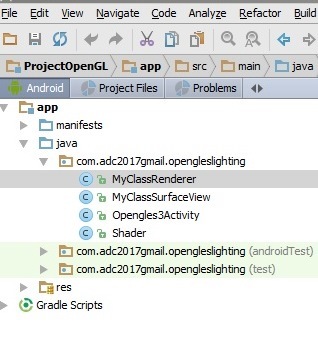


Sources:

http://andmonahov.blogspot.com/2012/10/opengl-es-20.html

http://www.learnopengles.com/android-lesson-two-ambient-and-diffuse-lighting/

http://www.learnopengles.com/tag/triangle-strips/

http://eax.me/opengl-lighting/

http://www.john-chapman.net/content.php?id=3

Before you start

If you are new to OpenGL ES, I recommend working through lessons 1 and 2 first, since this lesson builds on them.

The code in this article is based on the following sources:

1. http://andmonahov.blogspot.com/2012/10/opengl-es-20.html

2. http://www.learnopengles.com/android-lesson-two-ambient-and-diffuse-lighting/

By the end of the lesson, the device or emulator will show a lit scene with the sea, the sky and a sailboat.

A little about light and types of lighting

Light can be described either as an electromagnetic wave of a certain frequency or as a stream of photons, particles that carry a certain energy. Our brain forms visual sensations depending on which photons hit which parts of the retina.

The sensation of color depends on the photon energy, or equivalently on the frequency of the electromagnetic radiation. The short-wave boundary of the visible spectrum is taken to be at vacuum wavelengths of 380–400 nm (violet), and the long-wave boundary at 760–780 nm (red).

Besides color, brightness matters to us. It is determined by the number of photons hitting the retina per unit of time, that is, by the intensity of the electromagnetic wave.

All objects can be divided into those that emit light and those that reflect it. But we perceive the world in all its diversity: not only the brightness and color of the light matter, but also the reflective properties of the objects themselves, the shadows they cast and much more. Computer modeling has advanced so far that sometimes only an expert can tell a photograph of a real object from a rendered model. There are many classifications and lighting models; we consider only a few of them.

Ambient lighting

When an object is lit equally from all sides, we speak of ambient (background) lighting. Outdoors this usually happens on an overcast day. Indoors it arises from multiple reflections of light off surrounding objects, or when many light sources are arranged so that the model is lit from all sides. In the photo on the left, directional and ambient lighting are mixed; on the right there is only ambient lighting. Ambient lighting takes into account neither the surface normals (the angle at which the light hits the surface) nor the current camera position.

How do we describe ambient lighting in code?

Let our object be red and our ambient light dim white. Suppose we store a color as an array of three components (red, green and blue) in the RGB color model. Then we multiply each color component by the corresponding component of the light.

final color = {1, 0, 0} * {0.1, 0.1, 0.1} = {0.1, 0.0, 0.0}

The object now appears dull red, which matches what we see in real life when a red object is lit by dim white light.
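The multiplication above is done component by component. Here is a minimal Java sketch of the same arithmetic; the names `AmbientDemo` and `modulate` are made up for this illustration and are not part of the lesson's project.

```java
// Illustrative helper, not part of the lesson's source code.
final class AmbientDemo {

    /** Multiplies an RGB color by an RGB light, component by component. */
    static float[] modulate(float[] color, float[] light) {
        float[] result = new float[3];
        for (int i = 0; i < 3; i++) {
            result[i] = color[i] * light[i];
        }
        return result;
    }

    public static void main(String[] args) {
        float[] red = {1f, 0f, 0f};
        float[] dimWhite = {0.1f, 0.1f, 0.1f};
        float[] lit = modulate(red, dimWhite);
        // Prints the red, green and blue components of the lit color.
        System.out.println(lit[0] + ", " + lit[1] + ", " + lit[2]);
    }
}
```

In the fragment shader the same thing happens when a scalar or vector light value is multiplied by a color vector.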


Diffuse lighting

Diffuse lighting models light from a source that is scattered after hitting a given point. Depending on the angle at which the light falls, the lighting becomes stronger or weaker. The surface normals are taken into account, but not the camera position.

Imagine a ray of light hitting a horizontal surface at 45 degrees (the angle between the ray and the surface normal). With diffuse reflection the light energy is reflected uniformly in all directions, so all three cameras in the figure capture the surface with the same brightness. If the light falls at 0 degrees (perpendicular to the surface), the surface is lit best. If the beam falls at an angle close to 90 degrees, the rays glide along the surface and barely illuminate it.

Software simulation of diffuse reflection

To simulate diffuse reflection, the Lambert factor is used.

light vector = light position - object position

cosine = dot product(object normal, normalize(light vector))

lambert factor = max(cosine, 0)

Let's go through these three lines.

First, compute the light vector by subtracting the object position from the light source position.

light vector = light position - object position

Then compute the cosine of the angle between the light vector and the normal by taking the dot product (scalar product) of the surface normal and the normalized light vector. Normalizing the light vector means scaling it to length one while keeping its direction. The surface normal already has unit length. The dot product of two unit vectors is the cosine of the angle between them.

cosine = dot product(object normal, normalize(light vector))

Since the dot product ranges from -1 to 1, we must clamp it to the range 0 to 1. The function max(x, y) returns x if x is greater than y, and y otherwise.

lambert factor = max(cosine, 0)

To make this concrete, consider an example.

Let the light source be at point S (0, 10, 10), and suppose we want to compute the illumination of a horizontal surface at the origin O (0, 0, 0) (this can be a single pixel) whose normal points straight up: (0, 1, 0). Then the vector OS (the light vector) and the normal (object normal) are:

light vector = {0, 10, 10} - {0, 0, 0} = {0, 10, 10}

object normal = {0, 1, 0}

Find the length of the vector OS:

light vector length = square root(0*0 + 10*10 + 10*10) = square root(200) ≈ 14.14

Normalize it, that is, scale it so that its length becomes one:

normalized light vector = {0, 10/14.14, 10/14.14} = {0, 0.707, 0.707}

Then compute the dot product:

dot product({0, 1, 0}, {0, 0.707, 0.707}) = (0 * 0) + (1 * 0.707) + (0 * 0.707) = 0 + 0.707 + 0 = 0.707

For those who have forgotten the math: the dot product of two vectors equals the product of their lengths multiplied by the cosine of the angle between them. If both lengths equal one, the dot product equals the cosine. Here 0.707 is the cosine of 45 degrees, the angle between the light vector and the normal.

One small problem remains: the cosine is negative when the angle alpha is between 90 and 180 degrees. Therefore we clamp the Lambert factor to the range 0 to 1.

lambert factor = max(0.707, 0) = 0.707
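The worked example can be checked with a few lines of plain Java. This is only a sketch of the arithmetic above; the class `LambertDemo` and its methods are hypothetical names, and it assumes the light is not located exactly at the object position (otherwise the light vector has zero length and cannot be normalized).

```java
// Illustrative sketch; names are not taken from the lesson's project.
final class LambertDemo {

    /** Scales v to unit length. Assumes v is not the zero vector. */
    static float[] normalize(float[] v) {
        float length = (float) Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
        return new float[] {v[0] / length, v[1] / length, v[2] / length};
    }

    /** Dot (scalar) product of two 3-component vectors. */
    static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /** Lambert factor for a surface point with a unit-length normal. */
    static float lambertFactor(float[] lightPosition, float[] objectPosition, float[] unitNormal) {
        float[] lightVector = {
            lightPosition[0] - objectPosition[0],
            lightPosition[1] - objectPosition[1],
            lightPosition[2] - objectPosition[2]
        };
        float cosine = dot(unitNormal, normalize(lightVector));
        return Math.max(cosine, 0f); // negative cosines mean the light is behind the surface
    }

    public static void main(String[] args) {
        float factor = lambertFactor(
                new float[] {0f, 10f, 10f},  // light source S
                new float[] {0f, 0f, 0f},    // surface point O
                new float[] {0f, 1f, 0f});   // normal pointing up
        System.out.println(factor);          // approximately 0.707
    }
}
```

Moving the light below the surface, for example to (0, -10, 0), makes the cosine negative, and the factor is clamped to 0.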

Specular (reflected) lighting

Specular lighting is light from the source that is reflected after hitting a given point. The reflected light is visible only if it reaches the camera. Therefore both the normals and the camera position are taken into account.

Implementing the specular highlight

When computing the diffuse lighting, we obtained the normalized light vector: a unit vector pointing from the illuminated point to the light source. To get the incident light vector, which points from the light source to the point on the surface, we just negate it.

GLSL has a built-in function, reflect, that computes the reflected vector (direction OS1):

reflectvector = reflect(- normalized light vector, object normal);
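For reference, GLSL defines reflect for an incident vector I and a unit-length normal N by the formula below. The numeric check reuses the light at (0, 10, 10) and the normal (0, 1, 0) from the diffuse example, which is an illustration chosen here rather than a value from the shader.

```latex
\mathrm{reflect}(I, N) = I - 2\,(N \cdot I)\,N

% Example: I = (0, -0.707, -0.707), N = (0, 1, 0)
N \cdot I = -0.707
\mathrm{reflect}(I, N) = (0, -0.707, -0.707) + 1.414\,(0, 1, 0) = (0, 0.707, -0.707)
```

The reflected vector keeps its component along the surface and flips the component along the normal, so it leaves the surface at the same angle at which the light arrived.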

The camera coordinates are passed to the fragment shader as the u_camera uniform:

uniform vec3 u_camera;

Now compute the vector OK, which points from the illuminated point to the camera, and normalize it:

lookvector = normalize(u_camera - object position);

Next we need the cosine of the angle beta between the reflected vector OS1 and the direction to the camera OK. As shown above, the cosine of the angle is the dot product of two unit vectors: dot(lookvector, reflectvector). The smaller the angle beta, the brighter the highlight.

As with the Lambert factor, we cut off negative values of the dot product with the max function:

max(dot(lookvector,reflectvector),0.0)

The size of the highlight is controlled by the shininess (gloss) parameter: the computed dot product is raised to the power of the shininess. GLSL provides the pow function for this. Typical shininess values are a few dozen. As the shininess increases, the highlight becomes smaller and sharper, so it looks more intense. Conversely, the lower the shininess, the larger and softer the highlight. Note that in this formula the peak value itself does not change, since pow(1.0, n) is 1.0 for any n; only the falloff around the peak changes.
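To get a feel for how the exponent controls the highlight size, the sketch below computes the angle beta at which pow(cos(beta), shininess) drops to one half. The "half intensity" threshold and the names `ShininessDemo` and `halfIntensityAngleDegrees` are choices made for this illustration only.

```java
// Illustrative sketch; not part of the lesson's shader or Java code.
final class ShininessDemo {

    /** Angle in degrees at which pow(cos(beta), shininess) equals 0.5. */
    static double halfIntensityAngleDegrees(double shininess) {
        // Solve cos(beta)^shininess = 0.5 for beta.
        double cosine = Math.pow(0.5, 1.0 / shininess);
        return Math.toDegrees(Math.acos(cosine));
    }

    public static void main(String[] args) {
        for (double shininess : new double[] {10, 30, 60}) {
            System.out.println("shininess " + shininess + " -> half intensity at "
                    + halfIntensityAngleDegrees(shininess) + " degrees");
        }
    }
}
```

The printed angle shrinks as the shininess grows, which is the narrowing of the highlight described above.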

For example, let the shininess be 30. Then we raise the dot product to the power of 30:

pow( max(dot(lookvector,reflectvector),0.0), 30.0 )

Multiplying this value by the specular coefficient k_specular gives the specular brightness for this pixel:

float specular = k_specular * pow( max(dot(lookvector,reflectvector),0.0), 30.0 );
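The whole specular calculation can be reproduced on the CPU for a single point. The Java sketch below follows the same steps as the shader lines above; the class `SpecularDemo`, its helper methods and the sample positions in `main` are assumptions made for this illustration.

```java
// Illustrative sketch of the specular term; names are hypothetical.
final class SpecularDemo {

    static float[] subtract(float[] a, float[] b) {
        return new float[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /** Scales v to unit length. Assumes v is not the zero vector. */
    static float[] normalize(float[] v) {
        float length = (float) Math.sqrt(dot(v, v));
        return new float[] {v[0] / length, v[1] / length, v[2] / length};
    }

    /** Same formula as GLSL reflect(I, N) = I - 2 * dot(N, I) * N, for a unit normal N. */
    static float[] reflect(float[] incident, float[] unitNormal) {
        float d = 2f * dot(unitNormal, incident);
        return new float[] {
            incident[0] - d * unitNormal[0],
            incident[1] - d * unitNormal[1],
            incident[2] - d * unitNormal[2]
        };
    }

    static float specular(float[] lightPosition, float[] cameraPosition, float[] point,
                          float[] unitNormal, float kSpecular, float shininess) {
        float[] lightVector = normalize(subtract(lightPosition, point));
        float[] lookVector = normalize(subtract(cameraPosition, point));
        // Incident light travels from the source to the point, hence the negation.
        float[] incident = {-lightVector[0], -lightVector[1], -lightVector[2]};
        float[] reflectVector = reflect(incident, unitNormal);
        float cosBeta = Math.max(dot(lookVector, reflectVector), 0f);
        return kSpecular * (float) Math.pow(cosBeta, shininess);
    }

    public static void main(String[] args) {
        float[] light = {0f, 10f, 10f};
        float[] point = {0f, 0f, 0f};
        float[] normal = {0f, 1f, 0f};
        // Camera placed exactly along the reflected ray: the highlight is at its maximum.
        System.out.println(specular(light, new float[] {0f, 10f, -10f}, point, normal, 0.5f, 30f));
        // Camera placed next to the light: far from the reflected ray, so almost no highlight.
        System.out.println(specular(light, new float[] {0f, 10f, 10f}, point, normal, 0.5f, 30f));
    }
}
```

With the camera on the reflected ray the result is close to k_specular; with the camera next to the light it is close to zero.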

Total lighting

To simulate the lighting of a real object, the different types of lighting are summed in certain proportions. For example, to get the lit color of a pixel, add the ambient, diffuse and specular parts of the illumination (ambient + diffuse + specular) and multiply the sum by v_color, the pixel color obtained by interpolating the vertex colors.

gl_FragColor = (ambient+diffuse+specular)*v_color;

If we do not want to tint the pixels with the interpolated vertex colors, it is enough to define a white color vector:

vec4 one=vec4(1.0,1.0,1.0,1.0);

and multiply it by the brightness of the light:

gl_FragColor = (ambient+diffuse+specular)*one;
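The two formulas above differ only in the color that the summed light is multiplied by. A small Java sketch of that sum, with hypothetical names (`TotalLightDemo`, `shade`) and sample values chosen for illustration:

```java
// Illustrative sketch; names and sample values are not from the lesson's project.
final class TotalLightDemo {

    /** Returns (ambient + diffuse + specular) * rgba, component by component. */
    static float[] shade(float ambient, float diffuse, float specular, float[] rgba) {
        float light = ambient + diffuse + specular;
        float[] result = new float[4];
        for (int i = 0; i < 4; i++) {
            // As in the shader, the alpha component is scaled too.
            result[i] = light * rgba[i];
        }
        return result;
    }

    public static void main(String[] args) {
        float[] red = {1f, 0f, 0f, 1f};
        float[] white = {1f, 1f, 1f, 1f};
        // Ambient 0.2, diffuse 0.3 * 0.707, no specular highlight at this pixel.
        float[] tinted = shade(0.2f, 0.3f * 0.707f, 0f, red);
        float[] gray = shade(0.2f, 0.3f * 0.707f, 0f, white);
        System.out.println(tinted[0] + " " + tinted[1] + " " + tinted[2]);
        System.out.println(gray[0] + " " + gray[1] + " " + gray[2]);
    }
}
```

If the three parts add up to more than 1, the result leaves the 0 to 1 range; on a typical fixed-point framebuffer OpenGL ES clamps the written color to that range.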

You can see how the picture changes by first setting ambient = 1, diffuse = 0, specular = 0 (the picture on the left). The picture in the center corresponds to ambient = 0, diffuse = 1, specular = 0, and the picture on the right to ambient = 0, diffuse = 0, specular = 1.

The following line mixes light and color:

gl_FragColor = mix(lightColor,v_color,0.6);

Look at the picture on the left, where the mixing coefficient is 0: in effect we see the distribution of the light brightness. With the coefficient set to 1 we see the pure colors.
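GLSL's mix(x, y, a) is a linear blend that returns x * (1 - a) + y * a. The Java sketch below applies the same formula per component; the class name `MixDemo` and the sample colors are assumptions for this illustration.

```java
// Illustrative sketch of GLSL mix(); names and sample values are hypothetical.
final class MixDemo {

    /** Linear blend x * (1 - a) + y * a, applied to each component. */
    static float[] mix(float[] x, float[] y, float a) {
        float[] result = new float[x.length];
        for (int i = 0; i < x.length; i++) {
            result[i] = x[i] * (1f - a) + y[i] * a;
        }
        return result;
    }

    public static void main(String[] args) {
        float[] lightColor = {0.4f, 0.4f, 0.4f, 0.4f};   // brightness of the light
        float[] vertexColor = {1f, 0.1f, 0.1f, 1f};      // interpolated vertex color
        float[] blended = mix(lightColor, vertexColor, 0.6f);
        System.out.println(blended[0] + " " + blended[1] + " " + blended[2] + " " + blended[3]);
    }
}
```

With a = 0 the result is lightColor, with a = 1 it is v_color, and 0.6 gives the vertex color slightly more weight than the light.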

Before moving on to the source code, a few comments on it.

1. As you may have noticed in the previous lesson, the following line appeared in the manifest file:

<uses-feature android:glEsVersion="0x00020000" android:required="true" />

Now Google Play will not show our app on devices that do not support OpenGL ES 2.0.
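The value 0x00020000 packs the OpenGL ES version into one integer: the upper 16 bits hold the major version and the lower 16 bits the minor version. The Java sketch below decodes it; the class `GlEsVersionDemo` is a hypothetical helper for illustration and is not needed in the app itself.

```java
// Illustrative sketch; decodes the packed version used by android:glEsVersion.
final class GlEsVersionDemo {

    /** Major version: the upper 16 bits. */
    static int major(int packedVersion) {
        return packedVersion >>> 16;
    }

    /** Minor version: the lower 16 bits. */
    static int minor(int packedVersion) {
        return packedVersion & 0xFFFF;
    }

    public static void main(String[] args) {
        int required = 0x00020000;
        System.out.println("OpenGL ES " + major(required) + "." + minor(required));
    }
}
```

So 0x00020000 means version 2.0, and a value such as 0x00030001 would mean version 3.1.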

2. In the second lesson, we drew triangles using only GLES20.glDrawArrays(GLES20.GL_TRIANGLES, 0, 3);

In this lesson a new call appears: GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

It makes drawing quadrilaterals and complex terrain more convenient. We use it to draw the sea, the sky and the boat. A good tutorial on this technique can be found here:

http://www.learnopengles.com/tag/triangle-strips/
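In a triangle strip every vertex after the first two adds one more triangle, so 4 vertices give 2 triangles that together form a quadrilateral. The Java sketch below lists which vertex indices make up each triangle; the class `TriangleStripDemo` is a hypothetical helper written for this explanation. For every second triangle the first two indices are swapped, which is how OpenGL keeps the winding order consistent along the strip.

```java
// Illustrative sketch; shows how GL_TRIANGLE_STRIP groups vertices into triangles.
final class TriangleStripDemo {

    /** Returns the vertex indices of each triangle in a strip of vertexCount vertices. */
    static int[][] stripTriangles(int vertexCount) {
        int count = Math.max(vertexCount - 2, 0);
        int[][] triangles = new int[count][];
        for (int i = 0; i < count; i++) {
            // Odd triangles swap their first two vertices to preserve the winding order.
            triangles[i] = (i % 2 == 0)
                    ? new int[] {i, i + 1, i + 2}
                    : new int[] {i + 1, i, i + 2};
        }
        return triangles;
    }

    public static void main(String[] args) {
        for (int[] t : stripTriangles(4)) {
            System.out.println(t[0] + ", " + t[1] + ", " + t[2]);
        }
    }
}
```

For the 4-vertex strips used in this lesson the triangles are (0, 1, 2) and (2, 1, 3), whereas GL_TRIANGLES would need 6 vertices for the same quadrilateral.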

3. Our ship is drawn slightly raised along the 0Z axis. By changing the camera position you can see this offset, how the matrices work and how the surface illumination changes. On the left, the camera was raised by 5 units along the 0Y axis; on the right, it was shifted by 4 units along the 0X axis.

You will find the remaining explanations in the code itself.

I recommend running the project first and then experimenting with different coefficients.

Create a project

Create an OpenGLESLighting project in Android Studio.

Name the main activity Opengles3Activity.

Create three more Java files:

MyClassSurfaceView.java

MyClassRenderer.java

Shader.java

Copy the code below and paste it into the files, replacing what was generated automatically.

Enjoy the picture. :)

If any errors have crept into the article, I will be glad to correct them and learn from you.

Good luck!

Source code

AndroidManifest.xml

    <?xml version="1.0" encoding="utf-8"?>
    <manifest xmlns:android="http://schemas.android.com/apk/res/android"
        package="com.adc2017gmail.opengleslighting"
        android:versionCode="1"
        android:versionName="1.0">

        <uses-sdk
            android:minSdkVersion="10"
            android:targetSdkVersion="19" />

        <!-- Require OpenGL ES 2.0 (android:required defaults to true) -->
        <uses-feature android:glEsVersion="0x00020000" />

        <application
            android:allowBackup="true"
            android:icon="@mipmap/ic_launcher"
            android:label="@string/app_name"
            android:supportsRtl="true"
            android:theme="@style/AppTheme">
            <activity android:name=".Opengles3Activity">
                <intent-filter>
                    <action android:name="android.intent.action.MAIN" />
                    <category android:name="android.intent.category.LAUNCHER" />
                </intent-filter>
            </activity>
        </application>
    </manifest>

Opengles3Activity.java

    package com.adc2017gmail.opengleslighting;

    import android.app.Activity;
    import android.os.Bundle;

    public class Opengles3Activity extends Activity {

        // Our GLSurfaceView subclass
        private MyClassSurfaceView mGLSurfaceView;

        @Override
        public void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            // Create the surface view and make it the content of the activity
            mGLSurfaceView = new MyClassSurfaceView(this);
            setContentView(mGLSurfaceView);
        }

        @Override
        protected void onPause() {
            super.onPause();
            // Pause the rendering thread together with the activity
            mGLSurfaceView.onPause();
        }

        @Override
        protected void onResume() {
            super.onResume();
            // Resume the rendering thread together with the activity
            mGLSurfaceView.onResume();
        }
    }

MyClassSurfaceView.java

    package com.adc2017gmail.opengleslighting;

    import android.content.Context;
    import android.opengl.GLSurfaceView;

    // MyClassSurfaceView extends GLSurfaceView
    public class MyClassSurfaceView extends GLSurfaceView {

        // Our renderer
        private MyClassRenderer renderer;

        public MyClassSurfaceView(Context context) {
            // Call the GLSurfaceView constructor
            super(context);
            // Request an OpenGL ES 2.0 context
            setEGLContextClientVersion(2);
            // Create the renderer and attach it to this view
            renderer = new MyClassRenderer(context);
            setRenderer(renderer);
            // Call onDrawFrame continuously rather than only on request
            setRenderMode(GLSurfaceView.RENDERMODE_CONTINUOUSLY);
        }
    }

MyClassRenderer.java

package com.adc2017gmail.opengleslighting; import android.content.Context; import android.opengl.GLES20; import android.opengl.GLSurfaceView; import android.opengl.Matrix; import java.nio.ByteBuffer; import java.nio.ByteOrder; import java.nio.FloatBuffer; import javax.microedition.khronos.egl.EGLConfig; import javax.microedition.khronos.opengles.GL10; public class MyClassRenderer implements GLSurfaceView.Renderer{ // GLSurfaceView.Renderer // onDrawFrame, onSurfaceChanged, onSurfaceCreated // // private Context context; // private float xamera, yCamera, zCamera; // private float xLightPosition, yLightPosition, zLightPosition; // private float[] modelMatrix= new float[16]; private float[] viewMatrix= new float[16]; private float[] modelViewMatrix= new float[16];; private float[] projectionMatrix= new float[16];; private float[] modelViewProjectionMatrix= new float[16];; // private final FloatBuffer vertexBuffer; private final FloatBuffer vertexBuffer1; private final FloatBuffer vertexBuffer2; private final FloatBuffer vertexBuffer3; private final FloatBuffer vertexBuffer4; // private FloatBuffer normalBuffer; private FloatBuffer normalBuffer1; // private final FloatBuffer colorBuffer; private final FloatBuffer colorBuffer1; private final FloatBuffer colorBuffer2; private final FloatBuffer colorBuffer4; // private Shader mShader; private Shader mShader1; private Shader mShader2; private Shader mShader3; private Shader mShader4; // public MyClassRenderer(Context context) { // // this.context=context; // xLightPosition=0.3f; yLightPosition=0.2f; zLightPosition=0.5f; // // Matrix.setIdentityM(modelMatrix, 0); // xamera=0.0f; yCamera=0.0f; zCamera=3.0f; // // Y // Matrix.setLookAtM( viewMatrix, 0, xamera, yCamera, zCamera, 0, 0, 0, 0, 1, 0); // // - Matrix.multiplyMM(modelViewMatrix, 0, viewMatrix, 0, modelMatrix, 0); // 1 float x1=-1; float y1=-0.35f; float z1=0.0f; // 2 float x2=-1; float y2=-1.5f; float z2=0.0f; // 3 float x3=1; float y3=-0.35f; float z3=0.0f; // 4 
float x4=1; float y4=-1.5f; float z4=0.0f; // float vertexArray [] = {x1,y1,z1, x2,y2,z2, x3,y3,z3, x4,y4,z4}; //coordinates for sky float vertexArray1 [] = {-1.0f,1.5f,0.0f, -1.0f,-0.35f,0.0f, 1.0f,1.5f,0.0f, 1.0f,-0.35f,0}; //coordinates for main sail float vertexArray2 [] = {-0.5f,-0.45f,0.4f, 0.0f,-0.45f,0.4f, 0.0f,0.5f,0.4f}; //coordinates for small sail float vertexArray3 [] = {0.05f,-0.45f,0.4f, 0.22f,-0.5f,0.4f, 0.0f,0.25f,0.4f }; //coordinates for boat float vertexArray4 [] = {-0.5f,-0.5f,0.4f, -0.5f,-0.6f,0.4f, 0.22f,-0.5f,0.4f, 0.18f,-0.6f,0.4f}; // ByteBuffer bvertex = ByteBuffer.allocateDirect(vertexArray.length*4); bvertex.order(ByteOrder.nativeOrder()); vertexBuffer = bvertex.asFloatBuffer(); vertexBuffer.position(0); ByteBuffer bvertex1 = ByteBuffer.allocateDirect(vertexArray1.length*4); bvertex1.order(ByteOrder.nativeOrder()); vertexBuffer1 = bvertex1.asFloatBuffer(); vertexBuffer1.position(0); ByteBuffer bvertex2 = ByteBuffer.allocateDirect(vertexArray2.length*4); bvertex2.order(ByteOrder.nativeOrder()); vertexBuffer2 = bvertex2.asFloatBuffer(); vertexBuffer2.position(0); ByteBuffer bvertex3 = ByteBuffer.allocateDirect(vertexArray3.length*4); bvertex3.order(ByteOrder.nativeOrder()); vertexBuffer3 = bvertex3.asFloatBuffer(); vertexBuffer3.position(0); ByteBuffer bvertex4 = ByteBuffer.allocateDirect(vertexArray4.length*4); bvertex4.order(ByteOrder.nativeOrder()); vertexBuffer4 = bvertex4.asFloatBuffer(); vertexBuffer4.position(0); // vertexBuffer.put(vertexArray); vertexBuffer.position(0); vertexBuffer1.put(vertexArray1); vertexBuffer1.position(0); vertexBuffer2.put(vertexArray2); vertexBuffer2.position(0); vertexBuffer3.put(vertexArray3); vertexBuffer3.position(0); vertexBuffer4.put(vertexArray4); vertexBuffer4.position(0); // // Z float nx=0; float ny=0; float nz=1; // , // 4 float normalArray [] ={nx, ny, nz, nx, ny, nz, nx, ny, nz, nx, ny, nz}; float normalArray1 [] ={0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1}; // ByteBuffer bnormal = 
ByteBuffer.allocateDirect(normalArray.length*4); bnormal.order(ByteOrder.nativeOrder()); normalBuffer = bnormal.asFloatBuffer(); normalBuffer.position(0); // normalBuffer.put(normalArray); normalBuffer.position(0); // , float red1=0; float green1=1; float blue1=1; // float red2=0; float green2=0; float blue2=1; // float red3=0; float green3=1; float blue3=1; // float red4=0; float green4=0; float blue4=1; // // () float colorArray [] = { red1, green1, blue1, 1, red2, green2, blue2, 1, red3, green3, blue3, 1, red4, green4, blue4, 1, }; float colorArray1[] = { 0.2f, 0.2f, 0.8f, 1, 0.5f, 0.5f, 1, 1, 0.2f, 0.2f, 0.8f, 1, 0.5f, 0.5f, 1, 1, }; float colorArray2[] = { 1, 0.1f, 0.1f, 1, 1, 1, 1, 1, 1, 0.1f, 0.1f, 1, }; float colorArray4[] = { 1, 1, 1, 1, 0.2f, 0.2f, 0.2f, 1, 1, 1, 1, 1, 0.2f, 0.2f, 0.2f, 1, }; // ByteBuffer bcolor = ByteBuffer.allocateDirect(colorArray.length*4); bcolor.order(ByteOrder.nativeOrder()); colorBuffer = bcolor.asFloatBuffer(); colorBuffer.position(0); // colorBuffer.put(colorArray); colorBuffer.position(0); ByteBuffer bcolor1 = ByteBuffer.allocateDirect(colorArray1.length*4); bcolor1.order(ByteOrder.nativeOrder()); colorBuffer1 = bcolor1.asFloatBuffer(); colorBuffer1.position(0); colorBuffer1.put(colorArray1); colorBuffer1.position(0); ByteBuffer bcolor2 = ByteBuffer.allocateDirect(colorArray1.length*4); bcolor2.order(ByteOrder.nativeOrder()); colorBuffer2 = bcolor2.asFloatBuffer(); colorBuffer2.position(0); colorBuffer2.put(colorArray2); colorBuffer2.position(0); ByteBuffer bcolor4 = ByteBuffer.allocateDirect(colorArray4.length*4); bcolor4.order(ByteOrder.nativeOrder()); colorBuffer4 = bcolor4.asFloatBuffer(); colorBuffer4.position(0); colorBuffer4.put(colorArray4); colorBuffer4.position(0); }// //, // -- public void onSurfaceChanged(GL10 unused, int width, int height) { // glViewport GLES20.glViewport(0, 0, width, height); float ratio = (float) width / height; float k=0.055f; float left = -k*ratio; float right = k*ratio; float bottom = -k; 
float top = k; float near = 0.1f; float far = 10.0f; // Matrix.frustumM(projectionMatrix, 0, left, right, bottom, top, near, far); // , // -- Matrix.multiplyMM( modelViewProjectionMatrix, 0, projectionMatrix, 0, modelViewMatrix, 0); } //, // public void onSurfaceCreated(GL10 unused, EGLConfig config) { // GLES20.glEnable(GLES20.GL_DEPTH_TEST); // GLES20.glEnable(GLES20.GL_CULL_FACE); // , GLES20.glHint( GLES20.GL_GENERATE_MIPMAP_HINT, GLES20.GL_NICEST); // String vertexShaderCode= "uniform mat4 u_modelViewProjectionMatrix;"+ "attribute vec3 a_vertex;"+ "attribute vec3 a_normal;"+ "attribute vec4 a_color;"+ "varying vec3 v_vertex;"+ "varying vec3 v_normal;"+ "varying vec4 v_color;"+ "void main() {"+ "v_vertex=a_vertex;"+ "vec3 n_normal=normalize(a_normal);"+ "v_normal=n_normal;"+ "v_color=a_color;"+ "gl_Position = u_modelViewProjectionMatrix * vec4(a_vertex,1.0);"+ "}"; // String fragmentShaderCode= "precision mediump float;"+ "uniform vec3 u_camera;"+ "uniform vec3 u_lightPosition;"+ "varying vec3 v_vertex;"+ "varying vec3 v_normal;"+ "varying vec4 v_color;"+ "void main() {"+ "vec3 n_normal=normalize(v_normal);"+ "vec3 lightvector = normalize(u_lightPosition - v_vertex);"+ "vec3 lookvector = normalize(u_camera - v_vertex);"+ "float ambient=0.2;"+ "float k_diffuse=0.3;"+ "float k_specular=0.5;"+ "float diffuse = k_diffuse * max(dot(n_normal, lightvector), 0.0);"+ "vec3 reflectvector = reflect(-lightvector, n_normal);"+ "float specular = k_specular * pow( max(dot(lookvector,reflectvector),0.0), 40.0 );"+ "vec4 one=vec4(1.0,1.0,1.0,1.0);"+ "vec4 lightColor = (ambient+diffuse+specular)*one;"+ "gl_FragColor = mix(lightColor,v_color,0.6);"+ "}"; // mShader=new Shader(vertexShaderCode, fragmentShaderCode); // a_vertex mShader.linkVertexBuffer(vertexBuffer); // a_normal mShader.linkNormalBuffer(normalBuffer); // a_color mShader.linkColorBuffer(colorBuffer); // , // mShader1 = new Shader(vertexShaderCode, fragmentShaderCode); mShader1.linkVertexBuffer(vertexBuffer1); 
        mShader1.linkNormalBuffer(normalBuffer);
        mShader1.linkColorBuffer(colorBuffer1);

        mShader2 = new Shader(vertexShaderCode, fragmentShaderCode);
        mShader2.linkVertexBuffer(vertexBuffer2);
        mShader2.linkNormalBuffer(normalBuffer);
        mShader2.linkColorBuffer(colorBuffer2);

        mShader3 = new Shader(vertexShaderCode, fragmentShaderCode);
        mShader3.linkVertexBuffer(vertexBuffer3);
        mShader3.linkNormalBuffer(normalBuffer);
        mShader3.linkColorBuffer(colorBuffer2);

        mShader4 = new Shader(vertexShaderCode, fragmentShaderCode);
        mShader4.linkVertexBuffer(vertexBuffer4);
        mShader4.linkNormalBuffer(normalBuffer);
        mShader4.linkColorBuffer(colorBuffer4);
    }

    // called to draw each frame
    public void onDrawFrame(GL10 unused) {
        // clear the color and depth buffers
        GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT | GLES20.GL_DEPTH_BUFFER_BIT);

        // for each object: select its program, bind its buffers,
        // pass the model-view-projection matrix, camera and light position, then draw
        mShader.useProgram();
        mShader.linkVertexBuffer(vertexBuffer);
        mShader.linkColorBuffer(colorBuffer);
        mShader.linkModelViewProjectionMatrix(modelViewProjectionMatrix);
        mShader.linkCamera(xCamera, yCamera, zCamera);
        mShader.linkLightSource(xLightPosition, yLightPosition, zLightPosition);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

        mShader1.useProgram();
        mShader1.linkVertexBuffer(vertexBuffer1);
        mShader1.linkColorBuffer(colorBuffer1);
        mShader1.linkModelViewProjectionMatrix(modelViewProjectionMatrix);
        mShader1.linkCamera(xCamera, yCamera, zCamera);
        mShader1.linkLightSource(xLightPosition, yLightPosition, zLightPosition);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

        mShader2.useProgram();
        mShader2.linkVertexBuffer(vertexBuffer2);
        mShader2.linkColorBuffer(colorBuffer2);
        mShader2.linkModelViewProjectionMatrix(modelViewProjectionMatrix);
        mShader2.linkCamera(xCamera, yCamera, zCamera);
        mShader2.linkLightSource(xLightPosition, yLightPosition, zLightPosition);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLES, 0, 3);

        mShader3.useProgram();
        mShader3.linkVertexBuffer(vertexBuffer3);
        mShader3.linkColorBuffer(colorBuffer2);
        mShader3.linkModelViewProjectionMatrix(modelViewProjectionMatrix);
        mShader3.linkCamera(xCamera, yCamera, zCamera);
        mShader3.linkLightSource(xLightPosition, yLightPosition, zLightPosition);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLES, 0, 3);

        mShader4.useProgram();
        mShader4.linkVertexBuffer(vertexBuffer4);
        mShader4.linkColorBuffer(colorBuffer4);
        mShader4.linkModelViewProjectionMatrix(modelViewProjectionMatrix);
        mShader4.linkCamera(xCamera, yCamera, zCamera);
        mShader4.linkLightSource(xLightPosition, yLightPosition, zLightPosition);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);
    }
}

Shader.java

package com.adc2017gmail.opengleslighting;

import android.opengl.GLES20;
import java.nio.FloatBuffer;

public class Shader {
    // handle of the linked shader program
    private int program_Handle;

    // the constructor compiles both shaders and links them into a program;
    // the resulting handle is stored in program_Handle
    public Shader(String vertexShaderCode, String fragmentShaderCode){
        createProgram(vertexShaderCode, fragmentShaderCode);
    }

    // creates the shader objects, compiles them and links the program
    private void createProgram(String vertexShaderCode, String fragmentShaderCode){
        // create the vertex shader object
        int vertexShader_Handle =
            GLES20.glCreateShader(GLES20.GL_VERTEX_SHADER);
        // attach the source code to the vertex shader
        GLES20.glShaderSource(vertexShader_Handle, vertexShaderCode);
        // compile the vertex shader
        GLES20.glCompileShader(vertexShader_Handle);
        // create the fragment shader object
        int fragmentShader_Handle =
            GLES20.glCreateShader(GLES20.GL_FRAGMENT_SHADER);
        // attach the source code to the fragment shader
        GLES20.glShaderSource(fragmentShader_Handle, fragmentShaderCode);
        // compile the fragment shader
        GLES20.glCompileShader(fragmentShader_Handle);
        // create the program object
        program_Handle = GLES20.glCreateProgram();
        // attach the vertex shader to the program
        GLES20.glAttachShader(program_Handle, vertexShader_Handle);
        // attach the fragment shader to the program
        GLES20.glAttachShader(program_Handle, fragmentShader_Handle);
        // link the program
        GLES20.glLinkProgram(program_Handle);
    }

    // binds the vertex coordinate buffer vertexBuffer
    // to the a_vertex attribute
    public void linkVertexBuffer(FloatBuffer vertexBuffer){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the a_vertex attribute
        int a_vertex_Handle = GLES20.glGetAttribLocation(program_Handle, "a_vertex");
        // enable the a_vertex attribute array
        GLES20.glEnableVertexAttribArray(a_vertex_Handle);
        // point a_vertex at vertexBuffer (3 floats per vertex)
        GLES20.glVertexAttribPointer(
            a_vertex_Handle, 3, GLES20.GL_FLOAT, false, 0, vertexBuffer);
    }

    // binds the normal buffer normalBuffer
    // to the a_normal attribute
    public void linkNormalBuffer(FloatBuffer normalBuffer){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the a_normal attribute
        int a_normal_Handle = GLES20.glGetAttribLocation(program_Handle, "a_normal");
        // enable the a_normal attribute array
        GLES20.glEnableVertexAttribArray(a_normal_Handle);
        // point a_normal at normalBuffer (3 floats per vertex)
        GLES20.glVertexAttribPointer(
            a_normal_Handle, 3, GLES20.GL_FLOAT, false, 0, normalBuffer);
    }

    // binds the color buffer colorBuffer
    // to the a_color attribute
    public void linkColorBuffer(FloatBuffer colorBuffer){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the a_color attribute
        int a_color_Handle = GLES20.glGetAttribLocation(program_Handle, "a_color");
        // enable the a_color attribute array
        GLES20.glEnableVertexAttribArray(a_color_Handle);
        // point a_color at colorBuffer (4 floats per vertex)
        GLES20.glVertexAttribPointer(
            a_color_Handle, 4, GLES20.GL_FLOAT, false, 0, colorBuffer);
    }

    // passes the model-view-projection matrix modelViewProjectionMatrix
    // to the u_modelViewProjectionMatrix uniform
    public void linkModelViewProjectionMatrix(float [] modelViewProjectionMatrix){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the u_modelViewProjectionMatrix uniform
        int u_modelViewProjectionMatrix_Handle =
            GLES20.glGetUniformLocation(program_Handle, "u_modelViewProjectionMatrix");
        // copy modelViewProjectionMatrix
        // into the u_modelViewProjectionMatrix uniform
        GLES20.glUniformMatrix4fv(
            u_modelViewProjectionMatrix_Handle, 1, false, modelViewProjectionMatrix, 0);
    }

    // passes the camera position to the u_camera uniform
    public void linkCamera (float xCamera, float yCamera, float zCamera){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the u_camera uniform
        int u_camera_Handle=GLES20.glGetUniformLocation(program_Handle, "u_camera");
        // copy the camera coordinates into u_camera
        GLES20.glUniform3f(u_camera_Handle, xCamera, yCamera, zCamera);
    }

    // passes the light source position
    // to the u_lightPosition uniform
    public void linkLightSource (float xLightPosition, float yLightPosition, float zLightPosition){
        // make this program current
        GLES20.glUseProgram(program_Handle);
        // get the location of the u_lightPosition uniform
        int u_lightPosition_Handle=GLES20.glGetUniformLocation(program_Handle, "u_lightPosition");
        // copy the light source coordinates into u_lightPosition
        GLES20.glUniform3f(u_lightPosition_Handle, xLightPosition, yLightPosition, zLightPosition);
    }

    // makes this shader program the current one
    public void useProgram(){
        GLES20.glUseProgram(program_Handle);
    }
}

Sources:

http://andmonahov.blogspot.com/2012/10/opengl-es-20.html

http://www.learnopengles.com/android-lesson-two-ambient-and-diffuse-lighting/

http://www.learnopengles.com/tag/triangle-strips/

http://eax.me/opengl-lighting/

http://www.john-chapman.net/content.php?id=3

Source: https://habr.com/ru/post/306928/
