How many neurons does it take to recognize a drawbridge being lowered?

The story began when I moved to Decembrists' Island (Ostrov Dekabristov) in St. Petersburg. At night, when the bridges are raised, this island, together with Vasilyevsky Island, is completely cut off from the mainland. At the same time, the bridges are often lowered ahead of schedule, sometimes an hour earlier than the published timetable, but there is no real-time information about this anywhere.

After being "late" for the bridges a second time, I started thinking about where to get information on bridges being lowered early. One of the options that came to mind was public webcams. Armed with this data and with residual knowledge from the ML specialization by MIPT and Yandex, I decided to try to solve the problem head-on.

First, the cameras

Webcams are scarce in St. Petersburg these days. I managed to find only two live cameras aimed at bridges: one from vpiter.com and one from RSHU. A few years ago there were also cameras from Skylink, but they are no longer available. On the other hand, even information about the Palace Bridge alone, from vpiter.com, can be useful. And it turned out to be more useful than I expected: a paramedic friend told me that his ambulance crew, thanks in part to real-time information about the bridges, saved two more Petersburgers and one Swede in a week.

The cameras also tend to drop out and serve the video stream in the nasty FLV format, but all of this is easily handled with off-the-shelf building blocks. Literally two lines of shell script turn the video stream into a set of frames for classification, one every 5 seconds:

    while true; do
        curl --connect-timeout $t --speed-limit $x --speed-time $y http://url/to | \
            ffmpeg -loglevel warning -r 10 -i /dev/stdin -vsync 1 -r 0.2 -f image2 $(date +%s).%06d.jpeg
    done

True, there is no classification yet. First, labeled data has to be fed into the "sausage machine", so leave the script running at night for a week and, optionally, follow the #PeteRT mantra while checking that the pictures are being downloaded.
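The file names written by the capture loop carry enough information to estimate when each frame was taken, which helps when labeling. The sketch below assumes the name layout `<start time>.<frame number>.jpeg` from the script above, that the shell expands `$(date +%s)` once per ffmpeg run, and that ffmpeg numbers frames from 1. The helper name `frame_time` is hypothetical, and the result is only approximate, since stream buffering and the forced input rate can shift the real timing.

```python
import re
from datetime import datetime, timezone

# Assumed layout: <unix time when ffmpeg started>.<frame number, 6+ digits>.jpeg
FRAME_NAME = re.compile(r"^(\d+)\.(\d{6,})\.jpeg$")
SECONDS_PER_FRAME = 5  # follows from the output rate `-r 0.2`


def frame_time(fname):
    """Approximate capture time (UTC) of a frame, or None if the name does not match."""
    m = FRAME_NAME.match(fname)
    if m is None:
        return None
    start, index = int(m.group(1)), int(m.group(2))
    # Frame 1 is taken at the start time, frame 2 five seconds later, and so on.
    seconds = start + (index - 1) * SECONDS_PER_FRAME
    return datetime.fromtimestamp(seconds, tz=timezone.utc)
```

Sorting frames by this estimate gives a single timeline per night, even if the stream was restarted several times.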

    x = io.imread(fname)

Second, image processing

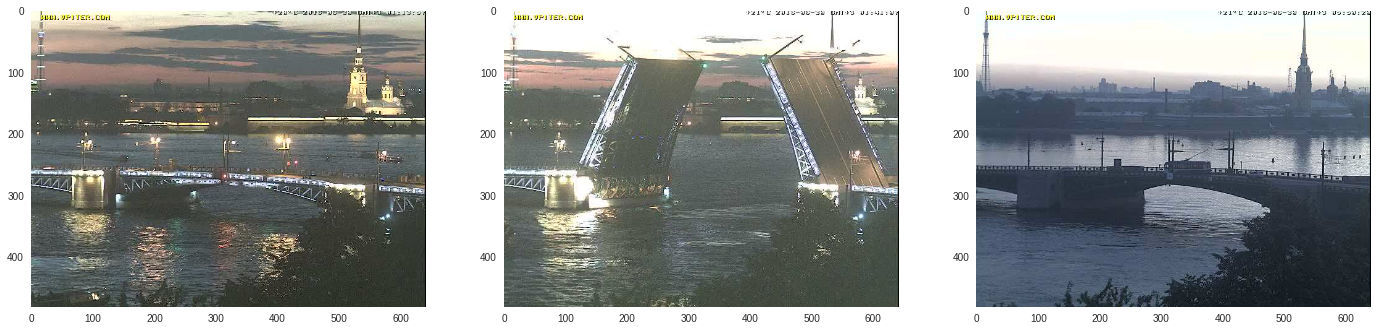

Anyway, having sorted the photos into the folders UP, MOVING and DOWN by hand and by bisection, I got a labeled dataset. Andrew Ng offered a good heuristic in his course: "if you can tell object A from object B in the picture, then a neural network has a chance too." Let's call this rule of thumb the naive Ng test.

First of all, it seems reasonable to crop the image so that it contains only the draw span of the bridge. The TV tower is beautiful, but it does not look useful in practice. Let's write the first line of code that has at least something to do with image processing:
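Sorting by bisection works because frames are ordered in time and the bridge changes state only a few times per night. A minimal sketch of the idea, assuming the frames in a range are sorted by time and contain exactly one transition; the function name `first_true` and the `predicate` callback (in practice, a human looking at the picture) are hypothetical:

```python
def first_true(frames, predicate):
    """Index of the first frame for which predicate(frame) is True.

    Assumes predicate is False for a prefix of `frames` and True for the rest.
    Returns len(frames) if it is never True.
    """
    lo, hi = 0, len(frames)
    while lo < hi:
        mid = (lo + hi) // 2
        if predicate(frames[mid]):
            hi = mid          # the transition is at mid or earlier
        else:
            lo = mid + 1      # the transition is after mid
    return lo
```

With a couple of thousand frames per night, this takes about eleven looks at a picture per transition instead of thousands; everything before the returned index goes into one folder and everything after it into another.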

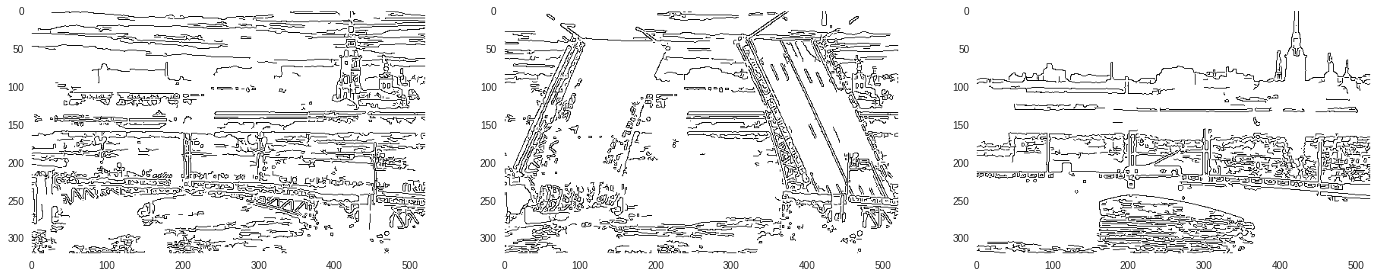

    lambda x: x[40:360, 110:630]

I had vaguely heard that real experts take OpenCV, extract features and get decent quality. But when I started reading the OpenCV documentation, I got sad: I realized rather quickly that I would not get to grips with OpenCV within my self-imposed limit of "a prototype in a couple of evenings". However, skimage, the library I was already using to read JPEGs, also turns up something for the word "feature". What distinguishes a raised bridge from a lowered one? Its outline against the sky. So let's take skimage.feature.canny and make a note to read up, after the prototype, on how the Canny operator actually works.

    lambda x: feature.canny(color.rgb2gray(x[40:360, 110:630]))

A trolleybus riding over hatched water looks rather pretty. Perhaps mkot, missing this kind of beauty, regrets having moved away from St. Petersburg, but this picture does not pass the naive Ng test: it looks visually noisy. We will have to read the documentation beyond the function's first argument. It seems logical that if there are too many edges, the image can be blurred with the Gaussian filter the function offers. The default sigma is 1; let's try increasing it.

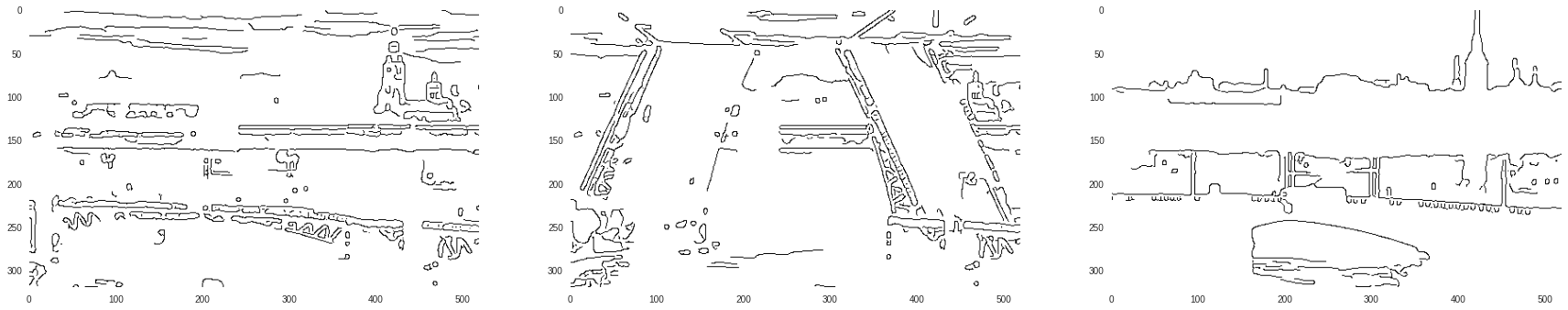

    lambda x: feature.canny(color.rgb2gray(x[40:360, 110:630]), sigma=2)

This looks more like data than like pencil strokes. But there is another problem: this picture has 166,400 pixels (320 x 520), while only a couple of thousand frames are collected per night, because disk space is not infinite. If these binary pixels are taken as is, the classifier will surely overfit. Let's apply the brute-force approach once more and shrink the image by a factor of 20 along each axis.

    lambda x: transform.downscale_local_mean(feature.canny(color.rgb2gray(x[40:360, 110:630]), sigma=2), (20, 20))

It still looks like a bridge, but the image is now only 16x26, that is, 416 pixels. With several thousand frames, training and cross-validating on this many pseudo-features is no longer so scary. Now it would be nice to choose the topology of the neural network.

Sergei Mikhailovich Dobrovolsky, who lectured us on mathematical analysis, once joked that a single neuron is enough to predict the outcome of a US presidential election. A bridge does not seem to be a much more complicated construction, so I tried training a logistic regression model. As expected, the bridge turned out to be not much more complicated than an election, and the model gives quite decent quality, with two or three nines on all sorts of metrics. The result does look suspicious, though (the data surely suffers badly from multicollinearity).

A nice side effect is that the model predicts the probability of a class rather than the class itself. This makes it possible to draw a fun real-time graph of how the raising of the Palace Bridge looks to the robot's "neuron".
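Put together, the steps above (crop, Canny edges, 20x downscale, logistic regression) might look like the sketch below. It assumes scikit-image and scikit-learn; the post does not say which implementation of logistic regression was used, so `LogisticRegression` here is an assumption. The frames are synthetic stand-ins generated by the hypothetical `fake_frame` helper, not real camera data, and the two-class setup (lowered vs. raised) is a simplification.

```python
import numpy as np
from skimage import color, feature, transform
from sklearn.linear_model import LogisticRegression


def bridge_features(x):
    """Crop, edge-detect and downscale one RGB frame into 416 features."""
    edges = feature.canny(color.rgb2gray(x[40:360, 110:630]), sigma=2)
    # Cast the boolean edge map to float before averaging 20x20 blocks.
    small = transform.downscale_local_mean(edges.astype(float), (20, 20))
    return small.ravel()


def fake_frame(raised, rng):
    """Synthetic frame (NOT real data): a dark bar on a light, slightly noisy sky."""
    img = 0.9 + rng.normal(0, 0.01, (360, 640, 3))
    shift = int(rng.integers(-10, 11))  # small jitter so frames differ
    if raised:
        img[100:300, 300 + shift:320 + shift] = 0.1   # span pointing up
    else:
        img[200 + shift:220 + shift, 150:600] = 0.1   # span lying flat
    return np.clip(img, 0, 1)


rng = np.random.default_rng(0)
labels = np.array([0, 1] * 20)  # 0 = lowered, 1 = raised
X = np.array([bridge_features(fake_frame(bool(y), rng)) for y in labels])

model = LogisticRegression(max_iter=1000).fit(X, labels)

# predict_proba returns one column per class; column 1 is P(raised).
p_raised = model.predict_proba(X[:1])[0, 1]
```

Plotting `p_raised` for consecutive frames against time would give the kind of graph described above.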

What remains is to bolt push notifications onto this contraption, along with some kind of interface that lets you look at the bridge with your own eyes in case the classifier has failed. The first turned out to be easiest to do with a Telegram bot, which sends notifications to the @SpbBridge channel. The second is a web front end with a live broadcast, cobbled together from hacks, Bootstrap and jQuery.
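A stream of per-frame probabilities still has to be turned into occasional notifications. The post does not describe how this is done; one possible approach, sketched here purely as an assumption, is to use two thresholds so that a probability hovering around the middle does not trigger repeated alerts. The function name `state_changes` and the threshold values are hypothetical, and actually posting to the channel is left out.

```python
def state_changes(probabilities, up=0.9, down=0.1, initial="DOWN"):
    """Yield (index, new_state) each time the bridge state flips.

    `probabilities` holds P(raised) per frame. The gap between the
    hypothetical `up` and `down` thresholds acts as hysteresis.
    """
    state = initial
    for i, p in enumerate(probabilities):
        if state == "DOWN" and p >= up:
            state = "UP"
            yield i, state
        elif state == "UP" and p <= down:
            state = "DOWN"
            yield i, state
```

Each yielded pair would correspond to one message to subscribers; frames in between produce nothing.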

Why did I write all this?

I wanted to remind you that every problem has a simple, understandable, wrong solution, which nevertheless can be practical.

Also, while I was writing this text, the rain seems to have washed the camera that looked at the Palace Bridge into the Neva, together with the vpiter.tv server, which the robot promptly reported.

I will be glad if, for the sake of failover, you want to share your webcam that looks at some drawbridge. Perhaps, for example, you work at St. Petersburg State Medical University "GMC".

PS: And here is a version with slightly more neurons, but with more automatic feature extraction.


Source: https://habr.com/ru/post/306798/
