Adding Cloudflare's dynamic TLS record size patch to Nginx

If you use Nginx to terminate TLS traffic, you can improve server response time with patches from Cloudflare. Details below.

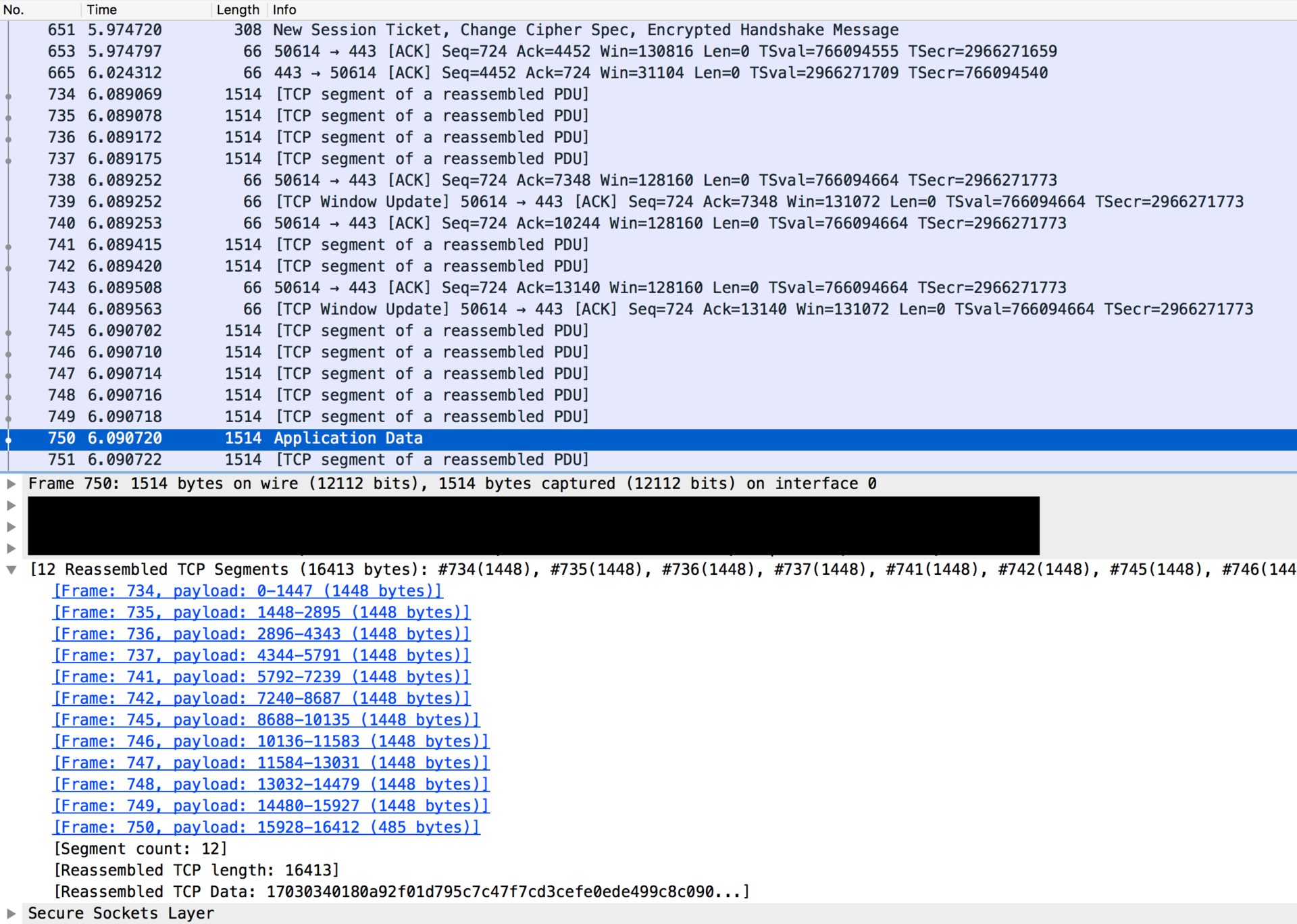

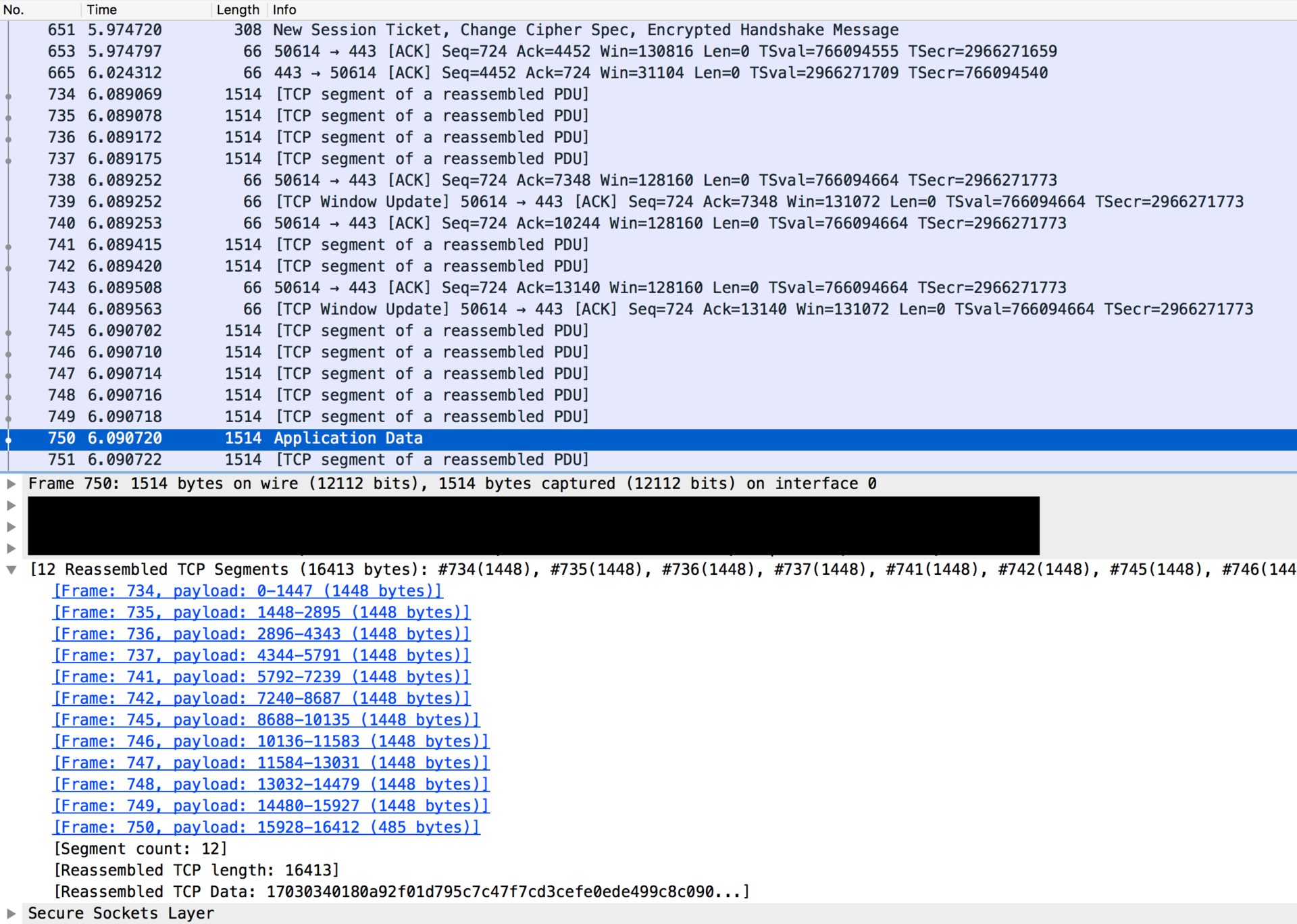

TLS and TCP

As you know, data on the Internet is transmitted using a multi-layer protocol stack. Here we are interested in how TCP and TLS interact. The main job of TCP is to deliver data reliably and in its original order. If a service uses TLS (for example, an HTTPS site), all of its encrypted TLS data is sent over TCP.

At the TCP level, immediately after the connection is established, the server can send no more than initcwnd packets (the initial congestion window: 3 packets on older systems, 10 on newer ones). The server then waits for acknowledgments (ACKs) from the client. As they arrive, the number of packets allowed in flight gradually grows, and the connection's throughput increases.
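
To make the ramp-up concrete, here is a minimal sketch of idealized slow start. It is not a model of any particular kernel. It assumes no packet loss, no receiver-window limit, no delayed ACKs and an MSS of 1460 bytes (a typical IPv4-over-Ethernet value). Under these assumptions the window doubles every round trip (RTT).

```python
# Idealized TCP slow start (assumptions: no loss, no receiver-window limit,
# no delayed ACKs, MSS of 1460 bytes). Not a model of any specific kernel.

MSS = 1460  # bytes of payload per TCP segment (assumed)


def slow_start(initcwnd: int, rtts: int) -> list[tuple[int, int, int]]:
    """Return (round trip, cwnd in segments, cumulative bytes sent) per RTT."""
    rows = []
    cwnd = initcwnd
    total = 0
    for rtt in range(1, rtts + 1):
        total += cwnd * MSS  # the whole window is sent during this round trip
        rows.append((rtt, cwnd, total))
        cwnd *= 2  # one extra segment per ACK, i.e. doubling per round trip
    return rows


if __name__ == "__main__":
    for initcwnd in (3, 10):
        print(f"initcwnd={initcwnd}")
        for rtt, cwnd, total in slow_start(initcwnd, 4):
            print(f"  RTT {rtt}: cwnd={cwnd:3d} segments, {total:6d} bytes so far")
```

With initcwnd = 10, the first round trip carries 14600 bytes. With initcwnd = 3 it carries only 4380 bytes.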

With plain HTTP this works well: every packet that arrives carries data the browser can use right away.

TLS problem

With TLS, Nginx writes data through a special buffer whose size is set by the ssl_buffer_size directive. This buffer determines the size of each TLS record. The browser (client) can use the data only after it has received the entire TLS record, because a record is decrypted and authenticated as a whole. The maximum (and Nginx's default) value of ssl_buffer_size is 16k.

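With an initial congestion window of 10 packets, the first flight carries only about 14 KB (10 packets of roughly 1460 bytes each). That is less than one 16 KB TLS record. The client cannot decrypt the first record until the rest of it arrives after an extra round trip, which delays useful content.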
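
The arithmetic above can be checked with a small sketch. It assumes an MSS of 1460 bytes and initcwnd = 10, and for simplicity it ignores TLS framing overhead. It compares the first-flight budget with records of several sizes.

```python
import math

# Assumptions (not from the article): MSS = 1460 bytes, initcwnd = 10,
# TLS framing overhead ignored for simplicity.
MSS = 1460
INITCWND = 10


def first_flight_bytes(initcwnd: int = INITCWND, mss: int = MSS) -> int:
    """Bytes the server may send before waiting for the first ACK."""
    return initcwnd * mss


def segments_for_record(record_size: int, mss: int = MSS) -> int:
    """TCP segments needed to carry one TLS record of record_size bytes."""
    return math.ceil(record_size / mss)


if __name__ == "__main__":
    budget = first_flight_bytes()
    print(f"first flight: {budget} bytes")
    for kib in (16, 12, 8, 4):
        size = kib * 1024
        print(f"{kib:2d}k record: {segments_for_record(size):2d} segments, "
              f"fits in first flight: {size <= budget}")
```

Under these assumptions a 16k record needs 12 segments, but only 10 can be sent in the first flight. The last two segments must wait for the first ACK. A 12k record needs 9 segments and fits.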

If you use HTTP/2, also pay attention to the http2_chunk_size directive (8k by default). It sets the maximum size of the chunks into which the response body is sliced. HTTP/2 uses a single connection to the server, so many resources are transmitted over the same TCP connection at once, which makes such delays more likely.
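
As an illustration only (this is not Nginx code), the sketch below shows how a response body is cut into pieces of at most http2_chunk_size bytes. How Nginx orders chunks from different streams on the shared connection depends on HTTP/2 prioritization and is not modeled here.

```python
# Illustrative only: slicing a response body into pieces of at most
# chunk_size bytes, as http2_chunk_size does (8k by default). Not Nginx code;
# interleaving of chunks from different streams is not modeled.


def slice_body(body_len: int, chunk_size: int = 8 * 1024) -> list[int]:
    """Return the sizes of the chunks a body of body_len bytes is sliced into."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    sizes = []
    remaining = body_len
    while remaining > 0:
        piece = min(chunk_size, remaining)
        sizes.append(piece)
        remaining -= piece
    return sizes


if __name__ == "__main__":
    print(slice_body(20_000))  # a 20 000-byte response
    print(slice_body(5_000))   # a small response fits in one chunk
```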

What can be done?

The simplest fix is to reduce ssl_buffer_size, for example to 8k or 12k. This works in stock Nginx. However, efficiency drops when large amounts of data are sent. Every record carries its own header and authentication data, so smaller records mean more overhead.
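
The overhead side of the trade-off can be estimated with a rough sketch. It assumes TLS 1.2 with an AES-GCM cipher suite. In that case each record adds a 5-byte header, an 8-byte explicit nonce and a 16-byte authentication tag, 29 bytes in total. Other protocol versions and ciphers differ.

```python
import math

# Assumption (not from the article): TLS 1.2 with AES-GCM, where each record
# adds a 5-byte header, an 8-byte explicit nonce and a 16-byte tag.
PER_RECORD_OVERHEAD = 5 + 8 + 16


def tls_overhead(payload: int, record_size: int) -> tuple[int, int, float]:
    """Return (records, framing bytes, framing %) for sending payload bytes."""
    records = math.ceil(payload / record_size)
    overhead = records * PER_RECORD_OVERHEAD
    return records, overhead, 100 * overhead / payload


if __name__ == "__main__":
    payload = 1024 * 1024  # a 1 MiB response
    for kib in (16, 12, 8, 4):
        records, overhead, pct = tls_overhead(payload, kib * 1024)
        print(f"{kib:2d}k records: {records:3d} records, "
              f"{overhead:5d} bytes of framing ({pct:.2f}%)")
```

In this model the extra bytes on the wire stay modest. However, the number of records also grows in proportion, and so does the per-record processing work.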

So there is no single perfect value for ssl_buffer_size.

Dynamic TLS record size

This is where Cloudflare comes to the rescue with its own set of patches.

These patches add support for a dynamic TLS record size.

On a new connection, records are limited to the size of a single TCP packet. After a certain number of records have been sent, the size can grow to about three TCP packets, and then to the maximum (16k). If the connection goes idle, the process starts over. All parameters of this process are configurable.
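
The behavior described above can be summarized as a small state machine. The sketch below is a simplified model, not Cloudflare's C code. It assumes the size steps from the low to the high value after `threshold` records, and to the maximum after another `threshold` records. An idle gap longer than `timeout` resets the ramp. Sizes and the threshold come from the configuration shown in the Nginx setup section. The article does not state the timeout's unit, so the model uses whatever time unit the caller passes.

```python
from dataclasses import dataclass
from typing import Optional

# Simplified model of dynamic TLS record sizing, NOT Cloudflare's code.
# Assumption: lo -> hi after `threshold` records, hi -> max after another
# `threshold` records; an idle gap longer than `timeout` resets the ramp.


@dataclass
class DynamicRecordSizer:
    size_lo: int = 1369          # about 1 TCP packet
    size_hi: int = 4229          # about 3 TCP packets
    size_max: int = 16 * 1024    # ssl_buffer_size
    threshold: int = 20
    timeout: float = 10          # same unit as the `now` values passed in
    records_sent: int = 0
    last_write: Optional[float] = None

    def next_record_size(self, now: float) -> int:
        """Return the size limit for the record written at time `now`."""
        if self.last_write is not None and now - self.last_write > self.timeout:
            self.records_sent = 0  # connection was idle: start over
        if self.records_sent >= 2 * self.threshold:
            size = self.size_max
        elif self.records_sent >= self.threshold:
            size = self.size_hi
        else:
            size = self.size_lo
        self.records_sent += 1
        self.last_write = now
        return size


if __name__ == "__main__":
    sizer = DynamicRecordSizer(threshold=2)  # small threshold for short output
    print([sizer.next_record_size(now=t) for t in range(6)])
    print(sizer.next_record_size(now=100))  # after an idle gap
```

With `threshold=2`, six back-to-back records get the sizes 1369, 1369, 4229, 4229, 16384, 16384. A record written after a long pause drops back to 1369.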

Patch application

To get the new functionality, you need to apply the patches and build Nginx. I have already written about building Nginx with OpenSSL, so here I will focus on applying the patches.

To get the patches, go to their GitHub page.

The page contains changes to several files, so you need to extract a separate patch for each file. Each file's patch begins with a header line like this:

```diff
diff --git a/src/event/ngx_event_openssl.c b/src/event/ngx_event_openssl.c
```

This line shows which file the patch applies to (in this case, src/event/ngx_event_openssl.c).

Copy the text of that patch into a file (for example, openssl.c.patch) and put it next to the source file.
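
Splitting the diff by hand is error-prone, so here is a minimal helper sketch. It assumes you have saved the whole multi-file diff as one text file. The helper splits it at each `diff --git` header and names every output file after its target path. This naming scheme is our own choice. Any lines before the first header (such as an e-mail preamble) are skipped.

```python
import re
from pathlib import Path

# Split a combined git-style diff into one patch per file. Lines before the
# first "diff --git" header are ignored. Output names are our own convention.
HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$")


def split_diff(text: str) -> dict[str, str]:
    """Map each target path (the b/ side of the header) to its patch text."""
    sections: dict[str, list[str]] = {}
    current = None
    for line in text.splitlines(keepends=True):
        match = HEADER.match(line.rstrip("\r\n"))
        if match:
            current = match.group(2)
            sections[current] = []
        if current is not None:
            sections[current].append(line)
    return {path: "".join(lines) for path, lines in sections.items()}


def write_patches(text: str, out_dir: Path) -> list[Path]:
    """Write one patch file per section, e.g. src_event_ngx_event_openssl.c.patch."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for path, body in split_diff(text).items():
        target = out_dir / (path.replace("/", "_") + ".patch")
        target.write_text(body)
        written.append(target)
    return written
```

Each resulting file can then be applied with `patch` as described in this section. The headers use git's `a/` and `b/` prefixes, so the combined diff can usually also be applied in one step from the root of the Nginx source tree with `patch -p1 < combined.patch`, where `combined.patch` is a hypothetical file name.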

Apply the patch with the following command:

```shell
patch ngx_event_openssl.c < openssl.c.patch
```

Repeat this for every patch file (there should be four in total).

Then build Nginx as usual (I used version 1.11.2, and everything worked).

Nginx setup

The patch adds new directives. My configuration looks something like this:

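```nginx
# record size for a fresh connection, about 1 TCP packet
ssl_dyn_rec_size_lo 1369;
# increased record size, about 3 TCP packets
ssl_dyn_rec_size_hi 4229;
# number of records sent before the size is increased
ssl_dyn_rec_threshold 20;
# idle time after which the size drops back to ssl_dyn_rec_size_lo
ssl_dyn_rec_timeout 10;
# maximum record size, used once the ramp-up is complete
ssl_buffer_size 16k;
```

Details are in the original article on the Cloudflare blog.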

The general principle of optimizing TLS record size is explained in the book High Performance Browser Networking (HPBN).

That is all I have implemented so far, and we are testing it now. If you already have experience tuning these settings, please share it in the comments.

Source: https://habr.com/ru/post/306142/
