Yandex.Toloka. How people help teach machine intelligence

For the past year and a half, Yandex has used the Toloka platform to improve its search algorithms and machine intelligence technologies. It may seem surprising, but all modern machine learning technologies need human evaluations to one degree or another.

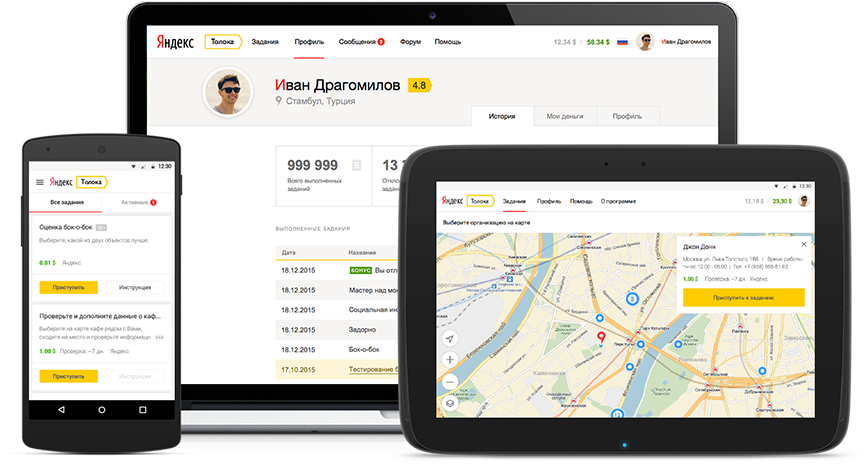

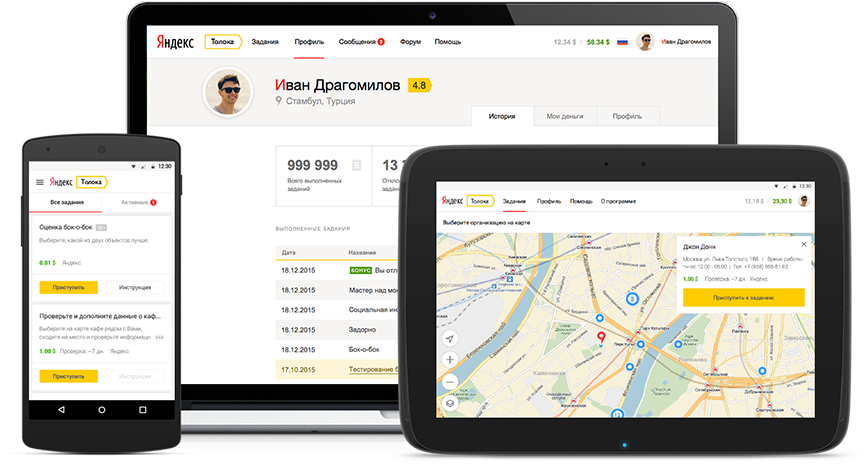

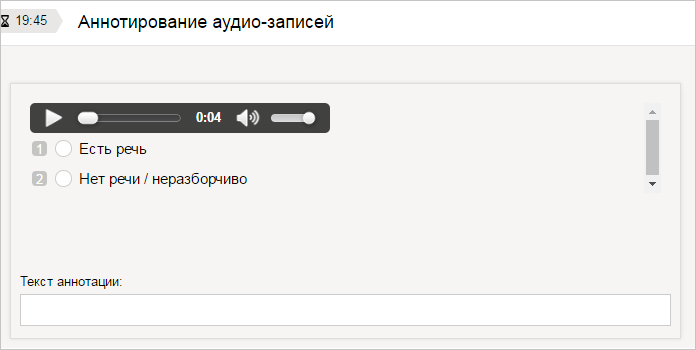

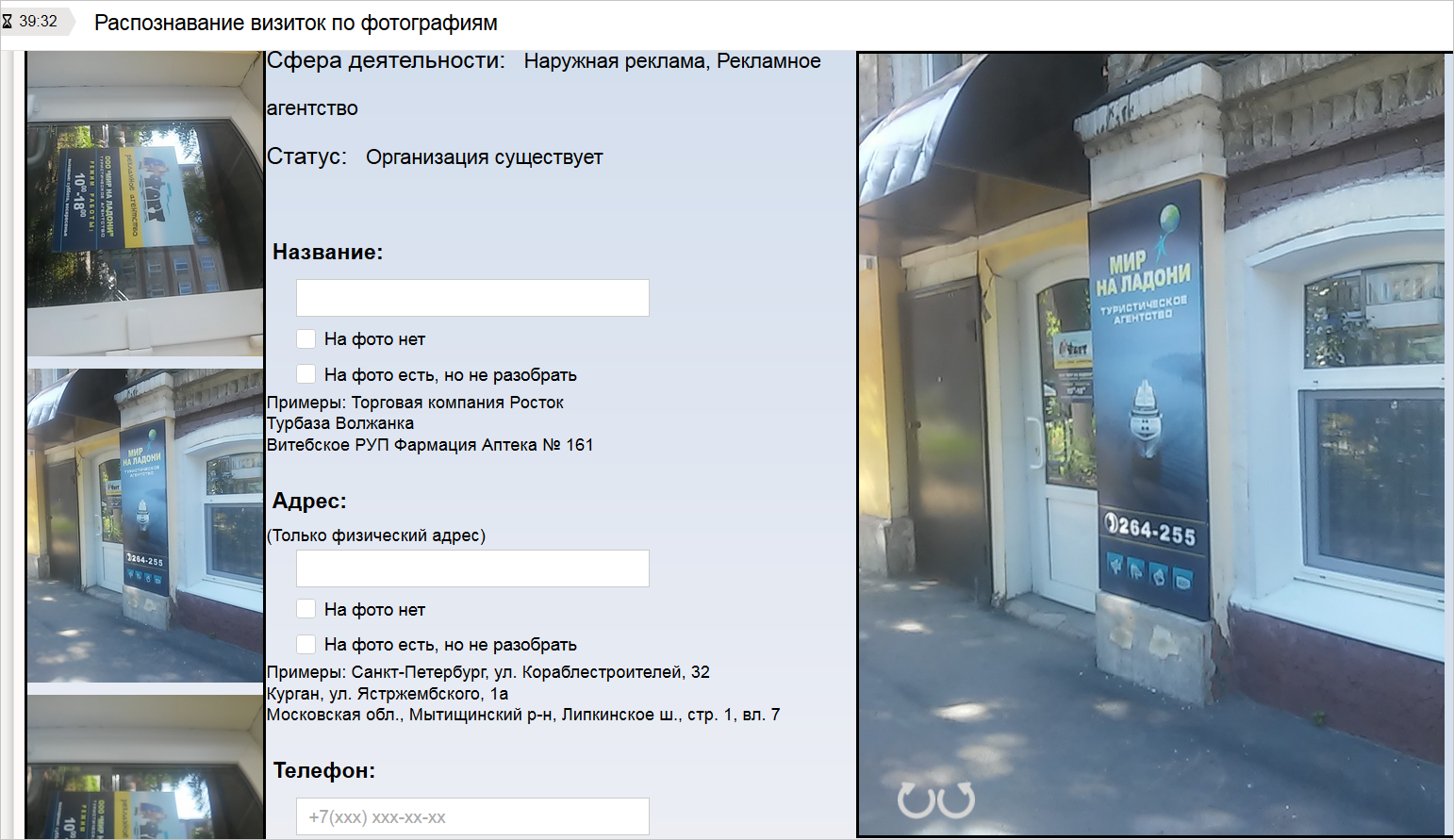

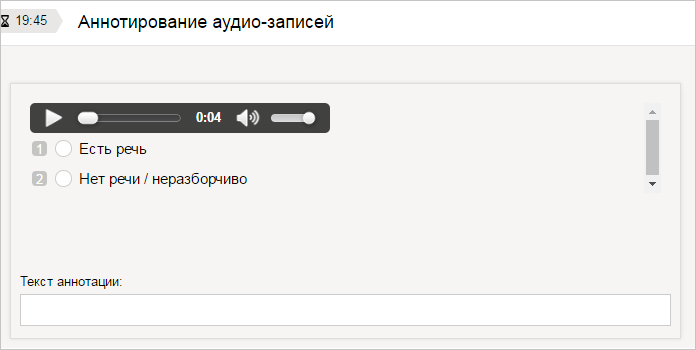

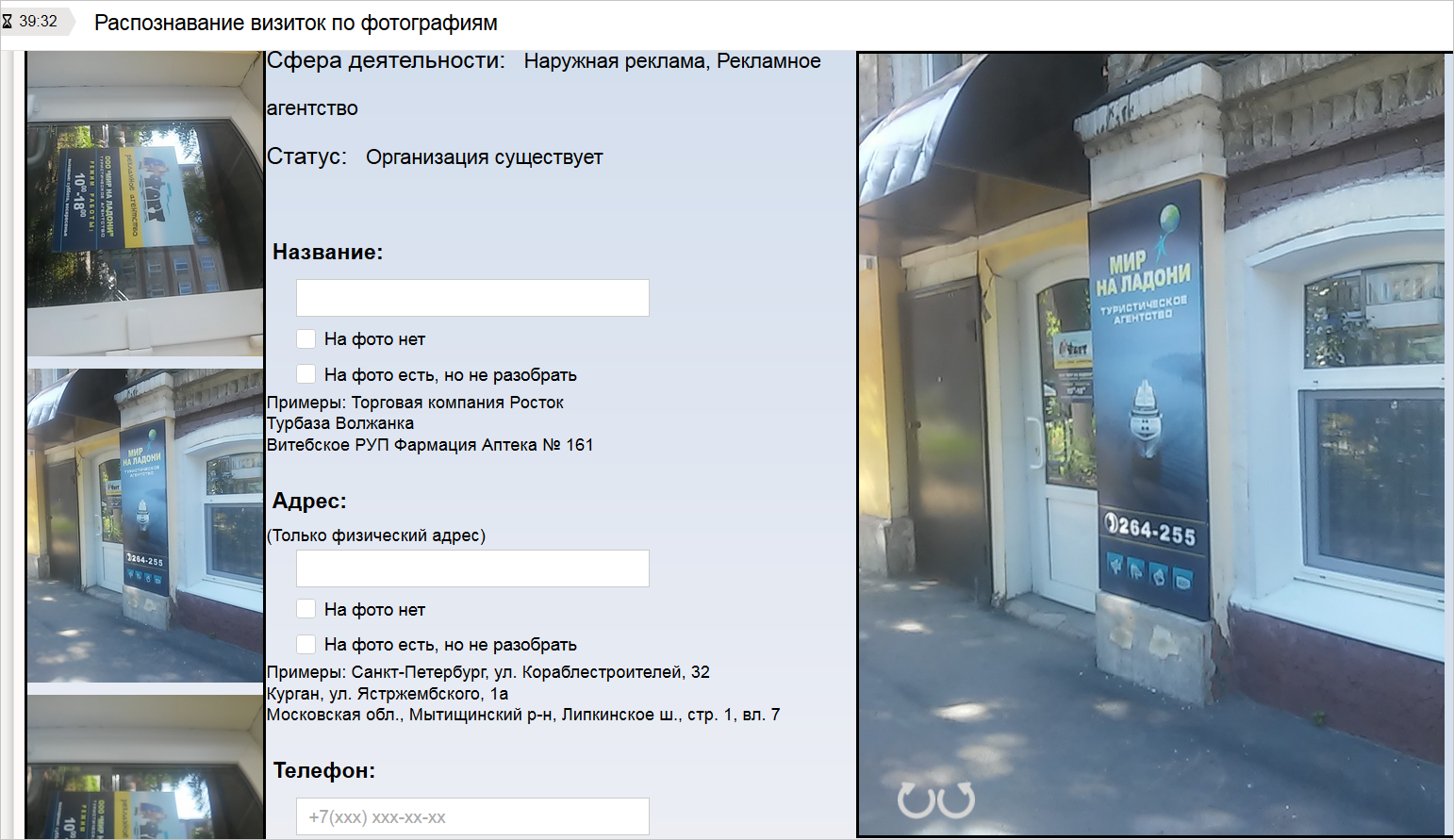

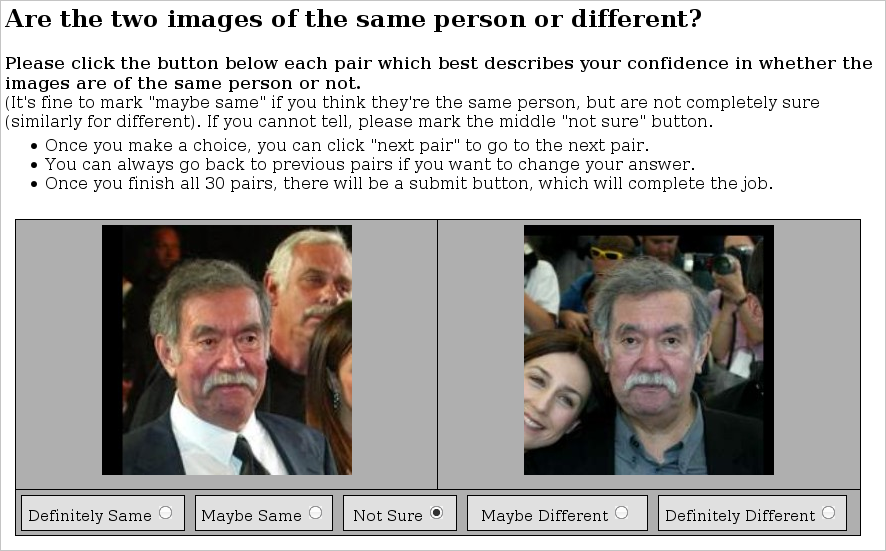

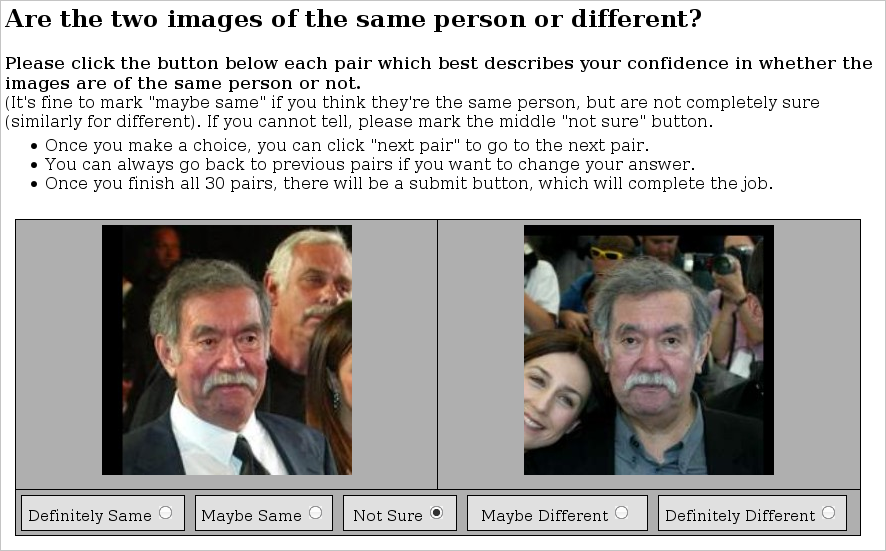

People judge how relevant documents are to search queries so that search ranking formulas can learn from these judgments; people transcribe audio recordings so that speech recognition algorithms can be tuned on this data; people sort images into categories so that a neural network trained on these examples can continue the work without people, and do it better than people.

All of this can be done in Toloka, a crowdsourcing platform that helps you find people to solve your task. Today Toloka enters beta and opens to all external customers. So it is time to describe the platform in detail, tell you about the unexpected difficulties we ran into while building it, share our observations, and explain how Toloka can help you.

At Yandex, tasks like these have traditionally been handled by trained assessors. Assessors judge how well search results match a query, find and classify spam among web pages, and solve similar problems for other services.

The irony is that the more new technologies we launch, the greater the need for human evaluations. It is not enough to determine whether a page is relevant to a search query. We also need to know whether the page is cluttered with malicious ads, whether it contains adult content, and, if it does, whether the user's query implies that they were looking for exactly that kind of content. To account for all these factors automatically, we need to collect enough examples to train the search engine. And since everything on the Internet keeps changing, the training sets must be constantly updated. For search tasks alone, the demand for human evaluations was measured in millions per month, and it grows every year.

Expanding the assessor staff in every country where Yandex operates is organizationally difficult. Moreover, not every new task requires special training. Almost anyone can handle many of them, and it is often even more useful to collect the opinions of ordinary users who have not been trained to evaluate rankings professionally. This split between tasks led us to conclude that, in addition to assessors, we needed another, more flexible and scalable source of human evaluations.

Crowdsourcing

Besides the complex tasks performed by assessors, we needed a way to collect millions of simple evaluations in any country we were interested in. Most of these tasks are simple and small: each takes no more than 30 seconds. But there are a great many of them. Ordinary freelance marketplaces, where you contact a few performers directly and explain the task to each of them personally, did not suit us. To work at industrial scale, we needed to attract thousands of performers, pay them without paperwork, and control the quality of the results.

The closest analogues to what we needed were crowdsourcing platforms such as Amazon Mechanical Turk, Clickworker and CrowdFlower. As a rule, they are used to collect simple human evaluations by academic machine learning researchers and by major search engines such as Bing.

However, none of these platforms operated in the countries we needed. Besides, we had already gained enough experience to solve the problem on our own.

Toloka

In principle, there is nothing complicated about the logic of a crowdsourcing platform, Toloka included. On one side, we work with performers: we distribute tasks and make payments. On the other, we help customers get results with minimal effort.

By the way, where does the word "Toloka" come from? We spent quite a long time choosing a name and considered international options, but in the end we went the opposite way. The idea of calling the service "Toloka" came from our Minsk office, where most of the platform's development is concentrated. The word (Belarusian "talaka") is used about 30 times more often in Belarus than in Russia and means useful joint work toward a common result, which fits crowdsourcing perfectly. Still, choosing the name was far from the hardest question we faced while building the service.

What can a task look like? Potentially, anything: the source data, the interface and the expected answers can all vary. One customer needs to upload images and ask for them to be classified; another needs to show an audio player and ask performers to write down what they hear; a third needs to show a map with the user's location.

A few examples

So we chose maximum flexibility: with JSON for input and output parameters and HTML/CSS/JS for the interface, a customer can create almost any task. For tasks that require work "in the field" (for example, going to an address and checking that the information about an organization is up to date), we built a mobile version of Toloka for Android.
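
The benefit of declaring inputs and outputs in JSON can be illustrated with a small validator. This is a sketch under stated assumptions: the spec format, the field names (`image`, `category`) and the allowed categories are hypothetical and are not Toloka's own specification syntax. The point is only that a declared spec lets task data and performers' answers be checked automatically.

```python
import json

# Hypothetical spec for an image-classification task: field name -> expected Python type.
# Illustrative only; this is not Toloka's actual specification format.
INPUT_SPEC = {"image": str}
OUTPUT_SPEC = {"category": str}
ALLOWED_CATEGORIES = {"cat", "dog", "other"}  # hypothetical label set


def validate(payload: dict, spec: dict) -> list[str]:
    """Return a list of problems found when checking payload against spec."""
    errors = []
    for field, expected_type in spec.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            errors.append(f"wrong type for {field}: expected {expected_type.__name__}")
    return errors


def check_solution(raw_json: str) -> list[str]:
    """Parse a performer's JSON answer and check it against OUTPUT_SPEC."""
    solution = json.loads(raw_json)
    errors = validate(solution, OUTPUT_SPEC)
    if not errors and solution["category"] not in ALLOWED_CATEGORIES:
        errors.append(f"unknown category: {solution['category']}")
    return errors


task_input = json.loads('{"image": "https://example.com/photo.jpg"}')
print(validate(task_input, INPUT_SPEC))       # prints []
print(check_solution('{"category": "dog"}'))  # prints []
print(check_solution('{"category": 42}'))     # prints ['wrong type for category: expected str']
```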

This flexibility has a downside: a high barrier to entry. Not everyone can lay out an interface. We address this with ready-made templates, and we plan to add more of them.

How should Tolokers be paid? Being able to withdraw hard-earned money easily is one of the main reasons people come back to perform more tasks, and a complex, lengthy withdrawal procedure can kill a platform. So we built a system in which a performer only needs to link a PayPal or Yandex.Money wallet. While we were still debugging the processes on Toloka's side, a withdrawal could take up to 30 days; now it takes a couple of days or even minutes. Neither Tolokers nor customers have to deal with paperwork.

How do we ensure quality? This is the main headache of any crowdsourcing platform. Toloka, like any society, has diligent and attentive people, and it also has people who are lazy, dishonest and capable of writing scripts. The main goal is to keep the former on the platform and to find and restrict the latter as quickly as possible. To do this, we taught Toloka to analyze performer behavior. Customers can automatically identify and restrict Tolokers who, for example, answer too quickly or whose answers disagree with those of other performers. We also added control tasks ("honeypots") and mandatory acceptance of results before payment. Acceptance itself can be made easier: give a task to one performer and have others evaluate the result.
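
The behavioral checks described above can be sketched in a few lines. This is a hypothetical illustration, not Toloka's implementation: the answer record format, the three rules (median response time, agreement with the per-task majority, accuracy on control tasks) and all thresholds are assumptions chosen for the example.

```python
from collections import Counter, defaultdict
from statistics import median

# Illustrative answer records: (worker_id, task_id, label, seconds_spent).
# Thresholds below are hypothetical defaults, not Toloka settings.


def majority_labels(answers):
    """Most frequent label for every task (ties go to the label seen first).

    Simplification: a worker's own vote is included in the majority it is compared to.
    """
    votes = defaultdict(Counter)
    for _worker, task, label, _seconds in answers:
        votes[task][label] += 1
    return {task: counts.most_common(1)[0][0] for task, counts in votes.items()}


def flag_workers(answers, honeypots, min_median_seconds=3.0,
                 min_agreement=0.6, min_honeypot_accuracy=0.8):
    """Return {worker_id: [reasons]} for workers that look suspicious.

    honeypots maps control task ids to their known correct label.
    """
    majority = majority_labels(answers)
    times, agreement, control = defaultdict(list), defaultdict(list), defaultdict(list)
    for worker, task, label, seconds in answers:
        times[worker].append(seconds)
        if task in honeypots:
            control[worker].append(label == honeypots[task])
        else:
            agreement[worker].append(label == majority[task])

    flagged = {}
    for worker, worker_times in times.items():
        reasons = []
        if median(worker_times) < min_median_seconds:
            reasons.append("answers too fast")
        if agreement[worker] and sum(agreement[worker]) / len(agreement[worker]) < min_agreement:
            reasons.append("disagrees with the majority")
        if control[worker] and sum(control[worker]) / len(control[worker]) < min_honeypot_accuracy:
            reasons.append("fails control tasks")
        if reasons:
            flagged[worker] = reasons
    return flagged


answers = [
    ("a", "t1", "cat", 8), ("b", "t1", "cat", 7), ("c", "t1", "dog", 1),
    ("a", "t2", "dog", 9), ("b", "t2", "dog", 6), ("c", "t2", "cat", 1),
    ("a", "t3", "cat", 5), ("b", "t3", "cat", 8), ("c", "t3", "dog", 1),
    ("a", "h1", "dog", 6), ("b", "h1", "dog", 7), ("c", "h1", "cat", 1),
]
print(flag_workers(answers, honeypots={"h1": "dog"}))
# prints {'c': ['answers too fast', 'disagrees with the majority', 'fails control tasks']}
```

In practice such rules would be tuned per project, and a flagged performer would be restricted rather than silently dropped. Both of those points are left outside this sketch.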

By the way, what do we know about the Tolokers themselves?

Tolokers

All kinds of people register on Toloka and perform tasks. Most performers (and most tasks) are in Russia, Ukraine and Turkey. Most Tolokers are under 35, typically students of technical colleges or mothers on maternity leave. Most performers see Toloka as an additional source of income, although many say they like doing useful work and making the Internet cleaner. Here, for example, is what they write about themselves when discussing Toloka online:

> I have already transferred 790.41 rubles to my wallet. For comparison, in the region where I live a young teacher's salary is 2,800 rubles. And note that all of this was earned in between other things, in my free time, in breaks from my main job when I wanted to relax and switch gears a little.

> And you don't feel like a mindless clicking robot at all. There is a real sense that your work is useful.

Toloka at Yandex

Today Toloka has almost 270 thousand performers from five countries, who complete 80 thousand tasks per day; 400 different types of tasks have already been tried, and 1 billion evaluations have been collected.

At launch we started with three main types of tasks, and interestingly, they are still the favorites of most Tolokers: evaluating the quality of image search, labeling adult content, and pairwise comparison of objects (for example, versions of a page with different designs).

Using crowdsourcing to collect evaluations in these projects let teams significantly shorten the wait for the evaluations needed to compute metrics. Where assessors need several days to evaluate a set of objects, Tolokers do it in a couple of hours. Lower evaluation costs also made it possible to substantially enlarge training sets and improve the quality of classification algorithms. For example, after the switch to Toloka, the quality of adult content detection improved by 30%.

Dozens of different Yandex teams are Toloka customers. The service is used to collect data for computer vision and speech recognition, to improve recommendation technologies, to expand the business directory database, and to solve many other internal tasks. Still, these tasks are not enough to keep all Tolokers busy. We needed to go further.

First external customers

After reaching agreements with several external partners, we ran a closed alpha test to check that the platform met external needs. External customers came both with tasks familiar to us and with new ones specific to their businesses. During testing, they launched tasks such as transcribing product price lists from photos, auditing retail outlets, categorizing products, and finding product attributes.

The Bookmakers Rating website gave Tolokers tasks to update its database of bookmaker offices across the country (about 4,000 locations). Performers had to check whether an office exists at the given address, take a photo and write a description. According to the customer, verifying the database in Russia through crowdsourcing turned out to be cheaper and more efficient than sending an employee or looking for contractors. Some specifics: in St. Petersburg, where 157 addresses had to be checked, completing the task with Toloka cost the customer a little more than 4,100 rubles. That is more than 10 times cheaper than the planned seven-day business trip of one of the company's own employees from Moscow to St. Petersburg, and 3 to 6 times cheaper than hiring a local contractor.
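
The figures above imply a rough per-address cost and the approximate cost of the alternatives. These are back-of-the-envelope derivations from the stated numbers, treating "a little more than 4,100 rubles" as 4,100; they are not figures reported by the customer.

$$
\begin{aligned}
\text{cost per address} &\approx \frac{4100\ \text{RUB}}{157} \approx 26.1\ \text{RUB} \\
\text{business trip} &> 10 \times 4100\ \text{RUB} = 41{,}000\ \text{RUB} \\
\text{on-site contractor} &\approx (3 \text{ to } 6) \times 4100\ \text{RUB} = 12{,}300 \text{ to } 24{,}600\ \text{RUB}
\end{aligned}
$$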

Toloka has proved useful not only for business but also for research. Dmitry Ustalov (dustalov) from the N.N. Krasovsky Institute of Mathematics and Mechanics (IMM, Ural Branch of the Russian Academy of Sciences) uses the service to collect judgments about semantic relations between Russian words in order to improve the electronic dictionaries used in the NLPub project. According to Dmitry, he can run experiments quickly and cheaply, testing many hypotheses in a short time. For one task, for example, he collected 13 thousand judgments in just one hour.

One more example: Rambler uses Toloka to supplement its assessors' ratings of search results. Tolokers' results are slightly less accurate because they have no special training, but Tolokers can complete large-scale tasks quickly and with consistent quality. This makes the channel especially useful during peak loads.

The closed alpha helped us choose the direction of further improvements and, overall, confirmed our hypothesis that Toloka can be useful to external customers and should be open to everyone. That is exactly what we did: starting this week, Toloka is in beta and its crowdsourcing capabilities are available to all.

How to add your own task

Submitting a task still requires some expertise from the customer, but we are working to simplify the process in the near future. In the meantime, documentation for customers is available at yandex.ru/support/toloka-requester.

We recommend starting with the sandbox. This test environment lets you not only learn how tasks are created but also test them without risking any money. After registering, you can immediately start creating a project, and the service will offer you ready-made templates to choose from.

When setting up a task, you can restrict performers by certain criteria (for example, country, language, or browser user agent), enable review of results before acceptance and quality control, and configure the input and output data specifications.
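
Restricting the audience by country, language and user agent amounts to a simple predicate over performer profiles. In the sketch below, the `Performer` and `AudienceFilter` classes and their fields are hypothetical. In Toloka these restrictions are task settings chosen by the customer, not code the customer writes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Performer:
    """Hypothetical performer profile used only for this illustration."""
    worker_id: str
    country: str
    languages: set[str]
    user_agent: str


@dataclass
class AudienceFilter:
    """Hypothetical task audience restrictions; None means "no restriction"."""
    countries: set[str] | None = None
    languages: set[str] | None = None  # performer must know at least one of these
    user_agent_substring: str | None = None  # e.g. "Android" to target mobile devices

    def admits(self, p: Performer) -> bool:
        if self.countries is not None and p.country not in self.countries:
            return False
        if self.languages is not None and not (self.languages & p.languages):
            return False
        if self.user_agent_substring is not None and self.user_agent_substring not in p.user_agent:
            return False
        return True


performers = [
    Performer("w1", "RU", {"ru"}, "Mozilla/5.0 (Linux; Android 6.0)"),
    Performer("w2", "TR", {"tr", "en"}, "Mozilla/5.0 (Windows NT 10.0)"),
    Performer("w3", "UA", {"uk", "ru"}, "Mozilla/5.0 (Windows NT 10.0)"),
]
russian_speakers = AudienceFilter(countries={"RU", "UA"}, languages={"ru"})
print([p.worker_id for p in performers if russian_speakers.admits(p)])  # prints ['w1', 'w3']
```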

Once you have practiced in the sandbox, you can move to the production system, transfer your projects there, top up your balance and start crowdsourcing. By the way, you don't have to do all of this in the web interface: Toloka has its own API.

In conclusion, here are the main recommendations we have formulated from our own experience of working with Tolokers.

Recommendations for working with crowds

The wisdom of the crowd is not a philosophical notion at all but a statistically verifiable phenomenon. Even if one person is not sufficiently competent and their judgment is not accurate enough, combining judgments from many different people can give more accurate results than the judgment of a single professional expert. The key is to organize the collection and aggregation of individual judgments properly.
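
Under simplifying assumptions, this statistical claim can be checked directly. Suppose, hypothetically, that each performer independently answers a yes/no question correctly with probability p, and that answers are combined by majority vote. Majority vote is one common aggregation method, not necessarily the one Toloka uses. The probability that the majority of n performers is right is then the binomial probability that more than half of them are correct:

```python
from math import comb


def majority_accuracy(n: int, p: float) -> float:
    """Probability that a strict majority of n independent performers answers a
    yes/no question correctly, when each performer is right with probability p.

    Assumes independence and equal accuracy, which real crowds only approximate.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number so that ties cannot occur")
    needed = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# Hypothetical numbers: each performer is right 70% of the time,
# a single expert 85% of the time.
expert = 0.85
for n in (1, 3, 5, 7, 9):
    crowd = majority_accuracy(n, 0.7)
    verdict = "beats" if crowd > expert else "does not beat"
    print(f"{n} performers: {crowd:.3f} ({verdict} the expert's {expert})")
# crowd accuracy: 0.700, 0.784, 0.837, 0.874, 0.901
```

With these hypothetical numbers, seven performers who are each right 70% of the time already outperform the single 85%-accurate expert. The gain from each additional pair of performers also shrinks (roughly 0.08, 0.05, 0.04, then 0.03). Real performers are neither fully independent nor equally accurate, so treat this as an idealized illustration.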

- Crowdsourcing is a very powerful tool, well suited to large volumes and to situations where processes need to be scaled and standardized. But if you have a one-off task that one person can finish in a couple of hours, it is easier to have that person do it.

- We have verified many times that any complex task can and should be broken down into a set of small independent subtasks. This significantly improves the quality of the resulting data without increasing the overall labeling cost.

- Most crowdsourcing tasks are run with overlap, meaning that several performers complete the same task. A popular misconception is that the greater the overlap, the higher the quality of the results. As a rule, this is not so: accuracy levels off fairly quickly as overlap grows, and only for a few tasks does it make sense to use an overlap of more than 5 performers per task.

- A somewhat counterintuitive fact: in crowdsourcing, the price per task has virtually no effect on the quality of the evaluations. An inflated rate can even hurt quality, because Tolokers chasing the money stop paying enough attention to the tasks.

- What quality really depends on is how the task is organized. The more clearly the instructions are written and the interface is designed, the higher the quality of the result. And don't forget control tasks ("honeypots"), which help identify dishonest performers.

- Most projects in Toloka do not require special knowledge from performers. Sometimes, however, Tolokers need to be "trained" to do the task exactly the way the customer needs. In that case, create dedicated training pools and use additional settings (for example, admit to the task only those Tolokers who have successfully completed the training).

- Targeting tasks to the right performers often improves labeling results significantly. For example, if you need to evaluate mules while labeling a shoe catalog, it is better to give the task straight to female Tolokers.

- Not every task can be left to the wisdom of the crowd. There will always be tasks that require training and the utmost care. But even then, you can almost always use the crowd for pre-filtering, which makes life easier for professional experts, significantly reduces the cost of evaluations, and increases the throughput of your workflow.
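
One way to avoid paying for overlap that cannot change the answer is to collect answers one at a time and stop once the majority vote over the maximum overlap is already decided. This is a sketch of a general technique, offered as an assumption rather than a description of how Toloka schedules overlap. The `ask` callback, which would hand the task to one more performer, is hypothetical.

```python
from collections import Counter
from typing import Callable


def label_with_dynamic_overlap(ask: Callable[[], str], max_overlap: int = 5) -> tuple[str, list[str]]:
    """Collect answers one at a time and stop as soon as one label has enough
    votes to be a strict majority of max_overlap, so later answers cannot change it.

    `ask` is a hypothetical callback that assigns the task to one more performer
    and returns that performer's label.
    """
    needed = max_overlap // 2 + 1
    answers = []
    counts = Counter()
    while len(answers) < max_overlap:
        label = ask()
        answers.append(label)
        counts[label] += 1
        if counts[label] >= needed:
            return label, answers
    # No strict majority within the cap: fall back to the most frequent label.
    return counts.most_common(1)[0][0], answers


incoming = iter(["cat", "dog", "cat", "cat", "dog"])
print(label_with_dynamic_overlap(lambda: next(incoming)))
# prints ('cat', ['cat', 'dog', 'cat', 'cat'])
```

When the first three performers agree, the task closes after three answers instead of five, and contested items still get the full overlap.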

We would be grateful to the Habrahabr community for feedback on the Toloka beta. We keep working so that any customer can get the highest-quality results without writing code, and every conscientious performer gets rewards and enough tasks. Your feedback will help our team with this. Thank you!

Source: https://habr.com/ru/post/305956/
