Choosing solid-state drives (SSDs) for servers and RAID arrays

As promised in the publication “The expediency and benefits of using server drives, building RAID arrays, is it worth saving and when?”, this article looks in more detail at how to choose solid-state drives. But first, a bit of theory.

Solid-state drives (SSDs) are designed to deliver minimal latency (the delay before a read or write operation starts) and a large number of IOPS (input/output operations per second). When choosing an SSD, users care primarily about how fast the drive will be for their workload and how reliably it will store data.

Solid-state drives consist of NAND chips that form a memory array. They are free of the drawbacks of HDDs: there are no moving parts and no mechanical wear, which makes high performance and minimal latency possible (in hard disks, most of the delay comes from positioning the heads). Each memory cell, however, can be rewritten only a limited number of times; reads do not wear out an SSD. There are three main types of NAND chips: SLC (Single-Level Cell), MLC (Multi-Level Cell) and TLC (Triple-Level Cell). An SLC cell distinguishes two voltage levels, 0 or 1, and stores 1 bit of information. An MLC cell distinguishes four (00, 01, 10, 11) and stores 2 bits. A TLC cell distinguishes eight (000, 001, 010, 011, 100, 101, 110, 111) and stores 3 bits. The more values a cell can take, the more information the drive can store. However, the probability of misreading a value also rises, and more time is spent on error correction. This is why TLC requires a stronger ECC (Error Correction Code). At the same time, the number of rewrite cycles decreases as storage density grows and is highest for SLC. SLC is also the fastest memory, since it is much easier to tell apart one of two values.
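To make the relationship between bits per cell and voltage levels concrete, here is a small sketch. The names `CELL_TYPES`, `cell_states` and `level_spacing` are hypothetical and used only for illustration. It lists the bit patterns each cell type must distinguish. It also shows how the gap between neighbouring levels shrinks, under the simplifying assumption that the levels are spread evenly across a fixed, normalized voltage window. Real cells are not spaced this evenly.

```python
from itertools import product

# Bits per cell for the three NAND types discussed above
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3}


def cell_states(bits_per_cell: int) -> list:
    """Every bit pattern a cell must hold; each one needs its own voltage level."""
    return ["".join(bits) for bits in product("01", repeat=bits_per_cell)]


def level_spacing(bits_per_cell: int) -> float:
    """Gap between neighbouring levels in a normalized 0..1 voltage window.

    Simplifying assumption: levels are spread evenly across a fixed window.
    """
    return 1 / (2 ** bits_per_cell - 1)


for name, bits in CELL_TYPES.items():
    states = cell_states(bits)
    print(f"{name}: {len(states)} levels ({', '.join(states)}), spacing {level_spacing(bits):.3f}")
```

In this model, doubling the number of levels roughly halves the margin between them. This is why denser cells are more prone to read errors and need more ECC.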


Now a little about the chips themselves. Compared with NOR flash, NAND memory is cheaper and has its own advantages and disadvantages. The advantages are a much larger array capacity and more efficient sequential reading. The disadvantages are page-level access (there is no random access to individual bytes) and additional errors caused by the high density of data in the cells. Each NAND chip is divided into blocks of 256 or 512 KB, which in turn consist of 4 KB pages. Individual pages can be read, and they can be written as long as they are empty. However, once data has been written to a page, it cannot be overwritten until the entire block containing it has been erased. This is the main drawback. It strongly affects write efficiency and drive wear, since a NAND chip survives only a limited number of rewrite cycles. To wear all cells evenly and use the whole drive uniformly, the controller moves already written data from place to place. This increases the WAF (Write Amplification Factor): the amount of data physically written to flash becomes much larger than the amount the user logically wrote, and random-access performance drops as a result. In fact, data is moved more than once, since memory must be erased before it can be rewritten. The more efficiently the controller keeps WAF low, the longer the drive will live.
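The program/erase asymmetry described above can be illustrated with a toy model of one erase block. `NandBlock` and `naive_update` are hypothetical names, not any controller's API. The model assumes 64 pages of 4 KB (a 256 KB block). It shows the worst case: updating a single page "in place" without any remapping forces the whole block to be erased and rewritten. Real controllers avoid this by writing updates elsewhere, which is exactly why they end up moving data around.

```python
from typing import List, Optional


class NandBlock:
    """Toy erase block: pages are programmed one at a time but erased only all together.

    64 pages x 4 KB = 256 KB, one of the block sizes mentioned above.
    """

    def __init__(self, pages_per_block: int = 64, page_size: int = 4096):
        self.page_size = page_size
        self.pages: List[Optional[bytes]] = [None] * pages_per_block
        self.erase_count = 0  # each erase consumes one program/erase cycle

    def program(self, index: int, data: bytes) -> None:
        if len(data) != self.page_size:
            raise ValueError("data must fill exactly one page")
        if self.pages[index] is not None:
            raise RuntimeError(f"page {index} already written; erase the whole block first")
        self.pages[index] = data

    def read(self, index: int) -> Optional[bytes]:
        return self.pages[index]

    def erase(self) -> None:
        self.pages = [None] * len(self.pages)
        self.erase_count += 1


def naive_update(block: NandBlock, index: int, data: bytes) -> int:
    """Update one page in place: save the block, erase it, reprogram every used page.

    Returns the number of pages physically programmed for a single logical page write.
    """
    saved = [block.read(i) for i in range(len(block.pages))]
    saved[index] = data
    block.erase()
    programmed = 0
    for i, page in enumerate(saved):
        if page is not None:
            block.program(i, page)
            programmed += 1
    return programmed


block = NandBlock()
for i in range(64):
    block.program(i, bytes([i]) * 4096)
pages = naive_update(block, 5, b"\xff" * 4096)
print(f"1 logical page written, {pages} pages programmed: write amplification {pages}")
```

Here WAF is simply physical pages programmed divided by logical pages written: 64 in this naive case, and 1 in the ideal case.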

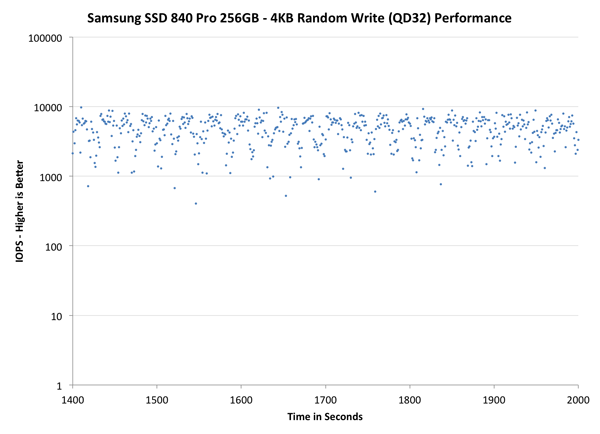

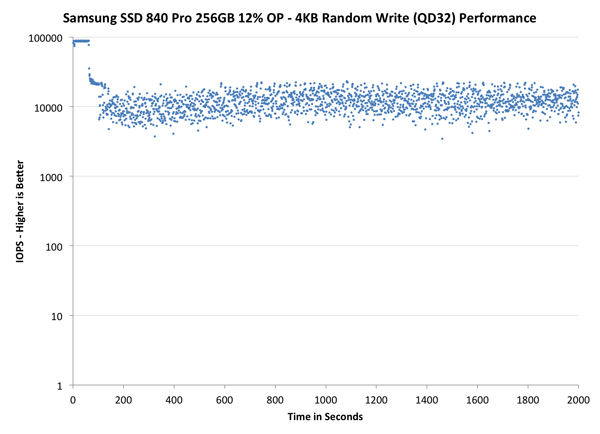

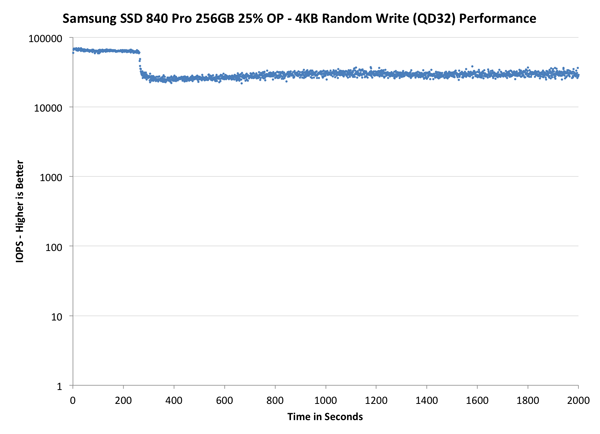

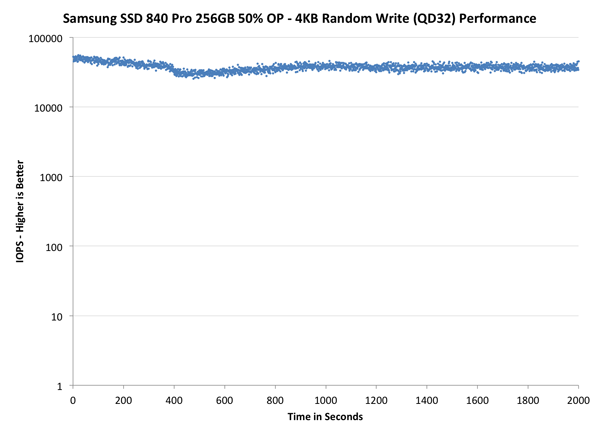

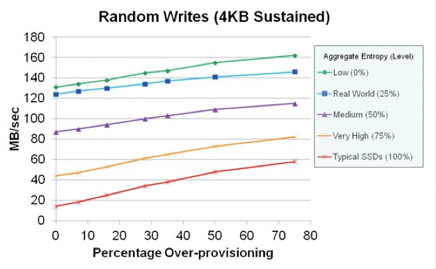

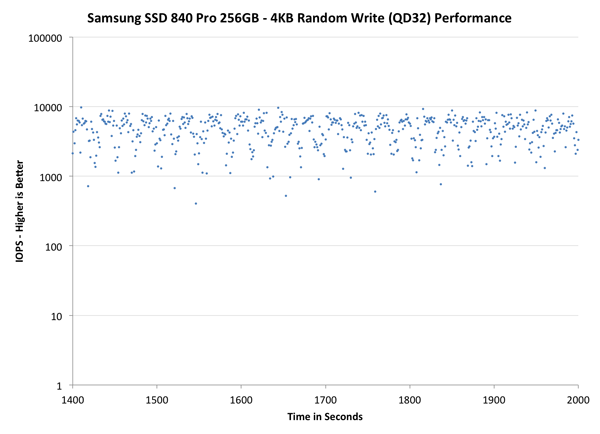

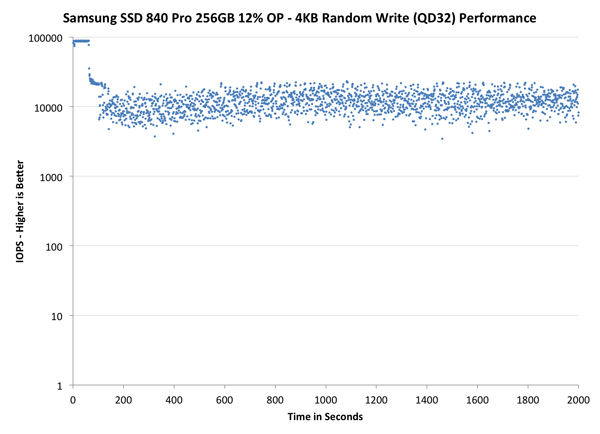

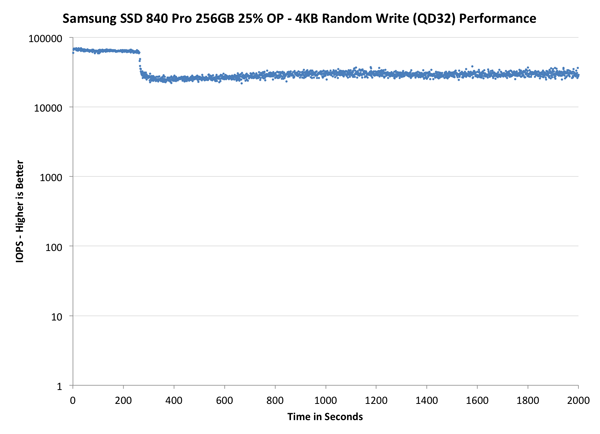

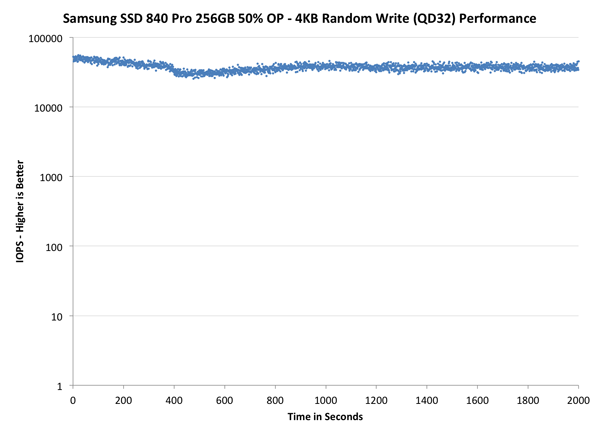

Over-Provisioning (OP) mitigates this main drawback of writing and rewriting: it improves performance and extends the life of the drive. Every drive has an area that is inaccessible to the user, which the controller uses to move data and to even out cell wear. Rewriting a single 4 KB page requires erasing the whole 256 or 512 KB block, so it makes more sense to erase in the background and to write new data to the unallocated area first. The larger the OP area, the easier it is for the controller to do its job, the lower the WAF and the better random-write and random-read performance. Manufacturers reserve from 7% to 50% of the drive's capacity for OP, which significantly increases write speed, as the graphs for OP of 0%, 12%, 25% and 50% show.
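The link between spare area and WAF can be reproduced with a very small simulation. This is a toy flash translation layer under loudly stated assumptions. It uses uniform random 4 KB overwrites, greedy garbage collection (the block with the fewest live pages is reclaimed first), 64 blocks of 64 pages, and one erased block kept in reserve. `simulate_waf` is a hypothetical helper. Real controllers use their own, usually proprietary, policies, so only the trend matters here, not the absolute numbers. The model also needs some spare area to work at all, so it cannot reproduce the 0% case.

```python
import random


def simulate_waf(spare_fraction: float, blocks: int = 64, pages_per_block: int = 64,
                 host_writes: int = 50_000, seed: int = 1) -> float:
    """Toy flash translation layer with greedy garbage collection.

    spare_fraction: share of physical pages hidden from the host (over-provisioning).
    Returns write amplification = pages programmed to flash / pages written by the host.
    """
    logical_pages = int(blocks * pages_per_block * (1 - spare_fraction))
    if logical_pages > (blocks - 2) * pages_per_block:
        raise ValueError("spare area too small for this toy model")
    rng = random.Random(seed)
    contents = [[] for _ in range(blocks)]  # logical page per slot, None = stale copy
    valid = [0] * blocks                    # live pages per block
    location = {}                           # logical page -> (block, slot)
    free = list(range(1, blocks))           # erased blocks
    active = 0                              # block currently being filled
    programmed = 0                          # physical page writes

    def place(lpn: int) -> None:
        nonlocal programmed
        old = location.get(lpn)
        if old is not None:                 # out-of-place update: old copy goes stale
            blk, slot = old
            contents[blk][slot] = None
            valid[blk] -= 1
        contents[active].append(lpn)
        location[lpn] = (active, len(contents[active]) - 1)
        valid[active] += 1
        programmed += 1

    def host_write(lpn: int) -> None:
        nonlocal active
        if len(contents[active]) == pages_per_block:
            active = free.pop()             # open a fresh erased block
            while not free:                 # keep one erased block in reserve
                victim = min((b for b in range(blocks) if b != active),
                             key=lambda b: valid[b])  # greedy: fewest live pages
                for live in [p for p in contents[victim] if p is not None]:
                    place(live)             # relocation = extra flash writes
                contents[victim] = []       # erase the whole block
                free.append(victim)
        place(lpn)

    for lpn in range(logical_pages):        # precondition: fill the drive once
        host_write(lpn)
    programmed = 0
    for _ in range(host_writes):            # steady state: uniform random overwrites
        host_write(rng.randrange(logical_pages))
    return programmed / host_writes


for spare in (0.07, 0.12, 0.25, 0.5):
    print(f"spare {spare:.0%}: WAF ~ {simulate_waf(spare):.2f}")
```

Greedy victim selection is chosen only because it is the simplest policy to reason about. With more spare area, the reclaimed blocks contain fewer live pages, so fewer pages have to be copied.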

As the graphs show, performance rises significantly already at an OP of 25% or more. Most SSD manufacturers let you adjust these parameters, and Samsung offers a handy utility for this purpose.

What is the difference between a server drive and a desktop drive? The most important difference is how efficiently the drive handles sustained writes. This is mainly determined by the type of chip, the algorithms used and the Over-Provisioning area the manufacturer sets aside. For example, in the Intel 320 Series Over-Provisioning amounts to 8% of the raw flash capacity, while in the Intel 710, which uses a similar type of chip, it is 42%. Moreover, Intel recommends leaving at least 20% of the capacity unallocated when creating a partition, so that this space can also be used for Over-Provisioning. This applies even to server drives that already reserve 42%. It can extend the drive's lifespan up to 3 times, as WAF decreases, and increase write performance by up to 75%.
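The following sketch shows how to size a partition so that a given percentage of the drive stays unallocated. `partition_size` is a hypothetical helper. Rounding down to a 1 MiB boundary is an assumption that follows a common partitioning convention. The unallocated area only works as extra spare space if those sectors have never been written, or have been freed (for example by a secure erase before partitioning).

```python
MIB = 1024 * 1024


def partition_size(capacity_bytes: int, unallocated_percent: int) -> int:
    """Largest 1 MiB-aligned partition that leaves at least the given percentage unpartitioned."""
    if not 0 <= unallocated_percent < 100:
        raise ValueError("unallocated_percent must be in [0, 100)")
    usable = capacity_bytes * (100 - unallocated_percent) // 100
    return usable // MIB * MIB  # round down so alignment never eats into the spare area


capacity = 120_000_000_000  # a "120 GB" drive, decimal gigabytes as on the label
size = partition_size(capacity, 20)
print(f"partition {size:,} bytes, left unallocated {capacity - size:,} bytes")
```

Integer percentages keep the arithmetic exact. A float such as `0.8` could round the boundary by a byte in either direction.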

To see the difference in practice, take the Intel 320 and 520, both good desktop solid-state drives. The 520 uses a “trick” in the form of an LSI SandForce controller, which compresses data before writing it to flash, thus increasing write speed. Let's compare them with the Intel 710 series server drive.

Intel differs from other manufacturers in that it publishes technical details thoroughly and honestly. As a result, we can always find out how a drive performs in different usage modes, which is exactly what we need here. That is why we chose Intel drives for comparison, even though some of them are already out of production and newer models exist. That is not the point: our goal is to understand the differences and the principles of choosing a drive, and these have not changed much despite product updates.

Looking at http://ark.intel.com/ru/products/56563/Intel-SSD-320-Series-120GB-2_5in-SATA-3Gbs-25nm-MLC, we see that:

Random read (8 GB span): 38,000 IOPS

Random read (100% span): 38,000 IOPS

Random write (8 GB span): 14,000 IOPS

Random write (100% span): 400 IOPS

In other words, suppose we use only 8 GB of our 120 GB SSD and leave more than 90% of the capacity to Over-Provisioning. Random-write performance is then quite good at 14 KIOPS. If we use all the space, random writes drop to just 400 IOPS. That is a 35-fold decrease, down to the level of a pair of good SAS hard disks!
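The two numbers in the paragraph above follow from simple arithmetic on the quoted Intel 320 figures. `unpartitioned_share` is a hypothetical helper name.

```python
def unpartitioned_share(capacity_gb: float, partition_gb: float) -> float:
    """Share of the drive left outside the partition and so available as extra spare area."""
    return (capacity_gb - partition_gb) / capacity_gb


# Intel 320 120 GB random-write figures quoted above
small_span_iops = 14_000  # 8 GB span
full_span_iops = 400      # 100% span

print(f"left for over-provisioning: {unpartitioned_share(120, 8):.1%}")  # 93.3%
print(f"random-write drop: {small_span_iops / full_span_iops:.0f}x")      # 35x
```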

Now consider the Intel 710 specification (http://www.intel.com/content/dam/www/public/us/en/documents/product-specifications/ssd-710-series-specification.pdf). Even with 100% of the available capacity in use, the write speed is quite decent at 2,700 IOPS. With 20% of the capacity left for Over-Provisioning, it rises to 4,000 IOPS. Part of the reason is different memory: High Endurance Technology (HET), which, put simply, means using selected memory chips. The drive also uses different firmware with a different write algorithm, which reduces the number of errors and extends the drive's life. Most importantly, it uses a different free-space cleanup algorithm. Thanks to this, performance stays consistently at a decent level, since the disk continuously performs background cleanup and optimizes data placement. In the desktop Intel 320, performance may drop somewhat under sustained load, because cleanup does not run continuously.

Conclusion: a desktop drive will last quite a long time with small amounts of data. It can also deliver fairly good speed if a large share of space is left for Over-Provisioning. When does this pay off? Suppose there is a database, say a 1C database, that 10-20 users need to access. The database is 4 GB in size. We leave more than 90% of the drive's capacity to Over-Provisioning and partition only what is needed, with a small margin, say 8 GB. The result is a cost-effective solution with fairly good performance and very good reliability. Of course, with 40-50 users of 1C it would still be better to use a server drive, since under continuous load the performance of a desktop SSD will drop.

Now let's look in more detail at drives with SandForce controllers. Reviewing the Intel 520 specification (http://download.intel.com/newsroom/kits/ssd/pdfs/intel_ssd_520_product_spec_325968.pdf), we conclude that the Intel 520 is a very good option for compressible data, delivering up to 80,000 random-write IOPS. With incompressible data, such as video, the figures drop significantly, to 13 KIOPS or less. Moreover, a small footnote (number 2) states that such fantastic write speeds (80 KIOPS) are achievable only when just 8 GB is partitioned. On a 180 GB drive that is only about 4.4% of its capacity; the rest is left for Over-Provisioning. So, once again, this disk will work well with small databases and text files. If you need to write incompressible data such as video quickly, it is still better to use full-fledged server drives.
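Why compressible and incompressible data behave so differently on a compressing controller can be shown with any general-purpose compressor. SandForce's own algorithm is proprietary, so `zlib` from the Python standard library is only a stand-in here. Random bytes stand in for already-compressed, high-entropy data such as video. `compression_ratio` is a hypothetical helper.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size; lower means more compressible."""
    return len(zlib.compress(data, 6)) / len(data)


# Repetitive, database-like records versus random bytes standing in for video
db_like = b"INSERT INTO sales VALUES (42, 'widget', 19.99);\n" * 2000
video_like = os.urandom(len(db_like))

print(f"database-like: {compression_ratio(db_like):.3f}")
print(f"video-like:    {compression_ratio(video_like):.3f}")
print(f"8 GB partition on a 180 GB drive: {8 / 180:.1%} of capacity")  # 4.4%
```

When the data shrinks, a compressing controller has far fewer bytes to program into flash, which raises write speed. When the ratio is about 1, the controller gains nothing and the drive falls back to its raw flash performance.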

It is also worth noting that the 320 series, although considered a desktop drive, is actually a semi-server one. Among other things, it contains a supercapacitor that, in the event of a power failure, lets the drive save the data held in its own cache. The 520 does not have this, which is why it is very important to pay attention to such features when choosing drives. So the 320 series is slower than the 520, but more reliable.

It is only fair to mention drives from other popular manufacturers, Seagate and Kingston. What makes them different? The Seagate Pulsar, unlike the drives discussed above, has a SAS interface rather than SATA, and this is its main advantage. With SATA, data integrity is checked on the drive and on the host controller, but what happens to the data in transit between them is not tracked well. SAS solves this problem: it fully controls the transmission channel and corrects errors caused, for example, by electrical interference, which SATA does not do. SAS also offers additional reliability through 520-byte sectors, in which each 512 bytes of data are written together with 8 bytes of integrity information. Finally, we can take advantage of the full-duplex SAS interface, but where this is useful is better covered in a separate publication.
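The 520-byte sector mentioned above corresponds to the T10 Protection Information (DIF) format. In that format, the 8 extra bytes hold a 2-byte guard tag (a CRC-16 with polynomial 0x8BB7 over the 512 data bytes), a 2-byte application tag and a 4-byte reference tag (the low 32 bits of the LBA, in Type 1 protection). The sketch below builds and checks such a sector in software, with a deliberately simple bitwise CRC. `crc16_t10dif`, `protect_sector` and `verify_sector` are hypothetical names. This illustrates the layout and is not how a drive or HBA implements it.

```python
import struct


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16, polynomial 0x8BB7, initial value 0 (the T10 DIF guard CRC)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def protect_sector(data: bytes, lba: int, app_tag: int = 0) -> bytes:
    """512 data bytes + guard (2) + application tag (2) + reference tag (4) = 520 bytes."""
    if len(data) != 512:
        raise ValueError("expected 512 data bytes")
    pi = struct.pack(">HHI", crc16_t10dif(data), app_tag, lba & 0xFFFFFFFF)
    return data + pi


def verify_sector(sector: bytes, lba: int) -> bool:
    """Recompute the guard CRC and check that the sector belongs to the expected LBA."""
    data, pi = sector[:512], sector[512:]
    guard, _app_tag, ref_tag = struct.unpack(">HHI", pi)
    return guard == crc16_t10dif(data) and ref_tag == (lba & 0xFFFFFFFF)


sector = protect_sector(bytes(range(256)) * 2, lba=1000)
damaged = bytearray(sector)
damaged[10] ^= 0x01  # flip one bit, e.g. corrupted in transit
print(len(sector), verify_sector(sector, 1000), verify_sector(bytes(damaged), 1000))
```

The reference tag catches data written to the wrong place, while the guard CRC catches corrupted bytes. Because every hop can recheck both, damage in transit does not go unnoticed.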

As for Kingston, these drives are not only reliable but also very fast. Until recently, their server series was one of the fastest on the market, until the Intel DC S3700 appeared. At the same time, these drives are reasonably priced, and their price/performance/reliability ratio is probably the best available. This is why we offered these drives in the “new” line of servers in the Netherlands with which we started sales, making for a rather attractive price offer:

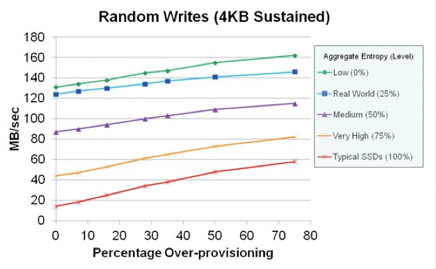

These drives use 8 chips of 32 GB each, 256 GB in total. About 7% of the capacity is set aside for Over-Provisioning, leaving 240 GB of usable capacity per drive. The SandForce controller boosts performance when working with compressible data, notably databases, and often meets the IOPS requirements of 95% of our clients. With uncompressed or high-entropy data, such as video, most users distribute content rather than write it. Performance does not drop far enough to fall short of their needs. If you need more write performance, simply increase Over-Provisioning. As the graph shows, the performance gain from growing Over-Provisioning is greatest for incompressible data (100% entropy).
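The "about 7%" figure follows from expressing the spare area relative to the user-visible capacity, which is one common convention for quoting over-provisioning. `over_provisioning` is a hypothetical helper. The sketch uses the nominal gigabyte figures from the paragraph above.

```python
def over_provisioning(physical_gb: float, user_gb: float) -> float:
    """Spare capacity expressed relative to the user-visible capacity."""
    return (physical_gb - user_gb) / user_gb


physical = 8 * 32  # 8 chips x 32 GB = 256 GB
user = 240
print(f"{over_provisioning(physical, user):.1%} of user capacity")  # 6.7%
print(f"{(physical - user) / physical:.2%} of physical capacity")
```

The same 16 GB of spare space looks slightly smaller when divided by physical capacity, so it is worth checking which convention a datasheet uses.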

It is also worth noting the manufacturer's honesty: its tests are very conservative, and real results were often 10-15% higher than guaranteed.

And for those who need more capacity, we have prepared a special offer:

Traffic and bandwidth can also be upgraded at very attractive prices:

1 Gbps, 150 TB: +$99.00

1 Gbps, unmetered: +$231.00

2 Gbps, unmetered: +$491.00

As for using solid-state drives in RAID arrays, we will not repeat the specifics here. There is an excellent article by amarao, “SSD + raid0 - not everything is so simple”, which I recommend reading and which will help form a complete picture. In this article I will say a little about SSDs with a PCI-Express interface that already include a built-in RAID controller. When building a very fast solution, say, for a heavily loaded billing system, such drives are irreplaceable. They can deliver hundreds of KIOPS for writes and more, and, very importantly, very low latency. The latency of most solid-state drives is around 65 microseconds, which is 10-40 times better than that of hard drives. The best PCI-Express SSDs reach 25 microseconds or less, almost RAM speed. Of course, the PCI-Express interface itself still makes them slower than RAM, but noticeable latency improvements are expected soon.
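Latency and IOPS are linked through Little's law: outstanding I/Os = IOPS × latency. The sketch below applies this to the 65 µs and 25 µs figures quoted above. It assumes latency stays constant as the queue grows, which real drives only approximate. `iops_from_latency` is a hypothetical helper.

```python
def iops_from_latency(latency_us: float, queue_depth: int = 1) -> float:
    """Little's law: outstanding I/Os = IOPS x latency, so IOPS = queue_depth / latency.

    Assumes latency stays constant as the queue grows, which real drives only approximate.
    """
    return queue_depth * 1_000_000 / latency_us


for name, latency in [("typical SSD", 65), ("top PCI-Express SSD", 25)]:
    print(f"{name}: {latency} us -> {iops_from_latency(latency):,.0f} IOPS at QD1, "
          f"{iops_from_latency(latency, 32):,.0f} IOPS at QD32")
```

At queue depth 1, lower latency translates directly into more operations per second. Reaching hundreds of KIOPS additionally requires many requests in flight at once.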

The capacity of such a PCI-Express drive is made up of “memory banks”, and the board also carries a SandForce chip and a hardware RAID controller. In effect, it is already a mirror with a response time of 25 microseconds, a write speed of more than 100 KIOPS and very high reliability. The usable capacity of such drives is usually small, for example 100 GB, and the price is quite impressive (7,000-14,000 euros). Such solutions are nonetheless irreplaceable for the cases noted above: heavily loaded billing systems and fully loaded databases. They also suit fast generation of 1C accounting reports in large companies, where report building becomes almost two orders of magnitude (about 100 times) faster.

So far, we can offer such solutions only in custom-built servers with long-term lease guarantees. Demand is rather limited, and not everyone is willing to pay so much for performance; besides, not everyone needs it. Perhaps later, in a separate publication, we will cover such solutions in more depth if business customers show interest.

Source: https://habr.com/ru/post/305724/
