URL history, part 2: path, fragment, query and authorization

URLs were never meant to be what they have become: an arcane way for a user to identify a site on the Web. Unfortunately, we never managed to standardize URNs, which could have given us a more useful naming scheme. Arguing that the current URL scheme is good enough is like praising the DOS command line and saying that people simply need to learn command-line syntax. Windowing systems exist to make computers easier to use and more widely used. The same thinking should lead us to a better way of identifying sites on the Web.

- Dale Dougherty, 1996

There are several ways to define the word "Internet". One of them is a system of computers connected by a computer network. That version of the Internet appeared in 1969 with the creation of ARPANET. Mail, file transfer and chat all worked on this network well before HTTP, HTML and the web browser existed.

In 1992, Tim Berners-Lee created three things that gave birth to what we now think of as the Internet: the HTTP protocol, HTML, and the URL. His goal was to turn the concept of hypertext into reality. Hypertext, in a nutshell, is the ability to create documents that link to one another. In those years hypertext was seen as something of a science-fiction panacea, along with hypermedia and any other word that could take the prefix "hyper".

The key requirement of hypertext was the ability to link from one document to another. At that time, documents were stored in a variety of formats and accessed through protocols such as Gopher or FTP. Tim needed a reliable way to refer to a file, so that the link encoded the protocol, the host on the Internet, and the location on that host. The URL, first officially documented in an RFC in 1994, became that method.

In the initial presentation of the World-Wide Web in March 1992, Tim Berners-Lee described this identifier as a "Universal Document Identifier" (UDI). Many other formats were also considered for such identifiers:

    protocol: aftp host: xxx.yyy.edu path: /pub/doc/README
    PR=aftp; H=xx.yy.edu; PA=/pub/doc/README;
    PR:aftp/xx.yy.edu/pub/doc/README
    /aftp/xx.yy.edu/pub/doc/README

This document also explains why spaces must be encoded in a URL (%20):

UDI avoids the use of spaces: spaces are prohibited characters. This is because extra spaces often appear when lines are wrapped by systems such as mail, or because of the common need to align column widths, as well as through the conversion of various kinds of whitespace during character-code conversion and when text is transferred from one application to another.

It is important to understand that the URL was simply a shorthand for a combination of scheme, domain, port, credentials and path that previously had to be worked out from context for each communication system.

    scheme:[//[user:password@]host[:port]][/]path[?query][#fragment]

This allowed different systems to be reached from hypertext, but today this form is perhaps redundant, since almost everything is transferred over HTTP. By 1996, browsers were already adding http:// and www. for users automatically (which makes advertising that includes those URL pieces rather pointless).
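As a rough illustration of the generic syntax above, the sketch below uses Python's standard `urllib.parse.urlsplit` to take a URL apart into those components. The example URL is made up for illustration (it borrows the host and credentials used elsewhere in this article); the port and path are assumptions.

```python
from urllib.parse import urlsplit

# Hypothetical URL that exercises every part of the generic syntax.
url = "http://zack:shhhhhh@zack.is:8080/history/part2?name=zack&state=mi#bio"

parts = urlsplit(url)

print(parts.scheme)    # http
print(parts.username)  # zack
print(parts.password)  # shhhhhh
print(parts.hostname)  # zack.is
print(parts.port)      # 8080
print(parts.path)      # /history/part2
print(parts.query)     # name=zack&state=mi
print(parts.fragment)  # bio
```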

Path

I do not think the question is whether people can learn the meaning of the URL. I just find it morally unacceptable to force a grandmother or grandfather to understand what are, in the end, UNIX file system conventions.

- Israel del Rio, 1996

The slash that separates path components in a URL is familiar to anyone who has used a computer in the past fifty years. The hierarchical file system itself was introduced by the MULTICS system. Its creator, in turn, attributes it to a two-hour conversation with Albert Einstein that took place in 1952.

MULTICS used the greater-than symbol (>) to separate the components of a file path. For example:

    >usr>bin>local>awk

This is perfectly logical, but unfortunately the Unix folks decided to use > for redirection and took the slash (/) as the path separator instead.

Broken links in Supreme Court decisions

Wrong. Now I clearly see that we do not agree with each other. You and I.


...

As a person, I want to reserve the right to use different criteria for different purposes. I want to be able to give names to works themselves, to specific translations and to specific versions. I want a richer world than the one you offer. I do not want to be limited to your two-level system of "documents" and "variants".

- Tim Berners-Lee, 1993

Half of the URLs referenced by the US Supreme Court no longer exist. If you read an academic paper in 2011 that was written in 2001, there is a high probability that any given URL in it no longer works.

In 1993, many passionately believed that the URL would die and the URN would replace it. A Uniform Resource Name is a permanent reference to a piece of content that, unlike a URL, never changes or breaks. Tim Berners-Lee described it as an "urgent need" back in 1991.

The easiest way to create a URN is to use a cryptographic hash of the page content, for example: urn:791f0de3cfffc6ec7a0aacda2b147839. However, this method does not meet the web community's criteria, since it is unclear who would turn this hash back into real content, and how. It also does not account for format changes that files often undergo (compression, for example) and that do not affect the content.
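A minimal sketch of the hash-based idea and its weakness follows. It assumes MD5, only because the sample URN above has 32 hexadecimal digits, which matches the length of an MD5 digest; the helper name `content_urn` and the sample page are made up for illustration.

```python
import hashlib
import zlib

def content_urn(data: bytes) -> str:
    """Build a 'urn:<md5 hex digest>' name from raw bytes (illustrative format only)."""
    return "urn:" + hashlib.md5(data).hexdigest()

page = b"<h1>Bio</h1>"            # hypothetical page content
compressed = zlib.compress(page)  # same content, different bytes on disk

print(content_urn(page))
print(content_urn(compressed))

# The content is unchanged, yet the two names above differ.
print(zlib.decompress(compressed) == page)
```

The two printed names differ even though decompressing the second byte string gives back exactly the first, which is the format-change problem described above.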

In 1996, Keith Shafer and several other experts proposed a solution to the problem of broken URLs. The link to that solution is now broken. Roy Fielding published an implementation proposal in July 1995. That link is broken too.

I was able to find these pages through Google, which has essentially made page titles the modern analogue of the URN. The URN format was finalized in 1997 and has hardly been used since. Its design is interesting. Each URN consists of two parts: an authority, which knows how to resolve a particular type of URN, and a document identifier in a format that the authority understands. For example, urn:isbn:0131103628 denotes a book, forming a permalink that (hopefully) will be turned into a set of URLs by your local ISBN resolver.
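The two-part structure can be sketched in a few lines. This is only an illustration under stated assumptions: `split_urn`, `resolve` and the `RESOLVERS` table are hypothetical names, and the example.org URL is a placeholder, not a real ISBN resolver.

```python
def split_urn(urn: str) -> tuple[str, str]:
    """Split 'urn:<authority>:<identifier>' into its two parts."""
    scheme, authority, identifier = urn.split(":", 2)
    if scheme.lower() != "urn":
        raise ValueError(f"not a URN: {urn!r}")
    return authority.lower(), identifier

# Hypothetical resolver table: one function per authority, each returning
# candidate URLs. The example.org address is a placeholder.
RESOLVERS = {
    "isbn": lambda isbn: [f"https://example.org/books/{isbn}"],
}

def resolve(urn: str) -> list[str]:
    """Look up the authority's resolver and ask it for URLs."""
    authority, identifier = split_urn(urn)
    return RESOLVERS[authority](identifier)

print(split_urn("urn:isbn:0131103628"))  # ('isbn', '0131103628')
print(resolve("urn:isbn:0131103628"))
```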

Given the power of search engines, it is possible that the best format for URN to date could be the simple ability of files to refer to their past URL. We can allow search engines to index this information, and link to our pages correctly:

    <!-- On http://zack.is/history -->
    <link rel="past-url" href="http://zackbloom.com/history.html">
    <link rel="past-url" href="http://zack.is/history.html">

Query parameters

The application/x-www-form-urlencoded format is in many ways an aberrant monster, the result of many years of accidental implementations and compromises that led to a set of requirements needed for interoperability. It is in no way a model of good design.

- WHATWG URL Spec

If you have used the web for a while, you are familiar with query parameters. They follow the path and encode parameters such as ?name=zack&state=mi. It may seem strange that queries use an ampersand (&), the same character HTML uses to encode special characters. If you have written HTML, you have most likely had to encode ampersands in URLs, turning http://host/?x=1&y=2 into http://host/?x=1&amp;y=2 or http://host?x=1&#38;y=2 (this particular confusion has always existed).
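To make the double encoding concrete, here is a small sketch using Python's standard library: `urllib.parse.urlencode` builds the query string, and `html.escape` then escapes the ampersand so the URL can sit safely inside an HTML attribute. The host name is the placeholder from the text.

```python
from html import escape
from urllib.parse import urlencode

# Step 1: build the query string; pairs are joined with "&".
query = urlencode({"x": 1, "y": 2})
url = "http://host/?" + query
print(url)   # http://host/?x=1&y=2

# Step 2: escape the URL before embedding it in HTML, so "&" becomes "&amp;".
link = '<a href="{}">link</a>'.format(escape(url, quote=True))
print(link)  # <a href="http://host/?x=1&amp;y=2">link</a>
```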

You may also have noticed that cookies use a similar but still different format: x=1;y=2, which does not conflict with HTML characters. The W3C did not overlook this idea and recommended back in 1995 that everyone support both ; and & in query parameters.
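A parser that accepts both separators, as that recommendation suggests, might look like the sketch below. `parse_query` is a hypothetical helper written for this illustration, not a standard-library function; it splits on either character and decodes each name and value with `urllib.parse.unquote_plus`.

```python
import re
from urllib.parse import unquote_plus

def parse_query(query: str) -> list[tuple[str, str]]:
    """Parse a query string whose pairs are separated by ';' or '&'."""
    pairs = []
    for field in re.split(r"[;&]", query):
        if not field:
            continue  # skip empty pieces such as a trailing separator
        name, _, value = field.partition("=")
        pairs.append((unquote_plus(name), unquote_plus(value)))
    return pairs

print(parse_query("x=1;y=2"))             # [('x', '1'), ('y', '2')]
print(parse_query("name=zack&state=mi"))  # [('name', 'zack'), ('state', 'mi')]
```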

Initially, this part of the URL was used solely for searching indexes. The web was originally created (and funded) as a collaboration tool for particle physicists. That does not mean Tim Berners-Lee did not know he was building a communication system of truly general use. For one thing, he did not add support for tables for several years, even though tables would probably have been useful to physicists.

One way or another, physicists needed a way to encode and link information, and a way to search it. To do this, Tim Berners-Lee created the <ISINDEX> tag. If <ISINDEX> was present on a page, the browser knew that the page could be searched. The browser showed a search field and let the user send a query to the server.

The query was a set of keywords separated by plus signs (+):

    http://cernvm/FIND/?sgml+cms

As usually happens on the Internet, people began using the tag for everything, including as an input field for a number whose square root was to be calculated. It was soon proposed to accept that such a field was too specific and that a general-purpose <input> tag was needed.

In that proposal, the plus symbol was used to separate the query components, but otherwise everything resembles a modern GET request:

    http://somehost.somewhere/some/path?x=xxxx+y=yyyy+z=zzzz

Not everyone approved. Some thought that what was needed was a way to declare search support on the other side of the link:

    <a HREF="wais://quake.think.com/INFO" INDEX=1>search</a>

Tim Berners-Lee thought that a way to define strongly typed queries was needed:

    <ISINDEX TYPE="iana:/www/classes/query/personalinfo">

Looking back, I can say with some confidence that I am glad the more general solution won.

Work on <INPUT> began in January 1993; it was based on an older SGML type. It was decided (perhaps unfortunately) that <SELECT> needed its own, richer structure:

    <select name=FIELDNAME type=CHOICETYPE [value=VALUE] [help=HELPUDI]>
        <choice>item 1
        <choice>item 2
        <choice>item 3
    </select>

If you are curious, then yes, the idea of reusing the <li> element instead of creating a new <option> was considered. There were other proposals, too. One of them included variable substitution reminiscent of modern Angular:

    <ENTRYBLANK TYPE=int LENGTH=length DEFAULT=default VAR=lval>
    Prompt
    </ENTRYBLANK>

    <QUESTION TYPE=float DEFAULT=default VAR=lval>
    Prompt
    </QUESTION>

    <CHOICE DEFAULT=default VAR=lval>
        <ALTERNATIVE VAL=value1> Prompt1 ...
        <ALTERNATIVE VAL=valuen> Promptn
    </CHOICE>

In this example, the input is validated against the declared type, and the VAR values are available on the page for string substitution in URLs, like this:

    http://eager.io/apps/$appId

Other proposals used @ instead of = to separate the query components:

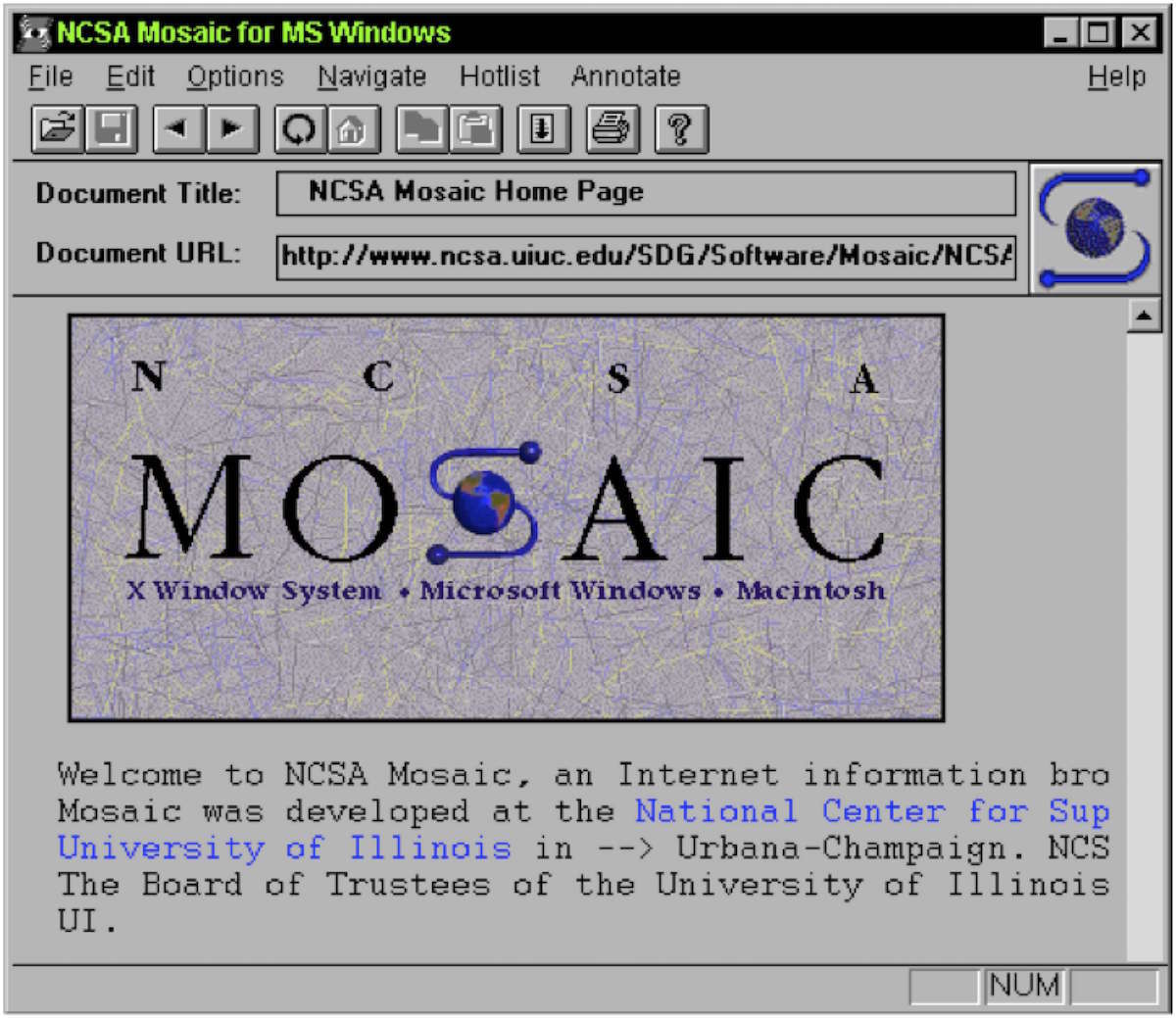

    name@value+name@(value&value)

Marc Andreessen proposed a method based on what he had already implemented in Mosaic:

    name=value&name=value&name=value

Just two months later, Mosaic added method=POST support to forms, and so modern HTML forms were born.

Of course, it was Marc Andreessen's company, Netscape, that also created the cookie format (with a different delimiter). Their proposal was painfully short-sighted: it led to the attempt to introduce a Set-Cookie2 header and created fundamental structural problems that we still have to deal with in the Eager product.

Fragments

The part of the URL after the '#' character is known as the "fragment". Fragments have been part of the URL since the first specification, where they were used to link to a specific place on the loaded page. For example, if I have an anchor on my site:

    <a name="bio"></a>

I can link to it:

    http://zack.is/#bio

This concept was gradually extended to all elements (not just anchors) and moved from the name attribute to the id attribute:

    <h1 id="bio">Bio</h1>

Tim Berners-Lee chose this character because of its association with the format of postal addresses in the USA (even though Tim himself is British). In his words:

At least in the USA, postal addresses often use the number sign to indicate an apartment or suite number within a building. 12 Acacia Av #12 means "the building at 12 Acacia Avenue, and within that building, unit 12". This character seemed natural for such a purpose. Today, http://www.example.com/foo#bar means "within the resource http://www.example.com/foo, the particular view of it known as bar".

It turns out that the original hypertext system, created by Douglas Engelbart, also used '#' for the same purpose. This may be a coincidence or a case of accidental "idea borrowing".

Fragments are deliberately not included in HTTP requests, which means they live exclusively in the browser. This proved valuable when it came time to implement client-side navigation (before the invention of pushState). Fragments were also very helpful when it came time to think about storing state in the URL without sending it to the server. What does that mean? Let's see:

Molehills and mountains
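Before moving on, here is a small sketch of the first point, that the fragment never reaches the server. It uses Python's standard `urllib.parse.urldefrag` to strip the fragment the way a client would before building a request; the request line and Host header are assembled by hand purely for illustration.

```python
from urllib.parse import urldefrag, urlsplit

url = "http://zack.is/#bio"

# Split off the fragment; only the remainder is used to build the request.
without_fragment, fragment = urldefrag(url)
parts = urlsplit(without_fragment)

request_line = f"GET {parts.path or '/'} HTTP/1.1"
host_header = f"Host: {parts.netloc}"

print(request_line)  # GET / HTTP/1.1
print(host_header)   # Host: zack.is
print(fragment)      # bio (kept by the client, never sent)
```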

There is a whole standard, as nasty as SGML, for electronic data interchange, in other words for forms and form submission. All I know is that it looks like FORTRAN written backwards with no spaces.

- Tim Berners-Lee, 1993

Many share the feeling that the organizations responsible for Internet standards did not really do anything between finalizing HTTP 1.1 and HTML 4.01 in 2002 and the moment HTML 5 became truly popular. This period is also known (only to me) as the Dark Age of XHTML. In reality, the standards people were extremely busy. They were just busy with things that turned out not to be very valuable.

One of those efforts was the Semantic Web. The dream was to create a Resource Description Framework (editor's note: run from any team that wants to build a framework). Such a framework would make it possible to describe meta-information about content in a universal way. For example, instead of making a beautiful web page about my Corvette Stingray, I would make an RDF document describing its size, its color, and the number of speeding tickets I have received while driving it.

This is, of course, not a bad idea at all. But the format was based on XML, and there was a big chicken-and-egg problem: the whole world has to be documented, and browsers have to be able to do something useful with that documentation.

The idea did at least provide fertile ground for philosophical debate. One of the best of these disputes lasted at least ten years and is known by the clever code name "httpRange-14".

The purpose of httpRange-14 was to answer the fundamental question "What is a URL?". Does a URL always refer to a document, or can it refer to anything? Can a URL point to my car?

They did not attempt to answer this question in any satisfactory manner. Instead, they focused on how and when a 303 redirect can be used to tell the user that there is no document at the link and to send them to where a document does exist, and on when fragments (the part after the '#') can be used to point users to linked data.

To the pragmatic modern man these questions may seem ridiculous. Many of us are used to the fact that if a URL turns out to be used for something, it means that you can use it for that. And people will either use your product or not.

But the Semantic Web cared only about semantics.

This particular topic was discussed on July 1, 2002, July 15, 2002, July 22, 2002, July 29, 2002, September 16, 2002, and at least another 20 times during 2005. The discussion was settled by the famous "httpRange-14 resolution" in 2005, reopened after complaints in 2007 and 2011, and followed by a call for new solutions in 2012. The issue was debated at length by the Pedantic Web group, which is very aptly named. The one thing that did not happen was any significant amount of this semantic data actually being put on the web behind any kind of URL.

Authorization

As you know, in the URL you can include a username and password:

    http://zack:shhhhhh@zack.is

The browser encodes this data in Base64 and sends it as a header:

    Authorization: Basic emFjazpzaGhoaGho

Base64 is used only so that characters not allowed in headers can be transmitted. It does nothing to hide the username and password.
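The encoding step is easy to reproduce. The sketch below builds the header for the credentials in the example URL and then decodes it again, which shows how little protection Base64 offers. `basic_auth_header` is a helper name made up for this illustration; `Authorization` is the header name HTTP defines for this purpose.

```python
import base64

def basic_auth_header(username: str, password: str) -> str:
    """Return the header line a client sends for Basic authentication."""
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return f"Authorization: Basic {token}"

header = basic_auth_header("zack", "shhhhhh")
print(header)  # Authorization: Basic emFjazpzaGhoaGho

# Anyone who sees the header can reverse the encoding without any key.
token = header.rsplit(" ", 1)[1]
print(base64.b64decode(token).decode("utf-8"))  # zack:shhhhhh
```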

This was a problem, especially before SSL became widespread. Anyone monitoring your connection could easily see the password. Many alternatives were proposed, including Kerberos, which was and remains a popular security protocol.

As with other examples in this history, simple Basic authentication was the easiest option for browser developers (Mosaic) to implement. So Basic authentication was the first, and for a long time the only, solution until developers got the tools to build their own authentication systems.

Web application

In the world of web applications, it is strange to think that the web is built on the hyperlink. It is a method of linking one document to another that gradually acquired styles, the ability to run code, sessions and authentication, and ultimately became the shared social computing system that so many researchers in the 70s tried (and failed) to create.

The conclusion is the same as for any modern project or startup: distribution is all that matters. If you build something people use, even if it is a low-quality product, they will help you turn it into what they want. And, on the other hand, if no one uses the product, its technical excellence does not matter. There are countless tools on which millions of hours of work were spent and which exactly zero people use.

Source: https://habr.com/ru/post/305654/
