CodingFuture + Puppet. Part V: Databases (cfdb)

In short:

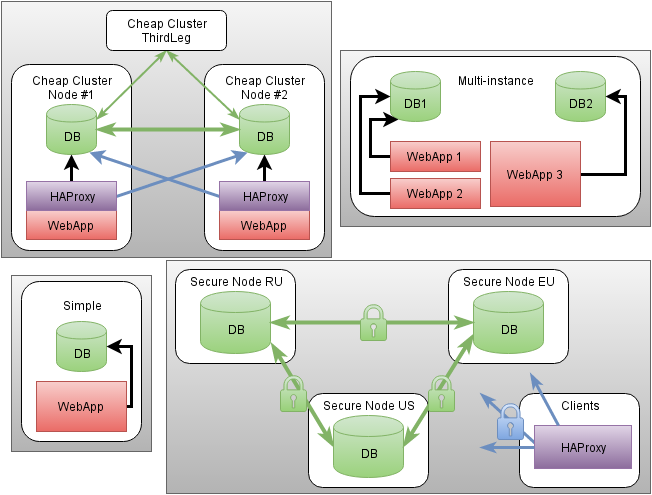

- cfdb is a module for deploying and autotuning nodes and clusters of databases and accessing them with high availability and failover protection.

- As proof-of-concept, MySQL and PostgreSQL are supported based on Percona Server / XtraDB Cluster and official PostgreSQL + repmgr assemblies.

- Isolation of resources based on cgroups , integration with network filter settings through the

cfnetworkmodule and strict access control by means of DBMS.- Write to one node to minimize conflicts and load distribution for read-only access.

- Automatic health check of the cluster and the actual feasibility of access.

- Manual and automatic local backup, automated data recovery.

- Support for automatic migration of existing databases

Thematic cycle:

- Part I: network and network filter (cfnetwork + cffirehol)

- Part II: Access and Standard Environment (cfauth + cfsystem)

- Part III: Install Puppet Server (cfpuppetserver)

- Part IV: Centralized Management (cftotalcontrol)

- Part V: Databases (cfdb)

- Part VI: Topical Blacklists and Secure Knock

Introduction to the concept and terminology

Entity types in abstract configuration:

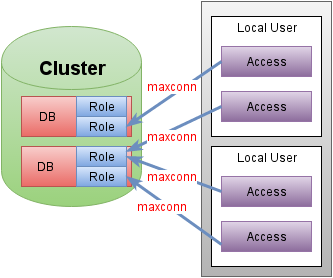

clusteris an abstract named collection of DBMS nodes operating as one.instance- the physical node belonging to thecluster.databaseis a named database owned bycluster.role- an account with access to a specificdatabase. By default, there is always adatabaserole with the same name with full access to the corresponding database.access- declares the need to access a certain database under a certain account of the database with a certain maximum number of connections from the specified local account of a specific node.

The specificity of the cluster configuration is to some extent dictated by the living work of the DBA — there is a primary node through which all changes are made. According to this principle, database and role entities can only be set on one node, and the remaining nodes must be configured as secondary or arbitrators in general. Such a situation can add a bit of discomfort if you need to make changes during a fail-over, but nothing limits the possibility of temporary manual changes.

To unify and simplify debugging of the infrastructure, the universal proxy service HAProxy is used transparently. The clear advantage is: in the absence of special changes in applications, in advanced monitoring of the status of fully operational cluster nodes, creation of secure communication channels outside of the TMS offloading process, support for collecting statistical data out of the box, strict limitation of the number of admissible connections even from application curves . HAProxy automatically comes into play in the following cases:

- There is more than one node in a

clusterand therefore the status of each is required - The access declaration forcibly requires a secure communication channel with the remote host.

- The fact of

cf_locationclient and server nodes do not match (different data centers) and there is no explicit indication of the unsafe connection for the VPN case.

Unlike rubber "productive" resources (CPU, I / O), the main problem arises with the distribution of memory. To do this, a universal framework was created in the cfsystem module for allocating memory in the system according to the relative weights (priorities) of the services, taking into account possible minimum and maximum limits. A set of processes of each instance run in its own cgroup systemd slice. In addition to managing the allocation of resources and limits, such as the maximum number of file descriptors, systemd also acts as a custodian of the process and automatically restarts any abnormal crashes. For disk space, it is still implied to mount separate volumes for maximum isolation and speed.

Meta-information of this module is collected and stored as Puppet facts, which requires some understanding that facts are generated on the target system and loaded into PuppetDB at the beginning of the deployment. Those. redeployment is required to keep the facts fresh after the changes. Autoconfiguration of access, restrictions on the number of connections and other nuances are configured from these centrally stored facts about all managed systems. There is clearly room for improvement and a corresponding plan, but so far.

Closer to the point

This module is almost as multifaceted as the full configuration of the DBMS is multifaceted. The cfdb documentation will help to partially clarify the functionality, but it will be unnecessary to load all this article.

Lift the DBMS

- Add a system configuration with a base

# cfdb classes: [cfdb] # cfdb::instances: mysrv: type: mysql port: 3306 databases: db1: {} db2: roles: ro: readonly: true custom: custom_grant: 'GRANT SELECT ON $database.* TO $user; GRANT SELECT ON mysql.* TO $user;' - Deploy twice. So far, the second step will gather the necessary facts for a centralized base.

@db$ sudo /opt/puppetlabs/bin/puppet agent --test; sudo /opt/puppetlabs/bin/puppet agent --test Let's try to figure out what happened:

- Installs a complete list of Percona Server packages.

- We create a database node belonging to an abstract cluster with a unique name

mysrv - In a cluster, we define two databases

db1anddb2 - Roles of

db1anddb2are automatically created with full access to the corresponding databases - Additionally, a

db2rorole isdb2rowith read-only access todb2and support for load distribution across nodes - Additionally, the

db2customrole isdb2customwith absolutely arbitrary access rights. Note the mandatory use of the$databaseand$usersubstitution keys. - All passwords are randomly generated, but can be forcibly set.

- Information about existing clusters, their nodes, databases and roles appears in the centralized database PuppetDB.

Declaring access to cluster roles

- Add system configuration with the application

# cfdb classes: [cfdb] # cfdb::access: # , webapp_mysrv_db1: cluster: mysrv role: db1 local_user: webapp max_connections: 100 webapp_mysrv_db2ro: cluster: mysrv role: db2ro local_user: webapp max_connections: 500 config_prefix: 'DBRO_' - Deploy twice to the client system. There should be a warning about the inability to access with automatic checks.

@web$ sudo /opt/puppetlabs/bin/puppet agent --test; sudo /opt/puppetlabs/bin/puppet agent --test - Deploy once on a system with a base

@db$ sudo /opt/puppetlabs/bin/puppet agent --test - If necessary, we overload the DBMS to increase the maximum number of all connections that are multiples of 100 by default. The deployment process itself will prompt the necessary actions.

@db$ sudo /bin/systemctl restart cfmysql-mysrv.service - The final stage - we deploy again on the client system to make sure that all access is working.

@db$ sudo /opt/puppetlabs/bin/puppet agent --test

What happened:

- A

.envfile was created on the client system under the localwebappuser:- there is a set of variables with the prefix

DB_(by default) for access to the roledb1same name with the base, - plus a set of variables with the

DBRO_ prefix to access thedb2rorole in thedb2database, - if desired, besides

.envany specific approach can be used (seecfdb::access::custom_config).

- there is a set of variables with the prefix

- On the second pass we load the facts.

- Then we update the DBMS configuration, where for each role the client node will be added to the list of allowed and the maximum number of connections will increase.

- Check that all accesses work - done automatically when deployed

That's all, there is no significant difference in the type of database. All the same type.

Migrating existing data directories

For the convenience of switching from previously installed DBMS configurations, a feature was added in the form of the init_db_from parameter of the tweaking. The format of the value is somewhat different for different types of DBMS due to the specifics of the upgrade processes. Usage example:

cfdb::instances: mymigrate: type: mysql ... settings_tune: cfdb: init_db_from: '/var/lib/mysql' pgmigrate: type: postgresql ... settings_tune: cfdb: init_db_from: '9.5:/var/lib/postgresql/9.5/main/' By the way, the updated cfpuppetserver module already uses cfdb to organize high availability. During installation, the fact base is migrated without losing the meta-information.

Perform manual operations on the instance

By default, home folders are /db/{type}_{name}/ , where the bin/ directory is located with useful wrappers for the standard mysql , psql , repmgr , etc. cfdb_ with the cfdb_ prefix. They can be run as root , but this is not safe due to possible substitution through extensions of the same PostgreSQL. An example of logging in to the database under the super-user:

@db$ sudo -u mysql_mysrv /db/mysql_mysrv/bin/cfdb_mysql # @db$ /db/mysql_mysrv/bin/cfdb_mysql Backup and Restore

The ability to manually back up and restore is always available through the ~/bin/cfdb_backup and ~/bin/cfdb_restore in the home folder of the instance . Automatic periodic backup is enabled when $cfdb::instance::backup = true . Setting is done via the $cfdb::instance::backup_tune . The specific implementation depends on the type of DBMS. Currently xtrabackup used for MySQL and pg_backup_ctl for PostgreSQL.

Note: XB 2.4 has a problem - it requires at least 1GB of free memory for incremental recovery

For example, raise the hot standby PostgreSQL cluster with repmgr

- Main node configuration

classes: [cfdb] cfdb::instances: pgcluster: type: postgresql port: 5432 # is_cluster: true databases: - db1 - Configuration of secondary nodes

classes: [cfdb] cfdb::instances: pgcluster: type: postgresql port: 5432 # is_secondary: true The client is configured in the same way as with a single node, but HAProxy automatically enters the game transparently.

- We deploy on all related systems. We repeat two more times: at the first step we bring the facts into PuppetDB, and at the second we bring to mind. On the third rep, there should be no changes. * If you need to restart some cluster nodes, then in the case of repmgr, you need to do this starting with the master (

~/bin/cfdb_repmgr cluster show), due to the specificity of themax_connectionsparameter and replication.

Who ever set up a typical PostgreSQL cluster with repmgr, feel the difference?

Integration with containers like Docker and external infrastructure

There are two sides here: the first is the DBMS themselves, the second is conditionally the clients of the DBMS. In a static version of the problems should not be much, but with a dynamic build requires initially deployed maximum infrastructure, and then remove the excess with the graceful disconnection of cluster nodes to maintain a quorum.

In the case of "unmanaged" external clients, there is a $cfdb::role::static_access that allows you to flexibly set the facts about manually declared access bypassing centralized metadata.

What we have in the end

Obviously, this approach allows you to "rivet" and maintain database clusters on an industrial scale in a short time, significantly reducing the risk of errors in such a sensitive area. Of course, at the moment, the entry of infrastructure meta-data into a centralized database somewhat complicates the deployment process. At a certain stage, there is an opportunity to improve this, at once considering the parts that have not yet been deployed, but everything has its time. At the same time, this Puppet module allows minimal effort to get protected and relatively optimally tailored to specific conditions of the DBMS, with an extremely flexible ability to control both the optimization process and the fit of the final config. The overall concept is universal and allows you to easily add support for other types of DBMS as needed.

With all this, the safety of data is in the first place - automation has severe limitations, if there is a risk of data loss, then manual intervention by prompts is required when deploying.

UPD: fixed glitches processing Markdown on Habré.

')

Source: https://habr.com/ru/post/305108/

All Articles