Kaggle: A story about how we learned to predict the relevance of search queries and took 3rd place

Preview

Hello, Habr! On April 25, 2016, the 3-month-long Home Depot Product Search Relevance competition in which our Turing Test team ( Igor Buinyi , Kostiantyn Omelianchuk , Chenglong Chen ) not only managed to get a good idea with Natural Language Processing and ML, but also took 3 2nd place out of 2125 teams. A full description of our solution and code are available here , a short interview here , and the purpose of this publication is not only to tell about the decision that brought us such a result, but also about the difficulties and experiences through which we had to go during the competition.

A few words about Kaggle

Most readers are probably familiar with Kaggle. For the rest, I note that at the moment it is the largest platform in the world, where a significant number of Data Science competitions take place, in which the collective mind of thousands of participants generates a large number of solutions to real applied problems. As a result, competitors receive knowledge and experience (the winners are also prizes), and the organizers receive ideas that can be successfully implemented in practice.

Formulation of the problem

- there is a website for selling tools for repair and building materials, where you can find a greater number of specific products

- there are users who are trying to find the product of interest using the search function

- there are several hundred thousand matches "search query" / "featured item"; product information consists of several text fields, such as name, description, and many attributes (for example, brand or color)

- there are average estimates of relevance from assessors for some part of such correspondences

- the task is to build an algorithm that will well predict the relevance scores that assessors would put for the remaining “request” / “product” pairs

')

Why do you need it?

Relevance scores are needed when optimizing a search algorithm that matches a “query” / “product” match.

Such assessments can be put down by assessors, but they work slowly, and their time is expensive or get automatic, then you can save on assessors and significantly increase the speed of feedback. However, the quality of assessments should remain at the same level.

Assessors are people who may be wrong

We tried to teach the model to imitate the behavior of people grading (and nothing more than that). The answer to the question of how their assessments respond to “real relevance” invariably leads us away from a specific task to the origins of philosophy.

How was the quality of the model evaluated?

RMSE was used as a metric, which, like any other quadratic metric, penalizes large errors most of all.

What was the source data?

The data consisted of pairs of “request” (search term) / “product” (which consisted of three parts: product title, product description, attributes) and an average rating of relevance (which took values from 1 to 3, including some fractional ones).

The breakdown into train / test was made in the proportion of 74 067/166 693 and was not random (there were many requests that were present only in test or only in train and their distribution could not be called normal).

The data was rather dirty (a large number of grammatical errors in the queries)

Little about us

Our team during the 5/6 competition consisted of two people (Igor Buyny and Konstantin Omelyanchuk). We are both analytic colleagues working in a Ukrainian company developing browser games. Before this competition, we had many courses over our back and only one competition on kaggle.com, in which we were only in the top 15%. Igor just finished one project where NLP was used, and called me to take part in this competition.

Our goals

The original goals were:

- gain practical skills in working with real data

- understand deeper with NLP

- improve programming skills

- get into the top 10%

But the appetite, as we know, comes with eating, and as the end of the competition approaches, our goals have been revised towards more ambitious ones.

The first steps

As mentioned earlier, the full description of our solution was published elsewhere , and in this article we would like to talk about the chronology of our actions, so we submit the main results and discoveries in the order in which they were achieved.

Stage 1

We started with the simplest thing we could begin with — we figured out the ready-made scripts that can always be found on the forum. Since there were no “counted variables”, they needed to be generated. The first variables we had (like many other participants) were numeric variables such as the number of word matches between the query and the document. The first word processing was the use of the stemmer, which left its root for each word. The xgboost model on these features after text processing with the help of the stemmer gave RMSE around 0.49.

Stage 2

Next, we began to move in three directions:

- generation of new simple variables

- fitting model parameters

- Correction of the most frequent errors in the text and reduction of words of the same meaning to a single format.

Moving gradually in these three directions, we brought the RMSE closer to 0.48 and came to the conclusion that the third direction gave the greatest improvement in comparison with the others. At this stage, two important discoveries were made:

1. The idea arose to somehow automate the error correction process, and a solution was found to use the Damerau-Levenshtein distance to take into account not only coincident, but also similar words. This distance showed how much to insert / replace / delete / transpose characters to convert one line into another. We considered two words (word1, word2) the same if this distance was less than min (3, max (len (word1) -3,1)). The new variables calculated using this criterion improved the RMSE to 0.478 and served as another confirmation of the idea that text processing is very important in this competition.

2. To the list of our simple variables, we added variables that took into account the number of letters in matching words. Oddly enough, these turned out to be very strong variables with which we improved the RMSE to 0.474 .

Stage 3

It became clear that we will not manage with some simple (keyword match) variables and we need to use more complex ones. Classical variables in this problem are tf / idf variables that take into account the weight of each matching word depending on its frequency in a particular document and in the entire collection of documents. The very first attempt to add these variables brought us 0.468 to RMSE.

In parallel with this, we began to look for useful information among the attributes (attributes) of the document. The inclusion of variables related to brands and materials increased the RMSE to 0.464 .

Stage 4

The next step was the generation of variables that would somehow take into account the semantic relationship between words. In this task, the WordNet package from the NLTK library helped us. Using the built-in functions, which differently considered synonymous similarity between words, we got another category of variables with fundamentally new information that was not used before.

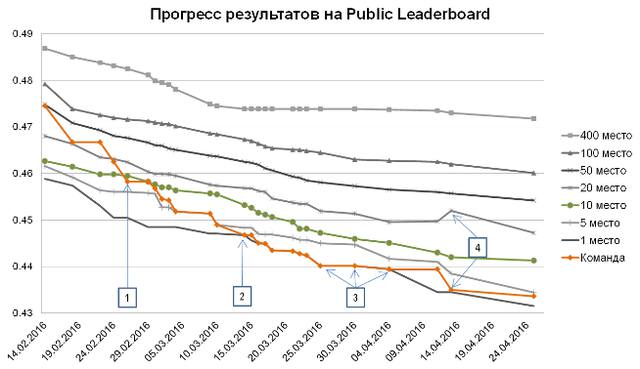

Also, variables were calculated that took into account which part of the speech the words belonged to (the NLTK POS tagger was used). At the same time, we have made significant progress in word processing and error correction. All this together made it possible to improve RMSE to 0.454 and get into the top 10 teams. (Note 1 on the progress chart)

Stage 5

As our progress progressed, it became harder and harder to improve RMSE and the ratio of successful actions to attempts that did not bring the desired result began to lean in our direction. This suggested that it was time to start building ensembles. The first attempts to build an ensemble were not successful, but we continued to work in this direction.

The key actions that allowed us to make the next leap were:

- word processing and error correction;

- local tf / idf variables that were considered at the level of documents related to each specific search query;

- word2vec variables that gave a very significant increase in the quality of the forecast.

Thus, we brought RMSE to 0.445 on a single model (single model) and came out on top in the current rating after the first month and a half since the beginning of the competition. (Note 2 on the progress chart)

Picture. Results Progress Chart

Additional problems for potential winners

Before reaching the first place, we considered this competition as a platform for training, but at that moment we realized that there are chances to at least get into the top 10 (instead of the original goal in the top 10%), and as a maximum - to get into the prizes (first three places). But in order to fight for the prizes, we had to additionally solve two problems.

Reproducible Solution

Competition winners must provide well-documented documentation and code capable of reproducing the final solution. Our solution, to put it mildly, did not satisfy this requirement, since some changes were constantly made to the code, the old versions of the code were often not saved, and the intermediate results of the calculations were recorded in a whole bunch of files. In addition to the fact that it was impossible to establish an exact correspondence between the version of the code and the specific file with variables, the full manual recalculation required more than a week of computation time on our machines.

Sharing all third-party external data

The second important point was the rising discussion on the forum about what text processing is acceptable and what is not. This dispute gave rise to the user's steubk script , which in a cunning way drove all search queries through Google and pulled up the text corrected by Google. The administrators of the competition first spoke against the use of this algorithm and against the “hand-corrected” (hand labeling) errors in the text. But since there was no clear distinction between what is a “manual correction” and what is a generalized rule based on our judgment (feature engineering), as a result, they were allowed to use their own correction dictionaries. The only condition was to post this dictionary to the forum a week before the end of the competition (merger deadline). Understanding the importance of word processing and considering that we created a very good dictionary, we did not want to publish it on the forum and were looking for ways to automate most of the text processing. This automation led to a deterioration in the results, and since without processing the text we could not proceed to recalculate all the variables, this stalled further work.

Is it so difficult?

In total, we spent about a month rewriting the whole code according to a single format and recalculating variables (this also coincided with the lack of free time for the competition; see note 3 on the progress chart ). We received some advancement from the construction of the ensemble. The general idea of the ensemble is to teach a lot of 1st-level models with different parameters and different sets of variables on the basis of the data, and build a 2nd-level model based on the predictions of these models. This approach works well if you correctly divide the data for training and model validation. In our case, this was further complicated by the fact that the data in the train and test differed in their structure. The use of StratifiedKFold (K times to train a model on K-1 parts of the data and collect forecasts on the remaining parts) led to an increase in the gap between the results on cross-validation (cross-validation) and on the current ranking (public leaderboard). Nevertheless, we have come to terms with such an ensemble building scheme, as with the addition of new models of level 1, it continued to improve the result on the current rating.

Thus, three weeks before the end of the competition, we brought our RMSE to 0.44 and were still in the first place with the belief that most of our difficulties were over and there was only a little more pressure for the final breakthrough. And then the team merging began.

To merge or not to merge?

Why do we need associations?

Teaming first of all gives the effect similar to adding a new level to the ensemble. A certain linear combination of the decisions of the teams before the merger will be better than any single decision due to the fact that these solutions are very different among themselves (they have a low correlation coefficient, for example, 0.9). In our competition, this difference in many respects provided a difference in the approaches to text processing and the generation of variables.

The second plus from association is the opportunity to learn from each other, borrow some ideas and exchange experience (for which, in principle, Kaggle exists).

What happened?

We understood that closer to merger deadline, the merger process will go more intensively, but clearly underestimated the scale of the incident. If three weeks before the end of the competition there were only a few teams in the top 10, where there were more than 2 people, then after everything ended, there were no teams less than three people in the top 10 (the average number of participants in the top 10 teams was 4.6 people) .

Initially, we did not plan to unite with anyone, despite the good chances to get into the prizes: not so much from greed, but from the desire to understand our level in particular. But about a week before the deadline of the associations, we were thrown back to the 4-5th place, and we remained almost the only team of 2 participants in the top 10 (not counting the only guy at that time who was alone). We once again discussed the situation and decided to try to offer to unite him. We didn’t count on his consent: this guy Chenglong Chen single-handedly won the previous similar competition CrowdFlower Search Relevance, enters the top 50 of the Kaggle rating and prefers to compete alone (before our competition he took part in about three dozen competitions, and only once was with someone on the team). We believed that since he still continues to compete alone, it means that he does not want to unite with anyone. We were very surprised and delighted when, after 2 hours in response to our proposal, we saw a friendly and concise answer:

“Sure! Just send me a team request :) We will discuss in details later. ”

A certain irony is this: the only available communication channel for us was the Kaggle internal messaging system accessible to participants with a rating above 500. Since before that we had only seriously participated in one competition, it turned out that one of us had a rating slightly below 500 That does not allow to send messages. It was a happy coincidence that the other of us spent a few hours a couple of months ago on another contest, where we were in the top 90% and this fantastic result was enough to be able to send messages. It is quite probable that if it were not for this fact, there would be no our association with Chenglong, just as there would not be this article.

What did it give us?

First, after the merger, we estimated the correlation of our best solutions, and by the standards of Kaggle it turned out to be quite low at 0.87. The simple average of our two solutions gave us RMSE 0.435 and returned us to second place in LB. (Note 4 on the progress chart)

Secondly, we met an incredibly talented, pleasant and modest guy, from whom we managed to learn a lot in such a short period of time.

Discoveries at the finish

There was only two weeks left, and we were at a distance of five time zones from each other. Therefore, we decided that the optimal strategy for us is to exchange ideas, variables and code, but focus on completing our own solutions, which we then combine by simple weighing (blending). As a result, Chenglong used part of our variables and got a better solution, but the correlation between our solutions increased to 0.94. Therefore, the improvement of our overall result was not as great as we would like.

The importance of proper cross-validation

As mentioned above, for cross-validation when building an ensemble, we first studied models for 2/3 of the data and checked on the remaining 1/3 of the data. This approach for this competition cannot be called successful, since it led to an ever-increasing gap between CV and LB.

About a month before the end of the competition, we wrote code that used 1/3 of the data for training and 2/3 of data for validation (that is, used a breakdown close to the breakdown between train and test). The gap between CV and LB has significantly decreased, but the results have worsened. Therefore, we continued to adhere to the original breakdown.

Looking ahead, we say that at that moment we made a mistake. We got good results with the old cross-validation strategy, not because this strategy was the best (it was worse), but because the ensemble was built on hundreds of models that were built in different periods with different approaches to text processing and variable counting. Using such different approaches in this competition improved the solution. About a week before the end, we recalculated all the variables using a single algorithm (we needed to make a reproducible solution in order to qualify for a prize) and were horrified that they had a worse result. This is where the code we dropped earlier came in handy. We no longer had time to recalculate hundreds of models, but even of the eight models, we got a better result than with the old approach.

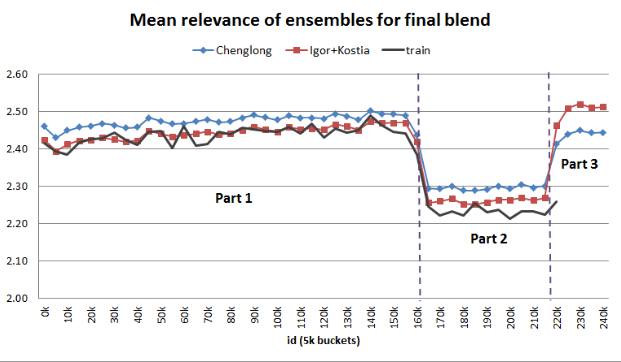

Chenglong initially used a more efficient breakdown. The fact is that part of the search requests was only in the train, part - only in the test, and part - and there and there. The same with products. Chenglong paid attention to this, and made a similar distribution in parts for training and validation (figure below). As a result, he received cross-validation results much more realistic than ours.

We did not have time to implement the same cross-validation in our solution, although we understood that it is better than our approach. Therefore, we did not use variables from Chenglong to:

a) keep the correlation between our decisions as low as possible

b) get a situation in which the strongest solution is calculated using the correct cross-validation algorithm (to avoid surprises at the end)

Picture. Breakdown for cross validation from Chenglong

Is this really private?

Literally in the last days of the competition, we noticed that in the source data there is a relationship between the record ID and the assessment of its relevance. Throughout most of the competition, we, like many others, thought that this sequence of records in the data was random. We experienced a huge surprise when we saw that, depending on the ID, three different areas can be clearly distinguished, in which the average relevance is very different. Even more surprising was the fact that our two best solutions behaved in absolutely different ways in one of the areas (part 3 in the figure). Prior to this, the result we saw on LB corresponded to public data (30% of the test), but the final result should have been calculated for the remaining 70% test (private). Given the fact that the data were ordered in a clearly non-random manner, the question was whether the breakdown into public and private is also non-random? And which of our solutions works best in the disputed area (part3)?

To solve the last question, we glued our two best solutions (took the first two parts from one solution, and the last part from the other). The result on the LB was the same! This meant that a whole huge chunk of data was completely private, and we had no idea which of our two solutions worked better on this piece of data. Since Kaggle allows you to choose two solutions as the final solution, we decided to simply choose diametrically different weights for the controversial part of our solutions, so as not to miss the mark.

Honestly, we expected a big upheaval in the rating due to a non-random breakdown into public / private, but this did not happen, as it turned out that a third of all the data in the test was specifically “infected” in order to discourage the participants to engage. ” manual processing of the text . This part of the data was not evaluated at all. Very cruel move by the organizers.

Honesty to the detriment of the result

During the competition, we considered it a crime to leave computers without payment for the night and most often at night new models for the ensemble were considered. After we had to rewrite the whole code to get a reproducible solution, we had about 300 different models of the 1st level, which we could not exactly reproduce, did not have enough time for it, and did not publish the old dictionaries on the forum for a week until the end of the competition, as required by the rules. Nevertheless, the addition of these models to the ensemble gave a slight improvement on the LB (most likely because these models belonged to different versions of text processing). We decided not to use the solution with these models as final, as this, in our understanding, contradicted the conditions of the competition. We also did not rule out unpleasant surprises due to the non-random distribution of data and considered the old old strategy of cross-validation less reliable.

If we finally decided on this, then the final decision with these models (this was our best solution in public) would bring us 0.43188 to RMSE and first place .

Moment of truth, conclusions and life after victory

So the moment came when both of our final decisions were ready, and we just had to wait for the publication of the final results on private.At this point, the situation was such that we were in third place, with a good margin from us, the team was in first place, teams from 9th place and below were far behind, and the results of teams from 2 to 8 places were quite close, so they are quite could swap places in the final standings. Given the expected shocks, we understood that the result could have been any for us inside the top eight. In the cherished 3 am, after repeated updates of the page, we finally saw our result. 4th place ...

What is victory?

The shock was huge. The second blow followed when we saw that our decision with the old non-reproducible models would bring us second place. It was not easy to fall asleep that night, but closer in the morning there came the thought that in fact the final gap between the top teams is insignificant and the fact of reaching heights of this level is significant in itself, not to mention the enormous experience and skills that were obtained in the process. The monetary reward for the 3rd place was not so large that it could even more grieve, so after a while, sadness came from a pleasant feeling of a job well done. And the next day we learned that the team that was in the first place was disqualified, since one of its participants was caught using several accounts.This strict decision of the organizers brought us the final third place , which we at that moment did not expect.

Life after

After the competition we were overtaken by moral and physical exhaustion, yet we spent a lot of time and effort. But the naive desire to rest after its completion did not come true. Two months after the competition, we additionally:

- made complete documentation on our decision

- prepared an interview

- prepared a presentation and held a call conference with HomeDepot

- prepared a presentation and spoke for the Kaggle community in Kiev

- wrote this article

, , .

. — , :

1. , . , .

2. , , .

3. ! , , . data science — !

— , :

1. .

2. .

3. , natural language processing.

4. .

5. .

, Kaggle, , .

Source: https://habr.com/ru/post/305026/

All Articles